Best AI Tools for Data Engineers

100 - AI tool Sites

Eztrackr

Eztrackr is an AI-powered application designed to help job seekers organize their job hunt efficiently. It offers features such as job tracking, AI answer generation, skill matching, cover letter generation, resume building, and powerful statistics to streamline the job search process. With Eztrackr, users can track job applications effortlessly, gain valuable insights, and manage their job hunt all in one place.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes through self-optimizing, lossless compression. It works seamlessly across various data platforms like Iceberg, Delta, Trino, Spark, Snowflake, BigQuery, and Databricks, offering significant cost savings and improved query performance. Granica is trusted by data and AI leaders globally for its ability to reduce data bloat, speed up queries, and enhance data lake optimization. The tool is built for structured AI, providing transparent deployment, continuous adaptation, hands-off orchestration, and trusted controls for data security and compliance.

babs.ai

babs.ai is an AI-powered job matching platform that connects talent with opportunities. It leverages intelligent matching algorithms to streamline the recruitment process and ensure a seamless experience for both job seekers and employers. The platform caters to a wide range of job roles and industries, making it a versatile solution for all types of users.

Lume AI

Lume AI is an AI-powered data mapping suite that automates the process of mapping, cleaning, and validating data in various workflows. It offers a comprehensive solution for building pipelines, onboarding customer data, and more. With AI-driven insights, users can streamline data analysis, mapper generation, deployment, and maintenance. Lume AI provides both a no-code platform and API integration options for seamless data mapping. Trusted by market leaders and startups, Lume AI ensures data security with enterprise-grade encryption and compliance standards.

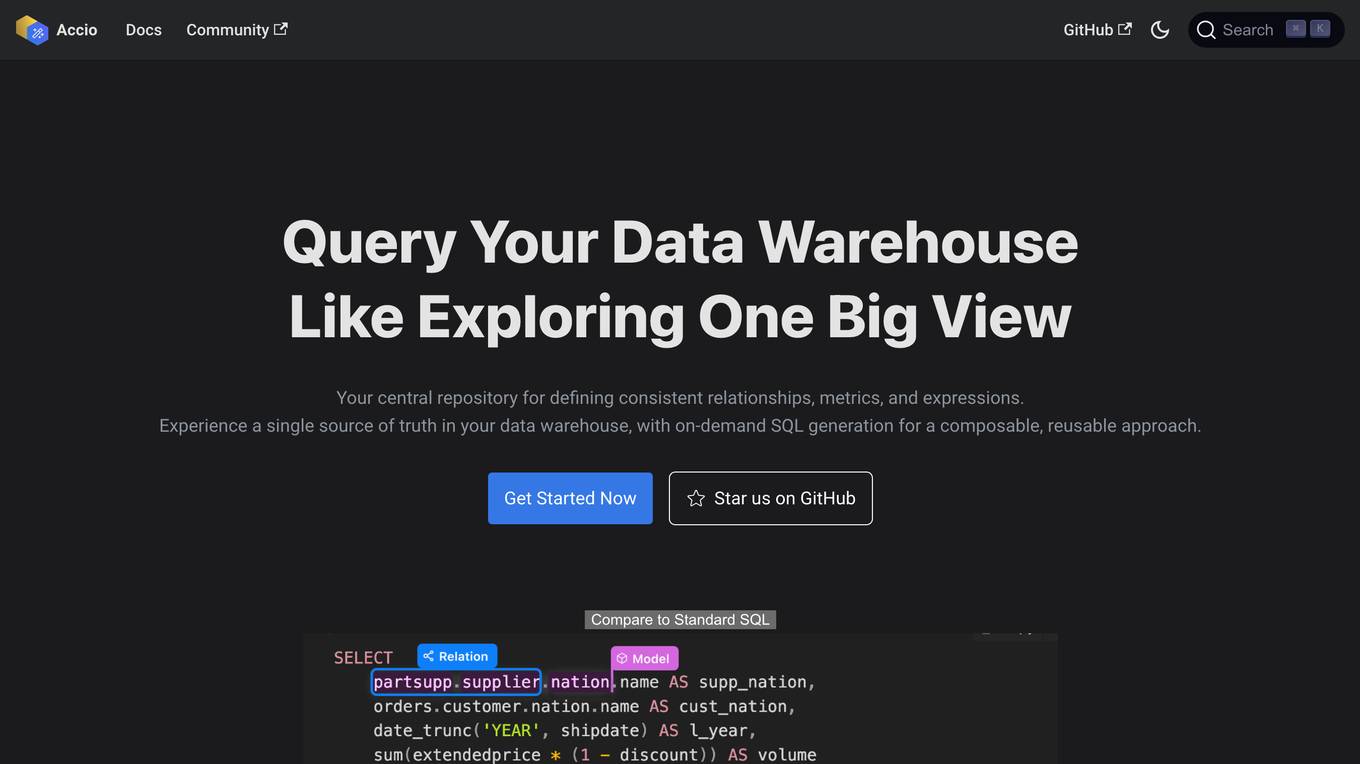

Accio

Accio is a data modeling tool that allows users to define consistent relationships, metrics, and expressions for on-the-fly computations in reports and dashboards across various BI tools. It provides a syntax similar to GraphQL that allows users to define models, relationships, and metrics in a human-readable format. Accio also offers a user-friendly interface that provides data analysts with a holistic view of the relationships between their data models, enabling them to grasp the interconnectedness and dependencies within their data ecosystem. Additionally, Accio utilizes DuckDB as a caching layer to accelerate query performance for BI tools.

Coginiti

Coginiti is a collaborative analytics platform designed for SQL developers, data scientists, engineers, and analysts. It offers an AI assistant, data mesh support, database and object store support, powerful query and analysis tools, and sharing and reuse of curated assets. Coginiti helps teams and organizations manage collaborative practices, improve data efficiency, and deliver trusted data products faster. The platform integrates modular analytic development, collaborative versioned teamwork, and a data quality framework to enhance productivity and ensure data reliability. Coginiti also provides an AI-enabled virtual analytics advisor to boost team efficiency and empower data heroes.

Text2SQL.AI

Text2SQL.AI is an AI-powered SQL query builder that helps users generate optimized SQL queries effortlessly. It supports various AI-powered services, including building SQL queries from textual instructions, explaining SQL queries in plain English, fixing SQL query errors, adding custom database schemas, handling SQL dialects for various database types, generating and explaining Microsoft Excel and Google Sheets formulas, and generating and explaining regular expressions. The tool is designed to improve SQL skills, save time, and assist beginners, data analysts, data scientists, data engineers, and software developers in their work.
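
As a rough illustration of what "SQL from textual instructions" means in practice, the sketch below pairs a plain-English question with a SQL query that answers it and runs the query against an in-memory SQLite database. The question, the `orders` table, and the query are all hypothetical and hand-written for this example; they are not output from Text2SQL.AI or any other tool listed here.

```python
import sqlite3

# Hypothetical question a user might type into a text-to-SQL tool.
question = "What is the total order amount per customer, highest first?"

# Hand-written SQL that answers the question (an assumption for
# illustration, not generated by any tool).
sql = """
SELECT customer, SUM(amount) AS total
FROM orders
GROUP BY customer
ORDER BY total DESC
"""

# Build a tiny in-memory table so the query can actually run.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("acme", 120.0), ("globex", 75.5), ("acme", 30.0)],
)

rows = conn.execute(sql).fetchall()
print(question)
print(rows)
```

Whatever tool produces the SQL, running it against a small, known dataset like this is a cheap way to check that the query matches the intent of the question.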

Chadview

Chadview is a real-time meetings assistant powered by ChatGPT that helps you answer questions during job interviews. It listens to your Zoom, Google Meet, or Teams call and provides instant answers to any questions asked. Chadview is easy to use: install the Chrome extension and start your free trial. It supports multiple languages and can be used for any technical role. Chadview is a valuable tool for anyone looking to improve their performance in job interviews.

Compact Data Science

Compact Data Science is a data science platform that provides a comprehensive set of tools and resources for data scientists and analysts. The platform includes a variety of features such as data preparation, data visualization, machine learning, and predictive analytics. Compact Data Science is designed to be easy to use and accessible to users of all skill levels.

SID

SID is a data ingestion, storage, and retrieval pipeline that provides real-time context for AI applications. It connects to various data sources, handles authentication and permission flows, and keeps information up-to-date. SID's API allows developers to retrieve the right piece of data for a given task, enabling them to build AI apps that are fast, accurate, and scalable. With SID, developers can focus on building their products and leave the data management to SID.

NLSQL

NLSQL is a B2B SaaS tool that gives employees an intuitive text interface for querying data, helping enterprises make faster, better-informed business decisions. It describes itself as the first NLP-to-SQL API that does not require transferring any sensitive or confidential data outside the corporate IT ecosystem. NLSQL supports integrations with all main database types and corporate messengers, which helps businesses move faster with data-driven decisions.

Latitude

Latitude is an open-source framework for building interactive data apps using code. It provides a workspace for data analysts to streamline their workflow, connect to various data sources, perform data transformations, create visualizations, and collaborate with others. Latitude aims to simplify the data analysis process by offering features such as data snapshots, a data profiler, a built-in AI assistant, and tight integration with dbt.

Defog.ai

Defog.ai provides fine-tuned AI models for enterprise SQL. It helps businesses speed up data analyses in SQL, Python, and R with AI assistants and agents tailored for their business - without sharing their data. Defog.ai's key features include the ability to ask questions of data in natural language, get results when needed, integrate with any SQL database or data warehouse, automatically visualize data as tables and charts, and fine-tune on your metadata to give results you can trust.

Amazon Q in QuickSight

Amazon Q in QuickSight is a generative BI assistant that makes it easy to build and consume insights. With Amazon Q, BI users can build, discover, and share actionable insights and narratives in seconds using intuitive natural language experiences. Analysts can quickly build visuals and calculations and refine visuals using natural language. Business users can self-serve data and insights using natural language. Amazon Q is built with security and privacy in mind. It can understand and respect your existing governance identities, roles, and permissions and use this information to personalize its interactions. If a user doesn't have permission to access certain data without Amazon Q, they can't access it using Amazon Q either. Amazon Q in QuickSight is designed to meet the most stringent enterprise requirements from day one—none of your data or Amazon Q inputs and outputs are used to improve underlying models of Amazon Q for anyone but you.

CodeSquire

CodeSquire is an AI-powered code writing assistant that helps data scientists, engineers, and analysts write code faster and more efficiently. It provides code completions and suggestions as you type, and can even generate entire functions and SQL queries. CodeSquire is available as a Chrome extension and works with Google Colab, BigQuery, and JupyterLab.

Ocular

Ocular is an AI-powered search platform that allows users to search, visualize, and take action on their work and engineering tools and data on one unified platform. It is designed to help engineers work more efficiently and effectively by providing them with a single, central location to access all of their relevant information.

Qubinets

Qubinets is a cloud data environment solutions platform that provides building blocks for big data, AI, web, and mobile environments. It is an open-source, no lock-in, secured, and private platform that can be used on any cloud, including AWS, DigitalOcean, Google Cloud, and Microsoft Azure. Qubinets makes it easy to plan, build, and run data environments, and it saves time and money by reducing the grunt work in setup and provisioning.

Tredence

Tredence is a data science and AI services company that provides end-to-end solutions for businesses across various industries. The company's services include data engineering, data analytics, AI consulting, and machine learning operations (MLOps). Tredence has a team of experienced data scientists and engineers who use their expertise to help businesses solve complex data challenges and achieve their business goals.

RIDO Protocol

RIDO Protocol is a decentralized data protocol that allows users to extract value from their personal data in Web2 and Web3. It provides users with a variety of features, including programmable data generation, programmable access control, and cross-application data sharing. RIDO also has a data marketplace where users can list or offer their data information and ownership. Additionally, RIDO has a DataFi protocol that promotes the flow of data information and value.

DataCamp

DataCamp is an online learning platform that offers courses in data science, AI, and machine learning. The platform provides interactive exercises, short videos, and hands-on projects to help learners develop the skills they need to succeed in the field. DataCamp also offers a variety of resources for businesses, including team training, custom content development, and data science consulting.

Appen

Appen is a leading provider of high-quality data for training AI models. The company's end-to-end platform, flexible services, and deep expertise ensure the delivery of high-quality, diverse data that is crucial for building foundation models and enterprise-ready AI applications. Appen has been providing high-quality datasets that power the world's leading AI models for decades. The company's services enable it to prepare data at scale, meeting the demands of even the most ambitious AI projects. Appen also provides enterprises with software to collect, curate, fine-tune, and monitor traditionally human-driven tasks, creating massive efficiencies through a trustworthy, traceable process.

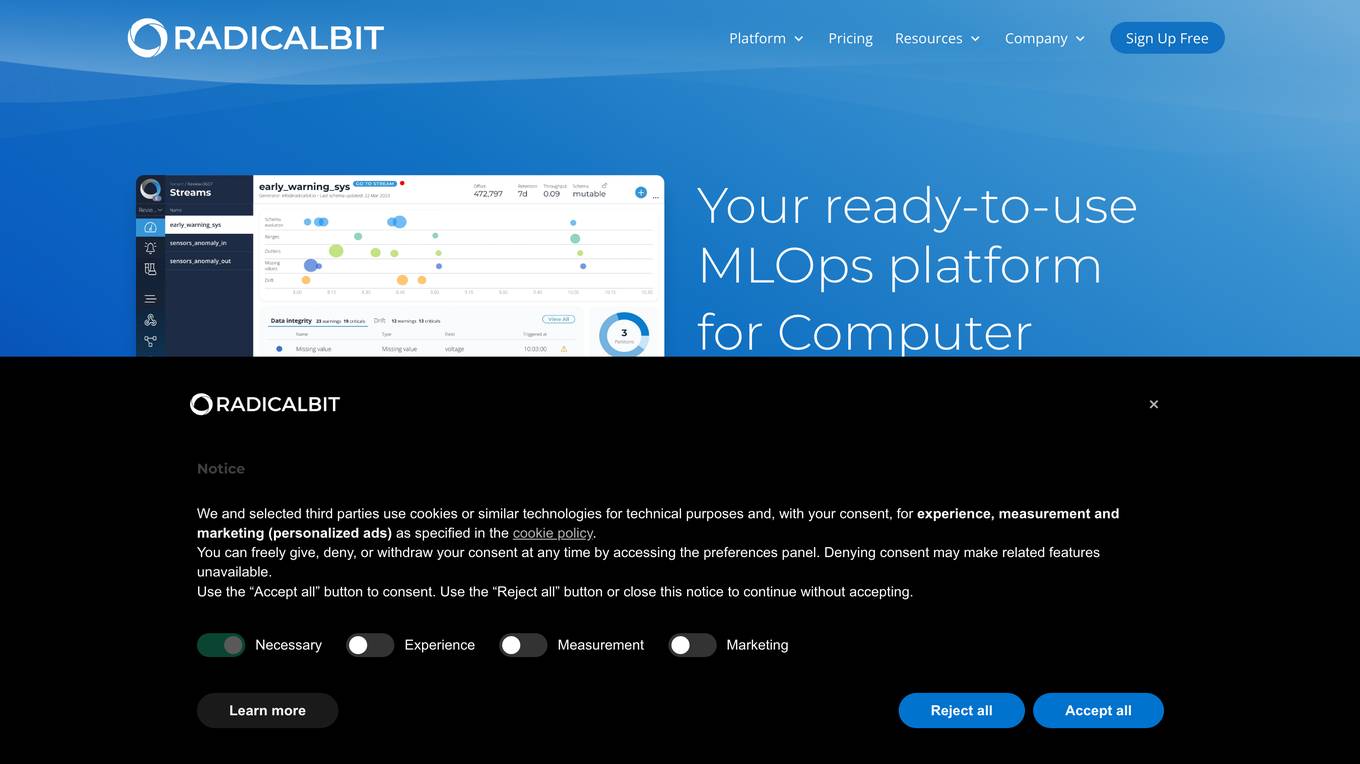

Radicalbit

Radicalbit is an MLOps and AI Observability platform that helps businesses deploy, serve, observe, and explain their AI models. It provides a range of features to help data teams maintain full control over the entire data lifecycle, including real-time data exploration, outlier and drift detection, and model monitoring in production. Radicalbit can be seamlessly integrated into any ML stack, whether SaaS or on-prem, and can be used to run AI applications in minutes.

Seudo

Seudo is a data workflow automation platform that uses AI to help businesses automate their data processes. It provides a variety of features to help businesses with data integration, data cleansing, data transformation, and data analysis. Seudo is designed to be easy to use, even for businesses with no prior experience with AI. It offers a drag-and-drop interface that makes it easy to create and manage data workflows. Seudo also provides a variety of pre-built templates that can be used to get started quickly.

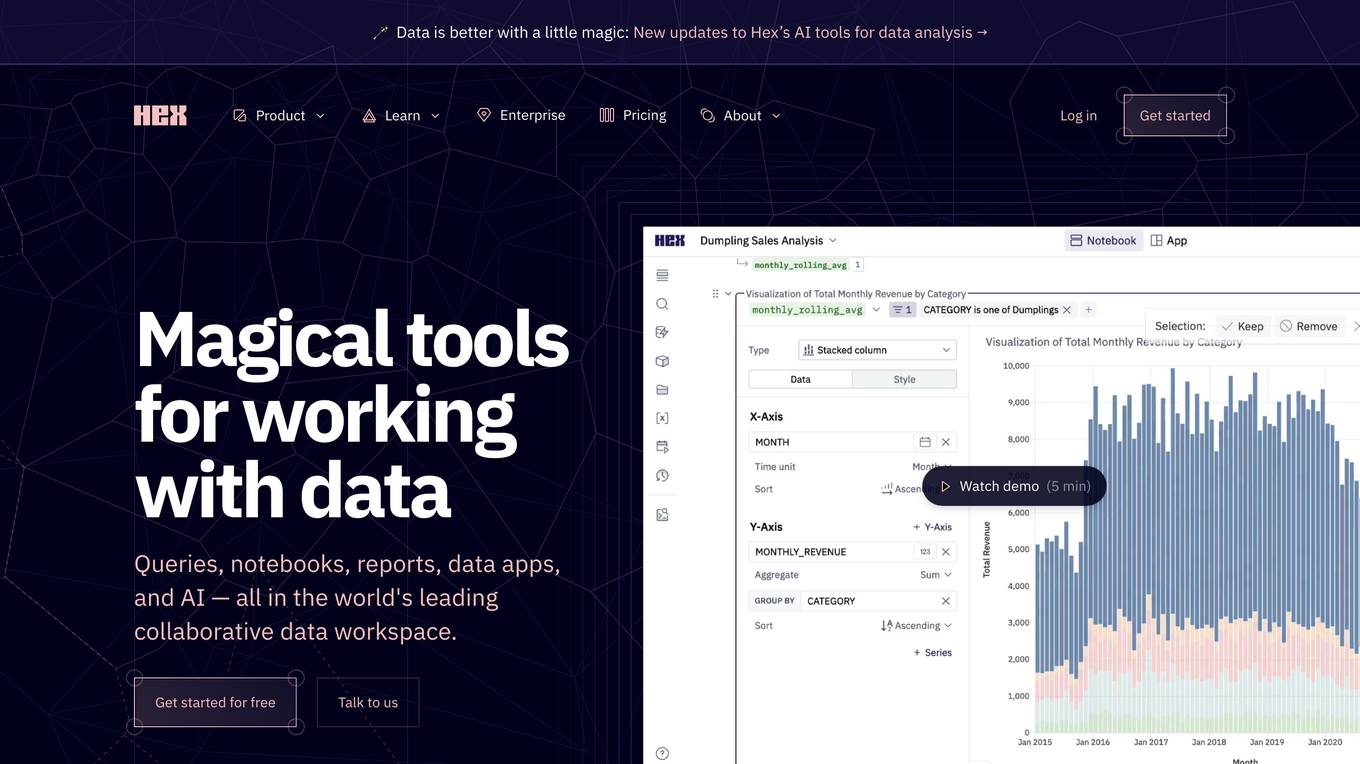

Hex

Hex is a collaborative data workspace that provides a variety of tools for working with data, including queries, notebooks, reports, data apps, and AI. It is designed to be easy to use for people of all technical skill levels, and it integrates with a variety of other tools and services. Hex is a powerful tool for data exploration, analysis, and visualization.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables data scientists and IT leaders to build, deploy, and manage AI models at scale. It provides a unified platform for accessing data, tools, compute, models, and projects across any environment. Domino also fosters collaboration, establishes best practices, and tracks models in production to accelerate and scale AI while ensuring governance and reducing costs.

Databricks

Databricks is a data and AI company that provides a unified platform for data, analytics, and AI. The platform includes a variety of tools and services for data management, data warehousing, real-time analytics, data engineering, data science, and AI development. Databricks also offers a variety of integrations with other tools and services, such as ETL tools, data ingestion tools, business intelligence tools, AI tools, and governance tools.

Datamation

Datamation is a leading industry resource for B2B data professionals and technology buyers. Datamation’s focus is on providing insight into the latest trends and innovation in AI, data security, big data, and more, along with in-depth product recommendations and comparisons. More than 1.7M users gain insight and guidance from Datamation every year.

ConsciousML

ConsciousML is a blog that provides in-depth and beginner-friendly content on machine learning, data engineering, and productivity. The blog covers a wide range of topics, including ML model deployment, data pipelines, deep work, data engineering, and more. The articles are written by experts in the field and are designed to help readers learn about the latest trends and best practices in machine learning and data engineering.

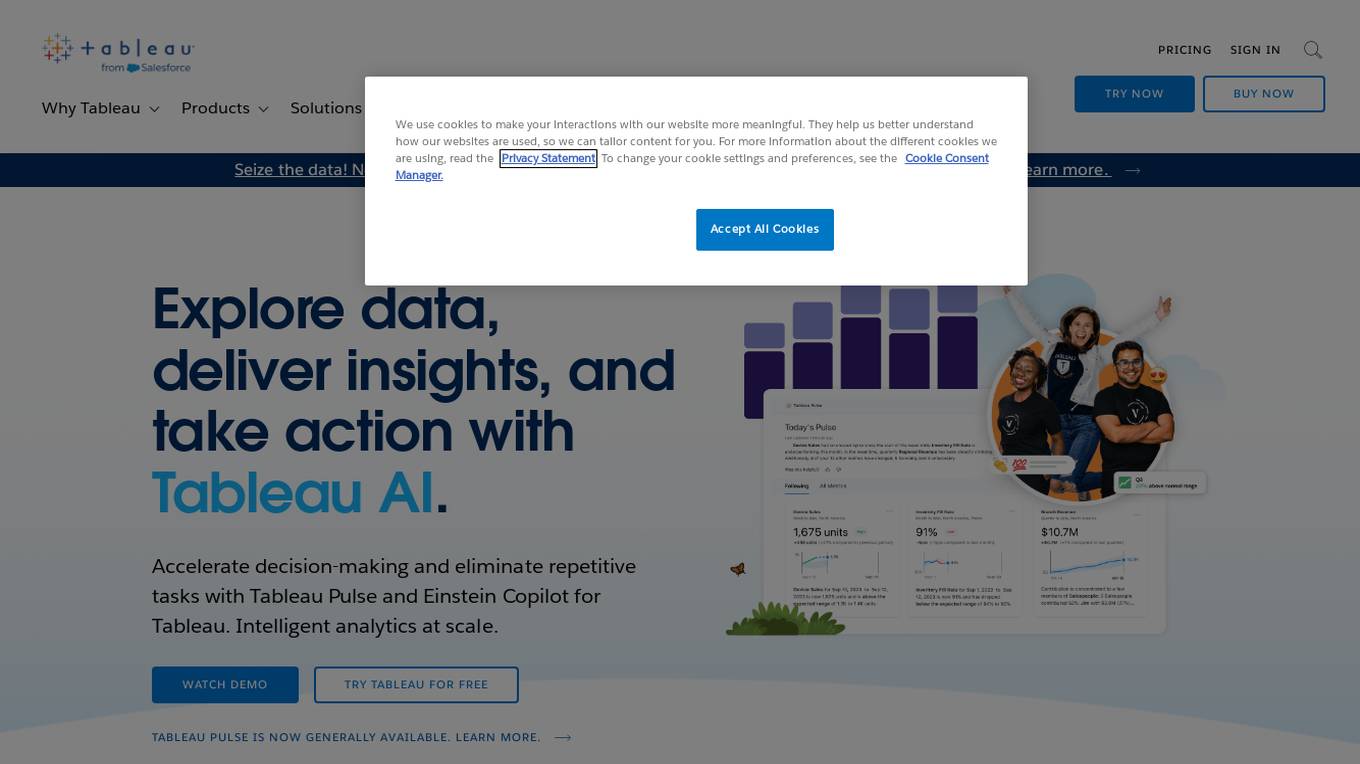

Tableau Augmented Analytics

Tableau Augmented Analytics is a class of analytics powered by artificial intelligence (AI) and machine learning (ML) that expands a human’s ability to interact with data at a contextual level. It uses AI to make analytics accessible so that more people can confidently explore and interact with data to drive meaningful decisions. From automated modeling to guided natural language queries, Tableau's augmented analytics capabilities are powerful and trusted to help organizations leverage their growing amount of data and empower a wider business audience to discover insights.

Supersimple

Supersimple is an AI-native data analytics platform that combines a semantic data modeling layer with the ability to answer ad hoc questions, giving users reliable, consistent data to power their day-to-day work.

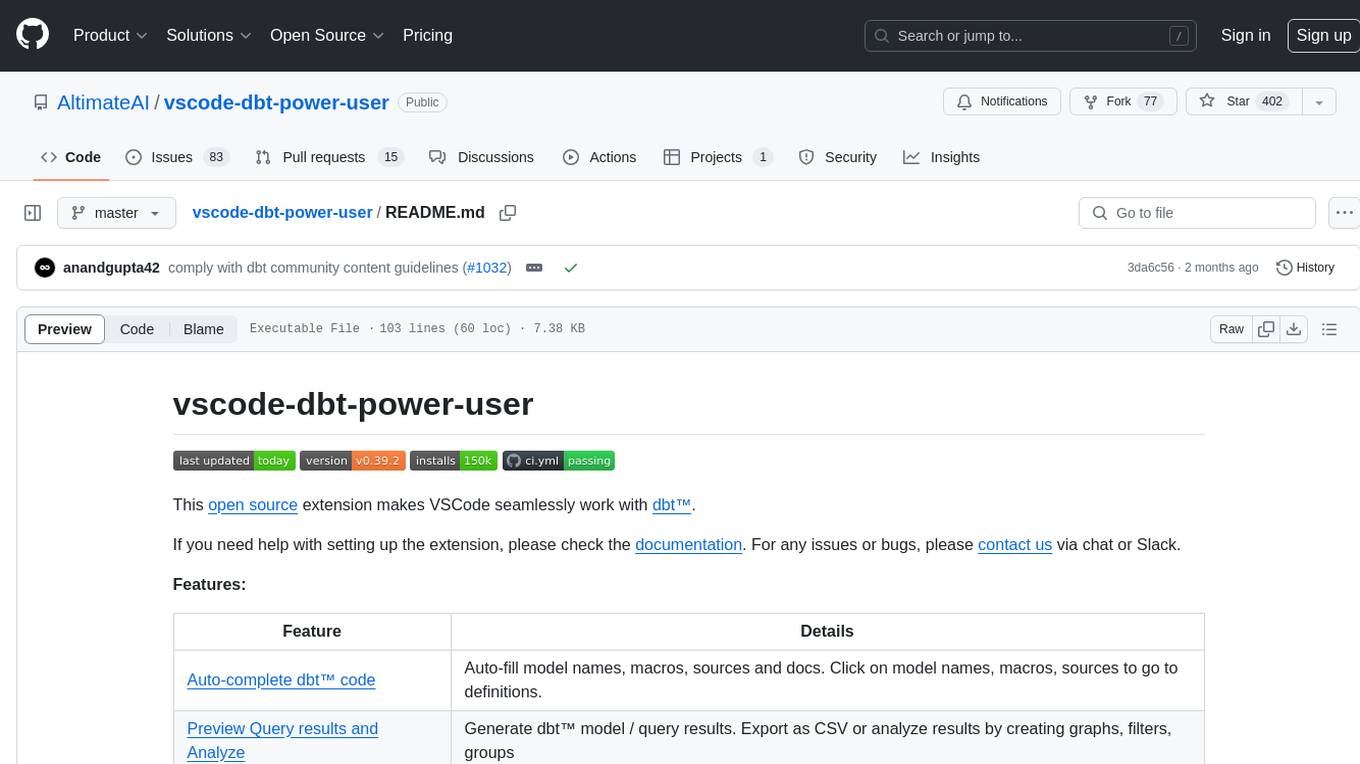

ChatDBT

ChatDBT is a prompt-driven dbt designer that helps you write better dbt code. It provides a user-friendly interface that makes it easy to create and edit dbt models, and it includes a number of features that can help you improve the quality of your code.

Tableau

Tableau is a visual analytics platform that helps people see, understand, and act on data. It is used by organizations of all sizes to solve problems, make better decisions, and improve operations. Tableau's platform is intuitive and easy to use, making it accessible to people of all skill levels. It also offers a wide range of features and capabilities, making it a powerful tool for data analysis and visualization.

KNIME

KNIME is a data science platform that enables users to analyze, blend, transform, model, visualize, and deploy data science solutions without coding. It provides a range of features and advantages for business and domain experts, data experts, end users, and MLOps & IT professionals across various industries and departments.

Dataiku

Dataiku is an end-to-end platform for data and AI projects. It provides a range of capabilities, including data preparation, machine learning, data visualization, and collaboration tools. Dataiku is designed to make it easy for users to build, deploy, and manage AI projects at scale.

Goptimise

Goptimise is a no-code AI-powered scalable backend builder that helps developers craft scalable, seamless, powerful, and intuitive backend solutions. It offers a solid foundation with robust and scalable infrastructure, including dedicated infrastructure, security, and scalability. Goptimise simplifies software rollouts with one-click deployment, automating the process and amplifying productivity. It also provides smart API suggestions, leveraging AI algorithms to offer intelligent recommendations for API design and accelerating development with automated recommendations tailored to each project. Goptimise's intuitive visual interface and effortless integration make it easy to use, and its customizable workspaces allow for dynamic data management and a personalized development experience.

Snaplet

Snaplet is a data management tool for developers that provides AI-generated dummy data for local development, end-to-end testing, and debugging. It uses a real programming language (TypeScript) to define and edit data, ensuring type safety and auto-completion. Snaplet understands database structures and relationships, automatically transforming personally identifiable information and seeding data accordingly. It integrates seamlessly into development workflows, providing data where it's needed most: on local machines, for CI/CD testing, and preview environments.

Alteryx

Alteryx offers a leading AI Platform for Enterprise Analytics that delivers actionable insights by automating analytics. The platform combines the power of data preparation, analytics, and machine learning to help businesses make better decisions faster. With Alteryx, businesses can connect to a wide variety of data sources, prepare and clean data, perform advanced analytics, and build and deploy machine learning models. The platform is designed to be easy to use, even for non-technical users, and it can be deployed on-premises or in the cloud.

Commabot

Commabot is an online CSV editor that allows users to view, edit, and convert CSV files with the help of an AI-powered assistant. It features an intuitive spreadsheet interface, data operations capabilities, an AI virtual assistant, and transformation and conversion functionalities.

LlamaIndex

LlamaIndex is a leading data framework designed for building LLM (Large Language Model) applications. It allows enterprises to turn their data into production-ready applications by providing functionalities such as loading data from various sources, indexing data, orchestrating workflows, and evaluating application performance. The platform offers extensive documentation, community-contributed resources, and integration options to support developers in creating innovative LLM applications.

Stack Overflow Blog

The Stack Overflow Blog is a platform that provides insights, updates, and discussions on various topics related to software development, technology, AI/ML, and career advice. It offers a space for developers and technologists to collaborate, share knowledge, and engage with the community. The blog covers a wide range of subjects, including product releases, podcast episodes, and industry trends. Users can explore articles, podcasts, and announcements to stay informed and connected with the tech community.

Databricks

Databricks is a data and AI company that offers a Data Intelligence Platform to help users succeed with AI by developing generative AI applications, democratizing insights, and driving down costs. The platform maintains data lineage, quality, control, and privacy across the entire AI workflow, enabling users to create, tune, and deploy generative AI models. Databricks caters to industry leaders, providing tools and integrations to speed up success in data and AI. The company offers resources such as support, training, and community engagement to help users succeed in their data and AI journey.

Innodata Inc.

Innodata Inc. is a global data engineering company that delivers AI-enabled software platforms and managed services for AI data collection and annotation, AI digital transformation, and industry-specific business processes. They provide a full suite of services and products to power data-centric AI initiatives using artificial intelligence and human expertise. With a 30+ year legacy, they offer the highest quality data and outstanding service to their customers.

DevRev

DevRev is an AI-native modern support platform that offers a comprehensive solution for customer experience enhancement. It provides data engineering, knowledge graph, and customizable LLMs to streamline support, product management, and software development processes. With features like in-browser analytics, consumer-grade social collaboration, and global scale API calls, DevRev aims to bring together different silos within a company to drive efficiency and collaboration. The platform caters to support people, product managers, and developers, automating tasks, assisting in decision-making, and elevating collaboration levels. DevRev is designed to empower digital product teams to assimilate customer feedback in real-time, ultimately powering the next generation of technology companies.

Getin.AI

Getin.AI is a platform that focuses on AI jobs, career paths, and company profiles in the fields of artificial intelligence, machine learning, and data science. Users can explore various job categories, such as Analyst, Consulting, Customer Service & Support, Data Science & Analytics, Engineering, Finance & Accounting, HR & Recruiting, Legal, Compliance and Ethics, Marketing & PR, Product, Sales And Business Development, Senior Management / C-level, Strategy & M&A, and UX, UI & Design. The platform provides a comprehensive list of remote job opportunities and features detailed job listings with information on job titles, companies, locations, job descriptions, and required skills.

Supertype

Supertype is a full-cycle data science consultancy offering a range of services including computer vision, custom BI development, managed data analytics, programmatic report generation, and more. They specialize in providing tailored solutions for data analytics, business intelligence, and data engineering services. Supertype also offers services for developing custom web dashboards, computer vision research and development, PDF generation, managed analytics services, and LLM development. Their expertise extends to implementing data science in various industries such as e-commerce, mobile apps & games, and financial markets. Additionally, Supertype provides bespoke solutions for enterprises, advisory and consulting services, and an incubator platform for data scientists and engineers to work on real-world projects.

integrate.ai

integrate.ai is a platform that enables data and analytics providers to collaborate easily with enterprise data science teams without moving data. Powered by federated learning technology, the platform allows for efficient proof of concepts, data experimentation, infrastructure agnostic evaluations, collaborative data evaluations, and data governance controls. It supports various data science jobs such as match rate analysis, exploratory data analysis, correlation analysis, model performance analysis, feature importance & data influence, and model validation. The platform integrates with popular data science tools like Azure, Jupyter, Databricks, AWS, GCP, Snowflake, Pandas, PyTorch, MLflow, and scikit-learn.
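
The idea of collaborating "without moving data" can be sketched with a much simpler relative of federated learning: federated aggregation of a statistic. In the example below, each data holder computes a local summary and only that summary is shared; the coordinator never sees raw rows. All names (`LocalSummary`, `summarize`, `combine`, `site_a`, `site_b`) are hypothetical and chosen for illustration; this is not integrate.ai's API, and real federated learning exchanges model updates rather than sums and counts.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class LocalSummary:
    """The only thing a data holder shares: a sum and a row count."""
    total: float
    count: int


def summarize(values: List[float]) -> LocalSummary:
    # Runs where the data lives; raw values never leave this function.
    return LocalSummary(total=sum(values), count=len(values))


def combine(summaries: Iterable[LocalSummary]) -> float:
    # Runs at the coordinator, which only ever sees the summaries.
    summaries = list(summaries)
    total = sum(s.total for s in summaries)
    count = sum(s.count for s in summaries)
    return total / count


# Two hypothetical data holders with private datasets.
site_a = [10.0, 20.0, 30.0]
site_b = [40.0, 50.0]

global_mean = combine([summarize(site_a), summarize(site_b)])
print(global_mean)
```

The combined result equals the mean that would be computed over the pooled data, even though the two datasets were never merged.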

ChatViz

ChatViz is an AI-powered data visualization tool that leverages ChatGPT to enhance data visualization capabilities. It offers features such as SQL translator and chart suggestion to streamline the visualization process. By utilizing ChatViz, users can optimize development time, simplify data visualization, and say goodbye to dashboard complexity. The tool provides a new way to visualize data, reducing development time and improving user experience.

GAIA

GAIA is a powerful creation engine designed for the AI Age. It provides users with advanced tools and capabilities to develop AI applications, machine learning models, and data analytics solutions. With a user-friendly interface and robust features, GAIA empowers individuals and organizations to harness the potential of artificial intelligence for various projects and initiatives. Whether you are a data scientist, developer, or AI enthusiast, GAIA offers a comprehensive platform to bring your ideas to life and drive innovation in the rapidly evolving AI landscape.

Sicara

Sicara is a data and AI expert platform that helps clients define and implement data strategies, build data platforms, develop data science products, and automate production processes with computer vision. They offer services to improve data performance, accelerate data use cases, integrate generative AI, and support ESG transformation. Sicara collaborates with technology partners to provide tailor-made solutions for data and AI challenges. The platform also features a blog, job offers, and a team of experts dedicated to enhancing productivity and quality in data projects.

Teraflow.ai

Teraflow.ai is an AI-enablement company that specializes in helping businesses adopt and scale their artificial intelligence models. They offer services in data engineering, ML engineering, AI/UX, and cloud architecture. Teraflow.ai assists clients in fixing data issues, boosting ML model performance, and integrating AI into legacy customer journeys. Their team of experts deploys solutions quickly and efficiently, using modern practices and hyperscaler technology. The company focuses on making AI work by providing fixed pricing solutions, building team capabilities, and utilizing agile-scrum structures for innovation. Teraflow.ai also offers certifications in GCP and AWS, and partners with leading tech companies like HashiCorp, AWS, and Microsoft Azure.

Hopsworks

Hopsworks is an AI platform that offers a comprehensive solution for building, deploying, and monitoring machine learning systems. It provides features such as a Feature Store, real-time ML capabilities, and generative AI solutions. Hopsworks enables users to develop and deploy reliable AI systems, orchestrate and monitor models, and personalize machine learning models with private data. The platform supports batch and real-time ML tasks, with the flexibility to deploy on-premises or in the cloud.

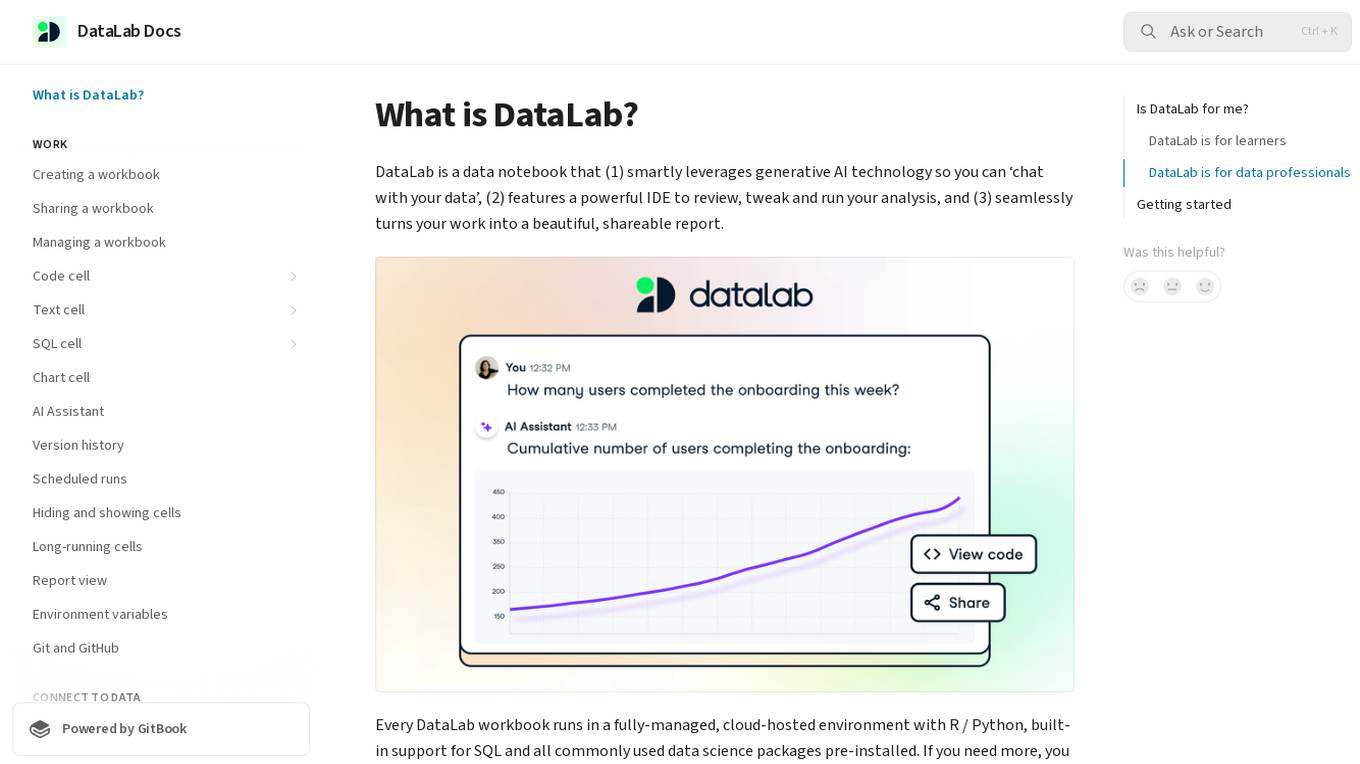

DataLab

DataLab is a data notebook that smartly leverages generative AI technology to enable users to 'chat with their data'. It features a powerful IDE for analysis, and seamlessly transforms work into shareable reports. The application runs in a cloud-hosted environment with support for R/Python, SQL, and various data science packages. Users can connect to external databases, collaborate in real-time, and utilize an AI Assistant for code generation and error correction.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Alluxio

Alluxio is a data orchestration platform designed for the cloud, offering seamless access, management, and running of AI/ML workloads. Positioned between compute and storage, Alluxio provides a unified solution for enterprises to handle data and AI tasks across diverse infrastructure environments. The platform accelerates model training and serving, maximizes infrastructure ROI, and ensures seamless data access. Alluxio addresses challenges such as data silos, low performance, data engineering complexity, and high costs associated with managing different tech stacks and storage systems.

Sherloq

Sherloq is an AI-powered platform designed for SQL users in data-driven teams. It provides a single source of truth for SQL data, offering deep analysis capabilities and time-saving features. With a focus on accessibility and collaboration, Sherloq allows users to get quick answers to specific questions, share insights with saved queries, and manage SQL repositories efficiently. The platform prioritizes data security, being SOC2 Audit certified, and requires no integrations into user data or metadata. Sherloq is trusted by over 1000 SQL users and is recognized for its fast growth and user satisfaction.

PandasAI

PandasAI is an open-source AI tool designed for conversational data analysis. It allows users to ask questions in natural language to their enterprise data and receive real-time data insights. The tool is integrated with various data sources and offers enhanced analytics, actionable insights, detailed reports, and visual data representation. PandasAI aims to democratize data analysis for better decision-making, offering enterprise solutions for stable and scalable internal data analysis. Users can also fine-tune models, ingest universal data, structure data automatically, augment datasets, extract data from websites, and forecast trends using AI.

Magic Regex Generator

Magic Regex Generator is an AI-powered tool that simplifies the process of generating, testing, and editing Regular Expression patterns. Users can describe what they want to match in English, and the AI generates the corresponding regex in the editor for testing and refining. The tool is designed to make working with regex easier and more efficient, allowing users to focus on meaningful tasks without getting bogged down in complex pattern matching.
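
The describe-then-test workflow mentioned above can be sketched with Python's standard `re` module. The plain-English request and the pattern below are hypothetical and hand-written for illustration; they are not output from Magic Regex Generator. The point is the second half of the workflow: checking a candidate pattern against positive and negative samples before trusting it.

```python
import re
from typing import List

# Hypothetical plain-English request: "match dates written as YYYY-MM-DD".
# Hand-written candidate pattern (an assumption, not tool output).
ISO_DATE = re.compile(r"\b(\d{4})-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])\b")


def find_dates(text: str) -> List[str]:
    """Return every YYYY-MM-DD substring found in text."""
    return [m.group(0) for m in ISO_DATE.finditer(text)]


# Positive and negative samples to test the pattern against.
samples = [
    "Loaded partition 2024-03-15 at 02:00",
    "Bad month 2024-13-01 should not match",
    "No date here",
]
for s in samples:
    print(s, "->", find_dates(s))
```

Note that this pattern only checks the shape of the date: it rejects month 13 but would still accept an impossible date such as 2024-02-31, which is the kind of gap that testing against samples helps surface.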

Dot Group Data Advisory

Dot Group is an AI-powered data advisory and solutions platform that specializes in effective data management. They offer services to help businesses maximize the potential of their data estate, turning complex challenges into profitable opportunities using AI technologies. With a focus on data strategy, data engineering, and data transport, Dot Group provides innovative solutions to drive better profitability for their clients.

DQLabs

DQLabs is a modern data quality platform that leverages observability to deliver reliable and accurate data for better business outcomes. It combines the power of Data Quality and Data Observability to enable data producers, consumers, and leaders to achieve decentralized data ownership and turn data into action faster, easier, and more collaboratively. The platform offers features such as data observability, remediation-centric data relevance, decentralized data ownership, enhanced data collaboration, and AI/ML-enabled semantic data discovery.

Dflux

Dflux is a cloud-based Unified Data Science Platform that offers end-to-end data engineering and intelligence with a no-code ML approach. It enables users to integrate data, perform data engineering, create customized models, analyze interactive dashboards, and make data-driven decisions for customer retention and business growth. Dflux bridges the gap between data strategy and data science, providing a powerful SQL editor, intuitive dashboards, an AI-powered text-to-SQL query builder, and AutoML capabilities. It accelerates insights with data science, enhances operational agility, and ensures a well-defined, automated data science life cycle. The platform caters to Data Engineers, Data Scientists, Data Analysts, and Decision Makers, offering all-round data preparation, AutoML models, and built-in data visualizations. Dflux is a secure, reliable, and comprehensive data platform that automates analytics, machine learning, and data processes, making the path from data to insights easy and accessible for enterprises.

PurpleCube.ai

PurpleCube.ai is an AI-powered platform that revolutionizes data engineering by unifying, automating, and activating data processes. The platform offers real-time Gen AI assistance to enhance data team productivity, efficiency, and accuracy. PurpleCube.ai empowers data experts to drive business innovation, collaborate seamlessly, and deliver impactful business value through advanced analytics and data engineering capabilities. The platform is trusted by various enterprises globally for its comprehensive metadata management, governance, and generative AI features.

File Transcribe

File Transcribe is an AI-powered application that offers accurate and effortless transcription of audio and video files. The platform utilizes advanced AI technology, including features like diarization, summaries, speaker identification, and more, to simplify the transcription process. With File Transcribe, users can easily convert spoken words into written text, save time, and work more efficiently. The application provides comprehensive transcription solutions, customizable settings, and expert assistance to ensure a smooth transcription experience for individuals and businesses.

Dot Analytics

Dot Analytics is a growth-focused data analytics agency that offers a wide range of services including data analytics, data engineering, data visualization, data science, big data analytics, AI consulting, and more. They specialize in providing analytics solutions for data-driven business managers seeking accuracy, statistics, and data to drive revenue growth. With over 6 years of experience, they offer tailored analytics solutions to optimize customer acquisition cost, lifetime value, average order value, and conversions. Dot Analytics partners with clients from various industries to provide transparent maintenance and optimization services.

Deepnote

Deepnote is an AI-powered analytics and data science notebook platform designed for teams. It allows users to turn notebooks into powerful data apps and dashboards, combining Python, SQL, and R, or working without writing any code at all. With Deepnote, users can query various data sources, generate code, explain code, and create interactive visualizations effortlessly. The platform offers features like collaborative workspaces, scheduling notebooks, deploying APIs, and integrating with popular data warehouses and databases. Deepnote prioritizes security and compliance, providing users with control over data access and encryption. It is loved by a community of data professionals and widely used in universities and by data analysts and scientists.

SD Times

SD Times is a comprehensive platform for software development news, covering a wide range of topics such as AI, DevOps, Observability, CI/CD, Cloud Native, Data, Test Automation, Mobile, API, Performance, Security, DevSecOps, Enterprise Security, Supply Chain Security, Teams & Culture, Dev Manager, Agile, Value Stream, Productivity, and more. It provides news articles, webinars, podcasts, and white papers to keep developers informed about the latest trends and technologies in the software development industry.

Walter Shields Data Academy

Walter Shields Data Academy is an AI-powered platform offering premium training in SQL, Python, and Excel. With over 200,000 learners, it provides curated courses from bestselling books and LinkedIn Learning. The academy aims to revolutionize data expertise and empower individuals to excel in data analysis and AI technologies.

Strong Analytics

Strong Analytics is a data science consulting and machine learning engineering company that specializes in building bespoke data science, machine learning, and artificial intelligence solutions for various industries. They offer end-to-end services to design, engineer, and deploy custom AI products and solutions, leveraging a team of full-stack data scientists and engineers with cross-industry experience. Strong Analytics is known for its expertise in accelerating innovation, deploying state-of-the-art techniques, and empowering enterprises to unlock the transformative value of AI.

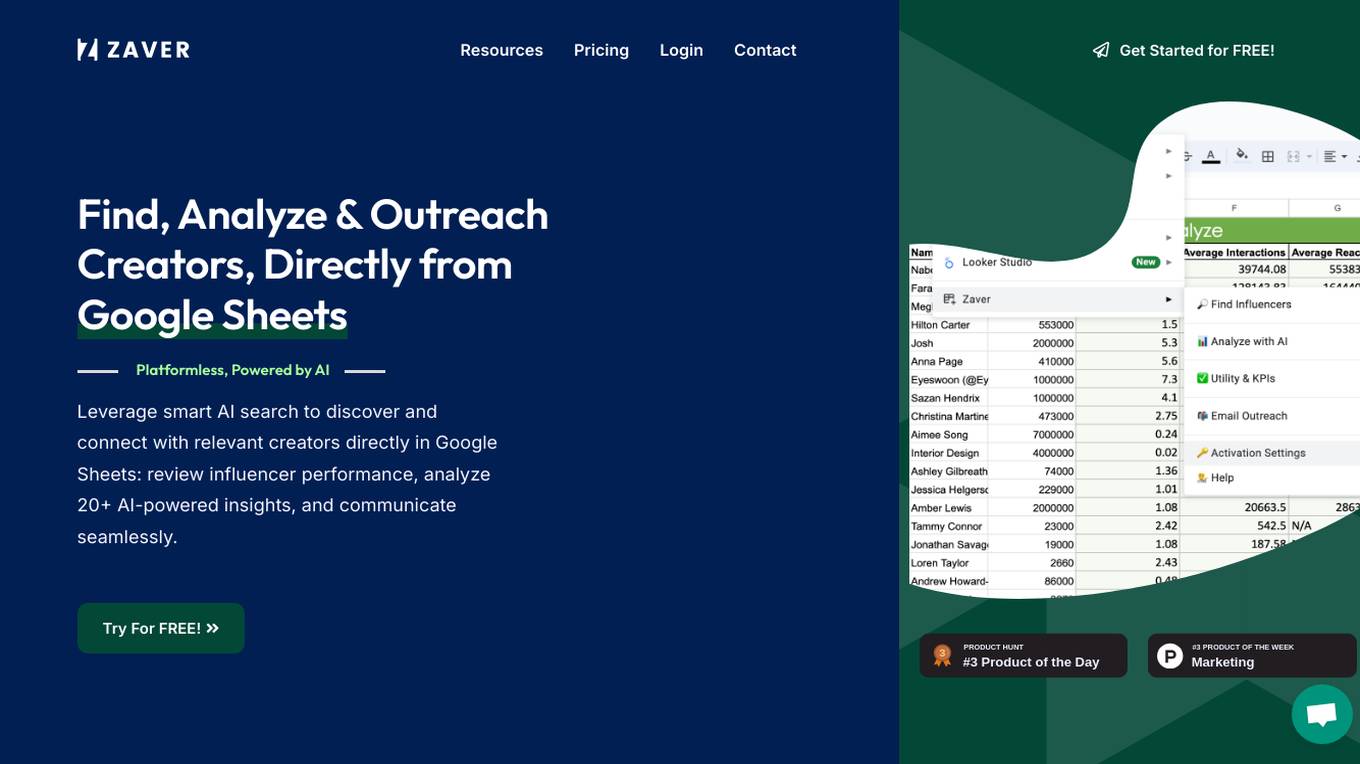

Zaver

Zaver is an AI-powered tool designed to help users find, analyze, and reach out to creators directly from Google Sheets. It leverages smart AI search to discover relevant influencers, provides 20+ AI-powered insights, and facilitates seamless communication. Zaver streamlines influencer marketing campaigns by offering effortless management of influencer data, access to performance metrics, AI-based insights, and email outreach capabilities all within Google Sheets. The tool aims to save time and money and to enhance team collaboration in influencer marketing efforts.

ThirdEye Data

ThirdEye Data is a data and AI services & solutions provider that enables enterprises to improve operational efficiencies, increase production accuracies, and make informed business decisions by leveraging the latest Data & AI technologies. They offer services in data engineering, data science, generative AI, computer vision, NLP, and more. ThirdEye Data develops bespoke AI applications using the latest data science technologies to address real-world industry challenges and assists enterprises in leveraging generative AI models to develop custom applications. They also provide AI consulting services to explore potential opportunities for AI implementation. The company has a strong focus on customer success and has received positive reviews and awards for their expertise in AI, ML, and big data solutions.

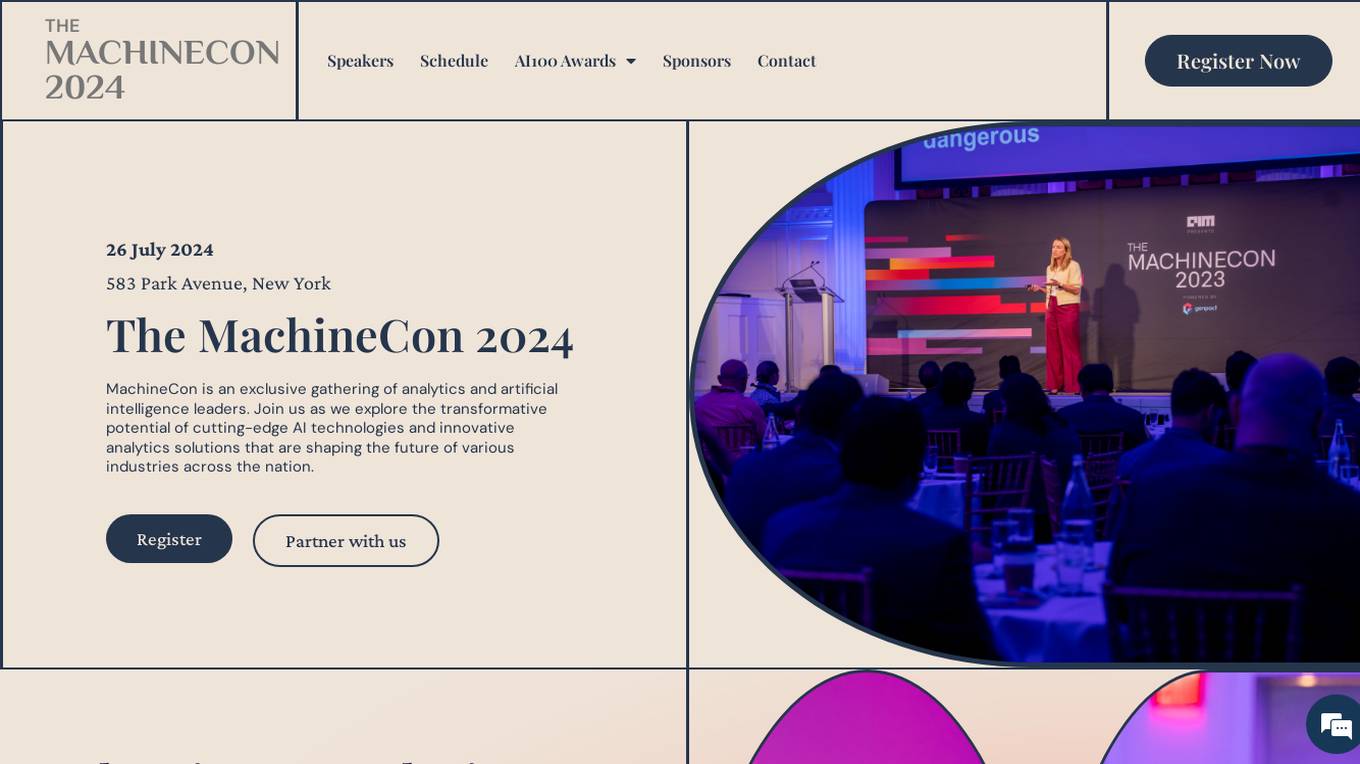

MachineCon 2024

MachineCon 2024 is an exclusive gathering of analytics and artificial intelligence leaders, organized by AIM Research. The conference focuses on exploring cutting-edge AI technologies and innovative analytics solutions that are shaping the future of various industries. It provides a platform for top analytics leaders to learn, network, and do business, emphasizing the transformative potential of data and AI in driving competitive advantage and business transformation.

Space-O Technologies

Space-O Technologies is a top-rated Artificial Intelligence Development Company with 14+ years of expertise in AI software development, consulting services, and ML development services. They excel in deep learning, NLP, computer vision, and AutoML, serving both startups and enterprises. Using advanced tools like Python, TensorFlow, and PyTorch, they create scalable and secure AI products to optimize efficiency, drive revenue growth, and deliver sustained performance.

Open Data Science

Open Data Science (ODS) is a community website offering a platform for data science enthusiasts to engage in tracks, competitions, hacks, tasks, events, and projects. The website serves as a hub for job opportunities and provides a space for privacy policy, service agreements, and public offers. ODS.AI, established in 2015, focuses on various data science topics such as machine learning, computer vision, natural language processing, and more. The platform hosts online and offline events, conferences, and educational courses to foster learning and networking within the data science community.

Global Nodes

Global Nodes is a global leader in innovative solutions, specializing in Artificial Intelligence, Data Engineering, Cloud Services, Software Development, and Mobile App Development. They integrate advanced AI to accelerate product development and provide custom, secure, and scalable solutions. With a focus on cutting-edge technology and visionary thinking, Global Nodes offers services ranging from ideation and design to precision execution, transforming concepts into market-ready products. Their team has extensive experience in delivering top-notch AI, cloud, and data engineering services, making them a trusted partner for businesses worldwide.

illumex

illumex is a generative semantic fabric platform designed to streamline the process of data and analytics interpretation and rationalization for complex enterprises. It offers augmented analytics creation, suggestive data and analytics utilization monitoring, and automated knowledge documentation to enhance agentic performance for analytics. The platform aims to solve the challenges of traditional tedious data analysis, incongruent data and metrics, and tribal knowledge of data teams.

Lightup

Lightup is a cloud data quality monitoring tool with AI-powered anomaly detection, incident alerts, and data remediation capabilities for modern enterprise data stacks. It specializes in helping large organizations implement successful and sustainable data quality programs quickly and easily. Lightup's pushdown architecture allows for monitoring data content at massive scale without moving or copying data, providing extreme scalability and optimal automation. The tool empowers business users with democratized data quality checks and enables automatic fixing of bad data at enterprise scale.

AgentQL

AgentQL is an AI-powered tool for painless data extraction and web automation. It eliminates the need for fragile XPath or DOM selectors by using semantic selectors and natural language descriptions to find web elements reliably. With controlled output and deterministic behavior, AgentQL allows users to shape data exactly as needed. The tool offers features such as extracting data, filling forms automatically, and streamlining testing processes. It is designed to be user-friendly and efficient for developers and data engineers.

Datacog

Datacog is an AI application that offers a comprehensive solution for efficient data warehouse management, application integration, and machine learning. It enables organizations to leverage the complete capabilities of their data assets through intuitive data organization and model training features. With zero configuration, instant deployment, scalability, and real-time monitoring, Datacog simplifies model training and streamlines decision-making. Join the ranks of industry leaders who have harnessed the power of organized data and automation with Datacog.

Datasparq

Datasparq is a specialist AI & data firm that designs, builds, and runs high-impact AI & data solutions. They help businesses at every stage of their AI journey, from value discovery to managing AI solutions. Datasparq combines data science, data engineering, product thinking, and design to deliver valuable, operational AI solutions quickly. Their focus is on creating AI tools that drive business improvements, efficiency, and effectiveness through data platforms, analytics, and machine learning.

Helicone

Helicone is an open-source platform designed for developers, offering observability solutions for logging, monitoring, and debugging. It provides sub-millisecond latency impact, 100% log coverage, industry-leading query times, and is ready for production-level workloads. Trusted by thousands of companies and developers, Helicone leverages Cloudflare Workers for low latency and high reliability, offering features such as prompt management, uptime of 99.99%, scalability, and reliability. It allows risk-free experimentation, prompt security, and various tools for monitoring, analyzing, and managing requests.

Datuum

Datuum is an AI-powered data onboarding solution that offers seamless integration for businesses. It simplifies the data onboarding process by automating manual tasks, generating code, and ensuring data accuracy with AI-driven validation. Datuum helps businesses achieve faster time to value, reduce costs, improve scalability, and enhance data quality and consistency.

Cambridge English Test AI

The AI-powered Cambridge English Test platform offers exercises for English levels B1, B2, C1, and C2. Users can select exercise types such as Reading and Use of English, including activities like Open Cloze, Multiple Choice, Word Formation, and more. The AI, developed by Shining Apps in partnership with Use of English PRO, provides a unique learning experience by generating exercises from a database of over 5000 official exams. It uses advanced Natural Language Processing (NLP) to understand context, tweak exercises, and offer detailed feedback for effective learning.

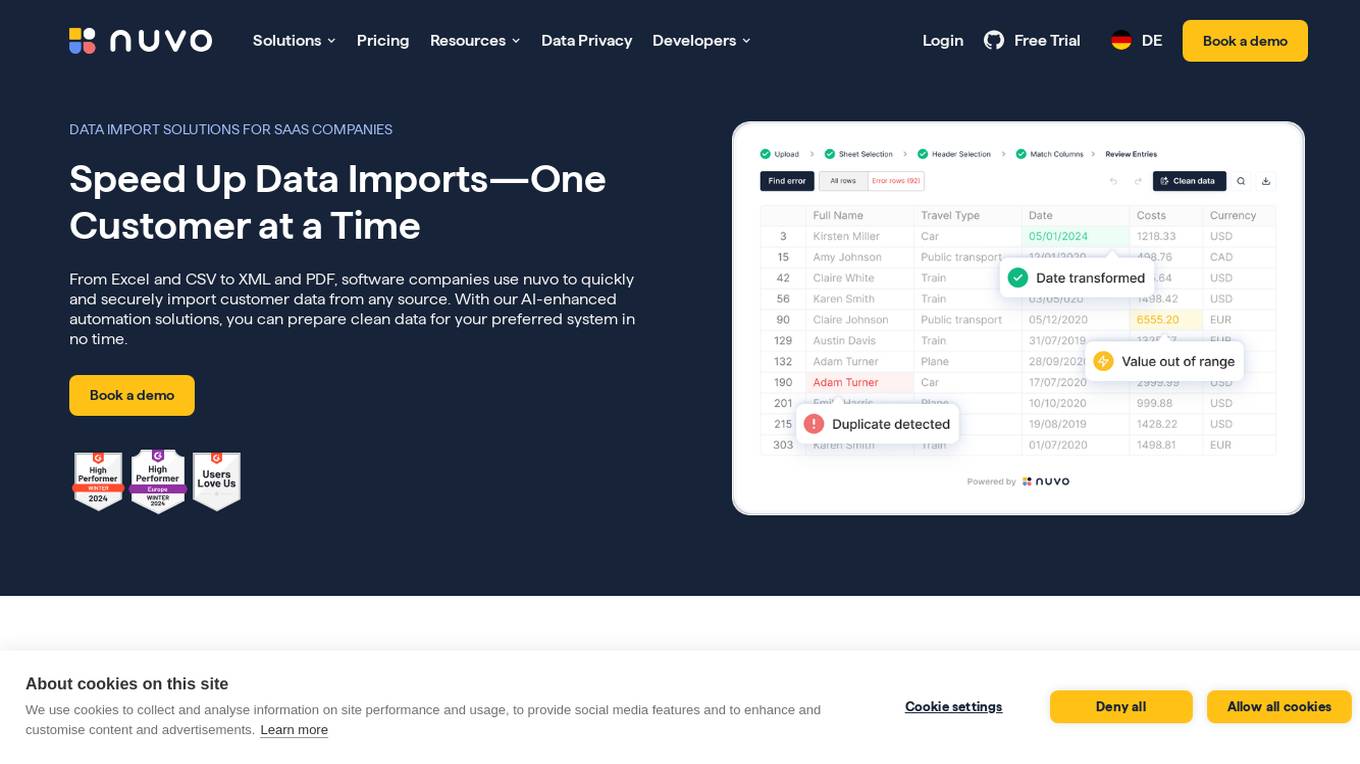

nuvo

nuvo is an AI-powered data import solution that offers fast, secure, and scalable data imports for software companies. It provides tools like nuvo Data Importer SDK and nuvo Data Pipeline to streamline manual and recurring ETL data imports, enabling users to manage data imports independently. With AI-enhanced automation, nuvo helps prepare clean data for preferred systems quickly and efficiently, reducing manual effort and improving data quality. The platform allows users to upload unlimited data in various formats, match imported data to system schemas, clean and validate data, and import clean data into target systems with just a click.

One Data

One Data is an AI-powered data product builder that offers a comprehensive solution for building, managing, and sharing data products. It bridges the gap between IT and business by providing AI-powered workflows, lifecycle management, data quality assurance, and data governance features. The platform enables users to easily create, access, and share data products with automated processes and quality alerts. One Data is trusted by enterprises and aims to streamline data product management and accessibility through Data Mesh or Data Fabric approaches, enhancing efficiency in logistics and supply chains. The application is designed to accelerate business impact with reliable data products and support cost reduction initiatives with advanced analytics and collaboration for innovative business models.

AIONTECH Solutions

AIONTECH Solutions is an AI and data solutions provider that empowers businesses to fully utilize data, fostering innovation and ensuring long-term success. They offer cutting-edge AI and data solutions, advanced data analytics, innovation, and a comprehensive suite to unlock the full potential of data. The company is trusted by clients for providing services in BI & Analytics, Cloud Services, Sustainability Services, Data Science and Analytics, and more.

AIxBlock

AIxBlock is an AI tool that empowers users to unleash their AI initiatives on the Blockchain. The platform offers a comprehensive suite of features for building, deploying, and monitoring AI models, including AI data engine, multimodal-powered data crawler, auto annotation, consensus-driven labeling, MLOps platform, decentralized marketplaces, and more. By harnessing the power of blockchain technology, AIxBlock provides cost-efficient solutions for AI builders, compute suppliers, and freelancers to collaborate and benefit from decentralized supercomputing, P2P transactions, and consensus mechanisms.

Pro5.ai

Pro5.ai is an AI-driven platform that connects businesses with the top 5% remote professionals across various tech and business domains. The platform utilizes AI-powered automation to source, vet, and match professionals, ensuring unbiased and efficient hiring processes. Pro5.ai offers a diverse pool of talents, ranging from backend developers to UX designers, and provides a streamlined approach to hiring full-time, long-term talent for remote collaboration.

Keebo

Keebo is an AI tool designed for Snowflake optimization, offering automated query, cost, and tuning optimization. It is described as the only fully automated Snowflake optimizer, dynamically adjusting to save customers 25% or more. Keebo's patented technology, based on cutting-edge research, optimizes warehouse size, clustering, and memory without impacting performance. It learns and adjusts to workload changes in real time, setting up in just 30 minutes and delivering savings within 24 hours. The tool uses telemetry metadata for optimizations, providing full visibility and adjustability for complex scenarios and schedules.

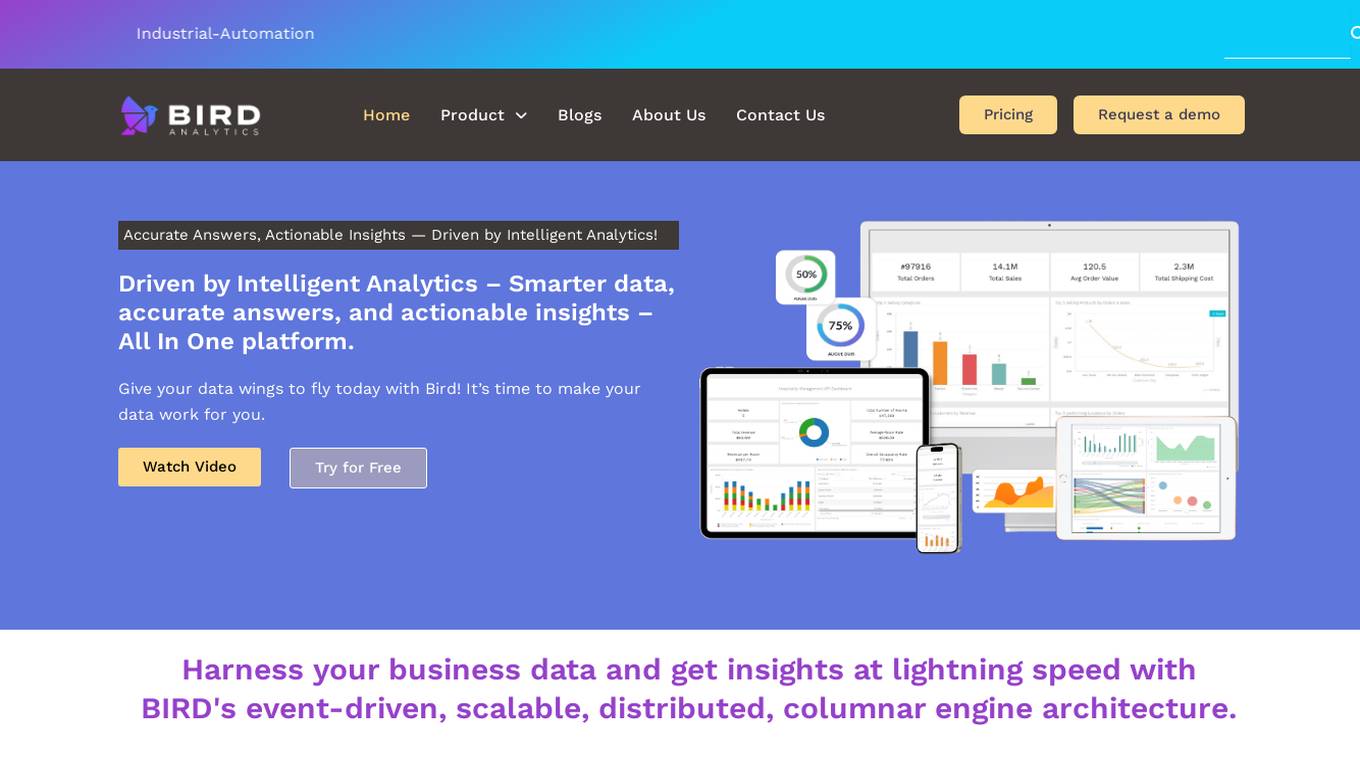

Bird Analytics

Bird Analytics is an AI-powered data analytics platform that offers a comprehensive suite of tools for businesses to manage and analyze their data effectively. With features like AI and Machine Learning, Visual Analysis, Anomaly Monitoring, and more, Bird Analytics provides users with actionable insights and intelligent data-driven solutions. The platform enables users to harness their business data, make better decisions, and predict future trends using advanced analytics capabilities.

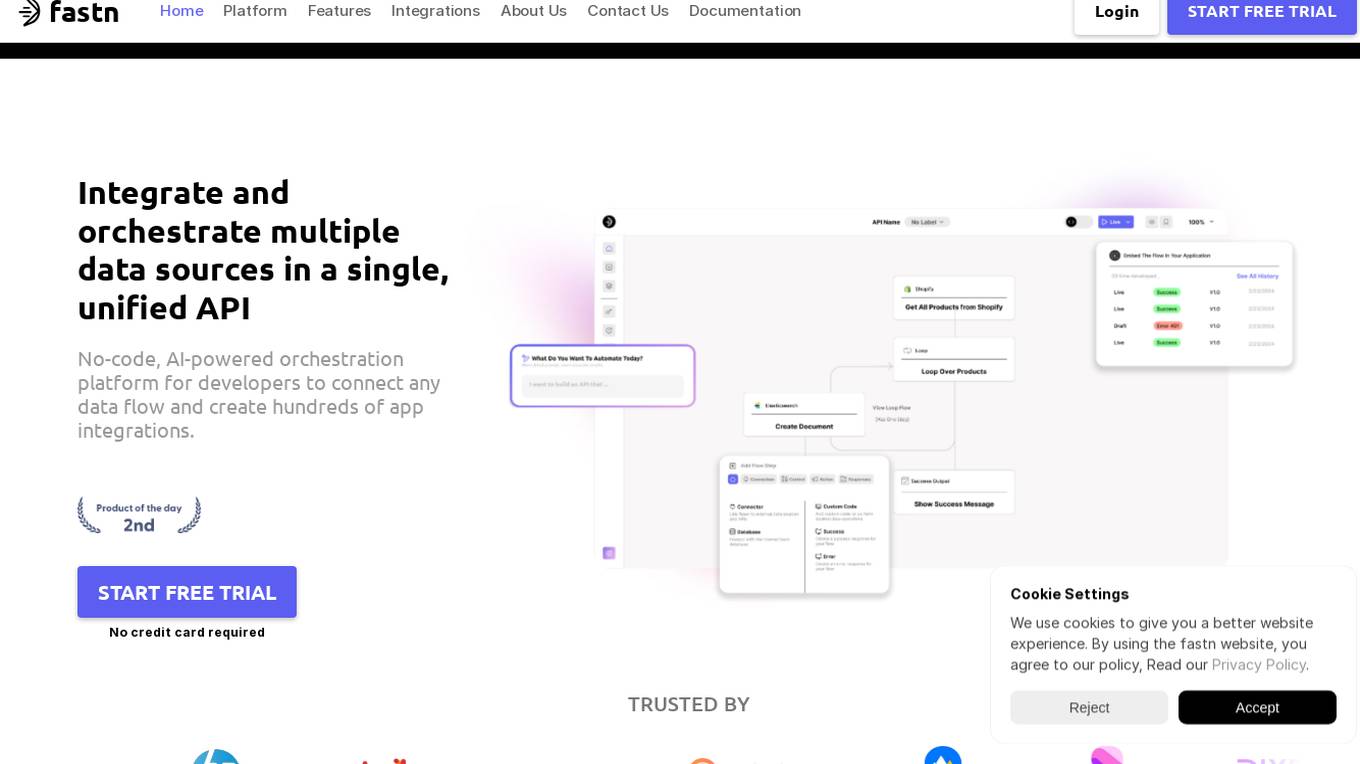

Fastn

Fastn is a no-code, AI-powered orchestration platform for developers to integrate and orchestrate multiple data sources in a single, unified API. It allows users to connect any data flow and create hundreds of app integrations efficiently. Fastn simplifies API integration, ensures API security, and handles data from multiple sources with features like real-time data orchestration, instant API composition, and infrastructure management on autopilot.

DATAFOREST

DATAFOREST is an AI-powered data engineering company that offers a wide range of services including generative AI, data science, web and mobile development, DevOps, cloud solutions, digital transformation, and more. They provide custom data-driven solutions for small and medium-sized businesses, focusing on efficiency improvement, revenue growth, and cost reduction. With over 15 years of experience, DATAFOREST helps businesses automate complex tasks, enhance decision-making, boost productivity, and streamline operations through AI and machine learning technologies.

Valohai

Valohai is a scalable MLOps platform that enables Continuous Integration/Continuous Deployment (CI/CD) for machine learning and pipeline automation on-premises and across various cloud environments. It helps streamline complex machine learning workflows by offering framework-agnostic ML capabilities, automatic versioning with complete lineage of ML experiments, hybrid and multi-cloud support, scalability and performance optimization, streamlined collaboration among data scientists, IT, and business units, and smart orchestration of ML workloads on any infrastructure. Valohai also provides a knowledge repository for storing and sharing the entire model lifecycle, facilitating cross-functional collaboration, and allowing developers to build with total freedom using any libraries or frameworks.

Tecton

Tecton is an AI data platform that helps build smarter AI applications by simplifying feature engineering, generating training data, serving real-time data, and enhancing AI models with context-rich prompts. It automates data pipelines, improves model accuracy, and lowers production costs, enabling faster deployment of AI models. Tecton abstracts away data complexity, provides a developer-friendly experience, and allows users to create features from any source. Trusted by top engineering teams, Tecton streamlines ML delivery processes, improves customer interactions, and automates release processes through CI/CD pipelines.

Medeloop

Medeloop is a revolutionary platform in health research that leverages machine learning and big data analytics to accelerate breakthrough discoveries in disease research. The platform provides a comprehensive data-linking infrastructure to solve the problem of wasted health and medical data for both patients and researchers. Medeloop's multi-modal data linkage platform enables researchers to access and analyze diverse data types using analytical tools and programming languages. By utilizing machine learning and artificial intelligence algorithms, Medeloop drives the discovery and development of new therapies, making it a key player in changing the nature of healthcare for the better.

Oxygen Digital Recruitment

Oxygen Digital Recruitment is a specialized AI and Data Science recruitment platform that focuses on providing talent solutions for cutting-edge markets, including Geospatial & ESG, Energy Trading, Renewable Energy, Artificial Intelligence, and Data Science. The platform offers various services such as Permanent Search, Embedded Specialist Talent, Short-term Staffing, Retained Search, and Fractional Advisory. Oxygen Digital aims to accelerate decarbonization by delivering top talent to drive change in the industry. The platform collaborates with start-ups, scale-ups, and global enterprises to build domain-specific innovation teams, providing access to deep passive networks and the ability to hire blended workforces.

Astera Software

Astera Software offers enterprise-ready data management solutions, including data integration, unstructured data management, data warehousing, and EDI Connect. The platform provides automated data processing, data governance, and AI capabilities to transform data into powerful insights, enabling smarter decisions and innovation. Astera simplifies data management with features like data pipeline builder, data warehouse automation, and EDI transaction optimization. Trusted by leading enterprises worldwide, Astera boosts operational efficiency, accelerates time to market, ensures data accuracy, and reduces operational costs through AI-powered data management.

Vizio AI

Vizio AI is an advanced data analytics and automation services provider that empowers businesses with real-time data and AI insights. They offer services such as data app development, automated reporting, RPA bot development, dashboard development, and generative AI. Vizio AI collaborates with clients to connect and visualize data from various sources, automate tasks, and make AI-powered decisions with ease. Their expert data engineers and analysts work on data app development, dashboard creation, and RPA bot development to streamline business operations and enhance decision-making processes.

Talynce

Talynce is an AI-powered technical interview platform that revolutionizes the recruitment process by automating candidate screening through live coding interviews and technical Q&A sessions. It helps companies assess coding skills and theoretical knowledge efficiently, empowering them to identify top technical talent faster.

Domo

Domo is an AI and Data Products Platform that empowers users to connect and prepare data from any source, expand data access for exploration, and build powerful data products to accelerate business-critical insights with AI assistance at every step. It offers features such as Data Integration, Business Intelligence, Workflows and Intelligent Automation, and AI agents for various use cases.

Wizeline

Wizeline is an AI application that offers practical AI solutions for various industries such as media & entertainment, finance, healthcare, and retail. The application provides AI marketing, AI broadcast, and AI core services to help businesses boost revenue, enhance operational agility, and drive growth through AI-powered solutions. Wizeline excels in consultative thinking, AI innovation, and scaling operations with AI. The application is known for its deep industry expertise, real-world solutioning, and partnership with global tech leaders.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

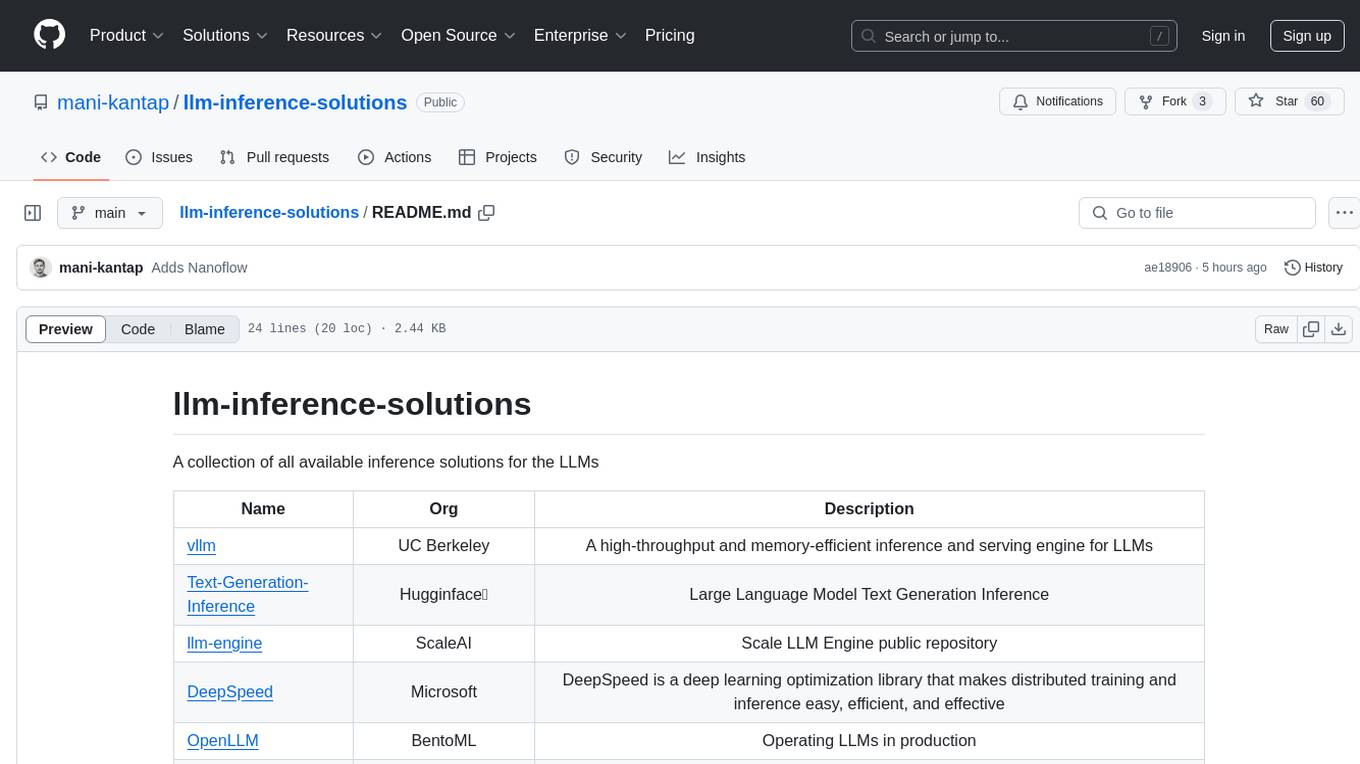

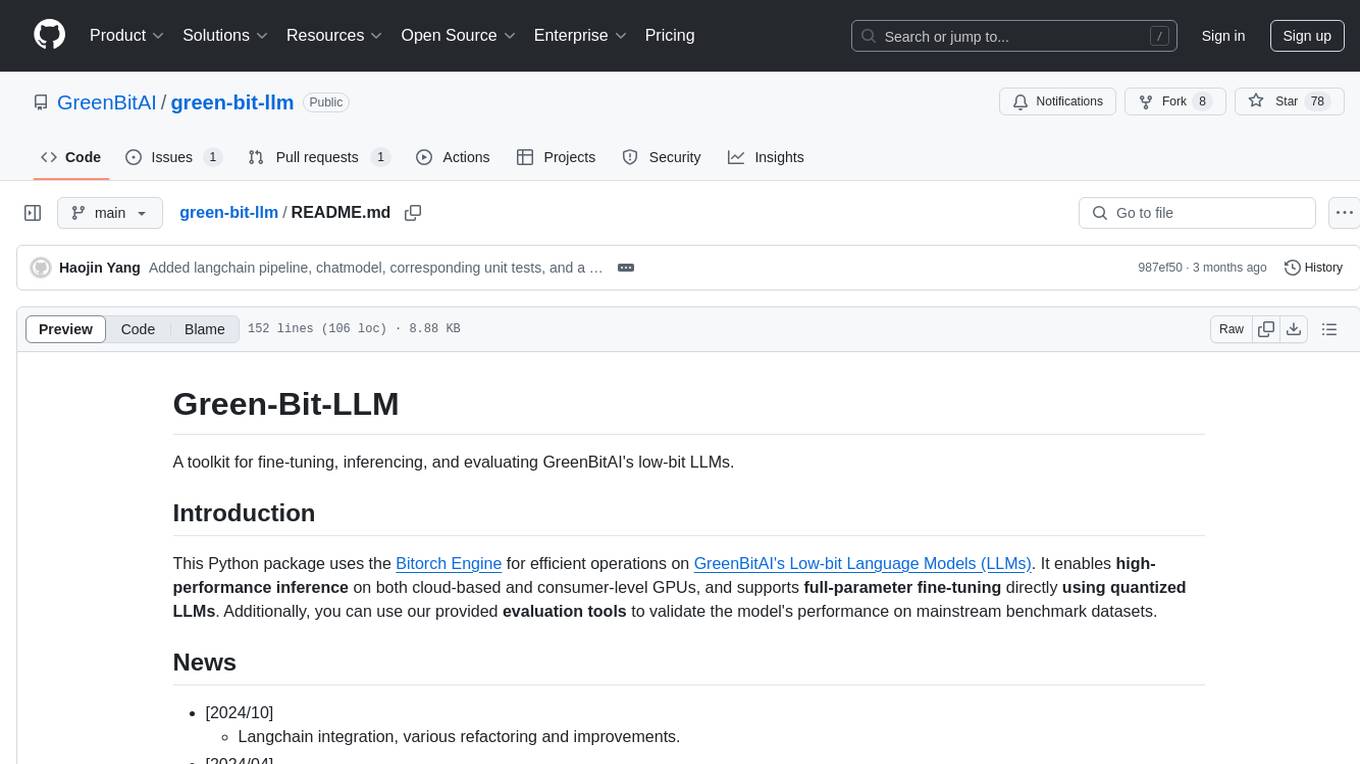

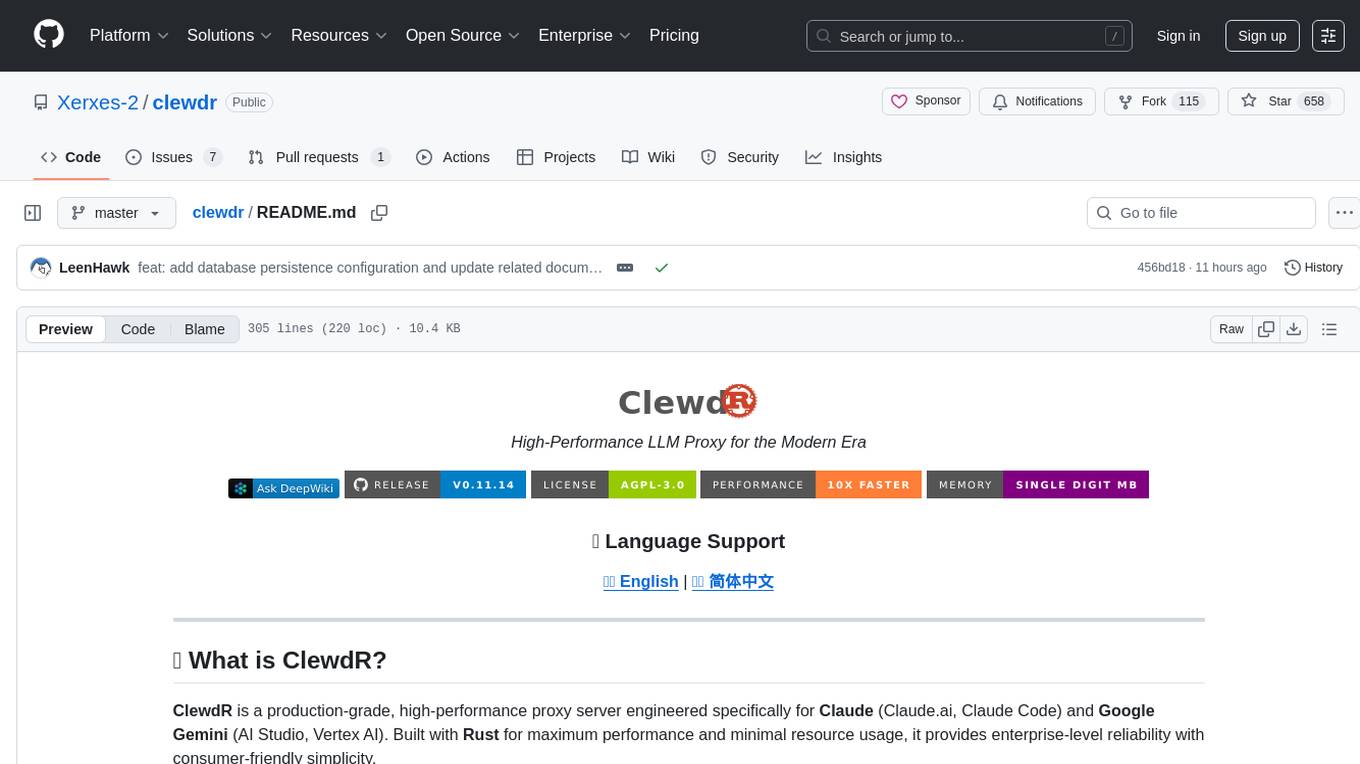

286 - Open Source Tools

db2rest

DB2Rest is a modern low-code REST DATA API platform that simplifies the development of intelligent applications. It seamlessly integrates existing and new databases with language models (LMs/LLMs) and vector stores, enabling the rapid delivery of context-aware, reasoning applications without vendor lock-in.

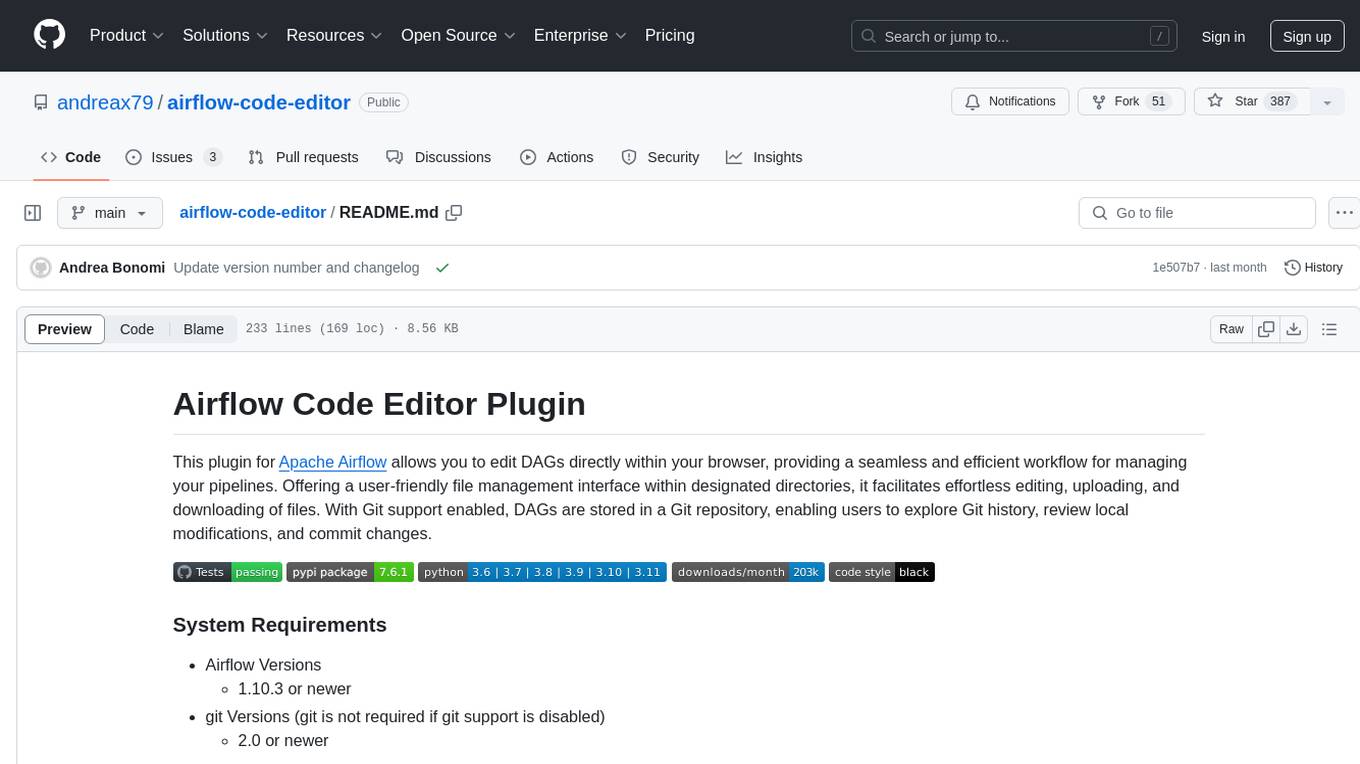

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

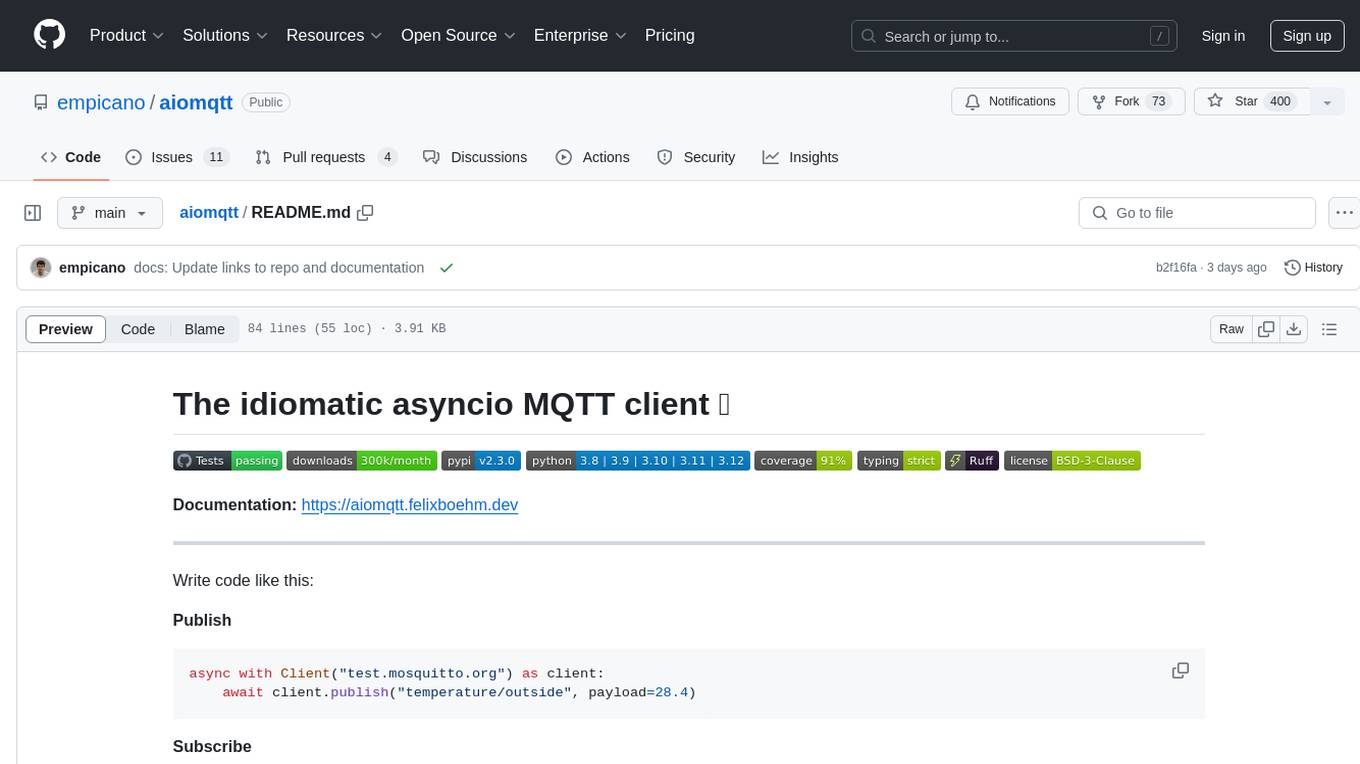

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

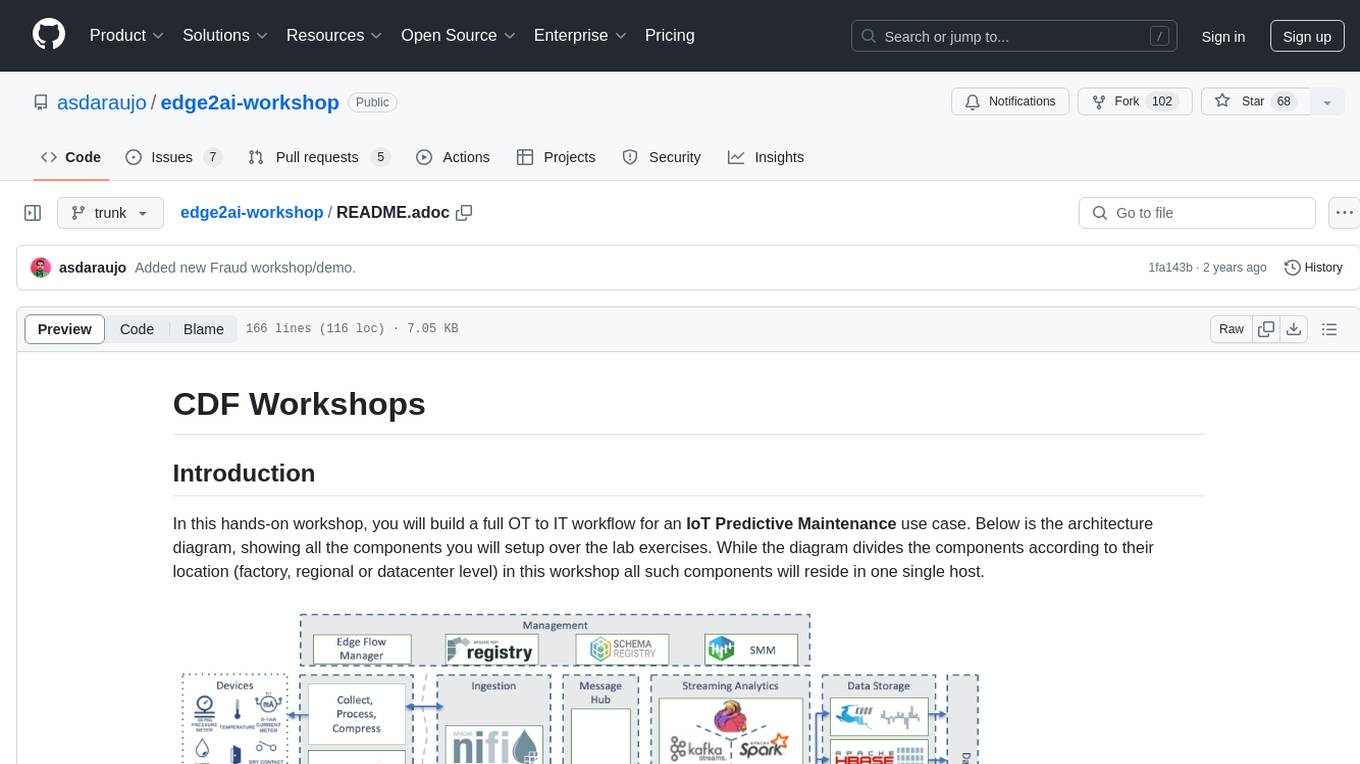

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

* The `dags` directory in this repository contains some custom DAG definitions
* Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl
* The Data SRE team maintains a WTMO Developer Guide (behind SSO)

airflow

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative. Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.
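The idea of executing tasks in dependency order can be illustrated without Airflow itself. The following is a minimal sketch that uses only Python's standard-library `graphlib`; the task names and the `run_in_dependency_order` helper are hypothetical, and this is not Airflow's API or its scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL workflow: each task maps to the set of tasks it depends on.
WORKFLOW = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def run_in_dependency_order(workflow):
    """Return task names in an order that respects every dependency."""
    executed = []
    # static_order() raises graphlib.CycleError if the graph is not acyclic.
    for task in TopologicalSorter(workflow).static_order():
        # A real scheduler would dispatch the task to a worker here.
        executed.append(task)
    return executed


if __name__ == "__main__":
    print(run_in_dependency_order(WORKFLOW))
```

In this sketch `extract` always runs first and `load` always runs last; the relative order of `transform` and `validate` is not fixed, since neither depends on the other.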

airbyte-platform

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's low-code Connector Development Kit (CDK). Airbyte is used by data engineers and analysts at companies of all sizes to move data for a variety of purposes, including data warehousing, data analysis, and machine learning.

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.
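Point-in-time correctness means that a feature attached to a training row is computed only from events observed at or before that row's timestamp. The sketch below illustrates the idea in plain Python under stated assumptions: the `PURCHASES` event log and the `purchase_count_as_of` and `backfill` helpers are hypothetical and are not part of Chronon's API.

```python
from bisect import bisect_right

# Hypothetical event log: sorted purchase timestamps per user.
PURCHASES = {
    "alice": [10, 20, 35],
    "bob": [5, 50],
}


def purchase_count_as_of(user, ts):
    """Count purchases by `user` with timestamp <= ts, so no future data leaks in."""
    events = PURCHASES.get(user, [])
    # bisect_right returns how many sorted timestamps are <= ts.
    return bisect_right(events, ts)


def backfill(training_rows):
    """Attach the point-in-time feature to each (user, label_timestamp) row."""
    return [(user, ts, purchase_count_as_of(user, ts)) for user, ts in training_rows]


if __name__ == "__main__":
    for row in backfill([("alice", 25), ("alice", 9), ("bob", 50)]):
        print(row)
```

A naive backfill that counted all of a user's purchases would give `alice` a count of 3 on every row, leaking events that had not yet happened at label time.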

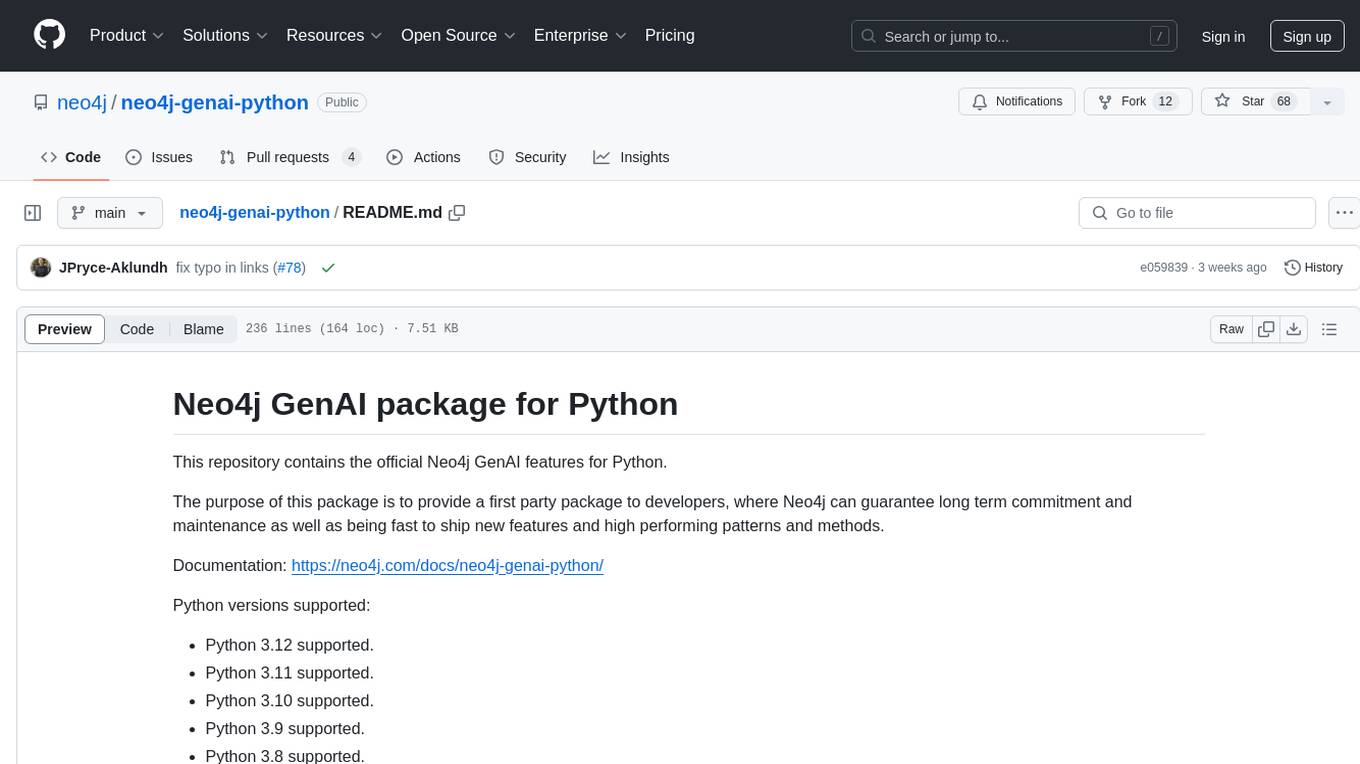

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

unstructured

The `unstructured` library provides open-source components for ingesting and pre-processing images and text documents, such as PDFs, HTML, Word docs, and many more. The use cases of `unstructured` revolve around streamlining and optimizing the data processing workflow for LLMs. `unstructured` modular functions and connectors form a cohesive system that simplifies data ingestion and pre-processing, making it adaptable to different platforms and efficient in transforming unstructured data into structured outputs.

deeplake

Deep Lake is a Database for AI powered by a storage format optimized for deep-learning applications. Deep Lake can be used for:

1. Storing data and vectors while building LLM applications
2. Managing datasets while training deep learning models

Deep Lake simplifies the deployment of enterprise-grade LLM-based products by offering storage for all data types (embeddings, audio, text, videos, images, pdfs, annotations, etc.), querying and vector search, data streaming while training models at scale, data versioning and lineage, and integrations with popular tools such as LangChain, LlamaIndex, Weights & Biases, and many more. Deep Lake works with data of any size, is serverless, and enables you to store all of your data in your own cloud and in one place. Deep Lake is used by Intel, Bayer Radiology, Matterport, ZERO Systems, Red Cross, Yale, & Oxford.

ethereum-etl-airflow

This repository contains Airflow DAGs for extracting, transforming, and loading (ETL) data from the Ethereum blockchain into BigQuery. The DAGs use the Google Cloud Platform (GCP) services, including BigQuery, Cloud Storage, and Cloud Composer, to automate the ETL process. The repository also includes scripts for setting up the GCP environment and running the DAGs locally.

pathway

Pathway is a Python data processing framework for analytics and AI pipelines over data streams. It's the ideal solution for real-time processing use cases like streaming ETL or RAG pipelines for unstructured data. Pathway comes with an **easy-to-use Python API**, allowing you to seamlessly integrate your favorite Python ML libraries. Pathway code is versatile and robust: **you can use it in both development and production environments, handling both batch and streaming data effectively**. The same code can be used for local development, CI/CD tests, running batch jobs, handling stream replays, and processing data streams. Pathway is powered by a **scalable Rust engine** based on Differential Dataflow and performs incremental computation. Your Pathway code, despite being written in Python, is run by the Rust engine, enabling multithreading, multiprocessing, and distributed computations. The entire pipeline is kept in memory and can be easily deployed with **Docker and Kubernetes**. You can install Pathway with pip: `pip install -U pathway`. For any questions, you will find the community and team behind the project on Discord.
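Incremental computation means that a result is updated from each change rather than recomputed from the full input. The sketch below illustrates that idea in plain Python; the `IncrementalWordCount` class is hypothetical, models changes as insertions (`diff=+1`) and retractions (`diff=-1`), and does not use Pathway's API or its Rust engine.

```python
from collections import defaultdict


class IncrementalWordCount:
    """Maintain word counts by applying one change at a time."""

    def __init__(self):
        self.counts = defaultdict(int)

    def update(self, word, diff=1):
        """Apply an insertion (diff=+1) or a retraction (diff=-1) and return the counts."""
        self.counts[word] += diff
        if self.counts[word] == 0:
            # Drop words whose insertions have all been retracted.
            del self.counts[word]
        return dict(self.counts)


if __name__ == "__main__":
    wc = IncrementalWordCount()
    for word, diff in [("a", 1), ("b", 1), ("a", 1), ("b", -1)]:
        print(wc.update(word, diff))
```

Each call touches only the affected key, so the cost of an update does not grow with the amount of data already processed.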

milvus

Milvus is an open-source vector database built to power embedding similarity search and AI applications. Milvus makes unstructured data search more accessible, and provides a consistent user experience regardless of the deployment environment. Milvus 2.0 is a cloud-native vector database with storage and computation separated by design. All components in this refactored version of Milvus are stateless to enhance elasticity and flexibility. For more architecture details, see Milvus Architecture Overview. Milvus was released under the open-source Apache License 2.0 in October 2019. It is currently a graduated project under the LF AI & Data Foundation.
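Embedding similarity search ranks stored vectors by how close they are to a query vector. The sketch below shows a brute-force version in plain Python using cosine similarity; the `EMBEDDINGS` data and the `cosine_similarity` and `top_k` helpers are hypothetical and do not use Milvus's API. Vector databases generally rely on approximate indexes rather than a full scan like this one.

```python
import math

# Hypothetical 3-dimensional embeddings keyed by document id.
EMBEDDINGS = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.9, 0.1, 0.0],
    "doc_c": [0.0, 1.0, 0.0],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def top_k(query, k=2):
    """Return the ids of the k stored vectors most similar to `query`."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in EMBEDDINGS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]


if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0]))
```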

airbyte-connectors

This repository contains the Airbyte connectors used in the Faros and Faros Community Edition platforms, as well as the Airbyte Connector Development Kit (CDK) for JavaScript/TypeScript.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

indexify

Indexify is an open-source engine for building fast data pipelines for unstructured data (video, audio, images, and documents) using reusable extractors for embedding, transformation, and feature extraction. LLM applications can query the transformed, LLM-friendly content through semantic search and SQL queries. Indexify keeps vector databases and structured databases (PostgreSQL) updated by automatically invoking the pipelines as new data is ingested into the system from external data sources.

**Why use Indexify**

* Makes unstructured data **queryable** with **SQL** and **semantic search**.
* **Real-time** extraction engine keeps indexes **automatically** updated as new data is ingested.
* Create an **Extraction Graph** to describe **data transformation** and the extraction of **embeddings** and **structured data**.
* **Incremental extraction** and **selective deletion** when content is deleted or updated.
* The **Extractor SDK** allows adding new extraction capabilities, and many extractors are readily available for **PDF**, **image**, and **video** indexing and extraction.
* Works with **any LLM framework**, including **Langchain**, **DSPy**, etc.
* Runs on your laptop during **prototyping** and also scales to **1000s of machines** on the cloud.
* Works with many **blob stores**, **vector stores**, and **structured databases**.
* The **automation** to deploy to Kubernetes in production is also **open source**.

lance

Lance is a modern columnar data format optimized for ML workflows and datasets. It offers high-performance random access, vector search, zero-copy automatic versioning, and ecosystem integrations with Apache Arrow, Pandas, Polars, and DuckDB. Lance is designed to address the challenges of the ML development cycle, providing a unified data format for collection, exploration, analytics, feature engineering, training, evaluation, deployment, and monitoring. It aims to reduce data silos and streamline the ML development process.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in TypeScript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow, thanks to contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and pieces are versioned and published directly to npmjs.com upon contribution. If you cannot find a specific piece on the pieces roadmap, you can submit a request through the project's Request Piece link. Alternatively, if you are a developer, you can build your own piece using the TypeScript framework; for guidance, refer to the Contributor's Guide.

raft

RAFT (Reusable Accelerated Functions and Tools) is a C++ header-only template library with an optional shared library that contains fundamental widely-used algorithms and primitives for machine learning and information retrieval. The algorithms are CUDA-accelerated and form building blocks for more easily writing high performance applications.

DeepBI

DeepBI is an AI-native data analysis platform that leverages the power of large language models to explore, query, visualize, and share data from any data source. Users can use DeepBI to gain data insight and make data-driven decisions.

instill-core

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

argilla

Argilla is a collaboration platform for AI engineers and domain experts who require high-quality outputs, full data ownership, and overall efficiency. It helps users improve AI output quality through data quality, take control of their data and models, and improve efficiency by quickly iterating on the right data and models. Argilla is an open-source, community-driven project that provides tools for achieving and maintaining high-quality data standards, with a focus on NLP and LLMs. It is used by AI teams from organizations such as the Red Cross, Loris.ai, and Prolific to improve the quality and efficiency of AI projects.

oio-sds

OpenIO SDS is a software solution for object storage, targeting very large-scale unstructured data volumes.

n8n-docs

n8n is an extendable workflow automation tool that enables you to connect anything to everything. It is open-source and can be self-hosted or used as a service. n8n provides a visual interface for creating workflows, which can be used to automate tasks such as data integration, data transformation, and data analysis. n8n also includes a library of pre-built nodes that can be used to connect to a variety of applications and services. This makes it easy to create complex workflows without having to write any code.

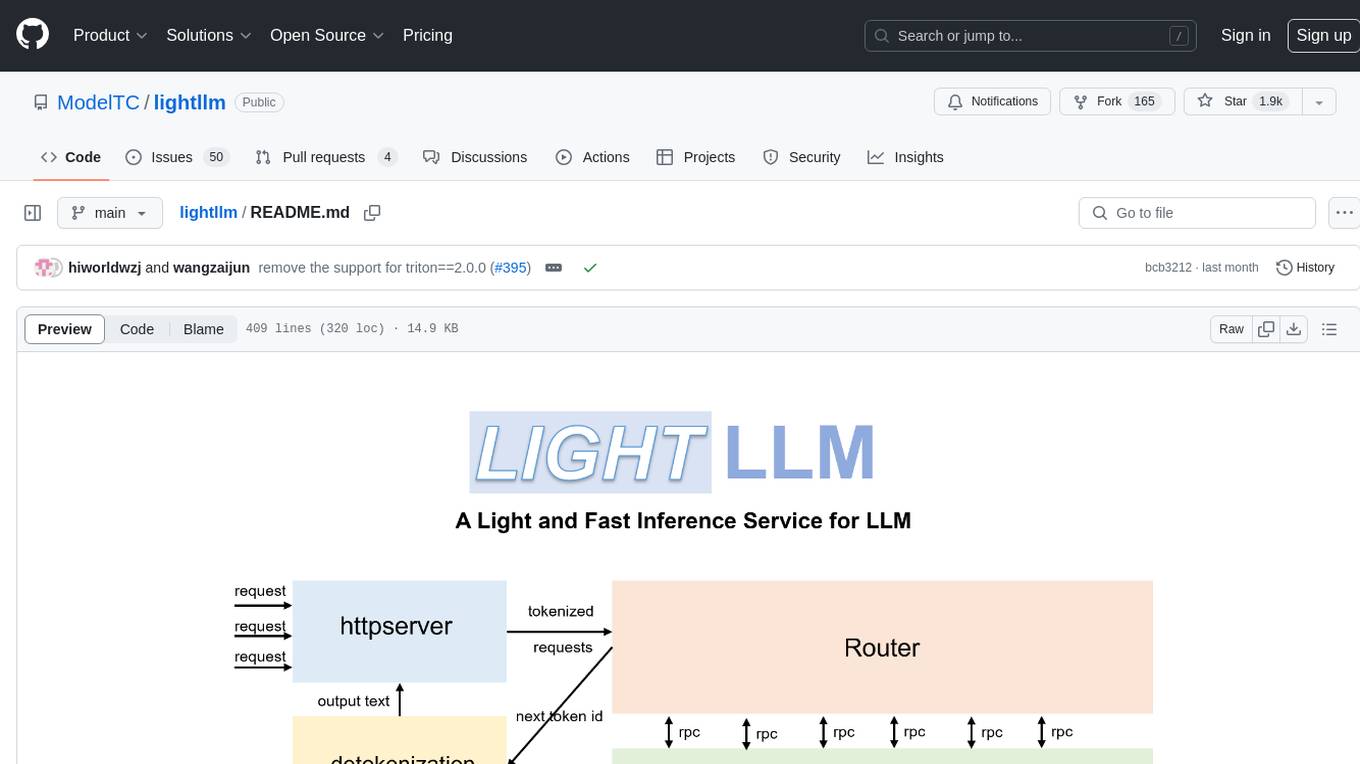

WrenAI

WrenAI is a data assistant tool that helps users get results and insights faster by asking questions in natural language, without writing SQL. It leverages Large Language Models (LLMs) with Retrieval-Augmented Generation (RAG) to improve comprehension of internal data. Key benefits include fast onboarding, secure design, and open-source availability. WrenAI consists of three core services: Wren UI (the user interface), Wren AI Service (processes queries using a vector database), and Wren Engine (the platform backbone). It is currently in alpha, with new releases planned biweekly.

opendataeditor

The Open Data Editor (ODE) is a no-code application to explore, validate and publish data in a simple way. It is an open source project powered by the Frictionless Framework. The ODE is currently available for download and testing in beta.

terraform-provider-aiven

The Terraform provider for Aiven.io, an open source data platform as a service. See the official documentation to learn about all the possible services and resources.

vulcan-sql

VulcanSQL is an Analytical Data API Framework for AI agents and data apps. It aims to help data professionals deliver RESTful APIs from databases, data warehouses, or data lakes more easily and securely by turning SQL into APIs.

airflow-chart

This Helm chart bootstraps an Airflow deployment on a Kubernetes cluster using the Helm package manager. The version of this chart does not correlate to any other component. Users should not expect feature parity between the OSS Airflow chart and the Astronomer airflow-chart for identical version numbers.

To install this Helm chart remotely (using Helm 3), run `kubectl create namespace airflow`, then `helm repo add astronomer https://helm.astronomer.io`, then `helm install airflow --namespace airflow astronomer/airflow`.

To install this repository from source, run `kubectl create namespace airflow` followed by `helm install --namespace airflow .`

Prerequisites:

* Kubernetes 1.12+
* Helm 3.6+
* PV provisioner support in the underlying infrastructure

Installing the Chart: Run `helm install --name my-release .` The command deploys Airflow on the Kubernetes cluster in the default configuration. The Parameters section lists the parameters that can be configured during installation.

Upgrading the Chart: First, look at the updating documentation to identify any backwards-incompatible changes. To upgrade the chart with the release name `my-release`, run `helm upgrade --name my-release .`

Uninstalling the Chart: To uninstall/delete the `my-release` deployment, run `helm delete my-release`. The command removes all the Kubernetes components associated with the chart and deletes the release.

Updating DAGs (bake DAGs into the Docker image): The recommended way to update your DAGs with this chart is to build a new Docker image with the latest code (`docker build -t my-company/airflow:8a0da78 .`), push it to an accessible registry (`docker push my-company/airflow:8a0da78`), then update the Airflow pods with that image: `helm upgrade my-release . --set images.airflow.repository=my-company/airflow --set images.airflow.tag=8a0da78`

Docker Images: The Airflow images that are referenced as the default values in this chart are generated from this repository: https://github.com/astronomer/ap-airflow. Other non-Airflow images used in this chart are generated from this repository: https://github.com/astronomer/ap-vendor.

Parameters: The complete list of parameters supported by the community chart can be found on the Parameters Reference page, and can be set under the `airflow` key in this chart. The following table lists the configurable parameters of the Astronomer chart and their default values.

| Parameter | Description | Default |
| :----------------------------- | :---------------------------------------------------------- | :---------------------------- |
| `ingress.enabled` | Enable Kubernetes Ingress support | `false` |
| `ingress.acme` | Add acme annotations to Ingress object | `false` |
| `ingress.tlsSecretName` | Name of secret that contains a TLS secret | `~` |
| `ingress.webserverAnnotations` | Annotations added to Webserver Ingress object | `{}` |
| `ingress.flowerAnnotations` | Annotations added to Flower Ingress object | `{}` |
| `ingress.baseDomain` | Base domain for VHOSTs | `~` |
| `ingress.auth.enabled` | Enable auth with Astronomer Platform | `true` |
| `extraObjects` | Extra K8s Objects to deploy (these are passed through `tpl`). More about Extra Objects. | `[]` |
| `sccEnabled` | Enable security context constraints required for OpenShift | `false` |
| `authSidecar.enabled` | Enable authSidecar | `false` |
| `authSidecar.repository` | The image for the auth sidecar proxy | `nginxinc/nginx-unprivileged` |
| `authSidecar.tag` | The image tag for the auth sidecar proxy | `stable` |
| `authSidecar.pullPolicy` | The K8s pullPolicy for the auth sidecar proxy image | `IfNotPresent` |
| `authSidecar.port` | The port the auth sidecar exposes | `8084` |
| `gitSyncRelay.enabled` | Enables git sync relay feature. | `False` |
| `gitSyncRelay.repo.url` | Upstream URL to the git repo to clone. | `~` |
| `gitSyncRelay.repo.branch` | Branch of the upstream git repo to checkout. | `main` |
| `gitSyncRelay.repo.depth` | How many revisions to check out. Leave as default `1` except in dev where history is needed. | `1` |
| `gitSyncRelay.repo.wait` | Seconds to wait before pulling from the upstream remote. | `60` |
| `gitSyncRelay.repo.subPath` | Path to the dags directory within the git repository. | `~` |

Specify each parameter using the `--set key=value[,key=value]` argument to `helm install`. For example: `helm install --name my-release --set executor=CeleryExecutor --set enablePodLaunching=false .`

Walkthrough using kind:

Install kind and create a cluster. We recommend testing with Kubernetes 1.25+, for example: `kind create cluster --image kindest/node:v1.25.11`

Confirm it's up: `kubectl cluster-info --context kind-kind`

Add Astronomer's Helm repo: `helm repo add astronomer https://helm.astronomer.io`, then `helm repo update`

Create the namespace and install the chart: `kubectl create namespace airflow`, then `helm install airflow -n airflow astronomer/airflow`

It may take a few minutes. Confirm the pods are up: `kubectl get pods --all-namespaces` and `helm list -n airflow`

Run `kubectl port-forward svc/airflow-webserver 8080:8080 -n airflow` to port-forward the Airflow UI to http://localhost:8080/ and confirm Airflow is working. Log in as _admin_ with password _admin_.

Build a Docker image from your DAGs:

1. Start a project using astro-cli, which will generate a Dockerfile and load your DAGs in. You can test locally before pushing to kind with `astro airflow start`. Run `mkdir my-airflow-project && cd my-airflow-project`, then `astro dev init`
2. Then build the image: `docker build -t my-dags:0.0.1 .`
3. Load the image into kind: `kind load docker-image my-dags:0.0.1`
4. Upgrade the Helm deployment: `helm upgrade airflow -n airflow --set images.airflow.repository=my-dags --set images.airflow.tag=0.0.1 astronomer/airflow`

Extra Objects: This chart can deploy extra Kubernetes objects (assuming the role used by Helm can manage them). For Astronomer Cloud and Enterprise, the role permissions can be found in the Commander role. Example (YAML):

    extraObjects:
      - apiVersion: batch/v1beta1
        kind: CronJob
        metadata:
          name: "{{ .Release.Name }}-somejob"
        spec:
          schedule: "*/10 * * * *"
          concurrencyPolicy: Forbid
          jobTemplate:
            spec:
              template:
                spec:
                  containers:
                    - name: myjob
                      image: ubuntu
                      command:
                        - echo
                      args:
                        - hello
                  restartPolicy: OnFailure

Contributing: Check out our contributing guide!

License: Apache 2.0 with Commons Clause
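
The `--set key=value` pattern used in the upgrade step of the walkthrough can also be assembled programmatically. The following is a minimal sketch, assuming a hypothetical helper named `helm_upgrade_args` that only builds the argument list and does not invoke Helm; the release name, namespace, chart, and values are the ones used in the walkthrough.

```python
def helm_upgrade_args(release, chart, namespace, values):
    """Assemble a `helm upgrade` argument list with one --set flag per value.

    Hypothetical helper for illustration; it builds the command but does not run it.
    """
    args = ["helm", "upgrade", release, "-n", namespace]
    for key, value in values.items():
        args += ["--set", f"{key}={value}"]
    args.append(chart)
    return args


cmd = helm_upgrade_args(
    release="airflow",
    chart="astronomer/airflow",
    namespace="airflow",
    values={
        "images.airflow.repository": "my-dags",
        "images.airflow.tag": "0.0.1",
    },
)
print(" ".join(cmd))
```

Keeping the command as a list of arguments, rather than one string, means it could be passed to `subprocess.run` without shell quoting concerns if you chose to execute it.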

data-juicer

Data-Juicer is a one-stop data processing system to make data higher-quality, juicier, and more digestible for LLMs. It is a systematic and reusable library of 80+ core OPs, 20+ reusable config recipes, and 20+ feature-rich dedicated toolkits, designed to function independently of specific LLM datasets and processing pipelines. Data-Juicer allows detailed data analyses with an automated report generation feature for a deeper understanding of your dataset. Coupled with multi-dimension automatic evaluation capabilities, it supports a timely feedback loop at multiple stages in the LLM development process. Data-Juicer offers tens of pre-built data processing recipes for pre-training, fine-tuning, en, zh, and more scenarios. It provides a speedy data processing pipeline that requires less memory and CPU usage. Data-Juicer is flexible and extensible, accommodating most types of data formats and allowing flexible combinations of OPs. It is designed for simplicity, with comprehensive documentation, easy start guides and demo configs, and intuitive configuration in which OPs can be added to or removed from existing configs.

aistore