instill-core

🔮 Instill Core is a full-stack AI infrastructure tool for data, model and pipeline orchestration, designed to streamline every aspect of building versatile AI-first applications

Stars: 2285

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

README:

A complete unstructured data solution: ETL processing, AI-readiness, open-source LLM hosting, and RAG capabilities in one powerful platform.

Follow the installation steps below or documentation for more details to build versatile AI applications locally.

Instill Core is an end-to-end AI platform for data, pipeline and model orchestration.

🔮 Instill Core simplifies infrastructure hassle and encompasses these core features:

- 💧 Pipeline: Quickly build versatile AI-first APIs or automated workflows.

- ⚙️ Component: Connect essential building blocks to construct powerful pipelines.

- 💾 Artifact: Transform unstructured data (e.g., documents, images, audio, video) into AI-ready formats.

- ⚗️ Model: Deploy and monitor AI models without GPU infrastructure hassles.

- 📖 Parsing PDF Files to Markdown: Cookbook

- 🧱 Generating Structured Outputs from LLMs: Cookbook & Tutorial

- 🕸️ Web scraping & Google Search with Structured Insights

- 🌱 Instance segmentation on microscopic plant stomata images: Cookbook

See Examples for more!

| Operating System | Requirements and Instructions |

|---|---|

| macOS or Linux | Instill Core works natively |

| Windows | • Use Windows Subsystem for Linux (WSL2) • Install latest yq from GitHub Repository• Install latest Docker Desktop and enable WSL2 integration (tutorial) • (Optional) Install cuda-toolkit on WSL2 (NVIDIA tutorial) |

| All Systems | • Docker Engine v25 or later • Docker Compose v2 or later • Install latest stable Docker and Docker Compose |

Execute the following commands to pull pre-built images with all the dependencies to launch:

git clone -b v0.56.0 https://github.com/instill-ai/instill-core.git && cd instill-core

# Launch all services

make runThat's it! Once all the services are up with health status, the UI is ready to go at http://localhost:3000. Please find the default login credentials in the documentation.

To shut down all running services:

make downVisit the Deployment Overview for more details.

- 📺 Console

- ⌨️ CLI

-

📦 SDK:

- Python SDK

- TypeScript SDK

- Stay tuned, as more SDKs are on the way!

Please visit our official documentation for more.

Additional resources:

We welcome contributions from our community! Checkout the methods below:

-

Cookbooks: Help us create helpful pipelines and guides for the community. Visit our Cookbook repository to get started.

-

Issues: Contribute to improvements by raising tickets using templates here or discuss in existing ones you think you can help with.

We are committed to maintaining a respectful and welcoming atmosphere for all contributors. Before contributing, please read:

Get help by joining our Discord community where you can post any questions on our #ask-for-help channel.

See the LICENSE file for licensing information.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for instill-core

Similar Open Source Tools

instill-core

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

midscene

Midscene.js is an AI-powered automation SDK that allows users to control web pages, perform assertions, and extract data in JSON format using natural language. It offers features such as natural language interaction, understanding UI and providing responses in JSON, intuitive assertion based on AI understanding, compatibility with public multimodal LLMs like GPT-4o, visualization tool for easy debugging, and a brand new experience in automation development.

llm-rag-vectordb-python

This repository provides sample applications and tutorials to showcase the power of Amazon Bedrock with Python. It helps Python developers understand how to harness Amazon Bedrock in building generative AI-enabled applications. The resources also demonstrate integration with vector databases using RAG (Retrieval-augmented generation) and services like Amazon Aurora, RDS, and OpenSearch. Additionally, it explores using langchain and streamlit to create effective experimental applications.

suna

Kortix is an open-source platform designed to build, manage, and train AI agents for various tasks. It allows users to create autonomous agents, from general-purpose assistants to specialized automation tools. The platform offers capabilities such as browser automation, file management, web intelligence, system operations, API integrations, and agent building tools. Users can create custom agents tailored to specific domains, workflows, or business needs, enabling tasks like research & analysis, browser automation, file & document management, data processing & analysis, and system administration.

gateway

CentralMind Gateway is an AI-first data gateway that securely connects any data source and automatically generates secure, LLM-optimized APIs. It filters out sensitive data, adds traceability, and optimizes for AI workloads. Suitable for companies deploying AI agents for customer support and analytics.

ClaudeSync

ClaudeSync is a powerful tool designed to seamlessly synchronize local files with Claude.ai projects. It bridges the gap between local development environment and Claude.ai's knowledge base, offering real-time synchronization, CLI for easy management, support for multiple organizations and projects, intelligent file filtering, configurable sync interval, two-way synchronization, and more. It ensures data privacy, open source transparency, and comes with disclaimers for use at own risk. Users can quickly start syncing by installing, logging in, selecting organization and project, and running sync. Advanced features include API, organization, project, file, chat management, configuration, synchronization modes, scheduled sync, providers, custom ignore file, and troubleshooting. Contributions are welcome, and communication channels include GitHub Issues and Discord. Licensed under MIT License.

datahub

DataHub is an open-source data catalog designed for the modern data stack. It provides a platform for managing metadata, enabling users to discover, understand, and collaborate on data assets within their organization. DataHub offers features such as data lineage tracking, data quality monitoring, and integration with various data sources. It is built with contributions from Acryl Data and LinkedIn, aiming to streamline data management processes and enhance data discoverability across different teams and departments.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

wanwu

Wanwu AI Agent Platform is an enterprise-grade one-stop commercially friendly AI agent development platform designed for business scenarios. It provides enterprises with a safe, efficient, and compliant one-stop AI solution. The platform integrates cutting-edge technologies such as large language models and business process automation to build an AI engineering platform covering model full life-cycle management, MCP, web search, AI agent rapid development, enterprise knowledge base construction, and complex workflow orchestration. It supports modular architecture design, flexible functional expansion, and secondary development, reducing the application threshold of AI technology while ensuring security and privacy protection of enterprise data. It accelerates digital transformation, cost reduction, efficiency improvement, and business innovation for enterprises of all sizes.

paperless-ai

Paperless-AI is an automated document analyzer tool designed for Paperless-ngx users. It utilizes the OpenAI API and Ollama (Mistral, llama, phi 3, gemma 2) to automatically scan, analyze, and tag documents. The tool offers features such as automatic document scanning, AI-powered document analysis, automatic title and tag assignment, manual mode for analyzing documents, easy setup through a web interface, document processing dashboard, error handling, and Docker support. Users can configure the tool through a web interface and access a debug interface for monitoring and troubleshooting. Paperless-AI aims to streamline document organization and analysis processes for users with access to Paperless-ngx and AI capabilities.

skypilot

SkyPilot is a framework for running LLMs, AI, and batch jobs on any cloud, offering maximum cost savings, highest GPU availability, and managed execution. SkyPilot abstracts away cloud infra burdens: - Launch jobs & clusters on any cloud - Easy scale-out: queue and run many jobs, automatically managed - Easy access to object stores (S3, GCS, R2) SkyPilot maximizes GPU availability for your jobs: * Provision in all zones/regions/clouds you have access to (the _Sky_), with automatic failover SkyPilot cuts your cloud costs: * Managed Spot: 3-6x cost savings using spot VMs, with auto-recovery from preemptions * Optimizer: 2x cost savings by auto-picking the cheapest VM/zone/region/cloud * Autostop: hands-free cleanup of idle clusters SkyPilot supports your existing GPU, TPU, and CPU workloads, with no code changes.

app

WebDB is a comprehensive and free database Integrated Development Environment (IDE) designed to maximize efficiency in database development and management. It simplifies and enhances database operations with features like DBMS discovery, query editor, time machine, NoSQL structure inferring, modern ERD visualization, and intelligent data generator. Developed with robust web technologies, WebDB is suitable for both novice and experienced database professionals.

synmetrix

Synmetrix is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube.js to consolidate metrics from various sources and distribute them downstream via a SQL API. Use cases include data democratization, business intelligence and reporting, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

note-companion

Note Companion is an AI-powered Obsidian plugin that automatically organizes and formats notes. It provides organizing suggestions, custom format AI prompts, automated workflows, handwritten note digitization, audio transcription, atomic note generation, YouTube summaries, and context-aware AI chat. Key use cases include smart vault management, handwritten notes digitization, and intelligent meeting notes. The tool offers advanced features like custom AI templates and multi-modal support for processing various content types. Users can seamlessly integrate with mobile workflows and utilize iOS shortcuts for sending Apple Notes to Obsidian. Note Companion enhances productivity by streamlining note organization and management tasks with AI assistance.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

For similar tasks

instill-core

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

fastRAG

fastRAG is a research framework designed to build and explore efficient retrieval-augmented generative models. It incorporates state-of-the-art Large Language Models (LLMs) and Information Retrieval to empower researchers and developers with a comprehensive tool-set for advancing retrieval augmented generation. The framework is optimized for Intel hardware, customizable, and includes key features such as optimized RAG pipelines, efficient components, and RAG-efficient components like ColBERT and Fusion-in-Decoder (FiD). fastRAG supports various unique components and backends for running LLMs, making it a versatile tool for research and development in the field of retrieval-augmented generation.

ai-on-openshift

AI on OpenShift is a site providing installation recipes, patterns, and demos for AI/ML tools and applications used in Data Science and Data Engineering projects running on OpenShift. It serves as a comprehensive resource for developers looking to deploy AI solutions on the OpenShift platform.

sematic

Sematic is an open-source ML development platform that allows ML Engineers and Data Scientists to write complex end-to-end pipelines with Python. It can be executed locally, on a cloud VM, or on a Kubernetes cluster. Sematic enables chaining data processing jobs with model training into reproducible pipelines that can be monitored and visualized in a web dashboard. It offers features like easy onboarding, local-to-cloud parity, end-to-end traceability, access to heterogeneous compute resources, and reproducibility.

SuperKnowa

SuperKnowa is a fast framework to build Enterprise RAG (Retriever Augmented Generation) Pipelines at Scale, powered by watsonx. It accelerates Enterprise Generative AI applications to get prod-ready solutions quickly on private data. The framework provides pluggable components for tackling various Generative AI use cases using Large Language Models (LLMs), allowing users to assemble building blocks to address challenges in AI-driven text generation. SuperKnowa is battle-tested from 1M to 200M private knowledge base & scaled to billions of retriever tokens.

ZetaForge

ZetaForge is an open-source AI platform designed for rapid development of advanced AI and AGI pipelines. It allows users to assemble reusable, customizable, and containerized Blocks into highly visual AI Pipelines, enabling rapid experimentation and collaboration. With ZetaForge, users can work with AI technologies in any programming language, easily modify and update AI pipelines, dive into the code whenever needed, utilize community-driven blocks and pipelines, and share their own creations. The platform aims to accelerate the development and deployment of advanced AI solutions through its user-friendly interface and community support.

AdalFlow

AdalFlow is a library designed to help developers build and optimize Large Language Model (LLM) task pipelines. It follows a design pattern similar to PyTorch, offering a light, modular, and robust codebase. Named in honor of Ada Lovelace, AdalFlow aims to inspire more women to enter the AI field. The library is tailored for various GenAI applications like chatbots, translation, summarization, code generation, and autonomous agents, as well as classical NLP tasks such as text classification and named entity recognition. AdalFlow emphasizes modularity, robustness, and readability to support users in customizing and iterating code for their specific use cases.

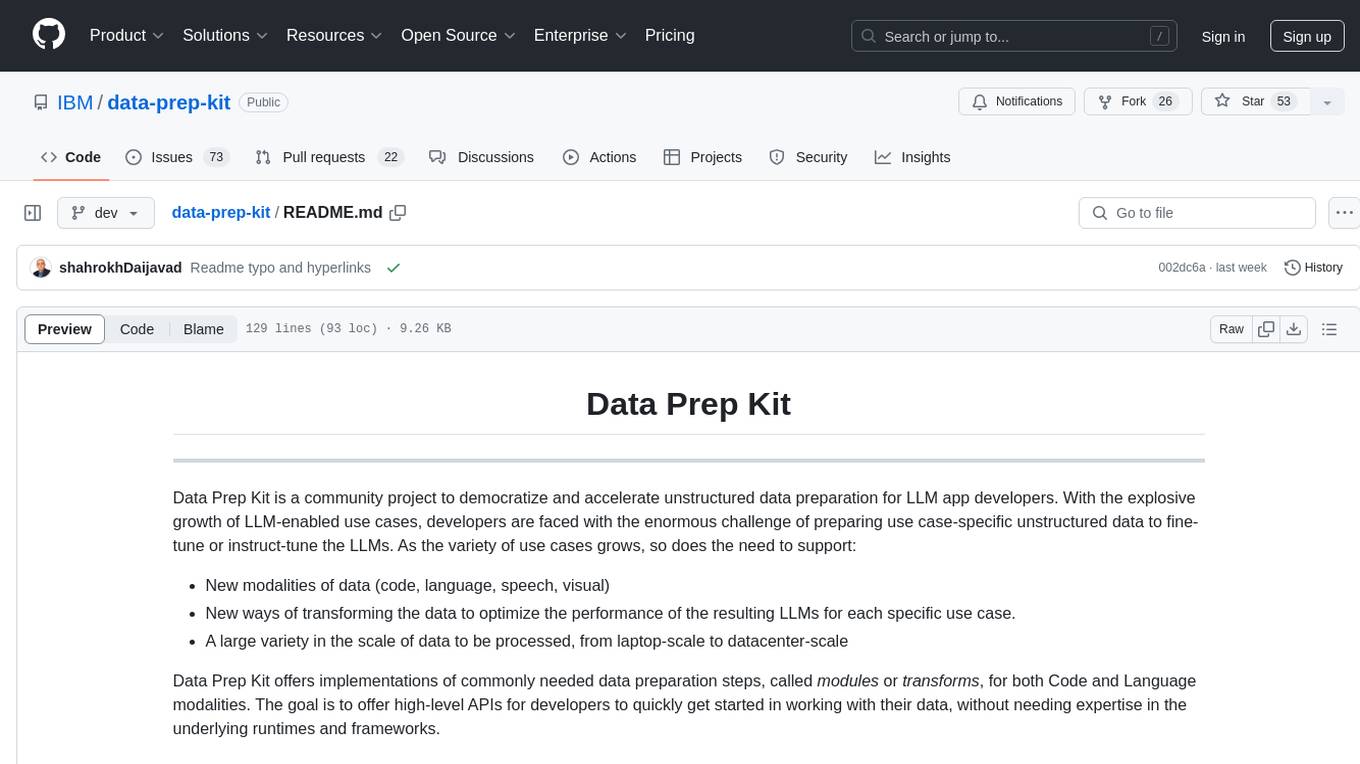

data-prep-kit

Data Prep Kit is a community project aimed at democratizing and speeding up unstructured data preparation for LLM app developers. It provides high-level APIs and modules for transforming data (code, language, speech, visual) to optimize LLM performance across different use cases. The toolkit supports Python, Ray, Spark, and Kubeflow Pipelines runtimes, offering scalability from laptop to datacenter-scale processing. Developers can contribute new custom modules and leverage the data processing library for building data pipelines. Automation features include workflow automation with Kubeflow Pipelines for transform execution.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.