agenta

The open-source LLMOps platform: prompt playground, prompt management, LLM evaluation, and LLM observability all in one place.

Stars: 3831

Agenta is an open-source LLM developer platform for prompt engineering, evaluation, human feedback, and deployment of complex LLM applications. It provides tools for prompt engineering and management, evaluation, human annotation, and deployment, all without imposing any restrictions on your choice of framework, library, or model. Agenta allows developers and product teams to collaborate in building production-grade LLM-powered applications in less time.

README:

Agenta is a platform for building production-grade LLM applications. It helps engineering and product teams create reliable LLM apps faster through integrated prompt management, evaluation, and observability.

Collaborate with Subject Matter Experts (SMEs) on prompt engineering and make sure nothing breaks in production.

- Interactive LLM Playground: Compare prompts side by side against your test cases

- Multi-Model Support: Experiment with 50+ LLM models or bring-your-own models

- Version Control: Version prompts and configurations with branching and environments

- Complex Configurations: Enable SMEs to collaborate on complex configuration schemas beyond simple prompts

Evaluate your LLM applications systematically with both human and automated feedback.

- Flexible Testsets: Create testcases from production data, playground experiments, or upload CSVs

- Pre-built and Custom Evaluators: Use LLM-as-judge, one of our 20+ pre-built evaluators, or your own custom evaluators

- UI and API Access: Run evaluations via UI (for SMEs) or programmatically (for engineers)

- Human Feedback Integration: Collect and incorporate expert annotations

Get visibility into your LLM applications in production.

- Cost & Performance Tracking: Monitor spending, latency, and usage patterns

- LLM Tracing: Debug complex workflows with detailed traces

- Open Standards: OpenTelemetry-native tracing compatible with OpenLLMetry and OpenInference

- Integrations: Comes with pre-built integrations for most models and frameworks

The easiest way to get started is through Agenta Cloud. Free tier available with no credit card required.

- Clone Agenta:

git clone https://github.com/Agenta-AI/agenta && cd agenta

- Copy configuration. Before starting the services, create the environment file from the example:

cp hosting/docker-compose/oss/env.oss.gh.example hosting/docker-compose/oss/.env.oss.gh

- Start Agenta services:

docker compose -f hosting/docker-compose/oss/docker-compose.gh.yml --env-file hosting/docker-compose/oss/.env.oss.gh --profile with-web --profile with-traefik up -d

- Access Agenta at http://localhost.

To deploy on a remote host or use different ports, refer to our self-hosting and remote deployment documentation.

Find help, explore resources, or get involved:

- 📚 Documentation – Full guides and API reference

- 📋 Changelog – Track recent updates

- 💬 Slack Community – Ask questions and get support

We welcome contributions of all kinds — from filing issues and sharing ideas to improving the codebase.

- 🐛 Report bugs – Help us by reporting problems you encounter

- 💡 Share ideas and feedback – Suggest features or vote on ideas

- 🔧 Contribute to the codebase – Read the guide and open a pull request

Consider giving us a star! It helps us grow our community and gets Agenta in front of more developers.

This project follows the all-contributors specification. Contributions of any kind are welcome!

By default, Agenta automatically reports anonymized basic usage statistics. This helps us understand how Agenta is used and track its overall usage and growth. This data does not include any sensitive information. To disable anonymized telemetry, set AGENTA_TELEMETRY_ENABLED to false in your .env file.
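As a minimal sketch of the telemetry opt-out described above, the helper below sets AGENTA_TELEMETRY_ENABLED=false in an env file. The function name `disable_telemetry` is hypothetical and not part of Agenta, and the example path assumes the env file created in the self-hosting steps (hosting/docker-compose/oss/.env.oss.gh); adjust it if your setup uses a different file.

```shell
# Hypothetical helper (not part of Agenta): set AGENTA_TELEMETRY_ENABLED=false
# in the env file passed as the first argument.
disable_telemetry() {
  env_file="$1"
  if grep -q '^AGENTA_TELEMETRY_ENABLED=' "$env_file"; then
    # The variable is already defined: rewrite that line in place.
    # "sed -i.bak" works with both GNU and BSD sed; the backup is removed afterwards.
    sed -i.bak 's/^AGENTA_TELEMETRY_ENABLED=.*/AGENTA_TELEMETRY_ENABLED=false/' "$env_file"
    rm -f "$env_file.bak"
  else
    # The variable is not defined yet: append it.
    printf '%s\n' 'AGENTA_TELEMETRY_ENABLED=false' >> "$env_file"
  fi
}

# Example call (path assumed from the self-hosting steps):
# disable_telemetry hosting/docker-compose/oss/.env.oss.gh
```

The services most likely need to be recreated with the same docker compose command before the new value takes effect.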

Alternative AI tools for agenta

Similar Open Source Tools

matrixone

MatrixOne is the industry's first database to bring Git-style version control to data, combined with MySQL compatibility, AI-native capabilities, and cloud-native architecture. It is an HTAP (Hybrid Transactional/Analytical Processing) database with a hyper-converged HSTAP engine that handles transactional, analytical, full-text search, and vector search workloads in a single unified system, with no data movement and no ETL. Manage your database like code with features like instant snapshots, time travel, branch & merge, instant rollback, and a complete audit trail. MatrixOne offers storage-compute separation, elastic scaling, and Kubernetes-native deployment. It serves as one database for everything, replacing multiple databases and ETL jobs with native OLTP, OLAP, full-text search, and vector search capabilities.

codemod

Codemod platform is a tool that helps developers create, distribute, and run codemods in codebases of any size. The AI-powered, community-led codemods enable automation of framework upgrades, large refactoring, and boilerplate programming with speed and developer experience. It aims to make dream migrations a reality for developers by providing a platform for seamless codemod operations.

bitcart

Bitcart is a platform designed for merchants, users, and developers, providing easy setup and usage. It includes various linked repositories for core daemons, admin panel, ready store, Docker packaging, Python library for coins connection, BitCCL scripting language, documentation, and official site. The platform aims to simplify the process for merchants and developers to interact and transact with cryptocurrencies, offering a comprehensive ecosystem for managing transactions and payments.

poco-agent

Poco Agent is a cloud-based tool that provides a secure sandbox environment for running tasks without affecting the host machine. It offers a modern UI with mobile adaptability, easy configuration through Docker, and extensive capabilities with support for MCP protocol and custom skills. Users can run tasks asynchronously and schedule them, even when the web interface is closed. Additional features include a built-in browser for internet research and GitHub repository integration. Poco Agent aims to be a more secure, visually appealing, and user-friendly alternative to OpenClaw.

NeuroAI_Course

Neuromatch Academy NeuroAI Course Syllabus is a repository that contains the schedule and licensing information for the NeuroAI course. The course is designed to provide participants with a comprehensive understanding of artificial intelligence in neuroscience. It covers various topics related to AI applications in neuroscience, including machine learning, data analysis, and computational modeling. The content is primarily accessed from the ebook provided in the repository, and the course is scheduled for July 15-26, 2024. The repository is shared under a Creative Commons Attribution 4.0 International License and software elements are additionally licensed under the BSD (3-Clause) License. Contributors to the project are acknowledged and welcomed to contribute further.

ClaraVerse

ClaraVerse is a privacy-first AI assistant and agent builder that allows users to chat with AI, create intelligent agents, and turn them into fully functional apps. It operates entirely on open-source models running on the user's device, ensuring data privacy and security. With features like AI assistant, image generation, intelligent agent builder, and image gallery, ClaraVerse offers a versatile platform for AI interaction and app development. Users can install ClaraVerse through Docker, native desktop apps, or the web version, with detailed instructions provided for each option. The tool is designed to empower users with control over their AI stack and leverage community-driven innovations for AI development.

AI-on-the-edge-device

AI-on-the-edge-device is a project that enables users to digitize analog water, gas, power, and other meters using an ESP32 board with a supported camera. It integrates TensorFlow Lite for AI processing, offers a small and affordable device with integrated camera and illumination, provides a web interface for administration and control, and supports Home Assistant, InfluxDB, MQTT, and a REST API. The device captures meter images, extracts Regions of Interest (ROIs), runs them through AI for digitization, and allows users to send data to MQTT or InfluxDB, or access it via the REST API. The project also includes 3D-printable housing options and tools for logfile management.

CGraph

CGraph is a cross-platform **D**irected **A**cyclic **G**raph framework written in pure C++ without any third-party dependencies. With it, you can **build your own operators simply and describe any running schedule** you need, such as dependence, parallelism, aggregation, and so on. Some useful tools and plugins are also provided to improve your project. Tutorials and contact information are available in the repository. Please **feel free to get in touch with us** if you need to know more about this repository.

Jarvis

Jarvis is a powerful virtual AI assistant designed to simplify daily tasks through voice command integration. It features automation, device management, and personalized interactions, transforming technology engagement. Built using Python and AI models, it serves personal and administrative needs efficiently, making processes seamless and productive.

fastapi-admin

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management, with a focus on functionality, performance, and user experience. It includes model management with intelligent and regex matching, backup model functionality, key management, proxy management, company management, user management, and chat management for both admin and user ends. The project supports cluster deployment, multi-site deployment, and cross-region deployment. It also provides a public API site where users can register and contact the author for a 10 million quota. The tool offers a comprehensive dashboard along with model, application, key, and chat management functionality for users.

CTFCrackTools

CTFCrackTools X is the next generation of CTFCrackTools, featuring extreme performance and experience, extensible node-based architecture, and future-oriented technology stack. It offers a visual node-based workflow for encoding and decoding processes, with 43+ built-in algorithms covering common CTF needs like encoding, classical ciphers, modern encryption, hashing, and text processing. The tool is lightweight (< 15MB), high-performance, and cross-platform, supporting Windows, macOS, and Linux without the need for a runtime environment. It aims to provide a beginner-friendly tool for CTF enthusiasts to easily work on challenges and improve their skills.

simpletransformers

Simple Transformers is a library based on the Transformers library by HuggingFace, allowing users to quickly train and evaluate Transformer models with only 3 lines of code. It supports various tasks such as Information Retrieval, Language Models, Encoder Model Training, Sequence Classification, Token Classification, Question Answering, Language Generation, T5 Model, Seq2Seq Tasks, Multi-Modal Classification, and Conversational AI.

LotteryMaster

LotteryMaster is a tool designed to fetch lottery data, save it to Excel files, and provide analysis reports including number prediction, number recommendation, and number trends. It supports multiple platforms for access such as Web and mobile App. The tool integrates AI models like Qwen API and DeepSeek for generating analysis reports and trend analysis charts. Users can configure API parameters for controlling randomness, diversity, presence penalty, and maximum tokens. The tool also includes a frontend project based on uniapp + Vue3 + TypeScript for multi-platform applications. It provides a backend service running on Fastify with Node.js, Cheerio.js for web scraping, Pino for logging, xlsx for Excel file handling, and Jest for testing. The project is still in development and some features may not be fully implemented. The analysis reports are for reference only and do not constitute investment advice. Users are advised to use the tool responsibly and avoid addiction to gambling.

BiBi-Keyboard

BiBi-Keyboard is an AI-based intelligent voice input method that aims to make voice input more natural and efficient. It provides features such as voice recognition with simple and intuitive operations, multiple ASR engine support, AI text post-processing, floating ball input for cross-input method usage, AI editing panel with rich editing tools, Material3 design for modern interface style, and support for multiple languages. Users can adjust keyboard height, test input directly in the settings page, view recognition word count statistics, receive vibration feedback, and check for updates automatically. The tool requires Android 10.0 or higher, microphone permission for voice recognition, optional overlay permission for the floating ball feature, and optional accessibility permission for automatic text insertion.

Awesome-LLM-RAG-Application

Awesome-LLM-RAG-Application is a repository that provides resources and information about applications based on Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) pattern. It includes a survey paper, GitHub repo, and guides on advanced RAG techniques. The repository covers various aspects of RAG, including academic papers, evaluation benchmarks, downstream tasks, tools, and technologies. It also explores different frameworks, preprocessing tools, routing mechanisms, evaluation frameworks, embeddings, security guardrails, prompting tools, SQL enhancements, LLM deployment, observability tools, and more. The repository aims to offer comprehensive knowledge on RAG for readers interested in exploring and implementing LLM-based systems and products.

For similar tasks

agenta

Agenta is an open-source LLM developer platform for prompt engineering, evaluation, human feedback, and deployment of complex LLM applications. It provides tools for prompt engineering and management, evaluation, human annotation, and deployment, all without imposing any restrictions on your choice of framework, library, or model. Agenta allows developers and product teams to collaborate in building production-grade LLM-powered applications in less time.

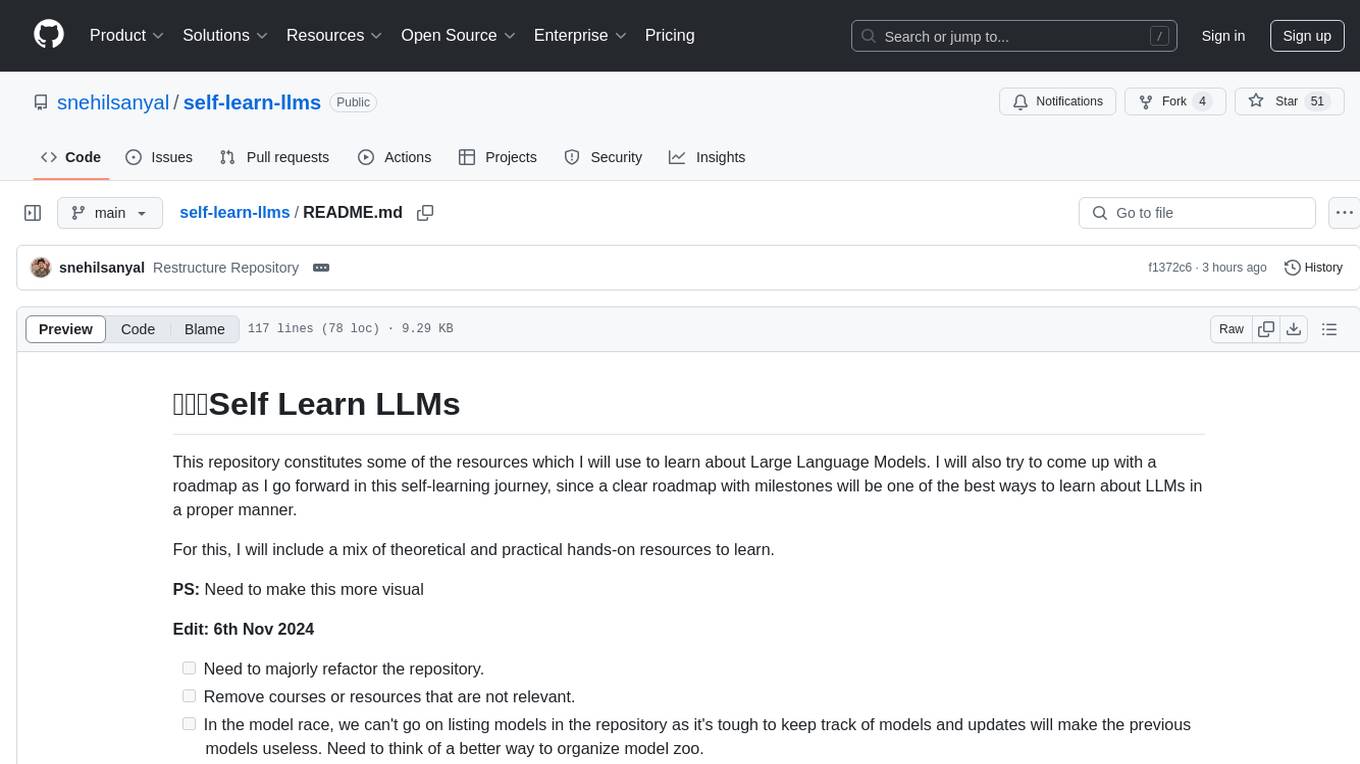

self-learn-llms

Self Learn LLMs is a repository containing resources for self-learning about Large Language Models. It includes theoretical and practical hands-on resources to facilitate learning. The repository aims to provide a clear roadmap with milestones for proper understanding of LLMs. The owner plans to refactor the repository to remove irrelevant content, organize model zoo better, and enhance the learning experience by adding contributors and hosting notes, tutorials, and open discussions.

litgpt

LitGPT is a command-line tool designed to easily finetune, pretrain, evaluate, and deploy 20+ LLMs **on your own data**. It features highly optimized training recipes for the world's most powerful open-source large language models (LLMs).

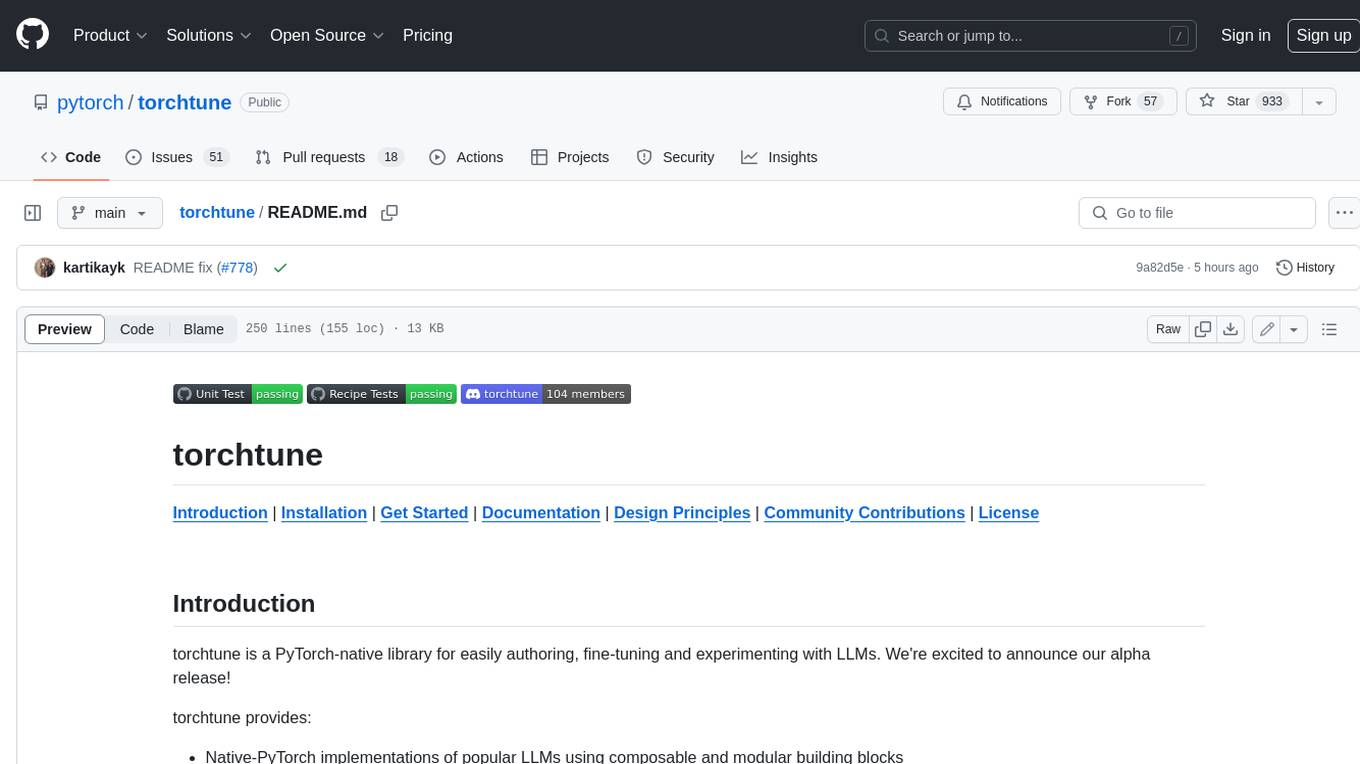

torchtune

Torchtune is a PyTorch-native library for easily authoring, fine-tuning, and experimenting with LLMs. It provides native-PyTorch implementations of popular LLMs using composable and modular building blocks, easy-to-use and hackable training recipes for popular fine-tuning techniques, YAML configs for easily configuring training, evaluation, quantization, or inference recipes, and built-in support for many popular dataset formats and prompt templates to help you quickly get started with training.

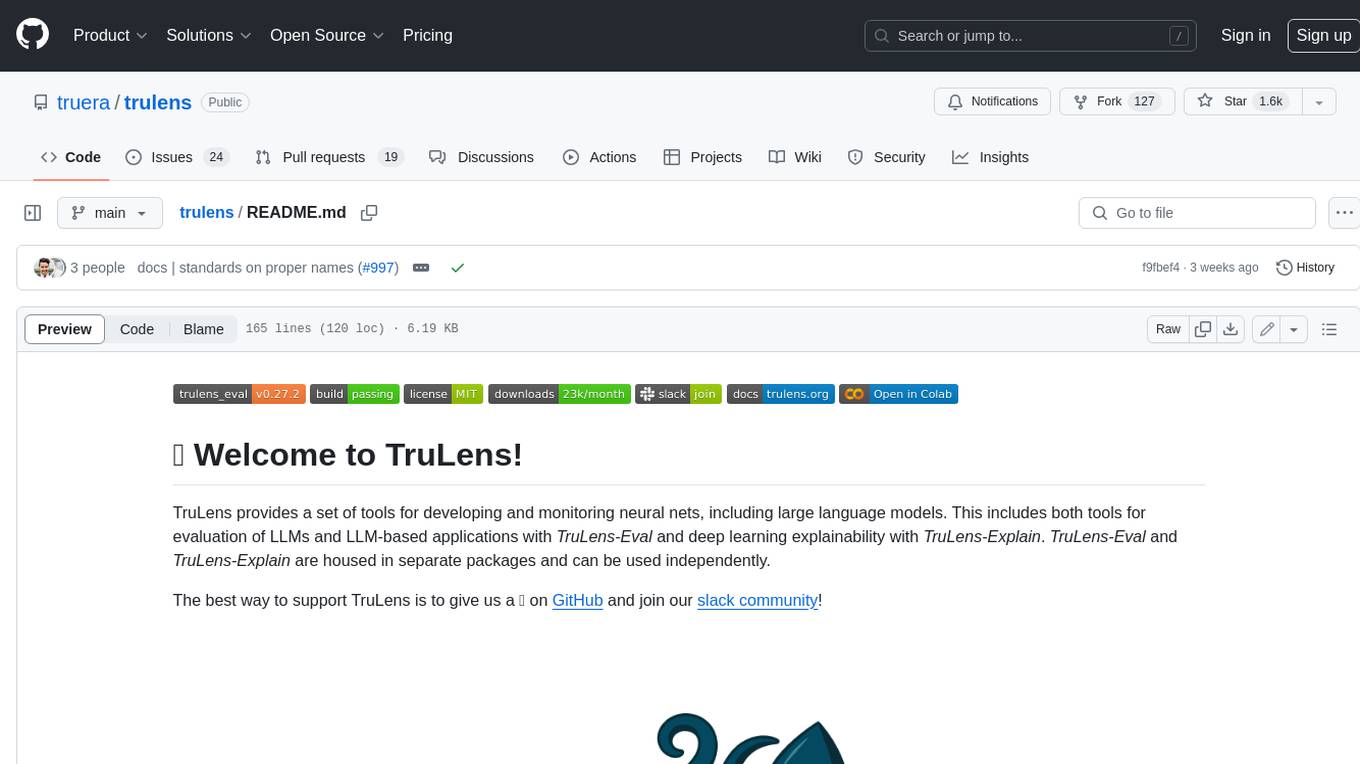

trulens

TruLens provides a set of tools for developing and monitoring neural nets, including large language models. This includes both tools for evaluation of LLMs and LLM-based applications with _TruLens-Eval_ and deep learning explainability with _TruLens-Explain_. _TruLens-Eval_ and _TruLens-Explain_ are housed in separate packages and can be used independently.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

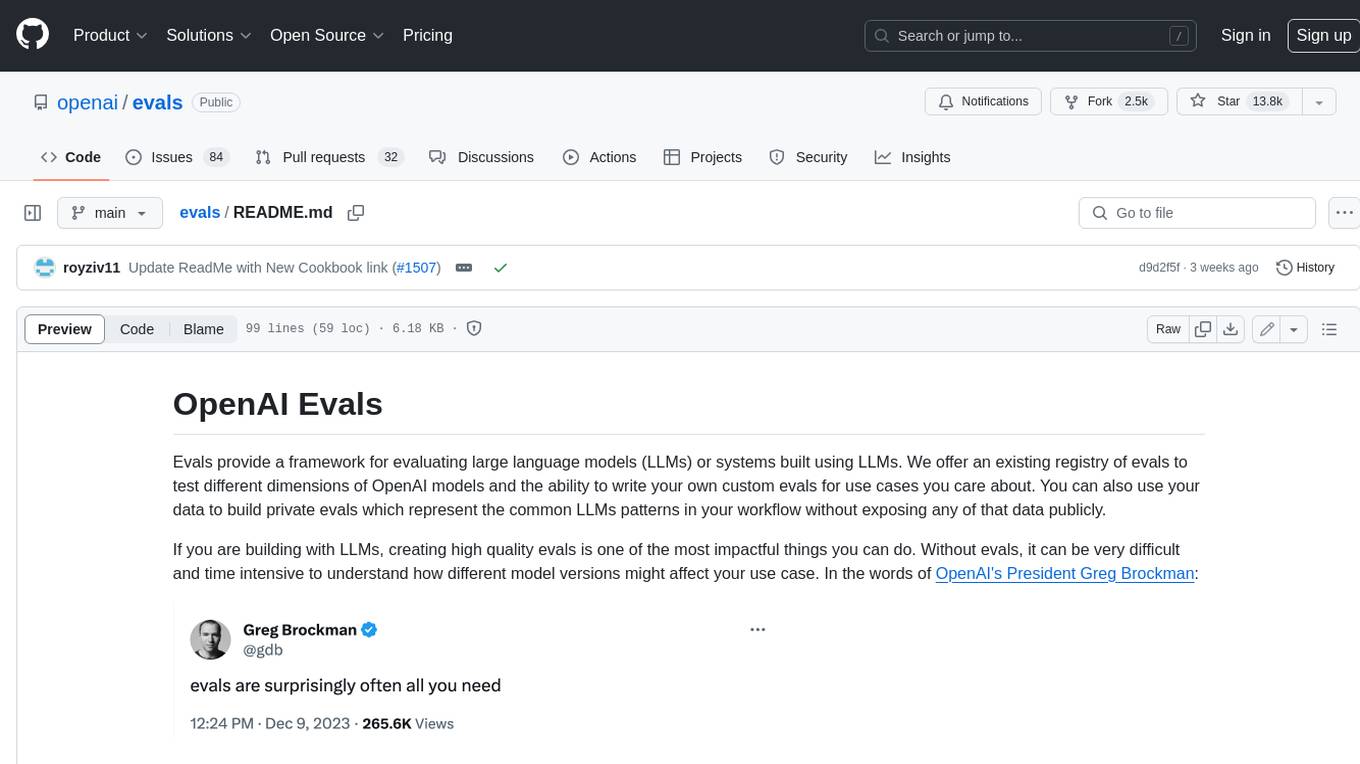

evals

Evals provide a framework for evaluating large language models (LLMs) or systems built using LLMs. We offer an existing registry of evals to test different dimensions of OpenAI models and the ability to write your own custom evals for use cases you care about. You can also use your data to build private evals which represent the common LLM patterns in your workflow without exposing any of that data publicly.

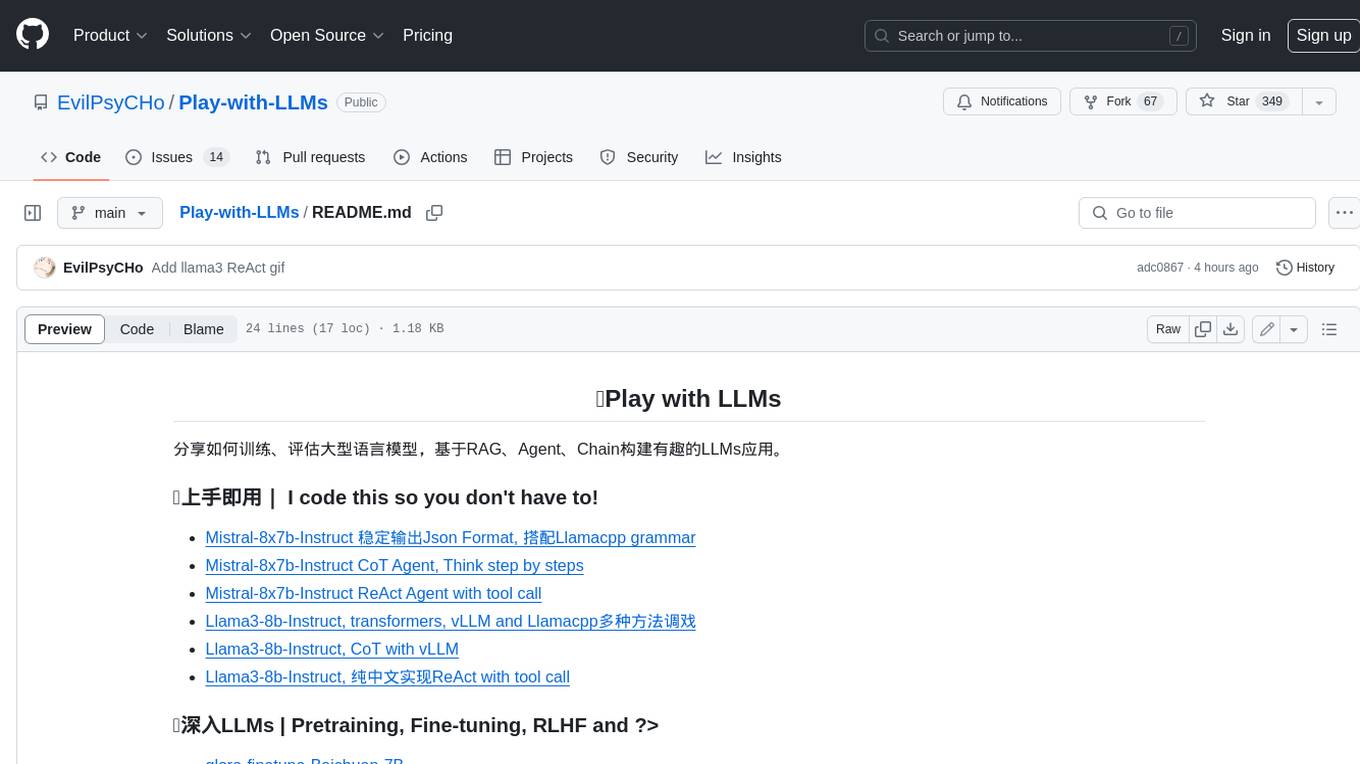

Play-with-LLMs

This repository provides a comprehensive guide to training, evaluating, and building applications with Large Language Models (LLMs). It covers various aspects of LLMs, including pretraining, fine-tuning, reinforcement learning from human feedback (RLHF), and more. The repository also includes practical examples and code snippets to help users get started with LLMs quickly and easily.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.