Best AI tools for LLM Developer

20 - AI Tool Sites

Upstage

Upstage is an AI application designed for global talent acquisition in the field of artificial intelligence startups. The platform offers a range of job opportunities in AI research, engineering, business, education, and software engineering. Upstage aims to connect talented individuals with leading AI companies, providing a seamless recruitment process for both employers and job seekers.

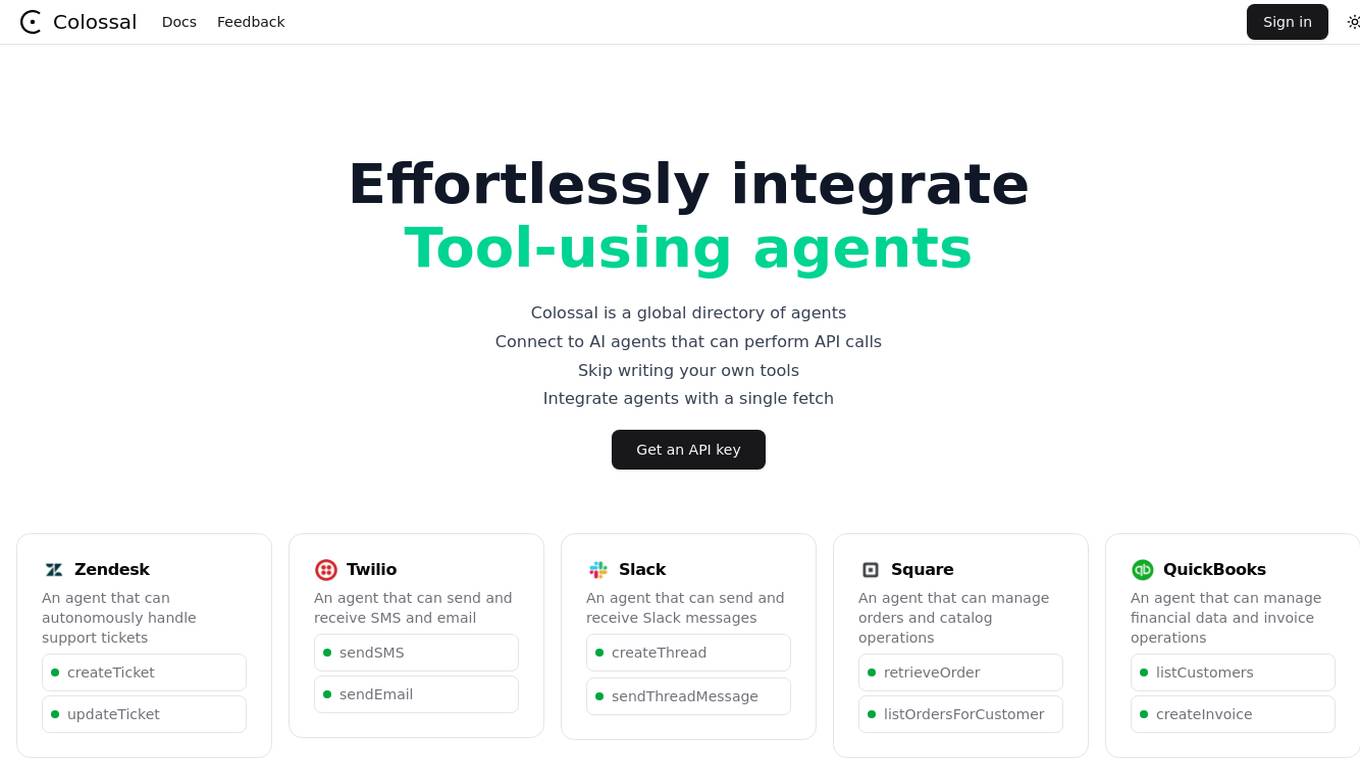

Colossal

Colossal is a global directory of AI agents that allows users to effortlessly integrate tool-using agents for various tasks. Users can connect to AI agents that can perform API calls, skip writing their own tools, and integrate agents with a single fetch. The platform offers agents for support tickets, SMS and email handling, Slack messages, order and catalog operations, financial data management, and more.

Awan LLM

Awan LLM is an LLM inference API platform for power users and developers that charges a flat monthly fee instead of billing per token. Users can generate unlimited tokens and use the hosted models without restrictions. Listed use cases include AI assistants, AI agents, roleplay with AI companions, data processing, code completion, and building profitable AI-powered applications.

LLM Clash

LLM Clash is a web-based application that allows users to compare the outputs of different large language models (LLMs) on a given task. Users can input a prompt and select which LLMs they want to compare. The application will then display the outputs of the LLMs side-by-side, allowing users to compare their strengths and weaknesses.
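The side-by-side comparison described above can be sketched in a few lines. This is a minimal illustration, not LLM Clash's implementation: the two "models" are hypothetical stand-in functions, and `compare_models` and `side_by_side` are names invented for this example. Real use would wrap API clients behind the same prompt-to-text interface.

```python
from typing import Callable, Dict

# A "model" here is any function that maps a prompt to a completion.
Model = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, Model]) -> Dict[str, str]:
    """Send the same prompt to every selected model and collect the outputs."""
    return {name: generate(prompt) for name, generate in models.items()}


def side_by_side(results: Dict[str, str]) -> str:
    """Render the outputs as aligned 'model | output' rows."""
    width = max(len(name) for name in results)
    return "\n".join(
        f"{name.ljust(width)} | {output}" for name, output in results.items()
    )


# Hypothetical stand-ins; real use would call actual LLM APIs here.
models = {
    "upper-model": lambda p: p.upper(),
    "reverse-model": lambda p: p[::-1],
}

results = compare_models("hello", models)
print(side_by_side(results))
```

Keeping every model behind one callable type means adding a model to the comparison is a one-line change to the `models` dictionary.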

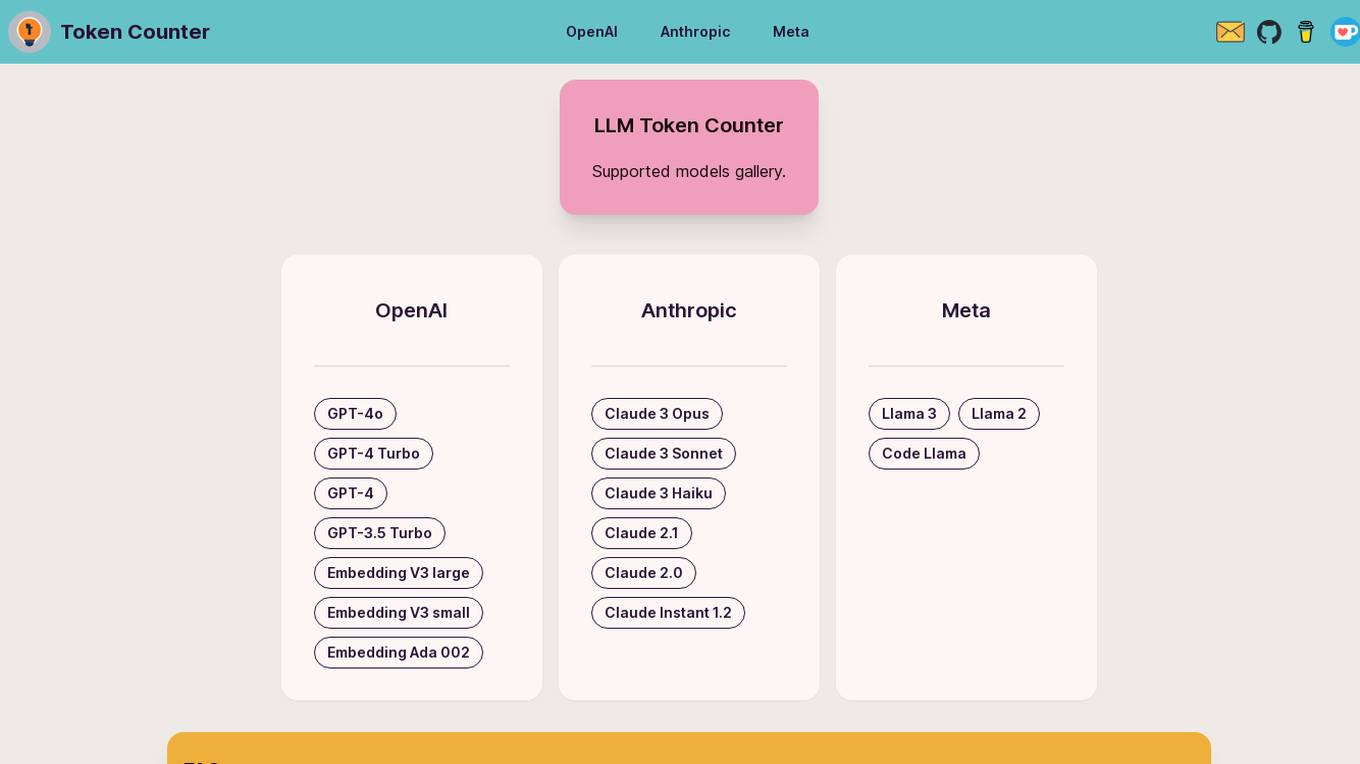

LLM Token Counter

The LLM Token Counter helps users stay within the token limits of large language models (LLMs) such as GPT-3.5, GPT-4, Claude-3, Llama-3, and more. It uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to calculate token counts client-side. Because counting happens in the browser, prompts are never transmitted to external servers.
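To show what managing a token limit involves, here is a rough sketch of a budget check. It assumes a pluggable tokenizer function; the whitespace tokenizer below is a hypothetical stand-in that undercounts compared with the subword tokenizers real models use, and `remaining_tokens` is a name invented for this example.

```python
from typing import Callable, List

Tokenizer = Callable[[str], List[str]]


def whitespace_tokenizer(text: str) -> List[str]:
    """Hypothetical stand-in: real tokenizers split text into subword units."""
    return text.split()


def remaining_tokens(
    prompt: str,
    context_limit: int,
    tokenize: Tokenizer,
    reserved_for_output: int = 0,
) -> int:
    """Tokens left after the prompt and the reserved output budget.

    A negative result means the prompt does not fit.
    """
    return context_limit - reserved_for_output - len(tokenize(prompt))


prompt = "Summarize the following meeting notes in three bullet points"
left = remaining_tokens(
    prompt,
    context_limit=16,  # illustrative limit, far smaller than real models
    tokenize=whitespace_tokenizer,
    reserved_for_output=4,
)
print(left)  # 9 prompt tokens: 16 - 4 - 9 = 3
```

Swapping in a model-specific tokenizer only requires passing a different `tokenize` function.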

Private LLM

Private LLM is a secure, local, and private AI chatbot for iOS and macOS devices. It operates offline, so user data remains on the device. The application offers features for text generation and language assistance, using state-of-the-art quantization techniques to deliver high-quality on-device AI without compromising privacy. Users can access a variety of open-source LLMs, integrate AI into Siri and Shortcuts, and use AI language services across macOS apps. Private LLM emphasizes model performance and user privacy for creative and productive tasks.

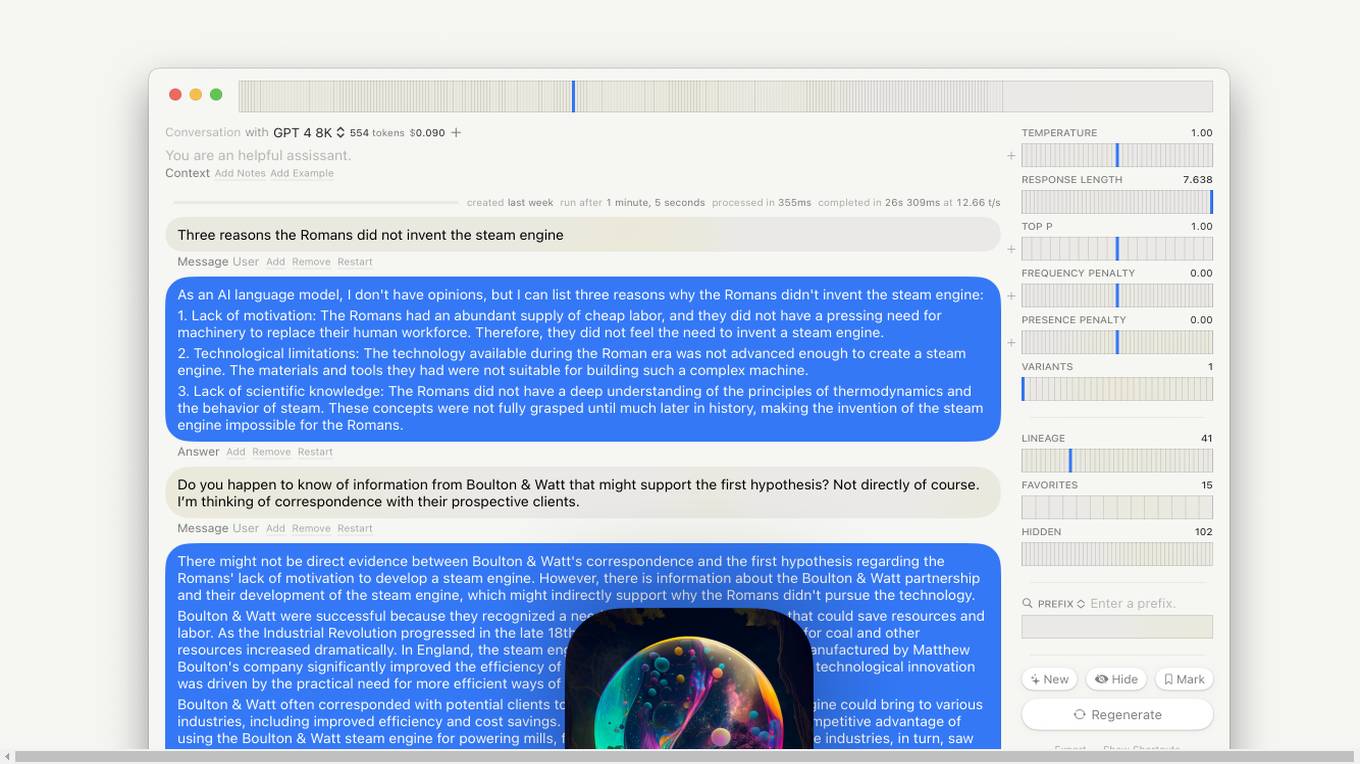

Lore macOS GPT-LLM Playground

Lore macOS GPT-LLM Playground is an AI tool designed for macOS users. Its listed features include Multi-Model, Time Travel, Versioning, Combinatorial Runs, Variants, Full-Text Search, Model-Cost Aware, API & Token Stats, Custom Endpoints, Local Models, and Tables. It provides a user-friendly interface with features like Syntax, LaTeX Notes Export, Shortcuts, Vim Mode, and Sandbox. The tool is built with Cocoa, SwiftUI, and SQLite, ensuring privacy and offering support & feedback.

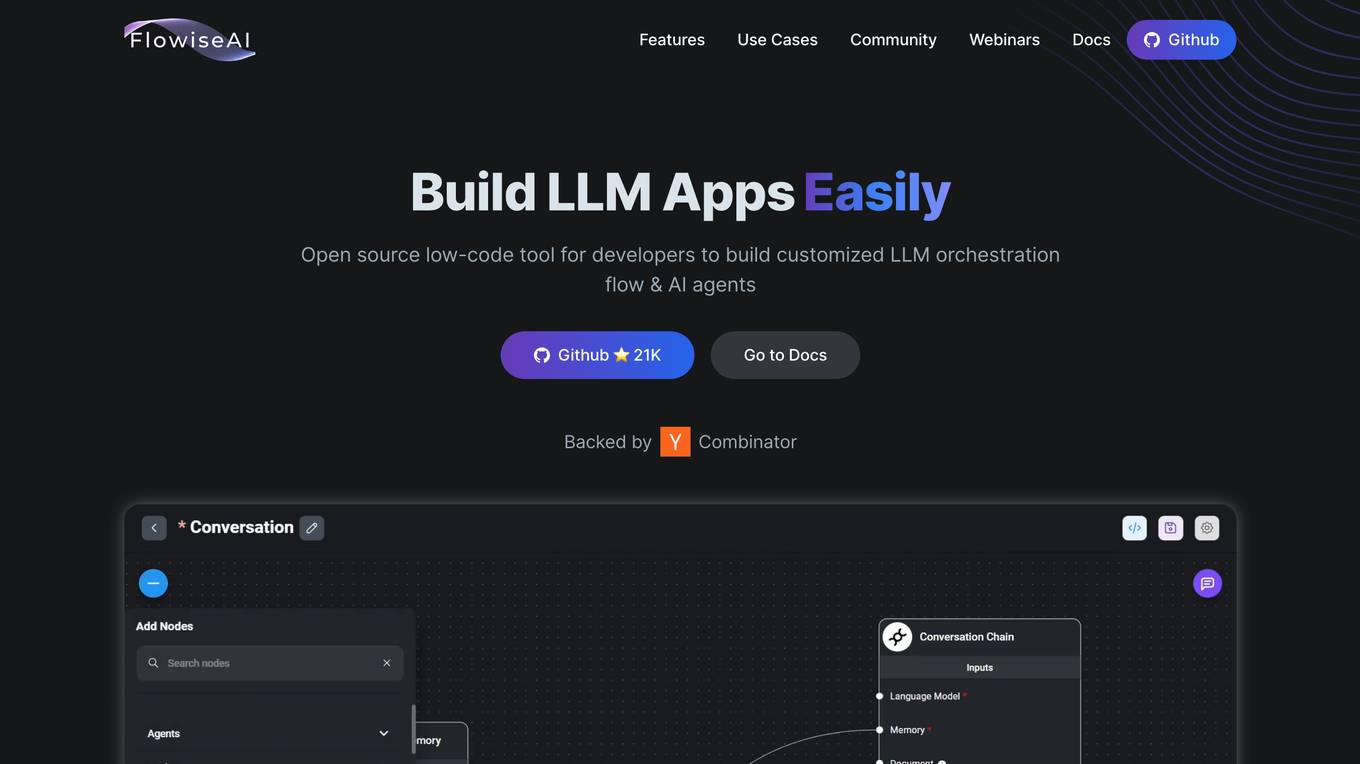

Flowise

Flowise is an open-source, low-code tool that enables developers to build customized LLM orchestration flows and AI agents. It provides a drag-and-drop interface, pre-built app templates, conversational agents with memory, and deployment on cloud platforms. Flowise is backed by Y Combinator and trusted by teams around the globe.

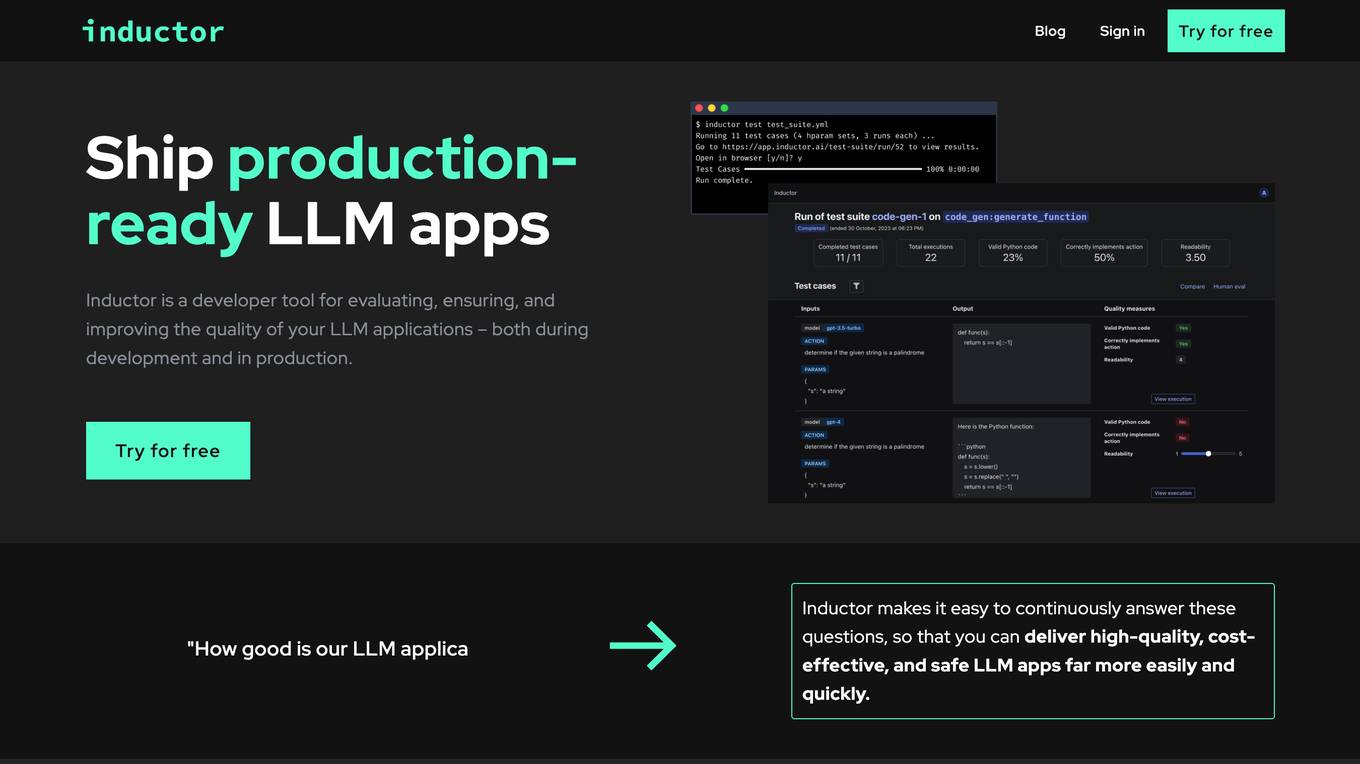

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of LLM applications, both during development and in production. It provides a workflow for continuous testing and evaluation during development, so that the quality of the LLM app is always known. Teams can systematically improve quality and cost-effectiveness by understanding the app's behavior and quickly testing different app variants, and can rigorously assess behavior before deployment. Once the app is live, Inductor monitors traffic to detect and resolve issues, analyzes usage, and feeds the results back into the development process. It also supports collaboration between engineering and other roles by collecting human feedback from non-engineering stakeholders (e.g., PM, UX, or subject matter experts) to ensure that the LLM app is user-ready.
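The idea of testing app variants against a fixed test suite can be illustrated as follows. This is a generic sketch and does not use Inductor's API: `pass_rate`, the test cases, and both app variants are hypothetical and exist only for this example.

```python
from typing import Callable, Dict, List, Tuple

App = Callable[[str], str]
# Each test case pairs an input with a quality check on the app's output.
TestCase = Tuple[str, Callable[[str], bool]]


def pass_rate(app: App, cases: List[TestCase]) -> float:
    """Fraction of test cases whose output passes its quality check."""
    passed = sum(1 for prompt, check in cases if check(app(prompt)))
    return passed / len(cases)


cases: List[TestCase] = [
    ("2+2", lambda out: "4" in out),
    ("capital of France", lambda out: "Paris" in out),
]


# Hypothetical app variants; real ones would call an LLM.
def variant_a(prompt: str) -> str:
    return {"2+2": "4", "capital of France": "Lyon"}.get(prompt, "")


def variant_b(prompt: str) -> str:
    return {"2+2": "The answer is 4", "capital of France": "Paris"}.get(prompt, "")


variants: Dict[str, App] = {"a": variant_a, "b": variant_b}
scores = {name: pass_rate(app, cases) for name, app in variants.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

Running the same suite on every variant before deployment gives a like-for-like quality comparison.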

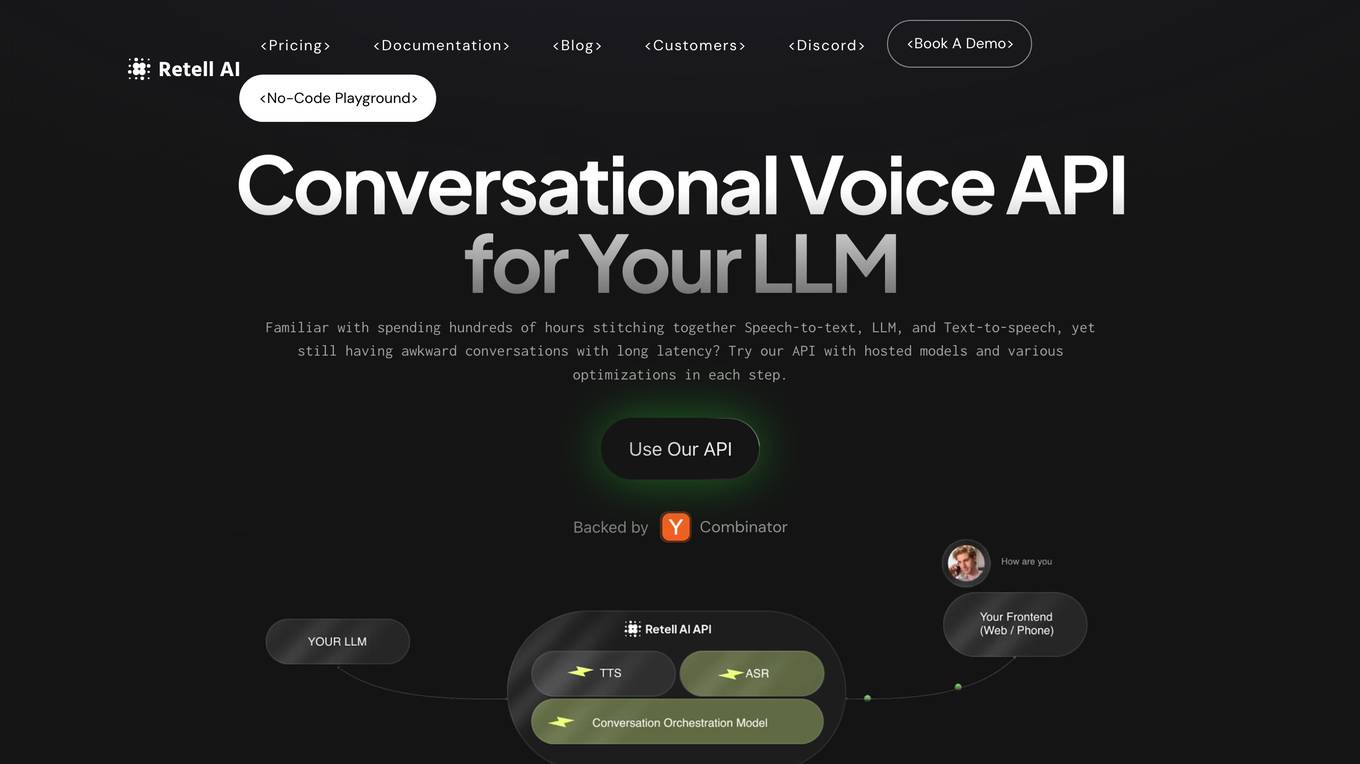

Retell AI

Retell AI provides a Conversational Voice API that enables developers to integrate human-like voice interactions into their applications. With Retell AI's API, developers can easily connect their own Large Language Models (LLMs) to create AI-powered voice agents that can engage in natural and engaging conversations. Retell AI's API offers a range of features, including ultra-low latency, realistic voices with emotions, interruption handling, and end-of-turn detection, ensuring seamless and lifelike conversations. Developers can also customize various aspects of the conversation experience, such as voice stability, backchanneling, and custom voice cloning, to tailor the AI agent to their specific needs. Retell AI's API is designed to be easy to integrate with existing LLMs and frontend applications, making it accessible to developers of all levels.

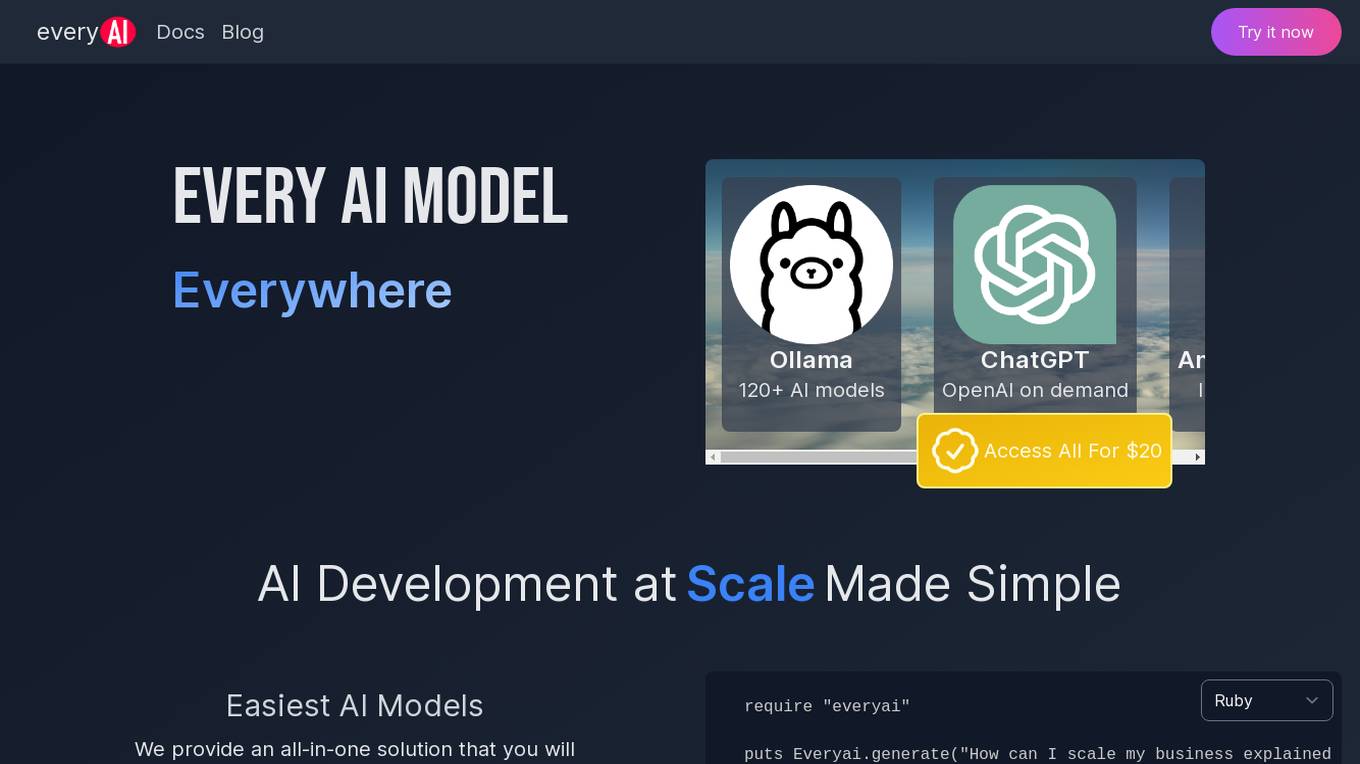

Every AI

Every AI offers access to over 120 AI models, including models from OpenAI (ChatGPT) and Anthropic (Claude), for a wide range of applications. It provides fast responses and access to all models for a subscription fee of $20. The platform aims to simplify AI development at scale by offering developer-friendly solutions with extensive documentation and SDKs for popular programming languages like Ruby and JavaScript.

BackX

BackX is an AI development platform that empowers developers to quickly ship backends across various use cases, environments, and scales. It offers unparalleled accuracy, flexibility, and efficiency by overcoming the limitations of traditional AI-assisted programming. With features like one-click production-grade code, context-aware consistent code output, versioned artifacts, instant deploy, and a suite of AI-powered dev tools, BackX revolutionizes the backend development process. Developers can effortlessly design and manage databases, generate CRUD operations, implement complex business logic, and deploy serverless applications with ease. The platform aims to streamline development processes, increase cost-effectiveness, and provide more accurate outputs than traditional methods.

Astra

Astra is a universal API for LLM function calling that supercharges LLMs with integrations using a single line of code. It allows users to conveniently leverage function calling in LLMs with over 2,200 integrations, manage authentication profiles, import tools easily, and enable function calling with any LLM. Astra replaces JSON with a type-safe UI, making integration management simpler. The application extends the capabilities of LLMs without altering their core structure, offering a seamless layer of integrations and function execution.
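Function calling generally works by having the model emit a structured call that the application then executes. The sketch below shows that dispatch step in generic form. It is not Astra's API: the `tool` decorator, `execute_call`, the `get_order_status` integration, and the JSON call shape are all assumptions made for illustration.

```python
import json
from typing import Any, Callable, Dict

# Registry mapping tool names to the Python functions that implement them.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so a model can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> str:
    # Hypothetical integration; a real one would query an order system.
    return f"Order {order_id} is shipped"


def execute_call(raw_call: str) -> Any:
    """Run a model-emitted call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for what a model might emit when asked about an order.
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A17"}}'
print(execute_call(model_output))
```

Rejecting unknown tool names before dispatch keeps the model from invoking anything outside the registry.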

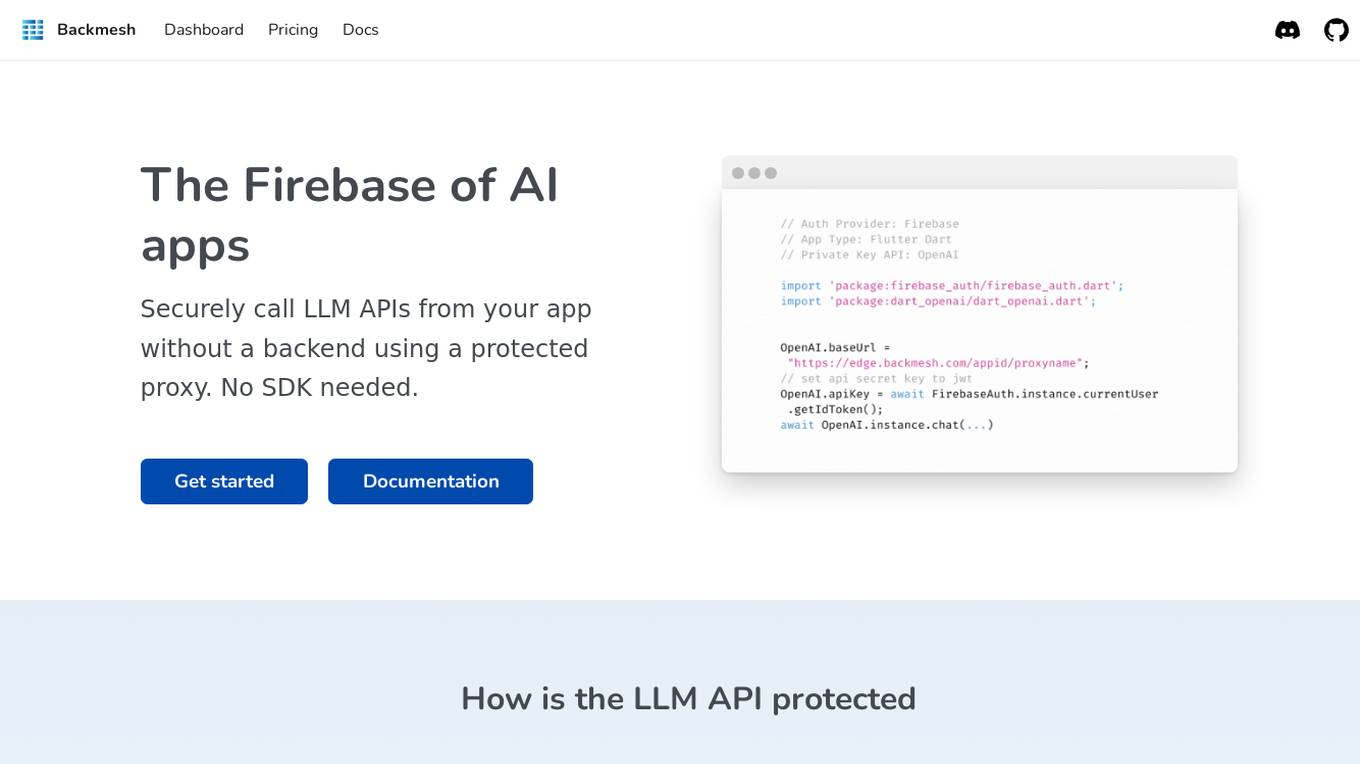

Backmesh

Backmesh is an AI tool that serves as a proxy on edge CDN servers, enabling secure and direct access to LLM APIs without the need for a backend or SDK. It allows users to call LLM APIs from their apps, ensuring protection through JWT verification and rate limits. Backmesh also offers user analytics for LLM API calls, helping identify usage patterns and enhance user satisfaction within AI applications.
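The two protections mentioned above, JWT verification and rate limits, can be sketched with the standard library alone. This is an illustrative simplification and not Backmesh's implementation: it assumes HS256 tokens with a shared secret (production setups often verify asymmetric signatures from an auth provider instead), and every name below is hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def _b64url_decode(part: str) -> bytes:
    # JWTs strip base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create an HS256 JWT (used here only to produce a demo token)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the signature and expiry check out, else None."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        if not isinstance(header, dict) or header.get("alg") != "HS256":
            return None
        signed = f"{header_b64}.{payload_b64}".encode()
        expected = hmac.new(secret, signed, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return None
        claims = json.loads(_b64url_decode(payload_b64))
    except ValueError:  # malformed token, bad base64, or invalid JSON
        return None
    if not isinstance(claims, dict):
        return None
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        return None
    return claims


class SlidingWindowLimiter:
    """Allow at most max_calls per user within any window_seconds span."""

    def __init__(self, max_calls: int, window_seconds: float) -> None:
        self.max_calls = max_calls
        self.window = window_seconds
        self.calls: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, user_id: str, now: Optional[float] = None) -> bool:
        current = time.time() if now is None else now
        recent = self.calls[user_id]
        while recent and current - recent[0] >= self.window:
            recent.popleft()  # drop calls that fell out of the window
        if len(recent) >= self.max_calls:
            return False
        recent.append(current)
        return True


def handle_request(token: str, secret: bytes, limiter: SlidingWindowLimiter,
                   now: Optional[float] = None) -> int:
    """Return the HTTP status a proxy would answer with before forwarding."""
    claims = verify_hs256(token, secret, now)
    if claims is None or "sub" not in claims:
        return 401
    if not limiter.allow(claims["sub"], now):
        return 429
    return 200


secret = b"demo-secret"
token = sign_hs256({"sub": "user-1", "exp": 2000}, secret)
limiter = SlidingWindowLimiter(max_calls=2, window_seconds=60)
statuses = [handle_request(token, secret, limiter, now=1000) for _ in range(3)]
print(statuses)
```

Checking the token before the rate limit means unauthenticated traffic never consumes a user's quota.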

RecurseChat

RecurseChat is a personal AI chat app that is local, offline, and private. It allows users to chat with a local LLM, import ChatGPT history, chat with multiple models in one chat session, and use multimodal input. It is also highly customizable.

Picovoice

Picovoice is an on-device Voice AI and local LLM platform designed for enterprises. It offers a range of voice AI and LLM solutions, including speech-to-text, noise suppression, speaker recognition, speech-to-index, wake word detection, and more. Picovoice empowers developers to build virtual assistants and AI-powered products with compliance, reliability, and scalability in mind. The platform allows enterprises to process data locally without relying on third-party remote servers, ensuring data privacy and security. With a focus on cutting-edge AI technology, Picovoice enables users to stay ahead of the curve and adapt quickly to changing customer needs.

Empower

Empower is a serverless hosting and developer platform for fine-tuned LLMs. It provides prebuilt task-specific base models with GPT-4-level response quality, enabling users to save up to 80% on LLM bills with a five-line code change. Empower allows users to own their models, offers cost-effective serving with no compromise on performance, and charges on a per-token basis.

Langfuse

Langfuse is an AI tool that offers the Langfuse TypeScript SDK v4 for building and debugging large language model (LLM) applications. It provides features such as tracing, prompt management, evaluation, and metrics to improve the performance of LLM applications. Langfuse is backed by a team of experts and offers integrations with various platforms and SDKs. The tool aims to simplify the development of complex LLM applications.

Files2Prompt

Files2Prompt is a free online tool that allows you to convert files to text prompts for large language models (LLMs) like ChatGPT, Claude, and Gemini. With Files2Prompt, you can easily generate prompts from various file formats, including Markdown, JSON, and XML. The converted prompts can be used to ask questions, generate text, translate languages, write different kinds of creative content, and more.
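Converting files into a prompt usually amounts to concatenating their contents under clear per-file headers. The following is a minimal sketch of that idea, not Files2Prompt's actual output format: the `--- name ---` header style and the `files_to_prompt` function are assumptions for this example.

```python
import tempfile
from pathlib import Path
from typing import Iterable


def files_to_prompt(paths: Iterable[Path], question: str) -> str:
    """Concatenate file contents under per-file headers, then append the question."""
    sections = []
    for path in paths:
        body = path.read_text(encoding="utf-8").strip()
        sections.append(f"--- {path.name} ---\n{body}")
    return "\n\n".join(sections) + f"\n\nQuestion: {question}"


# Demo with two temporary files (Markdown and JSON).
with tempfile.TemporaryDirectory() as tmp:
    notes = Path(tmp) / "notes.md"
    notes.write_text("# Notes\nShip on Friday.\n", encoding="utf-8")
    config = Path(tmp) / "config.json"
    config.write_text('{"debug": true}\n', encoding="utf-8")
    prompt = files_to_prompt([notes, config], "When do we ship?")

print(prompt)
```

Labeling each section with its file name lets the model attribute content to the right file when answering.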

LlamaIndex

LlamaIndex is a leading data framework designed for building LLM (Large Language Model) applications. It allows enterprises to turn their data into production-ready applications by providing functionalities such as loading data from various sources, indexing data, orchestrating workflows, and evaluating application performance. The platform offers extensive documentation, community-contributed resources, and integration options to support developers in creating innovative LLM applications.

3 - Open Source Tools

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs), such as OpenAI's ChatGPT and others, in TypeScript and JavaScript.

agenta

Agenta is an open-source LLM developer platform for prompt engineering, evaluation, human feedback, and deployment of complex LLM applications. It provides tools for prompt engineering and management, evaluation, human annotation, and deployment, all without imposing any restrictions on your choice of framework, library, or model. Agenta allows developers and product teams to collaborate in building production-grade LLM-powered applications in less time.

Awesome-Chinese-LLM

Awesome Chinese LLM is a curated collection of resources for Chinese-language LLMs. Since the emergence of large language models (LLMs) exemplified by ChatGPT, their striking AGI-like capabilities have set off a new wave of research and applications in natural language processing. In particular, after smaller LLMs that ordinary users can run, such as ChatGLM and LLaMA, were open-sourced, the industry has produced a great many fine-tuned models and applications built on them. The project collects and organizes open-source models, applications, datasets, and tutorials related to Chinese LLMs, and currently lists more than 100 resources. Contributions of open-source models, applications, and datasets not yet listed are welcome: open a pull request that follows the project's format and includes the repository link, star count, and a short description.

20 - OpenAI GPTs

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM-agents for your system prompts. You can also specify the model size, like 3B, 33B, 70B, etc.

NEO - Ultimate AI

I imitate GPT-5 LLM, with advanced reasoning, personalization, and higher emotional intelligence

DataLearnerAI-GPT

Uses OpenLLMLeaderboard data to answer your questions about LLMs.

HackMeIfYouCan

Hack Me if you can - I can only talk to you about computer security, software security and LLM security @JacquesGariepy

PyRefactor

Refactors Python code. Python expert with proficiency in data science, machine learning (including LLM apps), and both OOP and functional programming.

CISO GPT

Specialized LLM in computer security, acting as a CISO with 20 years of experience, providing precise, data-driven technical responses to enhance organizational security.

Prompt Peerless - Complete Prompt Optimization

Premier AI Prompt Engineer for Advanced LLM Optimization, Enhancing AI-to-AI Interaction and Comprehension. Create -> Optimize -> Revise iteratively

EmotionPrompt(LLM→人間ver.)

Built on the EmotionPrompt technique, but in reverse of the original theory: here the LLM sends prompts to the human. Details of the original technique: https://ai-data-base.com/archives/58158

SSLLMs Advisor

Helps you build logic security into your GPT's custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs