self-learn-llms

🏫🙋🏻 [WIP] Self learn LLMs. This repository is a collection of resources, notebooks, blogs, tutorials for beginners.

Stars: 51

Self Learn LLMs is a repository containing resources for self-learning about Large Language Models. It includes theoretical and practical hands-on resources to facilitate learning. The repository aims to provide a clear roadmap with milestones for proper understanding of LLMs. The owner plans to refactor the repository to remove irrelevant content, organize model zoo better, and enhance the learning experience by adding contributors and hosting notes, tutorials, and open discussions.

README:

This repository collects some of the resources I will use to learn about Large Language Models. I will also try to build a roadmap as I go forward in this self-learning journey, since a clear roadmap with milestones is one of the best ways to learn about LLMs properly.

For this, I will include a mix of theoretical and practical hands-on resources to learn from.

PS: Need to make this more visual

Edit: 6th Nov 2024

- [ ] Need to majorly refactor the repository.

- [ ] Remove courses or resources that are not relevant.

- [ ] In the model race, we can't keep listing models in the repository: it's tough to keep track of them, and new releases quickly make the earlier entries obsolete. Need to think of a better way to organize the model zoo.

- [ ] Maybe add 1-2 contributors or open the repository to contributions to help out.

- [ ] How can we make it a great learning experience: hosting notes and tutorials, open discussions, a webpage?

- CS224N Natural Language Processing with Deep Learning, Stanford

- Natural Language Processing Specialization, Coursera

- HuggingFace NLP + Transformers Course

- CS25: Transformers United V2, Stanford CS25, Fall 2021 Version

Industrial and Open-Source courses

- Activeloop Learn: its GenAI360 initiative provides 3 free courses covering RAG, fine-tuning LLMs, LangChain and vector databases.

- LLM Course by Maxime Labonne, Course to get into Large Language Models (LLMs) with roadmaps and Colab notebooks.

- Hands-on LLM Course: learn about LLMs, LLMOps, and vector DBs for free by designing, training, and deploying a real-time financial advisor LLM system, with source code, videos, and reading materials.

- Full Stack Deep Learning started out as a deep learning bootcamp, evolved into an LLM bootcamp around April 2023, and is now free to take.

- LLM University by Cohere: this course consists of 8 modules taught by the famous Luis Serrano, who is known for teaching concepts in an easy and visually appealing manner. The course covers topics like fundamentals, deployment, semantic search and RAG.

- Deeplearning.ai Short Courses: short courses by DL.AI on various areas of LLMs and Generative AI. They are really useful because they blend theoretical and practical sessions well. The courses are usually made in collaboration with companies like Hugging Face, Mistral, OpenAI, Microsoft, Meta and Google.

- LLM Zoomcamp by DataTalksClub: a free online course about building a Q&A system.

- Applied LLMs Mastery 2024 Course by Aishwarya N Reganti: a free 10-week course with a definite roadmap covering LLM fundamentals, tools and techniques, deployment and evaluation, and challenges and future trends.

- Weights and Biases Courses, provides different courses on MLOps, LLM Powered Apps etc.

- LLM Models course, Databricks x edX, a professional certificate by Databricks.

- Deeplearning.ai offers various short courses on LLMs like LangChain for LLM App Development, Serverless LLMs with AWS Bedrock, Fine-tuning LLMs, LLMs with Semantic Search etc.

- Introduction to Generative AI Learning Path, Google Cloud.

- Arize University hosts courses such as LLM evaluation, LLM agents, tools and chains, and LLM observability.
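
Several of the courses above (Activeloop, LLM University, LLM Zoomcamp) center on retrieval-augmented generation. The sketch below shows only the retrieve-then-prompt skeleton, assuming simple term-count vectors as a stand-in for learned embeddings and a vector database; the function names are hypothetical and this is not any course's code.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric word tokens only.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    # Place the retrieved passages in the prompt ahead of the question.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}\nAnswer:"

docs = [
    "Vector databases store embeddings for similarity search.",
    "Fine-tuning adapts a pretrained model to a new task.",
    "LangChain chains LLM calls with tools and retrievers.",
]
question = "How do vector databases support similarity search?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

In a real pipeline the term counts would be replaced by embedding vectors and the sorted scan by a vector-index lookup; the prompt string would then be sent to an LLM.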

Books

- Natural Language Processing with Transformers Book, by Lewis Tunstall, Leandro von Werra and Thomas Wolf | Notebooks for the book

- Build a Large Language Model (From Scratch), by Sebastian Raschka | Official Code Repository for the book

- LLM Engineer's Handbook: Master the art of engineering large language models from concept to production, by Paul Iusztin and Maxime Labonne | Code for the book

- Hands-On Large Language Models: Language Understanding and Generation, by Jay Alammar and Maarten Grootendorst | Code repository for the book

- AI Engineering: Building Applications with Foundation Models, by Chip Huyen | Resources for the book
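
Books like Build a Large Language Model (From Scratch) are built around causal self-attention, softmax(QKᵀ/√d)V with future positions masked out. Below is a minimal single-head sketch in plain Python, assuming tiny hand-picked weight matrices instead of learned ones; it omits batching, multiple heads and output projections, and is not taken from any of the books' code.

```python
import math

def matmul(a, b):
    # Plain-Python matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def softmax(row):
    # Subtract the max for numerical stability; -inf entries become 0.
    mx = max(row)
    exps = [math.exp(v - mx) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def causal_self_attention(x, wq, wk, wv):
    # x: (T x d) token embeddings; wq/wk/wv: (d x d_head) projection weights.
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_head = len(wq[0])
    scores = matmul(q, transpose(k))
    for i in range(len(scores)):
        for j in range(len(scores[i])):
            # Scale by sqrt(d_head); token i may not attend to later tokens j > i.
            scores[i][j] = scores[i][j] / math.sqrt(d_head) if j <= i else float("-inf")
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 tokens, embedding size 2
eye = [[1.0, 0.0], [0.0, 1.0]]            # identity projections, for illustration only
out, w = causal_self_attention(x, eye, eye, eye)
print(w[0])  # the first token can only attend to itself
```

The mask is what makes the model autoregressive: each output row mixes value vectors only from its own and earlier positions.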

- AIMultiple's blog on Large Language Models: Complete Guide in 2023

- Cohere Docs

- FutureSmart AI Blog on Building Chatbots using LangChain and ChatGPT

- Task-driven Autonomous Agent Utilizing GPT-4, Pinecone, and LangChain for Diverse Applications

- A Survey of Large Language Models. Also check out the accompanying repo: https://github.com/RUCAIBox/LLMSurvey

- Understanding Large Language Models -- A Transformative Reading List, Sebastian Raschka

- Wiki CLSP, NLP Reading Group: a frequently updated list of NLP-related reading groups.

- Let's build GPT: from scratch, in code, spelled out: a 2-hour YouTube video by Andrej Karpathy on building GPT from scratch.

- [1hr talk] Intro to Large Language Models: a 1-hour YouTube video by Andrej Karpathy introducing LLMs.

- Let's build the GPT Tokenizer: a 2-hour YouTube video by Andrej Karpathy on building the GPT tokenizer.

- Let's reproduce GPT-2 (124M): a 4-hour YouTube video by Andrej Karpathy on reproducing the 124M-parameter GPT-2 model.
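
The tokenizer video builds byte-level byte-pair encoding (BPE): start from raw UTF-8 bytes and repeatedly merge the most frequent adjacent pair into a new token id. The sketch below follows that idea in plain Python, assuming ties are broken by first occurrence and skipping the regex pre-splitting and special tokens real GPT tokenizers use; it is an illustration, not Karpathy's code.

```python
from collections import Counter

def get_pair_counts(ids):
    # Count adjacent token-id pairs, e.g. [1, 2, 1, 2] -> {(1, 2): 2, (2, 1): 1}.
    return Counter(zip(ids, ids[1:]))

def merge(ids, pair, new_id):
    # Replace non-overlapping occurrences of `pair` with `new_id`, left to right.
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train_bpe(text, num_merges):
    # Start from UTF-8 bytes (ids 0-255); each merge mints a new id from 256 up.
    ids = list(text.encode("utf-8"))
    merges = {}
    for step in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)  # most frequent pair, first seen wins ties
        new_id = 256 + step
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges

def encode(text, merges):
    # Replay the learned merges in the order they were learned.
    ids = list(text.encode("utf-8"))
    for pair, new_id in sorted(merges.items(), key=lambda kv: kv[1]):
        ids = merge(ids, pair, new_id)
    return ids

def decode(ids, merges):
    # Map every id to its bytes, building merged ids from their two parts.
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in sorted(merges.items(), key=lambda kv: kv[1]):
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

ids, merges = train_bpe("aaabdaaabac", 3)
print(ids, merges)
```

Three merges shrink the 11-byte string to 5 tokens, and decoding restores the original text exactly.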

- H2O Organization, Hugging Face

- OpenAssistant Organization, Hugging Face

- DataBricks Organization, Hugging Face

- BigScience Organization, Hugging Face

- EleutherAI Organization, Hugging Face

- NomicAI Organization, Hugging Face

- Cerebras Organization, Hugging Face

- LLMStudio, H2O AI

- LlamaIndex

- NeMo Guardrails, NVIDIA, to add programmable guardrails to LLM applications and help prevent hallucinations

- MLC LLM, develop, optimize and deploy LLMs natively on everyone's devices

- LaMini LLM

- ChatGPT, OpenAI, Released 30th November 2022

- Google Bard, Released 21st March 2023

- Tongyi Qianwen AI, Alibaba, Released 11th April 2023

- StableLM, Stability AI, Released 20th April 2023

- Amazon Titan

- HuggingChat, Hugging Face, Released 25th April 2023

- H2OGPT

- BLOOM, BigScience, commercial use allowed under the RAIL license

- GPT-J, EleutherAI, Apache 2.0

- GPT-NeoX, EleutherAI, Apache 2.0

- GPT4All, NomicAI, MIT License

- GPT4All-J, NomicAI, MIT License

- Pythia, EleutherAI, Apache 2.0

- GLM-130B

- PaLM, Google

- OPT, Meta

- FLAN-T5

- LLaMA, Meta

- Alpaca, Stanford

- Vicuna, lm-sys

People to follow to stay up to date on LLMs: researchers, founders, developers and AI content creators involved in LLM research, development and production.

- Sebastian Raschka, he is a legend and will burst your hyped-up LLM bubble with his amazing tweets, blogs and tutorials. Subscribe to his newsletter, Ahead of AI.

- Andrej Karpathy, this legend worked at Tesla, took a break, started his YouTube channel to teach the fundamentals, blew us all away with his amazing video on implementing GPT from scratch, and finally rejoined OpenAI. I guess you cannot lose a legend :D

- Jay Alammar, yup, if you don't know his illustrated blog on Transformers (The Illustrated Transformer), go read it first, and be sure to follow him for updates.

- Tomaz Bratanic, the author of the well-known book Graph Algorithms for Data Science, who currently writes great Medium blogs on GPT, LangChain and related topics.

- Maxime Labonne

- Paul Iusztin


Alternative AI tools for self-learn-llms

Similar Open Source Tools

mlcourse.ai

mlcourse.ai is an open Machine Learning course by OpenDataScience (ods.ai), led by Yury Kashnitsky (yorko). The course offers a perfect balance between theory and practice, with math formulae in lectures and practical assignments including Kaggle Inclass competitions. It is currently in a self-paced mode, guiding users through 10 weeks of content covering topics from Pandas to Gradient Boosting. The course provides articles, lectures, and assignments to enhance understanding and application of machine learning concepts.

SuperKnowa

SuperKnowa is a fast framework to build Enterprise RAG (Retriever Augmented Generation) Pipelines at Scale, powered by watsonx. It accelerates Enterprise Generative AI applications to get prod-ready solutions quickly on private data. The framework provides pluggable components for tackling various Generative AI use cases using Large Language Models (LLMs), allowing users to assemble building blocks to address challenges in AI-driven text generation. SuperKnowa is battle-tested from 1M to 200M private knowledge base & scaled to billions of retriever tokens.

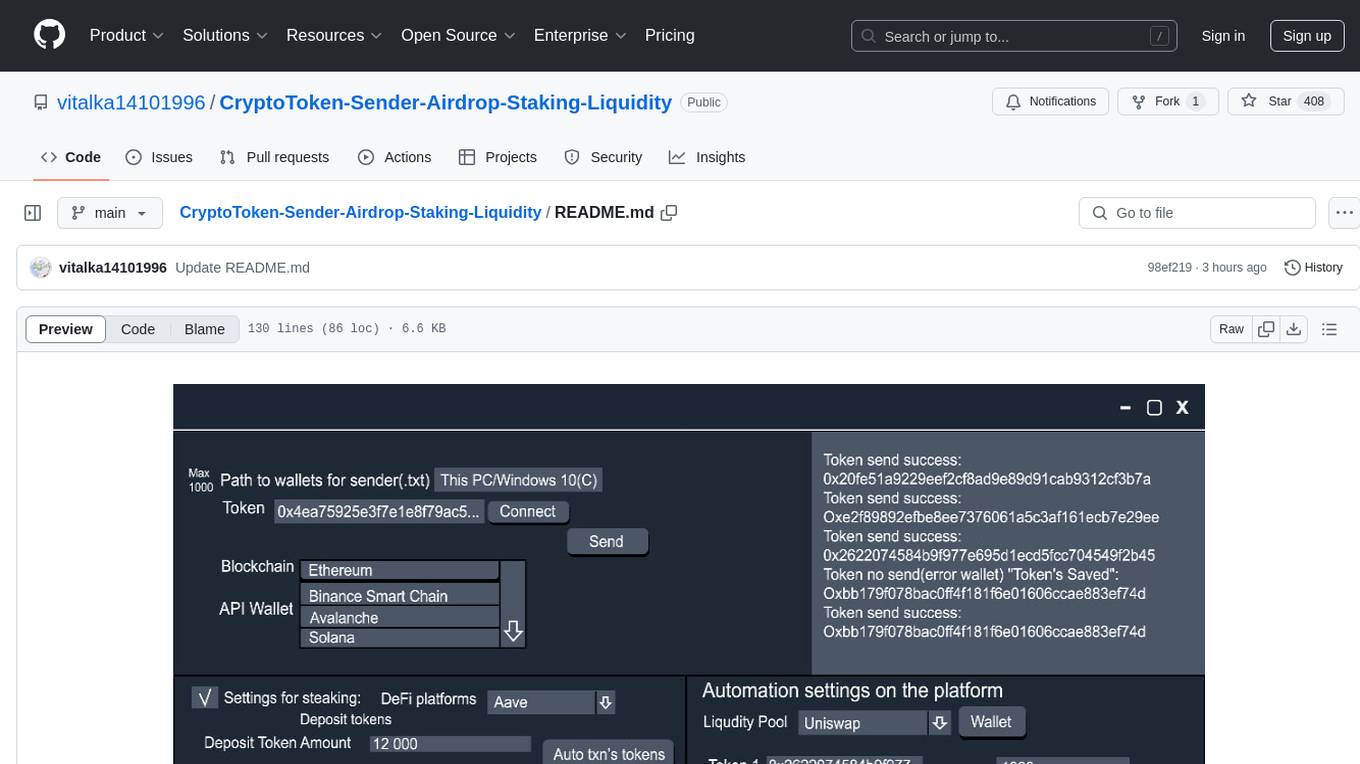

CryptoToken-Sender-Airdrop-Staking-Liquidity

The CryptoToken-Sender-Airdrop-Staking-Liquidity repository provides a tool for efficient and automated token distribution across blockchain wallets. It is designed for projects, DAOs, and blockchain-based organizations that need to distribute tokens to thousands of wallet addresses with ease. The platform offers advanced integrations with DeFi protocols for staking, liquidity farming, and automated payments. Users can send tokens in bulk, distribute tokens to multiple wallets instantly, optimize gas fees, integrate with DeFi protocols for liquidity provision and staking, set up recurring payments, automate liquidity farming strategies, support multi-chain operations, monitor transactions in real time, and work with various token standards. The repository includes features for connecting to blockchains, importing and managing wallets, customizing bulk-distribution parameters, monitoring transaction status, logging transactions, and providing a user-friendly interface for configuration and operation.

hallucination-index

LLM Hallucination Index - RAG Special is a comprehensive evaluation of large language models (LLMs) focusing on context length and open vs. closed-source attributes. The index explores the impact of context length on model performance and tests the assumption that closed-source LLMs outperform open-source ones. It also investigates the effectiveness of prompting techniques like Chain-of-Note across different context lengths. The evaluation includes 22 models from various brands, analyzing major trends and declaring overall winners based on short, medium, and long context insights. Methodologies involve rigorous testing with different context lengths and prompting techniques to assess models' abilities in handling extensive texts and detecting hallucinations.

awesome-generative-ai-guide

This repository serves as a comprehensive hub for updates on generative AI research, interview materials, notebooks, and more. It includes monthly best GenAI papers list, interview resources, free courses, and code repositories/notebooks for developing generative AI applications. The repository is regularly updated with the latest additions to keep users informed and engaged in the field of generative AI.

AI.Labs

AI.Labs is an open-source project that integrates advanced artificial intelligence technologies to create a powerful AI platform. It focuses on integrating AI services like large language models, speech recognition, and speech synthesis for functionalities such as dialogue, voice interaction, and meeting transcription. The project also includes features like a large language model dialogue system, speech recognition for meeting transcription, text-to-speech voice synthesis, and integrated translation and chat, and uses technologies like C#, .NET, SQLite, XAF, the OpenAI API, TTS, and STT.

Geoweaver

Geoweaver is an in-browser software that enables users to easily compose and execute full-stack data processing workflows using online spatial data facilities, high-performance computation platforms, and open-source deep learning libraries. It provides server management, code repository, workflow orchestration software, and history recording capabilities. Users can run it from both local and remote machines. Geoweaver aims to make data processing workflows manageable for non-coder scientists and preserve model run history. It offers features like progress storage, organization, SSH connection to external servers, and a web UI with Python support.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware; see the online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, macOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers, while keeping backward compatibility. Please don't hesitate to report any issues you encounter and to contact the team via the public Discord server to help this collaborative engineering effort.

CM scripts were originally developed based on the following requirements from MLCommons members, to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors:

- must work out of the box with the default options and without the need to edit paths, environment variables and configuration files;
- must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them;
- must have a very simple and human-friendly command line with a Python API and minimal dependencies;
- must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages;
- must have the same interface to run all automations natively, in a cloud or inside containers.

CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

NeMo

NVIDIA NeMo Framework is a scalable and cloud-native generative AI framework built for researchers and PyTorch developers working on Large Language Models (LLMs), Multimodal Models (MMs), Automatic Speech Recognition (ASR), Text to Speech (TTS), and Computer Vision (CV) domains. It is designed to help you efficiently create, customize, and deploy new generative AI models by leveraging existing code and pre-trained model checkpoints.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

NeMo

NeMo Framework is a generative AI framework built for researchers and PyTorch developers working on large language models (LLMs), multimodal models (MM), automatic speech recognition (ASR), and text-to-speech synthesis (TTS). The primary objective of NeMo is to provide a scalable framework for researchers and developers from industry and academia to more easily implement and design new generative AI models by being able to leverage existing code and pretrained models.

LLM-GenAI-Transformers-Notebooks

This repository is a collection of LLM notebooks with tutorials and projects. It covers topics such as Transformers tutorials, LLM notebooks and their applications, tools and technologies of GenAI, courses in GenAI, and Generative AI blogs/articles. Contributions are welcome.

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.

ianvs

Ianvs is a distributed synergy AI benchmarking project incubated in KubeEdge SIG AI. It aims to test the performance of distributed synergy AI solutions following recognized standards, providing end-to-end benchmark toolkits, test environment management tools, test case control tools, and benchmark presentation tools. It also collaborates with other organizations to establish comprehensive benchmarks and related applications. The architecture includes critical components like Test Environment Manager, Test Case Controller, Generation Assistant, Simulation Controller, and Story Manager. Ianvs documentation covers quick start, guides, dataset descriptions, algorithms, user interfaces, stories, and roadmap.

llvm-aie

This repository extends the LLVM framework to generate code for use with AMD/Xilinx AI Engine processors. AI Engine processors are in-order, exposed-pipeline VLIW processors focused on application acceleration for AI, Machine Learning, and DSP applications. The repository adds LLVM support for specific features like non-power of 2 pointers, operand latencies, resource conflicts, negative operand latencies, slot assignment, relocations, code alignment restrictions, and register allocation. It includes support for Clang, LLD, binutils, Compiler-RT, and LLVM-LIBC.

For similar tasks

agenta

Agenta is an open-source LLM developer platform for prompt engineering, evaluation, human feedback, and deployment of complex LLM applications. It provides tools for prompt engineering and management, evaluation, human annotation, and deployment, all without imposing any restrictions on your choice of framework, library, or model. Agenta allows developers and product teams to collaborate in building production-grade LLM-powered applications in less time.

langchain-benchmarks

A package to help benchmark various LLM-related tasks. The benchmarks are organized by end-to-end use cases and make heavy use of LangSmith. We have several goals in open-sourcing this:

- Showing how we collect our benchmark datasets for each task
- Showing which benchmark datasets we use for each task
- Showing how we evaluate each task
- Encouraging others to benchmark their solutions on these tasks (we are always looking for better ways of doing things!)
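
To make "how we evaluate each task" concrete, here is a minimal exact-match evaluation loop. It is a hypothetical sketch that does not use LangSmith or langchain-benchmarks APIs; the `{"input", "expected"}` row format and the `toy_model` stand-in are assumptions chosen so the example runs offline.

```python
from typing import Callable

def normalize(text: str) -> str:
    # Compare answers case-insensitively and ignore extra whitespace.
    return " ".join(text.lower().split())

def evaluate(predict: Callable[[str], str], dataset: list[dict]) -> dict:
    # Each row is assumed to look like {"input": ..., "expected": ...}.
    results = []
    for row in dataset:
        output = predict(row["input"])
        results.append({
            "input": row["input"],
            "output": output,
            "correct": normalize(output) == normalize(row["expected"]),
        })
    accuracy = sum(r["correct"] for r in results) / len(results) if results else 0.0
    return {"accuracy": accuracy, "results": results}

def toy_model(question: str) -> str:
    # Hypothetical stand-in for an LLM call.
    return {"capital of france?": "Paris"}.get(question.lower(), "I don't know")

dataset = [
    {"input": "Capital of France?", "expected": "paris"},
    {"input": "Capital of Peru?", "expected": "Lima"},
]
report = evaluate(toy_model, dataset)
print(report["accuracy"])
```

Exact match is only the simplest scorer; open-ended tasks usually need fuzzier metrics or an LLM-as-a-judge, but the loop of dataset, prediction and per-row scoring stays the same.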

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

moonshot

Moonshot is a simple and modular tool developed by the AI Verify Foundation to evaluate Large Language Models (LLMs) and LLM applications. It brings Benchmarking and Red-Teaming together to assist AI developers, compliance teams, and AI system owners in assessing LLM performance. Moonshot can be accessed through various interfaces including a user-friendly Web UI, an interactive Command Line Interface, and seamless integration into MLOps workflows via Library APIs or Web APIs. It offers features like benchmarking LLMs from popular model providers, running relevant tests, creating custom cookbooks and recipes, and automating Red Teaming to identify vulnerabilities in AI systems.

Cherry_LLM

Cherry Data Selection project introduces a self-guided methodology for LLMs to autonomously discern and select cherry samples from open-source datasets, minimizing manual curation and cost for instruction tuning. The project focuses on selecting impactful training samples ('cherry data') to enhance LLM instruction tuning by estimating instruction-following difficulty. The method involves phases like 'Learning from Brief Experience', 'Evaluating Based on Experience', and 'Retraining from Self-Guided Experience' to improve LLM performance.
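
The "instruction-following difficulty" estimate is, as I understand the Cherry LLM paper, an IFD score: the model's average answer loss given the instruction divided by its average answer loss without it, IFD(Q, A) = s(A | Q) / s(A). A higher score means the instruction helps the model less. The sketch below assumes per-token answer log-probabilities have already been computed by some model; the sample format, the IFD < 1 filter and the `top_fraction` cut are illustrative assumptions, not the project's exact pipeline.

```python
def avg_nll(token_logprobs):
    # Mean negative log-likelihood over the answer tokens.
    return -sum(token_logprobs) / len(token_logprobs)

def ifd_score(answer_logprobs_given_instruction, answer_logprobs_alone):
    # Conditioned answer loss divided by unconditioned answer loss.
    return avg_nll(answer_logprobs_given_instruction) / avg_nll(answer_logprobs_alone)

def select_cherry(samples, top_fraction=0.1):
    # Drop samples where the instruction makes the answer *harder* (IFD >= 1),
    # then keep the highest-IFD fraction of what remains.
    scored = [(ifd_score(s["cond"], s["uncond"]), s["id"]) for s in samples]
    kept = sorted((x for x in scored if x[0] < 1.0), reverse=True)
    n = max(1, int(len(kept) * top_fraction)) if kept else 0
    return [sample_id for _, sample_id in kept[:n]]

# Toy per-token log-probs standing in for real model outputs.
samples = [
    {"id": "a", "cond": [-0.5, -0.5], "uncond": [-1.0, -1.0]},  # IFD 0.5: easy
    {"id": "b", "cond": [-0.9, -0.9], "uncond": [-1.0, -1.0]},  # IFD 0.9: hard
    {"id": "c", "cond": [-1.2, -1.2], "uncond": [-1.0, -1.0]},  # IFD 1.2: dropped
]
print(select_cherry(samples, top_fraction=0.5))
```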

langevals

LangEvals is an all-in-one Python library for testing and evaluating LLMs. It can be used in notebooks for exploration, in pytest for writing unit tests, or as a server API for live evaluations and guardrails. The library is modular, with 20+ evaluators including Ragas for RAG quality, OpenAI Moderation, and Azure Jailbreak detection. LangEvals powers LangWatch evaluations and provides tools for batch evaluations on notebooks and unit test evaluations with PyTest. It also offers LangEvals evaluators for LLM-as-a-Judge scenarios and out-of-the-box evaluators for language detection and answer relevancy checks.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.
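
Matching a model to GPU hardware starts from simple arithmetic: weight memory is roughly parameter count times bytes per parameter, so a 7B-parameter model in fp16 (2 bytes each) needs about 14 GB just for weights. The sketch below turns that into a GPU-count choice. The SKU table, the 20% headroom for KV cache and activations, and the "fewest GPUs" rule are all hypothetical assumptions; this is not Kaito's preset logic.

```python
import math

# Hypothetical GPU SKUs (name -> memory per GPU in GB); not Kaito's preset table.
GPU_SKUS = {"gpu-16gb": 16, "gpu-24gb": 24, "gpu-80gb": 80}

def weight_memory_gb(num_params_billion: float, bytes_per_param: int = 2) -> float:
    # fp16/bf16 weights take 2 bytes per parameter: 7B params -> ~14 GB.
    return num_params_billion * bytes_per_param

def plan_gpus(num_params_billion: float, bytes_per_param: int = 2, overhead: float = 1.2):
    # Add assumed headroom, then pick the option needing the fewest GPUs,
    # breaking ties in favor of the smaller GPU.
    need = weight_memory_gb(num_params_billion, bytes_per_param) * overhead
    options = [(math.ceil(need / mem), mem, name) for name, mem in GPU_SKUS.items()]
    count, _, name = min(options)
    return name, count, need

print(plan_gpus(7))   # 7B fp16 model
print(plan_gpus(70))  # 70B fp16 model
```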

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.
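
The baseline-and-compare idea can be sketched as per-category attack success rates from one red-teaming run, checked against a later run to flag degradation. This is a hypothetical illustration that does not use PyRIT's API; the `(category, succeeded)` result format and the 5-point tolerance are assumptions.

```python
from collections import defaultdict

def harm_rates(results):
    # results: (harm_category, attack_succeeded) pairs from one red-team run.
    totals, hits = defaultdict(int), defaultdict(int)
    for category, succeeded in results:
        totals[category] += 1
        hits[category] += int(succeeded)
    return {c: hits[c] / totals[c] for c in totals}

def regressions(baseline, current, tolerance=0.05):
    # Flag categories whose attack success rate rose by more than `tolerance`.
    return sorted(c for c in current if current[c] - baseline.get(c, 0.0) > tolerance)

baseline = harm_rates([("jailbreak", False)] * 3 + [("jailbreak", True)]
                      + [("malware", False)] * 4)
current = harm_rates([("jailbreak", True)] * 2 + [("jailbreak", False)] * 2
                     + [("malware", False)] * 4)
print(regressions(baseline, current))
```

Here the jailbreak success rate rises from 25% to 50% between runs, so that category is flagged while malware stays clean.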

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.