Best AI tools for Train LLM Models

20 - AI tool Sites

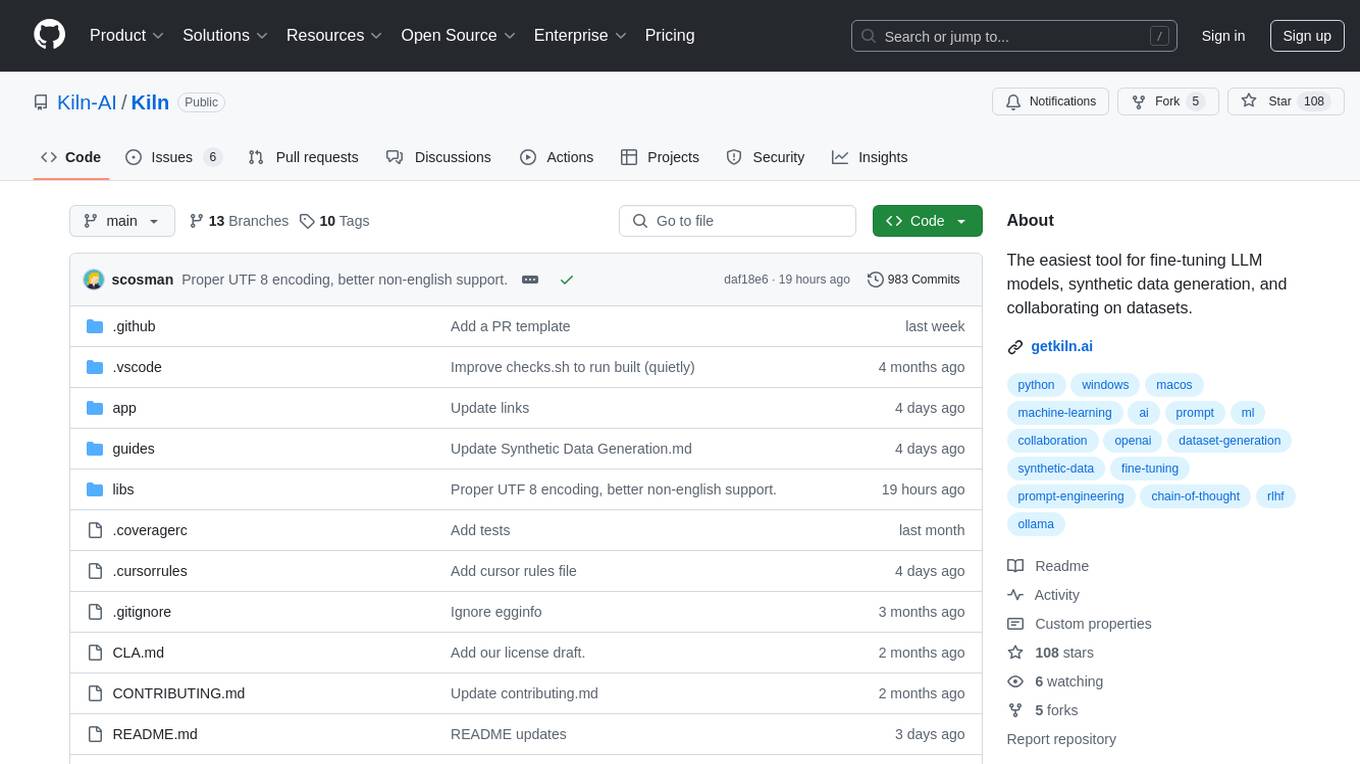

Kiln

Kiln is an AI tool designed for fine-tuning LLM models, generating synthetic data, and facilitating collaboration on datasets. It offers intuitive desktop apps, zero-code fine-tuning for various models, interactive visual tools for data generation, Git-based version control for datasets, and the ability to generate various prompts from data. Kiln supports a wide range of models and providers, provides an open-source library and API, prioritizes privacy, and allows structured data tasks in JSON format. The tool is free to use and focuses on rapid AI prototyping and dataset collaboration.

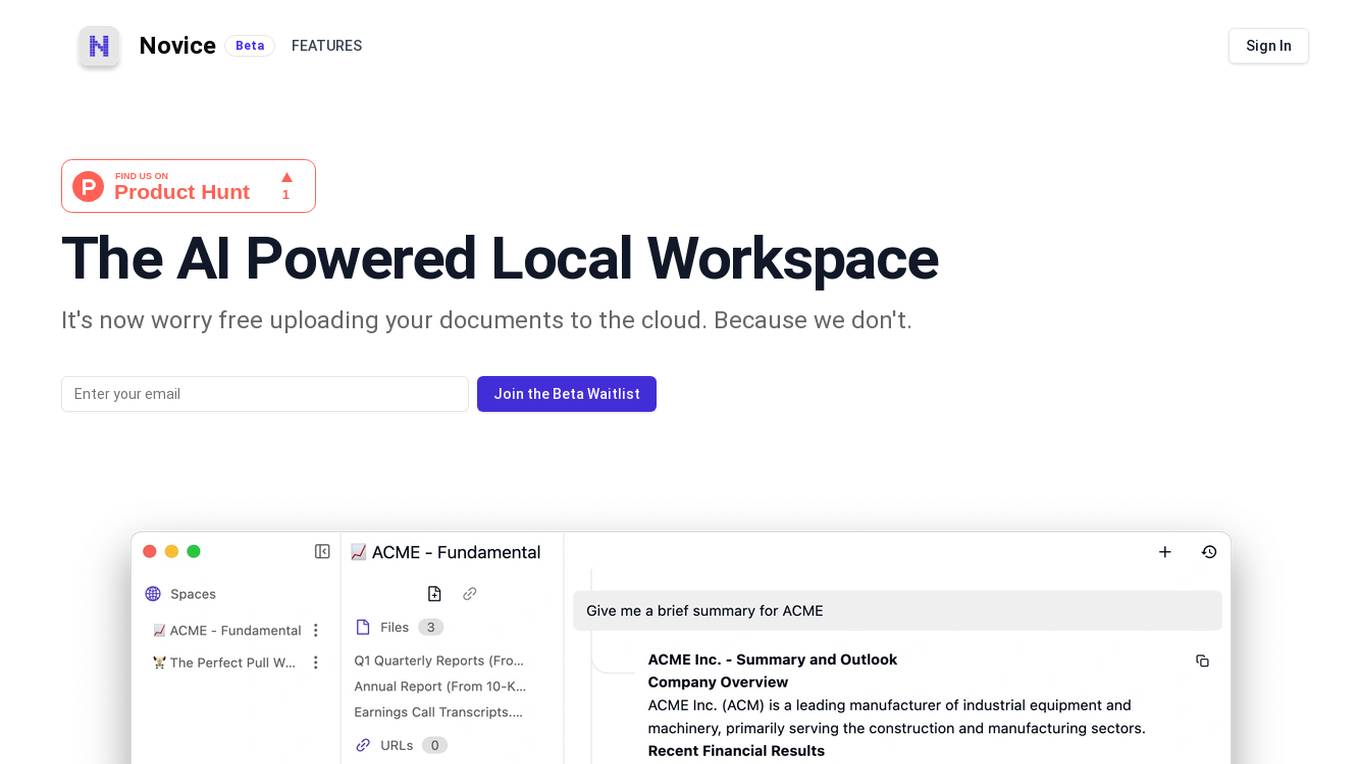

Novice

Novice is an AI-powered local workspace that allows users to access a wide range of models, including Open Source LLM models, without the need for complex setups. It ensures data confidentiality by enabling users to process data directly on their own computer. Novice eliminates the hassle of uploading files to the cloud and offers a cost-effective solution for utilizing AI technologies.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

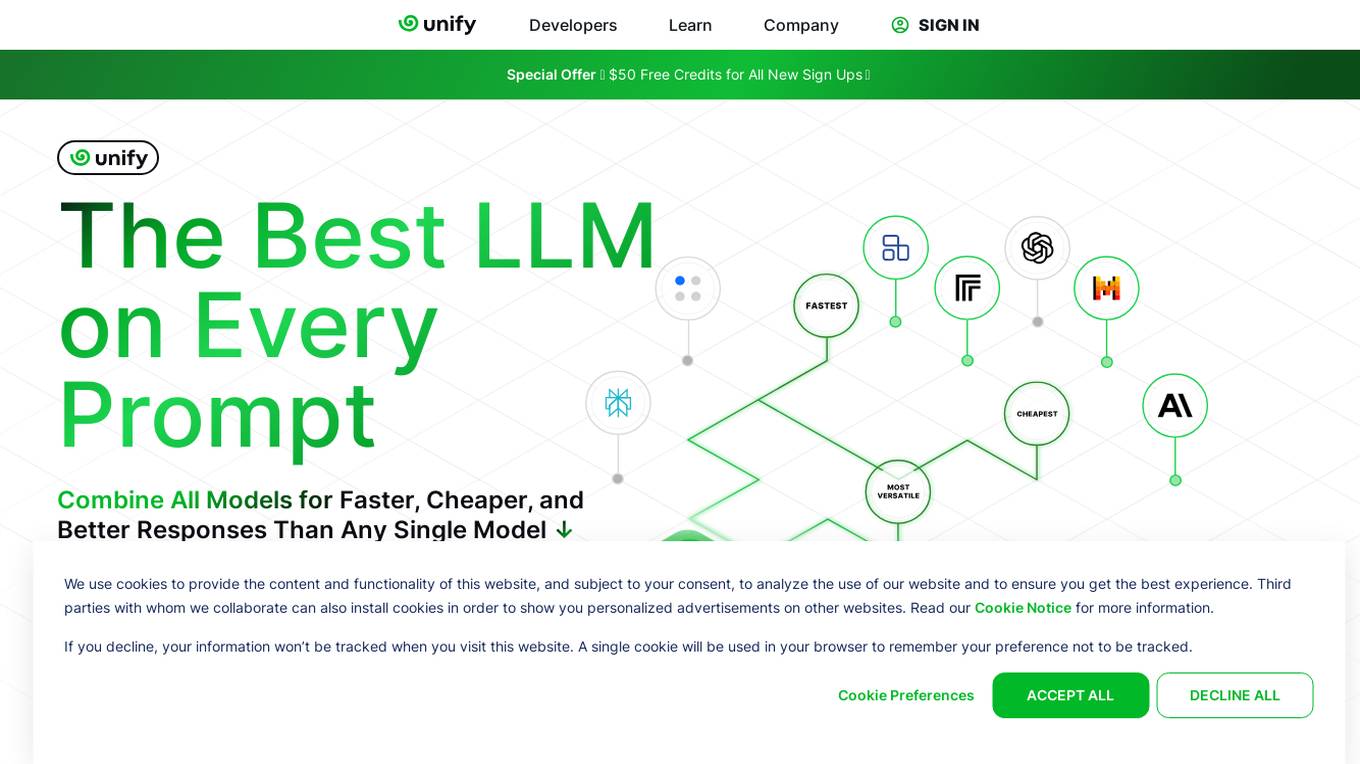

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing large language models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.

Ragobble

Ragobble is an audio to LLM data tool that allows you to easily convert audio files into text data that can be used to train large language models (LLMs). With Ragobble, you can quickly and easily create high-quality training data for your LLM projects.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

ApX Machine Learning

ApX Machine Learning is a comprehensive resource for AI students, developers, and researchers, offering tools and learning resources to pioneer the future of AI. It provides a wide range of courses, tools, and benchmarks for learners, developers, and researchers in the field of machine learning and artificial intelligence. The platform aims to enhance the capabilities of existing large language models (LLMs) through the Model Context Protocol (MCP), providing access to resources, benchmarks, and tools to improve LLM performance and efficiency.

Moreh

Moreh is an AI platform that aims to make hyperscale AI infrastructure more accessible for scaling any AI model and application. It provides a full-stack infrastructure software from PyTorch to GPUs for the LLM era, enabling users to train large language models efficiently and effectively.

micro1

micro1 is an AI recruitment tool that leverages human data produced by subject matter experts to help companies identify and hire top talent efficiently. The platform offers end-to-end post-training solutions, high-quality data for model training, pre-vetted AI trainers, and enterprise-grade LLM evaluations. With a focus on tech startups, staffing agencies, and enterprises, micro1 aims to streamline the recruitment process and save costs for businesses.

Monkt

Monkt is a powerful document processing platform that transforms various document formats into AI-ready Markdown or structured JSON. It offers features like instant conversion of PDF, Word, PowerPoint, Excel, CSV, web pages, and raw HTML into clean markdown format optimized for AI/LLM systems. Monkt enables users to create intelligent applications, custom AI chatbots, knowledge bases, and training datasets. It supports batch processing, image understanding, LLM optimization, and API integration for seamless document processing. The platform is designed to handle document transformation at scale, with support for multiple file formats and custom JSON schemas.

Mirage

Mirage is a custom AI platform that builds custom LLMs to accelerate productivity. It is backed by Sequoia and offers a variety of features, including the ability to create custom AI models, train models on your own data, and deploy models to the cloud or on-premises.

Ferhat Erata

Ferhat Erata is a Computer Science PhD graduate from Yale University. His work focuses on training transformers to solve NP-complete problems using reinforcement learning and improving test-time compute strategies for reasoning. It also explores learning randomized reductions and program properties for security, privacy, and side-channel resilience. Ferhat Erata is currently an Applied Scientist at the Automated Reasoning Group at AWS, working on Neuro-Symbolic AI to prevent factual errors caused by LLM hallucinations using mathematically sound Automated Reasoning checks.

EDOM.AI

EDOM.AI is the first artificial business brain that provides secret strategies used by major companies to help users create, grow, and start their businesses. It offers access to proven billionaire secrets and allows users to create ideas based on the brains of the greatest entrepreneurs. EDOM.AI is constantly evolving to offer the best LLM possible for businesses.

Yellow.ai

Yellow.ai is a leading provider of AI-powered customer service automation solutions. Its Dynamic Automation Platform (DAP) is built on multi-LLM architecture and continuously trains on billions of conversations for scale, speed, and accuracy. Yellow.ai's platform leverages the latest advancements in NLP and generative AI to deliver empathetic and context-aware conversations that exceed customer expectations across channels. With its enterprise-grade security, advanced analytics, and zero-setup bot deployment, Yellow.ai helps businesses transform their customer and employee experiences with AI-powered automation.

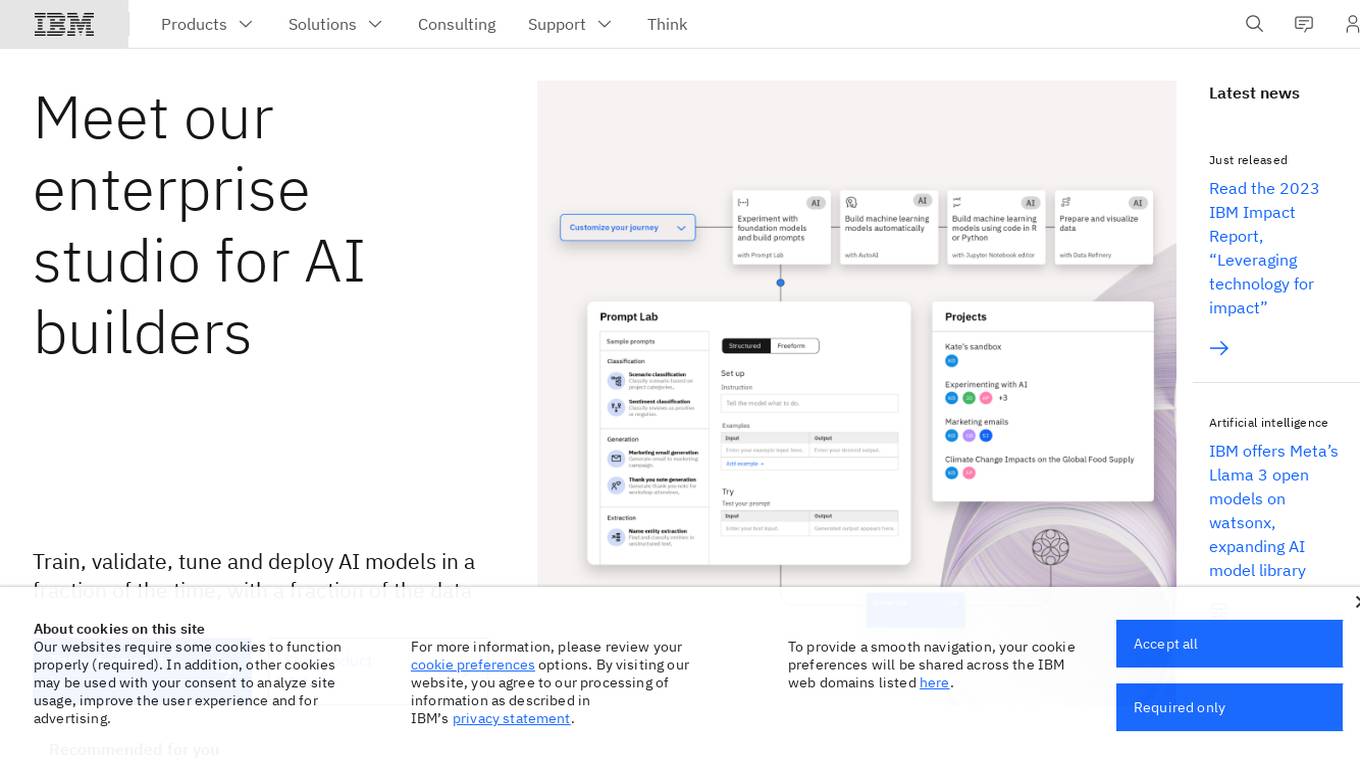

IBM Watsonx

IBM Watsonx is an enterprise studio for AI builders. It provides a platform to train, validate, tune, and deploy AI models quickly and efficiently. With Watsonx, users can access a library of pre-trained AI models, build their own models, and deploy them to the cloud or on-premises. Watsonx also offers a range of tools and services to help users manage and monitor their AI models.

Outlier AI

Outlier AI is a platform that connects subject matter experts to help build the world's most advanced Generative AI. It allows experts to work on various projects from generating training data to evaluating model performance. The platform offers flexibility, allowing contributors to work from home on their own schedule. Outlier AI aims to redefine how AI learns by leveraging the expertise of domain specialists across different fields.

Athletica AI

Athletica AI is an AI-powered athletic training and personalized fitness application that offers tailored coaching and training plans for various sports like cycling, running, duathlon, triathlon, and rowing. It adapts to individual fitness levels, abilities, and availability, providing daily step-by-step training plans and comprehensive session analyses. Athletica AI integrates seamlessly with workout data from platforms like Garmin, Strava, and Concept 2 to craft personalized training plans and workouts. The application aims to help athletes train smarter, not harder, by leveraging the power of AI to optimize performance and achieve fitness goals.

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast and efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates seamlessly with existing tools and offers fractional GPU usage and a pay-as-you-play model to maximize resource utilization.

Kaiden AI

Kaiden AI is an AI-powered training platform that offers personalized, immersive simulations to enhance skills and performance across various industries and roles. It provides feedback-rich scenarios, voice-enabled interactions, and detailed performance insights. Users can create custom training scenarios, engage with AI personas, and receive real-time feedback to improve communication skills. Kaiden AI aims to revolutionize training solutions by combining AI technology with real-world practice.

7 - Open Source AI Tools

RTL-Coder

RTL-Coder is a tool designed to outperform GPT-3.5 in RTL code generation by providing a fully open-source dataset and a lightweight solution. It targets Verilog code generation and offers an automated flow to generate a large labeled dataset with over 27,000 diverse Verilog design problems and answers. The tool addresses the data availability challenge in IC design-related tasks and can be used for various applications beyond LLMs. The tool includes four RTL code generation models available on the HuggingFace platform, each with specific features and performance characteristics. Additionally, RTL-Coder introduces a new LLM training scheme based on code quality feedback to further enhance model performance and reduce GPU memory consumption.

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence parallel approach that combines the strengths of DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile and high-performance solution for long context LLM model training and inference. It addresses the limitations of both methods by offering no limitation on the number of heads, compatibility with advanced parallel strategies, and enhanced performance benchmarks. The tool is verified in Megatron-LM and offers best practices for 4D parallelism, making it suitable for various attention mechanisms and parallel computing advancements.
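As a rough illustration of why a hybrid scheme lifts the head-count limitation: Ulysses-style parallelism shards attention heads across devices, so its degree has to divide the number of heads, while Ring-style parallelism shards the sequence and has no such constraint. The sketch below splits a device group into the two degrees. The function name and the gcd-based choice are assumptions made for illustration and are not taken from the repository's API.

```python
from math import gcd


def split_sequence_parallel_degrees(num_heads: int, world_size: int) -> tuple[int, int]:
    """Return (ulysses_degree, ring_degree) with ulysses_degree * ring_degree == world_size.

    Hypothetical helper for illustration only, not part of long-context-attention.
    """
    if num_heads < 1 or world_size < 1:
        raise ValueError("num_heads and world_size must be positive")
    # Head-sharded (Ulysses-style) attention needs a degree that divides num_heads,
    # so take the largest common divisor of the head count and the device count.
    ulysses_degree = gcd(num_heads, world_size)
    # Sequence-sharded (Ring-style) attention covers whatever devices remain.
    ring_degree = world_size // ulysses_degree
    return ulysses_degree, ring_degree
```

For example, a model with 6 heads on 8 devices cannot use head sharding alone, but this split assigns a head-sharding degree of 2 and a ring degree of 4.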

Graph-Reasoning-LLM

This repository, GraphWiz, focuses on developing an instruction-following large language model (LLM) for solving graph problems. It includes GraphWiz LLMs with strong graph problem-solving abilities, the GraphInstruct dataset with over 72.5k training samples across nine graph problem tasks, and models like GPT-4 and Mistral-7B for comparison. The project aims to map textual descriptions of graphs and structures to solutions of various graph problems, stated explicitly in natural language.

effective_llm_alignment

This is a highly customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It supports methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, NER, CLIP, Classification, and STS. The toolkit builds on libraries such as PyTorch, Transformers, TRL, Accelerate, FSDP, and DeepSpeed, and logs results with wandb or clearml. It also supports mixing datasets, generation with logging to wandb/clearml, vLLM batched generation, and model alignment with the SMPO method.
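To make one of the listed methods concrete, here is a minimal sketch of the standard DPO objective for a single preference pair, written in plain Python. It is not taken from the toolkit: the function name, argument names, and the default `beta` are assumptions. The inputs are the summed log-probabilities of the chosen and rejected responses under the policy and under a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair; illustrative, not the toolkit's code."""
    # Log-ratio of policy to reference for each response.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # Scaled margin by which the policy prefers the chosen response.
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)), computed in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy matches the reference, the margin is zero and the loss equals log 2; it falls as the policy shifts probability toward the chosen response relative to the reference.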

llmstxt-generator

llms.txt Generator is a tool designed for LLM (Large Language Model) training and inference. It crawls websites to combine content into consolidated text files, offering both standard and full versions. Users can access the tool through a web interface or API without requiring an API key. It is powered by Firecrawl for web crawling and GPT-4-mini for text processing.
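The consolidation step might look roughly like the sketch below, which assumes the pages have already been crawled and summarized. The function name and the `title`, `url`, `description`, and `content` keys are hypothetical. The layout (a title heading, a blockquote summary, then a list of links) follows the general shape of the llms.txt proposal, and appending each page's text for the full version is an assumption about what the tool emits.

```python
def build_llms_txt(site_name, summary, pages, full=False):
    """Combine crawled pages into one llms.txt-style string.

    `pages` is a list of dicts with hypothetical keys:
    'title', 'url', 'description', 'content'.
    """
    # Header: site title, one-line summary, then an index of links.
    lines = [f"# {site_name}", "", f"> {summary}", "", "## Pages", ""]
    for page in pages:
        lines.append(f"- [{page['title']}]({page['url']}): {page['description']}")
    if full:
        # Full version: append each page's text under its own heading.
        for page in pages:
            lines += ["", f"## {page['title']}", "", page["content"].strip()]
    return "\n".join(lines) + "\n"
```

Calling it with `full=False` yields only the link index, while `full=True` also inlines every page's content.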

self-learn-llms

Self Learn LLMs is a repository containing resources for self-learning about Large Language Models. It includes theoretical and practical hands-on resources to facilitate learning. The repository aims to provide a clear roadmap with milestones for a proper understanding of LLMs. The owner plans to refactor the repository to remove irrelevant content, organize the model zoo better, and enhance the learning experience by adding contributors and hosting notes, tutorials, and open discussions.

20 - OpenAI GPTs

HackMeIfYouCan

Hack Me if you can - I can only talk to you about computer security, software security and LLM security @JacquesGariepy

How to Train a Chessie

Comprehensive training and wellness guide for Chesapeake Bay Retrievers.

The Train Traveler

Friendly train travel guide focusing on the best routes, essential travel information, and personalized travel insights, for both experienced and novice travelers.

How to Train Your Dog (or Cat, or Dragon, or...)

Expert in pet training advice, friendly and engaging.

TrainTalk

Your personal advisor for eco-friendly train travel. Let's plan your next journey together!

Monster Battle - RPG Game

Train monsters, travel the world, earn Arena Tokens and become the ultimate monster battling champion of earth!

Hero Master AI: Superhero Training

Train to become a superhero or a supervillain. Master your powers, make pivotal choices. Each decision you make in this action-packed game not only shapes your abilities but also your moral alignment in the battle between good and evil. Another GPT Simulator by Dave Lalande

Pytorch Trainer GPT

Your purpose is to create PyTorch code to train language models.

Design Recruiter

Job interview coach for product designers. Practice interviews and say stop when you need feedback. You got this!!

Pocket Training Activity Expert

Expert in engaging, interactive training methods and activities.

RailwayGPT

Technical expert on locomotives, trains, signalling, and railway technology. Can answer questions and draw designs specific to transportation domain.

Railroad Conductors and Yardmasters Roadmap

Don’t know where to even begin? Let me help create a roadmap towards the career of your dreams! Type "help" for More Information