effective_llm_alignment

Effective LLM Alignment Toolkit

Stars: 105

This is a highly customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It supports SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, a special prompts basket format, Rejection Sampling, scoring using an RM, and FAISS Map-Reduce deduplication, with NER, CLIP, Classification, and STS tested as work in progress. It builds on PyTorch, Transformers, TRL, Accelerate, FSDP, and DeepSpeed, and logs results to wandb or clearml. It also supports mixing datasets, generating and logging evaluation examples in wandb/clearml, and vLLM batched generation.

README:

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. To get started, simply define a YAML configuration with parameters from HF TrainingArguments and some specific parameters for each method.
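As a rough illustration of how one flat YAML file can carry both HF TrainingArguments fields and method-specific options, the sketch below routes keys to dataclasses by field name. This is an assumption about the general approach, not the toolkit's actual parsing code; `TrainingArgs`, `SFTScriptArgs`, and `split_config` are hypothetical names, and the dict stands in for an already-loaded YAML file.

```python
from dataclasses import dataclass, field, fields


@dataclass
class TrainingArgs:
    # Hypothetical stand-in for a few fields of HF TrainingArguments.
    learning_rate: float = 5e-5
    num_train_epochs: float = 3.0
    per_device_train_batch_size: int = 8


@dataclass
class SFTScriptArgs:
    # Hypothetical container for method-specific options.
    dataset: list = field(default_factory=list)
    conversation_field: str = "conversation"
    train_only_on_completions: bool = False


def split_config(config, *arg_types):
    """Route each key of a flat config dict to the dataclass that declares it."""
    remaining = dict(config)
    parsed = []
    for arg_type in arg_types:
        names = {f.name for f in fields(arg_type)}
        kwargs = {k: remaining.pop(k) for k in list(remaining) if k in names}
        parsed.append(arg_type(**kwargs))
    if remaining:
        # Fail loudly on typos instead of silently ignoring them.
        raise ValueError(f"Unknown config keys: {sorted(remaining)}")
    return tuple(parsed)


# Values below mirror a few keys of the SFT example config in this README.
config = {
    "learning_rate": 0.00004,
    "num_train_epochs": 1,
    "per_device_train_batch_size": 1,
    "dataset": ["Vikhrmodels/GrandMaster-PRO-MAX"],
    "conversation_field": "conversation",
    "train_only_on_completions": True,
}
training_args, sft_args = split_config(config, TrainingArgs, SFTScriptArgs)
print(training_args)
print(sft_args)
```

Rejecting unknown keys is a design choice of this sketch: a misspelled option raises an error instead of being dropped.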

Key Libraries:

- Core: PyTorch, Transformers, TRL

- Distributed Training: Accelerate, FSDP, DeepSpeed (Zero 2/3)

- Acceleration: vLLM, Flash Attention, SDPA, Liger Kernel (for fused CrossEntropy in SFT)

- Build and Installation: Poetry

- Result Logging: Choose between wandb or clearml

- SFT: With the possibility to disable loss on unwanted message roles

- Distillation: Options include KL Div, JS Div, SLIM, Earth Mover, MSE, Soft CE, Cosine, Alpha-Beta Div

- DPO: All TRL options (IPO, SLic-HF, RPO, etc)

- ORPO: All TRL options

- CPO and SimPO: All TRL options

- SMPO: Our own most stable alignment method (details below)

- Non-pair Reward Modeling: With margins and centring support from TRL

- Special prompts basket format: Our prompts basket format (vi-basket) makes it possible to generate dialogs with follow-ups and system prompts.

- Rejection Sampling: Effective async preference dataset generation using vLLM and RM

- Scoring using RM: Async generation and scoring of answers based on a prompts basket, using an external RM and vLLM

- Effective FAISS Map-Reduce Deduplication: We have tools for Map-Reduce based deduplication for dense embeddings. It can deduplicate VERY large datasets in parallel.

- LLM scoring using RM: Use an RM and your dataset to calculate RM score statistics to compare models.

- NER, CLIP, Classification, STS: Not native to this toolkit but tested (Work in Progress)

- All datasets follow the JSON lines format and conform to Hugging Face standards (storing messages in the format [{'role': ..., 'content': ...}]).

- The ability to mix any number of datasets for training, provided they use the same column names for replicas.

- Generation and logging in wandb/clearml of test replicas during evaluation runs for SFT and Preference training (using generate_eval_examples and num_gen_examples options in configs).

- vLLM batched generation of answers for some datasets using an OpenAI-like server.
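The dataset conventions above can be sketched as follows. This is a minimal illustration, not the toolkit's loader: `parse_jsonl` and `mix_datasets` are hypothetical helpers, the in-memory strings stand in for `.jsonl` files, and the column name `conversation` is taken from the `conversation_field` option in the example config.

```python
import json


def parse_jsonl(text, conversation_field="conversation"):
    """Parse JSON lines and check each row holds a list of role/content messages."""
    rows = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue
        row = json.loads(line)
        if conversation_field not in row:
            raise KeyError(f"line {line_no}: missing column {conversation_field!r}")
        for message in row[conversation_field]:
            if not {"role", "content"} <= set(message):
                raise ValueError(f"line {line_no}: message needs 'role' and 'content'")
        rows.append(row)
    return rows


def mix_datasets(*datasets):
    """Concatenate datasets that were validated against the same column name."""
    return [row for dataset in datasets for row in dataset]


# Two tiny datasets, one JSON object per line.
dataset_a = "\n".join(json.dumps(r) for r in [
    {"conversation": [{"role": "user", "content": "Hi"},
                      {"role": "assistant", "content": "Hello!"}]},
    {"conversation": [{"role": "user", "content": "2+2?"},
                      {"role": "assistant", "content": "4"}]},
])
dataset_b = json.dumps(
    {"conversation": [{"role": "system", "content": "Be brief."},
                      {"role": "user", "content": "Name a color."},
                      {"role": "assistant", "content": "Blue."}]}
)

mixed = mix_datasets(parse_jsonl(dataset_a), parse_jsonl(dataset_b))
print(len(mixed), "rows in the mixed dataset")
```

A dataset whose messages live under a different column name fails validation here, which mirrors the requirement that mixed datasets share column names.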

SMPO is our own alignment method designed for preference optimization (PO) stability. It is inspired by methods such as IPO, SimPO, and C-RLFT, and introduces its own loss function for separating chosen and rejected pairs.

The main idea is to reach the desired margin level smoothly, without forcing the model to retrain, by adding a balancing SFT loss on chosen and rejected responses at the same time.

The implementation of the method is here, and the config is here.
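To make the idea concrete, here is a purely illustrative sketch of a loss with the ingredients described above: a squared penalty that pulls the chosen/rejected margin toward a target (IPO-like), length-averaged log-probabilities (SimPO-like), and an SFT term on both responses. It is NOT the SMPO loss from this repository; the function name, the exact formula, `target_margin`, and `sft_weight` are all assumptions made for illustration.

```python
def margin_plus_sft_loss(chosen_logps, rejected_logps,
                         target_margin=1.0, sft_weight=0.1):
    """Illustrative only: margin-to-target penalty plus a balancing SFT term.

    chosen_logps / rejected_logps are per-token log-probabilities of the
    chosen and rejected responses under the policy model.
    """
    # Length-normalized sequence log-probabilities.
    avg_chosen = sum(chosen_logps) / len(chosen_logps)
    avg_rejected = sum(rejected_logps) / len(rejected_logps)

    # Squared distance to the target margin: the penalty vanishes once the
    # margin is reached, so the model is not pushed to widen it forever.
    margin = avg_chosen - avg_rejected
    margin_term = (margin - target_margin) ** 2

    # Hypothetical balancing term: average negative log-likelihood of both
    # responses, so neither sequence's likelihood collapses.
    sft_term = -(avg_chosen + avg_rejected) / 2

    return margin_term + sft_weight * sft_term


at_target = margin_plus_sft_loss([-0.5, -0.5], [-1.5, -1.5])
no_margin = margin_plus_sft_loss([-1.0], [-1.0])
print(at_target, no_margin)
```

In this toy form, a pair that already sits at the target margin contributes only the small SFT term, while a pair with no separation is penalized by the margin term as well.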

Run the following commands inside the project folder:

- Install Poetry:

pip install poetry

- Install project dependencies:

poetry install

Verify with:

poetry show

- (Optional) Set the environment variable HF_HOME to your desired folder:

export HF_HOME=/mnt/hf/

- (Optional) Log in to Hugging Face CLI:

poetry run huggingface-cli login

- (Optional) Log in to Weights & Biases:

poetry run wandb login

- Check the configuration settings in the accelerate/ folder (number of GPUs, etc.).

First, make sure you have all the necessary developer Linux libraries installed, including GCC and G++ version 8 or higher. You can check this by running:

gcc --version

It is recommended to run these steps before any installation:

apt update

apt install build-essential zlib1g-dev libffi-dev libssl-dev libbz2-dev libreadline-dev libsqlite3-dev liblzma-dev libncurses-dev tk-dev

Next, ensure that CUDA is version 11.8 or higher (preferably 12.1) and that all your GPUs are detected. Use the command:

nvidia-smi

After completing the first step of installation, check that you have Poetry version 1.8+ installed; it is best to use Python 3.10.16. If not, update Poetry with:

poetry self update

and run:

poetry env use 3.10.16

You can install Python 3.10.16 via pyenv.

After the second installation step, make sure that running poetry run ds_report returns meaningful text. Additionally, verify the version of Torch and the presence of NVIDIA packages.
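One way to check the Torch version and the NVIDIA packages is to list the installed distributions from inside the Poetry environment. The script below is a small sketch using only the standard library; the file name `check_env.py` and the helper `find_packages` are hypothetical, and the prefixes are an assumption about which package names are of interest.

```python
from importlib import metadata


def find_packages(prefixes=("torch", "nvidia", "deepspeed")):
    """Return {name: version} for installed packages whose name starts with a prefix."""
    found = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        # Broken installs can lack a name; skip them instead of crashing.
        if name and name.lower().startswith(prefixes):
            found[name] = dist.version
    return found


installed = find_packages()
if not installed:
    print("No torch / nvidia / deepspeed packages found in this environment.")
for name, version in sorted(installed.items()):
    print(f"{name}=={version}")
```

Saved as `check_env.py`, it can be run with `poetry run python check_env.py` so that it inspects the project environment and not the system Python.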

If you encounter an error related to DeepSpeed and fused_adam during training, you need to remove DeepSpeed from your environment and install it with:

DS_BUILD_FUSED_ADAM=1 poetry run pip install deepspeed==0.14.5

Sometimes DeepSpeed errors depend on the Python version; everything was tested on 3.10.16 in different environments.

You need to select a DeepSpeed config + a training config + the script itself. Here’s an example command to start SFT training using YAML config:

PYTHONPATH="${PYTHONPATH}:src/" poetry run accelerate launch --config_file accelerate/stage2_config.yaml scripts/sft.py training_configs/sft/sft-llama-3.1-8b-it-lora-GrandmasterRAG-v1.yaml

SFT Training

Config for SFT training of Llama 3.1 with the Liger kernel, loss only on assistant answers, a modified chat template, LoRA, and example generation during evaluation.

model_name_or_path: "unsloth/Meta-Llama-3.1-8B-Instruct"

dataset:

- "Vikhrmodels/GrandMaster-PRO-MAX"

- "Vikhrmodels/Grounded-RAG-RU-v2"

train_only_on_completions: True

per_device_train_batch_size: 1

per_device_eval_batch_size: 1

num_train_epochs: 1

save_strategy: "steps"

save_steps: 400

save_total_limit: 6

learning_rate: 0.00004

gradient_accumulation_steps: 8

gradient_checkpointing: True

logging_steps: 1

remove_unused_columns: False

dataloader_num_workers: 2

save_only_model: True

generate_eval_examples: True

use_liger: True

max_seq_length: 16000

evaluation_strategy: "steps"

eval_steps: 400

run_name: "sft-grndmrag-llama-3.1-unsloth-lora-256-qkvogudlm-v1"

output_dir: "/home/models/sft-grndmrag-llama-3.1-unsloth-lora-256-qkvogudlm-v1"

warmup_steps: 20

report_to: "wandb"

conversation_field: "conversation"

bf16: True

seed: 42

logging_first_step: True

use_peft: True

lora_target_modules:

- "k_proj"

- "v_proj"

- "q_proj"

- "o_proj"

- "gate_proj"

- "up_proj"

- "down_proj"

- "lm_head"

lora_r: 256

lora_alpha: 256

assistant_message_template: "<|start_header_id|>assistant<|end_header_id|>\n\n"

pad_token: "<|reserved_special_token_0|>"

eos_token: "<|eot_id|>"

chat_template: "{{ bos_token }}{% set loop_messages = messages %}{% for message in loop_messages %}{% set content = '<|start_header_id|>' + message['role'] + '<|end_header_id|>\n\n'+ message['content'] | trim + '<|eot_id|>' %}{{ content }}{% endfor %}{% if add_generation_prompt %}{{ '<|start_header_id|>assistant<|end_header_id|>\n\n' }}{% endif %}"

force_chat_template: True
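To see what the chat_template above produces, the sketch below re-implements it in plain Python and marks the character spans that follow assistant_message_template. The rendering follows the Jinja template in the config; the span logic is only an assumption about how train_only_on_completions might select text for the loss (for example, whether the eos token is included), and `render_chat` and `completion_spans` are hypothetical helpers. The bos token value is the one used by Llama 3.1 tokenizers.

```python
BOS = "<|begin_of_text|>"  # Llama 3.1 bos token; not stated in the config itself
ASSISTANT_TEMPLATE = "<|start_header_id|>assistant<|end_header_id|>\n\n"
EOS = "<|eot_id|>"


def render_chat(messages, bos_token=BOS, add_generation_prompt=False):
    """Plain-Python equivalent of the Jinja chat_template in the config."""
    parts = [bos_token]
    for message in messages:
        parts.append(
            "<|start_header_id|>" + message["role"] + "<|end_header_id|>\n\n"
            + message["content"].strip()  # the template applies `| trim` to content
            + EOS
        )
    if add_generation_prompt:
        parts.append(ASSISTANT_TEMPLATE)
    return "".join(parts)


def completion_spans(text):
    """Assumed rule: each span starts after the assistant header and ends after eos."""
    spans = []
    start = text.find(ASSISTANT_TEMPLATE)
    while start != -1:
        begin = start + len(ASSISTANT_TEMPLATE)
        end = text.find(EOS, begin)
        end = len(text) if end == -1 else end + len(EOS)
        spans.append((begin, end))
        start = text.find(ASSISTANT_TEMPLATE, end)
    return spans


messages = [
    {"role": "user", "content": "  Hi  "},
    {"role": "assistant", "content": "Hello!"},
]
rendered = render_chat(messages)
spans = completion_spans(rendered)
print(repr(rendered))
print([rendered[begin:end] for begin, end in spans])
```

In a real trainer the same selection happens at the token level, with labels outside the selected spans set to an ignore index; character spans are used here only to keep the example free of dependencies.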

Alternative AI tools for effective_llm_alignment

Similar Open Source Tools

py-llm-core

PyLLMCore is a lightweight interface to Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a simple Pythonic API, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports models compatible with the llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucination reduction, context size management, and tokenizing.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

sre

SmythOS is an operating system designed for building, deploying, and managing intelligent AI agents at scale. It provides a unified SDK and resource abstraction layer for various AI services, making it easy to scale and flexible. With an agent-first design, developer-friendly SDK, modular architecture, and enterprise security features, SmythOS offers a robust foundation for AI workloads. The system is built with a philosophy inspired by traditional operating system kernels, ensuring autonomy, control, and security for AI agents. SmythOS aims to make shipping production-ready AI agents accessible and open for everyone in the coming Internet of Agents era.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

monty

Monty is a minimal, secure Python interpreter written in Rust for use by AI. It allows safe execution of Python code written by an LLM embedded in your agent, with fast startup times and performance similar to CPython. Monty supports running a subset of Python code, blocking access to the host environment, calling host functions, typechecking, snapshotting interpreter state, controlling resource usage, collecting stdout and stderr, and running async or sync code. It is designed for running code written by agents, providing a sandboxed environment without the complexity of a full container-based solution.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

hf-waitress

HF-Waitress is a powerful server application for deploying and interacting with HuggingFace Transformer models. It simplifies running open-source Large Language Models (LLMs) locally on-device, providing on-the-fly quantization via BitsAndBytes, HQQ, and Quanto. It requires no manual model downloads, offers concurrency, streaming responses, and supports various hardware and platforms. The server uses a `config.json` file for easy configuration management and provides detailed error handling and logging.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly in the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its TypeScript support and pre-built npm package, Wllama is easy to integrate into your React TypeScript projects.

gpt-computer-assistant

GPT Computer Assistant (GCA) is an open-source framework designed to build vertical AI agents that can automate tasks on Windows, macOS, and Ubuntu systems. It leverages the Model Context Protocol (MCP) and its own modules to mimic human-like actions and achieve advanced capabilities. With GCA, users can empower themselves to accomplish more in less time by automating tasks like updating dependencies, analyzing databases, and configuring cloud security settings.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

golf

Golf is a simple command-line tool for calculating the distance between two geographic coordinates. It uses the Haversine formula to accurately determine the distance between two points on the Earth's surface. This tool is useful for developers working on location-based applications or projects that require distance calculations. With Golf, users can easily input latitude and longitude coordinates and get the precise distance in kilometers or miles. The tool is lightweight, easy to use, and can be integrated into various programming workflows.

nanocoder

Nanocoder is a local-first CLI coding agent that supports multiple AI providers with tool support for file operations and command execution. It focuses on privacy and control, allowing users to code locally with AI tools. The tool is designed to bring the power of agentic coding tools to local models or controlled APIs like OpenRouter, promoting community-led development and inclusive collaboration in the AI coding space.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

For similar tasks

Awesome-LLM-in-Social-Science

This repository compiles a list of academic papers that evaluate, align, simulate, and provide surveys or perspectives on the use of Large Language Models (LLMs) in the field of Social Science. The papers cover various aspects of LLM research, including assessing their alignment with human values, evaluating their capabilities in tasks such as opinion formation and moral reasoning, and exploring their potential for simulating social interactions and addressing issues in diverse fields of Social Science. The repository aims to provide a comprehensive resource for researchers and practitioners interested in the intersection of LLMs and Social Science.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

ComfyUI-IF_AI_tools

ComfyUI-IF_AI_tools is a set of custom nodes for ComfyUI that allows you to generate prompts using a local Large Language Model (LLM) via Ollama. This tool enables you to enhance your image generation workflow by leveraging the power of language models.

Awesome-AI-GPTs

Awesome AI GPTs is an open repository that collects resources and fun ways to use OpenAI GPTs. It includes databases, search tools, open-source projects, articles, attack and defense strategies, installation of custom plugins, knowledge bases, and community interactions related to GPTs. Users can find curated lists, leaked prompts, and various GPT applications in this repository. The project aims to empower users with AI capabilities and foster collaboration in the AI community.

kor

Kor is a prototype tool designed to help users extract structured data from text using Large Language Models (LLMs). It generates prompts, sends them to specified LLMs, and parses the output. The tool uses the parsing approach and is integrated with the LangChain framework. Kor is compatible with pydantic v2 and v1, and the schema is type-checked using pydantic. It is primarily used for extracting information from text based on provided reference examples and schema documentation. Kor is designed to work with all good-enough LLMs regardless of their support for function/tool calling or JSON modes.

Awesome-LLM-Survey

This repository, Awesome-LLM-Survey, serves as a comprehensive collection of surveys related to Large Language Models (LLM). It covers various aspects of LLM, including instruction tuning, human alignment, LLM agents, hallucination, multi-modal capabilities, and more. Researchers are encouraged to contribute by updating information on their papers to benefit the LLM survey community.

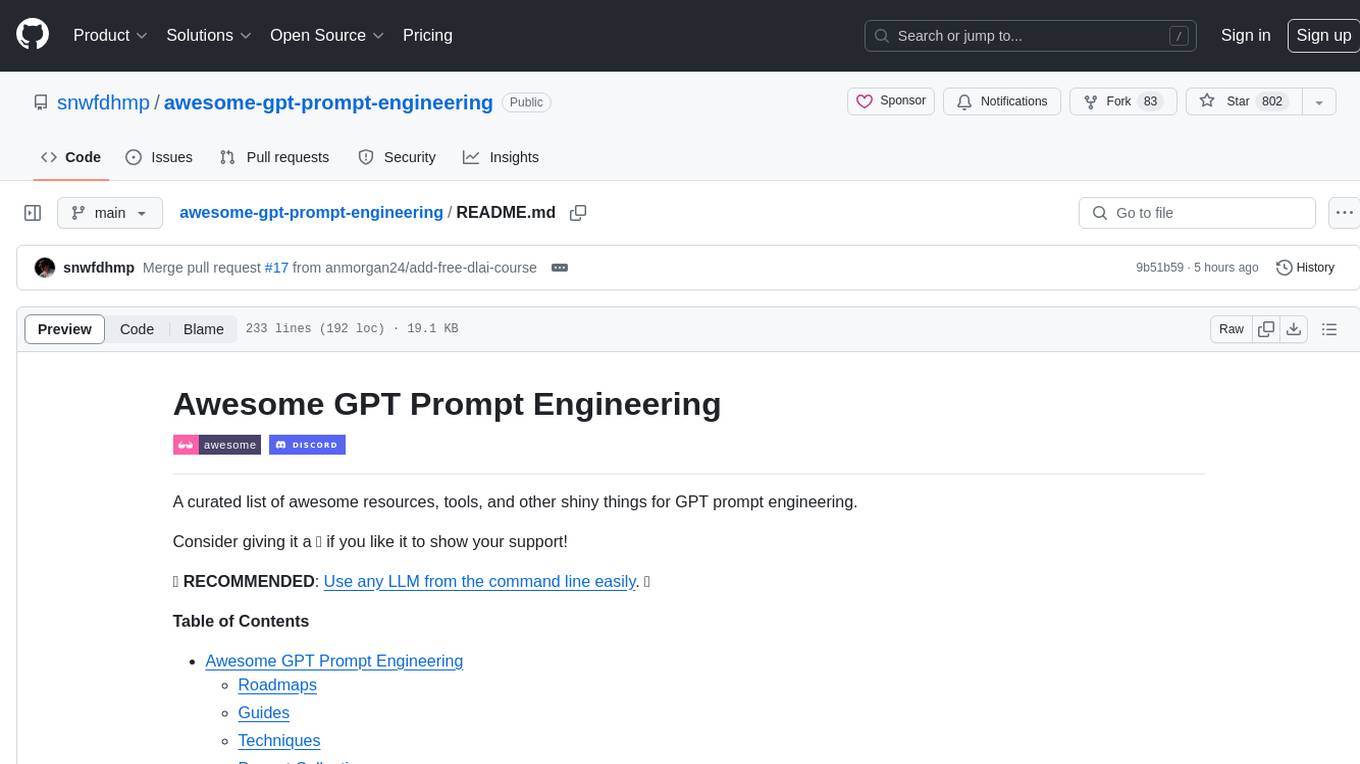

awesome-gpt-prompt-engineering

Awesome GPT Prompt Engineering is a curated list of resources, tools, and shiny things for GPT prompt engineering. It includes roadmaps, guides, techniques, prompt collections, papers, books, communities, prompt generators, Auto-GPT related tools, prompt injection information, ChatGPT plug-ins, prompt engineering job offers, and AI links directories. The repository aims to provide a comprehensive guide for prompt engineering enthusiasts, covering various aspects of working with GPT models and improving communication with AI tools.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.