resume-job-matcher

AI-powered Python script for automated resume-job matching with scoring, PDF generation, and personalized email responses.

Stars: 73

Resume Job Matcher is a Python script that automates the process of matching resumes to a job description using AI. It leverages the Anthropic Claude API or OpenAI's GPT API to analyze resumes and provide a match score along with personalized email responses for candidates. The tool offers comprehensive resume processing, advanced AI-powered analysis, in-depth evaluation & scoring, comprehensive analytics & reporting, enhanced candidate profiling, and robust system management. Users can customize font presets, generate PDF versions of unified resumes, adjust logging level, change scoring model, modify AI provider, and adjust AI model. The final score for each resume is calculated based on AI-generated match score and resume quality score, ensuring content relevance and presentation quality are considered. Troubleshooting tips, best practices, contribution guidelines, and required Python packages are provided.

README:

Resume Job Matcher is a Python script that automates the process of matching resumes to a job description using AI. It leverages the Anthropic Claude API or OpenAI's GPT API to analyze resumes and provide a match score along with personalized email responses for candidates.

-

🔥 Comprehensive Resume Processing

- Multiple outputs: PDF and Markdown generation

- Standardization for fair evaluation

- Font customization (sans-serif, serif, monospace)

- Command-line options for flexibility

-

🧠 Advanced AI-Powered Analysis

- Resume-job comparison using Claude/GPT API

- Dual AI support with runtime selection

- Efficient model interaction

- Structured data handling with Pydantic

-

📊 In-depth Evaluation & Scoring

-

📈 Comprehensive Analytics & Reporting

- Statistical insights: top, average, median, standard deviation scores

- Candidate distribution summary

- Match analysis with improvement suggestions

- Job description optimization recommendations

-

🌐 Enhanced Candidate Profiling

- Website integration for improved matching

- Personalized email generation

-

🛠️ Robust System Management

- Advanced logging and error handling

- Improved user feedback and reliability

To run the script with the new features:

python resume_matcher.py [--sans-serif|--serif|--mono] [--pdf] [job_desc_file] [pdf_folder]- Use

--sans-serif,--serif, or--monoto select a font preset. - Use

--pdfto generate PDF versions of unified resumes. - Optionally specify custom paths for the job description file and PDF folder.

Modify the logging level at the beginning of the script:

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')Available levels: DEBUG, INFO, WARNING, ERROR, CRITICAL.

To change the AI model used, update the model parameter in the match_resume_to_job function:

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

...

)To switch between Anthropic and OpenAI APIs, modify the choose_api function call at the beginning of the script:

def choose_api():

global chosen_api

prompt = "Use OpenAI API instead of Anthropic? [y/N]: "

choice = input(colored(prompt, "cyan")).strip().lower()

if choice in ["y", "yes"]:

chosen_api = "openai"

else:

chosen_api = "anthropic"To change the AI model used, update the model parameter in the talk_fast function:

response = client.chat.completions.create(

model="gpt-4o", # Change this to the desired model

...

)The final score for each resume is calculated using a combination of two factors:

-

AI-Generated Match Score (75% weight): This score is based on how well the resume matches the job description, considering factors such as skills, experience, education, and other relevant criteria.

-

Resume Quality Score (25% weight): This score assesses the visual appeal and clarity of the resume itself, including formatting, layout, and overall presentation.

The calculation process is as follows:

- The AI-generated match score and the resume quality score are both normalized to a 0-100 scale.

- A weighted average is calculated:

(AI_Score * 0.75 + Quality_Score * 0.25) - The result is clamped to ensure it falls within the 0-100 range.

This combined approach ensures that both the content relevance and the presentation quality of the resume are taken into account in the final score.

Adjust the scoring logic in the match_resume_to_job function's prompt as needed to better fit your specific requirements.

-

No Resumes Found: Ensure that resume PDFs are placed in the correct directory (

srcby default). -

Job Description Not Found: Confirm that

job_description.txtexists in the script's directory or provide the correct path. -

API Key Errors: Verify that the

CLAUDE_API_KEYenvironment variable is set correctly. -

Dependency Errors: Install all required Python packages using

pip.

If you experience network-related errors when fetching personal websites, you may adjust the timeout parameter in the check_website function.

response = requests.get(url, timeout=10)- Data Privacy: Ensure that all candidate data is handled in compliance with relevant data protection laws and regulations.

- API Usage: Be mindful of API rate limits and usage policies when using the Anthropic Claude API.

We welcome contributions! Please follow these steps:

- Fork the Repository: Create your own fork on GitHub.

- Create a Feature Branch: Work on your feature or fix in a new branch.

- Submit a Pull Request: Once your changes are ready, submit a pull request for review.

- Anthropic Claude API: For providing advanced AI capabilities.

Enjoy using the Resume Job Matcher script to streamline your recruitment process!

The following Python packages are required for this project:

- PyPDF2: For extracting text from PDF resumes

- anthropic: To interact with the Anthropic Claude API for AI-powered analysis

- tqdm: For displaying progress bars during processing

- termcolor: To add colored output in the console

- json5: For parsing JSON-like data with added flexibility

- requests: To make HTTP requests for fetching website content

- beautifulsoup4: For parsing HTML content from personal websites

- openai: To interact with the OpenAI API for AI-powered analysis

- pydantic: For data validation and settings management using Python type annotations

To install these packages, you can use pip:

pip install PyPDF2 anthropic openai tqdm termcolor json5 requests beautifulsoup4 pydanticFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for resume-job-matcher

Similar Open Source Tools

resume-job-matcher

Resume Job Matcher is a Python script that automates the process of matching resumes to a job description using AI. It leverages the Anthropic Claude API or OpenAI's GPT API to analyze resumes and provide a match score along with personalized email responses for candidates. The tool offers comprehensive resume processing, advanced AI-powered analysis, in-depth evaluation & scoring, comprehensive analytics & reporting, enhanced candidate profiling, and robust system management. Users can customize font presets, generate PDF versions of unified resumes, adjust logging level, change scoring model, modify AI provider, and adjust AI model. The final score for each resume is calculated based on AI-generated match score and resume quality score, ensuring content relevance and presentation quality are considered. Troubleshooting tips, best practices, contribution guidelines, and required Python packages are provided.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

hound

Hound is a security audit automation pipeline for AI-assisted code review that mirrors how expert auditors think, learn, and collaborate. It features graph-driven analysis, sessionized audits, provider-agnostic models, belief system and hypotheses, precise code grounding, and adaptive planning. The system employs a senior/junior auditor pattern where the Scout actively navigates the codebase and annotates knowledge graphs while the Strategist handles high-level planning and vulnerability analysis. Hound is optimized for small-to-medium sized projects like smart contract applications and is language-agnostic.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

ai_automation_suggester

An integration for Home Assistant that leverages AI models to understand your unique home environment and propose intelligent automations. By analyzing your entities, devices, areas, and existing automations, the AI Automation Suggester helps you discover new, context-aware use cases you might not have considered, ultimately streamlining your home management and improving efficiency, comfort, and convenience. The tool acts as a personal automation consultant, providing actionable YAML-based automations that can save energy, improve security, enhance comfort, and reduce manual intervention. It turns the complexity of a large Home Assistant environment into actionable insights and tangible benefits.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

DevDocs

DevDocs is a platform designed to simplify the process of digesting technical documentation for software engineers and developers. It automates the extraction and conversion of web content into markdown format, making it easier for users to access and understand the information. By crawling through child pages of a given URL, DevDocs provides a streamlined approach to gathering relevant data and integrating it into various tools for software development. The tool aims to save time and effort by eliminating the need for manual research and content extraction, ultimately enhancing productivity and efficiency in the development process.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

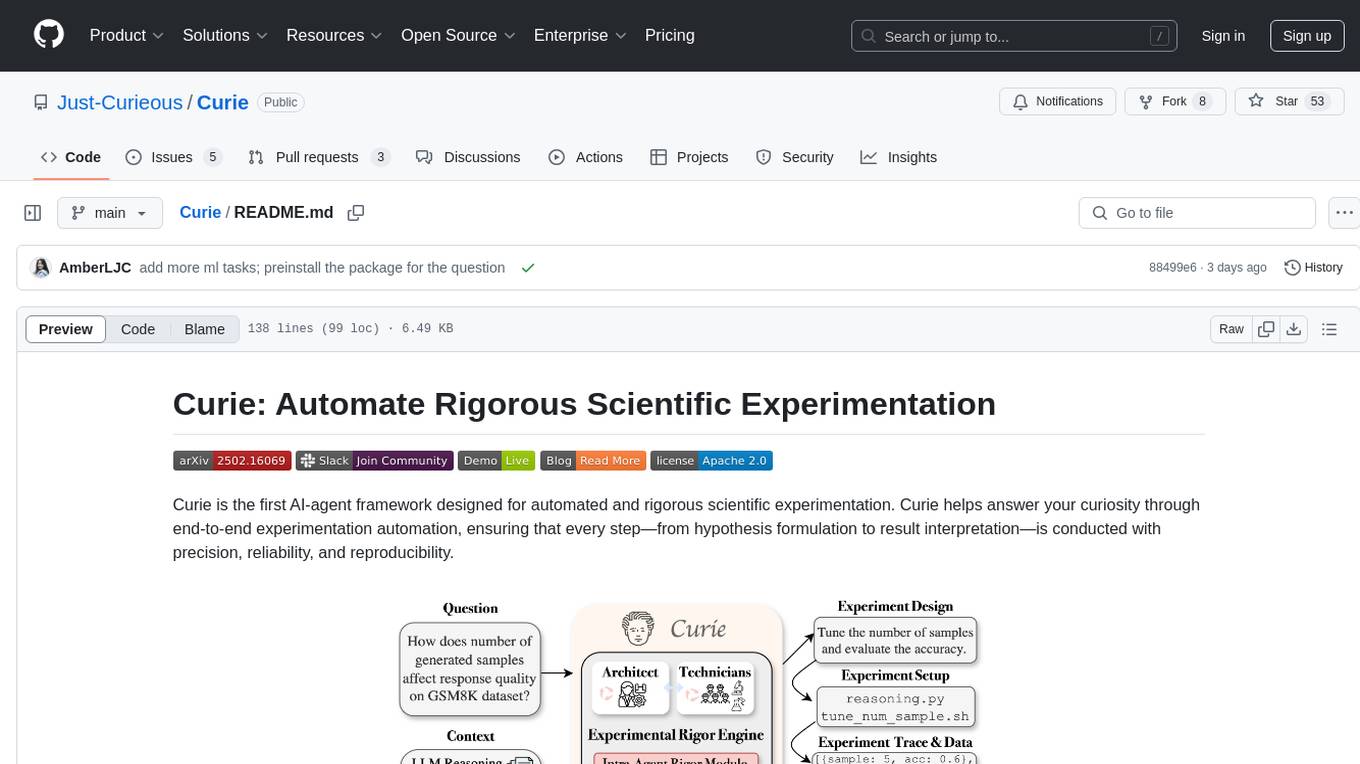

Curie

Curie is an AI-agent framework designed for automated and rigorous scientific experimentation. It automates end-to-end workflow management, ensures methodical procedure, reliability, and interpretability, and supports ML research, system analysis, and scientific discovery. It provides a benchmark with questions from 4 Computer Science domains. Users can customize experiment agents and adapt to their own tasks by configuring base_config.json. Curie is suitable for hyperparameter tuning, algorithm behavior analysis, system performance benchmarking, and automating computational simulations.

code-assistant

Code Assistant is an AI coding tool built in Rust that offers command-line and graphical interfaces for autonomous code analysis and modification. It supports multi-modal tool execution, real-time streaming interface, session-based project management, multiple interface options, and intelligent project exploration. The tool provides auto-loaded repository guidance and allows for project configuration with format-on-save feature. Users can interact with the tool in GUI, terminal, or MCP server mode, and configure LLM providers for advanced options. The architecture highlights adaptive tool syntax, smart tool filtering, and multi-threaded streaming for efficient performance. Contributions are welcome, and the roadmap includes features like block replacing in changed files, compact tool use failures, UI improvements, memory tools, security enhancements, fuzzy matching search blocks, editing user messages, and selecting in messages.

youtube_summarizer

YouTube AI Summarizer is a modern Next.js-based tool for AI-powered YouTube video summarization. It allows users to generate concise summaries of YouTube videos using various AI models, with support for multiple languages and summary styles. The application features flexible API key requirements, multilingual support, flexible summary modes, a smart history system, modern UI/UX design, and more. Users can easily input a YouTube URL, select language, summary type, and AI model, and generate summaries with real-time progress tracking. The tool offers a clean, well-structured summary view, history dashboard, and detailed history view for past summaries. It also provides configuration options for API keys and database setup, along with technical highlights, performance improvements, and a modern tech stack.

linkedIn_auto_jobs_applier_with_AI

LinkedIn_AIHawk is an automated tool designed to revolutionize the job search and application process on LinkedIn. It leverages automation and artificial intelligence to efficiently apply to relevant positions, personalize responses, manage application volume, filter listings, generate dynamic resumes, and handle sensitive information securely. The tool aims to save time, increase application relevance, and enhance job search effectiveness in today's competitive landscape.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

VeritasGraph

VeritasGraph is an enterprise-grade graph RAG framework designed for secure, on-premise AI applications. It leverages a knowledge graph to perform complex, multi-hop reasoning, providing transparent, auditable reasoning paths with full source attribution. The framework excels at answering complex questions that traditional vector search engines struggle with, ensuring trust and reliability in enterprise AI. VeritasGraph offers full control over data and AI models, verifiable attribution for every claim, advanced graph reasoning capabilities, and open-source deployment with sovereignty and customization.

For similar tasks

resume-job-matcher

Resume Job Matcher is a Python script that automates the process of matching resumes to a job description using AI. It leverages the Anthropic Claude API or OpenAI's GPT API to analyze resumes and provide a match score along with personalized email responses for candidates. The tool offers comprehensive resume processing, advanced AI-powered analysis, in-depth evaluation & scoring, comprehensive analytics & reporting, enhanced candidate profiling, and robust system management. Users can customize font presets, generate PDF versions of unified resumes, adjust logging level, change scoring model, modify AI provider, and adjust AI model. The final score for each resume is calculated based on AI-generated match score and resume quality score, ensuring content relevance and presentation quality are considered. Troubleshooting tips, best practices, contribution guidelines, and required Python packages are provided.

For similar jobs

resume-job-matcher

Resume Job Matcher is a Python script that automates the process of matching resumes to a job description using AI. It leverages the Anthropic Claude API or OpenAI's GPT API to analyze resumes and provide a match score along with personalized email responses for candidates. The tool offers comprehensive resume processing, advanced AI-powered analysis, in-depth evaluation & scoring, comprehensive analytics & reporting, enhanced candidate profiling, and robust system management. Users can customize font presets, generate PDF versions of unified resumes, adjust logging level, change scoring model, modify AI provider, and adjust AI model. The final score for each resume is calculated based on AI-generated match score and resume quality score, ensuring content relevance and presentation quality are considered. Troubleshooting tips, best practices, contribution guidelines, and required Python packages are provided.

Tianji

Tianji is a free, non-commercial artificial intelligence system developed by SocialAI for tasks involving worldly wisdom, such as etiquette, hospitality, gifting, wishes, communication, awkwardness resolution, and conflict handling. It includes four main technical routes: pure prompt, Agent architecture, knowledge base, and model training. Users can find corresponding source code for these routes in the tianji directory to replicate their own vertical domain AI applications. The project aims to accelerate the penetration of AI into various fields and enhance AI's core competencies.

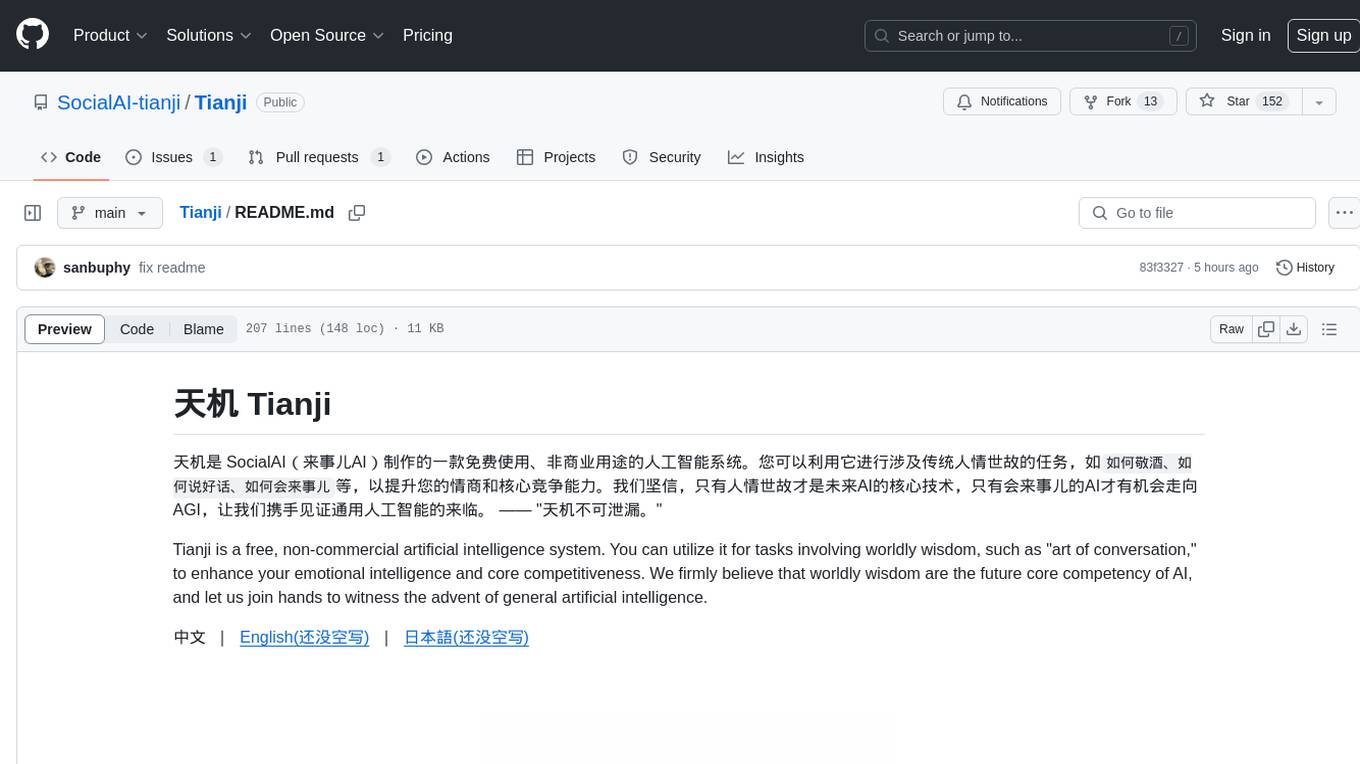

ResumeFlow

ResumeFlow is an automated system that leverages Large Language Models (LLMs) to streamline the job application process. By integrating LLM technology, the tool aims to automate various stages of job hunting, making it easier for users to apply for jobs. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code from GitHub. The tool requires Python 3.11.6 or above and an LLM API key from OpenAI or Gemini Pro for usage. ResumeFlow offers functionalities such as generating curated resumes and cover letters based on job URLs and user's master resume data.

advisingapp

**Advising App™** is a software solution created by Canyon GBS™ that includes a robust personal assistant designed to support student service professionals in their day-to-day roles. The assistant can help with research tasks, draft communication, language translation, content creation, student profile analysis, project planning, ideation, and much more. The software also includes a student service CRM designed to support the management of prospective and enrolled students. Key features of the CRM include record management, email and SMS, service management, caseload management, task management, interaction tracking, files and documents, and much more.

x-hiring

X-Hiring is a job search tool that uses Google AI to extract summaries of the latest job postings. It is easy to install and run, and can be used to find jobs in a variety of fields. X-Hiring is also open source, so you can contribute to its development or create your own custom version.

aides-jeunes

The user interface (and the main server) of the simulator of aids and social benefits for young people. It is based on the free socio-fiscal simulator Openfisca.

vidur

Vidur is an open-source next-gen Recruiting OS that offers an intuitive and modern interface for forward-thinking companies to efficiently manage their recruitment processes. It combines advanced candidate profiles, team workspace, plugins, and one-click apply features. The project is under active development, and contributors are welcome to join by addressing open issues. To ensure privacy, security issues should be reported via email to [email protected].

linkedIn_auto_jobs_applier_with_AI

LinkedIn_AIHawk is an automated tool designed to revolutionize the job search and application process on LinkedIn. It leverages automation and artificial intelligence to efficiently apply to relevant positions, personalize responses, manage application volume, filter listings, generate dynamic resumes, and handle sensitive information securely. The tool aims to save time, increase application relevance, and enhance job search effectiveness in today's competitive landscape.