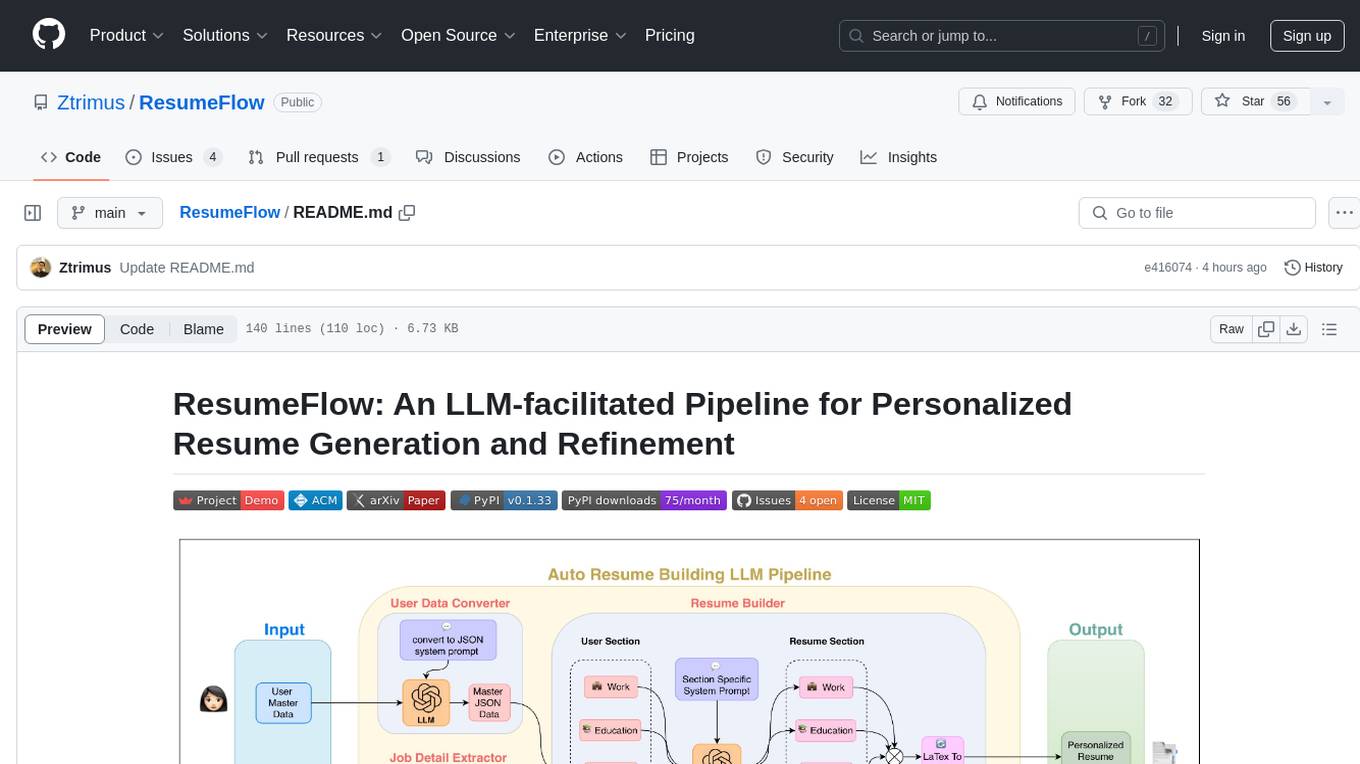

ResumeFlow

Simplify and improve the job hunting experience by integrating LLMs to automate tasks such as resume and cover letter generation, as well as application submission, saving users time and effort.


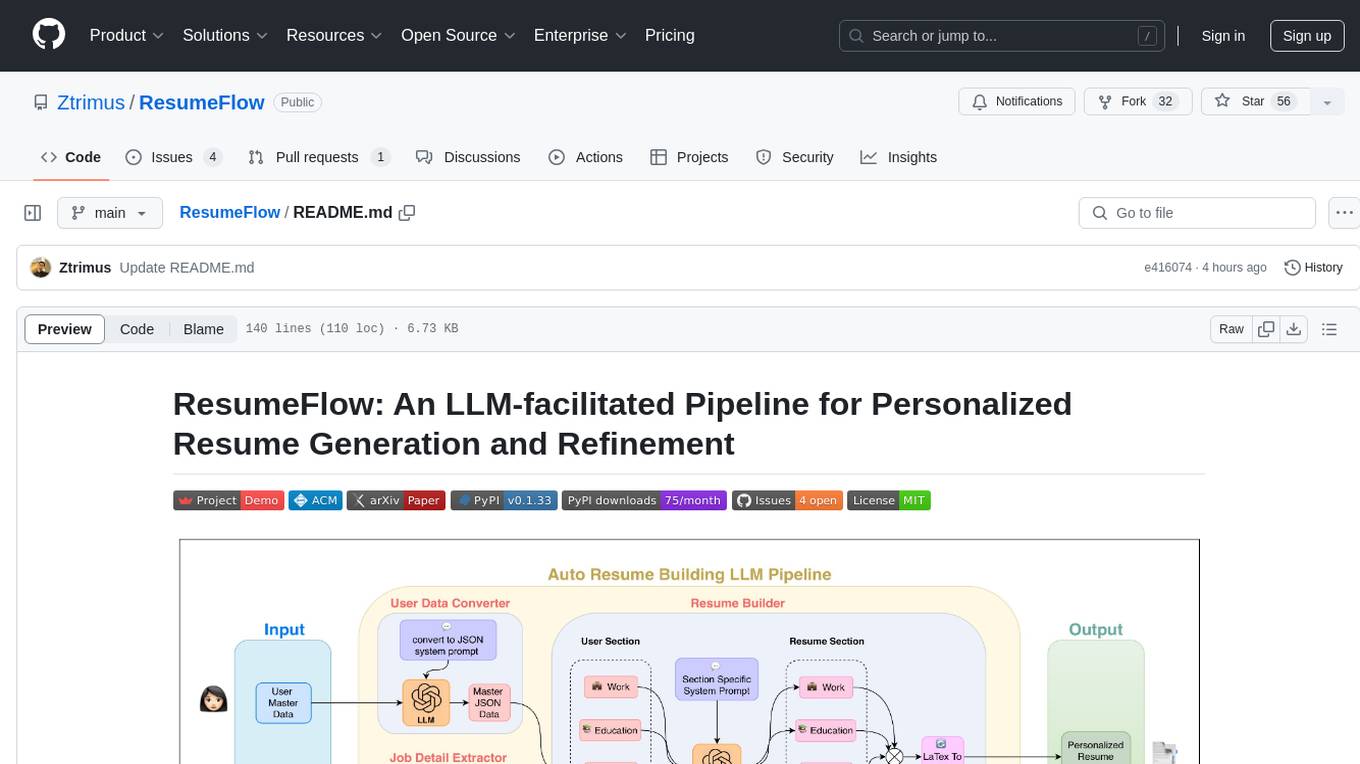

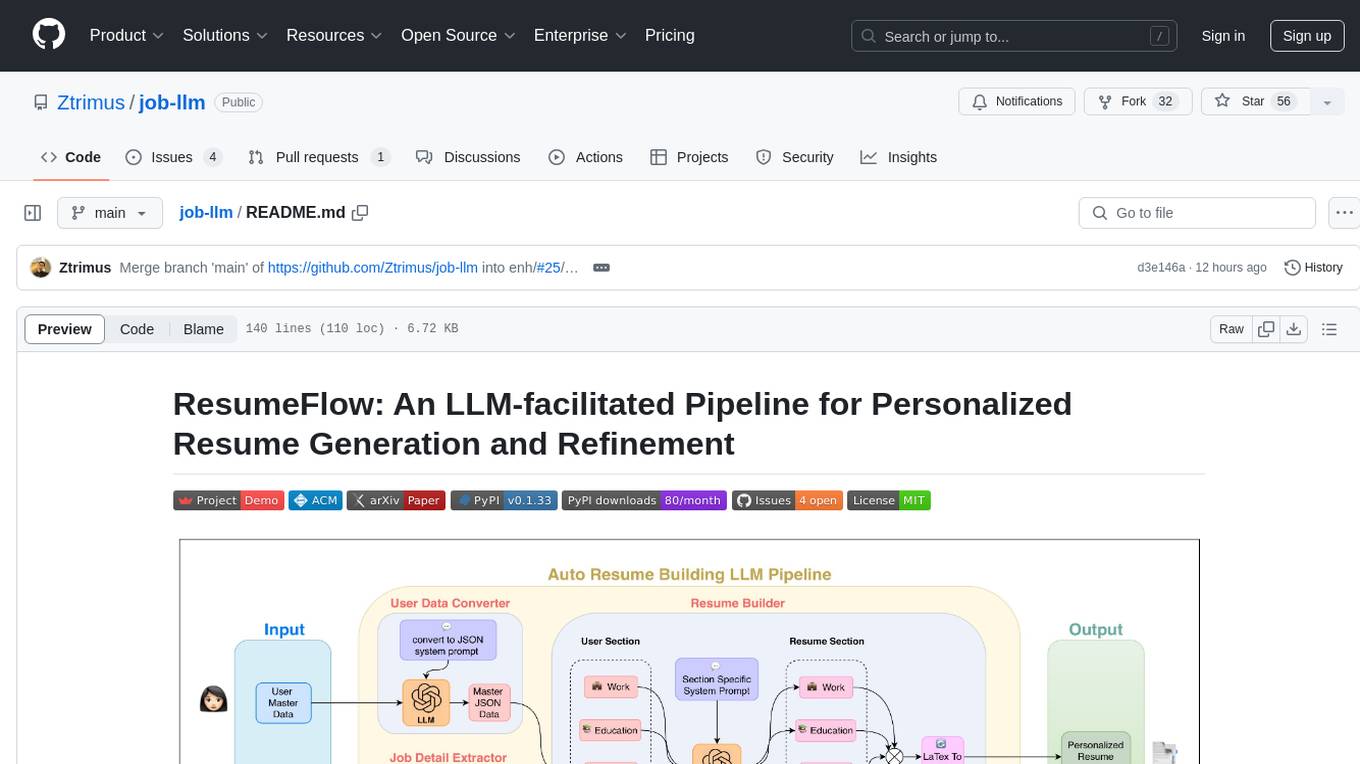

ResumeFlow is an automated system that uses Large Language Models (LLMs) to streamline the job application process. By integrating LLM technology, the tool aims to automate various stages of job hunting, making it easier for users to apply for jobs. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code from GitHub. The tool requires Python 3.11.6 or above and an LLM API key from OpenAI or Gemini Pro. ResumeFlow can generate curated resumes and cover letters from a job posting URL and the user's master resume data.

README:

For a video demonstration, visit the YouTube link: https://youtu.be/Agl7ugyu1N4

The project can be:

- Accessed as a web tool at https://resumeflow.streamlit.app/

- Installed as a Python package from https://pypi.org/project/zlm/

- Downloaded as source code from https://github.com/Ztrimus/job-llm.git

Known bugs, fixes, feedback, and feature requests can be reported on the GitHub issues page.

Empower others, just like they helped you! Contribute to this open source project & make a difference. ✨ Create a branch, improve the code, & raise a pull request!

- Saurabh Zinjad | Ztrimus | [email protected]

- Amey Bhilegaonkar | ameygoes | [email protected]

- Amrita Bhattacharjee | Amritabh | [email protected]

We're aiming to create an automated system that makes applying for jobs a breeze. Job hunting has many stages, and we see an opportunity to automate them and use LLMs (Large Language Models) to make the process even smoother. We're exploring different approaches, both conventional and new, to integrating LLMs into the job application process. The goal is to reduce how much you have to do and let the LLM do the heavy lifting, making the whole process easier for you.

Refer to the accompanying paper (cited below) for more details.

Requirements:

- OS: Linux, Mac

- Python: 3.11.6 and above

- LLM API key: OpenAI or Gemini Pro
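Before installing, the OS and Python requirements listed above can be checked with a short script. This is a minimal sketch, not part of ResumeFlow: the helper names `meets_python_requirement` and `is_supported_os` are hypothetical, and it relies on Python's `platform.system()` reporting macOS as `"Darwin"`.

```python
import platform
import sys

# Requirements from the list above: Linux or macOS, and Python 3.11.6 or newer.
MIN_PYTHON = (3, 11, 6)
SUPPORTED_SYSTEMS = ("Linux", "Darwin")  # platform.system() reports macOS as "Darwin"


def meets_python_requirement(version=None):
    """Return True if `version` (default: the running interpreter) is at least 3.11.6."""
    if version is None:
        version = sys.version_info[:3]
    # Tuples compare element by element, so (3, 12, 0) >= (3, 11, 6).
    return tuple(version) >= MIN_PYTHON


def is_supported_os(system=None):
    """Return True if `system` (default: the current OS name) is Linux or macOS."""
    if system is None:
        system = platform.system()
    return system in SUPPORTED_SYSTEMS


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    print(f"Python {current}: {'OK' if meets_python_requirement() else 'needs 3.11.6+'}")
    print(f"OS {platform.system()}: {'OK' if is_supported_os() else 'not listed as supported'}")
```

The version check only covers the interpreter; an OpenAI or Gemini Pro API key still has to be obtained separately.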

- Install the Python package:

pip install zlm

- Usage:

from zlm import AutoApplyModel

job_llm = AutoApplyModel(
    api_key="PROVIDE_API_KEY",
    provider="gemini",  # "gemini" or "openai"
    downloads_dir="downloads",  # optional: folder where files are saved (defaults to 'downloads')
)

job_llm.resume_cv_pipeline(
    "ENTER_JOB_URL",
    "YOUR_MASTER_RESUME_DATA",  # path to a .pdf or .json file
)  # Returns and downloads the curated resume and cover letter.

- Set up and run from source code:

git clone https://github.com/Ztrimus/job-llm.git

cd job-llm

- Create and activate a Python environment (e.g. python -m venv .env, or conda) to avoid package dependency conflicts.

- Install Poetry (a dependency management and packaging tool):

pip install poetry

- Install all required packages (see pyproject.toml or poetry.lock for the full list):

poetry install

OR, if the command above does not work, use the provided requirements.txt file (we still recommend Poetry):

pip install -r resources/requirements.txt

- The following packages are also needed to convert LaTeX to PDF:

- For Linux:

sudo apt-get install texlive-latex-base texlive-fonts-recommended texlive-fonts-extra

NOTE: if the terminal is unable to locate a package, run sudo apt-get update first and try again.

- For Mac:

brew install basictex

sudo tlmgr install enumitem fontawesome
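After installing the TeX packages, it can help to confirm that the expected binaries are on your PATH before running the pipeline. This is a sketch under an assumption: it checks for `pdflatex` (which both texlive-latex-base and BasicTeX provide), but ResumeFlow's actual LaTeX-to-PDF invocation may use a different tool. The helper `missing_tools` is hypothetical.

```python
import shutil

# Assumption: the LaTeX-to-PDF step relies on `pdflatex`, which texlive-latex-base
# (Linux) and BasicTeX (macOS) both install. Add "tlmgr" on macOS if you also want
# to confirm the package manager used in the step above is available.
DEFAULT_TOOLS = ("pdflatex",)


def missing_tools(tools=DEFAULT_TOOLS, which=shutil.which):
    """Return the tools from `tools` that cannot be found on PATH.

    `which` is injectable so the check can be exercised without a TeX install.
    """
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("LaTeX toolchain found.")
```

On macOS, a freshly installed BasicTeX may only appear on PATH after opening a new terminal session.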

- If you want to run Ollama models:

ollama pull llama3.1
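To confirm that a model has actually been pulled, you can query a running Ollama server. This sketch assumes Ollama is listening on its default local address (`http://localhost:11434`) and uses its `GET /api/tags` endpoint, which lists locally available models; the helpers `fetch_tags` and `has_model` are hypothetical names, not part of ResumeFlow.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port; GET /api/tags lists pulled models.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def has_model(tags_payload, model):
    """Return True if `model` (e.g. "llama3.1") appears in an /api/tags response."""
    names = [entry.get("name", "") for entry in tags_payload.get("models", [])]
    # Names usually carry a tag such as "llama3.1:latest", so also match the bare name.
    return any(name == model or name.split(":", 1)[0] == model for name in names)


def fetch_tags(url=OLLAMA_TAGS_URL, timeout=5):
    """Fetch the list of locally available models from a running Ollama server."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    try:
        payload = fetch_tags()
    except OSError as exc:  # URLError and timeouts are subclasses of OSError
        print(f"Could not reach Ollama at {OLLAMA_TAGS_URL}: {exc}")
    else:
        for name in ("llama3.1", "bge-m3"):
            status = "available" if has_model(payload, name) else f"missing; run `ollama pull {name}`"
            print(f"{name}: {status}")
```

Keeping the parsing in `has_model` separate from the network call makes it easy to check the matching logic without a live server.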

- Run the following script to get the result:

python main.py \
    --url "JOB_POSTING_URL" \
    --master_data="JSON_USER_MASTER_DATA" \
    --api_key="YOUR_LLM_PROVIDER_API_KEY" \
    --downloads_dir="DOWNLOAD_LOCATION_FOR_RESUME_CV" \
    --provider="openai"

Use the API key that matches the chosen provider (openai or gemini).

If you find JobLLM useful in your research or applications, please consider giving us a star 🌟 and citing it.

@inproceedings{10.1145/3626772.3657680,
  author = {Zinjad, Saurabh Bhausaheb and Bhattacharjee, Amrita and Bhilegaonkar, Amey and Liu, Huan},
  title = {ResumeFlow: An LLM-facilitated Pipeline for Personalized Resume Generation and Refinement},
  series = {SIGIR '24},
  booktitle = {Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval},
  publisher = {Association for Computing Machinery},
  doi = {10.1145/3626772.3657680},
  url = {https://doi.org/10.1145/3626772.3657680},
  year = {2024},
  isbn = {9798400704314},
  location = {Washington DC, USA},
  address = {New York, NY, USA},
}

@misc{zinjad2024resumeflow,
  title = {ResumeFlow: An LLM-facilitated Pipeline for Personalized Resume Generation and Refinement},
  author = {Saurabh Bhausaheb Zinjad and Amrita Bhattacharjee and Amey Bhilegaonkar and Huan Liu},
  year = {2024},
  eprint = {2402.06221},
  archivePrefix = {arXiv},
  primaryClass = {cs.CL}
}

JobLLM is released under the MIT License, which permits commercial use.

Still to do: find a way to run the following installation steps in the Streamlit deployment:

ollama

playwright

"ollama pull llama3.1"

"ollama pull bge-m3"


Alternative AI tools for ResumeFlow

Similar Open Source Tools


MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

SciPIP

SciPIP is a scientific paper idea generation tool powered by a large language model (LLM) designed to assist researchers in quickly generating novel research ideas. It conducts a literature review based on user-provided background information and generates fresh ideas for potential studies. The tool is designed to help researchers in various fields by providing a GUI environment for idea generation, supporting NLP, multimodal, and CV fields, and allowing users to interact with the tool through a web app or terminal. SciPIP uses Neo4j as its database and provides functionalities for generating new ideas, fetching papers, and constructing the database.

gitingest

GitIngest is a tool that turns any Git repository into a prompt-friendly text digest for LLMs. Given a Git repository URL or a local directory, it generates a text digest that provides easy access to code context. Its output is formatted for use in LLM prompts, and it reports statistics about the file and directory structure, the size of the extract, and the token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for the frontend, FastAPI as the backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

cognee

Cognee is an open-source framework designed for creating self-improving deterministic outputs for Large Language Models (LLMs) using graphs, LLMs, and vector retrieval. It provides a platform for AI engineers to enhance their models and generate more accurate results. Users can leverage Cognee to add new information, utilize LLMs for knowledge creation, and query the system for relevant knowledge. The tool supports various LLM providers and offers flexibility in adding different data types, such as text files or directories. Cognee aims to streamline the process of working with LLMs and improving AI models for better performance and efficiency.

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using the Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat and function calling, file sources, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

copywriterproai-backend

CopywriterProAI is the world's first open-source AI writing platform for SEO and Ad Copy. The backend repository powers the AI capabilities and manages content processing for smooth operation. It provides an AI writing assistant that works behind the scenes to assist users in content creation.

refact-lsp

Refact Agent is a small executable written in Rust as part of the Refact Agent project. It lives inside your IDE to keep AST and VecDB indexes up to date, supporting connection graphs between definitions and usages in popular programming languages. It functions as an LSP server, offering code completion, chat functionality, and integration with various tools like browsers, databases, and debuggers. Users can interact with it through a Text UI in the command line.

aimeos-laravel

Aimeos Laravel is a professional, full-featured, and ultra-fast Laravel ecommerce package that can be easily integrated into existing Laravel applications. It offers a wide range of features including multi-vendor, multi-channel, and multi-warehouse support, fast performance, support for various product types, subscriptions with recurring payments, multiple payment gateways, full RTL support, flexible pricing options, admin backend, REST and GraphQL APIs, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. The package is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and online shops with millions of vendors.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

For similar tasks

x-hiring

X-Hiring is a job search tool that uses Google AI to extract summaries of the latest job postings. It is easy to install and run, and can be used to find jobs in a variety of fields. X-Hiring is also open source, so you can contribute to its development or create your own custom version.


PythonAgentAI

PythonAgentAI is a program designed to help individuals break into the tech industry and land entry-level software development roles. The program offers a self-paced learning experience with the potential for a starting salary of $70k+. It is an affordable alternative to expensive bootcamps or degrees, with a focus on preparing individuals for the 45,000+ job openings in the market. No prior experience is required, making it accessible to anyone determined to future-proof their career and unlock six-figure potential.

lib_resume_builder_AIHawk

`lib_resume_builder_AIHawk` is a Python library that simplifies the creation of personalized, professional resumes by integrating with GPT models. It allows users to generate tailored resumes based on job descriptions with various styles, offering a flexible approach to resume building with minimal effort.

geezap

Geezap-Job Aggregator is a Laravel-based application that simplifies the job search process by aggregating job listings from various platforms including LinkedIn, Upwork, Indeed, ZipRecruiter, and more. It not only consolidates job listings but also provides tools for application management, cover letter generation, and email communications. The platform offers features like unified search, real-time job updates, application tracking, customizable cover letter templates, personalized job recommendations, and social media sharing. Users can also receive weekly job digests, push notifications, and email alerts for saved jobs. The application utilizes technologies like Laravel, OpenAI API, MySQL, Livewire, and TailwindCSS.

job-llm

ResumeFlow is an automated system utilizing Large Language Models (LLMs) to streamline the job application process. It aims to reduce human effort in various steps of job hunting by integrating LLM technology. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code. The project focuses on leveraging LLMs to automate tasks such as resume generation and refinement, making job applications smoother and more efficient.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.


onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.