TaxHacker

Automatic AI analyzer for receipts, invoices, transactions, etc

Stars: 230

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

README:

I'm a small self-hosted accountant app that can help you deal with invoices, receipts and taxes with power of GenAI.

Share TaxHacker

TaxHacker is a self-hosted accounting app for freelancers and small businesses who want to save time and automate expences and income tracking with power of GenAI. It can recognise uploaded photos, receipts or PDFs and extract important data (e.g. name, total amount, date, merchant, VAT) and save it as structured transactions to a table. You can also create your own custom fields to extract with your LLM prompts.

It supports automatic currency conversion on a day of transaction. Even for crypto!

Built-in system of filters, support for multiple projects, import-export of transactions for a certain time (along with attached files) and custom categories, allows you to simplify reporting and tax filing.

[!IMPORTANT]

This project is still at a very early stage. Use it at your own risk! Star Us to receive notifications about new bugfixes and features from GitHub ⭐️

https://github.com/user-attachments/assets/3326d0e3-0bf6-4c39-9e00-4bf0983d9b7a

Take a photo on upload or a PDF and TaxHacker will automatically recognise, categorise and store transaction information.

- Upload multiple documents and store in "unsorted" until you get the time to sort them out by hand or with an AI

- Use LLM to extract key information like date, amount, and vendor

- Automatically categorize transactions based on its content

- Store everything in a structured format for easy filtering and retrieval

- Organize your documents by a tax season

TaxHacker recognizes a wide variety of documents including store receipts, restaurant bills, invoices, bank checks, letters, even handwritten receipts.

TaxHacker automatically converts foreign currencies and even knows the historical exchange rates on the invoice date.

- Automatically detect currency in your documents

- Convert it to your base currency

- Historical exchange rate lookup for past transactions

- Support for over 170 world currencies and 14 popular cryptocurrencies (BTC, ETC, LTC, DOT, etc)!

You can customize LLM Prompts for built-in fields, categories, and projects, as well as modify global templates in the application settings. This allows to customize the quality of recognizing specific things to your specific use-cases.

- General prompt template is configurable is settings

- Create custom extraction rules for your specific needs

- Adjust field extraction priorities and naming conventions

- Fine-tune the AI for your industry-specific documents

The whole extraction process is under your contoll all the time!

Adapt TaxHacker to your specific tracking needs. You can create new fields, projects or categories to extract additional information from documents. For example, if you need to save emails, addresses, and any custom information into separate fields, you can do it. Custom fields will be available when exporting too.

- Create unlimited custom fields for transaction tracking

- Automatically extract custom field data using AI

- Include custom fields in exports and reports

- Create new categories or projects to organise your transactions and filter by them

Once all documents have been uploaded and analyzed, you can view, filter and export your transaction history.

- Filter transactions by time, category, and other features

- Use full-text search by recognized document content

- Export filtered transactions to CSV with attached documents

- Upload your entire income and expense history at the end of the year for your tax advisor to analyze

TaxHacker can be self-hosted on your own infrastructure for complete control over your data and application environment. We provide a Docker image and Docker Compose files that makes setting up TaxHacker simple:

curl -O https://raw.githubusercontent.com/vas3k/TaxHacker/main/docker-compose.yml

docker compose upThe Docker Compose setup includes:

- TaxHacker application container

- PostgreSQL 17 database container

- Automatic database migrations

- Volume mounts for persistent data storage

New docker image is automatically built and published on every new release. You can use specific version tags (e.g. v1.0.0) or latest for the most recent version.

For more advanced setups, you can adapt Docker Compose configuration to your own needs. The default configuration uses the pre-built image from GHCR, but you can still build locally using the provided Dockerfile if needed.

For example:

services:

app:

image: ghcr.io/vas3k/taxhacker:latest

ports:

- "7331:7331"

environment:

- SELF_HOSTED_MODE=true

- UPLOAD_PATH=/app/data/uploads

- DATABASE_URL=postgresql://postgres:postgres@localhost:5432/taxhacker

volumes:

- ./data:/app/data

restart: unless-stoppedConfigure TaxHacker to suit your needs with these environment variables:

| Variable | Required | Description | Example |

|---|---|---|---|

PORT |

No | Port to run the app on |

7331 (default) |

UPLOAD_PATH |

Yes | Local directory for uploading files | ./data/uploads |

DATABASE_URL |

Yes | PostgreSQL connection string | postgresql://user@localhost:5432/taxhacker |

SELF_HOSTED_MODE |

No | Set it to "true" if you're self-hosting the app: it enables auto-login, custom API keys, and more | false |

DISABLE_SIGNUP |

No | Disable new user registration on your instance | false |

OPENAI_API_KEY |

No | OpenAI API key for AI features. In self-hosted mode you can set it up in settings too. | sk-... |

RESEND_API_KEY |

No | Resend API key for email notifications | re_... |

RESEND_FROM_EMAIL |

No | Email address to send from | TaxHacker <[email protected]> |

We use:

- Next.js version 15+ or later

- Prisma for database models and migrations

- PostgreSQL as a database (PostgreSQL 17+ recommended)

- Ghostscript and graphicsmagick libs for PDF files (can be installed on macOS via

brew install gs graphicsmagick)

Set up a local development environment with these steps:

# Clone the repository

git clone https://github.com/vas3k/TaxHacker.git

cd TaxHacker

# Install dependencies

npm install

# Set up environment variables

cp .env.example .env

# Edit .env with your configuration

# Make sure to set DATABASE_URL to your PostgreSQL connection string

# Example: postgresql://user@localhost:5432/taxhacker

# Initialize the database

npx prisma generate && npx prisma migrate dev

# Start the development server

npm run devVisit http://localhost:7331 to see your local instance of TaxHacker.

For a production build, instead of npm run dev use the following commands:

# Build the application

npm run build

# Start the production server

npm run startContributions to TaxHacker are welcome and appreciated! Here's how you can help:

- Bug Reports: File detailed issues when you encounter problems

- Feature Requests: Share your ideas for new features

- Code Contributions: Submit pull requests to improve the application

- Documentation: Help improve documentation

All work is done on GitHub through issues and pull requests.

If TaxHacker has helped you - help us in return! You donations will support maintainance and development. If you find this project valuable for your personal or business use, consider making a donation.

TaxHacker is licensed under the MIT License - see the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for TaxHacker

Similar Open Source Tools

TaxHacker

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

axoned

Axone is a public dPoS layer 1 designed for connecting, sharing, and monetizing resources in the AI stack. It is an open network for collaborative AI workflow management compatible with any data, model, or infrastructure, allowing sharing of data, algorithms, storage, compute, APIs, both on-chain and off-chain. The 'axoned' node of the AXONE network is built on Cosmos SDK & Tendermint consensus, enabling companies & individuals to define on-chain rules, share off-chain resources, and create new applications. Validators secure the network by maintaining uptime and staking $AXONE for rewards. The blockchain supports various platforms and follows Semantic Versioning 2.0.0. A docker image is available for quick start, with documentation on querying networks, creating wallets, starting nodes, and joining networks. Development involves Go and Cosmos SDK, with smart contracts deployed on the AXONE blockchain. The project provides a Makefile for building, installing, linting, and testing. Community involvement is encouraged through Discord, open issues, and pull requests.

SunoApi

SunoAPI is an unofficial client for Suno AI, built on Python and Streamlit. It supports functions like generating music and obtaining music information. Users can set up multiple account information to be saved for use. The tool also features built-in maintenance and activation functions for tokens, eliminating concerns about token expiration. It supports multiple languages and allows users to upload pictures for generating songs based on image content analysis.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

linkedin-api

The Linkedin API for Python allows users to programmatically search profiles, send messages, and find jobs using a regular Linkedin user account. It does not require 'official' API access, just a valid Linkedin account. However, it is important to note that this library is not officially supported by LinkedIn and using it may violate LinkedIn's Terms of Service. Users can authenticate using any Linkedin account credentials and access features like getting profiles, profile contact info, and connections. The library also provides commercial alternatives for extracting data, scraping public profiles, and accessing a full LinkedIn API. It is not endorsed or supported by LinkedIn and is intended for educational purposes and personal use only.

superflows

Superflows is an open-source alternative to OpenAI's Assistant API. It allows developers to easily add an AI assistant to their software products, enabling users to ask questions in natural language and receive answers or have tasks completed by making API calls. Superflows can analyze data, create plots, answer questions based on static knowledge, and even write code. It features a developer dashboard for configuration and testing, stateful streaming API, UI components, and support for multiple LLMs. Superflows can be set up in the cloud or self-hosted, and it provides comprehensive documentation and support.

OpenAdapt

OpenAdapt is an open-source software adapter between Large Multimodal Models (LMMs) and traditional desktop and web Graphical User Interfaces (GUIs). It aims to automate repetitive GUI workflows by leveraging the power of LMMs. OpenAdapt records user input and screenshots, converts them into tokenized format, and generates synthetic input via transformer model completions. It also analyzes recordings to generate task trees and replay synthetic input to complete tasks. OpenAdapt is model agnostic and generates prompts automatically by learning from human demonstration, ensuring that agents are grounded in existing processes and mitigating hallucinations. It works with all types of desktop GUIs, including virtualized and web, and is open source under the MIT license.

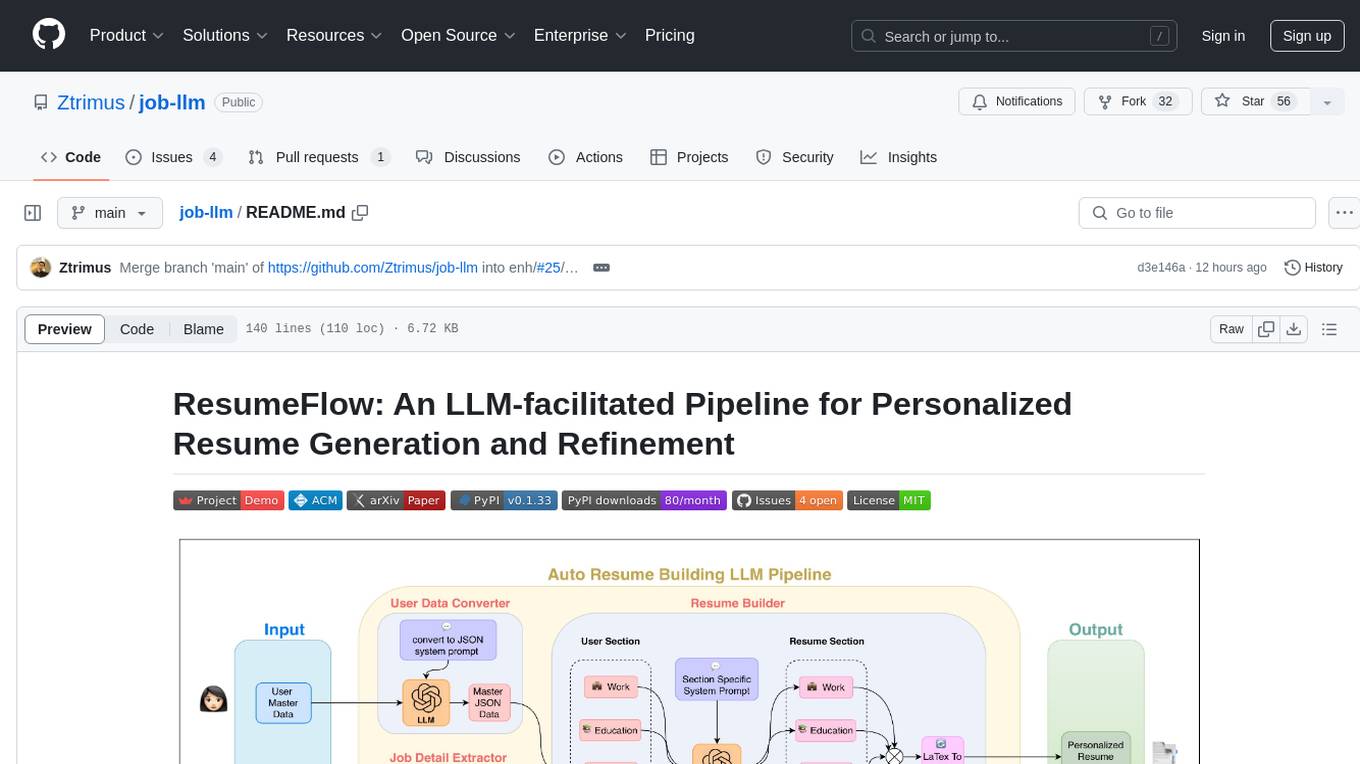

job-llm

ResumeFlow is an automated system utilizing Large Language Models (LLMs) to streamline the job application process. It aims to reduce human effort in various steps of job hunting by integrating LLM technology. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code. The project focuses on leveraging LLMs to automate tasks such as resume generation and refinement, making job applications smoother and more efficient.

NekoImageGallery

NekoImageGallery is an online AI image search engine that utilizes the Clip model and Qdrant vector database. It supports keyword search and similar image search. The tool generates 768-dimensional vectors for each image using the Clip model, supports OCR text search using PaddleOCR, and efficiently searches vectors using the Qdrant vector database. Users can deploy the tool locally or via Docker, with options for metadata storage using Qdrant database or local file storage. The tool provides API documentation through FastAPI's built-in Swagger UI and can be used for tasks like image search, text extraction, and vector search.

AgentLab

AgentLab is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides features for developing and evaluating agents on various benchmarks supported by BrowserGym. The framework allows for large-scale parallel agent experiments using ray, building blocks for creating agents over BrowserGym, and a unified LLM API for OpenRouter, OpenAI, Azure, or self-hosted using TGI. AgentLab also offers reproducibility features, a unified LeaderBoard, and supports multiple benchmarks like WebArena, WorkArena, WebLinx, VisualWebArena, AssistantBench, GAIA, Mind2Web-live, and MiniWoB.

vanna

Vanna is an open-source Python framework for SQL generation and related functionality. It uses Retrieval-Augmented Generation (RAG) to train a model on your data, which can then be used to ask questions and get back SQL queries. Vanna is designed to be portable across different LLMs and vector databases, and it supports any SQL database. It is also secure and private, as your database contents are never sent to the LLM or the vector database.

AgentBench

AgentBench is a benchmark designed to evaluate Large Language Models (LLMs) as autonomous agents in various environments. It includes 8 distinct environments such as Operating System, Database, Knowledge Graph, Digital Card Game, and Lateral Thinking Puzzles. The tool provides a comprehensive evaluation of LLMs' ability to operate as agents by offering Dev and Test sets for each environment. Users can quickly start using the tool by following the provided steps, configuring the agent, starting task servers, and assigning tasks. AgentBench aims to bridge the gap between LLMs' proficiency as agents and their practical usability.

codebox-api

CodeBox is a cloud infrastructure tool designed for running Python code in an isolated environment. It also offers simple file input/output capabilities and will soon support vector database operations. Users can install CodeBox using pip and utilize it by setting up an API key. The tool allows users to execute Python code snippets and interact with the isolated environment. CodeBox is currently in early development stages and requires manual handling for certain operations like refunds and cancellations. The tool is open for contributions through issue reporting and pull requests. It is licensed under MIT and can be contacted via email at [email protected].

merlinn

Merlinn is an open-source AI-powered on-call engineer that automatically jumps into incidents & alerts, providing useful insights and RCA in real time. It integrates with popular observability tools, lives inside Slack, offers an intuitive UX, and prioritizes security. Users can self-host Merlinn, use it for free, and benefit from automatic RCA, Slack integration, integrations with various tools, intuitive UX, and security features.

snd

Sales & Dungeons is a tool that utilizes thermal printers for creating customizable handouts, quick references, and more for Dungeons and Dragons sessions. It offers extensive templating and random generation systems, supports various connection methods, and allows importing/exporting templates and data sources. Users can access external data sources like Open5e, import data from CSV and other formats, and utilize AI prompt generation and translation. The tool supports cloud sync and is compatible with multiple operating systems and devices.

OpenLLM

OpenLLM is a platform that helps developers run any open-source Large Language Models (LLMs) as OpenAI-compatible API endpoints, locally and in the cloud. It supports a wide range of LLMs, provides state-of-the-art serving and inference performance, and simplifies cloud deployment via BentoML. Users can fine-tune, serve, deploy, and monitor any LLMs with ease using OpenLLM. The platform also supports various quantization techniques, serving fine-tuning layers, and multiple runtime implementations. OpenLLM seamlessly integrates with other tools like OpenAI Compatible Endpoints, LlamaIndex, LangChain, and Transformers Agents. It offers deployment options through Docker containers, BentoCloud, and provides a community for collaboration and contributions.

For similar tasks

TaxHacker

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

travel-planner-ai

Travel Planner AI is a Software as a Service (SaaS) product that simplifies travel planning by generating comprehensive itineraries based on user preferences. It leverages cutting-edge technologies to provide tailored schedules, optimal timing suggestions, food recommendations, prime experiences, expense tracking, and collaboration features. The tool aims to be the ultimate travel companion for users looking to plan seamless and smart travel adventures.

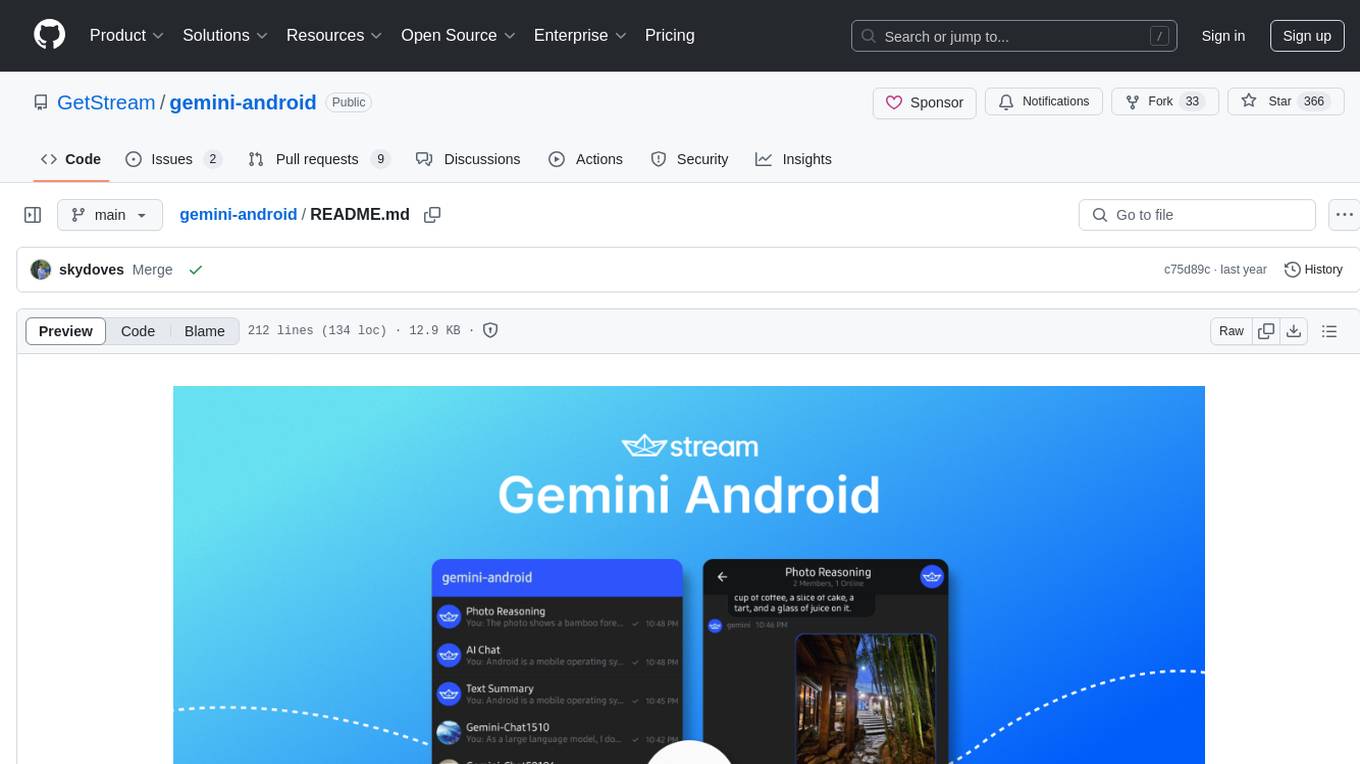

gemini-android

Gemini-Android is a mobile application that allows users to track their expenses and manage their finances on the go. The app provides a user-friendly interface for adding and categorizing expenses, setting budgets, and generating reports to help users make informed financial decisions. With Gemini-Android, users can easily monitor their spending habits, identify areas for saving, and stay on top of their financial goals.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

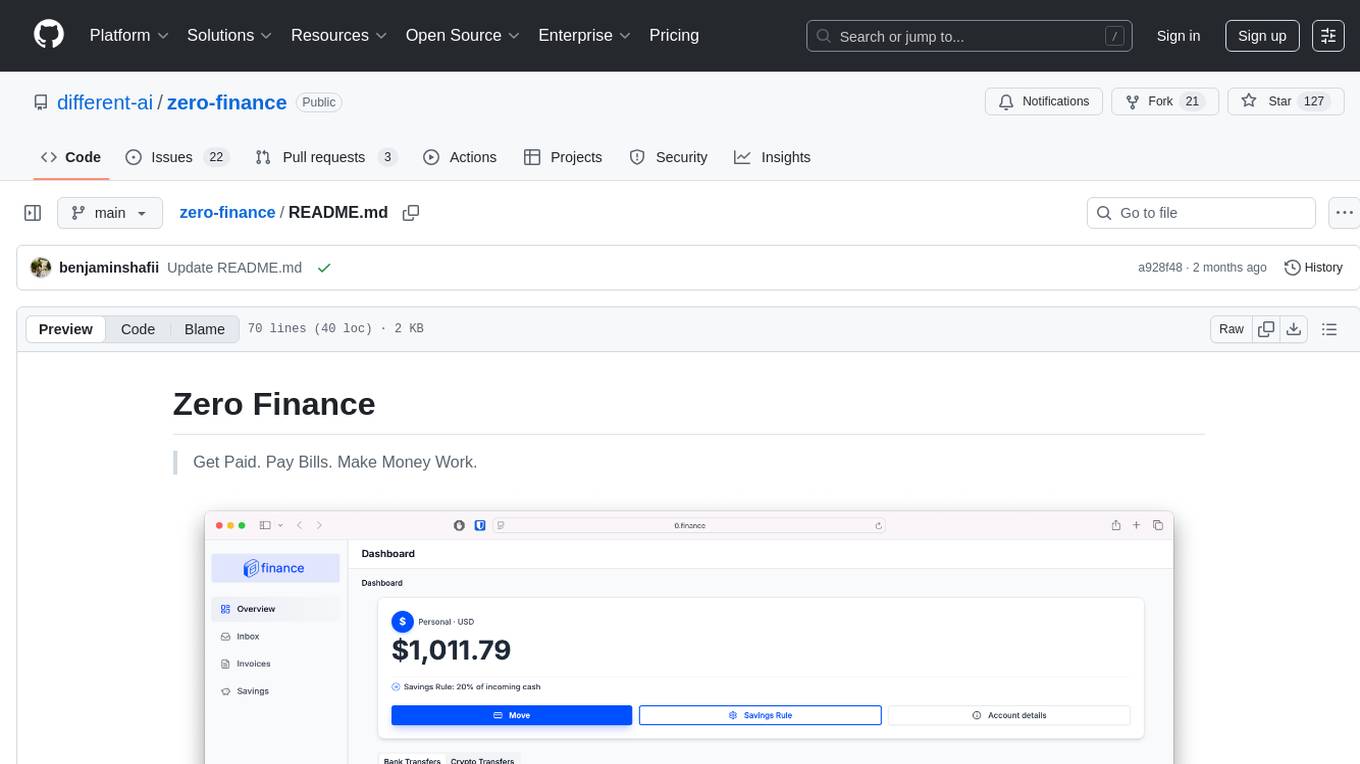

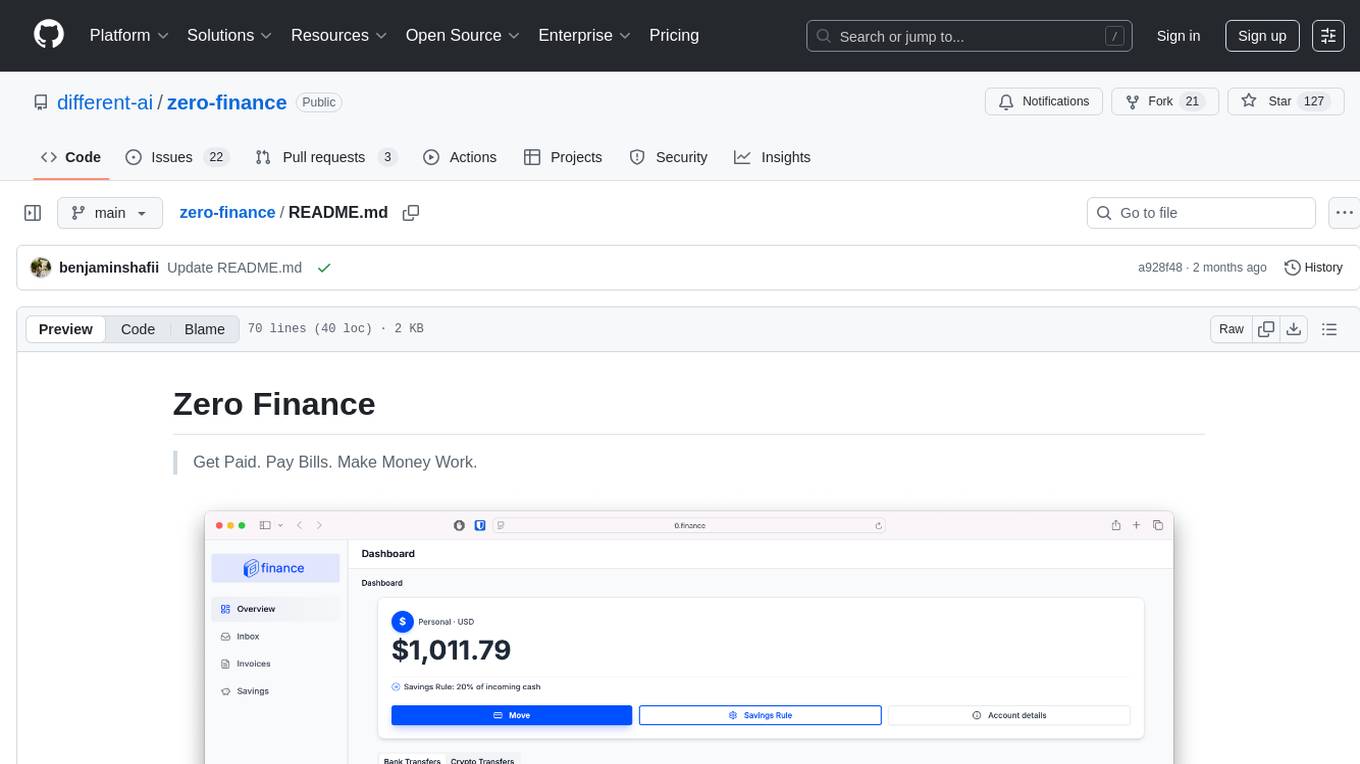

zero-finance

Zero Finance is a bank account that automates your finances, allowing you to easily create invoices, get paid directly to your personal IBAN, use a debit card worldwide with 0% conversion fees, optimize yield by automatically allocating idle funds to highest-yielding opportunities, and automate finances with a complete accounting system including expense tracking and tax optimization. The tool also syncs with various data sources to help you stay on track of your financial tasks by surfacing critical information, auto-categorizing based on AI-rules, auto-scheduling vendor payments from invoices via AI-rules, and allowing export to CSV. The project is structured as a monorepo containing multiple packages for the bank web app and a smart contract for securely automating savings.

pennywiseai-tracker

PennyWise AI Tracker is a free and open-source expense tracker that uses on-device AI to turn bank SMS into a clean and searchable money timeline. It offers smart SMS parsing, clear insights, subscription tracking, on-device AI assistant, auto-categorization, data export, and supports major Indian banks. All processing happens on the user's device for privacy. The tool is designed for Android users in India who want automatic expense tracking from bank SMS, with clean categories, subscription detection, and clear insights.

For similar jobs

SheetCopilot

SheetCopilot is an assistant agent that manipulates spreadsheets by following user commands. It leverages Large Language Models (LLMs) to interact with spreadsheets like a human expert, enabling non-expert users to complete tasks on complex software such as Google Sheets and Excel via a language interface. The tool observes spreadsheet states, polishes generated solutions based on external action documents and error feedback, and aims to improve success rate and efficiency. SheetCopilot offers a dataset with diverse task categories and operations, supporting operations like entry & manipulation, management, formatting, charts, and pivot tables. Users can interact with SheetCopilot in Excel or Google Sheets, executing tasks like calculating revenue, creating pivot tables, and plotting charts. The tool's evaluation includes performance comparisons with leading LLMs and VBA-based methods on specific datasets, showcasing its capabilities in controlling various aspects of a spreadsheet.

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

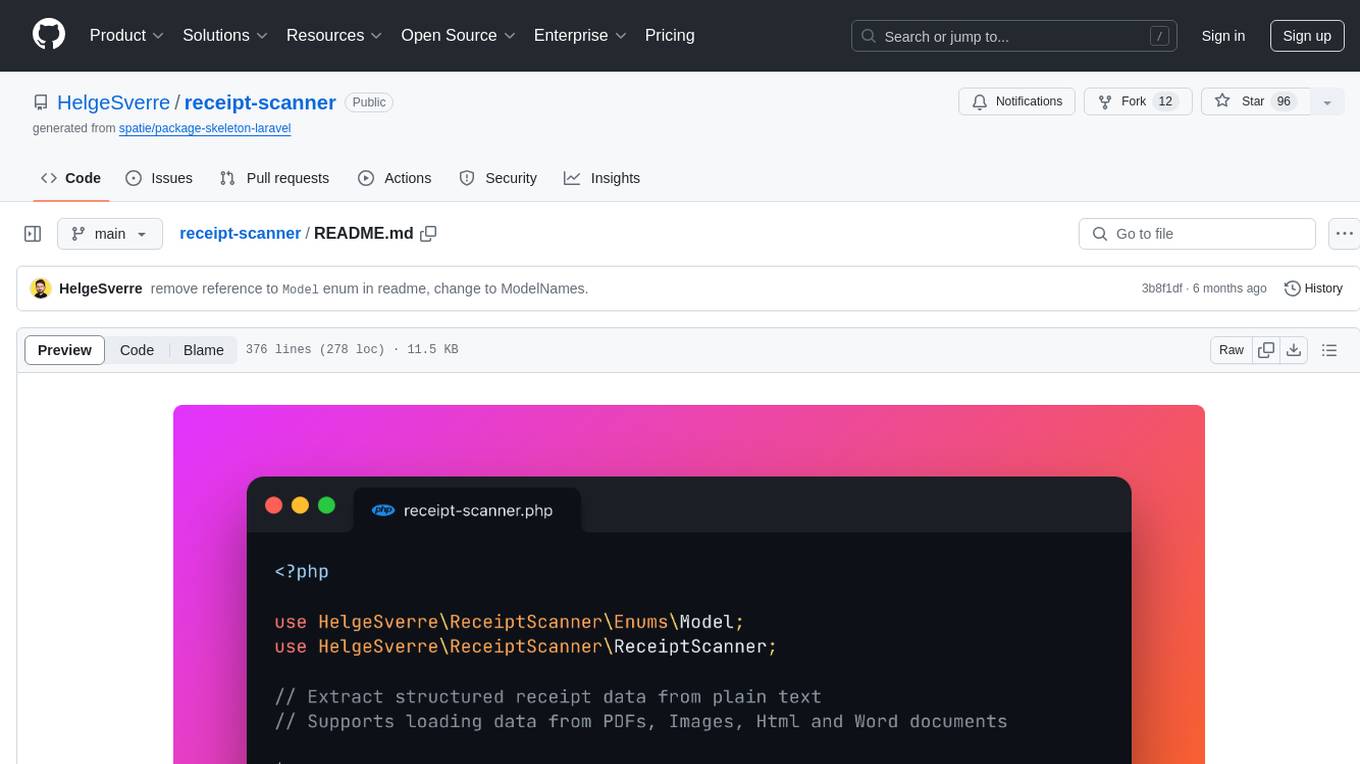

receipt-scanner

The receipt-scanner repository is an AI-Powered Receipt and Invoice Scanner for Laravel that allows users to easily extract structured receipt data from images, PDFs, and emails within their Laravel application using OpenAI. It provides a light wrapper around OpenAI Chat and Completion endpoints, supports various input formats, and integrates with Textract for OCR functionality. Users can install the package via composer, publish configuration files, and use it to extract data from plain text, PDFs, images, Word documents, and web content. The scanned receipt data is parsed into a DTO structure with main classes like Receipt, Merchant, and LineItem.

actual-ai

Actual AI is a project designed to categorize uncategorized transactions for Actual Budget using OpenAI or OpenAI specification compatible API. It sends requests to the OpenAI API to classify transactions based on their description, amount, and notes. Transactions that cannot be classified are marked as 'not guessed' in notes. The tool allows users to sync accounts before classification and classify transactions on a cron schedule. Guessed transactions are marked in notes for easy review.

gemini-android

Gemini-Android is a mobile application that allows users to track their expenses and manage their finances on the go. The app provides a user-friendly interface for adding and categorizing expenses, setting budgets, and generating reports to help users make informed financial decisions. With Gemini-Android, users can easily monitor their spending habits, identify areas for saving, and stay on top of their financial goals.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

TaxHacker

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

zero-finance

Zero Finance is a bank account that automates your finances, allowing you to easily create invoices, get paid directly to your personal IBAN, use a debit card worldwide with 0% conversion fees, optimize yield by automatically allocating idle funds to highest-yielding opportunities, and automate finances with a complete accounting system including expense tracking and tax optimization. The tool also syncs with various data sources to help you stay on track of your financial tasks by surfacing critical information, auto-categorizing based on AI-rules, auto-scheduling vendor payments from invoices via AI-rules, and allowing export to CSV. The project is structured as a monorepo containing multiple packages for the bank web app and a smart contract for securely automating savings.