LangGraph-Expense-Tracker

LangGraph - FastAPI - Postgresql - AI project

Stars: 82

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

README:

Small project exploring the possibilitys of LangGraph. It lets you sent pictures of invoices, it structures and categorize the expenses and puts them in a database.

To zoom visit the whiteboard here.

To zoom visit the whiteboard here.

.

├── LICENCE.txt

├── README.MD

├── config.yaml

├── data

│ └── walmart-bon.jpeg

├── documents

│ ├── api_documentation.png

│ ├── langgraph.png

│ ├── openapi.json

│ └── pg_admin_screenshot.png

├── env.example

├── requirements.txt

└── src

├── api

│ ├── __init__.py

│ ├── category_routes.py

│ ├── expenses_routes.py

│ ├── payment_methods_routes.py

│ └── run_api.py

├── chain

│ ├── __init__.py

│ ├── graphstate.py

│ ├── helpers

│ │ ├── __init__.py

│ │ └── get_payment_methods_and_categories.py

│ └── nodes

│ ├── __init__.py

│ ├── categorizer.py

│ ├── correct.py

│ ├── db_entry.py

│ ├── humancheck.py

│ ├── imageencoder.py

│ └── jsonparser.py

└── database

├── __init__.py

├── create_categories_and_payment_methods.py

├── create_tables.py

└── db_connection.py

9 directories, 30 files1.1 Create virtual environment

Using Conda, venv or any other tool of your liking.

1.2 activate virtual environment

1.3 clone repo

!TO DO!

1.4 install requirements

!TO DO!

1.5 create .env file

See example here.

2.1.1 Install postgresql:

brew install postgresql(other ways to install Postgresql)

2.1.2 Install Docker:

brew install docker(other ways to install Docker)

2.2.1 Create:

docker run -d \

--name postgres-expenses \

-e POSTGRES_USER=expenses \

-e POSTGRES_PASSWORD=money$ \

-e POSTGRES_DB=expenses \

-p 6025:5432 \

postgres:latest2.2.2 Control:

Use the following command to see if the container is running correctly:

docker psit should show a list of running containers.

2.3.1 Create tables

Add tables for our expense tracking by running the /src/database/create_tables.py script (link)

2.3.2 Inspect tables

Using a tool link PGAdmin, you can inspect if the tables in the database are all there.

-Go to the root folder of your project and activate virtual environment

CD path/to/your/projectfolder

workon expense-trackeri have some shell aliases set up, the [workon] command should probably be something like [conda activate] or [source [env]]

-activate virtual environment

(expense_tracker)

~/Developer/expense_tracker

▶ uvicorn src.api.run_api:app --reload

INFO: Will watch for changes in these directories: ['/Users/jw/developer/expense_tracker']

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

INFO: Started reloader process [12588] using StatReload

INFO: Started server process [12590]

INFO: Waiting for application startup.

INFO: Application startup complete.You can visit http://localhost:8000/docs#/ for a page with documentation about the API:

The database consists of three main tables: categories, payment_methods, and expenses.

This table contains a list of categories for expenses. Each category has a unique ID and a name.

-

Columns:

-

category_id(SERIAL, Primary Key): The unique ID for the category. -

category_name(VARCHAR(100), Unique): The name of the category.

-

This table contains various payment methods that can be used for expenses.

-

Columns:

-

payment_method_id(SERIAL, Primary Key): The unique ID for the payment method. -

payment_method_name(VARCHAR(50), Unique): The name of the payment method.

-

This is the main table for tracking expenses. It contains information such as the date, the category (with a reference to the categories table), the payment method (with a reference to the payment_methods table), the amount, VAT, and other details.

-

Columns:

-

transaction_id(SERIAL, Primary Key): The unique ID for the transaction. -

date(DATE): The date of the expense. -

category_id(INTEGER, Foreign Key): Reference to thecategoriestable. -

description(TEXT): A short description of the expense. -

amount(DECIMAL(10, 2)): The amount of the expense. -

vat(DECIMAL(10, 2)): The VAT for the expense. -

payment_method_id(INTEGER, Foreign Key): Reference to thepayment_methodstable. -

business_personal(VARCHAR(50)): Indicates whether the expense is business or personal. -

declared_on(DATE): The date when the expense was declared.

-

See API documentation here: openapi.json

the way this chain works is best described by showing the LangSmith Trace: click here to have a look

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LangGraph-Expense-Tracker

Similar Open Source Tools

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

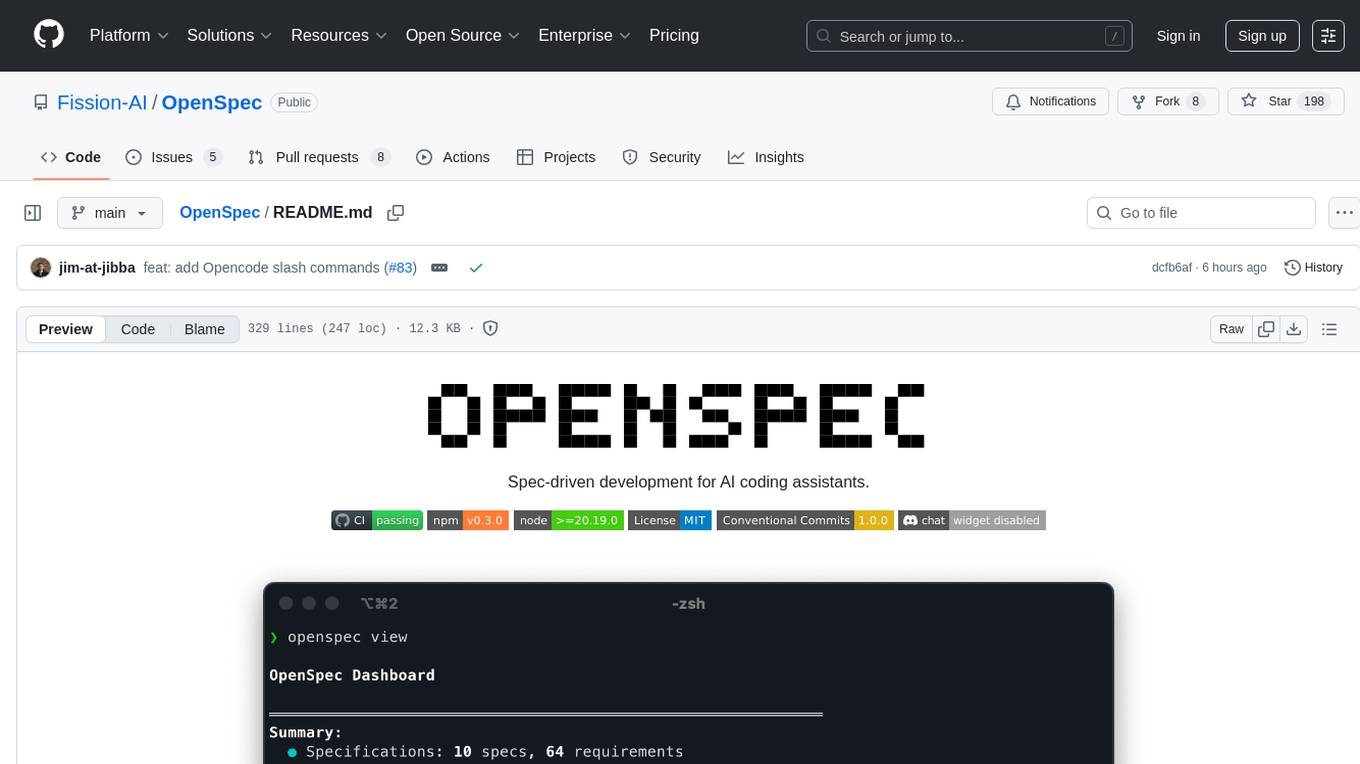

OpenSpec

OpenSpec is a tool for spec-driven development, aligning humans and AI coding assistants to agree on what to build before any code is written. It adds a lightweight specification workflow that ensures deterministic, reviewable outputs without the need for API keys. With OpenSpec, stakeholders can draft change proposals, review and align with AI assistants, implement tasks based on agreed specs, and archive completed changes for merging back into the source-of-truth specs. It works seamlessly with existing AI tools, offering shared visibility into proposed, active, or archived work.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

simili-bot

Simili Bot is an AI-powered tool designed for GitHub repositories to automatically detect duplicate issues, find similar issues using semantic search, and intelligently route issues across repositories. It offers features such as semantic duplicate detection, cross-repository search, intelligent routing, smart triage, modular pipeline customization, and multi-repo support. The tool follows a 'Lego with Blueprints' architecture, with Lego Blocks representing independent pipeline steps and Blueprints providing pre-defined workflows. Users can configure AI providers like Gemini and OpenAI, set default models for embeddings, and specify workflows in a 'simili.yaml' file. Simili Bot also offers CLI commands for bulk indexing, processing single issues, and batch operations, enabling local development, testing, and analysis of historical data.

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

DeepDanbooru

DeepDanbooru is an anime-style girl image tag estimation system written in Python. It allows users to estimate images using a live demo site. The tool requires specific packages to be installed and provides a structured dataset for training projects. Users can create training projects, download tags, filter datasets, and start training to estimate tags for images. The tool uses a specific dataset structure and project structure to facilitate the training process.

one

ONE is a modern web and AI agent development toolkit that empowers developers to build AI-powered applications with high performance, beautiful UI, AI integration, responsive design, type safety, and great developer experience. It is perfect for building modern web applications, from simple landing pages to complex AI-powered platforms.

cookiecutter-data-science

Cookiecutter Data Science (CCDS) is a tool for setting up a data science project template that incorporates best practices. It provides a logical, reasonably standardized but flexible project structure for doing and sharing data science work. The tool helps users to easily start new data science projects with a well-organized directory structure, including folders for data, models, notebooks, reports, and more. By following the project template created by CCDS, users can streamline their data science workflow and ensure consistency across projects.

rlama

RLAMA is a powerful AI-driven question-answering tool that seamlessly integrates with local Ollama models. It enables users to create, manage, and interact with Retrieval-Augmented Generation (RAG) systems tailored to their documentation needs. RLAMA follows a clean architecture pattern with clear separation of concerns, focusing on lightweight and portable RAG capabilities with minimal dependencies. The tool processes documents, generates embeddings, stores RAG systems locally, and provides contextually-informed responses to user queries. Supported document formats include text, code, and various document types, with troubleshooting steps available for common issues like Ollama accessibility, text extraction problems, and relevance of answers.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

agentboard

Agentboard is a Web GUI for tmux optimized for agent TUI's like claude and codex. It provides a shared workspace across devices with features such as paste support, touch scrolling, virtual arrow keys, log tracking, and session pinning. Users can interact with tmux sessions from any device through a live terminal stream. The tool allows session discovery, status inference, and terminal I/O streaming for efficient agent management.

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

Vitron

Vitron is a unified pixel-level vision LLM designed for comprehensive understanding, generating, segmenting, and editing static images and dynamic videos. It addresses challenges in existing vision LLMs such as superficial instance-level understanding, lack of unified support for images and videos, and insufficient coverage across various vision tasks. The tool requires Python >= 3.8, Pytorch == 2.1.0, and CUDA Version >= 11.8 for installation. Users can deploy Gradio demo locally and fine-tune their models for specific tasks.

rkllama

RKLLama is a server and client tool designed for running and interacting with LLM models optimized for Rockchip RK3588(S) and RK3576 platforms. It allows models to run on the NPU, with features such as running models on NPU, partial Ollama API compatibility, pulling models from Huggingface, API REST with documentation, dynamic loading/unloading of models, inference requests with streaming modes, simplified model naming, CPU model auto-detection, and optional debug mode. The tool supports Python 3.8 to 3.12 and has been tested on Orange Pi 5 Pro and Orange Pi 5 Plus with specific OS versions.

twitter-automation-ai

Advanced Twitter Automation AI is a modular Python-based framework for automating Twitter at scale. It supports multiple accounts, robust Selenium automation with optional undetected Chrome + stealth, per-account proxies and rotation, structured LLM generation/analysis, community posting, and per-account metrics/logs. The tool allows seamless management and automation of multiple Twitter accounts, content scraping, publishing, LLM integration for generating and analyzing tweet content, engagement automation, configurable automation, browser automation using Selenium, modular design for easy extension, comprehensive logging, community posting, stealth mode for reduced fingerprinting, per-account proxies, LLM structured prompts, and per-account JSON summaries and event logs for observability.

For similar tasks

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

travel-planner-ai

Travel Planner AI is a Software as a Service (SaaS) product that simplifies travel planning by generating comprehensive itineraries based on user preferences. It leverages cutting-edge technologies to provide tailored schedules, optimal timing suggestions, food recommendations, prime experiences, expense tracking, and collaboration features. The tool aims to be the ultimate travel companion for users looking to plan seamless and smart travel adventures.

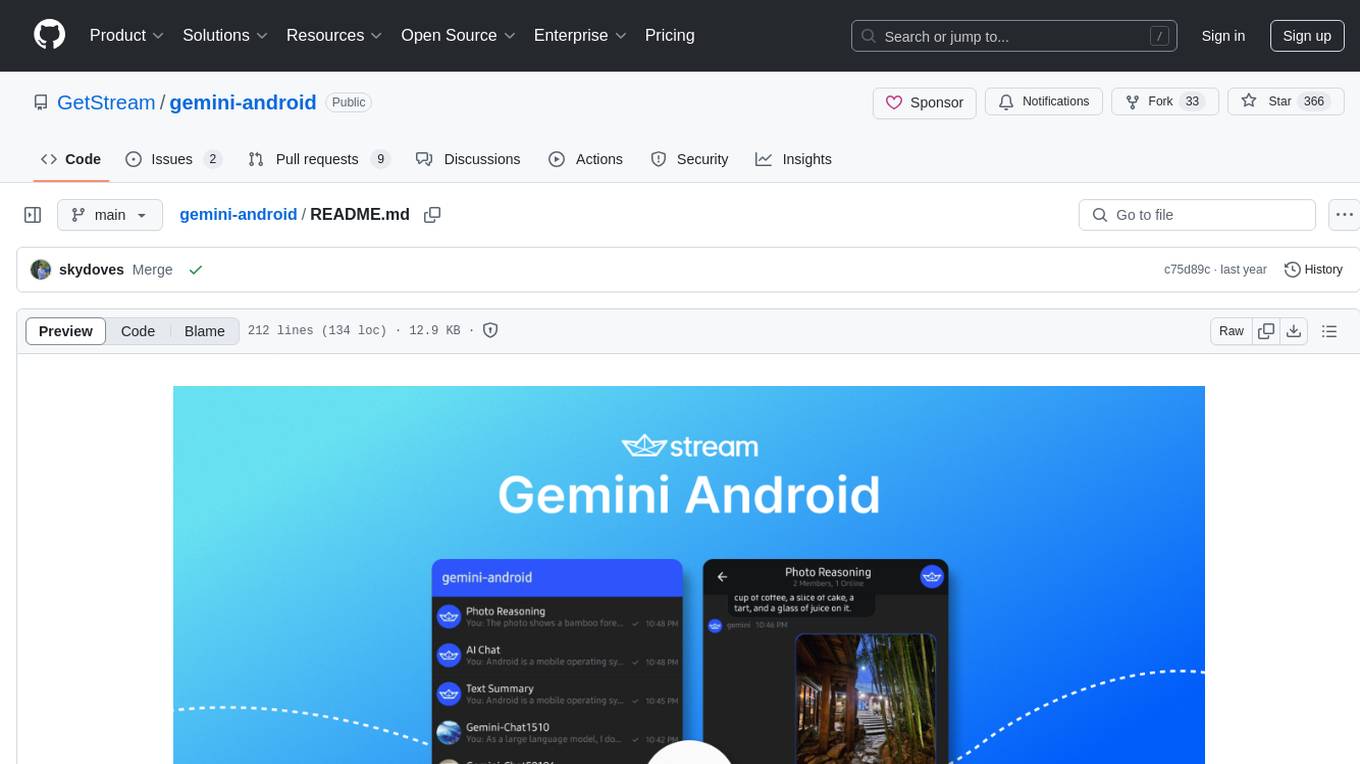

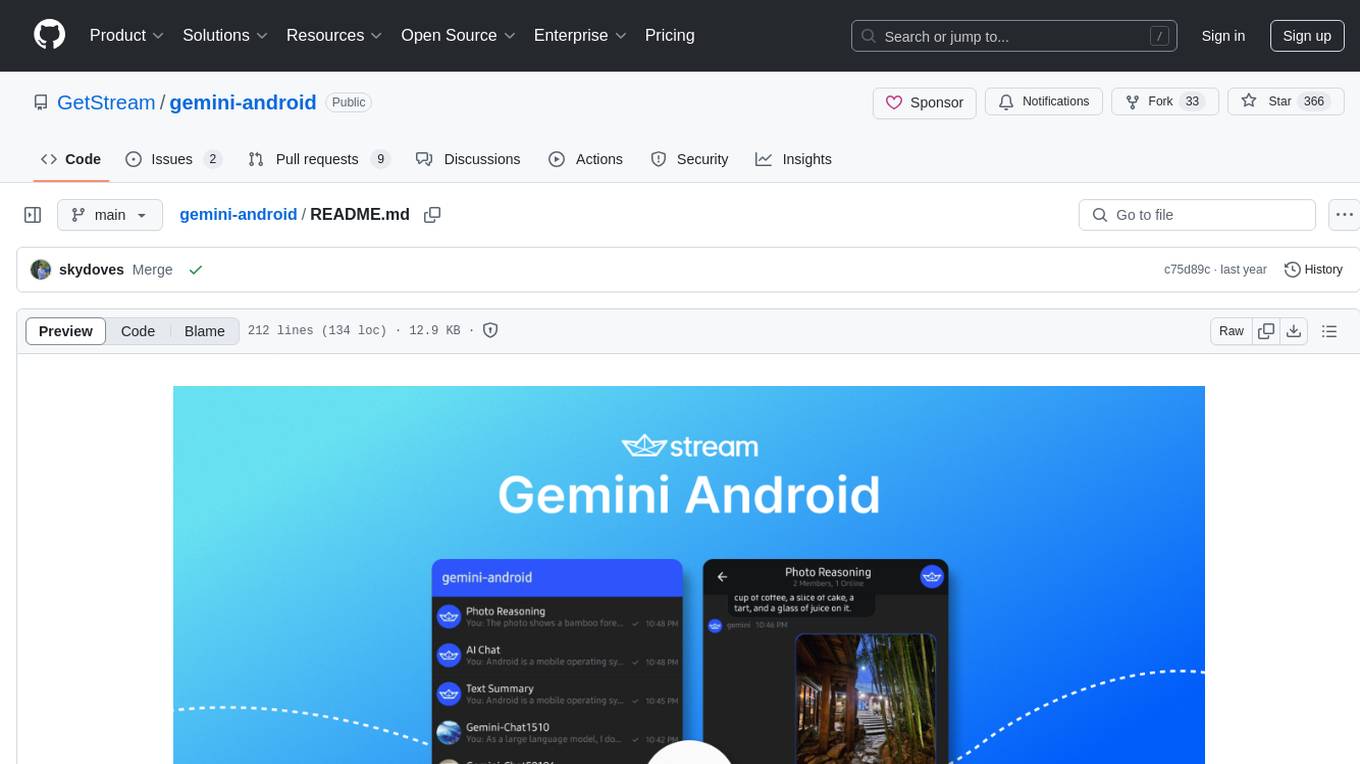

gemini-android

Gemini-Android is a mobile application that allows users to track their expenses and manage their finances on the go. The app provides a user-friendly interface for adding and categorizing expenses, setting budgets, and generating reports to help users make informed financial decisions. With Gemini-Android, users can easily monitor their spending habits, identify areas for saving, and stay on top of their financial goals.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

TaxHacker

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

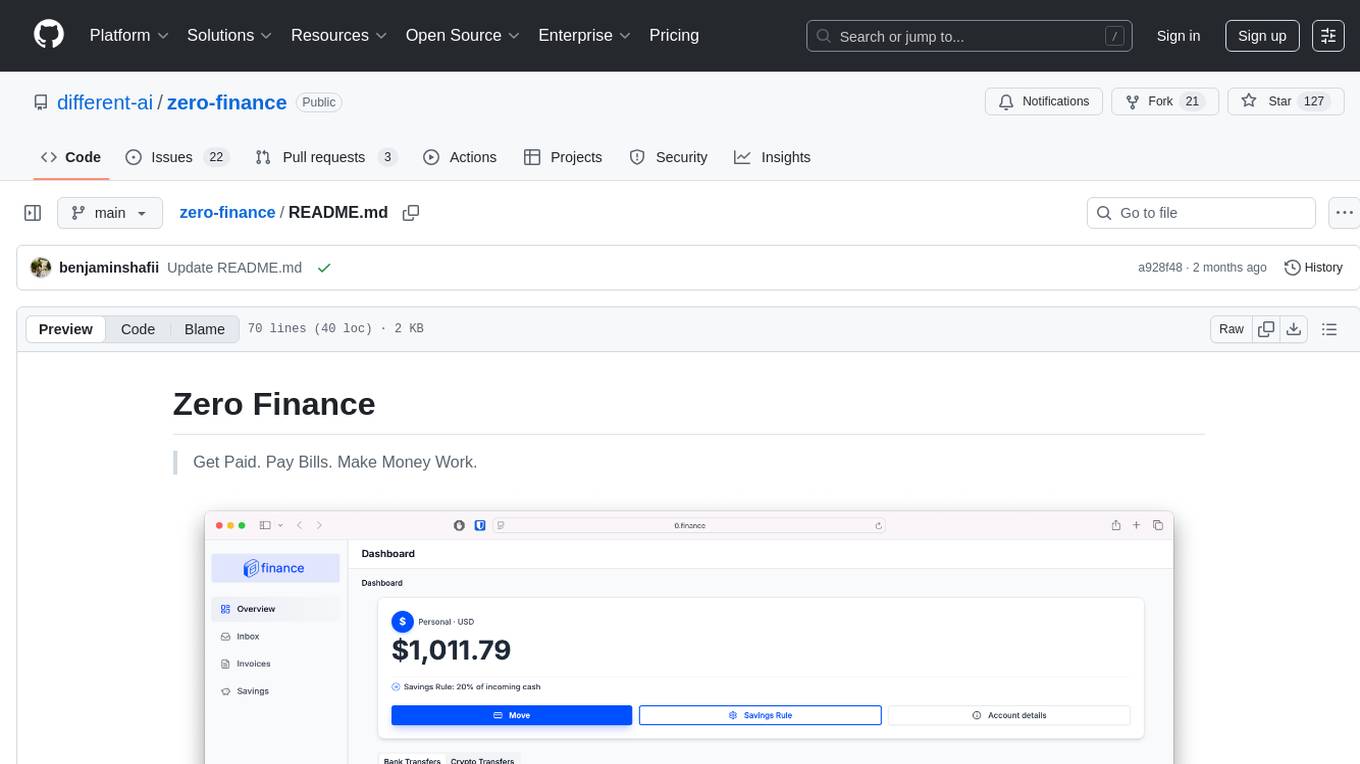

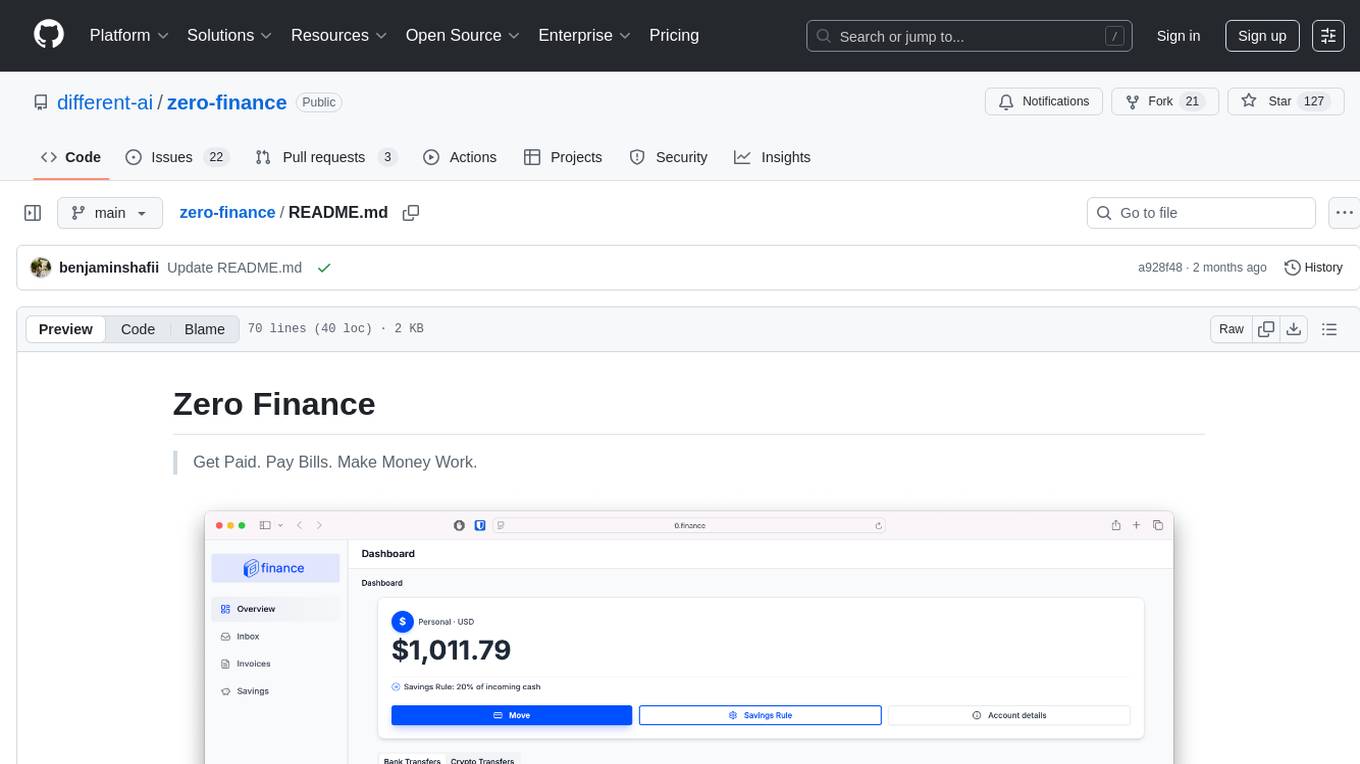

zero-finance

Zero Finance is a bank account that automates your finances, allowing you to easily create invoices, get paid directly to your personal IBAN, use a debit card worldwide with 0% conversion fees, optimize yield by automatically allocating idle funds to highest-yielding opportunities, and automate finances with a complete accounting system including expense tracking and tax optimization. The tool also syncs with various data sources to help you stay on track of your financial tasks by surfacing critical information, auto-categorizing based on AI-rules, auto-scheduling vendor payments from invoices via AI-rules, and allowing export to CSV. The project is structured as a monorepo containing multiple packages for the bank web app and a smart contract for securely automating savings.

pennywiseai-tracker

PennyWise AI Tracker is a free and open-source expense tracker that uses on-device AI to turn bank SMS into a clean and searchable money timeline. It offers smart SMS parsing, clear insights, subscription tracking, on-device AI assistant, auto-categorization, data export, and supports major Indian banks. All processing happens on the user's device for privacy. The tool is designed for Android users in India who want automatic expense tracking from bank SMS, with clean categories, subscription detection, and clear insights.

For similar jobs

SheetCopilot

SheetCopilot is an assistant agent that manipulates spreadsheets by following user commands. It leverages Large Language Models (LLMs) to interact with spreadsheets like a human expert, enabling non-expert users to complete tasks on complex software such as Google Sheets and Excel via a language interface. The tool observes spreadsheet states, polishes generated solutions based on external action documents and error feedback, and aims to improve success rate and efficiency. SheetCopilot offers a dataset with diverse task categories and operations, supporting operations like entry & manipulation, management, formatting, charts, and pivot tables. Users can interact with SheetCopilot in Excel or Google Sheets, executing tasks like calculating revenue, creating pivot tables, and plotting charts. The tool's evaluation includes performance comparisons with leading LLMs and VBA-based methods on specific datasets, showcasing its capabilities in controlling various aspects of a spreadsheet.

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

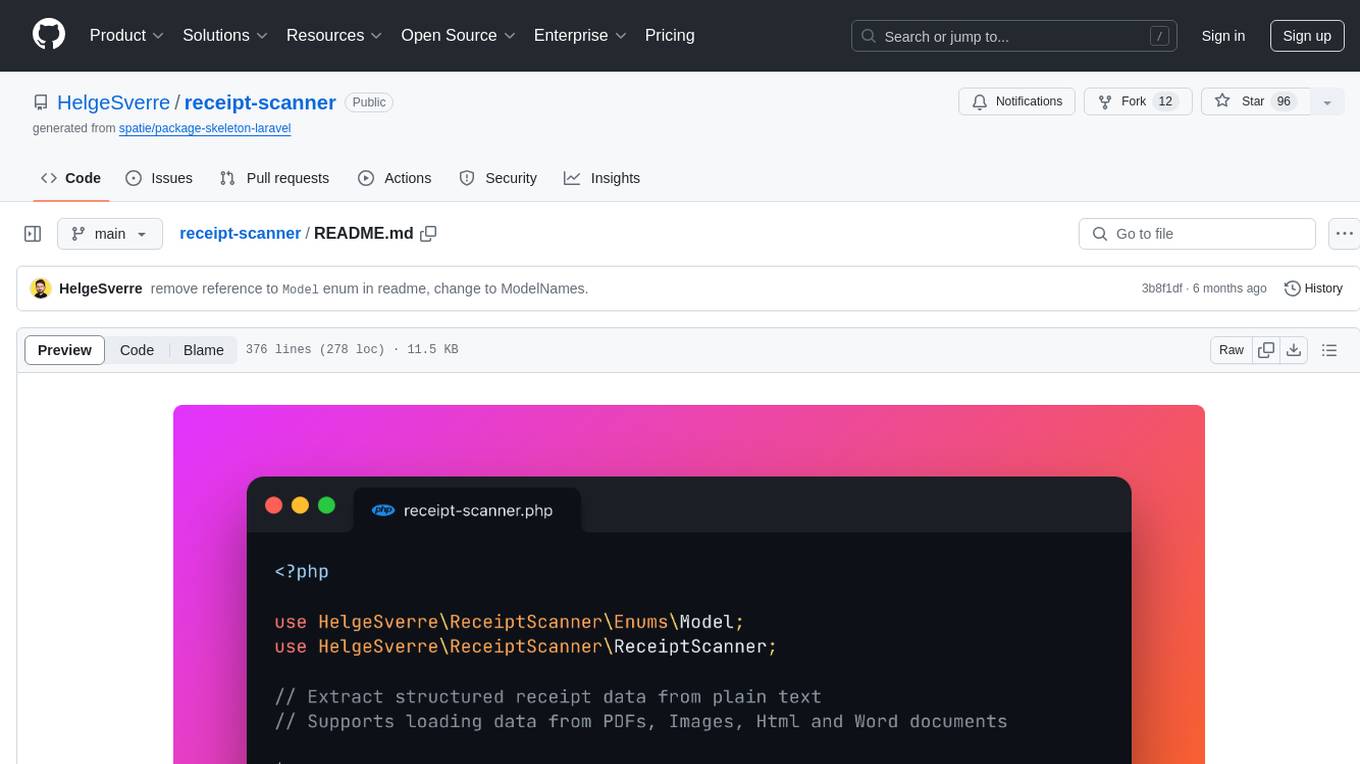

receipt-scanner

The receipt-scanner repository is an AI-Powered Receipt and Invoice Scanner for Laravel that allows users to easily extract structured receipt data from images, PDFs, and emails within their Laravel application using OpenAI. It provides a light wrapper around OpenAI Chat and Completion endpoints, supports various input formats, and integrates with Textract for OCR functionality. Users can install the package via composer, publish configuration files, and use it to extract data from plain text, PDFs, images, Word documents, and web content. The scanned receipt data is parsed into a DTO structure with main classes like Receipt, Merchant, and LineItem.

actual-ai

Actual AI is a project designed to categorize uncategorized transactions for Actual Budget using OpenAI or OpenAI specification compatible API. It sends requests to the OpenAI API to classify transactions based on their description, amount, and notes. Transactions that cannot be classified are marked as 'not guessed' in notes. The tool allows users to sync accounts before classification and classify transactions on a cron schedule. Guessed transactions are marked in notes for easy review.

gemini-android

Gemini-Android is a mobile application that allows users to track their expenses and manage their finances on the go. The app provides a user-friendly interface for adding and categorizing expenses, setting budgets, and generating reports to help users make informed financial decisions. With Gemini-Android, users can easily monitor their spending habits, identify areas for saving, and stay on top of their financial goals.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

TaxHacker

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

zero-finance

Zero Finance is a bank account that automates your finances, allowing you to easily create invoices, get paid directly to your personal IBAN, use a debit card worldwide with 0% conversion fees, optimize yield by automatically allocating idle funds to highest-yielding opportunities, and automate finances with a complete accounting system including expense tracking and tax optimization. The tool also syncs with various data sources to help you stay on track of your financial tasks by surfacing critical information, auto-categorizing based on AI-rules, auto-scheduling vendor payments from invoices via AI-rules, and allowing export to CSV. The project is structured as a monorepo containing multiple packages for the bank web app and a smart contract for securely automating savings.