lollms-webui

Lord of Large Language and Multi modal Systems Web User Interface

Stars: 4753

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine-tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions make LoLLMs WebUI a powerful and versatile tool.

README:

Here is a tutorial on how to use function calls in lollms

Welcome to LoLLMS WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all), the hub for LLM (Large Language Models) and multimodal intelligence systems. This project aims to provide a user-friendly interface to access and utilize various LLM and other AI models for a wide range of tasks. Whether you need help with writing, coding, organizing data, analyzing images, generating images, generating music or seeking answers to your questions, LoLLMS WebUI has got you covered.

As an all-encompassing tool with access to over 500 AI expert conditionings across diverse domains and more than 2500 fine-tuned models over multiple domains, Lollms gives you an immediate resource for any problem. Does your car need repair, or do you need coding assistance in Python, C++ or JavaScript? Feeling down about life decisions that went wrong and unable to see why? Ask Lollms. Need guidance on what lies ahead health-wise based on your current symptoms? Our medical assistance AI can help you get a potential diagnosis and guide you to seek the right medical care. If you are stuck with legal matters such as contract interpretation, feel free to reach out to the Lawyer personality to get some insight, all without leaving the comfort of your home. Lollms not only aids students struggling through lengthy lectures but also gives them extra support during assessments, so they can grasp concepts properly rather than just reading along, which could leave many confused afterward. Want some entertainment? Engage Laughter Bot and laugh until tears roll from your eyes, play Dungeons & Dragons, or make up crazy stories together with Creative Story Generator. Need illustration work done? No worries, Artbot has you covered. And last but definitely not least, LordOfMusic is here for music generation according to your specifications. So say goodbye to boring nights alone, because all of this can be achieved within one single platform called Lollms.

- Choose your preferred binding, model, and personality for your tasks

- Enhance your emails, essays, code debugging, thought organization, and more

- Explore a wide range of functionalities, such as searching, data organization, image generation, and music generation

- Easy-to-use UI with light and dark mode options

- Integration with GitHub repository for easy access

- Support for different personalities with predefined welcome messages

- Thumb up/down rating for generated answers

- Copy, edit, and remove messages

- Local database storage for your discussions

- Search, export, and delete multiple discussions

- Support for image generation based on stable diffusion/flux/comfyui/open ai dall-e/Midjourney/Novita ai services

- Support for video generation based on lumalabs/cogvideo_x/runwayml/stable_diffusion/Novita ai services

- Support for music generation based on musicgen

- Support for multi generation peer to peer network through Lollms Nodes and Petals.

- Support for Docker, conda, and manual virtual environment setups

- Support for LLMs using multiple bindings:

  - Hugging Face local models

  - GGUF/GGML local models

  - EXLLama v2 local models (EXT/AWQ/GPTQ)

  - Ollama service

  - vLLM service

  - OpenAI service

  - Anthropic service

  - OpenRouter service

  - Novita AI service

- Support for prompt routing to various models depending on the complexity of the task

Thank you to all the users who tested this tool and helped make it more user-friendly.

Download the installation script from the scripts folder and run it. The installation scripts are:

- lollms_installer.bat for Windows.

- lollms_installer.sh for Linux.

- lollms_installer_macos.sh for Mac.

Since v10.14, manual installation is back:

1. First ensure Python 3.11 is installed. You can check your Python version with:

    python --version

If you need to install Python 3.11, download it from: https://www.python.org/downloads/release/python-3118/

2. Clone the repository with submodules:

    git clone --recursive https://github.com/ParisNeo/lollms-webui.git
    cd lollms-webui
    git submodule update --init --recursive

3. Create and activate a virtual environment (recommended):

    python -m venv venv
    .\venv\Scripts\activate      (on Windows)
    source venv/bin/activate     (on Linux/macOS)

4. Install requirements:

    pip install --upgrade pip
    pip install -r requirements.txt
    pip install -e lollms_core

5. Create global_paths_cfg.yaml:

    cat > global_paths_cfg.yaml << EOL
    lollms_path: $(pwd)/lollms_core/lollms
    lollms_personal_path: $(pwd)/personal_data
    EOL

6. Install desired bindings. You can set environment variables to select which bindings to install. For example, to install the ollama binding:

    python zoos/bindings_zoo/ollama_ai/__init__.py

List of available bindings and their installation commands:

    elf: python zoos/bindings_zoo/elf/__init__.py
    openrouter: python zoos/bindings_zoo/openrouter/__init__.py
    openai: python zoos/bindings_zoo/openai/__init__.py
    groq: python zoos/bindings_zoo/groq/__init__.py
    mistralai: python zoos/bindings_zoo/mistralai/__init__.py
    ollama: python zoos/bindings_zoo/ollama_ai/__init__.py
    vllm: python zoos/bindings_zoo/vllm/__init__.py
    litellm: python zoos/bindings_zoo/litellm/__init__.py
    exllamav2: python zoos/bindings_zoo/exllamav2/__init__.py
    python_llama_cpp: python zoos/bindings_zoo/python_llama_cpp/__init__.py
    huggingface: python zoos/bindings_zoo/huggingface/__init__.py
    remote_lollms: python zoos/bindings_zoo/remote_lollms/__init__.py
    xAI: python zoos/bindings_zoo/xAI/__init__.py
    gemini: python zoos/bindings_zoo/gemini/__init__.py
    novita_ai: python zoos/bindings_zoo/novita_ai/__init__.py

7. Run the server:

    python app.py

Now you are ready to run lollms.
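The heredoc in step 5 relies on a POSIX shell, so it will not run as written in a Windows command prompt. A minimal Python sketch of the same step follows. The function name `write_global_paths_cfg` is hypothetical and not part of lollms; the two keys and the relative folders are the ones shown in step 5, and writing forward-slash paths on every platform is an assumption.

```python
from pathlib import Path


def write_global_paths_cfg(repo_root: Path) -> Path:
    """Write global_paths_cfg.yaml with the two keys used in step 5.

    Hypothetical helper for illustration; it only mirrors the heredoc.
    """
    repo_root = repo_root.resolve()
    cfg_path = repo_root / "global_paths_cfg.yaml"
    lines = [
        # as_posix() writes forward slashes on every platform (an assumption
        # about what is convenient here, not a documented lollms requirement).
        f"lollms_path: {(repo_root / 'lollms_core' / 'lollms').as_posix()}",
        f"lollms_personal_path: {(repo_root / 'personal_data').as_posix()}",
    ]
    cfg_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return cfg_path
```

Calling `write_global_paths_cfg(Path.cwd())` from inside the cloned lollms-webui folder would produce the same two-line file as the shell version.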

Lollms' Smart Routing feature goes beyond just selecting the right model for accuracy. It empowers you to optimize your text generation process for two key factors: money and speed.

Optimizing for Money:

Imagine you're using multiple text generation services with varying price points. Some models might be exceptionally powerful but come with a hefty price tag, while others offer a more budget-friendly option with slightly less capability. Smart Routing lets you leverage this price difference to your advantage:

- Cost-Effective Selection: By defining a hierarchy of models based on their cost, Smart Routing can automatically choose the most economical model for your prompt. This ensures you're only paying for the power you need, minimizing unnecessary expenses.

- Dynamic Price Adjustment: As your prompt complexity changes, Smart Routing can dynamically switch between models, ensuring you're always using the most cost-effective option for the task at hand.

Optimizing for Speed:

Speed is another critical factor in text generation, especially when dealing with large volumes of content or time-sensitive tasks. Smart Routing allows you to prioritize speed by:

- Prioritizing Smaller Models: By placing faster, less resource-intensive models higher in the hierarchy, Smart Routing can prioritize speed for simple prompts. This ensures quick responses and efficient processing.

- Dynamic Speed Adjustment: For more complex prompts requiring the power of larger models, Smart Routing can seamlessly switch to those models while maintaining a balance between speed and accuracy.
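The hierarchy idea described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the routing code lollms ships: the tier names, thresholds, prices, and the word-count complexity heuristic are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                  # hypothetical model identifier
    max_complexity: float      # highest complexity score this tier should handle
    cost_per_1k_tokens: float  # illustrative price, not a real quote


def estimate_complexity(prompt: str) -> float:
    """Toy heuristic: long prompts and analysis-style wording score higher (0..1)."""
    score = min(len(prompt.split()) / 200.0, 1.0)
    keywords = ("prove", "refactor", "analyze", "step by step")
    if any(k in prompt.lower() for k in keywords):
        score = min(score + 0.5, 1.0)
    return score


def route(prompt: str, hierarchy: list[ModelTier]) -> ModelTier:
    """Return the first tier (cheapest and fastest first) that can handle the prompt."""
    score = estimate_complexity(prompt)
    for tier in hierarchy:
        if score <= tier.max_complexity:
            return tier
    return hierarchy[-1]  # fall back to the most capable tier


# Ordered from cheapest/fastest to most capable; all values are made up.
HIERARCHY = [
    ModelTier("small-local-model", 0.3, 0.0),
    ModelTier("mid-size-service", 0.7, 0.5),
    ModelTier("large-frontier-service", 1.0, 10.0),
]
```

Ordering the list by cost optimizes for money; ordering it by latency optimizes for speed. The selection loop stays the same in both cases.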

Example Use Cases:

- Content Marketing: Use Smart Routing to select the most cost-effective model for generating large volumes of blog posts or social media content.

- Customer Support: Prioritize speed by using smaller models for quick responses to frequently asked questions, while leveraging more powerful models for complex inquiries.

- Research and Development: Optimize for both money and speed by using a tiered model hierarchy, ensuring you can quickly generate initial drafts while using more powerful models for in-depth analysis.

Conclusion:

Smart Routing is a versatile tool that empowers you to optimize your text generation process for both cost and speed. By leveraging a hierarchy of models and dynamically adjusting your selection based on prompt complexity, you can achieve the perfect balance between efficiency, accuracy, and cost-effectiveness.

By using this tool, users agree to follow these guidelines:

- This tool is not meant to be used for building and spreading fake news / misinformation.

- You are responsible for what you generate by using this tool. The creators will take no responsibility for anything created via lollms.

- You can use lollms in your own project free of charge if you agree to respect the Apache 2.0 license terms. Please refer to https://www.apache.org/licenses/LICENSE-2.0 .

- You are not allowed to use lollms to harm others directly or indirectly. This tool is meant for peaceful purposes and should be used for good, never for bad.

- Users must comply with local laws when accessing content provided by third parties like OpenAI API etc., including copyright restrictions where applicable.

Please be aware that LoLLMs WebUI does not have built-in user authentication and is primarily designed for local use. Exposing the WebUI to external access without proper security measures could lead to potential vulnerabilities.

If you require remote access to LoLLMs, it is strongly recommended to follow these security guidelines:

- Activate Headless Mode: Enabling headless mode will expose only the generation API while turning off other potentially vulnerable endpoints. This helps to minimize the attack surface.

- Set Up a Secure Tunnel: Establish a secure tunnel between the localhost running LoLLMs and the remote PC that needs access. This ensures that the communication between the two devices is encrypted and protected.

- Modify Configuration Settings: After setting up the secure tunnel, edit the /configs/local_config.yaml file and adjust the following settings:

    host: 0.0.0.0 # Allow remote connections
    port: 9600 # Change the port number if desired (default is 9600)
    force_accept_remote_access: true # Force accepting remote connections
    headless_server_mode: true # Set to true for API-only access, or false if the WebUI is needed
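Before exposing an instance, it can help to check that the settings listed above are consistent with each other. The sketch below is hypothetical and not part of lollms: it assumes the file contains flat `key: value` lines like the ones shown, and it uses a tiny hand-written parser instead of a real YAML library to stay self-contained.

```python
def parse_flat_settings(text: str) -> dict[str, str]:
    """Parse simple 'key: value  # comment' lines; not a general YAML parser."""
    settings = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or ":" not in line:
            continue
        key, value = line.split(":", 1)
        settings[key.strip()] = value.strip()
    return settings


def remote_access_warnings(settings: dict[str, str]) -> list[str]:
    """Flag a configuration that exposes more than the generation API."""
    warnings = []
    exposed = settings.get("host") == "0.0.0.0"
    headless = settings.get("headless_server_mode", "false").lower() == "true"
    if exposed and not headless:
        warnings.append(
            "host is 0.0.0.0 but headless_server_mode is not true: "
            "the full WebUI is reachable remotely"
        )
    return warnings


# Example input using the keys from the settings above (values are illustrative).
EXAMPLE = """\
host: 0.0.0.0 # Allow remote connections
port: 9600
force_accept_remote_access: true
headless_server_mode: false
"""
```

Here `remote_access_warnings(parse_flat_settings(EXAMPLE))` returns one warning, because the example binds to all interfaces without headless mode.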

By following these security practices, you can help protect your LoLLMs instance and its users from potential security risks when enabling remote access.

Remember, it is crucial to prioritize security and take necessary precautions to safeguard your system and sensitive information. If you have any further questions or concerns regarding the security of LoLLMs, please consult the documentation or reach out to the community for assistance.

Stay safe and enjoy using LoLLMs responsibly!

Since v18, lollms can be run using Docker. Just build the image. Assuming you are in the lollms-webui folder:

    docker build -t lollms-webui-app .

Then run it using:

    docker run -d -p 9600:9600 --name lollms-webui-container lollms-webui-app

When lollms is first run, you can use

Large Language Models are amazing tools that can be used for diverse purposes. Lollms was built to harness this power to help users enhance their productivity. But you need to keep in mind that these models have their limitations and should not replace human intelligence or creativity, but rather augment it by providing suggestions based on patterns found within large amounts of data. It is up to each individual to use them responsibly!

The performance of the system varies depending on the model used, its size, and the dataset on which it has been trained. Generally speaking, the larger a language model's training set (the more examples), the better the results compared with models trained on smaller ones. But there is still no guarantee that the output generated from any given prompt will always be perfect, and it may contain errors for various reasons. So please make sure you do not use it for serious matters like choosing medications or making financial decisions without first consulting an expert!

This repository uses code under the Apache License Version 2.0; see the license file for details about the rights granted with respect to usage and distribution.

ParisNeo 2023


Alternative AI tools for lollms-webui

Similar Open Source Tools

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

mastra

Mastra is an opinionated TypeScript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers and offers tools for building language models and workflows and for accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

SalesGPT

SalesGPT is an open-source AI agent designed for sales, utilizing context-awareness and LLMs to work across various communication channels like voice, email, and texting. It aims to enhance sales conversations by understanding the stage of the conversation and providing tools like product knowledge base to reduce errors. The agent can autonomously generate payment links, handle objections, and close sales. It also offers features like automated email communication, meeting scheduling, and integration with various LLMs for customization. SalesGPT is optimized for low latency in voice channels and ensures human supervision where necessary. The tool provides enterprise-grade security and supports LangSmith tracing for monitoring and evaluation of intelligent agents built on LLM frameworks.

onnx

Open Neural Network Exchange (ONNX) is an open ecosystem that empowers AI developers to choose the right tools as their project evolves. ONNX provides an open source format for AI models, both deep learning and traditional ML. It defines an extensible computation graph model, as well as definitions of built-in operators and standard data types. Currently, we focus on the capabilities needed for inferencing (scoring). ONNX is widely supported and can be found in many frameworks, tools, and hardware, enabling interoperability between different frameworks and streamlining the path from research to production to increase the speed of innovation in the AI community. Join us to further evolve ONNX.

Robyn

Robyn is an experimental, semi-automated and open-sourced Marketing Mix Modeling (MMM) package from Meta Marketing Science. It uses various machine learning techniques to define media channel efficiency and effectivity, explore adstock rates and saturation curves. Built for granular datasets with many independent variables, especially suitable for digital and direct response advertisers with rich data sources. Aiming to democratize MMM, make it accessible for advertisers of all sizes, and contribute to the measurement landscape.

recognize

Recognize is a smart media tagging tool for Nextcloud that automatically categorizes photos and music by recognizing faces, animals, landscapes, food, vehicles, buildings, landmarks, monuments, music genres, and human actions in videos. It uses pre-trained models for object detection, landmark recognition, face comparison, music genre classification, and video classification. The tool ensures privacy by processing images locally without sending data to cloud providers. However, it cannot process end-to-end encrypted files. Recognize is rated positively for ethical AI practices in terms of open-source software, freely available models, and training data transparency, except for music genre recognition due to limited access to training data.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

ChainForge

ChainForge is a visual programming environment for battle-testing prompts to LLMs. It is geared towards early-stage, quick-and-dirty exploration of prompts, chat responses, and response quality that goes beyond ad-hoc chatting with individual LLMs. With ChainForge, you can:

- Query multiple LLMs at once to test prompt ideas and variations quickly and effectively.

- Compare response quality across prompt permutations, across models, and across model settings to choose the best prompt and model for your use case.

- Set up evaluation metrics (scoring function) and immediately visualize results across prompts, prompt parameters, models, and model settings.

- Hold multiple conversations at once across template parameters and chat models. Template not just prompts, but follow-up chat messages, and inspect and evaluate outputs at each turn of a chat conversation.

ChainForge comes with a number of example evaluation flows to give you a sense of what's possible, including 188 example flows generated from benchmarks in OpenAI evals. This is an open beta of ChainForge. We support model providers OpenAI, HuggingFace, Anthropic, Google PaLM2, Azure OpenAI endpoints, and Dalai-hosted models Alpaca and Llama. You can change the exact model and individual model settings. Visualization nodes support numeric and boolean evaluation metrics. ChainForge is built on ReactFlow and Flask.

promptmage

PromptMage simplifies the process of creating and managing LLM workflows as a self-hosted solution. It offers an intuitive interface for prompt testing and comparison, incorporates version control features, and aims to improve productivity in both small teams and large enterprises. The tool bridges the gap in LLM workflow management, empowering developers, researchers, and organizations to make LLM technology more accessible and manageable for the next wave of AI innovations.

kitops

KitOps is a packaging and versioning system for AI/ML projects that uses open standards so it works with the AI/ML, development, and DevOps tools you are already using. KitOps simplifies the handoffs between data scientists, application developers, and SREs working with LLMs and other AI/ML models. KitOps' ModelKits are a standards-based package for models, their dependencies, configurations, and codebases. ModelKits are portable, reproducible, and work with the tools you already use.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

obsidian-Smart2Brain

Your Smart Second Brain is a free and open-source Obsidian plugin that serves as your personal assistant, powered by large language models like ChatGPT or Llama2. It can directly access and process your notes, eliminating the need for manual prompt editing, and it can operate completely offline, ensuring your data remains private and secure.

AI-Horde

The AI Horde is an enterprise-level ML-Ops crowdsourced distributed inference cluster for AI Models. This middleware can support both Image and Text generation. It is infinitely scalable and supports seamless drop-in/drop-out of compute resources. The Public version allows people without a powerful GPU to use Stable Diffusion or Large Language Models like Pygmalion/Llama by relying on spare/idle resources provided by the community and also allows non-python clients, such as games and apps, to use AI-provided generations.

For similar tasks

continue

Continue is an open-source autopilot for VS Code and JetBrains that allows you to code with any LLM. With Continue, you can ask coding questions, edit code in natural language, generate files from scratch, and more. Continue is easy to use and can help you save time and improve your coding skills.

anterion

Anterion is an open-source AI software engineer that extends the capabilities of `SWE-agent` to plan and execute open-ended engineering tasks, with a frontend inspired by `OpenDevin`. It is designed to help users fix bugs and prototype ideas with ease. Anterion is equipped with easy deployment and a user-friendly interface, making it accessible to users of all skill levels.

sglang

SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with LLMs faster and more controllable by co-designing the frontend language and the runtime system. The core features of SGLang include:

- **A Flexible Front-End Language**: This allows for easy programming of LLM applications with multiple chained generation calls, advanced prompting techniques, control flow, multiple modalities, parallelism, and external interaction.

- **A High-Performance Runtime with RadixAttention**: This feature significantly accelerates the execution of complex LLM programs by automatic KV cache reuse across multiple calls. It also supports other common techniques like continuous batching and tensor parallelism.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.

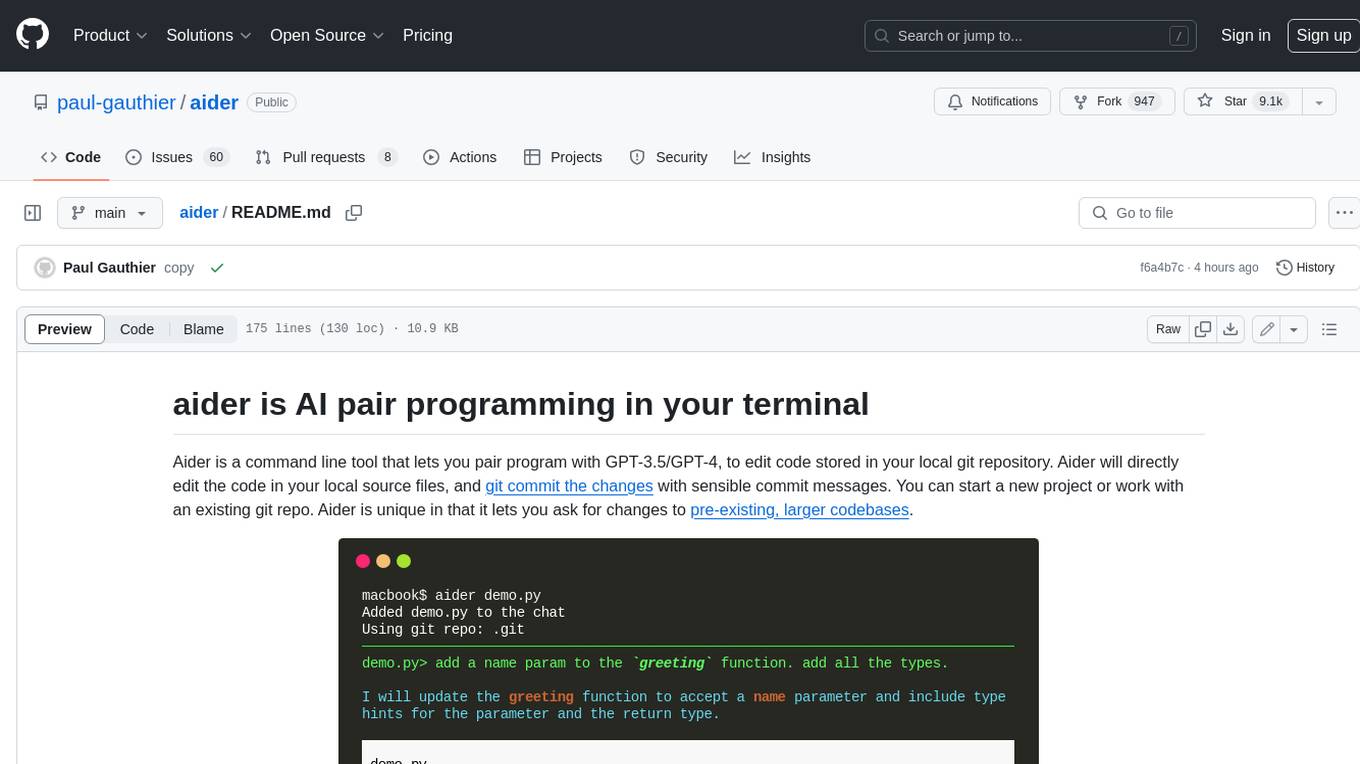

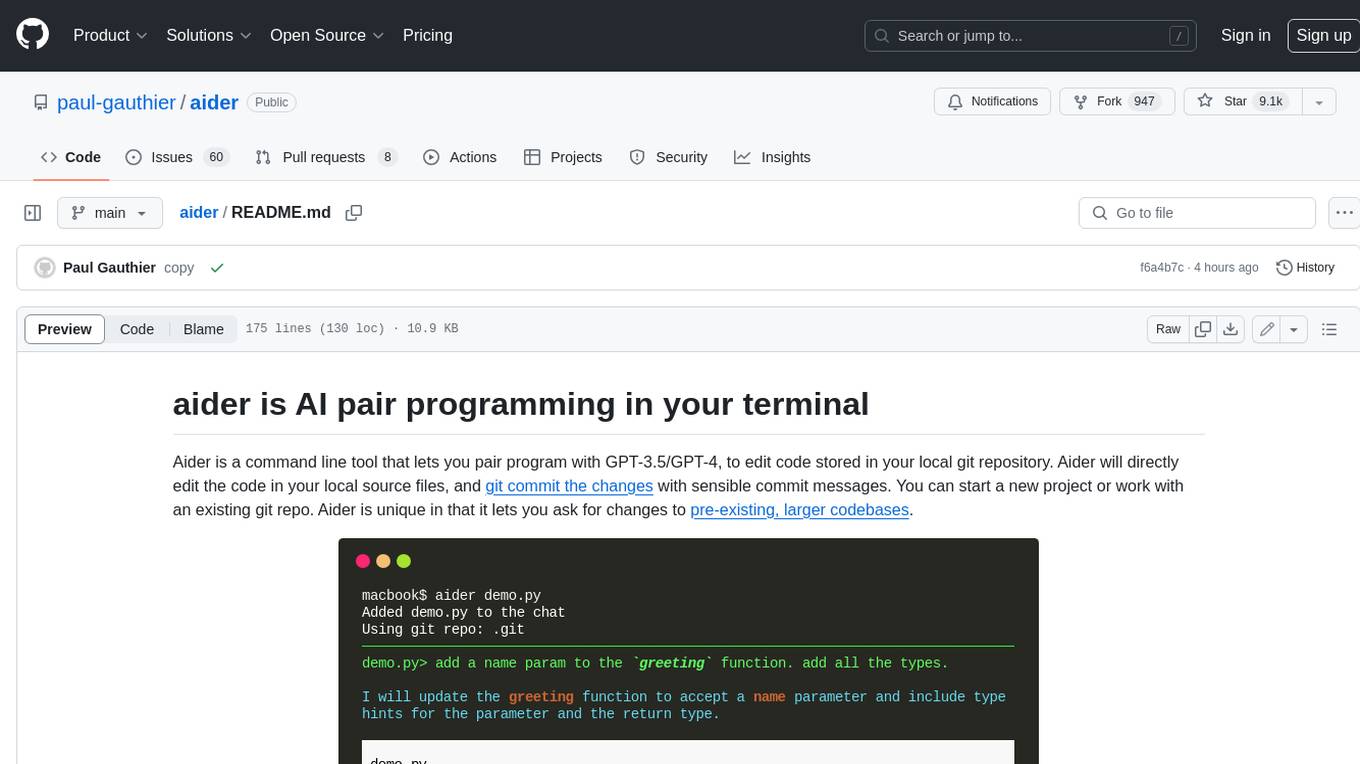

aider

Aider is a command-line tool that lets you pair program with GPT-3.5/GPT-4 to edit code stored in your local git repository. Aider will directly edit the code in your local source files and git commit the changes with sensible commit messages. You can start a new project or work with an existing git repo. Aider is unique in that it lets you ask for changes to pre-existing, larger codebases.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

For similar jobs

aider

Aider is a command-line tool that lets you pair program with GPT-3.5/GPT-4 to edit code stored in your local git repository. Aider will directly edit the code in your local source files and git commit the changes with sensible commit messages. You can start a new project or work with an existing git repo. Aider is unique in that it lets you ask for changes to pre-existing, larger codebases.

devika

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software. Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance. Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

AIlice

AIlice is a fully autonomous, general-purpose AI agent that aims to create a standalone artificial intelligence assistant, similar to JARVIS, based on the open-source LLM. AIlice achieves this goal by building a "text computer" that uses a Large Language Model (LLM) as its core processor. Currently, AIlice demonstrates proficiency in a range of tasks, including thematic research, coding, system management, literature reviews, and complex hybrid tasks that go beyond these basic capabilities. AIlice has reached near-perfect performance in everyday tasks using GPT-4 and is making strides towards practical application with the latest open-source models. We will ultimately achieve self-evolution of AI agents. That is, AI agents will autonomously build their own feature expansions and new types of agents, unleashing LLM's knowledge and reasoning capabilities into the real world seamlessly.

TeroSubtitler

Tero Subtitler is an open source, cross-platform, and free subtitle editing software with a user-friendly interface. It offers fully fledged editing with SMPTE and MEDIA modes, support for various subtitle formats, multi-level undo/redo, search and replace, auto-backup, source and transcription modes, translation memory, audiovisual preview, timeline with waveform visualizer, manipulation tools, formatting options, quality control features, translation and transcription capabilities, validation tools, automation for correcting errors, and more. It also includes features like exporting subtitles to MP3, importing/exporting Blu-ray SUP format, generating blank video, generating video with hardcoded subtitles, video dubbing, and more. The tool utilizes powerful multimedia playback engines like mpv, advanced audio/video manipulation tools like FFmpeg, tools for automatic transcription like whisper.cpp/Faster-Whisper, auto-translation API like Google Translate, and ElevenLabs TTS for video dubbing.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a matter of a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.