minio

The Object Store for AI Data Infrastructure

Stars: 46001

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

README:

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

This README provides quickstart instructions on running MinIO on bare metal hardware, including container-based installations. For Kubernetes environments, use the MinIO Kubernetes Operator.

Use the following commands to run a standalone MinIO server as a container.

Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require distributed deploying MinIO with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled - specifically, with a minimum of 4 drives per MinIO server. See MinIO Erasure Code Overview for more complete documentation.

Run the following command to run the latest stable image of MinIO as a container using an ephemeral data volume:

podman run -p 9000:9000 -p 9001:9001 \

quay.io/minio/minio server /data --console-address ":9001"The MinIO deployment starts using default root credentials minioadmin:minioadmin. You can test the deployment using the MinIO Console, an embedded

object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the

root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client mc commandline tool. See

Test using MinIO Client mc for more information on using the mc commandline tool. For application developers,

see https://min.io/docs/minio/linux/developers/minio-drivers.html to view MinIO SDKs for supported languages.

NOTE: To deploy MinIO on with persistent storage, you must map local persistent directories from the host OS to the container using the

podman -voption. For example,-v /mnt/data:/datamaps the host OS drive at/mnt/datato/dataon the container.

Use the following commands to run a standalone MinIO server on macOS.

Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require distributed deploying MinIO with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled - specifically, with a minimum of 4 drives per MinIO server. See MinIO Erasure Code Overview for more complete documentation.

Run the following command to install the latest stable MinIO package using Homebrew. Replace /data with the path to the drive or directory in which you want MinIO to store data.

brew install minio/stable/minio

minio server /dataNOTE: If you previously installed minio using

brew install miniothen it is recommended that you reinstall minio fromminio/stable/minioofficial repo instead.

brew uninstall minio

brew install minio/stable/minioThe MinIO deployment starts using default root credentials minioadmin:minioadmin. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client mc commandline tool. See Test using MinIO Client mc for more information on using the mc commandline tool. For application developers, see https://min.io/docs/minio/linux/developers/minio-drivers.html/ to view MinIO SDKs for supported languages.

Use the following command to download and run a standalone MinIO server on macOS. Replace /data with the path to the drive or directory in which you want MinIO to store data.

wget https://dl.min.io/server/minio/release/darwin-amd64/minio

chmod +x minio

./minio server /dataThe MinIO deployment starts using default root credentials minioadmin:minioadmin. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client mc commandline tool. See Test using MinIO Client mc for more information on using the mc commandline tool. For application developers, see https://min.io/docs/minio/linux/developers/minio-drivers.html to view MinIO SDKs for supported languages.

Use the following command to run a standalone MinIO server on Linux hosts running 64-bit Intel/AMD architectures. Replace /data with the path to the drive or directory in which you want MinIO to store data.

wget https://dl.min.io/server/minio/release/linux-amd64/minio

chmod +x minio

./minio server /dataThe following table lists supported architectures. Replace the wget URL with the architecture for your Linux host.

| Architecture | URL |

|---|---|

| 64-bit Intel/AMD | https://dl.min.io/server/minio/release/linux-amd64/minio |

| 64-bit ARM | https://dl.min.io/server/minio/release/linux-arm64/minio |

| 64-bit PowerPC LE (ppc64le) | https://dl.min.io/server/minio/release/linux-ppc64le/minio |

| IBM Z-Series (S390X) | https://dl.min.io/server/minio/release/linux-s390x/minio |

The MinIO deployment starts using default root credentials minioadmin:minioadmin. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client mc commandline tool. See Test using MinIO Client mc for more information on using the mc commandline tool. For application developers, see https://min.io/docs/minio/linux/developers/minio-drivers.html to view MinIO SDKs for supported languages.

NOTE: Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require distributed deploying MinIO with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled - specifically, with a minimum of 4 drives per MinIO server. See MinIO Erasure Code Overview for more complete documentation.

To run MinIO on 64-bit Windows hosts, download the MinIO executable from the following URL:

https://dl.min.io/server/minio/release/windows-amd64/minio.exeUse the following command to run a standalone MinIO server on the Windows host. Replace D:\ with the path to the drive or directory in which you want MinIO to store data. You must change the terminal or powershell directory to the location of the minio.exe executable, or add the path to that directory to the system $PATH:

minio.exe server D:\The MinIO deployment starts using default root credentials minioadmin:minioadmin. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client mc commandline tool. See Test using MinIO Client mc for more information on using the mc commandline tool. For application developers, see https://min.io/docs/minio/linux/developers/minio-drivers.html to view MinIO SDKs for supported languages.

NOTE: Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require distributed deploying MinIO with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled - specifically, with a minimum of 4 drives per MinIO server. See MinIO Erasure Code Overview for more complete documentation.

Use the following commands to compile and run a standalone MinIO server from source. Source installation is only intended for developers and advanced users. If you do not have a working Golang environment, please follow How to install Golang. Minimum version required is go1.21

go install github.com/minio/minio@latestThe MinIO deployment starts using default root credentials minioadmin:minioadmin. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client mc commandline tool. See Test using MinIO Client mc for more information on using the mc commandline tool. For application developers, see https://min.io/docs/minio/linux/developers/minio-drivers.html to view MinIO SDKs for supported languages.

NOTE: Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require distributed deploying MinIO with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled - specifically, with a minimum of 4 drives per MinIO server. See MinIO Erasure Code Overview for more complete documentation.

MinIO strongly recommends against using compiled-from-source MinIO servers for production environments.

By default MinIO uses the port 9000 to listen for incoming connections. If your platform blocks the port by default, you may need to enable access to the port.

For hosts with ufw enabled (Debian based distros), you can use ufw command to allow traffic to specific ports. Use below command to allow access to port 9000

ufw allow 9000Below command enables all incoming traffic to ports ranging from 9000 to 9010.

ufw allow 9000:9010/tcpFor hosts with firewall-cmd enabled (CentOS), you can use firewall-cmd command to allow traffic to specific ports. Use below commands to allow access to port 9000

firewall-cmd --get-active-zonesThis command gets the active zone(s). Now, apply port rules to the relevant zones returned above. For example if the zone is public, use

firewall-cmd --zone=public --add-port=9000/tcp --permanentNote that permanent makes sure the rules are persistent across firewall start, restart or reload. Finally reload the firewall for changes to take effect.

firewall-cmd --reloadFor hosts with iptables enabled (RHEL, CentOS, etc), you can use iptables command to enable all traffic coming to specific ports. Use below command to allow

access to port 9000

iptables -A INPUT -p tcp --dport 9000 -j ACCEPT

service iptables restartBelow command enables all incoming traffic to ports ranging from 9000 to 9010.

iptables -A INPUT -p tcp --dport 9000:9010 -j ACCEPT

service iptables restartMinIO Server comes with an embedded web based object browser. Point your web browser to http://127.0.0.1:9000 to ensure your server has started successfully.

NOTE: MinIO runs console on random port by default, if you wish to choose a specific port use

--console-addressto pick a specific interface and port.

MinIO redirects browser access requests to the configured server port (i.e. 127.0.0.1:9000) to the configured Console port. MinIO uses the hostname or IP address specified in the request when building the redirect URL. The URL and port must be accessible by the client for the redirection to work.

For deployments behind a load balancer, proxy, or ingress rule where the MinIO host IP address or port is not public, use the MINIO_BROWSER_REDIRECT_URL environment variable to specify the external hostname for the redirect. The LB/Proxy must have rules for directing traffic to the Console port specifically.

For example, consider a MinIO deployment behind a proxy https://minio.example.net, https://console.minio.example.net with rules for forwarding traffic on port :9000 and :9001 to MinIO and the MinIO Console respectively on the internal network. Set MINIO_BROWSER_REDIRECT_URL to https://console.minio.example.net to ensure the browser receives a valid reachable URL.

| Dashboard | Creating a bucket |

|---|---|

|

|

mc provides a modern alternative to UNIX commands like ls, cat, cp, mirror, diff etc. It supports filesystems and Amazon S3 compatible cloud storage services. Follow the MinIO Client Quickstart Guide for further instructions.

Upgrades require zero downtime in MinIO, all upgrades are non-disruptive, all transactions on MinIO are atomic. So upgrading all the servers simultaneously is the recommended way to upgrade MinIO.

NOTE: requires internet access to update directly from https://dl.min.io, optionally you can host any mirrors at https://my-artifactory.example.com/minio/

- For deployments that installed the MinIO server binary by hand, use

mc admin update

mc admin update <minio alias, e.g., myminio>-

For deployments without external internet access (e.g. airgapped environments), download the binary from https://dl.min.io and replace the existing MinIO binary let's say for example

/opt/bin/minio, apply executable permissionschmod +x /opt/bin/minioand proceed to performmc admin service restart alias/. -

For installations using Systemd MinIO service, upgrade via RPM/DEB packages parallelly on all servers or replace the binary lets say

/opt/bin/minioon all nodes, apply executable permissionschmod +x /opt/bin/minioand process to performmc admin service restart alias/.

- Test all upgrades in a lower environment (DEV, QA, UAT) before applying to production. Performing blind upgrades in production environments carries significant risk.

- Read the release notes for MinIO before performing any upgrade, there is no forced requirement to upgrade to latest release upon every release. Some release may not be relevant to your setup, avoid upgrading production environments unnecessarily.

- If you plan to use

mc admin update, MinIO process must have write access to the parent directory where the binary is present on the host system. -

mc admin updateis not supported and should be avoided in kubernetes/container environments, please upgrade containers by upgrading relevant container images. - We do not recommend upgrading one MinIO server at a time, the product is designed to support parallel upgrades please follow our recommended guidelines.

- MinIO Erasure Code Overview

- Use

mcwith MinIO Server - Use

minio-goSDK with MinIO Server - The MinIO documentation website

Please follow MinIO Contributor's Guide

- MinIO source is licensed under the GNU AGPLv3.

- MinIO documentation is licensed under CC BY 4.0.

- License Compliance

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for minio

Similar Open Source Tools

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

OSWorld

OSWorld is a benchmarking tool designed to evaluate multimodal agents for open-ended tasks in real computer environments. It provides a platform for running experiments, setting up virtual machines, and interacting with the environment using Python scripts. Users can install the tool on their desktop or server, manage dependencies with Conda, and run benchmark tasks. The tool supports actions like executing commands, checking for specific results, and evaluating agent performance. OSWorld aims to facilitate research in AI by providing a standardized environment for testing and comparing different agent baselines.

cluster-toolkit

Cluster Toolkit is an open-source software by Google Cloud for deploying AI/ML and HPC environments on Google Cloud. It allows easy deployment following best practices, with high customization and extensibility. The toolkit includes tutorials, examples, and documentation for various modules designed for AI/ML and HPC use cases.

trinityX

TrinityX is an open-source HPC, AI, and cloud platform designed to provide all services required in a modern system, with full customization options. It includes default services like Luna node provisioner, OpenLDAP, SLURM or OpenPBS, Prometheus, Grafana, OpenOndemand, and more. TrinityX also sets up NFS-shared directories, OpenHPC applications, environment modules, HA, and more. Users can install TrinityX on Enterprise Linux, configure network interfaces, set up passwordless authentication, and customize the installation using Ansible playbooks. The platform supports HA, OpenHPC integration, and provides detailed documentation for users to contribute to the project.

nx_open

The `nx_open` repository contains open-source components for the Network Optix Meta Platform, used to build products like Nx Witness Video Management System. It includes source code, specifications, and a Desktop Client. The repository is licensed under Mozilla Public License 2.0. Users can build the Desktop Client and customize it using a zip file. The build environment supports Windows, Linux, and macOS platforms with specific prerequisites. The repository provides scripts for building, signing executable files, and running the Desktop Client. Compatibility with VMS Server versions is crucial, and automatic VMS updates are disabled for the open-source Desktop Client.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

gpustack

GPUStack is an open-source GPU cluster manager designed for running large language models (LLMs). It supports a wide variety of hardware, scales with GPU inventory, offers lightweight Python package with minimal dependencies, provides OpenAI-compatible APIs, simplifies user and API key management, enables GPU metrics monitoring, and facilitates token usage and rate metrics tracking. The tool is suitable for managing GPU clusters efficiently and effectively.

holohub

Holohub is a central repository for the NVIDIA Holoscan AI sensor processing community to share reference applications, operators, tutorials, and benchmarks. It includes example applications, community components, package configurations, and tutorials. Users and developers of the Holoscan platform are invited to reuse and contribute to this repository. The repository provides detailed instructions on prerequisites, building, running applications, contributing, and glossary terms. It also offers a searchable catalog of available components on the Holoscan SDK User Guide website.

hugescm

HugeSCM is a cloud-based version control system designed to address R&D repository size issues. It effectively manages large repositories and individual large files by separating data storage and utilizing advanced algorithms and data structures. It aims for optimal performance in handling version control operations of large-scale repositories, making it suitable for single large library R&D, AI model development, and game or driver development.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

generative-ai-application-builder-on-aws

The Generative AI Application Builder on AWS (GAAB) is a solution that provides a web-based management dashboard for deploying customizable Generative AI (Gen AI) use cases. Users can experiment with and compare different combinations of Large Language Model (LLM) use cases, configure and optimize their use cases, and integrate them into their applications for production. The solution is targeted at novice to experienced users who want to experiment and productionize different Gen AI use cases. It uses LangChain open-source software to configure connections to Large Language Models (LLMs) for various use cases, with the ability to deploy chat use cases that allow querying over users' enterprise data in a chatbot-style User Interface (UI) and support custom end-user implementations through an API.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

metaflow-service

Metaflow Service is a metadata service implementation for Metaflow, providing a thin wrapper around a database to keep track of metadata associated with Flows, Runs, Steps, Tasks, and Artifacts. It includes features for managing DB migrations, launching compatible versions of the metadata service, and executing flows locally. The service can be run using Docker or as a standalone service, with options for testing and running unit/integration tests. Users can interact with the service via API endpoints or utility CLI tools.

unitycatalog

Unity Catalog is an open and interoperable catalog for data and AI, supporting multi-format tables, unstructured data, and AI assets. It offers plugin support for extensibility and interoperates with Delta Sharing protocol. The catalog is fully open with OpenAPI spec and OSS implementation, providing unified governance for data and AI with asset-level access control enforced through REST APIs.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

For similar tasks

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

oio-sds

OpenIO SDS is a software solution for object storage, targeting very large-scale unstructured data volumes.

summary-of-a-haystack

This repository contains data and code for the experiments in the SummHay paper. It includes publicly released Haystacks in conversational and news domains, along with scripts for running the pipeline, visualizing results, and benchmarking automatic evaluation. The data structure includes topics, subtopics, insights, queries, retrievers, summaries, evaluation summaries, and documents. The pipeline involves scripts for retriever scores, summaries, and evaluation scores using GPT-4o. Visualization scripts are provided for compiling and visualizing results. The repository also includes annotated samples for benchmarking and citation information for the SummHay paper.

IntelliChat

IntelliChat is an open-source AI chatbot tool designed to accelerate the integration of multiple language models into chatbot apps. Users can select their preferred AI provider and model from the UI, manage API keys, and access data using Intellinode. The tool is built with Intellinode and Next.js, and supports various AI providers such as OpenAI ChatGPT, Google Gemini, Azure Openai, Cohere Coral, Replicate, Mistral AI, Anthropic, and vLLM. It offers a user-friendly interface for developers to easily incorporate AI capabilities into their chatbot applications.

niledatabase

Nile is a serverless Postgres database designed for modern SaaS applications. It virtualizes tenants/customers/organizations into Postgres to enable native tenant data isolation, performance isolation, per-tenant backups, and tenant placement on shared or dedicated compute globally. With Nile, you can manage multiple tenants effortlessly, without complex permissions or buggy scripts. Additionally, it offers opt-in user management capabilities, customer-specific vector embeddings, and instant tenant admin dashboards. Built for the cloud, Nile provides a true serverless experience with effortless scaling.

1Panel

1Panel is an open-source, modern web-based control panel for Linux server management. It provides efficient management through a user-friendly web graphical interface, enabling users to effortlessly manage their Linux servers. Key features include host monitoring, file management, database administration, container management, rapid website deployment with WordPress integration, an application store for easy installation and updates, security and reliability through containerization and secure application deployment practices, integrated firewall management, log auditing capabilities, and one-click backup & restore functionality supporting various cloud storage solutions.

job-hunting

Job Hunting is a browser extension designed to enhance the job searching experience on popular recruitment platforms in China. It aims to improve job listing visibility, provide personalized job search capabilities, analyze job data, facilitate job discussions, and offer company insights. The extension offers features such as job card display, company reputation checks, quick company information lookup, job and company data storage, job and company tagging, data analysis, data sharing, personal job preferences, automation tasks, discussion forums, data backup and recovery, and data sharing plans. It supports platforms like BOSS 直聘, 前程无忧, 智联招聘, 拉钩网, and 猎聘网, and provides visualizations for job posting trends and company data.

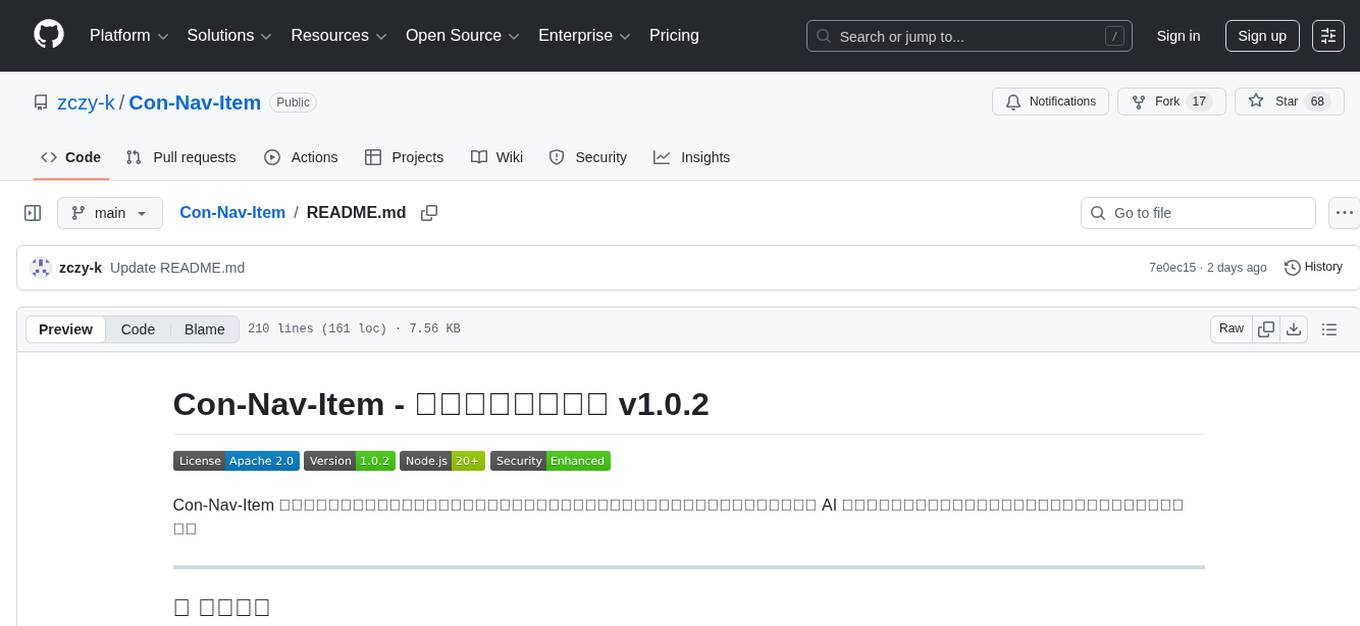

Con-Nav-Item

Con-Nav-Item is a modern personal navigation system designed for digital workers. It is not just a link bookmark but also an all-in-one workspace integrated with AI smart generation, multi-device synchronization, card-based management, and deep browser integration.

For similar jobs

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

dify-helm

Deploy langgenius/dify, an LLM based chat bot app on kubernetes with helm chart.