bolna

Conversational voice AI agents

Stars: 578

Bolna is an open-source platform for building voice-driven conversational applications using large language models (LLMs). It provides a comprehensive set of tools and integrations to handle various aspects of voice-based interactions, including telephony, transcription, LLM-based conversation handling, and text-to-speech synthesis. Bolna simplifies the process of creating voice agents that can perform tasks such as initiating phone calls, transcribing conversations, generating LLM-powered responses, and synthesizing speech. It supports multiple providers for each component, allowing users to customize their setup based on their specific needs. Bolna is designed to be easy to use, with a straightforward local setup process and well-documented APIs. It is also extensible, enabling users to integrate with other telephony providers or add custom functionality.

README:

End-to-end open-source voice agents platform: quickly build voice-first conversational assistants through JSON.

[!NOTE] We are actively looking for maintainers.

Bolna is an end-to-end, open-source, production-ready framework for quickly building LLM-based, voice-driven conversational applications.

https://github.com/bolna-ai/bolna/assets/1313096/2237f64f-1c5b-4723-b7e7-d11466e9b226

This repository contains the entire orchestration platform for building voice AI applications. It orchestrates voice conversations over websockets using a combination of different ASR, LLM, and TTS providers and models.
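To make the ASR, LLM, and TTS hand-off concrete, here is a minimal sketch of a three-stage streaming pipeline built on `asyncio.Queue`. It is not Bolna's implementation: the stage functions (`transcribe`, `respond`, `synthesize`) and `run_pipeline` are hypothetical stand-ins that only format strings, and the real platform talks to external providers over websockets.

```python
import asyncio


async def transcribe(audio_chunks, out_q):
    # Stand-in ASR stage (hypothetical): each audio chunk becomes one transcript.
    for chunk in audio_chunks:
        await out_q.put(f"transcript({chunk})")
    await out_q.put(None)  # sentinel: no more input


async def respond(in_q, out_q):
    # Stand-in LLM stage (hypothetical): one reply per transcript.
    while (text := await in_q.get()) is not None:
        await out_q.put(f"reply to {text}")
    await out_q.put(None)  # forward the sentinel downstream


async def synthesize(in_q, results):
    # Stand-in TTS stage (hypothetical): collects "audio" for each reply.
    while (text := await in_q.get()) is not None:
        results.append(f"audio[{text}]")


async def run_pipeline(audio_chunks):
    asr_to_llm = asyncio.Queue()
    llm_to_tts = asyncio.Queue()
    results = []
    # All three stages run concurrently and pass items along as they arrive.
    await asyncio.gather(
        transcribe(audio_chunks, asr_to_llm),
        respond(asr_to_llm, llm_to_tts),
        synthesize(llm_to_tts, results),
    )
    return results


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(["chunk-1", "chunk-2"])))
```

Because the stages run concurrently, a downstream stage can start working before the upstream one has finished, which is the property that matters for conversational latency.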

Bolna helps you create AI Voice Agents which can be instructed to do tasks beginning with:

- Initiating a phone call using telephony providers like Twilio, Plivo, Exotel (coming soon), Vonage (coming soon), etc.

- Transcribing the conversations using Deepgram, Azure, etc.

- Using LLMs like OpenAI, DeepSeek, Llama, Cohere, Mistral, etc. to handle conversations

- Synthesizing LLM responses back to telephony using AWS Polly, ElevenLabs, Deepgram, OpenAI, Azure, Cartesia, Smallest, etc.

The project is made up of the following components:

- Orchestration platform (this open source repository)

- Hosted APIs (https://docs.bolna.ai/api-reference/introduction) built on top of this orchestration platform [currently closed source]

- No-code UI playground at https://platform.bolna.ai/ using the hosted APIs + tailwind CSS [currently closed source]

Changes flow through these components in order:

- Any integration, enhancement or feature initially lands on this open source package since it forms the backbone of our Hosted APIs and dashboard

- Post that we expose APIs or make changes to existing APIs as required for the same

- Thirdly, we push it to the UI dashboard

graph LR;

A[Bolna open source] --> B[Hosted APIs];

B[Hosted APIs] --> C[Hosted Playground]

Refer to the docs for a deepdive into all supported providers.

A basic local setup includes usage of Twilio or Plivo for telephony. We have dockerized the setup in local_setup/. One will need to populate an environment .env file from .env.sample.

The setup consists of four containers:

- Telephony web server:

- Choosing Twilio: for initiating the calls one will need to set up a Twilio account

- Choosing Plivo: for initiating the calls one will need to set up a Plivo account

- Bolna server: for creating and handling agents

- ngrok: for tunneling. One will need to add the authtoken to ngrok-config.yml

- redis: for persisting agents & prompt data

The easiest way to get started is to use the provided script:

cd local_setup

chmod +x start.sh

./start.sh

This script will check for Docker dependencies, build all services with BuildKit enabled, and start them in detached mode.

Alternatively, you can manually build and run the services:

- Make sure you have Docker with Docker Compose V2 installed

- Enable BuildKit for faster builds:

export DOCKER_BUILDKIT=1

export COMPOSE_DOCKER_CLI_BUILD=1

- Build the images:

docker compose build

- Run the services:

docker compose up -d

To run specific services only:

docker compose up -d bolna-app twilio-app

# or

docker compose up -d bolna-app plivo-app

Once the docker containers are up, you can start creating your agents and instruct them to initiate calls.

You may try out different agents from example.bolna.dev.

You can also build and run an agent directly in Python without the local telephony setup.

Example script: examples/simple_assistant.py

import asyncio

from bolna.assistant import Assistant
from bolna.models import (
    Transcriber,
    Synthesizer,
    ElevenLabsConfig,
    LlmAgent,
    SimpleLlmAgent,
)


async def main():
    assistant = Assistant(name="demo_agent")

    # Configure audio input (ASR)
    transcriber = Transcriber(provider="deepgram", model="nova-2", stream=True, language="en")

    # Configure LLM
    llm_agent = LlmAgent(
        agent_type="simple_llm_agent",
        agent_flow_type="streaming",
        llm_config=SimpleLlmAgent(
            provider="openai",
            model="gpt-4o-mini",
            temperature=0.3,
        ),
    )

    # Configure audio output (TTS)
    synthesizer = Synthesizer(
        provider="elevenlabs",
        provider_config=ElevenLabsConfig(
            voice="George", voice_id="JBFqnCBsd6RMkjVDRZzb", model="eleven_turbo_v2_5"
        ),
        stream=True,
        audio_format="wav",
    )

    # Build a single coherent pipeline: transcriber -> llm -> synthesizer
    assistant.add_task(
        task_type="conversation",
        llm_agent=llm_agent,
        transcriber=transcriber,
        synthesizer=synthesizer,
        enable_textual_input=False,
    )

    # Stream results
    async for chunk in assistant.execute():
        print(chunk)


if __name__ == "__main__":
    asyncio.run(main())

How to run:

export OPENAI_API_KEY=...

export DEEPGRAM_AUTH_TOKEN=...

export ELEVENLABS_API_KEY=...

python examples/simple_assistant.py

This demonstrates orchestration and streaming output. For telephony, use the services in local_setup/.

Note: For REST-based usage (Agent CRUD over HTTP), see API.md in the repo root.

Expected output shape: assistant.execute() is an async generator yielding per-task result dicts (event-like chunks). The exact keys depend on configured tools/providers; treat it as a stream and process incrementally.
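As an illustration of processing such a stream incrementally, the sketch below consumes an async generator chunk by chunk. `fake_execute` is a hypothetical stand-in for `assistant.execute()` so the snippet runs without any provider keys; the dict keys it yields (`index`, `data`) are invented for the example and are not Bolna's actual output schema.

```python
import asyncio


async def fake_execute():
    # Hypothetical stand-in for assistant.execute(); the keys are invented.
    for i in range(3):
        await asyncio.sleep(0)  # yield control, as a real network stream would
        yield {"index": i, "data": f"chunk-{i}"}


async def consume(stream, handle):
    # Pass each chunk to the handler as soon as it arrives
    # instead of buffering the whole stream first.
    count = 0
    async for chunk in stream:
        handle(chunk)
        count += 1
    return count


if __name__ == "__main__":
    seen = []
    total = asyncio.run(consume(fake_execute(), seen.append))
    print(total, [c["data"] for c in seen])
```

Swapping `fake_execute()` for a real stream leaves `consume` unchanged, since it relies only on the async-iterator protocol and not on any particular keys.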

If you want a text-only flow (no transcriber/synthesizer), you can enable a text-only pipeline:

Example script: examples/text_only_assistant.py

import asyncio

from bolna.assistant import Assistant
from bolna.models import LlmAgent, SimpleLlmAgent


async def main():
    assistant = Assistant(name="text_only_agent")

    llm_agent = LlmAgent(
        agent_type="simple_llm_agent",
        agent_flow_type="streaming",
        llm_config=SimpleLlmAgent(
            provider="openai",
            model="gpt-4o-mini",
            temperature=0.2,
        ),
    )

    # No transcriber/synthesizer; enable a text-only pipeline
    assistant.add_task(
        task_type="conversation",
        llm_agent=llm_agent,
        enable_textual_input=True,
    )

    async for chunk in assistant.execute():
        print(chunk)


if __name__ == "__main__":
    asyncio.run(main())

How to run (text-only):

export OPENAI_API_KEY=...

python examples/text_only_assistant.py

Expected output shape: assistant.execute() yields streaming dicts per task step; fields vary by configuration. Handle chunk-by-chunk.

You can populate the .env file to use your own keys for providers.

ASR Providers

These are the currently supported ASR providers:

| Provider | Environment variable to be added in .env file |

|---|---|

| Deepgram | DEEPGRAM_AUTH_TOKEN |

LLM Providers

Bolna uses the LiteLLM package to support multiple LLM integrations.

These are the currently supported LLM provider families: https://github.com/bolna-ai/bolna/blob/10fa26e5985d342eedb5a8985642f12f1cf92a4b/bolna/providers.py#L30-L47

For LiteLLM-based LLMs, add any of the following to the .env file depending on your use case:

LITELLM_MODEL_API_KEY: API Key of the LLM

LITELLM_MODEL_API_BASE: URL of the hosted LLM

LITELLM_MODEL_API_VERSION: API version for LLMs like Azure

For LLMs hosted via vLLM, add the following to the .env file:

VLLM_SERVER_BASE_URL: URL of the hosted LLM using VLLM

TTS Providers

These are the currently supported TTS providers: https://github.com/bolna-ai/bolna/blob/c8a0d1428793d4df29133119e354bc2f85a7ca76/bolna/providers.py#L7-L14

| Provider | Environment variable to be added in .env file |

|---|---|

| AWS Polly | Read from system-wide credentials via ~/.aws |

| ElevenLabs | ELEVENLABS_API_KEY |

| OpenAI | OPENAI_API_KEY |

| Deepgram | DEEPGRAM_AUTH_TOKEN |

| Cartesia | CARTESIA_API_KEY |

| Smallest | SMALLEST_API_KEY |

Telephony Providers

These are the currently supported telephony providers:

| Provider | Environment variable to be added in .env file |

|---|---|

| Twilio | TWILIO_ACCOUNT_SID, TWILIO_AUTH_TOKEN, TWILIO_PHONE_NUMBER |

| Plivo | PLIVO_AUTH_ID, PLIVO_AUTH_TOKEN, PLIVO_PHONE_NUMBER |
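A quick way to catch a missing key before starting the services is to check the environment against the tables above. The following is a minimal sketch, not part of Bolna: the `REQUIRED_ENV` mapping and the `missing_env` helper are hypothetical, the lowercase provider labels are chosen for the example, and only the variable names come from the tables. AWS Polly is omitted because it reads credentials from ~/.aws rather than from .env.

```python
import os

# Variable names copied from the provider tables; the dict keys are example labels.
REQUIRED_ENV = {
    "deepgram": ["DEEPGRAM_AUTH_TOKEN"],
    "elevenlabs": ["ELEVENLABS_API_KEY"],
    "openai": ["OPENAI_API_KEY"],
    "cartesia": ["CARTESIA_API_KEY"],
    "smallest": ["SMALLEST_API_KEY"],
    "twilio": ["TWILIO_ACCOUNT_SID", "TWILIO_AUTH_TOKEN", "TWILIO_PHONE_NUMBER"],
    "plivo": ["PLIVO_AUTH_ID", "PLIVO_AUTH_TOKEN", "PLIVO_PHONE_NUMBER"],
}


def missing_env(providers, env=None):
    """Return {provider: [unset variable names]} for the chosen providers."""
    env = os.environ if env is None else env
    missing = {}
    for provider in providers:
        # A variable counts as missing when it is unset or empty.
        absent = [name for name in REQUIRED_ENV[provider] if not env.get(name)]
        if absent:
            missing[provider] = absent
    return missing


if __name__ == "__main__":
    problems = missing_env(["deepgram", "openai", "elevenlabs", "twilio"])
    for provider, names in problems.items():
        print(f"{provider}: missing {', '.join(names)}")
```

Passing an explicit `env` dict instead of relying on `os.environ` keeps the helper easy to test.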

We have in the past tried to maintain both the open source and the hosted solution (via APIs and a UI dashboard).

Our maintenance of this repository has fluctuated purely because of time constraints, not a lack of interest.

Currently, we are continuing to maintain it for the community and to improve the adoption of Voice AI.

Though the repository is completely open source, you can connect with us if interested in managed hosted offerings or more customized solutions.

If you wish to extend Bolna with another telephony provider such as Vonage, Telnyx, etc., follow the guidelines below:

- Make sure bi-directional streaming is supported by the Telephony provider

- Add the telephony-specific input handler file in input_handlers/telephony_providers writing custom functions extending from the telephony.py class

- This file will mainly contain how different types of event packets are being ingested from the telephony provider

- Add telephony-specific output handler file in output_handlers/telephony_providers writing custom functions extending from the telephony.py class

- This mainly concerns converting audio from the synthesizer class to a supported audio format and streaming it over the websocket provided by the telephony provider

- Lastly, you'll have to write a dedicated server like the example twilio_api_server.py provided in local_setup to initiate calls over websockets.
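As a rough illustration of the output-handler step, the sketch below splits synthesized audio into base64-encoded JSON messages of the kind Twilio's Media Streams websocket accepts. This is not Bolna's handler code: `frame_audio_for_twilio` is a hypothetical helper, the message layout (`event`, `streamSid`, `media.payload`) follows Twilio's Media Streams format as I understand it and should be checked against Twilio's documentation, and the input is assumed to already be 8 kHz mu-law audio. Other providers use their own framing.

```python
import base64
import json


def frame_audio_for_twilio(audio: bytes, stream_sid: str, chunk_size: int = 160):
    """Yield JSON 'media' messages carrying base64-encoded audio chunks.

    Assumes `audio` is already 8 kHz mu-law, where 160 bytes is about 20 ms.
    """
    for start in range(0, len(audio), chunk_size):
        chunk = audio[start:start + chunk_size]
        payload = base64.b64encode(chunk).decode("ascii")
        yield json.dumps(
            {
                "event": "media",
                "streamSid": stream_sid,
                "media": {"payload": payload},
            }
        )


if __name__ == "__main__":
    # 400 bytes of silence-like data splits into chunks of 160, 160, and 80 bytes.
    for message in frame_audio_for_twilio(b"\x00" * 400, "MZ123"):
        print(len(message))
```

Each yielded string would then be sent over the provider's websocket. A real handler also has to convert the synthesizer's output into the provider's expected encoding, which is left out here.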

We love all types of contributions, big or small, that help improve this community resource.

- There are a number of open issues which can be good ones to start with

- If you have suggestions for enhancements, wish to contribute a simple fix such as correcting a typo, or want to address an apparent bug, please feel free to initiate a new issue or submit a pull request

- If you're contemplating a larger change or addition to this repository, be it in terms of its structure or the features, kindly begin by opening a new issue and outlining your proposed changes. This will allow us to engage in a discussion before you dedicate a significant amount of time or effort. Your cooperation and understanding are appreciated.


Alternative AI tools for bolna

Similar Open Source Tools


web-llm

WebLLM is a modular and customizable JavaScript package that brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. We can bring a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

jina

Jina is a tool that allows users to build multimodal AI services and pipelines using cloud-native technologies. It provides a Pythonic experience for serving ML models and transitioning from local deployment to advanced orchestration frameworks like Docker-Compose, Kubernetes, or Jina AI Cloud. Users can build and serve models for any data type and deep learning framework, design high-performance services with easy scaling, serve LLM models while streaming their output, integrate with Docker containers via Executor Hub, and host on CPU/GPU using Jina AI Cloud. Jina also offers advanced orchestration and scaling capabilities, a smooth transition to the cloud, and easy scalability and concurrency features for applications. Users can deploy to their own cloud or system with Kubernetes and Docker Compose integration, and even deploy to JCloud for autoscaling and monitoring.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

storm

STORM is an LLM system that writes Wikipedia-like articles from scratch based on Internet search. While the system cannot produce publication-ready articles that often require a significant number of edits, experienced Wikipedia editors have found it helpful in their pre-writing stage. **Try out our [live research preview](https://storm.genie.stanford.edu/) to see how STORM can help your knowledge exploration journey and please provide feedback to help us improve the system 🙏!**

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

fabrice-ai

A lightweight, functional, and composable framework for building AI agents that work together to solve complex tasks. Built with TypeScript and designed to be serverless-ready. Fabrice embraces functional programming principles, remains stateless, and stays focused on composability. It provides core concepts like easy teamwork creation, infrastructure-agnosticism, statelessness, and includes all tools and features needed to build AI teams. Agents are specialized workers with specific roles and capabilities, able to call tools and complete tasks. Workflows define how agents collaborate to achieve a goal, with workflow states representing the current state of the workflow. Providers handle requests to the LLM and responses. Tools extend agent capabilities by providing concrete actions they can perform. Execution involves running the workflow to completion, with options for custom execution and BDD testing.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

For similar tasks


Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

aiogoogle

Aiogoogle is an asynchronous Google API client that allows users to access various Google public APIs such as Google Calendar, Drive, Contacts, Gmail, Maps, Youtube, Translate, Sheets, Docs, Analytics, Books, Fitness, Genomics, Cloud Storage, Kubernetes Engine, and more. It simplifies the process of interacting with Google APIs by providing async capabilities.

J.A.R.V.I.S

J.A.R.V.I.S. is an offline large language model fine-tuned on custom and open datasets to mimic Jarvis's dialog with Stark. It prioritizes privacy by running locally and excels in responding like Jarvis with a similar tone. Current features include time/date queries, web searches, playing YouTube videos, and webcam image descriptions. Users can interact with Jarvis via command line after installing the model locally using Ollama. Future plans involve voice cloning, voice-to-text input, and deploying the voice model as an API.

aioaws

Aioaws is an asyncio SDK for some AWS services, providing clean, secure, and easily debuggable access to services like S3, SES, and SNS. It is written from scratch without dependencies on boto or boto3, formatted with black, and includes complete type hints. The library supports various functionalities such as listing, deleting, and generating signed URLs for S3 files, sending emails with attachments and multipart content via SES, and receiving notifications about mail delivery from SES. It also offers AWS Signature Version 4 authentication and has minimal dependencies like aiofiles, cryptography, httpx, and pydantic.

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

J.A.R.V.I.S.

J.A.R.V.I.S.1.0 is an advanced virtual assistant tool designed to assist users in various tasks. It provides a wide range of functionalities including voice commands, task automation, information retrieval, and communication management. With its intuitive interface and powerful capabilities, J.A.R.V.I.S.1.0 aims to enhance productivity and streamline daily activities for users.

CogAgent

CogAgent is an advanced intelligent agent model designed for automating operations on graphical interfaces across various computing devices. It supports platforms like Windows, macOS, and Android, enabling users to issue commands, capture device screenshots, and perform automated operations. The model requires a minimum of 29GB of GPU memory for inference at BF16 precision and offers capabilities for executing tasks like sending Christmas greetings and sending emails. Users can interact with the model by providing task descriptions, platform specifications, and desired output formats.

For similar jobs


claim-ai-phone-bot

AI-powered call center solution with Azure and OpenAI GPT. The bot can answer calls, understand the customer's request, and provide relevant information or assistance. It can also create a todo list of tasks to complete the claim, and send a report after the call. The bot is customizable, and can be used in multiple languages.

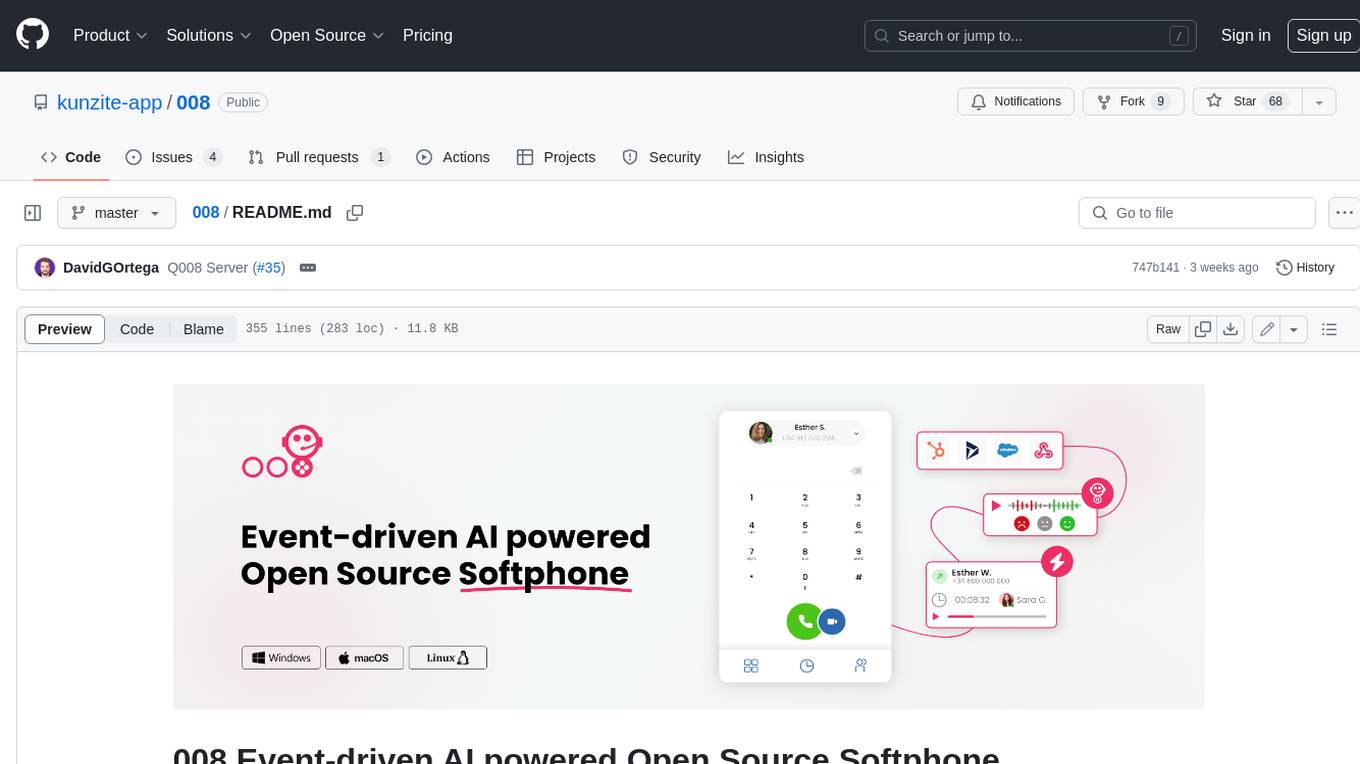

008

008 is an open-source event-driven AI powered WebRTC Softphone compatible with macOS, Windows, and Linux. It is also accessible on the web. The name '008' or 'agent 008' reflects our ambition: beyond crafting the premier Open Source Softphone, we aim to introduce a programmable, event-driven AI agent. This agent utilizes embedded artificial intelligence models operating directly on the softphone, ensuring efficiency and reduced operational costs.

call-center-ai

Call Center AI is an AI-powered call center solution that leverages Azure and OpenAI GPT. It is a proof of concept demonstrating the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI to build an automated call center solution. The project showcases features like accessing claims on a public website, customer conversation history, language change during conversation, bot interaction via phone number, multiple voice tones, lexicon understanding, todo list creation, customizable prompts, content filtering, GPT-4 Turbo for customer requests, specific data schema for claims, documentation database access, SMS report sending, conversation resumption, and more. The system architecture includes components like RAG AI Search, SMS gateway, call gateway, moderation, Cosmos DB, event broker, GPT-4 Turbo, Redis cache, translation service, and more. The tool can be deployed remotely using GitHub Actions and locally with prerequisites like Azure environment setup, configuration file creation, and resource hosting. Advanced usage includes custom training data with AI Search, prompt customization, language customization, moderation level customization, claim data schema customization, OpenAI compatible model usage for the LLM, and Twilio integration for SMS.

air724ug-forwarder

Air724UG forwarder is a tool designed to forward SMS, notify incoming calls, and manage voice messages. It provides a convenient way to handle communication tasks on Air724UG devices. The tool streamlines the process of receiving and managing messages, ensuring users stay connected and informed.

Callytics

Callytics is an advanced call analytics solution that leverages speech recognition and large language models (LLMs) technologies to analyze phone conversations from customer service and call centers. By processing both the audio and text of each call, it provides insights such as sentiment analysis, topic detection, conflict detection, profanity word detection, and summary. These cutting-edge techniques help businesses optimize customer interactions, identify areas for improvement, and enhance overall service quality. When an audio file is placed in the .data/input directory, the entire pipeline automatically starts running, and the resulting data is inserted into the database. This is only a v1.1.0 version; many new features will be added, models will be fine-tuned or trained from scratch, and various optimization efforts will be applied.

DAMO-ConvAI

DAMO-ConvAI is the official repository for Alibaba DAMO Conversational AI. It contains the codebase for various conversational AI models and tools developed by Alibaba Research. These models and tools cover a wide range of tasks, including natural language understanding, natural language generation, dialogue management, and knowledge graph construction. DAMO-ConvAI is released under the MIT license and is available for use by researchers and developers in the field of conversational AI.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.