hugescm

HugeSCM - A next generation cloud-based version control system


HugeSCM is a cloud-based version control system designed to address R&D repository size issues. It effectively manages large repositories and individual large files by separating data storage and utilizing advanced algorithms and data structures. It aims for optimal performance in handling version control operations of large-scale repositories, making it suitable for single large library R&D, AI model development, and game or driver development.

README:

HugeSCM (codename zeta) is a cloud-based, next-gen version control system addressing R&D repository size issues. It effectively manages both large repositories and individual large files, overcoming limitations of traditional centralized (like Subversion) and distributed systems (like Git) concerning storage and transmission. Designed for modern R&D needs, HugeSCM separates data storage: it keeps directory structures and commit records in a distributed database, while file content resides in a distributed file system or object storage. Unlike attempts to adapt Git to OSS, HugeSCM aims for optimal performance by avoiding common pitfalls. It's particularly suited for single large library R&D, AI model development, and game or driver development.

HugeSCM mainly solves the repository scale problem in the following ways:

- Data separation principle: HugeSCM divides version control data into metadata and file data and stores each according to a different strategy, which removes the storage ceiling of a single machine.

- Efficient transmission protocol: HugeSCM's transmission protocol is optimized to reduce the time and bandwidth spent on data transfer, so version control operations on large-scale repositories stay fast and reliable.

- Advanced algorithms and data structures: HugeSCM organizes and manages repository data with algorithms and data structures chosen for the storage and retrieval requirements of large-scale repositories. HugeSCM introduces fragments objects to solve the scale problem of a single file. This means that in addition to storing source code, HugeSCM can also conveniently store binary data, AI models, binary dependencies, and so on.

Through the above strategies and technologies, HugeSCM can effectively solve the repository scale problem and provide high-performance, reliable and flexible version control services.
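The data separation and fragments ideas above can be illustrated with a small Python sketch. It is not HugeSCM's actual object format (object-format.md describes that); the tiny fragment size, the SHA-256 keys, and the `BlobStore`, `store_file`, and `load_file` names are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class BlobStore:
    """Hypothetical stand-in for object storage: content-addressed byte blobs."""
    blobs: dict = field(default_factory=dict)

    def put(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()
        self.blobs[key] = data  # identical content is stored only once
        return key

    def get(self, key: str) -> bytes:
        return self.blobs[key]


def store_file(store: BlobStore, data: bytes, fragment_size: int) -> dict:
    """Split data into fixed-size fragments and return a small metadata record."""
    keys = [
        store.put(data[i:i + fragment_size])
        for i in range(0, len(data), fragment_size)
    ]
    # Only this record would go to the metadata side; the bytes stay in the store.
    return {"size": len(data), "fragments": keys}


def load_file(store: BlobStore, record: dict) -> bytes:
    """Reassemble a file from the fragment keys listed in its metadata record."""
    return b"".join(store.get(key) for key in record["fragments"])


store = BlobStore()
record = store_file(store, b"abcdabcdxy", fragment_size=4)
restored = load_file(store, record)
```

The metadata record stays small no matter how large the file is, which is the point of keeping the two kinds of data apart: the record can live in a database while the fragments go to whatever bulk storage is available.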

It draws on Git's experience and gets rid of Git's historical baggage. In short, we are grateful to these predecessors.

Object format: object-format.md

Transport Protocol: protocol.md

After installing the latest version of Go, developers can build the HugeSCM client using either make or bali (a build and packaging tool).

bali -T windows

# create rpm,deb,tar,sh pack

bali -T linux -A amd64 --pack='rpm,deb,tar,sh'

The bali build tool can create zip, deb, tar, rpm, and sh (STGZ) compression/installation packages.

We have written an Inno Setup package script. You can use Docker + wine to generate an installation package without Windows. You can run amake/innosetup to make an Inno Setup installation package:

docker run --rm -i -v "$TOPLEVEL:/work" amake/innosetup xxxxx.iss

This generates the installation package. Before running it, run bali --target=windows --arch=amd64 to build the Windows platform binary.

Note: On a macOS machine with an Apple Silicon chip, you can use OrbStack with Rosetta enabled to run the image and make a Windows installation package.

Users can run zeta -h to view all zeta commands, and run zeta ${command} -h to view detailed command help. We try to make it easy for git users to get started with zeta, and we will also enhance some commands. For example, many zeta commands support --json to format the output as json, which is convenient for integration with various tools.

zeta config --global user.email '[email protected]'

zeta config --global user.name 'Example User'

In git, the process of obtaining a remote repository is called clone (or fetch). In zeta, we use checkout, abbreviated as co. Below is how to check out a repository:

zeta co http://zeta.example.io/group/repo xh1

zeta co http://zeta.example.io/group/repo xh1 -s dir1

We have implemented git-like status, add, and commit commands; they are usable except in interactive mode. Use -h for help. On properly configured systems, zeta displays the corresponding language version.

echo "hello world" > helloworld.txt

zeta add helloworld.txt

zeta commit -m "Hello world"

zeta push

zeta pull

The zeta client supports three acceleration types (direct, dragonfly, and aria2), configurable via core.accelerator or the ZETA_CORE_ACCELERATOR environment variable.

| accelerator | mechanism | remark |
|---|---|---|
| direct | The zeta client directly retrieves the signature address from OSS, bypassing the Zeta Server. | This mechanism is applicable in AI scenarios. After OSS downloads with signatures, speed is adequate. Without an accelerator, download speed may be suboptimal. Users should enable direct connection unless the OSS signature URL is inaccessible. |
| dragonfly | Use the dragonfly client dfget to download, leveraging dragonfly cluster capabilities. | You can use ZETA_EXTENSION_DRAGONFLY_GET to specify the dfget path instead of using the dfget in PATH. |
| aria2 | Use the aria2c command line to download; aria2 is a well-known download tool in the industry. | You can use ZETA_EXTENSION_ARIA2C to specify the aria2c path instead of using the aria2c in PATH. |
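The settings in the table can be read by a wrapper script along the following lines. This is only a sketch: the function names are hypothetical, and the precedence (environment variable over core.accelerator) is an assumption rather than documented zeta behavior. The variable names and helper binaries are the ones listed above.

```python
import shutil

VALID_ACCELERATORS = {"direct", "dragonfly", "aria2"}

# For each accelerator that needs an external tool: the environment variable
# that overrides its path, and the binary searched for in PATH otherwise.
HELPERS = {
    "dragonfly": ("ZETA_EXTENSION_DRAGONFLY_GET", "dfget"),
    "aria2": ("ZETA_EXTENSION_ARIA2C", "aria2c"),
}


def resolve_accelerator(env: dict, config: dict):
    """Return the configured accelerator name, or None when none is set.

    Assumption: the environment variable wins over core.accelerator.
    """
    name = env.get("ZETA_CORE_ACCELERATOR") or config.get("core.accelerator")
    if not name:
        return None
    if name not in VALID_ACCELERATORS:
        raise ValueError(f"unknown accelerator: {name!r}")
    return name


def resolve_helper(name: str, env: dict):
    """Return the helper binary path for an accelerator, or None if it needs none."""
    if name not in HELPERS:
        return None  # "direct" downloads without an external tool
    override_var, binary = HELPERS[name]
    return env.get(override_var) or shutil.which(binary)
```

In a real script, `env` would typically be `os.environ`, and `config` would hold values read from `zeta config`.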

# oss direct

zeta config --global core.accelerator direct

By default, parallel download is disabled, regardless of whether an accelerator is in use. Users can configure core.concurrenttransfers (environment variable ZETA_CORE_CONCURRENT_TRANSFERS) to set the number of concurrent downloads; valid values range from 1 to 50. Even when enabled, the benefit of concurrent downloads may not be very noticeable, typically because bandwidth is the limiting factor.
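As an illustration of the 1 to 50 range, the sketch below validates the setting and uses it to size a thread pool. The function names are hypothetical, and two behaviors are assumptions: the environment variable taking precedence over the config key, and out-of-range values being rejected rather than clamped.

```python
from concurrent.futures import ThreadPoolExecutor


def concurrent_transfers(env: dict, config: dict) -> int:
    """Return the number of parallel downloads; 1 means parallelism stays off."""
    raw = env.get("ZETA_CORE_CONCURRENT_TRANSFERS") or config.get("core.concurrenttransfers")
    if raw is None:
        return 1  # setting absent: keep the default, non-parallel behavior
    value = int(raw)
    if not 1 <= value <= 50:
        raise ValueError(f"concurrent transfers must be between 1 and 50, got {value}")
    return value


def fetch_all(oids, fetch, workers: int) -> list:
    """Fetch every object with at most `workers` downloads in flight."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the same order as the input ids
        return list(pool.map(fetch, oids))
```

Because every worker shares the same network link, raising the worker count mostly helps when per-request latency dominates rather than raw bandwidth, which matches the caveat above.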

Previously, downloading a model file from a 100GB repository using Git LFS could require over 300GB of storage space, including 100GB for the model files, 100GB for split files, and 100GB for Git LFS objects. This often resulted in download failures due to insufficient disk space. With the introduction of the zeta co url --one mechanism in Zeta, we can check out a file and immediately delete the corresponding blob object, allowing a 100GB model file to ultimately occupy just a bit over 100GB, saving over 60% of space.

zeta co http://zeta.example.io/zeta-poc-test/zeta-poc-test --one

We added a Size field to the TreeEntry in our object model design, allowing us to easily implement an API to estimate the storage space required for checking out a model repository. This, combined with a one-by-one checkout mechanism, effectively saves disk space.
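To show why a per-entry size makes such an estimate cheap, here is a minimal sketch. The `TreeEntry` class below is a simplified, hypothetical stand-in (only the name and size mirror the text; the real layout is described in object-format.md), and `checkout_size` is an illustrative helper, not a zeta API.

```python
from dataclasses import dataclass, field


@dataclass
class TreeEntry:
    """Simplified, hypothetical tree entry used only for this illustration."""
    name: str
    size: int = 0                                  # bytes; used for files
    children: list = field(default_factory=list)   # sub-entries; non-empty for directories


def checkout_size(entry: TreeEntry) -> int:
    """Sum the sizes of all files under entry without reading any file content."""
    if entry.children:
        return sum(checkout_size(child) for child in entry.children)
    return entry.size


root = TreeEntry("repo", children=[
    TreeEntry("README.md", size=2_000),
    TreeEntry("weights", children=[
        TreeEntry("part-1.bin", size=5_000_000),
        TreeEntry("part-2.bin", size=3_000_000),
    ]),
])
required = checkout_size(root)
```

The estimate needs only tree metadata, so it can be computed before any blob is downloaded and compared against the free space reported by, for example, `shutil.disk_usage(path).free`.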

Initially, zeta only supported downloading objects related to specific commits and allowed the deletion of related blobs after checkout. However, user scenarios turned out to be complex. We introduced automatic downloading of missing objects for zeta cat. If you want to disable this feature, set the environment variable ZETA_CORE_PROMISOR=0. Additionally, we've implemented automatic downloading of missing objects for merge scenarios as well.
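The on-demand behavior described above can be pictured as a local object store that falls back to the remote on a miss. The sketch below is an assumption-laden illustration, not zeta's implementation: `LazyObjectStore` and `fetch_remote` are hypothetical names, and treating only the exact value `0` as "disabled" is a guess about how the variable is interpreted.

```python
class LazyObjectStore:
    """Local object cache that can fetch missing objects from a remote on demand."""

    def __init__(self, fetch_remote, env: dict):
        self.local = {}
        self.fetch_remote = fetch_remote
        # Assumption: lazy fetching is on unless ZETA_CORE_PROMISOR is exactly "0".
        self.promisor = env.get("ZETA_CORE_PROMISOR", "1") != "0"

    def read(self, oid: str) -> bytes:
        if oid not in self.local:
            if not self.promisor:
                raise KeyError(f"object {oid} is missing locally and lazy fetch is disabled")
            self.local[oid] = self.fetch_remote(oid)  # download once, then serve locally
        return self.local[oid]
```

A command such as cat or merge would then call `read` for each object it needs and never has to know whether the object was already present.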

In zeta, you can checkout a single file by adding --limit=0 during the checkout process, which excludes all files except empty ones. Then, use zeta checkout -- path to check out the specific file.

zeta co http://zeta.example.io/zeta-poc-test/zeta-poc-test --limit=0 z2

zeta checkout -- dev6.bin

Some users may only want to modify specific files. They can check out just the desired file with the single-file checkout, make the modifications, and then commit and push:

zeta add test1/2.txt

zeta commit -m "XXX"

zeta push

zeta-mc https://github.com/antgroup/hugescm.git hugescm-dev

The hot command is a Git repository governance tool that we integrated into HugeSCM, supporting many scenarios:

- If password credentials and other sensitive information were mistakenly committed to a Git repository, you can use `hot remove` to delete them and rewrite the history, with `hot remove` being faster for rewriting.

- You can use `hot mc` to migrate the object format of the Git repository to `SHA256`, or migrate from `SHA256` back to `SHA1`.

- You can check for large files in the repository by using `hot size` (original size) or `hot az` (approximate compressed size), and you can perform interactive operations directly with `hot smart` (e.g. `hot smart -L20m`).

- If there are too many invalid branches and tags in the repository, you can delete them using `hot prune-refs` (prefix matching) or `hot expire-refs` (by expiration time, whether to merge), and you can also use `hot scan-refs` to check the status of branches.

- You can linearize the history of the repository using `hot unbranch`, which means there will be no merge points included.

- You can also create an orphan branch based on a specific version using `hot unbranch -K1 master -Tnew-branch`, which preserves the recent history and can be used for open-source purposes or resetting history scenarios.

- You can view files in the repository with `hot cat`, which includes `commit/tree/tag/blob`, where `commit/tree/tag` can be output as JSON using `--json`, and `blob` can intelligently output binary files in hexadecimal.
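Since `--json` exists to ease tool integration, a script can consume that output with a helper like the one below. This is a generic sketch: `run_json` is a hypothetical name, and nothing here assumes particular field names in the JSON that hot or zeta emit.

```python
import json
import subprocess


def run_json(argv):
    """Run a command and parse its standard output as JSON.

    Raises subprocess.CalledProcessError if the command exits with an error,
    and json.JSONDecodeError if the output is not valid JSON.
    """
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)
```

For instance, `run_json(["hot", "cat", "HEAD", "--json"])` would return the parsed commit, assuming `hot` is on PATH, the current directory is a Git repository, and the flag is accepted in that position. The shape of the result is not documented here, so inspect it before relying on specific keys.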

Here's some more help information:

Usage: hot <command> [flags]

hot - Git repositories maintenance tool

Flags:

-h, --help Show context-sensitive help

-V, --verbose Make the operation more talkative

-v, --version Show version number and quit

--debug Enable debug mode; analyze timing

Commands:

cat Provide contents or details of repository objects

stat View repository status

size Show repositories size and large files

remove Remove files in repository and rewrite history

smart Interactive mode to clean repository large files

graft Interactive mode to clean repository large files (Grafting mode)

mc Migrate a repository to the specified object format

unbranch Linearize repository history

prune-refs Prune refs by prefix

scan-refs Scan references in a local repository

expire-refs Clean up expired references

snapshot Create a snapshot commit for the worktree

az Analyze repository large files

co EXPERIMENTAL: Clones a repository into a newly created directory

Run "hot <command> --help" for more information on a command.

For example, if you want to view an image in the repository, you can do this:

hot cat HEAD:docs/images/blob.png

For example, if you want to view the repository information, you can do this:

hot stat

Migrate Git repository object format from SHA1 to SHA256:

hot mc https://github.com/antgroup/hugescm.git

Apache License Version 2.0, see LICENSE


Similar Open Source Tools

hugescm

HugeSCM is a cloud-based version control system designed to address R&D repository size issues. It effectively manages large repositories and individual large files by separating data storage and utilizing advanced algorithms and data structures. It aims for optimal performance in handling version control operations of large-scale repositories, making it suitable for single large library R&D, AI model development, and game or driver development.

unstructured

The `unstructured` library provides open-source components for ingesting and pre-processing images and text documents, such as PDFs, HTML, Word docs, and many more. The use cases of `unstructured` revolve around streamlining and optimizing the data processing workflow for LLMs. `unstructured` modular functions and connectors form a cohesive system that simplifies data ingestion and pre-processing, making it adaptable to different platforms and efficient in transforming unstructured data into structured outputs.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

unitycatalog

Unity Catalog is an open and interoperable catalog for data and AI, supporting multi-format tables, unstructured data, and AI assets. It offers plugin support for extensibility and interoperates with Delta Sharing protocol. The catalog is fully open with OpenAPI spec and OSS implementation, providing unified governance for data and AI with asset-level access control enforced through REST APIs.

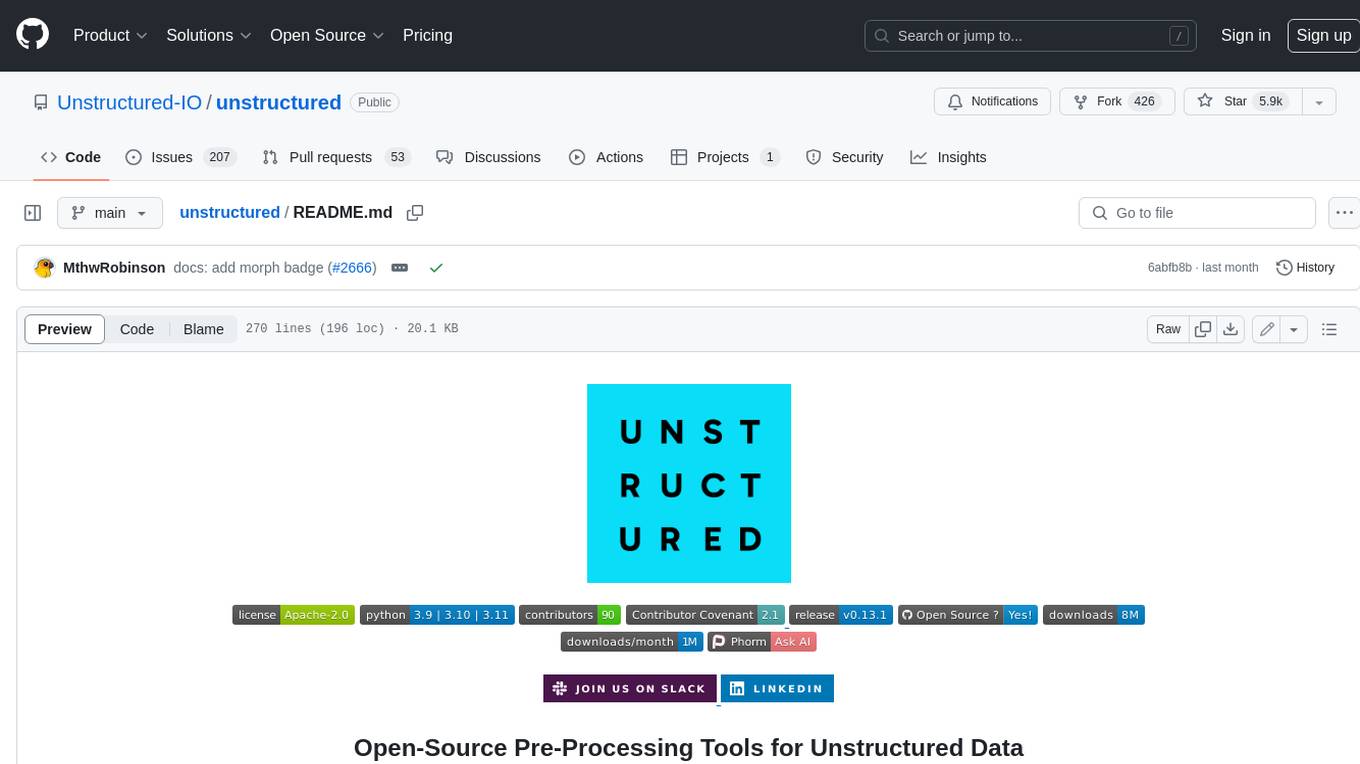

open-deep-research

Open Deep Research is an open-source project that serves as a clone of Open AI's Deep Research experiment. It utilizes Firecrawl's extract and search method along with a reasoning model to conduct in-depth research on the web. The project features Firecrawl Search + Extract, real-time data feeding to AI via search, structured data extraction from multiple websites, Next.js App Router for advanced routing, React Server Components and Server Actions for server-side rendering, AI SDK for generating text and structured objects, support for various model providers, styling with Tailwind CSS, data persistence with Vercel Postgres and Blob, and simple and secure authentication with NextAuth.js.

safety-tooling

This repository, safety-tooling, is designed to be shared across various AI Safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. The aim is to facilitate collaboration among AI Safety researchers, especially those with limited software engineering backgrounds, by offering a platform for contributing to a larger codebase. The repo can be used as a git submodule for easy collaboration and updates. It also supports pip installation for convenience. The repository includes features for installation, secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

0chain

Züs is a high-performance cloud on a fast blockchain offering privacy and configurable uptime. It uses erasure code to distribute data between data and parity servers, allowing flexibility for IT managers to design for security and uptime. Users can easily share encrypted data with business partners through a proxy key sharing protocol. The ecosystem includes apps like Blimp for cloud migration, Vult for personal cloud storage, and Chalk for NFT artists. Other apps include Bolt for secure wallet and staking, Atlus for blockchain explorer, and Chimney for network participation. The QoS protocol challenges providers based on response time, while the privacy protocol enables secure data sharing. Züs supports hybrid and multi-cloud architectures, allowing users to improve regulatory compliance and security requirements.

admet_ai

ADMET-AI is a platform for ADMET prediction using Chemprop-RDKit models trained on ADMET datasets from the Therapeutics Data Commons. It offers command line, Python API, and web server interfaces for making ADMET predictions on new molecules. The platform can be easily installed using pip and supports GPU acceleration. It also provides options for processing TDC data, plotting results, and hosting a web server. ADMET-AI is a machine learning platform for evaluating large-scale chemical libraries.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

PolyMind

PolyMind is a multimodal, function calling powered LLM webui designed for various tasks such as internet searching, image generation, port scanning, Wolfram Alpha integration, Python interpretation, and semantic search. It offers a plugin system for adding extra functions and supports different models and endpoints. The tool allows users to interact via function calling and provides features like image input, image generation, and text file search. The application's configuration is stored in a `config.json` file with options for backend selection, compatibility mode, IP address settings, API key, and enabled features.

dlio_benchmark

DLIO is an I/O benchmark tool designed for Deep Learning applications. It emulates modern deep learning applications using Benchmark Runner, Data Generator, Format Handler, and I/O Profiler modules. Users can configure various I/O patterns, data loaders, data formats, datasets, and parameters. The tool is aimed at emulating the I/O behavior of deep learning applications and provides a modular design for flexibility and customization.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

For similar tasks

hugescm

HugeSCM is a cloud-based version control system designed to address R&D repository size issues. It effectively manages large repositories and individual large files by separating data storage and utilizing advanced algorithms and data structures. It aims for optimal performance in handling version control operations of large-scale repositories, making it suitable for single large library R&D, AI model development, and game or driver development.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

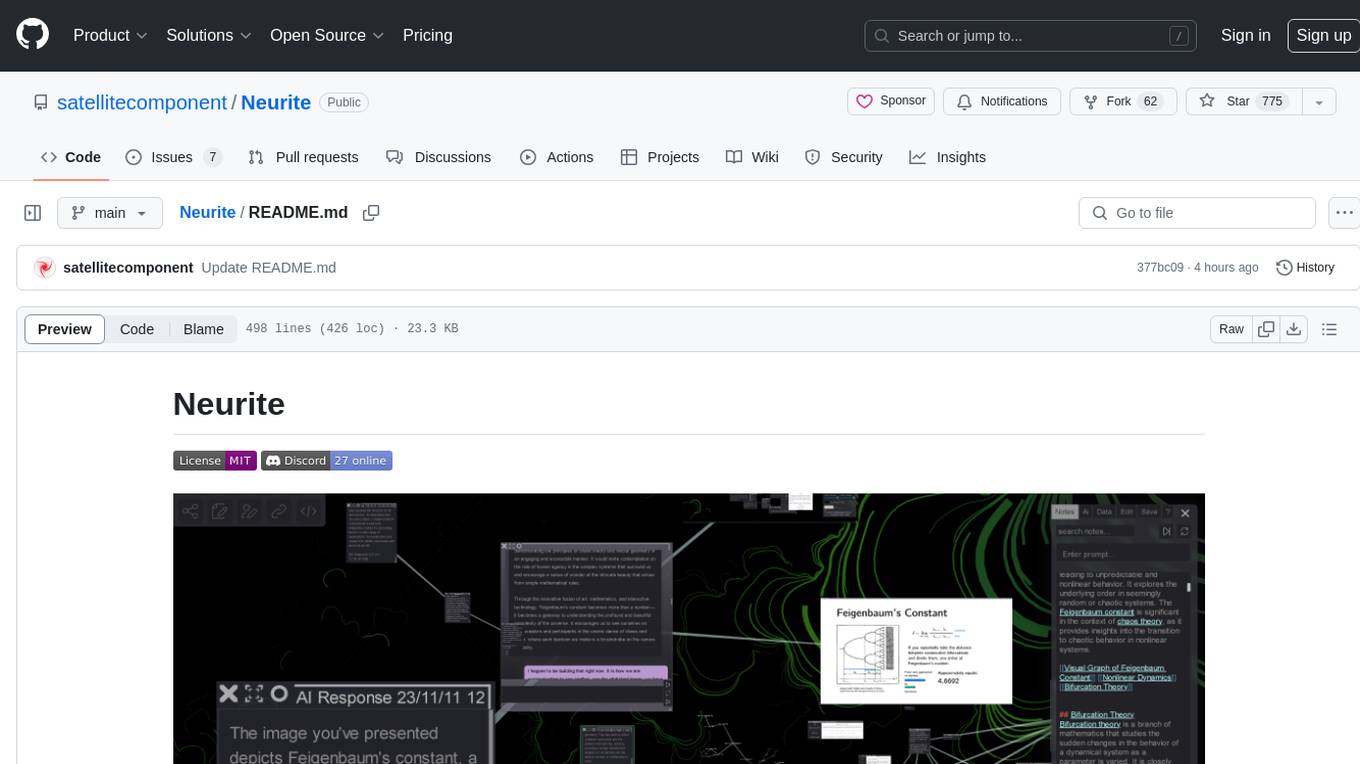

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

fast-stable-diffusion

Fast-stable-diffusion is a project that offers notebooks for RunPod, Paperspace, and Colab Pro adaptations with AUTOMATIC1111 Webui and Dreambooth. It provides tools for running and implementing Dreambooth, a stable diffusion project. The project includes implementations by XavierXiao and is sponsored by Runpod, Paperspace, and Colab Pro.

big-AGI

big-AGI is an AI suite designed for professionals seeking function, form, simplicity, and speed. It offers best-in-class Chats, Beams, and Calls with AI personas, visualizations, coding, drawing, side-by-side chatting, and more, all wrapped in a polished UX. The tool is powered by the latest models from 12 vendors and open-source servers, providing users with advanced AI capabilities and a seamless user experience. With continuous updates and enhancements, big-AGI aims to stay ahead of the curve in the AI landscape, catering to the needs of both developers and AI enthusiasts.

generative-ai

This repository contains codes related to Generative AI as per YouTube video. It includes various notebooks and files for different days covering topics like map reduce, text to SQL, LLM parameters, tagging, and Kaggle competition. The repository also includes resources like PDF files and databases for different projects related to Generative AI.

Cradle

The Cradle project is a framework designed for General Computer Control (GCC), empowering foundation agents to excel in various computer tasks through strong reasoning abilities, self-improvement, and skill curation. It provides a standardized environment with minimal requirements, constantly evolving to support more games and software. The repository includes released versions, publications, and relevant assets.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.