big-AGI

AI suite powered by state-of-the-art models and providing advanced AI/AGI functions. It features AI personas, AGI functions, multi-model chats, text-to-image, voice, response streaming, code highlighting and execution, PDF import, presets for developers, and much more. Deploy on-prem or in the cloud.

Stars: 6258

big-AGI is an AI suite designed for professionals seeking function, form, simplicity, and speed. It offers best-in-class Chats, Beams, and Calls with AI personas, visualizations, coding, drawing, side-by-side chatting, and more, all wrapped in a polished UX. The tool is powered by the latest models from 12 vendors and open-source servers, providing users with advanced AI capabilities and a seamless user experience. With continuous updates and enhancements, big-AGI aims to stay ahead of the curve in the AI landscape, catering to the needs of both developers and AI enthusiasts.

README:

Welcome to big-AGI, the AI suite for professionals that need function, form, simplicity, and speed. Powered by the latest models from 15 vendors and open-source servers, big-AGI offers best-in-class Chats, Beams, and Calls with AI personas, visualizations, coding, drawing, side-by-side chatting, and more -- all wrapped in a polished UX.

Stay ahead of the curve with big-AGI. 🚀 Pros & Devs love big-AGI. 🤖

🚀 Big-AGI 2 is launching soon.

Or fork & run on Vercel

This repository contains two main versions:

- Big-AGI 2: next-generation, bringing the most advanced AI experience

  - v2-dev: V2 development branch, the exciting one, future default (this branch)

- Big-AGI Stable: as deployed on big-agi.com

  - v1-stable: Current stable version, and currently the Docker 'latest' tagged images

Note: After the V2 Q1 2025 release, v2-dev will become the default branch and v1-stable will reach EOL.

Quick links: 👉 roadmap 👉 installation 👉 documentation

Hit 5k commits last week. That's a lot of code, and it's the foundation for what's coming.

Recent work has been intense:

- Chain of thought reasoning across multiple LLMs: OpenAI o3 and o1, DeepSeek R1, Gemini 2.0 Flash Thinking, and more

- Beam is real - ~35% of our free users run it daily to compare models

- New AIX framework lets us scale features we couldn't before

- UI is faster than ever. Like, terminal-fast

Big-AGI 2 is weeks away. Yes, we're late, but we're making it right. The new architecture is solid and the speed improvements are real.

Stay tuned. This is going to be good.

- 1.16.9: Docker Gemini fix (R1 models are supported in Big-AGI 2)

- 1.16.8: OpenAI ChatGPT-4o Latest (o1 models are supported in Big-AGI 2)

- 1.16.7: OpenAI support for GPT-4o 2024-08-06

- 1.16.6: Groq support for Llama 3.1 models

- 1.16.5: GPT-4o Mini support

- 1.16.4: 8192 tokens support for Claude 3.5 Sonnet

- 1.16.3: Anthropic Claude 3.5 Sonnet model support

- 1.16.2: Improve web downloads, as text, markdown, or HTML

- 1.16.2: Proper support for Gemini models

- 1.16.2: Added the latest Mistral model

- 1.16.2: Tokenizer support for gpt-4o

- 1.16.2: Updates to Beam

- 1.16.1: Support for the new OpenAI GPT-4o 2024-05-13 model

- Beam core and UX improvements based on user feedback

- Chat cost estimation 💰 (enable it in Labs / hover the token counter)

- Save/load chat files with Ctrl+S / Ctrl+O on desktop

- Major enhancements to the Auto-Diagrams tool

- YouTube Transcriber Persona for chatting with video content, #500

- Improved formula rendering (LaTeX), and dark-mode diagrams, #508, #520

- Models update: Anthropic, Groq, Ollama, OpenAI, OpenRouter, Perplexity

- Code soft-wrap, chat text selection toolbar, 3x faster on Apple silicon, and more #517, #507

- 🥇 Today we celebrate commit 3000 in just over one year, and going stronger 🚀

- 📢️ Thanks everyone for your support and words of love for Big-AGI, we are committed to creating the best AI experiences for everyone.
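The chat cost estimation mentioned in the list above comes down to simple arithmetic over token counts. A minimal sketch in TypeScript, assuming per-million-token pricing; the `ModelPricing` type, the `estimateChatCostUsd` function, and the prices are hypothetical illustrations and are not taken from big-AGI's code:

```typescript
// Hypothetical pricing shape: USD per one million tokens.
interface ModelPricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

// Estimate the cost of one exchange from its input and output token counts.
function estimateChatCostUsd(
  inputTokens: number,
  outputTokens: number,
  pricing: ModelPricing,
): number {
  const inputCost = (inputTokens / 1e6) * pricing.inputPerMillion;
  const outputCost = (outputTokens / 1e6) * pricing.outputPerMillion;
  return inputCost + outputCost;
}

// Illustrative prices only, not any vendor's real rates.
const examplePricing: ModelPricing = { inputPerMillion: 2, outputPerMillion: 8 };
const cost = estimateChatCostUsd(1500, 500, examplePricing);
console.log(cost.toFixed(4));
```

Input and output tokens are priced separately here because vendors commonly charge different rates for each; a real estimator would also need an up-to-date price table per model.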

- ⚠️ Beam: the multi-model AI chat. Find better answers, faster - a game-changer for brainstorming, decision-making, and creativity. #443

- Managed Deployments Auto-Configuration: simplify the UI models setup with backend-set models. #436

- Message Starring ⭐: star important messages within chats, to attach them later. #476

- Enhanced the default Persona

- Fixes to Gemini models and SVGs, improvements to UI and icons

- 1.15.1: Support for Gemini Pro 1.5 and OpenAI Turbo models

- Beast release, over 430 commits, 10,000+ lines changed: release notes, and changes v1.14.1...v1.15.0

What's New in 1.14.1 · March 7, 2024 · Modelmorphic

- Anthropic Claude-3 model family support. #443

- New Perplexity and Groq integration (thanks @Penagwin). #407, #427

- LocalAI deep integration, including support for model galleries

- Mistral Large and Google Gemini 1.5 support

- Performance optimizations: runs much faster, saves lots of power, reduces memory usage

- Enhanced UX with auto-sizing charts, refined search and folder functionalities, perfected scaling

- Plus more UI improvements, documentation, bug fixes (20 tickets), and developer enhancements

What's New in 1.13.0 · Feb 8, 2024 · Multi + Mind

https://github.com/enricoros/big-AGI/assets/32999/01732528-730e-41dc-adc7-511385686b13

- Side-by-Side Split Windows: multitask with parallel conversations. #208

- Multi-Chat Mode: message everyone, all at once. #388

- Export tables as CSV: big thanks to @aj47. #392

- Adjustable text size: customize density. #399

- Dev2 Persona Technology Preview

- Better looking chats with improved spacing, fonts, and menus

- More: new video player, LM Studio tutorial (thanks @aj47), MongoDB support (thanks @ranfysvalle02), and speedups

What's New in 1.12.0 · Jan 26, 2024 · AGI Hotline

https://github.com/enricoros/big-AGI/assets/32999/95ceb03c-945d-4fdd-9a9f-3317beb54f3f

- Voice Calls: real-time voice call your personas out of the blue or in relation to a chat #354

- Support OpenAI 0125 Models. #364

- Rename or Auto-Rename chats. #222, #360

- More control over Link Sharing #356

- Accessibility to screen readers #358

- Export chats to Markdown #337

- Paste tables from Excel #286

- Ollama model updates and context window detection fixes #309

What's New in 1.11.0 · Jan 16, 2024 · Singularity

https://github.com/enricoros/big-AGI/assets/1590910/a6b8e172-0726-4b03-a5e5-10cfcb110c68

- Find chats: search in titles and content, with frequency ranking. #329

- Commands: command auto-completion (type '/'). #327

- Together AI inference platform support (good speed and newer models). #346

- Persona Creator: history, deletion, custom creation, and a fix for LLM API timeouts

- Enable adding up to five custom OpenAI-compatible endpoints

- Developer enhancements: new 'Actiles' framework
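For readers unfamiliar with the term, an "OpenAI-compatible endpoint" in the list above is a server that accepts the same chat completions request shape as the OpenAI API. A minimal sketch of building such a request in TypeScript; the `buildChatRequest` helper, the local base URL, the placeholder key, and the model name are all hypothetical, the base URL is assumed to be given without the `/v1` suffix, and this is not big-AGI's own implementation:

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds the URL and fetch options for a chat completion call.
function buildChatRequest(
  baseUrl: string,
  apiKey: string,
  model: string,
  messages: ChatMessage[],
): ChatRequest {
  // Trim trailing slashes so the path joins cleanly.
  const url = baseUrl.replace(/\/+$/, "") + "/v1/chat/completions";
  return {
    url,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages }),
    },
  };
}

// Placeholder values for illustration only.
const request = buildChatRequest("http://localhost:1234/", "sk-placeholder", "my-local-model", [
  { role: "user", content: "Hello" },
]);
console.log(request.url);
```

The result can be passed to `fetch(request.url, request.init)`. Because only the base URL, key, and model name change between servers, the same client code can target several custom endpoints.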

What's New in 1.10.0 · Jan 6, 2024 · The Year of AGI

- New UI: for both desktop and mobile, sets the stage for future scale. #201

- Conversation Folders: enhanced conversation organization. #321

- LM Studio support and improved token management

- Resizable panes in split-screen conversations.

- Large performance optimizations

- Developer enhancements: new UI framework, updated documentation for proxy settings on browserless/docker

For full details and former releases, check out the changelog.

You can easily configure 100s of AI models in big-AGI:

| AI models | supported vendors |

|---|---|

| Opensource Servers | LocalAI (multimodal) · Ollama |

| Local Servers | LM Studio |

| Multimodal services | Azure · Anthropic · Google Gemini · OpenAI |

| Language services | Alibaba · DeepSeek · Groq · Mistral · OpenRouter · Perplexity · Together AI |

| Image services | Prodia (SDXL) |

| Speech services | ElevenLabs (Voice synthesis / cloning) |

Add extra functionality with these integrations:

| More | integrations |

|---|---|

| Web Browse | Browserless · Puppeteer-based |

| Web Search | Google CSE |

| Code Editors | CodePen · StackBlitz · JSFiddle |

| Sharing | Paste.gg (Paste chats) |

| Tracking | Helicone (LLM Observability) |

To get started with big-AGI, follow our comprehensive Installation Guide. The guide covers various installation options, whether you're spinning it up on your local computer, deploying on Vercel, on Cloudflare, or rolling it out through Docker.

Whether you're a developer, system integrator, or enterprise user, you'll find step-by-step instructions to set up big-AGI quickly and easily.

Or bring your API keys and jump straight into our free instance on big-AGI.com.

- [ ] 📢️ Chat with us on Discord

- [ ] ⭐ Give us a star on GitHub 👆

- [ ] 🚀 Do you like code? You'll love this gem of a project! Pick up a task! - easy to pro

- [ ] 💡 Got a feature suggestion? Add your roadmap ideas

- [ ] ✨ Deploy your fork for your friends and family, or customize it for work

Big-AGI incorporates third-party software components that are subject to separate license terms. For detailed information about these components and their respective licenses, please refer to the Third-Party Notices.

2023-2024 · Enrico Ros x Big-AGI · Like this project? Leave a star! 💫⭐


Alternative AI tools for big-AGI

Similar Open Source Tools


Everywhere

Everywhere is an interactive AI assistant with context-aware capabilities, featuring a sleek, modern UI and powerful integrated functionality. It instantly perceives and understands anything on your screen, providing seamless AI assistant support without the need for screenshots or app switching. The tool offers troubleshooting expertise, quick web summarization, instant translation, and email draft assistance. It supports LLMs from various providers, integrates with web browsers, file systems, terminals, and more, and provides an interactive experience with a modern UI, context-aware invocation, keyboard shortcuts, and markdown rendering. Everywhere is available on Windows and macOS, with Linux support coming soon. Language support includes Simplified Chinese, English, German, Spanish, French, Italian, Japanese, Korean, Russian, Turkish, Traditional Chinese, and Traditional Chinese (Hong Kong).

lancedb

LanceDB is an open-source database for vector-search built with persistent storage, which greatly simplifies retrieval, filtering, and management of embeddings. The key features of LanceDB include: Production-scale vector search with no servers to manage. Store, query, and filter vectors, metadata, and multi-modal data (text, images, videos, point clouds, and more). Support for vector similarity search, full-text search, and SQL. Native Python and Javascript/Typescript support. Zero-copy, automatic versioning, manage versions of your data without needing extra infrastructure. GPU support in building vector index(*). Ecosystem integrations with LangChain 🦜️🔗, LlamaIndex 🦙, Apache-Arrow, Pandas, Polars, DuckDB, and more on the way. LanceDB's core is written in Rust 🦀 and is built using Lance, an open-source columnar format designed for performant ML workloads.

RisuAI

RisuAI, or Risu for short, is a cross-platform AI chatting software/web application with powerful features such as multiple API support, assets in the chat, regex functions, and much more.

lmms-lab-writer

LMMs-Lab Writer is an AI-native LaTeX editor designed for researchers who prioritize ideas over syntax. It offers a local-first approach with AI agents for editing assistance, one-click LaTeX setup with automatic package installation, support for multiple languages, AI-powered workflows with OpenCode integration, Git integration for modern collaboration, fully open-source with MIT license, cross-platform compatibility, and a comparison with Overleaf highlighting its advantages. The tool aims to streamline the writing and publishing process for researchers while ensuring data security and control.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

UI-TARS-desktop

UI-TARS-desktop is a desktop application that provides a native GUI Agent based on the UI-TARS model. It offers features such as natural language control powered by Vision-Language Model, screenshot and visual recognition support, precise mouse and keyboard control, cross-platform support (Windows/MacOS/Browser), real-time feedback and status display, and private and secure fully local processing. The application aims to enhance the user's computer experience, introduce new browser operation features, and support the advanced UI-TARS-1.5 model for improved performance and precise control.

MentraOS

MentraOS is an open source operating system designed for smart glasses. It simplifies the development of smart glasses apps by handling pairing, connection, data streaming, and cross-compatibility. Developers can create apps using the TypeScript SDK quickly and easily, with access to smart glasses I/O components like displays, microphones, cameras, and speakers. The platform emphasizes cross-compatibility, speed of app development, control over device features, and easy distribution to users. The MentraOS Community is dedicated to promoting open, cross-compatible, and user-controlled personal computing through the development and support of MentraOS.

gemini-voyager

Gemini Voyager is a browser extension designed to enhance the user experience of Google Gemini by providing features such as folder organization, prompt vault, cloud sync, timeline navigation, chat export, Mermaid rendering, markdown fixing, and various power tools. It aims to help users keep their AI conversations organized, accessible, and productive. The extension is available on multiple browsers and supports manual installation and development builds. Users can also support the project by buying the developer a coffee or sponsoring via different platforms.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

jan

Jan is an open-source ChatGPT alternative that runs 100% offline on your computer. It supports universal architectures, including Nvidia GPUs, Apple M-series, Apple Intel, Linux Debian, and Windows x64. Jan is currently in development, so expect breaking changes and bugs. It is lightweight and embeddable, and can be used on its own within your own projects.

MemMachine

MemMachine is an open-source long-term memory layer designed for AI agents and LLM-powered applications. It enables AI to learn, store, and recall information from past sessions, transforming stateless chatbots into personalized, context-aware assistants. With capabilities like episodic memory, profile memory, working memory, and agent memory persistence, MemMachine offers a developer-friendly API, flexible storage options, and seamless integration with various AI frameworks. It is suitable for developers, researchers, and teams needing persistent, cross-session memory for their LLM applications.

WebMasterLog

WebMasterLog is a comprehensive repository showcasing various web development projects built with front-end and back-end technologies. It highlights interactive user interfaces, dynamic web applications, and a spectrum of web development solutions. The repository encourages contributions in areas such as adding new projects, improving existing projects, updating documentation, fixing bugs, implementing responsive design, enhancing code readability, and optimizing project functionalities. Contributors are guided to follow specific guidelines for project submissions, including directory naming conventions, README file inclusion, project screenshots, and commit practices. Pull requests are reviewed based on criteria such as proper PR template completion, originality of work, code comments for clarity, and sharing screenshots for frontend updates. The repository also participates in various open-source programs like JWOC, GSSoC, Hacktoberfest, KWOC, 24 Pull Requests, IWOC, SWOC, and DWOC, welcoming valuable contributors.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

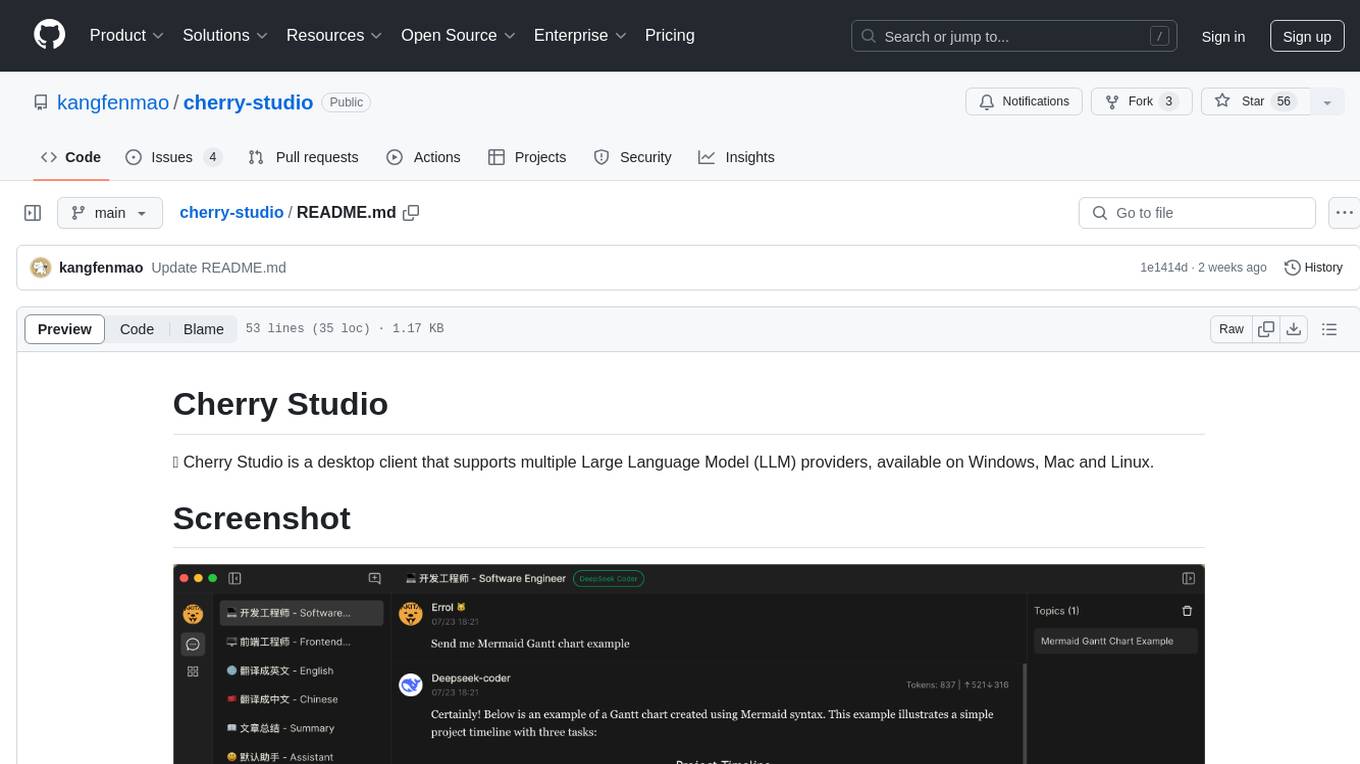

cherry-studio

Cherry Studio is a desktop client that supports multiple Large Language Model (LLM) providers, available on Windows, Mac, and Linux. It allows users to create multiple Assistants and topics, use multiple models to answer questions in the same conversation, and supports drag-and-drop sorting, code highlighting, and Mermaid charts. The tool is designed to enhance productivity and streamline the process of interacting with various language models.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl

- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.