turnkeyml

The no-code AI toolchain

Stars: 58

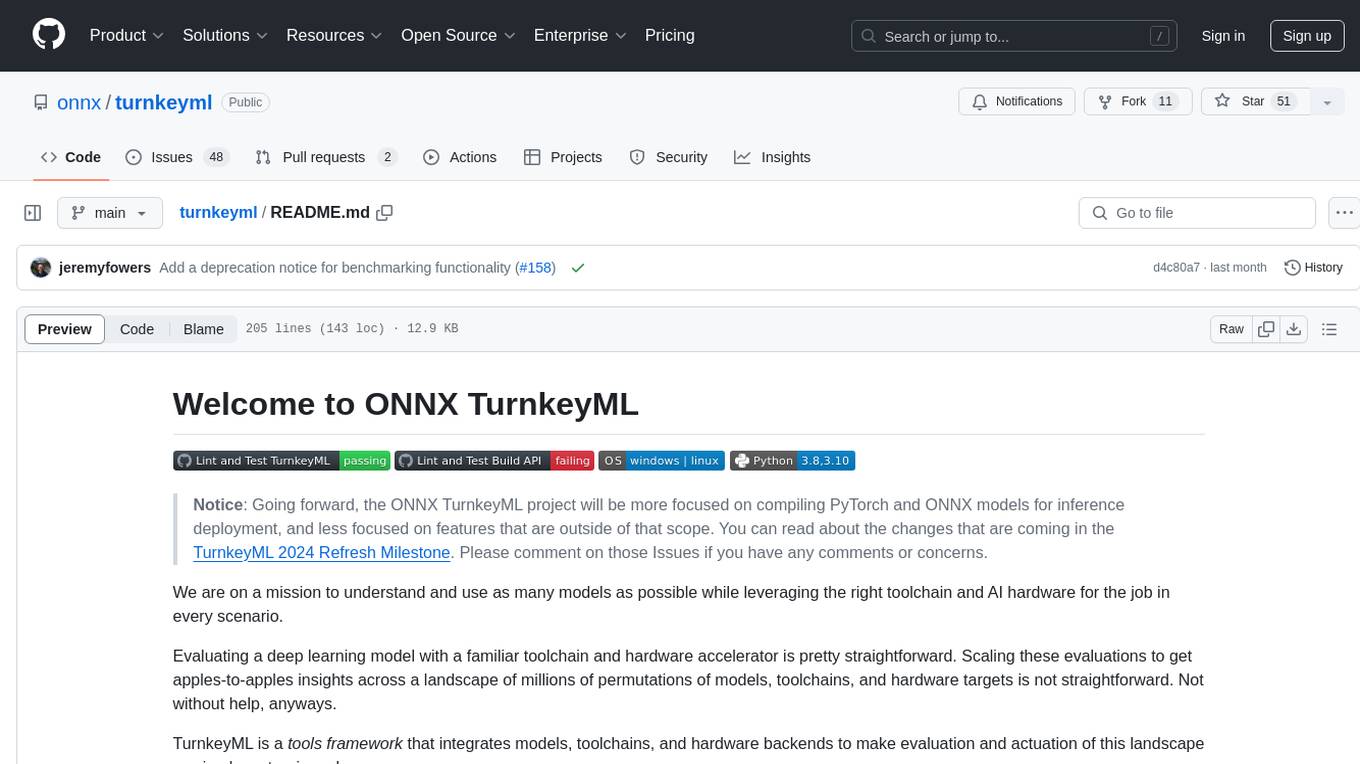

TurnkeyML is a tools framework that integrates models, toolchains, and hardware backends to simplify the evaluation and actuation of deep learning models. It supports use cases like exporting ONNX files, performance validation, functional coverage measurement, stress testing, and model insights analysis. The framework consists of analysis, build, runtime, reporting tools, and a models corpus, seamlessly integrated to provide comprehensive functionality with simple commands. Extensible through plugins, it offers support for various export and optimization tools and AI runtimes. The project is actively seeking collaborators and is licensed under Apache 2.0.

README:

We are on a mission to make it easy to use the most important tools in the ONNX ecosystem. TurnkeyML accomplishes this by providing a no-code CLI, turnkey, as well as a low-code API, that provide seamless integration of these tools.

We also provide turnkey-llm, which has LLM-specific tools for prompting, accuracy measurement, and serving on a variety of runtimes (Huggingface, onnxruntime-genai) and hardware (CPU, GPU, and NPU).

The easiest way to get started is:

pip install turnkeyml- Copy a PyTorch example of a model, like the one on this Huggingface BERT model card, into a file named

bert.py.

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertModel.from_pretrained("bert-base-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)-

turnkey -i bert.py discover export-pytorch: make a BERT ONNX file from thisbert.pyexample.

For LLM setup instructions, see turnkey-llm.

Here's turnkey in action: BERT-Base is exported from PyTorch to ONNX using torch.onnx.export, optimized for inference with onnxruntime, and converted to fp16 with onnxmltools:

Breaking down the command turnkey -i bert.py discover export-pytorch optimize-ort convert-fp16:

-

turnkey -i bert.pyfeedsbert.py, a minimal PyTorch script that instantiates BERT, into the tool sequence, starting with... -

discoveris a tool that finds the PyTorch model in a script and passes it to the next tool, which is... -

export-pytorch, which takes a PyTorch model and converts it to an ONNX model, then passes it to... -

optimize-ort, which usesonnxruntimeto optimize the model's compute graph, then passes it to... -

convert-fp16, which usesonnxmltoolsto convert the ONNX file into fp16. - Finally, the result is printed, and we can see that the requested

.onnxfiles have been produced.

All without writing a single line of code or learning how to use any of the underlying ONNX ecosystem tools 🚀

The turnkey CLI provides a set of Tools that users can invoke in a Sequence. The first Tool takes the input (-i), performs some action, and passes its state to the next Tool in the Sequence.

You can read the Sequence out like a sentence. For example, the demo command above was:

> turnkey -i bert.py discover export-pytorch optimize-ort convert-fp16

Which you can read like:

Use

turnkeyonbert.pytodiscoverthe model,exportthepytorchto ONNX,optimizethe ONNX withort, andconvertthe ONNX tofp16.

You can configure each Tool by passing it arguments. For example, export-pytorch --opset 18 would set the opset of the resulting ONNX model to 18.

A full command with an argument looks like:

> turnkey -i bert.py discover export-pytorch --opset 18 optimize-ort conver-fp16

The easiest way to learn more about turnkey is to explore the help menu with turnkey -h. To learn about a specific tool, run turnkey <tool name> -h, for example turnkey export-pytorch -h.

We also provide the following resources:

- Installation guide: how to install from source, set up Slurm, etc.

-

User guide: explains the concepts of

turnkey's, including the syntax for making your own tool sequence. -

Examples: PyTorch scripts and ONNX files that can be used to try out

turnkeyconcepts. - Code organization guide: learn how this repository is structured.

-

Models: PyTorch model scripts that work with

turnkey.

turnkey is used in multiple projects where many hundreds of models are being evaluated. For example, the ONNX Model Zoo was created using turnkey.

We provide several helpful tools to facilitate this kind of mass-evaluation.

turnkey will iterate over multiple inputs if you pass it a wildcard input.

For example, to export ~1000 built-in models to ONNX:

> turnkey models/*/*.py discover export-pytorch

All build results, such as .onnx files, are collected into a cache directory, which you can learn about with turnkey cache -h.

turnkey collects statistics about each model and build into the corresponding build directory in the cache. Use turnkey report -h to see how those statistics can be exported into a CSV file.

This repository is home to a diverse corpus of hundreds of models, which are meant to be a convenient input to turnkey -i <model>.py discover. We are actively working on increasing the number of models in our model library. You can see the set of models in each category by clicking on the corresponding badge.

Evaluating a new model is as simple as taking a Python script that instantiates and invokes a PyTorch torch.nn.module and call turnkey on it. Read about model contributions here.

The build tool has built-in support for a variety of interoperable Tools. If you need more, the TurnkeyML plugin API lets you add your own installable tools with any functionality you like:

> pip install -e my_custom_plugin

> turnkey -i my_model.py discover export-pytorch my-custom-tool --my-args

All of the built-in Tools are implemented against the plugin API. Check out the example plugins and the plugin API guide to learn more about creating an installable plugin.

We are actively seeking collaborators from across the industry. If you would like to contribute to this project, please check out our contribution guide.

This project is sponsored by the ONNX Model Zoo special interest group (SIG). It is maintained by @danielholanda @jeremyfowers @ramkrishna @vgodsoe in equal measure. You can reach us by filing an issue.

This project is licensed under the Apache 2.0 License.

TurnkeyML used code from other open source projects as a starting point (see NOTICE.md). Thank you Philip Colangelo, Derek Elkins, Jeremy Fowers, Dan Gard, Victoria Godsoe, Mark Heaps, Daniel Holanda, Brian Kurtz, Mariah Larwood, Philip Lassen, Andrew Ling, Adrian Macias, Gary Malik, Sarah Massengill, Ashwin Murthy, Hatice Ozen, Tim Sears, Sean Settle, Krishna Sivakumar, Aviv Weinstein, Xueli Xao, Bill Xing, and Lev Zlotnik for your contributions to that work.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for turnkeyml

Similar Open Source Tools

turnkeyml

TurnkeyML is a tools framework that integrates models, toolchains, and hardware backends to simplify the evaluation and actuation of deep learning models. It supports use cases like exporting ONNX files, performance validation, functional coverage measurement, stress testing, and model insights analysis. The framework consists of analysis, build, runtime, reporting tools, and a models corpus, seamlessly integrated to provide comprehensive functionality with simple commands. Extensible through plugins, it offers support for various export and optimization tools and AI runtimes. The project is actively seeking collaborators and is licensed under Apache 2.0.

debug-gym

debug-gym is a text-based interactive debugging framework designed for debugging Python programs. It provides an environment where agents can interact with code repositories, use various tools like pdb and grep to investigate and fix bugs, and propose code patches. The framework supports different LLM backends such as OpenAI, Azure OpenAI, and Anthropic. Users can customize tools, manage environment states, and run agents to debug code effectively. debug-gym is modular, extensible, and suitable for interactive debugging tasks in a text-based environment.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

cassio

cassIO is a framework-agnostic Python library that seamlessly integrates Apache Cassandra with ML/LLM/genAI workloads. It provides an easy-to-use interface for developers to connect their Cassandra databases to machine learning models, allowing them to perform complex data analysis and AI-powered tasks directly on their Cassandra data. cassIO is designed to be flexible and extensible, making it suitable for a wide range of use cases, from data exploration and visualization to predictive modeling and natural language processing.

leptonai

A Pythonic framework to simplify AI service building. The LeptonAI Python library allows you to build an AI service from Python code with ease. Key features include a Pythonic abstraction Photon, simple abstractions to launch models like those on HuggingFace, prebuilt examples for common models, AI tailored batteries, a client to automatically call your service like native Python functions, and Pythonic configuration specs to be readily shipped in a cloud environment.

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

llm-verified-with-monte-carlo-tree-search

This prototype synthesizes verified code with an LLM using Monte Carlo Tree Search (MCTS). It explores the space of possible generation of a verified program and checks at every step that it's on the right track by calling the verifier. This prototype uses Dafny, Coq, Lean, Scala, or Rust. By using this technique, weaker models that might not even know the generated language all that well can compete with stronger models.

CoML

CoML (formerly MLCopilot) is an interactive coding assistant for data scientists and machine learning developers, empowered on large language models. It offers an out-of-the-box interactive natural language programming interface for data mining and machine learning tasks, integration with Jupyter lab and Jupyter notebook, and a built-in large knowledge base of machine learning to enhance the ability to solve complex tasks. The tool is designed to assist users in coding tasks related to data analysis and machine learning using natural language commands within Jupyter environments.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

unstructured

The `unstructured` library provides open-source components for ingesting and pre-processing images and text documents, such as PDFs, HTML, Word docs, and many more. The use cases of `unstructured` revolve around streamlining and optimizing the data processing workflow for LLMs. `unstructured` modular functions and connectors form a cohesive system that simplifies data ingestion and pre-processing, making it adaptable to different platforms and efficient in transforming unstructured data into structured outputs.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

hordelib

horde-engine is a wrapper around ComfyUI designed to run inference pipelines visually designed in the ComfyUI GUI. It enables users to design inference pipelines in ComfyUI and then call them programmatically, maintaining compatibility with the existing horde implementation. The library provides features for processing Horde payloads, initializing the library, downloading and validating models, and generating images based on input data. It also includes custom nodes for preprocessing and tasks such as face restoration and QR code generation. The project depends on various open source projects and bundles some dependencies within the library itself. Users can design ComfyUI pipelines, convert them to the backend format, and run them using the run_image_pipeline() method in hordelib.comfy.Comfy(). The project is actively developed and tested using git, tox, and a specific model directory structure.

For similar tasks

turnkeyml

TurnkeyML is a tools framework that integrates models, toolchains, and hardware backends to simplify the evaluation and actuation of deep learning models. It supports use cases like exporting ONNX files, performance validation, functional coverage measurement, stress testing, and model insights analysis. The framework consists of analysis, build, runtime, reporting tools, and a models corpus, seamlessly integrated to provide comprehensive functionality with simple commands. Extensible through plugins, it offers support for various export and optimization tools and AI runtimes. The project is actively seeking collaborators and is licensed under Apache 2.0.

Auto-Analyst

Auto-Analyst is an AI-driven data analytics agentic system designed to simplify and enhance the data science process. By integrating various specialized AI agents, this tool aims to make complex data analysis tasks more accessible and efficient for data analysts and scientists. Auto-Analyst provides a streamlined approach to data preprocessing, statistical analysis, machine learning, and visualization, all within an interactive Streamlit interface. It offers plug and play Streamlit UI, agents with data science speciality, complete automation, LLM agnostic operation, and is built using lightweight frameworks.

For similar jobs

turnkeyml

TurnkeyML is a tools framework that integrates models, toolchains, and hardware backends to simplify the evaluation and actuation of deep learning models. It supports use cases like exporting ONNX files, performance validation, functional coverage measurement, stress testing, and model insights analysis. The framework consists of analysis, build, runtime, reporting tools, and a models corpus, seamlessly integrated to provide comprehensive functionality with simple commands. Extensible through plugins, it offers support for various export and optimization tools and AI runtimes. The project is actively seeking collaborators and is licensed under Apache 2.0.

libedgetpu

This repository contains the source code for the userspace level runtime driver for Coral devices. The software is distributed in binary form at coral.ai/software. Users can build the library using Docker + Bazel, Bazel, or Makefile methods. It supports building on Linux, macOS, and Windows. The library is used to enable the Edge TPU runtime, which may heat up during operation. Google does not accept responsibility for any loss or damage if the device is operated outside the recommended ambient temperature range.

FlagPerf

FlagPerf is an integrated AI hardware evaluation engine jointly built by the Institute of Intelligence and AI hardware manufacturers. It aims to establish an industry-oriented metric system to evaluate the actual capabilities of AI hardware under software stack combinations (model + framework + compiler). FlagPerf features a multidimensional evaluation metric system that goes beyond just measuring 'whether the chip can support specific model training.' It covers various scenarios and tasks, including computer vision, natural language processing, speech, multimodal, with support for multiple training frameworks and inference engines to connect AI hardware with software ecosystems. It also supports various testing environments to comprehensively assess the performance of domestic AI chips in different scenarios.

tt-xla

TT-XLA is a repository that enables running PyTorch and JAX models on Tenstorrent's AI hardware. It serves as a backend integration between the JAX ecosystem and Tenstorrent's ML accelerators using the PJRT (Portable JAX Runtime) interface. It supports ingestion of PyTorch models through PyTorch/XLA and JAX models via jit compile, providing a StableHLO (SHLO) graph to TT-MLIR compiler.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.