cassio

A framework-agnostic Python library to seamlessly integrate Cassandra with ML/LLM/genAI workloads

Stars: 108

cassIO is a framework-agnostic Python library that seamlessly integrates Apache Cassandra with ML/LLM/genAI workloads. It provides an easy-to-use interface for developers to connect their Cassandra databases to machine learning models, allowing them to perform complex data analysis and AI-powered tasks directly on their Cassandra data. cassIO is designed to be flexible and extensible, making it suitable for a wide range of use cases, from data exploration and visualization to predictive modeling and natural language processing.

README:

A framework-agnostic Python library to seamlessly integrate Apache Cassandra with ML/LLM/genAI workloads.

Note: this is currently an alpha release.

Installation is as simple as:

pip install cassio

For example usages and integration with higher-level LLM frameworks such as LangChain, please visit cassio.org.

To develop cassio, we use poetry

pip install poetryUse poetry to install dependencies

poetry installIf the integration is Poetry-based (e.g. LangChain itself), you should get this

in your pyproject.toml:

cassio = {path = "../../cassio", develop = true}

Then you do

poetry remove cassio # if necessary

poetry lock --no-update

poetry install -E all --with dev --with test_integration # or similar, this is for langchain

Inspired from this. You also need a recent Poetry for this to work.

We are still at 0.*. Occasional breaking changes are to be expected,

but please think carefully. Later, a stronger versioning model will be adopted.

Style is enforced through black, linting with ruff,

and typechecking with mypy.

The code should run through make format without issues.

At the moment we try to run tests under Python3.8 and Python3.10 to try and

catch versions-specific issues

(such as the newer typing syntax such as typeA | typeB, illegal on 3.8).

- Bump version in pyproject.toml

- Add to

CHANGES.txt - Commit the very code that will be built:

git tag v<x.y.z>; git push origin v<x.y.z>

make build

poetry publish # (login to PyPI ...)

Please run tests (and add some coverage for new features). This is not

enforced other than to your conscience. Type make for the available tests.

To run the full tests (except specific tests targeting Cassandra),

there's make test-all.

make test-unit

Ensure the required environment variables are set (see for instance

the provided TEMPLATE.testing.env).

You need at least one of either Astra DB or a

Cassandra (5+) cluster to use.

Launch the tests with either of:

make test-integration

make test-astra-integration

make test-cassandra-integration

make test-testcontainerscassandra-integration

The last three above specify TEST_DB_MODE as either LOCAL_CASSANDRA, TESTCONTAINERS_CASSANDRA or

ASTRA_DB. Refer to TEMPLATE.testing.env for required environment variables in the specific cases.

Note: Ideally you should test with both Astra DB and one Cassandra, since some tests are skipped in either case.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for cassio

Similar Open Source Tools

cassio

cassIO is a framework-agnostic Python library that seamlessly integrates Apache Cassandra with ML/LLM/genAI workloads. It provides an easy-to-use interface for developers to connect their Cassandra databases to machine learning models, allowing them to perform complex data analysis and AI-powered tasks directly on their Cassandra data. cassIO is designed to be flexible and extensible, making it suitable for a wide range of use cases, from data exploration and visualization to predictive modeling and natural language processing.

CoML

CoML (formerly MLCopilot) is an interactive coding assistant for data scientists and machine learning developers, empowered on large language models. It offers an out-of-the-box interactive natural language programming interface for data mining and machine learning tasks, integration with Jupyter lab and Jupyter notebook, and a built-in large knowledge base of machine learning to enhance the ability to solve complex tasks. The tool is designed to assist users in coding tasks related to data analysis and machine learning using natural language commands within Jupyter environments.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

fsdp_qlora

The fsdp_qlora repository provides a script for training Large Language Models (LLMs) with Quantized LoRA and Fully Sharded Data Parallelism (FSDP). It integrates FSDP+QLoRA into the Axolotl platform and offers installation instructions for dependencies like llama-recipes, fastcore, and PyTorch. Users can finetune Llama-2 70B on Dual 24GB GPUs using the provided command. The script supports various training options including full params fine-tuning, LoRA fine-tuning, custom LoRA fine-tuning, quantized LoRA fine-tuning, and more. It also discusses low memory loading, mixed precision training, and comparisons to existing trainers. The repository addresses limitations and provides examples for training with different configurations, including BnB QLoRA and HQQ QLoRA. Additionally, it offers SLURM training support and instructions for adding support for a new model.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

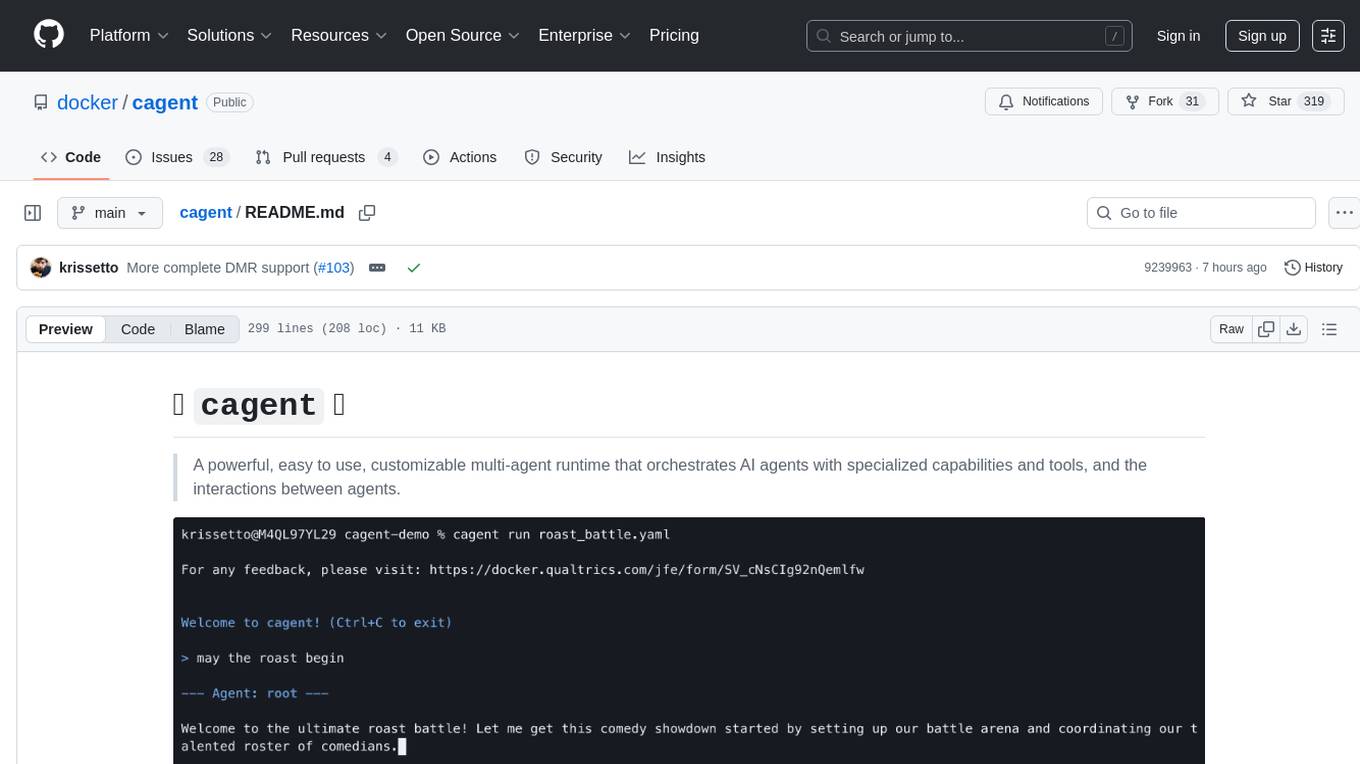

cagent

cagent is a powerful and easy-to-use multi-agent runtime that orchestrates AI agents with specialized capabilities and tools, allowing users to quickly build, share, and run a team of virtual experts to solve complex problems. It supports creating agents with YAML configuration, improving agents with MCP servers, and delegating tasks to specialists. Key features include multi-agent architecture, rich tool ecosystem, smart delegation, YAML configuration, advanced reasoning tools, and support for multiple AI providers like OpenAI, Anthropic, Gemini, and Docker Model Runner.

turnkeyml

TurnkeyML is a tools framework that integrates models, toolchains, and hardware backends to simplify the evaluation and actuation of deep learning models. It supports use cases like exporting ONNX files, performance validation, functional coverage measurement, stress testing, and model insights analysis. The framework consists of analysis, build, runtime, reporting tools, and a models corpus, seamlessly integrated to provide comprehensive functionality with simple commands. Extensible through plugins, it offers support for various export and optimization tools and AI runtimes. The project is actively seeking collaborators and is licensed under Apache 2.0.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

usage_rules

UsageRules is a development tool for Elixir projects that helps gather and consolidate usage rules from dependencies to provide to LLM agents. It provides pre-built usage rules for Elixir and a powerful documentation search task for hexdocs. The tool scans project dependencies, looks for `usage-rules.md` files, consolidates rules into a target file, and maintains sections that can be updated independently. It is useful for projects using frameworks like Ash, Phoenix, or other packages that provide specific usage guidelines, coding patterns, or best practices.

aides-jeunes

The user interface (and the main server) of the simulator of aids and social benefits for young people. It is based on the free socio-fiscal simulator Openfisca.

opencommit

OpenCommit is a tool that auto-generates meaningful commits using AI, allowing users to quickly create commit messages for their staged changes. It provides a CLI interface for easy usage and supports customization of commit descriptions, emojis, and AI models. Users can configure local and global settings, switch between different AI providers, and set up Git hooks for integration with IDE Source Control. Additionally, OpenCommit can be used as a GitHub Action to automatically improve commit messages on push events, ensuring all commits are meaningful and not generic. Payments for OpenAI API requests are handled by the user, with the tool storing API keys locally.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

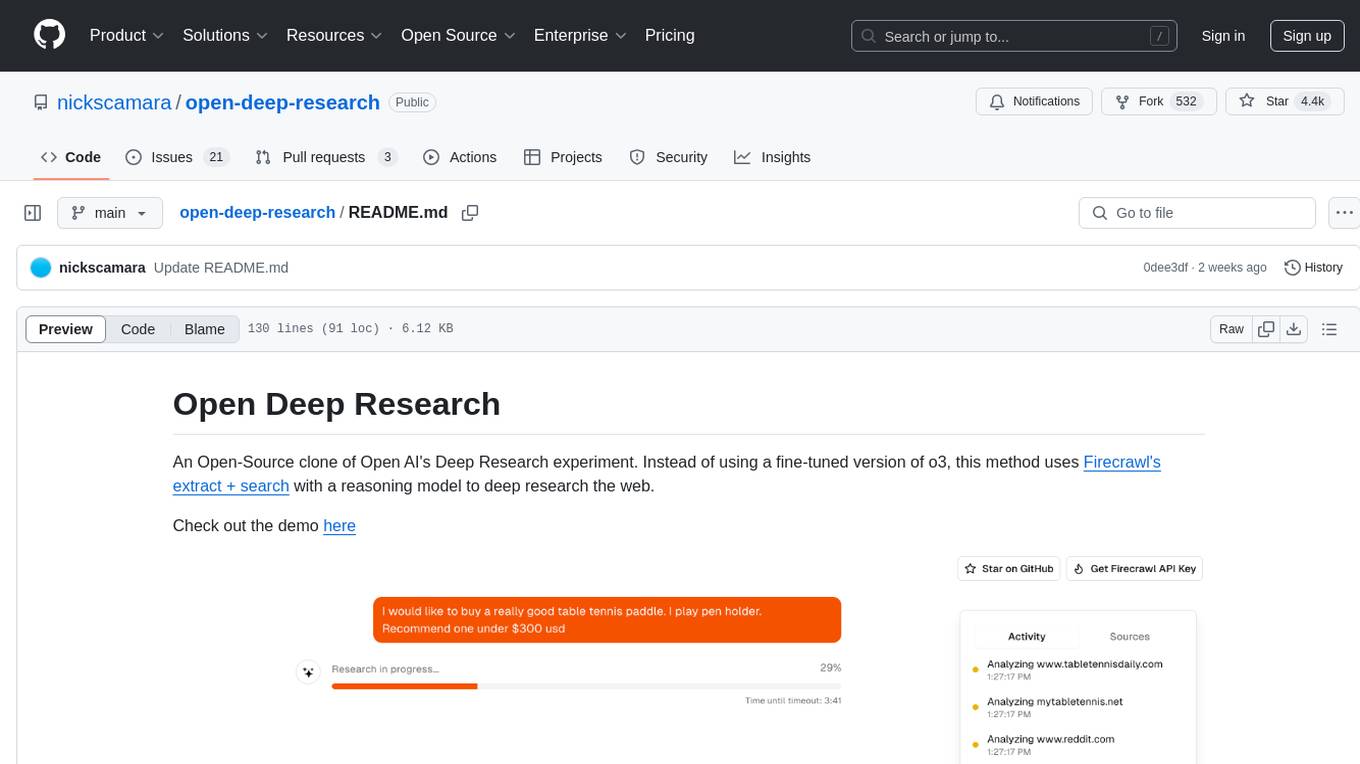

open-deep-research

Open Deep Research is an open-source project that serves as a clone of Open AI's Deep Research experiment. It utilizes Firecrawl's extract and search method along with a reasoning model to conduct in-depth research on the web. The project features Firecrawl Search + Extract, real-time data feeding to AI via search, structured data extraction from multiple websites, Next.js App Router for advanced routing, React Server Components and Server Actions for server-side rendering, AI SDK for generating text and structured objects, support for various model providers, styling with Tailwind CSS, data persistence with Vercel Postgres and Blob, and simple and secure authentication with NextAuth.js.

unstructured

The `unstructured` library provides open-source components for ingesting and pre-processing images and text documents, such as PDFs, HTML, Word docs, and many more. The use cases of `unstructured` revolve around streamlining and optimizing the data processing workflow for LLMs. `unstructured` modular functions and connectors form a cohesive system that simplifies data ingestion and pre-processing, making it adaptable to different platforms and efficient in transforming unstructured data into structured outputs.

For similar tasks

cassio

cassIO is a framework-agnostic Python library that seamlessly integrates Apache Cassandra with ML/LLM/genAI workloads. It provides an easy-to-use interface for developers to connect their Cassandra databases to machine learning models, allowing them to perform complex data analysis and AI-powered tasks directly on their Cassandra data. cassIO is designed to be flexible and extensible, making it suitable for a wide range of use cases, from data exploration and visualization to predictive modeling and natural language processing.

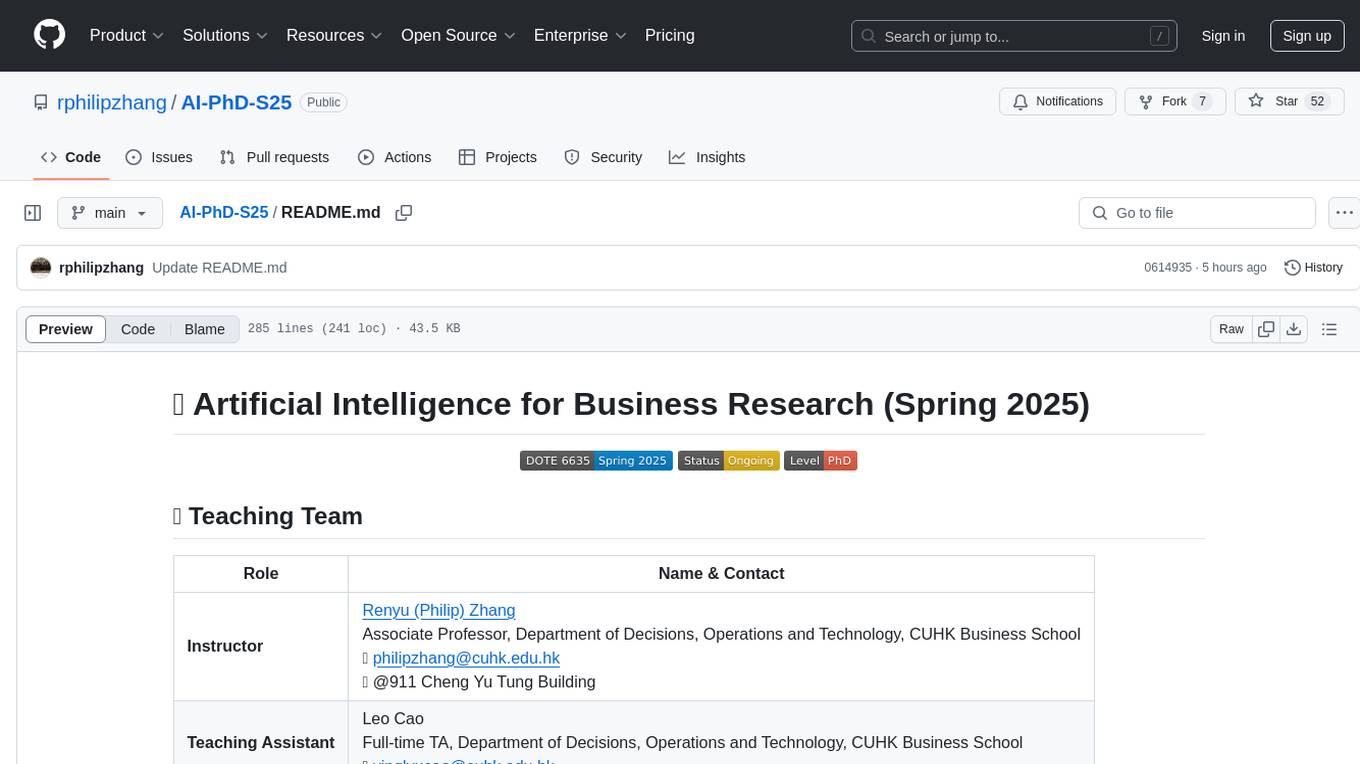

AI-PhD-S25

AI-PhD-S25 is a mono-repo for the DOTE 6635 course on AI for Business Research at CUHK Business School. The course aims to provide a fundamental understanding of ML/AI concepts and methods relevant to business research, explore applications of ML/AI in business research, and discover cutting-edge AI/ML technologies. The course resources include Google CoLab for code distribution, Jupyter Notebooks, Google Sheets for group tasks, Overleaf template for lecture notes, replication projects, and access to HPC Server compute resource. The course covers topics like AI/ML in business research, deep learning basics, attention mechanisms, transformer models, LLM pretraining, posttraining, causal inference fundamentals, and more.

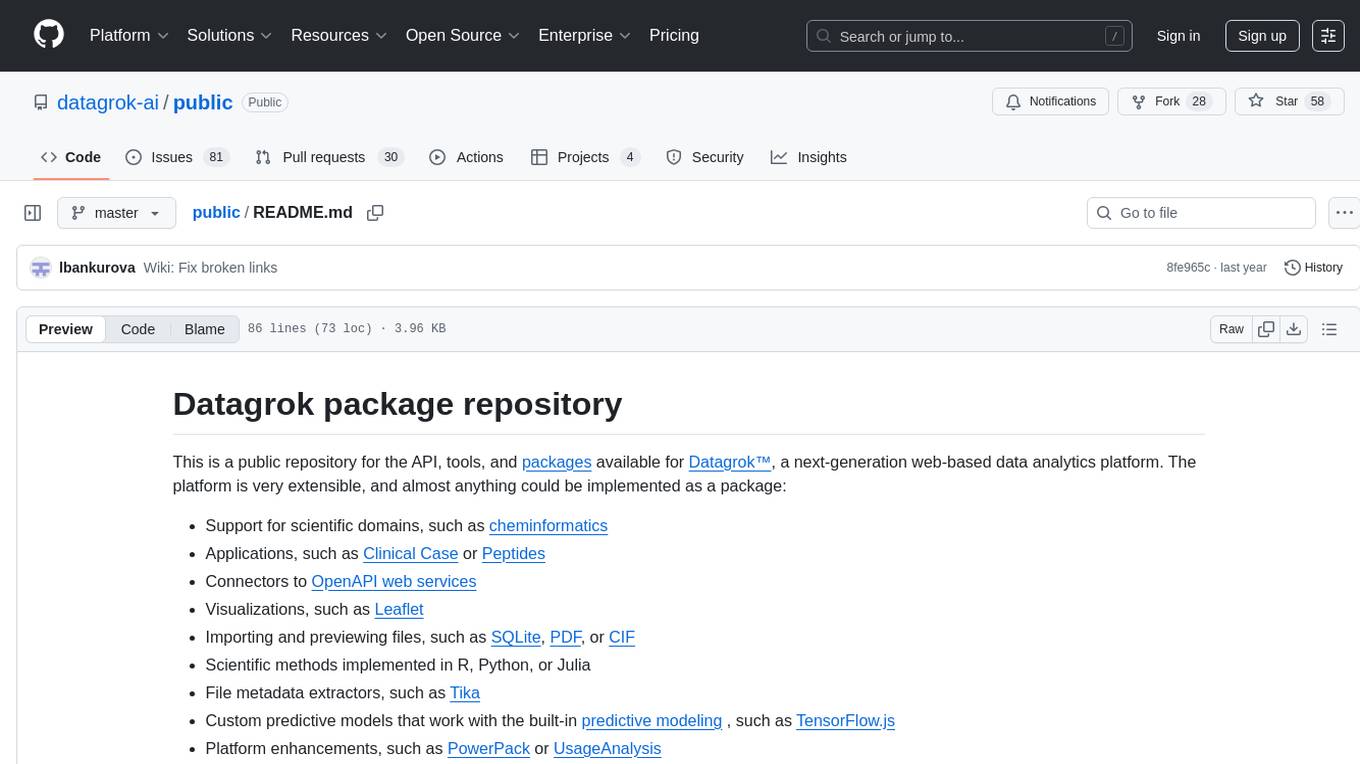

public

This public repository contains API, tools, and packages for Datagrok, a web-based data analytics platform. It offers support for scientific domains, applications, connectors to web services, visualizations, file importing, scientific methods in R, Python, or Julia, file metadata extractors, custom predictive models, platform enhancements, and more. The open-source packages are free to use, with restrictions on server computational capacities for the public environment. Academic institutions can use Datagrok for research and education, benefiting from reproducible and scalable computations and data augmentation capabilities. Developers can contribute by creating visualizations, scientific methods, file editors, connectors to web services, and more.

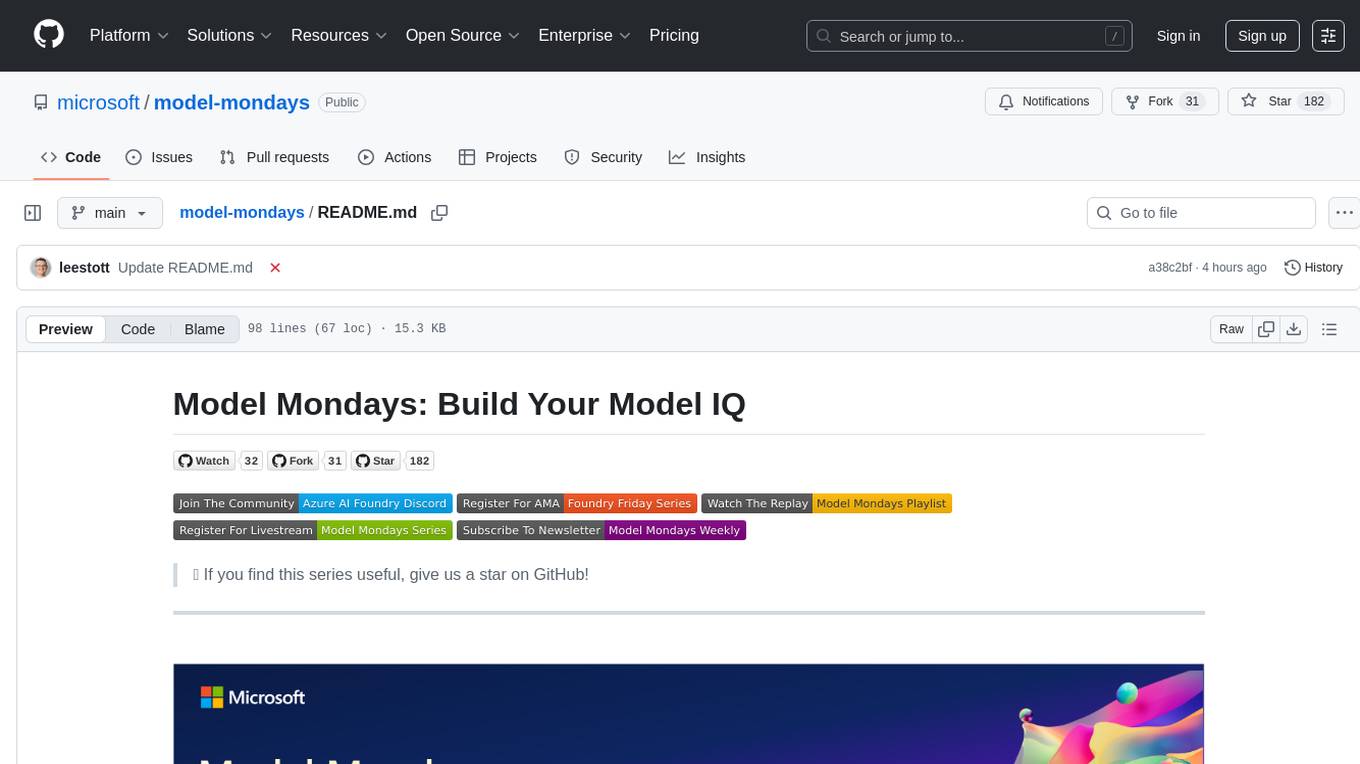

model-mondays

Model Mondays is a repository dedicated to providing a collection of machine learning models implemented in Python. It aims to serve as a resource for individuals looking to explore and experiment with various machine learning algorithms and techniques. The repository includes a wide range of models, from simple linear regression to complex deep learning architectures, along with detailed documentation and examples to facilitate learning and understanding. Whether you are a beginner looking to get started with machine learning or an experienced practitioner seeking reference implementations, Model Mondays offers a valuable repository of models to study and leverage in your projects.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.