ai-starter-kit

Stars: 215

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

README:

SambaNova AI Starter Kits are a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for both developers and enterprises.

To run these examples, you can obtain a free API key using SambaNova Cloud. Alternatively, if you are a current SambaNova customer, you can deploy your models using SambaStudio. Most of the code examples are written in Python, although the concepts can be applied to any programming language.

Questions? Just message us on SambaNova Community or create an issue in GitHub. We're happy to help live!

The table below lists the available kits, which are grouped into four categories: 1) Data Ingestion & Preparation, 2) Model Development & Optimization, 3) Intelligent Information Retrieval, and 4) Advanced AI Capabilities.

For third-party integrations, see the list in our Integrations folder.

Note: For each kit, we specify whether it is compatible with SambaNova Cloud, SambaStudio, or both.

| Name | Kit Description | Compatible APIs | Category |
|---|---|---|---|
| Data Extraction | Series of notebooks that demonstrate methods for extracting text from documents in different input formats. | SambaNova Cloud, SambaStudio | Data Ingestion & Preparation |
| End to End Fine-tuning | Recipe to upload and train a Large Language Model (LLM) using your own data on the SambaStudio platform. | SambaStudio | Data Ingestion & Preparation |
| Fine tuning embeddings | Example workflow for fine-tuning embeddings from unstructured data, leveraging Large Language Models (LLMs) and open-source embedding models to enhance NLP task performance. | SambaStudio | Model Development & Optimization |
| Fine tuning SQL | Example workflow for fine-tuning an SQL model for Question-Answering purposes, leveraging Large Language Models (LLMs) and open-source embedding models to enhance SQL generation task performance. | SambaStudio | Model Development & Optimization |
| Prompt Engineering | Starting point demo for prompt engineering using SambaNova's API to experiment with different use case templates. Provides useful resources to improve prompt crafting, making it an ideal entry point for those new to this AISK. | SambaNova Cloud, SambaStudio | Model Development & Optimization |
| Enterprise Knowledge Retrieval | Sample implementation of the semantic search workflow using the SambaNova platform to get answers to questions about your documents. Includes a runnable demo. | SambaNova Cloud, SambaStudio | Intelligent Information Retrieval |
| Image Search | This example workflow shows a simple approach to image search by image description or image similarity. All workflows are built using the SambaNova platform. | SambaStudio | Intelligent Information Retrieval |
| Multimodal Knowledge Retriever | Sample implementation of the semantic search workflow leveraging the SambaNova platform to get answers to questions about your documents using text, tables, and images. Includes a runnable demo. | SambaNova Cloud, SambaStudio | Intelligent Information Retrieval |
| Post Call Analysis | Example workflow that shows a systematic approach to post-call analysis including Automatic Speech Recognition (ASR), diarization, large language model analysis, and retrieval augmented generation (RAG) workflows. All workflows are built using the SambaNova platform. | SambaNova Cloud, SambaStudio | Intelligent Information Retrieval |
| RAG Evaluation Kit | A tool for evaluating the performance of LLM APIs using the RAG Evaluation methodology. | SambaStudio | Intelligent Information Retrieval |
| Search Assistant | Sample implementation of the semantic search workflow built using the SambaNova platform to get answers to your questions using search engine snippets and website crawled information as the source. Includes a runnable demo. | SambaNova Cloud, SambaStudio | Intelligent Information Retrieval |
| Web Crawled Data Retrieval | Sample implementation of a semantic search workflow built using the SambaNova platform to get answers to your questions using website crawled information as the source. Includes a runnable demo. | SambaNova Cloud, SambaStudio | Intelligent Information Retrieval |
| Benchmarking | This kit evaluates the performance of multiple LLM models hosted in SambaStudio. It offers various performance metrics and configuration options. Users can also see these metrics within a chat interface. | SambaNova Cloud, SambaStudio | Advanced AI Capabilities |
| Code Copilot | This example guide shows a simple integration with the Continue extension for VSCode and JetBrains using SambaNova platforms, to use SambaNova's hosted models as your custom coding assistant. | SambaNova Cloud, SambaStudio | Integrations |
| Bundle jump start | This kit demonstrates how to call SambaNova Bundle models using the LangChain framework. The script offers different approaches for calling Bundle models, including using SambaStudio with a named expert, and using SambaStudio with routing. | SambaStudio | Advanced AI Capabilities |
| Financial Assistant | This app demonstrates the capabilities of LLMs in extracting and analyzing financial data using function calling, web scraping, and RAG. | SambaNova Cloud, SambaStudio | Advanced AI Capabilities |
| Function Calling | Example of a tool-calling implementation and a generic function calling module that can be used inside your application workflows. | SambaNova Cloud, SambaStudio | Advanced AI Capabilities |
| SambaNova Scribe | Example implementation of a transcription and summarization workflow. | SambaNova Cloud | Advanced AI Capabilities |
| SambaCloud - Google Integration | App Scripts intended for those with SambaCloud API keys to integrate LLMs into Google Workspaces. | SambaNova Cloud | Advanced AI Capabilities |

If this is your first time using the AI Starter Kits and you want to try out simple examples, go to the SambaNova Cloud Quickstart Guide. Follow the next steps for more detailed instructions or if you are a SambaStudio user.

Currently, there are two ways to obtain an API key from SambaNova. You can get a free API key using SambaNova Cloud. Alternatively, if you are a current SambaNova customer, you can deploy your models using SambaStudio.

For more information and to obtain your API key, visit the SambaNova Cloud webpage.

To integrate SambaNova Cloud LLMs with this AI starter kit, update the API information by configuring the environment variables in the ai-starter-kit/.env file:

- Create the `.env` file at `ai-starter-kit/.env` if the file does not exist.

- Enter the SambaNova Cloud API key in the `.env` file, for example:

      SAMBANOVA_API_KEY = "456789abcdef0123456789abcdef0123"

Begin by deploying your LLM of choice (e.g., Llama 3 8B) to an endpoint for inference in SambaStudio. Use either the GUI or CLI, as described in the SambaStudio endpoint documentation.

To integrate your LLM deployed on SambaStudio with this AI starter kit, update the API information by configuring the environment variables in the ai-starter-kit/.env file:

- Create the `.env` file at `ai-starter-kit/.env` if the file does not exist.

- Set your SambaStudio variables. For example, an endpoint with the URL `"https://api-stage.sambanova.net/api/predict/nlp/12345678-9abc-def0-1234-56789abcdef0/456789ab-cdef-0123-4567-89abcdef0123"` is entered in the `.env` file as:

      SAMBASTUDIO_URL="https://api-stage.sambanova.net/api/predict/nlp/12345678-9abc-def0-1234-56789abcdef0/456789ab-cdef-0123-4567-89abcdef0123"
      SAMBASTUDIO_API_KEY="89abcdef-0123-4567-89ab-cdef01234567"

Currently, you can run your embedding models on CPU or SambaStudio. Note that embedding models are not yet available through SambaNova Cloud, but they will be in future releases.

You can run the Hugging Face embedding models locally on CPU. In this case, no information is needed in the .env file.

Alternatively, you can use SambaStudio embedding model endpoints instead of the CPU-based Hugging Face embeddings to increase inference speed. Please follow this guide to deploy your SambaStudio embedding model.

To integrate your embedding model deployed on SambaStudio with this AI starter kit, update the API information by configuring the environment variables in the ai-starter-kit/.env file:

- Create the `.env` file at `ai-starter-kit/.env` if the file does not exist.

- Set your SambaStudio variables. For example, an endpoint with the URL `"https://api-stage.sambanova.net/api/predict/generic/12345678-9abc-def0-1234-56789abcdef0/456789ab-cdef-0123-4567-89abcdef0123"` is entered in the `.env` file as:

      SAMBASTUDIO_URL="https://api-stage.sambanova.net/api/predict/generic/12345678-9abc-def0-1234-56789abcdef0/456789ab-cdef-0123-4567-89abcdef0123"
      SAMBASTUDIO_API_KEY="89abcdef-0123-4567-89ab-cdef01234567"

Go to the README.md of the starter kit you want to use and follow the instructions. See Available AI Starter Kits.
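Before moving on to a specific kit, it can help to confirm that the `.env` file contains the variables described above. The following is a minimal sketch using only the Python standard library. The helper names (`parse_env_text`, `missing_vars`, `check_env_file`) are hypothetical and not part of the starter kits, and the parser handles only simple `KEY=VALUE` lines, not the full syntax accepted by python-dotenv.

```python
from pathlib import Path

# Variable names taken from the .env examples in this README.
CLOUD_VARS = ("SAMBANOVA_API_KEY",)
STUDIO_VARS = ("SAMBASTUDIO_URL", "SAMBASTUDIO_API_KEY")


def parse_env_text(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and one layer of quotes.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_vars(values, required):
    """Return the required names that are absent or empty."""
    return [name for name in required if not values.get(name)]


def check_env_file(path="ai-starter-kit/.env"):
    """Report missing variables per backend (hypothetical helper)."""
    env_file = Path(path)
    text = env_file.read_text() if env_file.exists() else ""
    values = parse_env_text(text)
    return {
        "cloud": missing_vars(values, CLOUD_VARS),
        "studio": missing_vars(values, STUDIO_VARS),
    }
```

Calling `check_env_file()` returns a dictionary with one list per backend. An empty list means every variable for that backend was found.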

Use SambaNova's LLMs and LangChain chat model wrappers

Set your environment as shown in "Integrate your model".

- Install the SambaStudio LangChain integration, import it in your project, and define your SambaStudio ChatModel:

      pip install langchain-sambanova

- If using a Bundle endpoint:

      from dotenv import load_dotenv
      from langchain_sambanova import ChatSambaStudio

      # Load SAMBASTUDIO_URL and SAMBASTUDIO_API_KEY from the .env file
      load_dotenv('.env')

      # A Bundle endpoint hosts several models, so the model name is required
      llm = ChatSambaStudio(
          max_tokens_to_generate=512,
          temperature=0.0,
          model="Meta-Llama-3-8B-Instruct"
      )

- If using a single model endpoint:

      from dotenv import load_dotenv
      from langchain_sambanova import ChatSambaStudio

      # Load SAMBASTUDIO_URL and SAMBASTUDIO_API_KEY from the .env file
      load_dotenv('.env')

      llm = ChatSambaStudio(
          max_tokens_to_generate=512,
          temperature=0.0
      )

- Use the model:

      llm.invoke("your prompt")

See utils/usage.ipynb for an example.

- Install our SambaNovaCloud LangChain integration:

      pip install langchain-sambanova

- Import it in your project and define your SambaNovaCloud ChatModel:

      from dotenv import load_dotenv
      from langchain_sambanova import ChatSambaNovaCloud

      # Load SAMBANOVA_API_KEY from the .env file
      load_dotenv('.env')

      llm = ChatSambaNovaCloud(model='Meta-Llama-3.3-70B-Instruct')

- Use the model:

      llm.invoke("your prompt")

See utils/usage.ipynb for an example.
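When a project needs to work with either backend, the choice between the two wrappers can be made from the environment variables that are set. The following is a sketch under stated assumptions: `pick_backend` and `build_chat_model` are hypothetical helpers, the rule that SambaNova Cloud takes precedence when both sets of variables are present is an arbitrary choice, and the constructor arguments are copied from the examples above. The `langchain_sambanova` imports are deferred so that the selection logic can be exercised without the package installed.

```python
import os


def pick_backend(env):
    """Return 'cloud', 'studio', or None based on which variables are set.

    Assumption: Cloud wins when both are configured.
    """
    if env.get("SAMBANOVA_API_KEY"):
        return "cloud"
    if env.get("SAMBASTUDIO_URL") and env.get("SAMBASTUDIO_API_KEY"):
        return "studio"
    return None


def build_chat_model(env=None):
    """Build a chat model for whichever backend is configured.

    Call load_dotenv('.env') beforehand, as in the examples above,
    so that os.environ contains the values from the .env file.
    """
    env = os.environ if env is None else env
    backend = pick_backend(env)
    if backend == "cloud":
        from langchain_sambanova import ChatSambaNovaCloud
        return ChatSambaNovaCloud(model="Meta-Llama-3.3-70B-Instruct")
    if backend == "studio":
        from langchain_sambanova import ChatSambaStudio
        return ChatSambaStudio(max_tokens_to_generate=512, temperature=0.0)
    raise RuntimeError("No SambaNova Cloud or SambaStudio variables found")
```

The returned object is used the same way in both cases, for example `build_chat_model().invoke("your prompt")`.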

- Install the SambaStudioEmbeddings LangChain integration, import it in your project, and define your SambaStudioEmbeddings embedding:

      pip install langchain-sambanova

- If using a Bundle endpoint:

      from dotenv import load_dotenv
      from langchain_sambanova import SambaStudioEmbeddings

      # Load SAMBASTUDIO_URL and SAMBASTUDIO_API_KEY from the .env file
      load_dotenv('.env')

      # A Bundle endpoint hosts several models, so select the expert by name
      embedding = SambaStudioEmbeddings(
          batch_size=1,
          model_kwargs={
              "select_expert": "e5-mistral-7b-instruct"
          }
      )

- If using a single embedding model endpoint:

      from dotenv import load_dotenv
      from langchain_sambanova import SambaStudioEmbeddings

      # Load SAMBASTUDIO_URL and SAMBASTUDIO_API_KEY from the .env file
      load_dotenv('.env')

      embedding = SambaStudioEmbeddings(batch_size=32)

Note that using different embedding models (CPU or SambaStudio) may change the results, as well as the way the models are configured and their parameters.

- Use your embedding model in your langchain pipeline

See utils/usage.ipynb for an example.
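As an illustration of what a pipeline does with the embedding model, the sketch below ranks documents against a query by cosine similarity. It relies only on the `embed_documents` and `embed_query` methods of the LangChain embeddings interface, so any embedding object exposing them should work. `rank_documents` and `ToyEmbeddings` are hypothetical names: the toy class is a stand-in that counts letters so the example runs without an endpoint, and it is not a real embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_documents(embedding, query, documents):
    """Return (score, document) pairs, most similar to the query first."""
    doc_vectors = embedding.embed_documents(documents)
    query_vector = embedding.embed_query(query)
    scored = [(cosine(query_vector, vec), doc)
              for vec, doc in zip(doc_vectors, documents)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


class ToyEmbeddings:
    """Hypothetical stand-in: embeds text as counts of 'a' and 'b'."""

    def embed_documents(self, texts):
        return [self.embed_query(text) for text in texts]

    def embed_query(self, text):
        return [float(text.count("a")), float(text.count("b"))]
```

In a real project, the `embedding` object defined above would be passed in place of `ToyEmbeddings()`. A vector store normally handles this ranking step, so the sketch is only meant to show the idea.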

- Before running the code, ensure that you have Node.js installed on your system. You can download the latest version from the official Node.js website.

- Set up the environment. Run the following commands in your terminal:

      npm init -y
      npm install @langchain/openai @langchain/core

  These commands will create a new package.json file and install the required dependencies.

- Create a new file named `app.js` and add the following code:

      import { ChatOpenAI } from "@langchain/openai";

      const SambaNovaCloudBaseURL = "https://api.sambanova.ai/v1";
      const apiKey = "your-api-key";

      const SambaNovaCloudChatModel = new ChatOpenAI({
        temperature: 0.9,
        model: "Meta-Llama-3.1-70B-Instruct",
        configuration: {
          baseURL: SambaNovaCloudBaseURL,
          apiKey: apiKey,
        },
      });

      const response = await SambaNovaCloudChatModel.invoke("Hi there, tell me a joke!");
      console.log(response.content);

- To run the app, execute the following command in your terminal:

      node app.js

Setting up your virtual environment

There are two approaches to setting up your virtual environment for the AI Starter Kits:

- Individual Kit Setup (Traditional Method)

- Base Environment Setup

Each starter kit (see table above) has its own README.md and requirements.txt file. You can set up a separate virtual environment for each kit by following the instructions in their respective directories. This method is suitable if you're only interested in running a single kit or prefer isolated environments for each project.

To use this method:

- Navigate to the specific kit's directory

- Create a virtual environment

- Install the requirements

- Follow the kit-specific instructions
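The individual kit steps above can be sketched with the standard library `venv` module. This is an illustration only: `kit_setup_commands` and `set_up_kit` are hypothetical helpers, the `.venv` directory name is an assumption, and each kit's own README remains the reference for its setup.

```python
import subprocess
import sys
from pathlib import Path


def kit_setup_commands(kit_dir, venv_name=".venv"):
    """Build the commands that create a venv and install a kit's requirements."""
    kit = Path(kit_dir)
    venv = kit / venv_name
    # Virtual environments keep executables in Scripts/ on Windows, bin/ elsewhere.
    scripts = "Scripts" if sys.platform == "win32" else "bin"
    venv_python = venv / scripts / "python"
    return [
        [sys.executable, "-m", "venv", str(venv)],
        [str(venv_python), "-m", "pip", "install", "-r",
         str(kit / "requirements.txt")],
    ]


def set_up_kit(kit_dir):
    """Run the setup commands in order, stopping at the first failure."""
    for command in kit_setup_commands(kit_dir):
        subprocess.run(command, check=True)
```

For example, `set_up_kit("function_calling")` would create `function_calling/.venv` and install that kit's requirements into it, assuming the script is run from the repository root.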

For users who plan to work with multiple kits or prefer a unified development environment, we recommend setting up a base environment. This approach uses a Makefile to automate the setup of a consistent Python environment that works across all kits.

Benefits of the base environment approach:

- Consistent Python version across all kits

- Centralized dependency management

- Simplified setup process

- Easier switching between different kits

Prerequisites:

- pyenv: The Makefile will attempt to install pyenv if it's not already installed.

- Docker: (Optional) If you want to use the Docker-based setup, ensure Docker is installed on your system.

The base environment setup does the following:

- Installs pyenv and Poetry if they are not already installed.

- Sets up a Python virtual environment using a specified Python version (default is 3.11.3).

- Installs all necessary dependencies for the base environment.

- Sets up the parsing service required by some kits.

- Installs system dependencies like Tesseract OCR and Poppler.

- Provides Docker-based setup options for consistent environments across different systems.

- Install and Set Up the Base Environment:

      make all

  This command will set up the base ai-starter-kit environment, including installing all necessary tools and dependencies.

- Activate the Base Environment:

      source .venv/bin/activate

- Navigate to Your Chosen Starter Kit:

      cd path/to/starter_kit

  Within the starter kit there will be instructions on how to start the kit. You can skip the virtual environment creation part in the kit's README.md, as it has already been done here.

For certain kits, we use a standard parsing service. By default, it is started automatically with the base environment. To work with this service in isolation, follow the steps in this section.

- Start Parsing Service:

      make start-parsing-service

- Stop Parsing Service:

      make stop-parsing-service

- Check Parsing Service Status:

      make parsing-status

- View Parsing Service Logs:

      make parsing-log

To use the Docker-based setup:

- Ensure Docker is installed on your system.

- Build the Docker image:

      make docker-build

- Run a specific kit in the Docker container:

      make docker-run-kit KIT=<kit_name>

  Replace <kit_name> with the name of the starter kit you want to run (e.g., function_calling).

- To open a shell in the Docker container:

      make docker-shell

To clean up all virtual environments created by the Makefile and stop the parsing service, run the following command:

    make clean

This command removes all virtual environments created with the Makefile, stops the parsing service, and cleans up any temporary files.

Troubleshooting

If you encounter issues while setting up or running the AI Starter Kit, here are some common problems and their solutions:

If you're having problems with Python versions:

- Ensure you have pyenv installed: `make ensure-pyenv`

- Install the required Python versions: `make install-python-versions`

- If issues persist, check your system's Python installation and PATH settings.
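To check which interpreter is active, a short script can compare the running Python version against the one the base environment expects. This is a minimal sketch: `python_matches` is a hypothetical helper, and the `(3, 11)` default is taken from the base environment's default version of 3.11.3 mentioned earlier. Individual kits may accept other versions.

```python
import sys


def python_matches(required=(3, 11), current=None):
    """Return True if the interpreter's leading version parts equal `required`."""
    current = sys.version_info if current is None else current
    return tuple(current[:len(required)]) == tuple(required)


def describe_python():
    """Summarize the active interpreter for a bug report or quick check."""
    version = ".".join(str(part) for part in sys.version_info[:3])
    return f"{sys.executable} (Python {version})"
```

Running `print(describe_python(), python_matches())` inside the activated environment shows the interpreter path and whether it is a 3.11 release.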

If you're experiencing dependency conflicts:

- Try cleaning your environment: `make clean`

- Update the lock file: `poetry lock --no-update`

- Reinstall dependencies: `make install`

If you encounter an error while installing pikepdf, such as:

    ERROR: Failed building wheel for pikepdf
    Failed to build pikepdf

This is likely due to a missing qpdf dependency. The Makefile should automatically install qpdf for you, but if you're still encountering issues:

- Ensure you have proper permissions to install system packages.

- If you're on macOS, you can manually install `qpdf` using Homebrew: `brew install qpdf`

- On Linux, you can install it using your package manager, e.g., on Ubuntu: `sudo apt-get update && sudo apt-get install -y qpdf`

- After installing `qpdf`, try running `make install` again.

If you continue to face issues, please ensure your system meets all the requirements for building pikepdf and consider checking the pikepdf documentation for more detailed installation instructions.

If the parsing service isn't starting or is behaving unexpectedly:

- Check its status: `make parsing-status`

- View its logs: `make parsing-log`

- Try stopping and restarting it: `make stop-parsing-service` followed by `make start-parsing-service`

If you encounter issues related to Tesseract OCR or Poppler:

- Ensure the Makefile has successfully installed these dependencies.

- On macOS, you can manually install them using Homebrew: `brew install tesseract poppler`

- On Linux (Ubuntu/Debian), you can install them manually: `sudo apt-get update && sudo apt-get install -y tesseract-ocr poppler-utils`

- On Windows, you may need to install these dependencies manually and ensure they are in your system PATH.
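A quick way to confirm that the system dependencies are visible on your PATH is to look up their executables from Python. The sketch below assumes the executable names `tesseract` (Tesseract OCR), `pdftoppm` (one of the tools shipped with Poppler), and `qpdf`. `missing_tools` is a hypothetical helper, and the kits themselves may look for different binaries.

```python
import shutil

# Assumed executable names for Tesseract OCR, Poppler, and qpdf.
REQUIRED_TOOLS = ("tesseract", "pdftoppm", "qpdf")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tool names that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]
```

An empty list from `missing_tools()` means all three executables were found. Any names returned point to the package that still needs to be installed or added to PATH.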

If you're using the Docker-based setup and encounter issues:

- Ensure Docker is properly installed and running on your system.

- Try rebuilding the Docker image: `make docker-build`

- Check Docker logs for any error messages.

- Ensure your firewall or antivirus is not blocking Docker operations.

General troubleshooting:

- Ensure all prerequisites (Python, pyenv, Poetry) are correctly installed.

- Try cleaning and rebuilding the environment: `make clean all`

- Check for any error messages in the console output and address them specifically.

- Ensure your `.env` file is correctly set up in the ai-starter-kit root with all necessary environment variables.

If you continue to experience issues, please open an issue with details about your environment, the full error message, and steps to reproduce the problem.

Important notes:

- Ensure you have sufficient permissions to install software on your system.

- The setup process may take several minutes, especially when installing Python versions or large dependencies.

- If you encounter any issues during setup, check the error messages and ensure your system meets all prerequisites.

- Always activate the base environment before navigating to and running a specific starter kit.

- Some kits may require additional setup steps. Always refer to the specific README of the kit you're using.

Note: These AI Starter Kit code samples are provided "as-is," and are not production-ready or supported code. Bugfix/support will be on a best-effort basis only. Code may use third-party open-source software. You are responsible for performing due diligence per your organization policies for use in your applications.

Alternative AI tools for ai-starter-kit

Similar Open Source Tools

LLM-Engineers-Handbook

The LLM Engineer's Handbook is an official repository containing a comprehensive guide on creating an end-to-end LLM-based system using best practices. It covers data collection & generation, LLM training pipeline, a simple RAG system, production-ready AWS deployment, comprehensive monitoring, and testing and evaluation framework. The repository includes detailed instructions on setting up local and cloud dependencies, project structure, installation steps, infrastructure setup, pipelines for data processing, training, and inference, as well as QA, tests, and running the project end-to-end.

SWELancer-Benchmark

SWE-Lancer is a benchmark repository containing datasets and code for the paper 'SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?'. It provides instructions for package management, building Docker images, configuring environment variables, and running evaluations. Users can use this tool to assess the performance of language models in real-world freelance software engineering tasks.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

dockershrink

Dockershrink is an AI-powered Commandline Tool designed to help reduce the size of Docker images. It combines traditional Rule-based analysis with Generative AI techniques to optimize Image configurations. The tool supports NodeJS applications and aims to save costs on storage, data transfer, and build times while increasing developer productivity. By automatically applying advanced optimization techniques, Dockershrink simplifies the process for engineers and organizations, resulting in significant savings and efficiency improvements.

OSWorld

OSWorld is a benchmarking tool designed to evaluate multimodal agents for open-ended tasks in real computer environments. It provides a platform for running experiments, setting up virtual machines, and interacting with the environment using Python scripts. Users can install the tool on their desktop or server, manage dependencies with Conda, and run benchmark tasks. The tool supports actions like executing commands, checking for specific results, and evaluating agent performance. OSWorld aims to facilitate research in AI by providing a standardized environment for testing and comparing different agent baselines.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

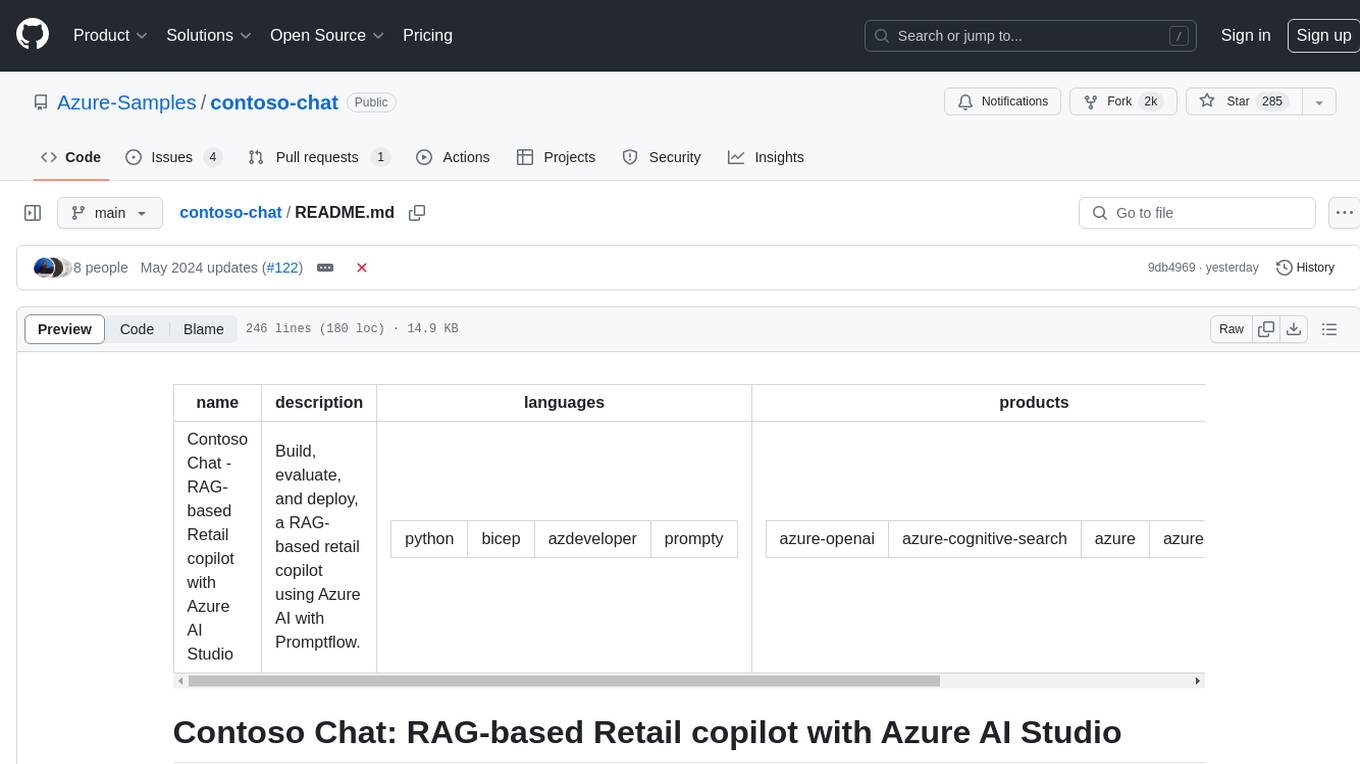

contoso-chat

Contoso Chat is a Python sample demonstrating how to build, evaluate, and deploy a retail copilot application with Azure AI Studio using Promptflow with Prompty assets. The sample implements a Retrieval Augmented Generation approach to answer customer queries based on the company's product catalog and customer purchase history. It utilizes Azure AI Search, Azure Cosmos DB, Azure OpenAI, text-embeddings-ada-002, and GPT models for vectorizing user queries, AI-assisted evaluation, and generating chat responses. By exploring this sample, users can learn to build a retail copilot application, define prompts using Prompty, design, run & evaluate a copilot using Promptflow, provision and deploy the solution to Azure using the Azure Developer CLI, and understand Responsible AI practices for evaluation and content safety.

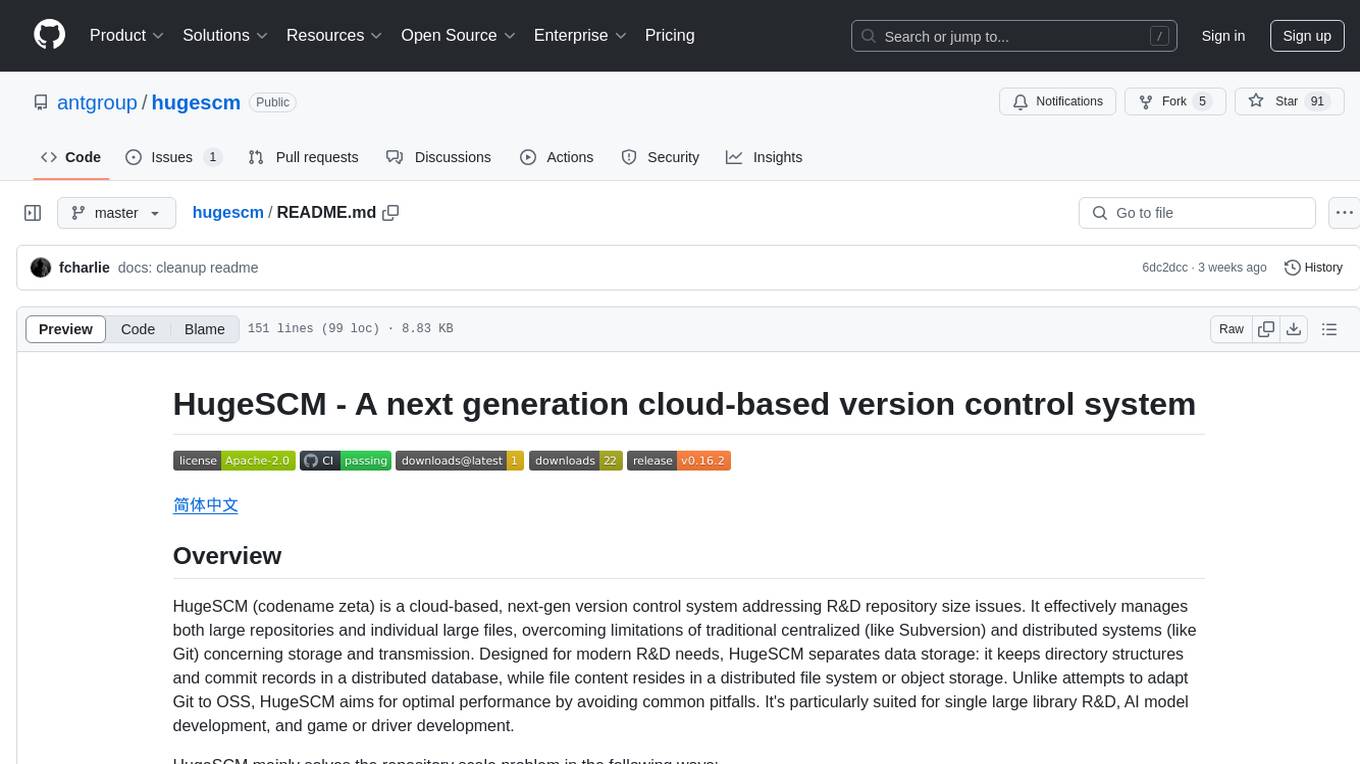

hugescm

HugeSCM is a cloud-based version control system designed to address R&D repository size issues. It effectively manages large repositories and individual large files by separating data storage and utilizing advanced algorithms and data structures. It aims for optimal performance in handling version control operations of large-scale repositories, making it suitable for single large library R&D, AI model development, and game or driver development.

starter-monorepo

Starter Monorepo is a template repository for setting up a monorepo structure in your project. It provides a basic setup with configurations for managing multiple packages within a single repository. This template includes tools for package management, versioning, testing, and deployment. By using this template, you can streamline your development process, improve code sharing, and simplify dependency management across your project. Whether you are working on a small project or a large-scale application, Starter Monorepo can help you organize your codebase efficiently and enhance collaboration among team members.

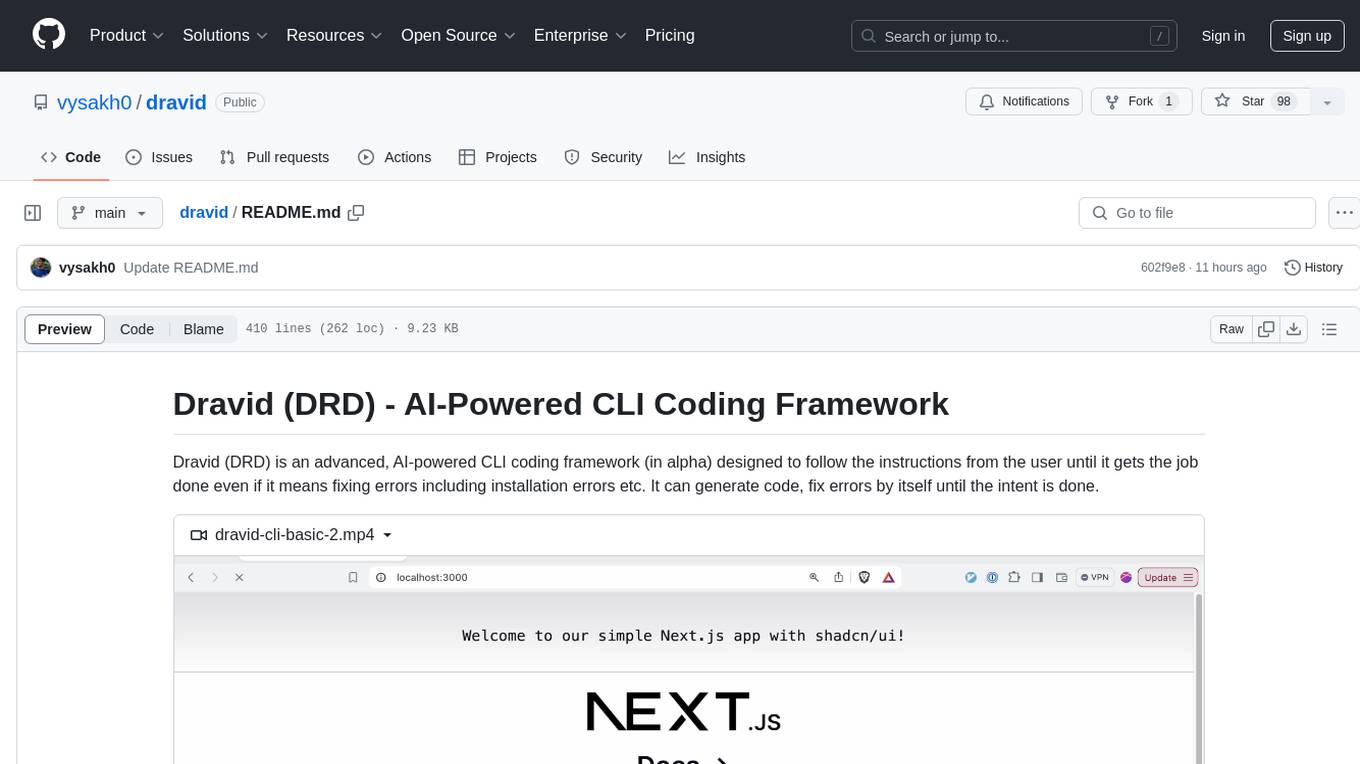

dravid

Dravid (DRD) is an advanced, AI-powered CLI coding framework designed to follow user instructions until the job is completed, including fixing errors. It can generate code, fix errors, handle image queries, manage file operations, integrate with external APIs, and provide a development server with error handling. Dravid is extensible and requires Python 3.7+ and CLAUDE_API_KEY. Users can interact with Dravid through CLI commands for various tasks like creating projects, asking questions, generating content, handling metadata, and file-specific queries. It supports use cases like Next.js project development, working with existing projects, exploring new languages, Ruby on Rails project development, and Python project development. Dravid's project structure includes directories for source code, CLI modules, API interaction, utility functions, AI prompt templates, metadata management, and tests. Contributions are welcome, and development setup involves cloning the repository, installing dependencies with Poetry, setting up environment variables, and using Dravid for project enhancements.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

cognita

Cognita is an open-source framework to organize your RAG codebase along with a frontend to play around with different RAG customizations. It provides a simple way to organize your codebase so that it becomes easy to test it locally while also being able to deploy it in a production-ready environment. The key issues that arise while productionizing a RAG system from a Jupyter Notebook are:

1. **Chunking and Embedding Job**: The chunking and embedding code usually needs to be abstracted out and deployed as a job. Sometimes the job will need to run on a schedule or be triggered via an event to keep the data updated.
2. **Query Service**: The code that generates the answer from the query needs to be wrapped up in an API server like FastAPI and should be deployed as a service. This service should be able to handle multiple queries at the same time and also autoscale with higher traffic.
3. **LLM / Embedding Model Deployment**: Often, if we are using open-source models, we load the model in the Jupyter notebook. This will need to be hosted as a separate service in production, and the model will need to be called as an API.
4. **Vector DB deployment**: Most testing happens on vector DBs in memory or on disk. However, in production, the DBs need to be deployed in a more scalable and reliable way.

Cognita makes it really easy to customize and experiment with everything about a RAG system and still be able to deploy it in a good way. It also ships with a UI that makes it easier to try out different RAG configurations and see the results in real time. You can use it locally, with or without any Truefoundry components. However, using Truefoundry components makes it easier to test different models and deploy the system in a scalable way. Cognita allows you to host multiple RAG systems using one app.

### Advantages of using Cognita are:

1. A central reusable repository of parsers, loaders, embedders and retrievers.
2. Ability for non-technical users to play with the UI: upload documents and perform QnA using modules built by the development team.
3. Fully API driven, which allows integration with other systems.

> If you use Cognita with Truefoundry AI Gateway, you can get logging, metrics and a feedback mechanism for your user queries.

### Features:

1. Support for multiple document retrievers that use `Similarity Search`, `Query Decomposition`, `Document Reranking`, etc.
2. Support for SOTA open-source embeddings and reranking from `mixedbread-ai`.
3. Support for using LLMs using `Ollama`.
4. Support for incremental indexing that ingests entire documents in batches (reduces compute burden), keeps track of already indexed documents and prevents re-indexing of those docs.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.
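To make the TopK and TopP sampling step mentioned above concrete, here is a minimal pure-Python sketch of how logits can be filtered and sampled for a single generation step. It does not use or reproduce the onnxruntime-genai API; the function names and parameters are hypothetical and chosen only for illustration.

```python
import math
import random
from typing import Dict, List, Optional


def softmax(logits: List[float]) -> List[float]:
    """Convert raw logits to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def filter_top_k_top_p(
    logits: List[float], top_k: int = 0, top_p: float = 1.0
) -> Dict[int, float]:
    """Return a renormalized distribution over the tokens that survive filtering.

    top_k: keep only the k most likely tokens (0 disables the limit)
    top_p: keep the smallest prefix of ranked tokens whose mass reaches top_p
    """
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]
    kept: List[int] = []
    cumulative = 0.0
    for token in ranked:
        kept.append(token)
        cumulative += probs[token]
        if cumulative >= top_p:
            break
    mass = sum(probs[t] for t in kept)
    return {t: probs[t] / mass for t in kept}


def sample_token(
    logits: List[float],
    top_k: int = 0,
    top_p: float = 1.0,
    rng: Optional[random.Random] = None,
) -> int:
    """Draw one token id from the filtered distribution."""
    if rng is None:
        rng = random.Random()
    dist = filter_top_k_top_p(logits, top_k, top_p)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]
```

With `top_k=1` the sampler always returns the most likely token, which matches greedy search. A full generation loop would call a function like `sample_token` once per iteration, append the result to the sequence, and feed it back to the model.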

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

* An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
* A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
* Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
* Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and WhatsApp, and you can share PDF, Markdown, org-mode, and Notion files, as well as GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

* **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
* **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
* **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in future iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.