genai-toolbox

MCP Toolbox for Databases is an open source MCP server for databases.

Stars: 13013

Gen AI Toolbox for Databases is an open source server that simplifies building Gen AI tools for interacting with databases. It handles complexities like connection pooling, authentication, and more, enabling easier, faster, and more secure tool development. The toolbox sits between the application's orchestration framework and the database, providing a control plane to modify, distribute, or invoke tools. It offers simplified development, better performance, enhanced security, and end-to-end observability. Users can install the toolbox as a binary, container image, or compile from source. Configuration is done through a 'tools.yaml' file, defining sources, tools, and toolsets. The project follows semantic versioning and welcomes contributions.

README:

[!NOTE] MCP Toolbox for Databases is currently in beta, and may see breaking changes until the first stable release (v1.0).

MCP Toolbox for Databases is an open source MCP server for databases. It lets you develop tools more easily, quickly, and securely by handling complexities such as connection pooling and authentication.

This README provides a brief overview. For comprehensive details, see the full documentation.

[!NOTE] This solution was originally named “Gen AI Toolbox for Databases” as its initial development predated MCP, but was renamed to align with recently added MCP compatibility.

Toolbox helps you build Gen AI tools that let your agents access data in your database. Toolbox provides:

- Simplified development: Integrate tools into your agent in less than 10 lines of code, reuse tools between multiple agents or frameworks, and deploy new versions of tools more easily.

- Better performance: Best practices such as connection pooling, authentication, and more.

- Enhanced security: Integrated auth for more secure access to your data.

- End-to-end observability: Out of the box metrics and tracing with built-in support for OpenTelemetry.

⚡ Supercharge Your Workflow with an AI Database Assistant ⚡

Stop context-switching and let your AI assistant become a true co-developer. By connecting your IDE to your databases with MCP Toolbox, you can delegate complex and time-consuming database tasks, allowing you to build faster and focus on what matters. This isn't just about code completion; it's about giving your AI the context it needs to handle the entire development lifecycle.

Here’s how it will save you time:

- Query in Plain English: Interact with your data using natural language right from your IDE. Ask complex questions like, "How many orders were delivered in 2024, and what items were in them?" without writing any SQL.

- Automate Database Management: Simply describe your data needs, and let the AI assistant manage your database for you. It can handle generating queries, creating tables, adding indexes, and more.

- Generate Context-Aware Code: Empower your AI assistant to generate application code and tests with a deep understanding of your real-time database schema. This accelerates the development cycle by ensuring the generated code is directly usable.

- Slash Development Overhead: Radically reduce the time spent on manual setup and boilerplate. MCP Toolbox helps streamline lengthy database configurations, repetitive code, and error-prone schema migrations.

Learn how to connect your AI tools (IDEs) to Toolbox using MCP.

Toolbox sits between your application's orchestration framework and your database, providing a control plane that is used to modify, distribute, or invoke tools. It simplifies the management of your tools by providing you with a centralized location to store and update tools, allowing you to share tools between agents and applications and update those tools without necessarily redeploying your application.

You can run Toolbox directly with a configuration file:

npx @toolbox-sdk/server --tools-file tools.yaml

This runs the latest version of the Toolbox server with your configuration file.

[!NOTE] Use this method only for non-production use cases such as experimentation. For production use cases, consider Installing the server and then running it.

For the latest version, check the releases page and use the following instructions for your OS and CPU architecture.

Binary

To install Toolbox as a binary:

Linux (AMD64)

To install Toolbox as a binary on Linux (AMD64):

# see releases page for other versions
export VERSION=0.27.0
curl -L -o toolbox https://storage.googleapis.com/genai-toolbox/v$VERSION/linux/amd64/toolbox
chmod +x toolbox

macOS (Apple Silicon)

To install Toolbox as a binary on macOS (Apple Silicon):

# see releases page for other versions
export VERSION=0.27.0
curl -L -o toolbox https://storage.googleapis.com/genai-toolbox/v$VERSION/darwin/arm64/toolbox
chmod +x toolbox

macOS (Intel)

To install Toolbox as a binary on macOS (Intel):

# see releases page for other versions
export VERSION=0.27.0
curl -L -o toolbox https://storage.googleapis.com/genai-toolbox/v$VERSION/darwin/amd64/toolbox
chmod +x toolbox

Windows (Command Prompt)

To install Toolbox as a binary on Windows (Command Prompt):

:: see releases page for other versions
set VERSION=0.27.0
curl -o toolbox.exe "https://storage.googleapis.com/genai-toolbox/v%VERSION%/windows/amd64/toolbox.exe"

Windows (PowerShell)

To install Toolbox as a binary on Windows (PowerShell):

# see releases page for other versions
$VERSION = "0.27.0"
curl.exe -o toolbox.exe "https://storage.googleapis.com/genai-toolbox/v$VERSION/windows/amd64/toolbox.exe"
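The download commands above all follow one URL pattern: version, operating system, CPU architecture, then the binary name. The sketch below makes that pattern explicit. The helper name `binary_url` and the lookup table are illustrative assumptions, not part of Toolbox, and the table only covers the platform pairs shown in the commands above.

```python
# Hypothetical helper: rebuilds the download URL pattern used in the
# install commands above. Not part of Toolbox itself.
BASE_URL = "https://storage.googleapis.com/genai-toolbox"

# (os, arch) pairs that appear in the commands above, mapped to the file name.
DOCUMENTED_BINARIES = {
    ("linux", "amd64"): "toolbox",
    ("darwin", "arm64"): "toolbox",
    ("darwin", "amd64"): "toolbox",
    ("windows", "amd64"): "toolbox.exe",
}


def binary_url(version: str, os_name: str, arch: str) -> str:
    """Return the release URL for one documented OS/architecture pair."""
    key = (os_name, arch)
    if key not in DOCUMENTED_BINARIES:
        raise ValueError(f"no documented binary for {os_name}/{arch}")
    return f"{BASE_URL}/v{version}/{os_name}/{arch}/{DOCUMENTED_BINARIES[key]}"


if __name__ == "__main__":
    print(binary_url("0.27.0", "linux", "amd64"))
```

Check the releases page before relying on a version number; the value passed here is only the one used in the commands above.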

Container image

You can also install Toolbox as a container:

# see releases page for other versions
export VERSION=0.27.0
docker pull us-central1-docker.pkg.dev/database-toolbox/toolbox/toolbox:$VERSION

Homebrew

To install Toolbox using Homebrew on macOS or Linux:

brew install mcp-toolbox

Compile from source

To install from source, ensure you have the latest version of Go installed, and then run the following command:

go install github.com/googleapis/[email protected]

Gemini CLI Extensions

To install Gemini CLI Extensions for MCP Toolbox, run the following command:

gemini extensions install https://github.com/gemini-cli-extensions/mcp-toolbox

Configure a tools.yaml to define your tools, and then execute toolbox to start the server:

Binary

To run Toolbox from binary:

./toolbox --tools-file "tools.yaml"

[!NOTE] Toolbox enables dynamic reloading by default. To disable it, use the --disable-reload flag.

Container image

To run the server after pulling the container image:

export VERSION=0.24.0 # Use the version you pulled
docker run -p 5000:5000 \
  -v $(pwd)/tools.yaml:/app/tools.yaml \
  us-central1-docker.pkg.dev/database-toolbox/toolbox/toolbox:$VERSION \
  --tools-file "/app/tools.yaml"

[!NOTE] The -v flag mounts your local tools.yaml into the container, and -p maps the container's port 5000 to your host's port 5000.

Source

To run the server directly from source, navigate to the project root directory and run:

go run .

[!NOTE] This command runs the project from source and is better suited to development and testing. It does not compile a binary into your $GOPATH. If you want to compile a binary instead, refer to the Developer Documentation.

Homebrew

If you installed Toolbox using Homebrew, the toolbox binary is available in your system path. You can start the server with the same command:

toolbox --tools-file "tools.yaml"

NPM

To run Toolbox directly without manually downloading the binary (requires Node.js):

npx @toolbox-sdk/server --tools-file tools.yaml

Gemini CLI

Interact with your custom tools using natural language. Check gemini-cli-extensions/mcp-toolbox for more information.

You can use toolbox help for a full list of flags! To stop the server, send a terminate signal (ctrl+c on most platforms).

For more detailed documentation on deploying to different environments, check out the resources in the How-to section.

Once your server is up and running, you can load the tools into your application. See below the list of Client SDKs for using various frameworks:

Python (Github)

Core

Install Toolbox Core SDK:

pip install toolbox-core

Load tools:

from toolbox_core import ToolboxClient

# update the url to point to your server
async with ToolboxClient("http://127.0.0.1:5000") as client:

    # these tools can be passed to your application!
    tools = await client.load_toolset("toolset_name")

For more detailed instructions on using the Toolbox Core SDK, see the project's README.

LangChain / LangGraph

Install Toolbox LangChain SDK:

pip install toolbox-langchain

Load tools:

from toolbox_langchain import ToolboxClient

# update the url to point to your server
async with ToolboxClient("http://127.0.0.1:5000") as client:

    # these tools can be passed to your application!
    tools = client.load_toolset()

For more detailed instructions on using the Toolbox LangChain SDK, see the project's README.

LlamaIndex

Install Toolbox LlamaIndex SDK:

pip install toolbox-llamaindex

Load tools:

from toolbox_llamaindex import ToolboxClient

# update the url to point to your server
async with ToolboxClient("http://127.0.0.1:5000") as client:

    # these tools can be passed to your application!
    tools = client.load_toolset()

For more detailed instructions on using the Toolbox LlamaIndex SDK, see the project's README.

Javascript/Typescript (Github)

Core

Install Toolbox Core SDK:

npm install @toolbox-sdk/core

Load tools:

import { ToolboxClient } from '@toolbox-sdk/core';

// update the url to point to your server
const URL = 'http://127.0.0.1:5000';
let client = new ToolboxClient(URL);

// these tools can be passed to your application!
const tools = await client.loadToolset('toolsetName');

For more detailed instructions on using the Toolbox Core SDK, see the project's README.

LangChain / LangGraph

Install Toolbox Core SDK:

npm install @toolbox-sdk/core

Load tools:

import { ToolboxClient } from '@toolbox-sdk/core';
// `tool` is the LangChain helper that wraps a function as a tool
import { tool } from '@langchain/core/tools';

// update the url to point to your server
const URL = 'http://127.0.0.1:5000';
let client = new ToolboxClient(URL);

// these tools can be passed to your application!
const toolboxTools = await client.loadToolset('toolsetName');

// Define the basics of the tool: name, description, schema and core logic
const getTool = (toolboxTool) => tool(toolboxTool, {
    name: toolboxTool.getName(),
    description: toolboxTool.getDescription(),
    schema: toolboxTool.getParamSchema()
});

// Use these tools in your LangChain/LangGraph applications
const tools = toolboxTools.map(getTool);

Genkit

Install Toolbox Core SDK:

npm install @toolbox-sdk/core

Load tools:

import { ToolboxClient } from '@toolbox-sdk/core';
import { genkit } from 'genkit';
// googleAI plugin import, assumed to come from the @genkit-ai/googleai package
import { googleAI } from '@genkit-ai/googleai';

// Initialise genkit
const ai = genkit({
    plugins: [
        googleAI({
            apiKey: process.env.GEMINI_API_KEY || process.env.GOOGLE_API_KEY
        })
    ],
    model: googleAI.model('gemini-2.0-flash'),
});

// update the url to point to your server
const URL = 'http://127.0.0.1:5000';
let client = new ToolboxClient(URL);

// these tools can be passed to your application!
const toolboxTools = await client.loadToolset('toolsetName');

// Define the basics of the tool: name, description, schema and core logic
const getTool = (toolboxTool) => ai.defineTool({
    name: toolboxTool.getName(),
    description: toolboxTool.getDescription(),
    schema: toolboxTool.getParamSchema()
}, toolboxTool)

// Use these tools in your Genkit applications
const tools = toolboxTools.map(getTool);

ADK

Install Toolbox ADK SDK:

npm install @toolbox-sdk/adk

Load tools:

import { ToolboxClient } from '@toolbox-sdk/adk';

// update the url to point to your server
const URL = 'http://127.0.0.1:5000';
let client = new ToolboxClient(URL);

// these tools can be passed to your application!
const tools = await client.loadToolset('toolsetName');

For more detailed instructions on using the Toolbox ADK SDK, see the project's README.

Go (Github)

Core

Install Toolbox Go SDK:

go get github.com/googleapis/mcp-toolbox-sdk-go

Load tools:

package main

import (
    "context"

    "github.com/googleapis/mcp-toolbox-sdk-go/core"
)

func main() {
    // Make sure to add the error checks
    // update the url to point to your server
    URL := "http://127.0.0.1:5000"
    ctx := context.Background()

    client, err := core.NewToolboxClient(URL)

    // Framework agnostic tools
    tools, err := client.LoadToolset("toolsetName", ctx)
}

For more detailed instructions on using the Toolbox Go SDK, see the project's README.

LangChain Go

Install Toolbox Go SDK:

go get github.com/googleapis/mcp-toolbox-sdk-go

Load tools:

package main

import (
    "context"
    "encoding/json"

    "github.com/googleapis/mcp-toolbox-sdk-go/core"
    "github.com/tmc/langchaingo/llms"
)

func main() {
    // Make sure to add the error checks
    // update the url to point to your server
    URL := "http://127.0.0.1:5000"
    ctx := context.Background()

    client, err := core.NewToolboxClient(URL)

    // Framework agnostic tool
    tool, err := client.LoadTool("toolName", ctx)

    // Fetch the tool's input schema
    inputschema, err := tool.InputSchema()

    var paramsSchema map[string]any
    _ = json.Unmarshal(inputschema, &paramsSchema)

    // Use this tool with LangChainGo
    langChainTool := llms.Tool{
        Type: "function",
        Function: &llms.FunctionDefinition{
            Name:        tool.Name(),
            Description: tool.Description(),
            Parameters:  paramsSchema,
        },
    }
}

Genkit

Install Toolbox Go SDK:

go get github.com/googleapis/mcp-toolbox-sdk-go

Load tools:

package main

import (
    "context"
    "log"

    "github.com/firebase/genkit/go/genkit"
    "github.com/googleapis/mcp-toolbox-sdk-go/core"
    "github.com/googleapis/mcp-toolbox-sdk-go/tbgenkit"
)

func main() {
    // Make sure to add the error checks
    // Update the url to point to your server
    URL := "http://127.0.0.1:5000"
    ctx := context.Background()
    g := genkit.Init(ctx)

    client, err := core.NewToolboxClient(URL)

    // Framework agnostic tool
    tool, err := client.LoadTool("toolName", ctx)

    // Convert the tool using the tbgenkit package
    // Use this tool with Genkit Go
    genkitTool, err := tbgenkit.ToGenkitTool(tool, g)
    if err != nil {
        log.Fatalf("Failed to convert tool: %v\n", err)
    }
    log.Printf("Successfully converted tool: %s", genkitTool.Name())
}

Go GenAI

Install Toolbox Go SDK:

go get github.com/googleapis/mcp-toolbox-sdk-go

Load tools:

package main

import (
    "context"
    "encoding/json"

    "github.com/googleapis/mcp-toolbox-sdk-go/core"
    "google.golang.org/genai"
)

func main() {
    // Make sure to add the error checks
    // Update the url to point to your server
    URL := "http://127.0.0.1:5000"
    ctx := context.Background()

    client, err := core.NewToolboxClient(URL)

    // Framework agnostic tool
    tool, err := client.LoadTool("toolName", ctx)

    // Fetch the tool's input schema
    inputschema, err := tool.InputSchema()

    var schema *genai.Schema
    _ = json.Unmarshal(inputschema, &schema)

    funcDeclaration := &genai.FunctionDeclaration{
        Name:        tool.Name(),
        Description: tool.Description(),
        Parameters:  schema,
    }

    // Use this tool with Go GenAI
    genAITool := &genai.Tool{
        FunctionDeclarations: []*genai.FunctionDeclaration{funcDeclaration},
    }
}

OpenAI Go

Install Toolbox Go SDK:

go get github.com/googleapis/mcp-toolbox-sdk-go

Load tools:

package main

import (
    "context"
    "encoding/json"

    "github.com/googleapis/mcp-toolbox-sdk-go/core"
    openai "github.com/openai/openai-go"
)

func main() {
    // Make sure to add the error checks
    // Update the url to point to your server
    URL := "http://127.0.0.1:5000"
    ctx := context.Background()

    client, err := core.NewToolboxClient(URL)

    // Framework agnostic tool
    tool, err := client.LoadTool("toolName", ctx)

    // Fetch the tool's input schema
    inputschema, err := tool.InputSchema()

    var paramsSchema openai.FunctionParameters
    _ = json.Unmarshal(inputschema, &paramsSchema)

    // Use this tool with OpenAI Go
    openAITool := openai.ChatCompletionToolParam{
        Function: openai.FunctionDefinitionParam{
            Name:        tool.Name(),
            Description: openai.String(tool.Description()),
            Parameters:  paramsSchema,
        },
    }
}

ADK Go

Install Toolbox Go SDK:

go get github.com/googleapis/mcp-toolbox-sdk-go

Load tools:

package main

import (
    "context"
    "log"

    "github.com/googleapis/mcp-toolbox-sdk-go/tbadk"
)

func main() {
    // Update the url to point to your server
    URL := "http://127.0.0.1:5000"
    ctx := context.Background()

    client, err := tbadk.NewToolboxClient(URL)
    if err != nil {
        // main has no return value, so log the error and exit instead
        log.Fatalln("Could not start Toolbox Client", err)
    }

    // Use this tool with ADK Go
    tool, err := client.LoadTool("toolName", ctx)
    if err != nil {
        log.Fatalln("Could not load Toolbox Tool", err)
    }
    _ = tool // pass the tool to your ADK Go agent here
}

For more detailed instructions on using the Toolbox Go SDK, see the project's README.

Gemini CLI extensions provide tools to interact directly with your data sources from the command line. Below is a list of Gemini CLI extensions built on top of Toolbox. They let you interact with your data sources in natural language through pre-defined or custom tools. Follow each link for detailed usage instructions.

To use custom tools with Gemini CLI:

To use prebuilt tools with Gemini CLI:

- AlloyDB for PostgreSQL

- AlloyDB for PostgreSQL Observability

- BigQuery Data Analytics

- BigQuery Conversational Analytics

- Cloud SQL for MySQL

- Cloud SQL for MySQL Observability

- Cloud SQL for PostgreSQL

- Cloud SQL for PostgreSQL Observability

- Cloud SQL for SQL Server

- Cloud SQL for SQL Server Observability

- Looker

- Dataplex

- MySQL

- PostgreSQL

- Spanner

- Firestore

- SQL Server

The primary way to configure Toolbox is through the tools.yaml file. If you have multiple files, you can tell Toolbox which one to load with the --tools-file tools.yaml flag.

You can find more detailed reference documentation for all resource types in the Resources.

The sources section of your tools.yaml defines what data sources your Toolbox should have access to. Most tools will have at least one source to execute against.

kind: sources
name: my-pg-source
type: postgres
host: 127.0.0.1
port: 5432
database: toolbox_db
user: toolbox_user
password: my-password

For more details on configuring different types of sources, see the Sources.
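To make the role of each source field concrete, the sketch below maps the example above onto a standard PostgreSQL connection URI. This is only an illustration of what the fields mean. It is not how Toolbox connects internally, and the function name `postgres_uri` is hypothetical.

```python
from urllib.parse import quote

# The source definition from the example above, written as a plain dict.
source = {
    "kind": "sources",
    "name": "my-pg-source",
    "type": "postgres",
    "host": "127.0.0.1",
    "port": 5432,
    "database": "toolbox_db",
    "user": "toolbox_user",
    "password": "my-password",
}


def postgres_uri(src: dict) -> str:
    """Build a postgresql:// URI from the fields of a postgres source."""
    if src.get("type") != "postgres":
        raise ValueError("this sketch only handles postgres sources")
    # Percent-encode credentials so characters such as '@' or ':' stay valid.
    user = quote(str(src["user"]), safe="")
    password = quote(str(src["password"]), safe="")
    return f"postgresql://{user}:{password}@{src['host']}:{src['port']}/{src['database']}"


if __name__ == "__main__":
    print(postgres_uri(source))
```

In a real deployment you would avoid hard-coding the password in the file you commit; the literal value here simply mirrors the example.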

The tools section of a tools.yaml defines the actions an agent can take: what type of tool it is, which source(s) it affects, what parameters it uses, etc.

kind: tools
name: search-hotels-by-name
type: postgres-sql
source: my-pg-source
description: Search for hotels based on name.
parameters:
  - name: name
    type: string
    description: The name of the hotel.
statement: SELECT * FROM hotels WHERE name ILIKE '%' || $1 || '%';

For more details on configuring different types of tools, see the Tools.
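The statement above uses the positional placeholder `$1` rather than splicing the hotel name into the SQL text. The sketch below shows one way to think about that binding, assuming the declared parameters map to `$1`, `$2`, and so on in declaration order. The helper `bind_arguments` and the type table are hypothetical and only cover a few assumed type names.

```python
# The tool definition from the example above, reduced to the relevant fields.
tool = {
    "name": "search-hotels-by-name",
    "parameters": [{"name": "name", "type": "string"}],
    "statement": "SELECT * FROM hotels WHERE name ILIKE '%' || $1 || '%';",
}

# Assumed mapping from declared parameter types to Python types.
PYTHON_TYPES = {"string": str, "integer": int, "float": float, "boolean": bool}


def bind_arguments(tool_def: dict, arguments: dict) -> list:
    """Return argument values ordered to match $1, $2, ... in the statement."""
    values = []
    for param in tool_def["parameters"]:
        name = param["name"]
        if name not in arguments:
            raise ValueError(f"missing parameter: {name}")
        value = arguments[name]
        expected = PYTHON_TYPES[param["type"]]
        if not isinstance(value, expected):
            raise TypeError(f"parameter {name} must be of type {param['type']}")
        values.append(value)
    return values


if __name__ == "__main__":
    # The values travel separately from the SQL text, so a name containing
    # quotes cannot change the structure of the query.
    print(tool["statement"], bind_arguments(tool, {"name": "O'Hara Inn"}))
```

Keeping values out of the SQL string is the usual reason to prefer placeholders over string formatting in tool statements.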

The toolsets section of your tools.yaml allows you to define groups of tools that you want to be able to load together. This can be useful for defining different groups based on agent or application.
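As a rough mental model, a toolset is a named list of tool names, and loading a toolset selects that subset. The sketch below models this with plain Python data, assuming that omitting the name selects every tool. All tool and toolset names here are hypothetical, and `resolve_toolset` is not a Toolbox API.

```python
# Hypothetical tools and groupings, one group per kind of agent.
ALL_TOOLS = ["search_hotels", "book_hotel", "cancel_booking"]
TOOLSETS = {
    "search_agent": ["search_hotels"],
    "booking_agent": ["search_hotels", "book_hotel", "cancel_booking"],
}


def resolve_toolset(name=None):
    """Return the tool names in a toolset, or every tool when no name is given."""
    if name is None:
        return list(ALL_TOOLS)
    if name not in TOOLSETS:
        raise KeyError(f"unknown toolset: {name}")
    return list(TOOLSETS[name])


if __name__ == "__main__":
    print(resolve_toolset("search_agent"))
    print(resolve_toolset())
```

The same tool can appear in more than one group, which is what lets different agents share it.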

toolsets:
  my_first_toolset:
    - my_first_tool
    - my_second_tool
  my_second_toolset:
    - my_second_tool
    - my_third_tool

You can load toolsets by name:

# This will load all tools
all_tools = client.load_toolset()

# This will only load the tools listed in 'my_second_toolset'
my_second_toolset = client.load_toolset("my_second_toolset")

The prompts section of a tools.yaml defines prompts that can be used for interactions with LLMs.

prompts:
  code_review:
    description: "Asks the LLM to analyze code quality and suggest improvements."
    messages:
      - content: "Please review the following code for quality, correctness, and potential improvements: \n\n{{.code}}"
    arguments:
      - name: "code"
        description: "The code to review"

For more details on configuring prompts, see the Prompts.
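The `{{.code}}` placeholder in the message is filled from the prompt's declared arguments. The sketch below is a simplified stand-in that handles only plain `{{.name}}` placeholders. It assumes that syntax follows Go's template notation, and the `render` function is hypothetical rather than anything Toolbox exposes.

```python
import re

# Matches simple placeholders such as {{.code}} or {{ .code }}.
PLACEHOLDER = re.compile(r"\{\{\s*\.(\w+)\s*\}\}")


def render(template: str, arguments: dict) -> str:
    """Replace each {{.name}} placeholder with the matching argument value."""

    def substitute(match):
        name = match.group(1)
        if name not in arguments:
            raise KeyError(f"missing prompt argument: {name}")
        return str(arguments[name])

    # A function replacement avoids backslash handling in the inserted text.
    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    message = (
        "Please review the following code for quality, correctness, "
        "and potential improvements: \n\n{{.code}}"
    )
    print(render(message, {"code": "def add(a, b): return a + b"}))
```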

This project uses semantic versioning (MAJOR.MINOR.PATCH). Since the project is in a pre-release stage (version 0.x.y), we follow the standard conventions for initial development:

While the major version is 0, the public API should be considered unstable. The version will be incremented as follows:

- 0.MINOR.PATCH: The MINOR version is incremented when we add new functionality or make breaking, incompatible API changes.

- 0.MINOR.PATCH: The PATCH version is incremented for backward-compatible bug fixes.

Once the project reaches a stable 1.0.0 release, the version number MAJOR.MINOR.PATCH will follow the more common convention:

- MAJOR: Incremented for incompatible API changes.

- MINOR: Incremented for new, backward-compatible functionality.

- PATCH: Incremented for backward-compatible bug fixes.

The public API that this applies to is the CLI associated with Toolbox, the interactions with official SDKs, and the definitions in the tools.yaml file.
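The two sets of rules above can be summarized as a small function. This is a sketch of the stated policy, not a tool the project ships. The name `next_version` and the change labels "breaking", "feature", and "fix" are illustrative.

```python
def next_version(current: str, change: str) -> str:
    """Return the next version for a change, following the rules above."""
    if change not in {"breaking", "feature", "fix"}:
        raise ValueError(f"unknown change type: {change}")
    major, minor, patch = (int(part) for part in current.split("."))

    if change == "fix":
        # Bug fixes bump PATCH both before and after 1.0.0.
        return f"{major}.{minor}.{patch + 1}"
    if major == 0 or change == "feature":
        # Before 1.0.0, breaking changes and new features both bump MINOR.
        return f"{major}.{minor + 1}.0"
    # From 1.0.0 onward, a breaking change bumps MAJOR.
    return f"{major + 1}.0.0"


if __name__ == "__main__":
    for version, change in [("0.27.0", "breaking"), ("1.2.3", "breaking")]:
        print(version, change, "->", next_version(version, change))
```

The practical consequence for a 0.x release is that a MINOR bump may contain breaking changes, so pinning to an exact version is the safer default until 1.0.0.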

Contributions are welcome. Please see CONTRIBUTING to get started.

Please note that this project is released with a Contributor Code of Conduct. By participating in this project you agree to abide by its terms. See Contributor Code of Conduct for more information.

Join our Discord community to connect with our developers!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for genai-toolbox

Similar Open Source Tools

genai-toolbox

Gen AI Toolbox for Databases is an open source server that simplifies building Gen AI tools for interacting with databases. It handles complexities like connection pooling, authentication, and more, enabling easier, faster, and more secure tool development. The toolbox sits between the application's orchestration framework and the database, providing a control plane to modify, distribute, or invoke tools. It offers simplified development, better performance, enhanced security, and end-to-end observability. Users can install the toolbox as a binary, container image, or compile from source. Configuration is done through a 'tools.yaml' file, defining sources, tools, and toolsets. The project follows semantic versioning and welcomes contributions.

mcp-victoriametrics

The VictoriaMetrics MCP Server is an implementation of Model Context Protocol (MCP) server for VictoriaMetrics. It provides access to your VictoriaMetrics instance and seamless integration with VictoriaMetrics APIs and documentation. The server allows you to use almost all read-only APIs of VictoriaMetrics, enabling monitoring, observability, and debugging tasks related to your VictoriaMetrics instances. It also contains embedded up-to-date documentation and tools for exploring metrics, labels, alerts, and more. The server can be used for advanced automation and interaction capabilities for engineers and tools.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

pentagi

PentAGI is an innovative tool for automated security testing that leverages cutting-edge artificial intelligence technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful and flexible solution for conducting penetration tests. The tool provides secure and isolated operations in a sandboxed Docker environment, fully autonomous AI-powered agent for penetration testing steps, a suite of 20+ professional security tools, smart memory system for storing research results, web intelligence for gathering information, integration with external search systems, team delegation system, comprehensive monitoring and reporting, modern interface, API integration, persistent storage, scalable architecture, self-hosted solution, flexible authentication, and quick deployment through Docker Compose.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

MCPSharp

MCPSharp is a .NET library that helps build Model Context Protocol (MCP) servers and clients for AI assistants and models. It allows creating MCP-compliant tools, connecting to existing MCP servers, exposing .NET methods as MCP endpoints, and handling MCP protocol details seamlessly. With features like attribute-based API, JSON-RPC support, parameter validation, and type conversion, MCPSharp simplifies the development of AI capabilities in applications through standardized interfaces.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

For similar tasks

llm-functions

LLM Functions is a project that enables the enhancement of large language models (LLMs) with custom tools and agents developed in bash, javascript, and python. Users can create tools for their LLM to execute system commands, access web APIs, or perform other complex tasks triggered by natural language prompts. The project provides a framework for building tools and agents, with tools being functions written in the user's preferred language and automatically generating JSON declarations based on comments. Agents combine prompts, function callings, and knowledge (RAG) to create conversational AI agents. The project is designed to be user-friendly and allows users to easily extend the capabilities of their language models.

llm_agents

LLM Agents is a small library designed to build agents controlled by large language models. It aims to provide a better understanding of how such agents work in a concise manner. The library allows agents to be instructed by prompts, use custom-built components as tools, and run in a loop of Thought, Action, Observation. The agents leverage language models to generate Thought and Action, while tools like Python REPL, Google search, and Hacker News search provide Observations. The library requires setting up environment variables for OpenAI API and SERPAPI API keys. Users can create their own agents by importing the library and defining tools accordingly.

genai-toolbox

Gen AI Toolbox for Databases is an open source server that simplifies building Gen AI tools for interacting with databases. It handles complexities like connection pooling, authentication, and more, enabling easier, faster, and more secure tool development. The toolbox sits between the application's orchestration framework and the database, providing a control plane to modify, distribute, or invoke tools. It offers simplified development, better performance, enhanced security, and end-to-end observability. Users can install the toolbox as a binary, container image, or compile from source. Configuration is done through a 'tools.yaml' file, defining sources, tools, and toolsets. The project follows semantic versioning and welcomes contributions.

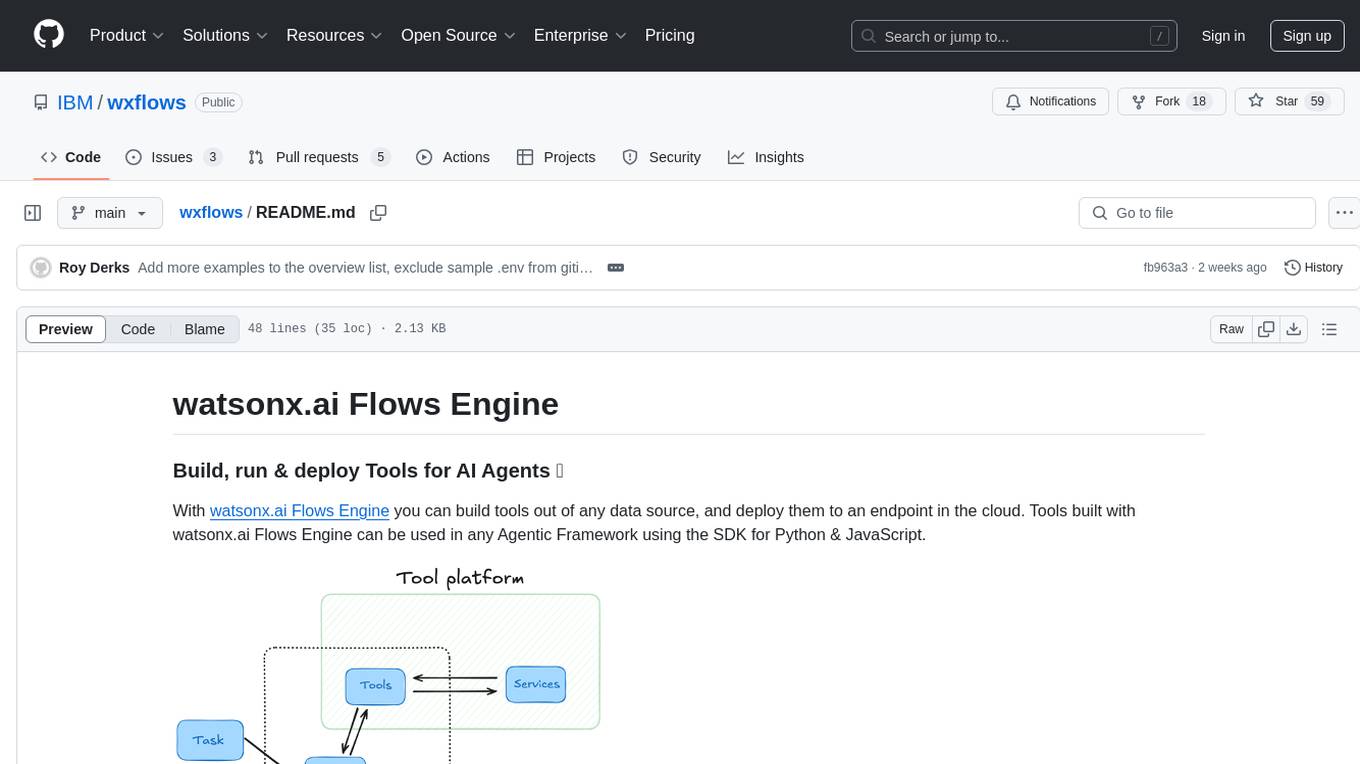

wxflows

watsonx.ai Flows Engine is a tool for building, running, and deploying AI agents. It allows users to create tools from various data sources and deploy them to the cloud. Tools built with watsonx.ai Flows Engine can be integrated into any agentic framework using the SDK for Python and JavaScript. The platform offers a range of prebuilt tools, including exchange, wikipedia, google_books, math, and weather. Users can also build their own tools and use integrations such as LangGraph, LangChain, watsonx.ai, and OpenAI. Example applications built with watsonx.ai Flows Engine include an end-to-end Agent Chat App, a Text-to-SQL Agent, a YouTube transcription agent, a Math agent, and more. Support for questions and feedback is available through Discord.

superglue

superglue is an AI-powered tool builder that abstracts away authentication, documentation handling, and data mapping between systems. It self-heals tools by auto-repairing failures due to API changes. Users can build lightweight data syncing tools, migrate SQL procedures to REST API calls, and create enterprise GPT tools. Interfaces include a web application, superglue SDK for CRUD functionality, and MCP Server for discoverability and execution of pre-built tools. Detailed documentation is available at docs.superglue.cloud.

tegon

Tegon is an open-source, AI-first issue tracking tool designed for engineering teams. It aims to simplify task management by using AI and integrations to automate task creation, prioritize tasks, and speed up bug resolution. Current features include issue tracking, automatic title generation, AI-generated labels and assignees, and custom views; sprints and task prioritization are planned. It integrates with GitHub, Slack, and Sentry to streamline issue tracking. Tegon also plans to introduce AI agents such as a PR Agent and a Bug Agent to support product management and bug resolution. Contributions are welcome, and the product is licensed under the MIT License.

composio

Composio is a production-ready toolset that lets users integrate AI agents with a wide range of tools. It supports over 100 tools across different categories, including popular software such as GitHub, Notion, Linear, Gmail, and Slack. Composio provides managed authorization with support for six authentication protocols, and aims for better agentic accuracy and ease of use. Users can extend Composio with additional tools, frameworks, and authorization protocols. The toolset is designed to be embeddable and pluggable, allowing for integration with a consistent user experience.

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

For similar jobs

StoryToolKit

StoryToolkitAI is a film editing tool that utilizes AI to transcribe, index scenes, search through footage, and create stories. It offers features such as automatic transcription, translation, story creation, speaker detection, project file management, and more. The tool works locally on your machine and integrates with DaVinci Resolve Studio 18. It aims to streamline the editing process by leveraging AI capabilities and enhancing user efficiency.

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface for accessing large language models (LLMs) and other AI models for a wide range of tasks. With over 500 AI expert conditionings and more than 2500 fine-tuned models across multiple domains, LoLLMs WebUI offers a ready resource for problems ranging from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The UI offers light and dark modes, GitHub repository integration, and support for different personalities. Messages can be rated with thumbs up/down, copied, edited, and removed. Discussions are stored in a local database and can be searched, exported, and deleted in bulk.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a high-performance object store released under the GNU Affero General Public License v3.0. It is API compatible with the Amazon S3 cloud storage service. Use MinIO to build high-performance infrastructure for machine learning, analytics, and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is an AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies implementing AI solutions by offering ready-to-use components that can be integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without extensive knowledge of machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be usable by both beginners and experienced developers, making AI development more efficient and open to a wider audience.