MLE-agent

🤖 MLE-Agent: Your intelligent companion for seamless AI engineering and research. 🔍 Integrate with arxiv and paper with code to provide better code/research plans 🧰 OpenAI, Anthropic, Ollama, etc supported. :fireworks: Code RAG

Stars: 1054

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

README:

MLE-Agent is designed as a pairing LLM agent for machine learning engineers and researchers. It is featured by:

- 🤖 Autonomous Baseline: Automatically builds ML/AI baselines and solutions based on your requirements.

- 🏅End-to-end ML Task: Participates in Kaggle competitions and completes tasks independently.

- 🔍 Arxiv and Papers with Code Integration: Access best practices and state-of-the-art methods.

- 🐛 Smart Debugging: Ensures high-quality code through automatic debugger-coder interactions.

- 📂 File System Integration: Organizes your project structure efficiently.

- 🧰 Comprehensive Tools Integration: Includes AI/ML functions and MLOps tools for a seamless workflow.

- ☕ Interactive CLI Chat: Enhances your projects with an easy-to-use chat interface.

- 🧠 Smart Advisor: Provides personalized suggestions and recommendations for your ML/AI project.

- 📊 Weekly Report: Automatically generates detailed summaries of your weekly works.

https://github.com/user-attachments/assets/dac7be90-c662-4d0d-8d3a-2bc4df9cffb9

- 🚀 09/24/2024: Release the

0.4.2with enhancedAuto-Kagglemode to complete an end-to-end competition with minimal effort. - 🚀 09/10/2024: Release the

0.4.0with new CLIs likeMLE report,MLE kaggle,MLE integrationand many new models likeMistral. - 🚀 07/25/2024: Release the

0.3.0with huge refactoring, many integrations, etc. (v0.3.0) - 🚀 07/11/2024: Release the

0.2.0with multiple agents interaction (v0.2.0) - 👨🍼 07/03/2024: Kaia is born

- 🚀 06/01/2024: Release the first rule-based version of MLE agent (v0.1.0)

pip install mle-agent -U

# or from source

git clone [email protected]:MLSysOps/MLE-agent.git

pip install -e .mle new <project name>And a project directory will be created under the current path, you need to start the project under the project directory.

cd <project name>

mle startYou can also start an interactive chat in the terminal under the project directory:

mle chatMLE agent can help you prototype an ML baseline with the given requirements, and test the model on the local machine. The requirements can be vague, such as "I want to predict the stock price based on the historical data".

cd <project name>

mle startMLE agent can help you summarize your weekly report, including development progress, communication notes, reference, and to-do lists.

cd <project name>

mle reportThen, you can visit http://localhost:3000/ to generate your report locally.

MLE agent can participate in Kaggle competitions and finish coding and debugging from data preparation to model training independently. Here is the basic command to start a Kaggle competition:

cd <project name>

mle kaggleOr you can let the agents finish the Kaggle task without human interaction if you have the dataset and submission file ready:

cd <project name>

mle kaggle --auto \

--datasets "<path_to_dataset1>,<path_to_dataset2>,..." \

--description "<description_file_path_or_text>" \

--submission "<submission_file_path>" \

--sub_example "<submission_example_file_path>" \

--comp_id "<competition_id>"Please make sure you have joined the competition before running the command. For more details, see the MLE-Agent Tutorials.

The following is a list of the tasks we plan to do, welcome to propose something new!

🔨 General Features

- [x] Understand users' requirements to create an end-to-end AI project

- [x] Suggest the SOTA data science solutions by using the web search

- [x] Plan the ML engineering tasks with human interaction

- [x] Execute the code on the local machine/cloud, debug and fix the errors

- [x] Leverage the built-in functions to complete ML engineering tasks

- [x] Interactive chat: A human-in-the-loop mode to help improve the existing ML projects

- [x] Kaggle mode: to finish a Kaggle task without humans

- [x] Summary and reflect the whole ML/AI pipeline

- [ ] Integration with Cloud data and testing and debugging platforms

- [x] Local RAG support to make personal ML/AI coding assistant

- [ ] Function zoo: generate AI/ML functions and save them for future usage

⭐ More LLMs and Serving Tools

- [x] Ollama LLama3

- [x] OpenAI GPTs

- [x] Anthropic Claude 3.5 Sonnet

💖 Better user experience

- [x] CLI Application

- [x] Web UI

- [x] Discord

🧩 Functions and Integrations

- [x] Local file system

- [x] Local code exectutor

- [x] Arxiv.org search

- [x] Papers with Code search

- [x] General keyword search

- [ ] Hugging Face

- [ ] SkyPilot cloud deployment

- [ ] Snowflake data

- [ ] AWS S3 data

- [ ] Databricks data catalog

- [ ] Wandb experiment monitoring

- [ ] MLflow management

- [ ] DBT data transform

We welcome contributions from the community. We are looking for contributors to help us with the following tasks:

- Benchmark and Evaluate the agent

- Add more features to the agent

- Improve the documentation

- Write tests

Please check the CONTRIBUTING.md file if you want to contribute.

- Discord community. If you have any questions, please ask in the Discord community.

Check MIT License file for more information.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for MLE-agent

Similar Open Source Tools

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

refact-lsp

Refact Agent is a small executable written in Rust as part of the Refact Agent project. It lives inside your IDE to keep AST and VecDB indexes up to date, supporting connection graphs between definitions and usages in popular programming languages. It functions as an LSP server, offering code completion, chat functionality, and integration with various tools like browsers, databases, and debuggers. Users can interact with it through a Text UI in the command line.

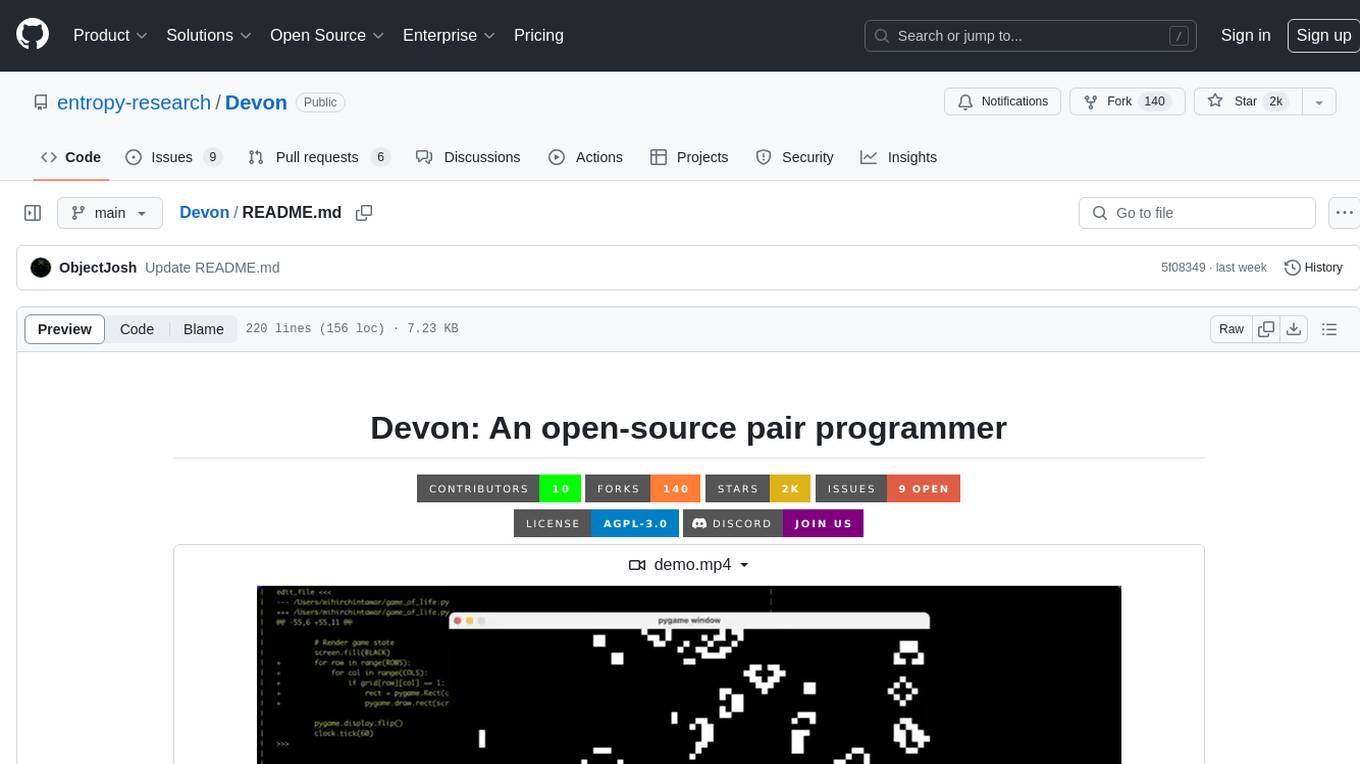

Devon

Devon is an open-source pair programmer tool designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, plugin system for tool builders, self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

pebble

Pebbling is an open-source protocol for agent-to-agent communication, enabling AI agents to collaborate securely using Decentralised Identifiers (DIDs) and mutual TLS (mTLS). It provides a lightweight communication protocol built on JSON-RPC 2.0, ensuring reliable and secure conversations between agents. Pebbling allows agents to exchange messages safely, connect seamlessly regardless of programming language, and communicate quickly and efficiently. It is designed to pave the way for the next generation of collaborative AI systems, promoting secure and effortless communication between agents across different environments.

AIOS

AIOS, a Large Language Model (LLM) Agent operating system, embeds large language model into Operating Systems (OS) as the brain of the OS, enabling an operating system "with soul" -- an important step towards AGI. AIOS is designed to optimize resource allocation, facilitate context switch across agents, enable concurrent execution of agents, provide tool service for agents, maintain access control for agents, and provide a rich set of toolkits for LLM Agent developers.

esp-ai

ESP-AI provides a complete AI conversation solution for your development board, including IAT+LLM+TTS integration solutions for ESP32 series development boards. It can be injected into projects without affecting existing ones. By providing keys from platforms like iFlytek, Jiling, and local services, you can run the services without worrying about interactions between services or between development boards and services. The project's server-side code is based on Node.js, and the hardware code is based on Arduino IDE.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

openlit

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

agent-squad

Agent Squad is a flexible, lightweight open-source framework for orchestrating multiple AI agents to handle complex conversations. It intelligently routes queries, maintains context across interactions, and offers pre-built components for quick deployment. The system allows easy integration of custom agents and conversation messages storage solutions, making it suitable for various applications from simple chatbots to sophisticated AI systems, scaling efficiently.

any-agent

Any-agent is a tool that provides a single interface to use and evaluate different agent frameworks. It supports various frameworks like TinyAgent, Google ADK, LangChain, LlamaIndex, OpenAI Agents, Smolagents, and Agno AI. Users can define agent systems using the tool and access practical examples for creating agents, agent evaluations, using callbacks, integrating Model Context Protocol tools, deploying agents with Agent-to-Agent communication, and building Multi-Agent Systems with A2A. Contributions for new frameworks and features are welcome.

For similar tasks

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

continue

Continue is an open-source autopilot for VS Code and JetBrains that allows you to code with any LLM. With Continue, you can ask coding questions, edit code in natural language, generate files from scratch, and more. Continue is easy to use and can help you save time and improve your coding skills.

anterion

Anterion is an open-source AI software engineer that extends the capabilities of `SWE-agent` to plan and execute open-ended engineering tasks, with a frontend inspired by `OpenDevin`. It is designed to help users fix bugs and prototype ideas with ease. Anterion is equipped with easy deployment and a user-friendly interface, making it accessible to users of all skill levels.

sglang

SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with LLMs faster and more controllable by co-designing the frontend language and the runtime system. The core features of SGLang include: - **A Flexible Front-End Language**: This allows for easy programming of LLM applications with multiple chained generation calls, advanced prompting techniques, control flow, multiple modalities, parallelism, and external interaction. - **A High-Performance Runtime with RadixAttention**: This feature significantly accelerates the execution of complex LLM programs by automatic KV cache reuse across multiple calls. It also supports other common techniques like continuous batching and tensor parallelism.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.

For similar jobs

Thor

Thor is a powerful AI model management tool designed for unified management and usage of various AI models. It offers features such as user, channel, and token management, data statistics preview, log viewing, system settings, external chat link integration, and Alipay account balance purchase. Thor supports multiple AI models including OpenAI, Kimi, Starfire, Claudia, Zhilu AI, Ollama, Tongyi Qianwen, AzureOpenAI, and Tencent Hybrid models. It also supports various databases like SqlServer, PostgreSql, Sqlite, and MySql, allowing users to choose the appropriate database based on their needs.

redbox

Redbox is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License. Security measures are in place to ensure user data privacy and considerations are being made to make the core-api secure.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

LynxHub

LynxHub is a platform that allows users to seamlessly install, configure, launch, and manage all their AI interfaces from a single, intuitive dashboard. It offers features like AI interface management, arguments manager, custom run commands, pre-launch actions, extension management, in-app tools like terminal and web browser, AI information dashboard, Discord integration, and additional features like theme options and favorite interface pinning. The platform supports modular design for custom AI modules and upcoming extensions system for complete customization. LynxHub aims to streamline AI workflow and enhance user experience with a user-friendly interface and comprehensive functionalities.

ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

agentneo

AgentNeo is a Python package that provides functionalities for project, trace, dataset, experiment management. It allows users to authenticate, create projects, trace agents and LangGraph graphs, manage datasets, and run experiments with metrics. The tool aims to streamline AI project management and analysis by offering a comprehensive set of features.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.