gitleaks

Find secrets with Gitleaks 🔑

Stars: 19358

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

README:

┌─○───┐

│ │╲ │

│ │ ○ │

│ ○ ░ │

└─░───┘

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin.

➜ ~/code(master) gitleaks git -v

○

│╲

│ ○

○ ░

░ gitleaks

Finding: "export BUNDLE_ENTERPRISE__CONTRIBSYS__COM=cafebabe:deadbeef",

Secret: cafebabe:deadbeef

RuleID: sidekiq-secret

Entropy: 2.609850

File: cmd/generate/config/rules/sidekiq.go

Line: 23

Commit: cd5226711335c68be1e720b318b7bc3135a30eb2

Author: John

Email: [email protected]

Date: 2022-08-03T12:31:40Z

Fingerprint: cd5226711335c68be1e720b318b7bc3135a30eb2:cmd/generate/config/rules/sidekiq.go:sidekiq-secret:23
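The Fingerprint in the sample output above appears to join the commit, file path, rule ID, and line number with colons. A minimal Python sketch of splitting such a value back into its parts, assuming that layout holds for findings from the git command (the `parse_fingerprint` helper and the `Fingerprint` tuple are hypothetical names, not part of Gitleaks):

```python
from typing import NamedTuple


class Fingerprint(NamedTuple):
    commit: str
    file: str
    rule_id: str
    line: int


def parse_fingerprint(fingerprint: str) -> Fingerprint:
    """Split "commit:file:rule:line" into its parts.

    Assumes the layout seen in the sample output. The file path is taken
    as everything between the first colon and the last two, so a path
    that itself contains a colon still parses.
    """
    commit, rest = fingerprint.split(":", 1)
    file, rule_id, line = rest.rsplit(":", 2)
    return Fingerprint(commit, file, rule_id, int(line))


fp = parse_fingerprint(
    "cd5226711335c68be1e720b318b7bc3135a30eb2:"
    "cmd/generate/config/rules/sidekiq.go:sidekiq-secret:23"
)
print(fp.rule_id, fp.line)
```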

Gitleaks can be installed using Homebrew, Docker, or Go. Gitleaks is also available in binary form for many popular platforms and OS types on the releases page. In addition, Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action using Gitleaks-Action.

# MacOS

brew install gitleaks

# Docker (DockerHub)

docker pull zricethezav/gitleaks:latest

docker run -v ${path_to_host_folder_to_scan}:/path zricethezav/gitleaks:latest [COMMAND] [OPTIONS] [SOURCE_PATH]

# Docker (ghcr.io)

docker pull ghcr.io/gitleaks/gitleaks:latest

docker run -v ${path_to_host_folder_to_scan}:/path ghcr.io/gitleaks/gitleaks:latest [COMMAND] [OPTIONS] [SOURCE_PATH]

# From Source (make sure `go` is installed)

git clone https://github.com/gitleaks/gitleaks.git

cd gitleaks

make build

Check out the official Gitleaks GitHub Action

name: gitleaks
on: [pull_request, push, workflow_dispatch]
jobs:
  scan:
    name: gitleaks
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
        with:
          fetch-depth: 0
      - uses: gitleaks/gitleaks-action@v2
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          GITLEAKS_LICENSE: ${{ secrets.GITLEAKS_LICENSE}} # Only required for Organizations, not personal accounts.

- Install pre-commit from https://pre-commit.com/#install

- Create a .pre-commit-config.yaml file at the root of your repository with the following content:

repos:
  - repo: https://github.com/gitleaks/gitleaks
    rev: v8.23.1
    hooks:
      - id: gitleaks

  for a native execution of Gitleaks, or use the gitleaks-docker pre-commit ID to execute Gitleaks using the official Docker images

- Auto-update the config to the latest repos' versions by executing pre-commit autoupdate

- Install with pre-commit install

- Now you're all set!

➜ git commit -m "this commit contains a secret"

Detect hardcoded secrets.................................................Failed

Note: to disable the gitleaks pre-commit hook you can prepend SKIP=gitleaks to the commit command and it will skip running gitleaks

➜ SKIP=gitleaks git commit -m "skip gitleaks check"

Detect hardcoded secrets................................................Skipped

Usage:

gitleaks [command]

Available Commands:

completion generate the autocompletion script for the specified shell

dir scan directories or files for secrets

git scan git repositories for secrets

help Help about any command

stdin detect secrets from stdin

version display gitleaks version

Flags:

-b, --baseline-path string path to baseline with issues that can be ignored

-c, --config string config file path

order of precedence:

1. --config/-c

2. env var GITLEAKS_CONFIG

3. env var GITLEAKS_CONFIG_TOML with the file content

4. (target path)/.gitleaks.toml

If none of the four options are used, then gitleaks will use the default config

--enable-rule strings only enable specific rules by id

--exit-code int exit code when leaks have been encountered (default 1)

-i, --gitleaks-ignore-path string path to .gitleaksignore file or folder containing one (default ".")

-h, --help help for gitleaks

--ignore-gitleaks-allow ignore gitleaks:allow comments

-l, --log-level string log level (trace, debug, info, warn, error, fatal) (default "info")

--max-decode-depth int allow recursive decoding up to this depth (default "0", no decoding is done)

--max-target-megabytes int files larger than this will be skipped

--no-banner suppress banner

--no-color turn off color for verbose output

--redact uint[=100] redact secrets from logs and stdout. To redact only parts of the secret just apply a percent value from 0..100. For example --redact=20 (default 100%)

-f, --report-format string output format (json, csv, junit, sarif) (default "json")

-r, --report-path string report file

--report-template string template file used to generate the report (implies --report-format=template)

-v, --verbose show verbose output from scan

--version version for gitleaks

Use "gitleaks [command] --help" for more information about a command.

v8.19.0 deprecated the detect and protect commands. Those commands are still available but are hidden in the --help menu. Take a look at this gist for easy command translations.

If you find v8.19.0 broke an existing command (detect/protect), please open an issue.

There are three scanning modes: git, dir, and stdin.

The git command lets you scan local git repos. Under the hood, gitleaks uses the git log -p command to scan patches.

You can configure the behavior of git log -p with the --log-opts option. For example, to run gitleaks on a range of commits you could use the following command: gitleaks git -v --log-opts="--all commitA..commitB" path_to_repo. See the git log documentation for more information.

If there is no target specified as a positional argument, then gitleaks will attempt to scan the current working directory as a git repo.
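To make the patch-scanning idea concrete, here is a rough Python sketch that walks `git log -p` style output and applies one regex to the added lines only. It is an illustration under stated assumptions, not how Gitleaks is implemented: `scan_patch`, the sample patch, and the `API_TOKEN` regex are all hypothetical.

```python
import re

# Matches a unified-diff hunk header and captures the first new-file line number.
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def scan_patch(patch_text, rule_regex):
    """Return one dict per added line that matches rule_regex."""
    pattern = re.compile(rule_regex)
    findings = []
    commit = path = None
    in_hunk = False
    new_line = 0
    for line in patch_text.splitlines():
        if line.startswith("commit "):
            commit, in_hunk = line.split()[1], False
        elif line.startswith("diff --git "):
            in_hunk = False
        elif not in_hunk and line.startswith("+++ "):
            # File header for the new side of the diff.
            path = line[6:] if line.startswith("+++ b/") else line[4:]
        elif HUNK_HEADER.match(line):
            new_line = int(HUNK_HEADER.match(line).group(1))
            in_hunk = True
        elif in_hunk and line.startswith("+"):
            match = pattern.search(line[1:])
            if match:
                findings.append({"commit": commit, "file": path,
                                 "line": new_line, "match": match.group(0)})
            new_line += 1
        elif in_hunk and line.startswith(" "):
            new_line += 1  # context lines advance the new-file line counter
    return findings


sample_patch = "\n".join([
    "commit cd5226711335c68be1e720b318b7bc3135a30eb2",
    "Author: John",
    "",
    "    add deploy script",
    "",
    "diff --git a/deploy.sh b/deploy.sh",
    "--- a/deploy.sh",
    "+++ b/deploy.sh",
    "@@ -1,2 +1,3 @@",
    " #!/bin/sh",
    "+export API_TOKEN=abc123",
    " echo done",
])
findings = scan_patch(sample_patch, r"API_TOKEN=\w+")
print(findings)
```

Scanning patches rather than checked-out files is what lets a secret be reported with the commit that introduced it, even if a later commit removed it.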

The dir (aliases include files, directory) command lets you scan directories and files. Example: gitleaks dir -v path_to_directory_or_file.

If there is no target specified as a positional argument, then gitleaks will scan the current working directory.

You can also stream data to gitleaks with the stdin command. Example: cat some_file | gitleaks -v stdin

When scanning large repositories or repositories with a long history, it can be convenient to use a baseline. When using a baseline, gitleaks will ignore any old findings that are present in the baseline. A baseline can be any gitleaks report. To create a gitleaks report, run gitleaks with the --report-path parameter.

gitleaks git --report-path gitleaks-report.json # This will save the report in a file called gitleaks-report.json

Once a baseline is created, it can be applied when running the git command again:

gitleaks git --baseline-path gitleaks-report.json --report-path findings.json

After running the git command with the --baseline-path parameter, the report output (findings.json) will only contain new issues.
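The effect of a baseline can be pictured as a set difference between two reports. The Python sketch below assumes a report is a JSON array of findings and that findings are matched on their `Fingerprint` field; Gitleaks' own comparison may use other fields, and `new_findings` is a hypothetical helper.

```python
import json


def new_findings(report_json: str, baseline_json: str) -> list:
    """Return findings from the report whose fingerprint is not in the baseline."""
    known = {finding["Fingerprint"] for finding in json.loads(baseline_json)}
    return [f for f in json.loads(report_json) if f["Fingerprint"] not in known]


# Made-up reports with only the fields this sketch needs.
baseline = json.dumps([{"Fingerprint": "aaa:old.py:rule-1:3", "RuleID": "rule-1"}])
report = json.dumps([
    {"Fingerprint": "aaa:old.py:rule-1:3", "RuleID": "rule-1"},
    {"Fingerprint": "bbb:new.py:rule-2:9", "RuleID": "rule-2"},
])
fresh = new_findings(report, baseline)
print([f["Fingerprint"] for f in fresh])
```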

You can run Gitleaks as a pre-commit hook by copying the example pre-commit.py script into your .git/hooks/ directory.

The order of precedence is:

- --config/-c option:

gitleaks git --config /home/dev/customgitleaks.toml .

- Environment variable GITLEAKS_CONFIG with the file path:

export GITLEAKS_CONFIG="/home/dev/customgitleaks.toml"
gitleaks git .

- Environment variable GITLEAKS_CONFIG_TOML with the file content:

export GITLEAKS_CONFIG_TOML=`cat customgitleaks.toml`
gitleaks git .

- A .gitleaks.toml file within the target path:

gitleaks git .

If none of the four options are used, then gitleaks will use the default config.
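The precedence rules can be read as a simple first-match lookup. A minimal Python sketch of that lookup, following only the order stated above (the `resolve_config` function and its return shape are hypothetical and do not mirror Gitleaks' internals):

```python
from pathlib import Path
from typing import Mapping, Optional, Tuple


def resolve_config(cli_config: Optional[str],
                   env: Mapping[str, str],
                   target_path: str) -> Tuple[str, Optional[str]]:
    """Return (kind, value): kind is "file", "content", or "default"."""
    if cli_config:                                  # 1. --config/-c
        return ("file", cli_config)
    if env.get("GITLEAKS_CONFIG"):                  # 2. env var with a file path
        return ("file", env["GITLEAKS_CONFIG"])
    if env.get("GITLEAKS_CONFIG_TOML"):             # 3. env var with the file content
        return ("content", env["GITLEAKS_CONFIG_TOML"])
    candidate = Path(target_path) / ".gitleaks.toml"
    if candidate.is_file():                         # 4. config inside the target path
        return ("file", str(candidate))
    return ("default", None)                        # built-in default config


print(resolve_config(None, {"GITLEAKS_CONFIG_TOML": 'title = "x"'}, "."))
```

In real use the `env` argument would be `os.environ`; passing it in explicitly keeps the sketch easy to exercise.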

Gitleaks offers a configuration format you can follow to write your own secret detection rules:

# Title for the gitleaks configuration file.

title = "Custom Gitleaks configuration"

# You have basically two options for your custom configuration:

#

# 1. define your own configuration, default rules do not apply

#

# use e.g., the default configuration as starting point:

# https://github.com/gitleaks/gitleaks/blob/master/config/gitleaks.toml

#

# 2. extend a configuration, the rules are overwritten or extended

#

# When you extend a configuration the extended rules take precedence over the

# default rules. I.e., if there are duplicate rules in both the extended

# configuration and the default configuration the extended rules or

# attributes of them will override the default rules.

# Another thing to know with extending configurations is you can chain

# together multiple configuration files to a depth of 2. Allowlist arrays are

# appended and can contain duplicates.

# useDefault and path can NOT be used at the same time. Choose one.

[extend]

# useDefault will extend the default gitleaks config built in to the binary

# the latest version is located at:

# https://github.com/gitleaks/gitleaks/blob/master/config/gitleaks.toml

useDefault = true

# or you can provide a path to a configuration to extend from.

# The path is relative to where gitleaks was invoked,

# not the location of the base config.

# path = "common_config.toml"

# If there are any rules you don't want to inherit, they can be specified here.

disabledRules = [ "generic-api-key"]

# An array of tables that contain information that define instructions

# on how to detect secrets

[[rules]]

# Unique identifier for this rule

id = "awesome-rule-1"

# Short human readable description of the rule.

description = "awesome rule 1"

# Golang regular expression used to detect secrets. Note Golang's regex engine

# does not support lookaheads.

regex = '''one-go-style-regex-for-this-rule'''

# Int used to extract secret from regex match and used as the group that will have

# its entropy checked if `entropy` is set.

secretGroup = 3

# Float representing the minimum shannon entropy a regex group must have to be considered a secret.

entropy = 3.5

# Golang regular expression used to match paths. This can be used as a standalone rule or it can be used

# in conjunction with a valid `regex` entry.

path = '''a-file-path-regex'''

# Keywords are used for pre-regex check filtering. Rules that contain

# keywords will perform a quick string compare check to make sure the

# keyword(s) are in the content being scanned. Ideally these values should

# either be part of the identifier or unique strings specific to the rule's regex

# (introduced in v8.6.0)

keywords = [

"auth",

"password",

"token",

]

# Array of strings used for metadata and reporting purposes.

tags = ["tag","another tag"]

# ⚠️ In v8.21.0 `[rules.allowlist]` was replaced with `[[rules.allowlists]]`.

# This change was backwards-compatible: instances of `[rules.allowlist]` still work.

#

# You can define multiple allowlists for a rule to reduce false positives.

# A finding will be ignored if _ANY_ `[[rules.allowlists]]` matches.

[[rules.allowlists]]

description = "ignore commit A"

# When multiple criteria are defined the default condition is "OR".

# e.g., this can match on |commits| OR |paths| OR |stopwords|.

condition = "OR"

commits = [ "commit-A", "commit-B"]

paths = [

'''go\.mod''',

'''go\.sum'''

]

# note: stopwords targets the extracted secret, not the entire regex match

# like 'regexes' does. (stopwords introduced in 8.8.0)

stopwords = [

'''client''',

'''endpoint''',

]

[[rules.allowlists]]

# The "AND" condition can be used to make sure all criteria match.

# e.g., this matches if |regexes| AND |paths| are satisfied.

condition = "AND"

# note: |regexes| defaults to check the _Secret_ in the finding.

# Acceptable values for |regexTarget| are "secret" (default), "match", and "line".

regexTarget = "match"

regexes = [ '''(?i)parseur[il]''' ]

paths = [ '''package-lock\.json''' ]

# You can extend a particular rule from the default config. e.g., gitlab-pat

# if you have defined a custom token prefix on your GitLab instance

[[rules]]

id = "gitlab-pat"

# all the other attributes from the default rule are inherited

[[rules.allowlists]]

regexTarget = "line"

regexes = [ '''MY-glpat-''' ]

# This is a global allowlist which has a higher order of precedence than rule-specific allowlists.

# If a commit listed in the `commits` field below is encountered then that commit will be skipped and no

# secrets will be detected for said commit. The same logic applies for regexes and paths.

[allowlist]

description = "global allow list"

commits = [ "commit-A", "commit-B", "commit-C"]

paths = [

'''gitleaks\.toml''',

'''(.*?)(jpg|gif|doc)'''

]

# note: (global) regexTarget defaults to check the _Secret_ in the finding.

# if regexTarget is not specified then _Secret_ will be used.

# Acceptable values for regexTarget are "match" and "line"

regexTarget = "match"

regexes = [

'''219-09-9999''',

'''078-05-1120''',

'''(9[0-9]{2}|666)-\d{2}-\d{4}''',

]

# note: stopwords targets the extracted secret, not the entire regex match

# like 'regexes' does. (stopwords introduced in 8.8.0)

stopwords = [

'''client''',

'''endpoint''',

]

Refer to the default gitleaks config for examples or follow the contributing guidelines if you would like to contribute to the default configuration. Additionally, you can check out this gitleaks blog post which covers advanced configuration setups.
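The `entropy` attribute in the configuration is described as a minimum Shannon entropy. As a sketch of what that measure looks like, the Python below computes character-level Shannon entropy in bits; that this is the exact variant Gitleaks uses is an assumption, although it reproduces the Entropy value shown for the sample finding near the top of this README. The `shannon_entropy` and `meets_entropy` helpers are hypothetical names.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Character-level Shannon entropy of text, in bits per character."""
    if not text:
        return 0.0
    total = len(text)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(text).values())


def meets_entropy(secret: str, minimum: float) -> bool:
    """Mimic a rule's `entropy` threshold: keep only sufficiently random secrets."""
    return shannon_entropy(secret) >= minimum


print(f"{shannon_entropy('cafebabe:deadbeef'):.6f}")  # prints 2.609850
print(meets_entropy("cafebabe:deadbeef", 3.5))        # prints False
```

A threshold such as 3.5 would therefore reject the low-variety sample secret while still passing longer, more random tokens.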

If you are knowingly committing a test secret that gitleaks will catch, you can add a gitleaks:allow comment to that line which will instruct gitleaks to ignore that secret. Ex:

class CustomClass:
    discord_client_secret = '8dyfuiRyq=vVc3RRr_edRk-fK__JItpZ' #gitleaks:allow

You can ignore specific findings by creating a .gitleaksignore file at the root of your repo. In release v8.10.0 Gitleaks added a Fingerprint value to the Gitleaks report. Each leak, or finding, has a Fingerprint that uniquely identifies a secret. Add this fingerprint to the .gitleaksignore file to ignore that specific secret. See Gitleaks' .gitleaksignore for an example. Note: this feature is experimental and is subject to change in the future.
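One way to seed a .gitleaksignore file is to collect the Fingerprint values from an existing JSON report. The Python sketch below assumes the report is a JSON array whose entries carry a `Fingerprint` field and that the ignore file holds one fingerprint per line; `ignore_entries` is a hypothetical helper and the sample data is made up.

```python
import json


def ignore_entries(report_json: str) -> list:
    """Return the unique fingerprints from a JSON report, in first-seen order."""
    seen = {}
    for finding in json.loads(report_json):
        seen.setdefault(finding["Fingerprint"], None)
    return list(seen)


sample_report = json.dumps([
    {"Fingerprint": "aaa:config.py:generic-api-key:4"},
    {"Fingerprint": "bbb:test/fixtures.py:generic-api-key:10"},
    {"Fingerprint": "aaa:config.py:generic-api-key:4"},
])
entries = ignore_entries(sample_report)
print("\n".join(entries))  # this text could be written to .gitleaksignore
```

Review each entry before ignoring it; the point of the file is to silence findings you have already judged harmless.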

Sometimes secrets are encoded in a way that can make them difficult to find with just regex. Now you can tell gitleaks to automatically find and decode encoded text. The flag --max-decode-depth enables this feature (the default value "0" means the feature is disabled by default).

Recursive decoding is supported since decoded text can also contain encoded text. The flag --max-decode-depth sets the recursion limit. Recursion stops when there are no new segments of encoded text to decode, so setting a really high max depth doesn't mean it will make that many passes. It will only make as many as it needs to decode the text. Overall, decoding only minimally increases scan times.

The findings for encoded text differ from normal findings in the following ways:

- The location points to the bounds of the encoded text

  - If the rule matches outside the encoded text, the bounds are adjusted to include that as well

- The match and secret contain the decoded value

- Two tags are added: decoded:<encoding> and decode-depth:<depth>

Currently supported encodings:

- base64 (both standard and base64url)
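The recursion limit described above can be sketched in a few lines of Python. This is only an illustration of the idea (decode base64-looking segments, repeat until nothing new decodes or the depth limit is reached); the segment regex, the 16-character minimum, and the `decode_text` helper are assumptions, not Gitleaks' actual logic.

```python
import base64
import binascii
import re
from typing import Optional, Tuple

# Hypothetical heuristic: runs of 16+ base64/base64url characters plus padding.
_B64_SEGMENT = re.compile(r"[A-Za-z0-9+/_-]{16,}={0,2}")


def _try_decode(segment: str) -> Optional[str]:
    """Return the decoded text, or None if it is not printable ASCII base64."""
    normalized = segment.replace("-", "+").replace("_", "/").rstrip("=")
    normalized += "=" * (-len(normalized) % 4)
    try:
        text = base64.b64decode(normalized, validate=True).decode("ascii")
    except (binascii.Error, ValueError):
        return None
    return text if text.isprintable() else None


def decode_text(text: str, max_depth: int) -> Tuple[str, int]:
    """Decode embedded segments up to max_depth passes; return (text, passes used)."""
    depth = 0
    while depth < max_depth:
        changed = False

        def replace(match):
            nonlocal changed
            decoded = _try_decode(match.group(0))
            if decoded is None:
                return match.group(0)
            changed = True
            return decoded

        candidate = _B64_SEGMENT.sub(replace, text)
        if not changed:
            break  # nothing new to decode, so stop early
        text, depth = candidate, depth + 1
    return text, depth


once = base64.b64encode(b"password=hunter2").decode()
twice = base64.b64encode(once.encode()).decode()
print(decode_text("token: " + twice, max_depth=5))
```

Here a limit of 5 still results in only two passes, because the third pass finds nothing left to decode.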

Gitleaks has built-in support for several report formats: json, csv, junit, and sarif.

If none of these formats fit your need, you can create your own report format with a Go text/template .tmpl file and the --report-template flag. The template can use extended functionality from the Masterminds/sprig template library.

For example, the following template provides a custom JSON output:

# jsonextra.tmpl

[{{ $lastFinding := (sub (len . ) 1) }}

{{- range $i, $finding := . }}{{with $finding}}

{

"Description": {{ quote .Description }},

"StartLine": {{ .StartLine }},

"EndLine": {{ .EndLine }},

"StartColumn": {{ .StartColumn }},

"EndColumn": {{ .EndColumn }},

"Line": {{ quote .Line }},

"Match": {{ quote .Match }},

"Secret": {{ quote .Secret }},

"File": "{{ .File }}",

"SymlinkFile": {{ quote .SymlinkFile }},

"Commit": {{ quote .Commit }},

"Entropy": {{ .Entropy }},

"Author": {{ quote .Author }},

"Email": {{ quote .Email }},

"Date": {{ quote .Date }},

"Message": {{ quote .Message }},

"Tags": [{{ $lastTag := (sub (len .Tags ) 1) }}{{ range $j, $tag := .Tags }}{{ quote . }}{{ if ne $j $lastTag }},{{ end }}{{ end }}],

"RuleID": {{ quote .RuleID }},

"Fingerprint": {{ quote .Fingerprint }}

}{{ if ne $i $lastFinding }},{{ end }}

{{- end}}{{ end }}

]

Usage:

$ gitleaks dir ~/leaky-repo/ --report-path "report.json" --report-format template --report-template testdata/report/jsonextra.tmpl

You can always set the exit code when leaks are encountered with the --exit-code flag. Default exit codes below:

0 - no leaks present

1 - leaks or error encountered

126 - unknown flag
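When Gitleaks is driven from a script, the exit code is usually the first thing to inspect. The Python sketch below maps the default codes listed above to messages and shows one way to invoke the git command with `subprocess`. It assumes errors still exit with 1 when --exit-code is changed, which the text above does not state; `describe_exit_code` and `run_scan` are hypothetical helpers.

```python
import subprocess


def describe_exit_code(code: int, leak_exit_code: int = 1) -> str:
    """Translate a Gitleaks exit code into a short message."""
    if code == 0:
        return "no leaks present"
    if code == leak_exit_code:
        # With the default of 1, leaks and errors share the same code.
        return "leaks or error encountered" if leak_exit_code == 1 else "leaks encountered"
    if code == 1:
        return "error encountered"  # assumption: errors keep exit code 1
    if code == 126:
        return "unknown flag"
    return "unexpected exit code"


def run_scan(target: str, leak_exit_code: int = 1) -> str:
    """Run `gitleaks git` on target (requires gitleaks on PATH) and describe the result."""
    cmd = ["gitleaks", "git", "--no-banner",
           "--exit-code", str(leak_exit_code), target]
    result = subprocess.run(cmd, capture_output=True, text=True)
    return describe_exit_code(result.returncode, leak_exit_code)


print(describe_exit_code(0))
print(describe_exit_code(2, leak_exit_code=2))
```

Choosing a distinct value with --exit-code is what makes it possible to tell leaks apart from other failures in CI.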

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gitleaks

Similar Open Source Tools

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

banks

Banks is a linguist professor tool that helps generate meaningful LLM prompts using a template language. It provides a user-friendly way to create prompts for various tasks such as blog writing, summarizing documents, lemmatizing text, and generating text using a LLM. The tool supports async operations and comes with predefined filters for data processing. Banks leverages Jinja's macro system to create prompts and interact with OpenAI API for text generation. It also offers a cache mechanism to avoid regenerating text for the same template and context.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

mjai.app

mjai.app is a platform for mahjong AI competition. It contains an implementation of a mahjong game simulator for evaluating submission files. The simulator runs Docker internally, and there is a base class for developing bots that communicate via the mjai protocol. Submission files are deployed in a Docker container, and the Docker image is pushed to Docker Hub. The Mjai protocol used is customized based on Mortal's Mjai Engine implementation.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLM's like Chat-GPT that provides the models with access to tooling and a framework with which accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession as well as enabling access to all tools that are compatible with LangchainJS. Agent Mimir is based on LangchainJS, every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI where the agent needs to come up with a plan, iterate over its steps and review as it completes the task.

top_secret

Top Secret is a Ruby gem designed to filter sensitive information from free text before sending it to external services or APIs, such as chatbots and LLMs. It provides default filters for credit cards, emails, phone numbers, social security numbers, people's names, and locations, with the ability to add custom filters. Users can configure the tool to handle sensitive information redaction, scan for sensitive data, batch process messages, and restore filtered text from external services. Top Secret uses Regex and NER filters to detect and redact sensitive information, allowing users to override default filters, disable specific filters, and add custom filters globally. The tool is suitable for applications requiring data privacy and security measures.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

langchainrb

Langchain.rb is a Ruby library that makes it easy to build LLM-powered applications. It provides a unified interface to a variety of LLMs, vector search databases, and other tools, making it easy to build and deploy RAG (Retrieval Augmented Generation) systems and assistants. Langchain.rb is open source and available under the MIT License.

For similar tasks

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

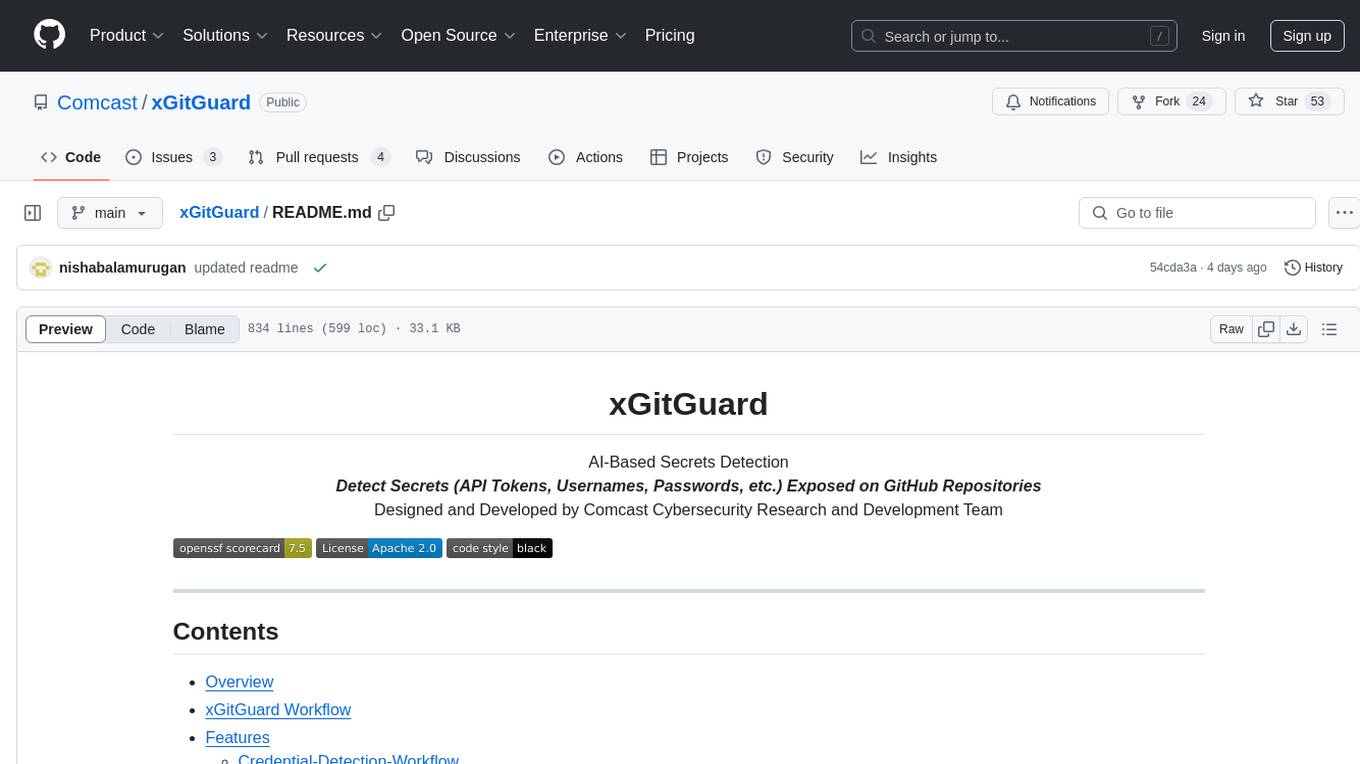

xGitGuard

xGitGuard is an AI-based system developed by Comcast Cybersecurity Research and Development team to detect secrets (e.g., API tokens, usernames, passwords) exposed on GitHub repositories. It uses advanced Natural Language Processing to detect secrets at scale and with appropriate velocity. The tool provides workflows for detecting credentials and keys/tokens in both enterprise and public GitHub accounts. Users can set up search patterns, configure API access, run detections with or without ML filters, and train ML models for improved detection accuracy. xGitGuard also supports custom keyword scans for targeted organizations or repositories. The tool is licensed under Apache 2.0.

For similar jobs

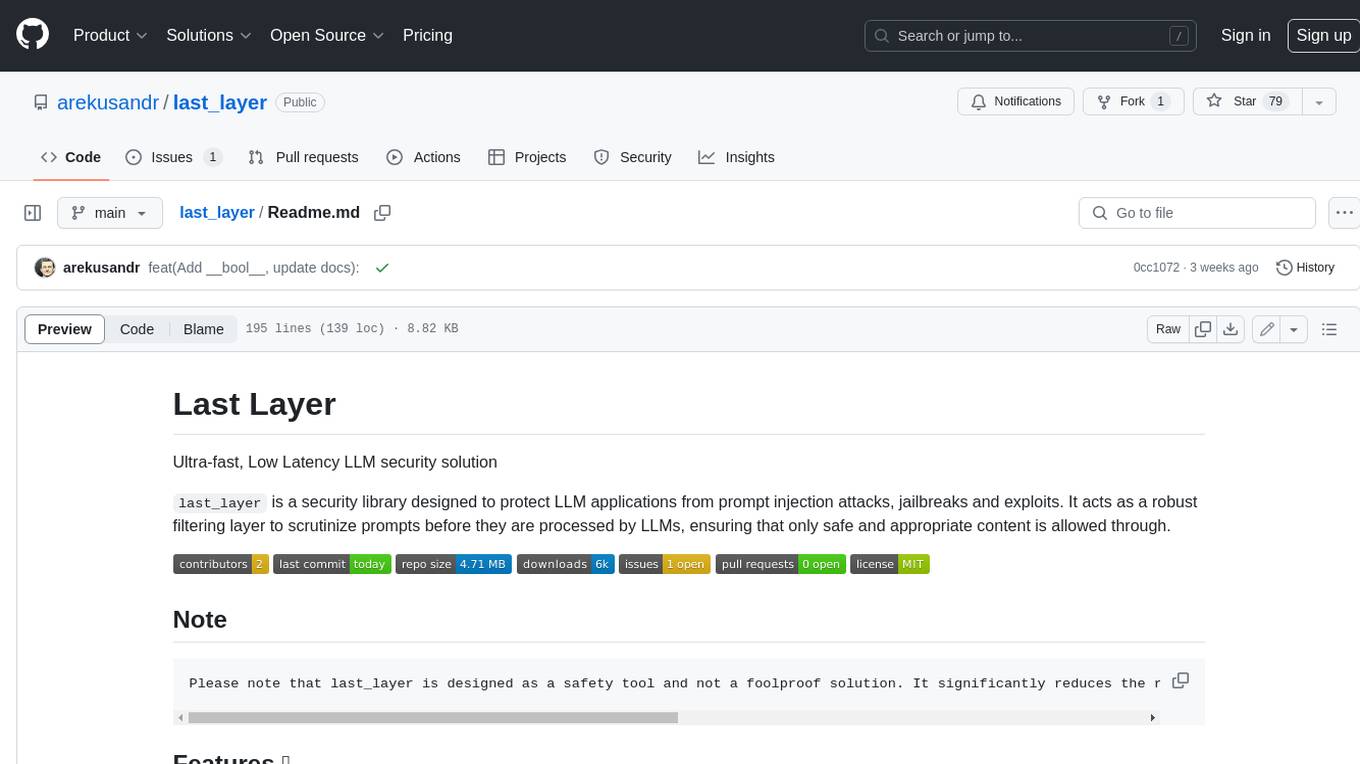

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

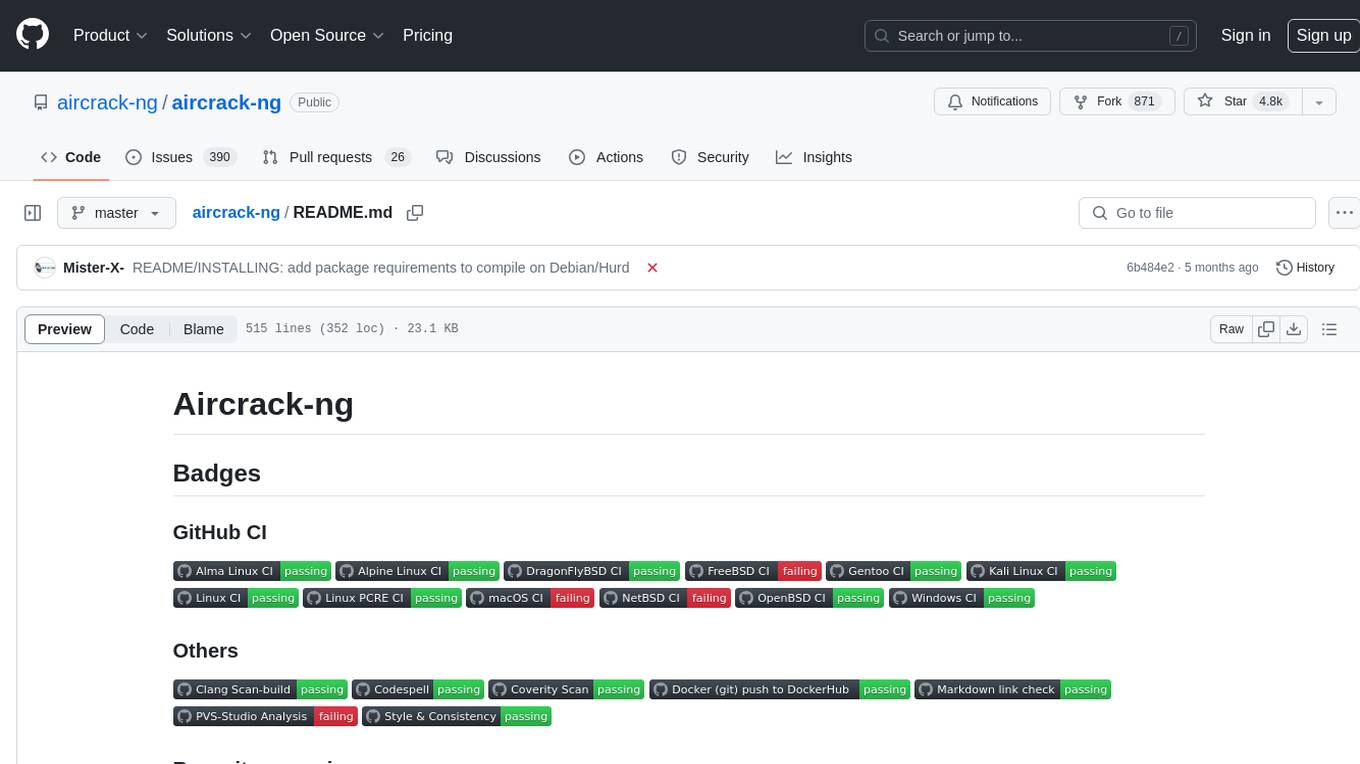

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

reverse-engineering-assistant

ReVA (Reverse Engineering Assistant) is a project aimed at building a disassembler agnostic AI assistant for reverse engineering tasks. It utilizes a tool-driven approach, providing small tools to the user to empower them in completing complex tasks. The assistant is designed to accept various inputs, guide the user in correcting mistakes, and provide additional context to encourage exploration. Users can ask questions, perform tasks like decompilation, class diagram generation, variable renaming, and more. ReVA supports different language models for online and local inference, with easy configuration options. The workflow involves opening the RE tool and program, then starting a chat session to interact with the assistant. Installation includes setting up the Python component, running the chat tool, and configuring the Ghidra extension for seamless integration. ReVA aims to enhance the reverse engineering process by breaking down actions into small parts, including the user's thoughts in the output, and providing support for monitoring and adjusting prompts.

AutoAudit

AutoAudit is an open-source large language model specifically designed for the field of network security. It aims to provide powerful natural language processing capabilities for security auditing and network defense, including analyzing malicious code, detecting network attacks, and predicting security vulnerabilities. By coupling AutoAudit with ClamAV, a security scanning platform has been created for practical security audit applications. The tool is intended to assist security professionals with accurate and fast analysis and predictions to combat evolving network threats.

aif

Arno's Iptables Firewall (AIF) is a single- & multi-homed firewall script with DSL/ADSL support. It is a free software distributed under the GNU GPL License. The script provides a comprehensive set of configuration files and plugins for setting up and managing firewall rules, including support for NAT, load balancing, and multirouting. It offers detailed instructions for installation and configuration, emphasizing security best practices and caution when modifying settings. The script is designed to protect against hostile attacks by blocking all incoming traffic by default and allowing users to configure specific rules for open ports and network interfaces.

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers related to LLM Security Application. The repository includes papers sorted by conference name and published year, covering topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on listed conferences and journals related to security, networking, software engineering, and cryptography. The papers cover a wide range of topics including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, fuzzing, and more.

DeGPT

DeGPT is a tool designed to optimize decompiler output using Large Language Models (LLM). It requires manual installation of specific packages and setting up API key for OpenAI. The tool provides functionality to perform optimization on decompiler output by running specific scripts.