watchtower

AIShield Watchtower: Dive Deep into AI's Secrets! 🔍 Open-source tool by AIShield for AI model insights & vulnerability scans. Secure your AI supply chain today! ⚙️🛡️

Stars: 187

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

README:

In today's rapidly advancing landscape of machine learning and artificial intelligence (AI), ensuring the security of AI models has become an imperative. These models serve as the driving force behind decision-making in a myriad of applications. As such, safeguarding their integrity and protection against potential attacks remains a top priority. Unfortunately, the critical components of this AI ecosystem, namely Jupyter notebooks and models, are often overlooked in routine security assessments, leaving them vulnerable and appealing targets for potential attackers. Initial tests with open-source tools painted a grim picture, underscoring severe limitations and an urgent need for a bespoke solution. As AI/ML models continue to reshape industries and drive innovation, the significance of model security is paramount. However, amidst the excitement of AI's potential, the security of Jupyter notebooks and AI models has been consistently relegated to the shadows.

A compelling illustration of this security blind spot revolves around the widespread usage of Jupyter notebooks. Within these digital pages lie the blueprints, codes, and algorithms that breathe life into many of today's AI-driven products and services. However, like any other codebase, Jupyter notebooks are not immune to vulnerabilities that could inadvertently find their way into the final product. These vulnerabilities, if left unaddressed, can become gateways for cyber-attacks, risking not only the integrity of AI-driven products but also the security of user data.

AIShield Watchtower is designed to automate model and notebook discovery and to conduct comprehensive vulnerability scans. Its capabilities go beyond merely identifying the models and notebooks within your repository: it also assesses risks such as hard-coded secrets, PII, outdated or unsafe libraries, model serialization attacks, and custom unsafe operations.
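As an illustration of one risk class named above, hard-coded secrets are often found by flagging long, random-looking strings. The sketch below is not Watchtower's implementation; it is a minimal entropy check written for this example. The 20-character minimum length, the 3.0 bits-per-character threshold, and the sample values are all assumptions.

```python
import math
import re
from collections import Counter

# Candidate tokens: runs of 20 or more hexadecimal characters (length is an assumption).
HEX_TOKEN = re.compile(r"\b[0-9a-fA-F]{20,}\b")


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of `text` in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def find_high_entropy_hex(source: str, threshold: float = 3.0):
    """Yield (line_number, token) for hex tokens whose entropy exceeds `threshold`."""
    for line_number, line in enumerate(source.splitlines(), start=1):
        for match in HEX_TOKEN.finditer(line):
            token = match.group(0)
            if shannon_entropy(token) > threshold:
                yield line_number, token


# Hypothetical notebook source: line 1 looks like a key, line 2 is repetitive filler.
sample = (
    'api_key = "0123456789abcdef0123456789abcdef"\n'
    'padding = "aaaaaaaaaaaaaaaaaaaaaaaa"\n'
)
findings = list(find_high_entropy_hex(sample))
print(findings)
```

Only the first line is reported, because the repeated-character string has zero entropy. A real scanner combines several such heuristics with keyword rules to limit false positives.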

AIShield Watchtower categorizes scan findings into four distinct risk levels: "low," "medium," "high," and "critical." This classification lets organizations tailor their security efforts to the level of risk detected. Watchtower also aligns with industry standards such as OWASP, MITRE, CWE, and the NIST AI RMF MAP functions.

AIShield Watchtower can be used to inspect vulnerabilities in Jupyter notebooks and AI/ML Models (.h5, .pkl and .pb file formats).
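To make the risk in `.pkl` files concrete, the following sketch shows why pickle-based model files deserve scanning: a pickle stream can instruct the loader to import and call arbitrary functions. This is not Watchtower's scanner; it is a small illustration using Python's standard `pickletools` module, and the `SUSPICIOUS_OPCODES` set and `Payload` class are assumptions made for this example.

```python
import pickle
import pickletools

# Opcodes that import names or call objects while a pickle is being loaded.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(data: bytes) -> list:
    """List suspicious opcode names in a pickle byte string without loading it."""
    found = []
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found


class Payload:
    """Stand-in for a malicious object: loading its pickle would call print()."""

    def __reduce__(self):
        return (print, ("code ran during unpickling",))


benign = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
risky = pickle.dumps(Payload())

print("benign:", suspicious_opcodes(benign))
print("risky:", suspicious_opcodes(risky))
```

The pickle is only disassembled, never loaded, so the payload does not run. Legitimate model pickles also use these opcodes to rebuild objects, so a practical scanner would additionally check which module and function names are being imported.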

To use AIShield Watchtower, clone the Watchtower repo, install the prerequisites, and scan your notebooks and AI/ML models. Some sample test files are available within the Watchtower repo to get started.

- To run Watchtower in CLI or UI mode, python3 and pip should be installed on the host system.

- To run the UI-Docker version, docker and docker-compose should be installed on the host system.

Cloning Watchtower repo

git clone https://github.com/bosch-aisecurity-aishield/watchtower.git

Once the Git repository is cloned, change directory.

NOTE: Docker users should refer to the UI-Docker instructions.

cd watchtower/src

Install the Watchtower dependency libraries using the following commands:

pip install -r requirements.txt

python -m spacy download en_core_web_lg

Jupyter notebooks and ML/DL models can be inspected using any of three methods: CLI, UI, or UI-Docker.

To view the available options in CLI mode:

python watchtower.py -h

#Select Repo_type = github for scanning a public GitHub repository

python watchtower.py --repo_type=github --repo_url=<Enter Repo Url> --branch_name=<Enter Branch Name> --depth=<Enter a number>

NOTE: The branch_name and depth parameters are optional. The default branch_name is main and the default depth is 1.

#Select Repo_type = huggingface for scanning a Hugging Face repository

python watchtower.py --repo_type=huggingface --repo_url=<Enter Hugging Face Url>

#Select Repo_type = s3 for scanning an AWS S3 bucket

python watchtower.py --repo_type=s3 --bucket_name="<Enter Bucket name>" --region="<Enter region of s3 bucket>" --aws_access_key_id="<Enter aws access key>" --aws_secret_access_key="<Enter aws secret key>"

#Select Repo_type = file for scanning individual file

python watchtower.py --repo_type=file --path=<Enter path of File>

#Select Repo_type = folder for scanning a folder

python watchtower.py --repo_type=folder --path=<Enter path of Folder>

To use the Watchtower UI, execute the following command:

python watchtower_webapp.py

Then open a browser and paste: http://localhost:5015/watchtower-aishield
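When scans are launched from a script rather than typed by hand, the CLI options listed above can be assembled programmatically. The helper below is a hypothetical wrapper written for this example, not part of Watchtower; it uses only the flags shown in the commands above and rejects combinations that those commands do not show.

```python
import sys

# Options shown for each repo_type in the CLI examples above.
ALLOWED_OPTIONS = {
    "github": {"repo_url", "branch_name", "depth"},
    "huggingface": {"repo_url"},
    "s3": {"bucket_name", "region", "aws_access_key_id", "aws_secret_access_key"},
    "file": {"path"},
    "folder": {"path"},
}


def build_scan_command(repo_type: str, **options: str) -> list:
    """Build an argv list for watchtower.py (hypothetical helper, not part of Watchtower)."""
    if repo_type not in ALLOWED_OPTIONS:
        raise ValueError(f"unsupported repo_type: {repo_type}")
    unknown = set(options) - ALLOWED_OPTIONS[repo_type]
    if unknown:
        raise ValueError(f"options not valid for {repo_type}: {sorted(unknown)}")
    command = [sys.executable, "watchtower.py", f"--repo_type={repo_type}"]
    command += [f"--{name}={value}" for name, value in options.items()]
    return command


command = build_scan_command(
    "github",
    repo_url="https://github.com/bosch-aisecurity-aishield/watchtower.git",
    depth="1",
)
print(" ".join(command[1:]))

# To actually run the scan from the cloned repo (assumes watchtower/src exists):
#   import subprocess
#   subprocess.run(command, cwd="watchtower/src", check=True)
```

Passing the arguments as a list avoids shell quoting issues with URLs and keys. For S3 scans, prefer reading credentials from the environment in the calling script rather than hard-coding them.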

To use the Watchtower UI with Docker, build the Docker image for Watchtower and run it:

cd watchtower

docker-compose build

docker-compose up

Then open a browser and paste: http://localhost:5015/watchtower-aishield

On successful completion of a scan, Watchtower vulnerability reports will be available in the reports folder in the Watchtower root folder.

To stop and remove the Watchtower image, execute the following:

docker-compose down

To get started quickly, you may try the Watchtower Playground by visiting https://app-watchtower.boschaishield.com

In the Watchtower Playground, users can scan notebooks and AI/ML models available in public GitHub repos by providing the public GitHub repo URL. After the vulnerability scan completes, reports will be available to download on the Playground screen.

On successful completion of the Watchtower scan, three reports will be generated in the following paths:

- For CLI Mode - all three reports will be available inside the Watchtower src folder. Users may refer to the last line of the summary report in the console for the complete path of the reports location.

- For UI Mode - all three reports will be available inside the Watchtower src folder. Users may refer to the success message on the UI to get the path of the reports location.

- For UI-Docker Mode - all three reports will be available inside the Watchtower reports folder. Users may refer to the success message on the UI to get the path of the reports location.

- Summary Report - The summary report provides the number of model files and notebook files detected, the number of vulnerabilities detected, and the count of those vulnerabilities mapped to Critical, High, Medium, and Low. Sample snippet of Summary Report:

{
  "Repository Type": "github",
  "Repository URL": "https://github.com/bosch-aisecurity-aishield/watchtower.git",
  "Total Number of Model Found": 17,
  "Total Number of Notebooks & Requirement files Found": 6,
  "Total Number of Model Scanned": 17,
  "Total Number of Notebooks & Requirement files Scanned": 6,
  "Total Notebook & Requirement files Vulnerabilities Found": {
    "Critical": 0,
    "High": 40,
    "Medium": 17,
    "Low": 1
  },
  "Total Model Vulnerabilities Found": {
    "Critical": 0,
    "High": 4,
    "Medium": 2,
    "Low": 8
  }
}

- Severity Mapping Report - This report details how High, Medium, and Low severities map to models or notebooks. Sample snippet of Severity Mapping Report:

{
  "type": "Hex High Entropy String",
  "filename": "repo_dir_1696827949/sample_test_files/sample_notebook_files/classification_notebook.py",
  "hashed_secret": "8d1e60a0b91ca2071dc4027b6f227990fb599d27",
  "is_verified": false,
  "line_number": 47,
  "vulnerability_severity": "High"
},
{
  "type": "Secret Keyword",
  "filename": "repo_dir_1696827949/sample_test_files/sample_notebook_files/classification_notebook.py",
  "hashed_secret": "8d1e60a0b91ca2071dc4027b6f227990fb599d27",
  "is_verified": false,
  "line_number": 47,
  "vulnerability_severity": "High"
}

- Detailed Report - In this report, Watchtower users will be able to find all logs generated during the vulnerability scan.
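Because the summary report is plain JSON, it can be consumed by other tooling, for example to fail a build when serious findings are present. The sketch below parses the sample summary shown above and totals the counts per severity. The key names come from that sample; the helper names and the choice of "Critical" and "High" as blocking levels are assumptions for this example.

```python
import json
from collections import Counter

# Sample summary report, copied from the snippet shown above.
SUMMARY_JSON = """
{
  "Repository Type": "github",
  "Repository URL": "https://github.com/bosch-aisecurity-aishield/watchtower.git",
  "Total Number of Model Found": 17,
  "Total Number of Notebooks & Requirement files Found": 6,
  "Total Number of Model Scanned": 17,
  "Total Number of Notebooks & Requirement files Scanned": 6,
  "Total Notebook & Requirement files Vulnerabilities Found": {
    "Critical": 0, "High": 40, "Medium": 17, "Low": 1
  },
  "Total Model Vulnerabilities Found": {
    "Critical": 0, "High": 4, "Medium": 2, "Low": 8
  }
}
"""

# Keys of the two per-severity count objects in the summary report.
SEVERITY_SECTIONS = (
    "Total Notebook & Requirement files Vulnerabilities Found",
    "Total Model Vulnerabilities Found",
)


def total_by_severity(summary: dict) -> Counter:
    """Add up notebook and model vulnerability counts per severity level."""
    totals = Counter()
    for section in SEVERITY_SECTIONS:
        totals.update(summary.get(section, {}))
    return totals


def has_blocking_findings(totals: Counter, blocking=("Critical", "High")) -> bool:
    """Return True if any blocking severity level has at least one finding."""
    return any(totals[level] > 0 for level in blocking)


summary = json.loads(SUMMARY_JSON)
totals = total_by_severity(summary)
for level in ("Critical", "High", "Medium", "Low"):
    print(f"{level}: {totals[level]}")
print("Blocking findings:", has_blocking_findings(totals))
```

For the sample report this gives 0 critical, 44 high, 19 medium, and 9 low findings, so the blocking check returns True.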

- Model and Notebook Detection: Automatically recognizes AI/ML models and notebooks within a provided repository. Supported file formats are H5, pickle, SavedModel, and .ipynb.

- Scanning: Executes thorough scans of the models and notebooks to detect potential safety and security concerns.

- Report Generation: Produces comprehensive reports that classify the scanned files as "low," "medium," "high," or "critical" risk.

- Supported Repositories: AIShield Watchtower supports integration with GitHub and AWS S3 buckets, allowing for automated scanning of Git repositories and AWS S3 buckets to identify potential risks.

- User Interface (UI): Offers an intuitive user interface for conducting repository scans.

- Real-time scanning is an essential component for the swift identification and mitigation of emerging threats. This feature ensures that any changes or additions to AI/ML models and notebooks are promptly analyzed. It enables immediate action against potential vulnerabilities, thereby preserving the integrity of AI/ML applications.

- Versatile Framework Support allows Watchtower to cater to a wide array of AI/ML projects by ensuring compatibility with diverse model frameworks. This versatility enables organizations to leverage Watchtower regardless of the frameworks they utilize, making it a universally applicable security tool.

- Dynamic Risk Identification plays a pivotal role in adapting to evolving threats and vulnerabilities within the ever-changing landscape of AI/ML. This adaptability is vital as new threat types continually emerge, demanding a security tool that can evolve and adapt in tandem.

- Comprehensive Assessment offered by Watchtower covers a wide spectrum of vulnerability assessments, identifying and analyzing a broad range of risks. It meticulously examines all aspects of potential threats, ensuring that individuals and organizations possess comprehensive knowledge of their security landscape.

- Industry Standards Compliance is ensured through alignment with renowned security standards such as OWASP, MITRE, and CWE. This compliance guarantees that Watchtower adheres to globally recognized security practices, establishing a baseline of security and fostering trust among stakeholders.

- Efficiency and Scalability are achieved through automated assessments, significantly accelerating security workflows. This efficiency is critical for organizations expanding their AI/ML initiatives, ensuring that security does not hinder development processes during scaling.

- Seamless Integration is a valuable feature that allows Watchtower to integrate effortlessly with popular AI/ML platforms and repositories. It simplifies the incorporation of Watchtower into existing development ecosystems, streamlining security implementation and ensuring consistency across platforms.

- Informed Decision-Making is facilitated by detailed vulnerability reports generated by Watchtower. These reports empower organizations to prioritize actions and allocate resources effectively, enabling prompt addressing of critical vulnerabilities and optimal resource utilization.

- Competitive Advantage is gained by leveraging advanced security tools like Watchtower in a market where security is of paramount concern. This advantage not only appeals to clients and end-users but also instills confidence among stakeholders. It underscores the organization's commitment to securing its AI/ML assets against a wide range of risks, from model tampering to unauthorized data access. This comprehensive assessment ensures a thorough examination of all possible vulnerabilities, leaving no stone unturned in safeguarding AI/ML assets.

- Supports only public Git and Hugging Face repositories.

- Enable AWS S3 bucket support by configuring role-based credentials, where a specialized role is crafted to grant minimal read-only access.

- Doesn't support scanning of .pb files from S3 buckets.

- Presidio Analyzer has a maximum input length of 1000000 characters (roughly 1 MB of plain text). Any data beyond this limit will not be captured in reports.

- Possible mismatch between the severity levels reported by the Whispers library and Watchtower's severity levels.

- The Watchtower application is tested on Ubuntu 22.04 LTS.

- Reduce false positives by using these customized versions that detect vulnerabilities more accurately.

- Refine PII severity assessment with contextual rules for varied levels.

- Enhance model vulnerability detection by adding checks for the embedding layer's potential issues.

- Integration with GitHub Actions

- Repositories cloned from GitHub and Hugging Face during watchtower analysis will not be automatically removed post-analysis. It is advisable to manually delete these folders found within the 'src' directory.
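Since cloned repositories are left behind after analysis, the manual cleanup described in the last item can be scripted. The sketch below assumes the clone folders sit directly under `src` and are named `repo_dir_<digits>`, as suggested by the `filename` field in the sample Severity Mapping Report; verify the naming in your own checkout before deleting anything. The helper name and the dry-run default are choices made for this example.

```python
import re
import shutil
import tempfile
from pathlib import Path

# Assumed naming of clone folders, e.g. "repo_dir_1696827949".
CLONE_DIR_PATTERN = re.compile(r"^repo_dir_\d+$")


def remove_cloned_repos(src_dir: Path, dry_run: bool = True) -> list:
    """Find leftover clone folders directly under src_dir; delete them unless dry_run."""
    leftovers = sorted(
        path
        for path in src_dir.iterdir()
        if path.is_dir() and CLONE_DIR_PATTERN.match(path.name)
    )
    if not dry_run:
        for path in leftovers:
            shutil.rmtree(path)
    return leftovers


# Demonstration in a throwaway directory instead of a real watchtower/src folder.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp)
    (src / "repo_dir_1696827949").mkdir()
    (src / "reports").mkdir()
    print("would remove:", [p.name for p in remove_cloned_repos(src)])
    removed = remove_cloned_repos(src, dry_run=False)
    print("removed:", [p.name for p in removed])
    print("remaining:", sorted(p.name for p in src.iterdir()))
```

The dry-run default lists the matches without deleting, which makes it easy to review what would be removed first.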

Contributions are always welcome! See the Contribution Guidelines for more details.

- Parmar Manojkumar Somabhai

- Amit Phadke

- Deepak Kumar Byrappa

- Pankaj Kanta Maurya

- Ankita Kumari Patro

- Yuvaraj Govindarajulu

- Sumitra Biswal

- Amlan Jyoti

- Mallikarjun Udanashiv

- Manpreet Singh

- Shankar Ajmeera

- Aravindh J

This project is licensed under the Apache License. See LICENSE for details.

First and foremost, we want to extend our deepest gratitude to the vibrant open-source community. The foundation of AI Watchtower by AIShield is built upon the collective wisdom, tools, and insights shared by countless contributors. It's a privilege to stand on the shoulders of these giants:

- Yelp's detect-secrets: Used for scanning configurations to prevent accidental commitment of sensitive data.

- Safety: Used for scanning Python dependencies for known security vulnerabilities and suggesting remediation for the vulnerabilities detected.

- Whispers: Identifies hardcoded secrets in static structured text

- Whispers: Advanced secrets detection

- Presidio Analyzer: The Presidio analyzer is a Python based service for detecting PII entities in text

- PII Confidentiality Impact Levels

- Top open source licenses and legal risk for developers

- Secure API keys

- OWASP Top 10: Vulnerable and Outdated Components

- Chris Anley - Practical Attacks on Machine Learning Systems

- Abhishek Kumar - Insecure Deserialization with Python Pickle module

- Shibin B Shaji - Using Python’s pickling to explain Insecure Deserialization

- TensorFlow Model Comparison: Insights from Saturn Cloud [1, 2, 3]

- TensorFlow Remote Code Execution with Malicious Model

- TensorFlow layers embedding causes memory leak

- Adding embeddings for unknown words in Tensorflow

- Data Leakage in Machine Learning

- Race Conditions and Secure File Operations

- Nuthdanai Wangpratham - Feature Scaling Matters for Accurate Predictions

- Predicting how and when hidden neurons skew measured synaptic interactions

- Deserialization bug in TensorFlow machine learning framework allowed arbitrary code execution

- Tom Bonner - A Deep Dive into Security Risks in TensorFlow and Keras

- Security in MLOps Pipeline

- A traditional attack vector applied to AI/ML Models

... and to many others who have contributed their knowledge on open-source licenses, API key security, MLOps pipeline security, and more.

In creating AI Watchtower, it's our humble attempt to give back to this incredible community. We're inspired by the spirit of collaboration and are thrilled to contribute our grain of sand to the vast desert of open-source knowledge. Together, let's continue to make the AI landscape safer and more robust for all!


Similar Open Source Tools

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

Sentience

Sentience is a tool that allows developers to create autonomous AI agents on-chain with verifiable proofs. It leverages a Trusted Execution Environment (TEE) architecture to ensure secure execution of AI calls and provides transparency through cryptographic attestations posted on Solana's blockchain. The tool enhances market potential by transforming agents into cryptographically verifiable entities, addressing the need for trust in AI development. Sentience offers features like OpenAI compatibility, on-chain verifiability, an explorer for agent history, and an easy-to-use developer experience. The repository includes SDKs for Python and JavaScript, along with components for verified inference and instructions for verifying the TEE architecture.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

wandbot

Wandbot is a question-answering bot designed for Weights & Biases documentation. It employs Retrieval Augmented Generation with a ChromaDB backend for efficient responses. The bot features periodic data ingestion, integration with Discord and Slack, and performance monitoring through logging. It has a fallback mechanism for model selection and is evaluated based on retrieval accuracy and model-generated responses. The implementation includes creating document embeddings, constructing the Q&A RAGPipeline, model selection, deployment on FastAPI, Discord, and Slack, logging and analysis with Weights & Biases Tables, and performance evaluation.

aiid

The Artificial Intelligence Incident Database (AIID) is a collection of incidents involving the development and use of artificial intelligence (AI). The database is designed to help researchers, policymakers, and the public understand the potential risks and benefits of AI, and to inform the development of policies and practices to mitigate the risks and promote the benefits of AI. The AIID is a collaborative project involving researchers from the University of California, Berkeley, the University of Washington, and the University of Toronto.

SwiftSage

SwiftSage is a tool designed for conducting experiments in the field of machine learning and artificial intelligence. It provides a platform for researchers and developers to implement and test various algorithms and models. The tool is particularly useful for exploring new ideas and conducting experiments in a controlled environment. SwiftSage aims to streamline the process of developing and testing machine learning models, making it easier for users to iterate on their ideas and achieve better results. With its user-friendly interface and powerful features, SwiftSage is a valuable tool for anyone working in the field of AI and ML.

Nucleoid

Nucleoid is a declarative (logic) runtime environment that manages both data and logic under the same runtime. It uses a declarative programming paradigm, which allows developers to focus on the business logic of the application, while the runtime manages the technical details. This allows for faster development and reduces the amount of code that needs to be written. Additionally, the sharding feature can help to distribute the load across multiple instances, which can further improve the performance of the system.

codebase-context-spec

The Codebase Context Specification (CCS) project aims to standardize embedding contextual information within codebases to enhance understanding for both AI and human developers. It introduces a convention similar to `.env` and `.editorconfig` files but focused on documenting code for both AI and humans. By providing structured contextual metadata, collaborative documentation guidelines, and standardized context files, developers can improve code comprehension, collaboration, and development efficiency. The project includes a linter for validating context files and provides guidelines for using the specification with AI assistants. Tooling recommendations suggest creating memory systems, IDE plugins, AI model integrations, and agents for context creation and utilization. Future directions include integration with existing documentation systems, dynamic context generation, and support for explicit context overriding.

Guardrails

Guardrails is a security tool designed to help developers identify and fix security vulnerabilities in their code. It provides automated scanning and analysis of code repositories to detect potential security issues, such as sensitive data exposure, injection attacks, and insecure configurations. By integrating Guardrails into the development workflow, teams can proactively address security concerns and reduce the risk of security breaches. The tool offers detailed reports and actionable recommendations to guide developers in remediation efforts, ultimately improving the overall security posture of the codebase. Guardrails supports multiple programming languages and frameworks, making it versatile and adaptable to different development environments. With its user-friendly interface and seamless integration with popular version control systems, Guardrails empowers developers to prioritize security without compromising productivity.

aika

AIKA (Artificial Intelligence for Knowledge Acquisition) is a new type of artificial neural network designed to mimic the behavior of a biological brain more closely and bridge the gap to classical AI. The network conceptually separates activations from neurons, creating two separate graphs to represent acquired knowledge and inferred information. It uses different types of neurons and synapses to propagate activation values, binding signals, causal relations, and training gradients. The network structure allows for flexible topology and supports the gradual population of neurons and synapses during training.

generative-ai-sagemaker-cdk-demo

This repository showcases how to deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK. Generative AI is a type of AI that can create new content and ideas, such as conversations, stories, images, videos, and music. The repository provides a detailed guide on deploying image and text generative AI models, utilizing pre-trained models from SageMaker JumpStart. The web application is built on Streamlit and hosted on Amazon ECS with Fargate. It interacts with the SageMaker model endpoints through Lambda functions and Amazon API Gateway. The repository also includes instructions on setting up the AWS CDK application, deploying the stacks, using the models, and viewing the deployed resources on the AWS Management Console.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

intelligence-toolkit

The Intelligence Toolkit is a suite of interactive workflows designed to help domain experts make sense of real-world data by identifying patterns, themes, relationships, and risks within complex datasets. It utilizes generative AI (GPT models) to create reports on findings of interest. The toolkit supports analysis of case, entity, and text data, providing various interactive workflows for different intelligence tasks. Users are expected to evaluate the quality of data insights and AI interpretations before taking action. The system is designed for moderate-sized datasets and responsible use of personal case data. It uses the GPT-4 model from OpenAI or Azure OpenAI APIs for generating reports and insights.

For similar tasks

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

Guardrails

Guardrails is a security tool designed to help developers identify and fix security vulnerabilities in their code. It provides automated scanning and analysis of code repositories to detect potential security issues, such as sensitive data exposure, injection attacks, and insecure configurations. By integrating Guardrails into the development workflow, teams can proactively address security concerns and reduce the risk of security breaches. The tool offers detailed reports and actionable recommendations to guide developers in remediation efforts, ultimately improving the overall security posture of the codebase. Guardrails supports multiple programming languages and frameworks, making it versatile and adaptable to different development environments. With its user-friendly interface and seamless integration with popular version control systems, Guardrails empowers developers to prioritize security without compromising productivity.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming for generative models, specifically large language models (LLMs). The repository provides a comprehensive survey on potential attacks on GenAI and robust safeguards. It covers attack strategies, evaluation metrics, benchmarks, and defensive approaches. The repository also implements over 30 auto red teaming methods. It includes surveys, taxonomies, attack strategies, and risks related to LLMs. The goal is to understand vulnerabilities and develop defenses against adversarial attacks on large language models.

Awesome-LLM4Cybersecurity

The repository 'Awesome-LLM4Cybersecurity' provides a comprehensive overview of the applications of Large Language Models (LLMs) in cybersecurity. It includes a systematic literature review covering topics such as constructing cybersecurity-oriented domain LLMs, potential applications of LLMs in cybersecurity, and research directions in the field. The repository analyzes various benchmarks, datasets, and applications of LLMs in cybersecurity tasks like threat intelligence, fuzzing, vulnerabilities detection, insecure code generation, program repair, anomaly detection, and LLM-assisted attacks.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on enhancing the detection process for auto-suggestion, enabling users to create detection workflows without coding. The tool offers an intuitive drag-and-drop interface for workflow adjustments and updates. Quark Agent, the core component, generates Quark Script code based on natural language input and feedback. The project is committed to providing a user-friendly experience for designing detection workflows through textual and visual methods. Various features are still under development and will be rolled out gradually.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

For similar jobs

awesome-ai-cybersecurity

This repository is a comprehensive collection of resources for utilizing AI in cybersecurity. It covers various aspects such as prediction, prevention, detection, response, monitoring, and more. The resources include tools, frameworks, case studies, best practices, tutorials, and research papers. The repository aims to assist professionals, researchers, and enthusiasts in staying updated and advancing their knowledge in the field of AI cybersecurity.

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

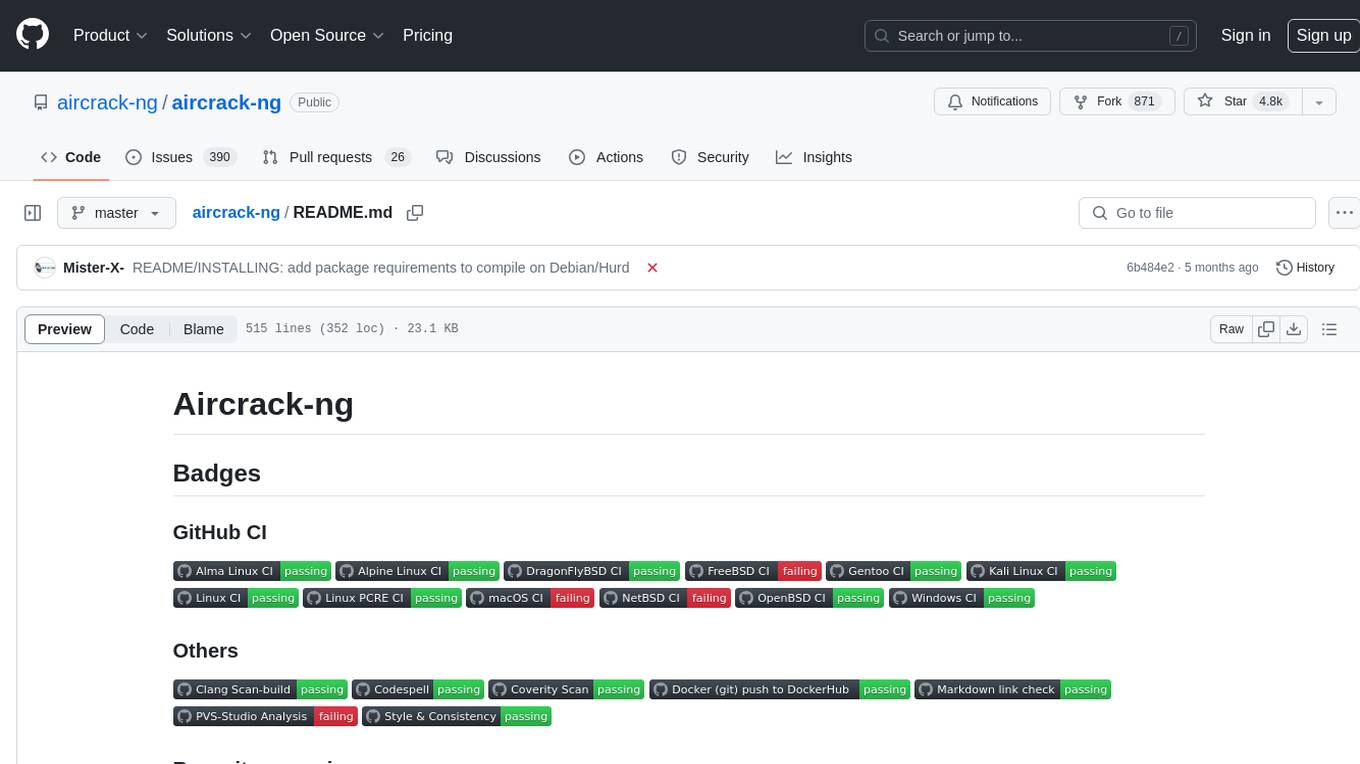

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

reverse-engineering-assistant

ReVA (Reverse Engineering Assistant) is a project aimed at building a disassembler-agnostic AI assistant for reverse engineering tasks. It takes a tool-driven approach, giving the language model a set of small tools it can combine to complete complex tasks. The assistant is designed to accept various inputs, guide the user in correcting mistakes, and provide additional context to encourage exploration. Users can ask questions and perform tasks such as decompilation, class diagram generation, and variable renaming. ReVA supports different language models for online and local inference, with easy configuration options. The workflow involves opening the RE tool and program, then starting a chat session to interact with the assistant. Installation includes setting up the Python component, running the chat tool, and configuring the Ghidra extension for seamless integration. ReVA aims to enhance the reverse engineering process by breaking down actions into small parts, including the user's thoughts in the output, and providing support for monitoring and adjusting prompts.

AutoAudit

AutoAudit is an open-source large language model specifically designed for the field of network security. It aims to provide powerful natural language processing capabilities for security auditing and network defense, including analyzing malicious code, detecting network attacks, and predicting security vulnerabilities. Coupling AutoAudit with ClamAV yields a security scanning platform for practical security audit applications. The tool is intended to assist security professionals with accurate and fast analysis and predictions to combat evolving network threats.

aif

Arno's Iptables Firewall (AIF) is a single- and multi-homed firewall script with DSL/ADSL support. It is free software distributed under the GNU GPL. The script provides a comprehensive set of configuration files and plugins for setting up and managing firewall rules, including support for NAT, load balancing, and multirouting. It offers detailed instructions for installation and configuration, emphasizing security best practices and caution when modifying settings. The script is designed to protect against hostile attacks by blocking all incoming traffic by default and allowing users to configure specific rules for open ports and network interfaces.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers on LLM security applications. The repository lists papers sorted by conference name and publication year, covering topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on the listed conferences and journals in security, networking, software engineering, and cryptography. The papers span a wide range of subjects, including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, and fuzzing.