codebase-context-spec

Proposal for a flexible, tool-agnostic, codebase context system that helps teach AI coding tools about your codebase. Super easy to get started, just create a .context.md file in the root of your project.

Stars: 75

The Codebase Context Specification (CCS) project aims to standardize embedding contextual information within codebases to enhance understanding for both AI and human developers. It introduces a convention similar to `.env` and `.editorconfig` files but focused on documenting code for both AI and humans. By providing structured contextual metadata, collaborative documentation guidelines, and standardized context files, developers can improve code comprehension, collaboration, and development efficiency. The project includes a linter for validating context files and provides guidelines for using the specification with AI assistants. Tooling recommendations suggest creating memory systems, IDE plugins, AI model integrations, and agents for context creation and utilization. Future directions include integration with existing documentation systems, dynamic context generation, and support for explicit context overriding.

README:

Welcome to the Codebase Context Specification (CCS) repository! This project establishes a standardized method for embedding rich contextual information within codebases to enhance understanding for both AI and human developers. By providing a clear and consistent way to communicate project structure, conventions, and key concepts, we significantly improve code comprehension and facilitate more effective collaboration between humans and AI in software development.

- Full Specification (CODEBASE-CONTEXT.md)

- GitHub Repository

- NPM Package (codebase-context-lint)

- SubStack Article by Vaskin

- Context File Example (.context.md)

- AI Assistant Prompt (CODING-ASSISTANT-PROMPT.md)

To install the Codebase Context Linter globally, use npm:

npm install -g codebase-context-lintAfter global installation, you can use the codebase-context-lint command to lint your project:

codebase-context-lint [directory_to_lint] [options]You can also use npx to run the linter without installing it globally:

npx codebase-context-lint [directory_to_lint] [options]If no directory is specified, the linter will default to the current directory.

Options:

-

--log-level <level>: Set the logging level (error, warn, info, debug). Default: info -

--help,-h: Show the help message

Examples:

codebase-context-lint

codebase-context-lint .

codebase-context-lint /path/to/project --log-level debug

npx codebase-context-lint

npx codebase-context-lint /path/to/project --log-level debugThe linter will validate your .context.md, .context.yaml, .context.json, .contextdocs.md, and .contextignore files according to the Codebase Context Specification.

You can also use the Codebase Context Linter as a library in your TypeScript or JavaScript projects:

import { ContextLinter, LogLevel } from 'codebase-context-lint';

const linter = new ContextLinter(LogLevel.INFO);

const isValid = await linter.lintDirectory('/path/to/your/project', '1.0.0');

console.log(`Linting result: ${isValid ? 'Valid' : 'Invalid'}`);Note: The linter will automatically create any necessary directories when writing files.

To help you get started with creating context files for your project, we've developed the Codebase Context Editor. This tool simplifies the process of generating .context.md, .contextdocs.md, and .contextignore files that adhere to the Codebase Context Specification.

Get Started with the Codebase Context Editor

The Codebase Context Editor provides an intuitive interface for:

- Creating and editing context files

- Viewing and copying AI prompts for context generation

- Validating your context files against the specification

Whether you're new to the Codebase Context Specification or an experienced user, the editor can significantly streamline your workflow.

This project supports the following Node.js versions:

- Node.js 18.x

- Node.js 20.x

- Node.js 22.x

We recommend using the latest LTS (Long Term Support) version of Node.js for optimal performance and security.

The Codebase Context Specification introduces a convention similar to .env and .editorconfig systems, but focused on documenting your code for both AI and humans. Just as .env files manage environment variables and .editorconfig ensures consistent coding styles, CCS files (.context.md, .context.yaml, .context.json) provide a standardized way to capture and communicate the context of your codebase.

This convention allows developers to:

- Document high-level architecture and design decisions

- Explain project-specific conventions and patterns

- Highlight important relationships between different parts of the codebase

- Provide context that might not be immediately apparent from the code itself

By adopting this convention, teams can ensure that both human developers and AI assistants have access to crucial contextual information, leading to better code understanding, more accurate suggestions, and improved overall development efficiency.

- Contextual Metadata: Structured information about the project, its components, and conventions, designed for both human and AI consumption.

- AI-Human Collaborative Documentation: Guidelines for creating documentation that's easily parsed by AI models while remaining human-readable and maintainable.

-

Standardized Context Files: Consistent use of

.context.md,.context.yaml, and.context.jsonfiles for conveying codebase context at various levels (project-wide, directory-specific, etc.). - Context-Aware Development: Encouraging a development approach that considers and documents the broader context of code, not just its immediate functionality.

We've recently updated our dependencies to address security vulnerabilities and improve compatibility with different Node.js versions. If you encounter any issues after updating, please report them in our GitHub issues.

The CODING-ASSISTANT-PROMPT.md file provides guidelines for AI assistants to understand and use the Codebase Context Specification. This allows for immediate adoption of the specification without requiring specific tooling integration.

To use the Codebase Context Specification with an AI assistant:

- Include the content of CODING-ASSISTANT-PROMPT.md in your prompt to the AI assistant.

- Ask the AI to analyze your project's context files based on these guidelines.

- The AI will be able to provide more accurate and context-aware responses by following the instructions in the prompt.

Note that while this approach allows for immediate use of the specification, some features like .contextignore should eventually be applied by tooling for more robust implementation.

Developers are encouraged to create:

- Memory systems using git branches as storage

- IDE plugins for context file creation and editing

- AI model integrations for parsing and utilizing context

- Tools for aggregating and presenting project-wide context

- Agents that can help create context in codebases that are blank

- Codebase summarizers, submodule summarizers

- Memory systems that take advantage of the context

- Continuous TODO monitors that can re-try implementations / solutions

- Integration with existing documentation systems

- Dynamic context generation through code analysis

- Potential support for explicit context overriding

- Agent tool / context matching and references

We welcome contributions and feedback from the community to help shape the final specification. Here's how you can get involved:

- Review the Specification: Start by thoroughly reading the current specification in CODEBASE-CONTEXT.md.

- Open an Issue: For suggestions, questions, or concerns, open an issue in this repository.

- Submit a Pull Request: For proposed changes or additions, submit a pull request with a clear description of your modifications.

- Join the Discussion: Participate in open discussions and provide your insights on existing issues and pull requests.

All contributions will be reviewed and discussed openly. Significant changes may go through an RFC (Request for Comments) process to ensure thorough consideration and community input.

- 1.0.0-RFC: Initial RFC release of the Codebase Context Specification

For a deeper dive into the Codebase Context Specification, check out this SubStack article by Vaskin, the author of the specification.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for codebase-context-spec

Similar Open Source Tools

codebase-context-spec

The Codebase Context Specification (CCS) project aims to standardize embedding contextual information within codebases to enhance understanding for both AI and human developers. It introduces a convention similar to `.env` and `.editorconfig` files but focused on documenting code for both AI and humans. By providing structured contextual metadata, collaborative documentation guidelines, and standardized context files, developers can improve code comprehension, collaboration, and development efficiency. The project includes a linter for validating context files and provides guidelines for using the specification with AI assistants. Tooling recommendations suggest creating memory systems, IDE plugins, AI model integrations, and agents for context creation and utilization. Future directions include integration with existing documentation systems, dynamic context generation, and support for explicit context overriding.

db-ally

db-ally is a library for creating natural language interfaces to data sources. It allows developers to outline specific use cases for a large language model (LLM) to handle, detailing the desired data format and the possible operations to fetch this data. db-ally effectively shields the complexity of the underlying data source from the model, presenting only the essential information needed for solving the specific use cases. Instead of generating arbitrary SQL, the model is asked to generate responses in a simplified query language.

ctakes

Apache cTAKES is a clinical Text Analysis and Knowledge Extraction System that focuses on extracting knowledge from clinical text through Natural Language Processing (NLP) techniques. It is modular and employs rule-based and machine learning methods to extract concepts such as symptoms, procedures, diagnoses, medications, and anatomy with attributes and standard codes. cTAKES can identify temporal events, dates, and times, placing events in a patient timeline. It supports various biomedical text processing tasks and can handle different types of clinical and health-related narratives using multiple data standards. cTAKES is widely used in research initiatives and encourages contributions from professionals, researchers, doctors, and students from diverse backgrounds.

wandbot

Wandbot is a question-answering bot designed for Weights & Biases documentation. It employs Retrieval Augmented Generation with a ChromaDB backend for efficient responses. The bot features periodic data ingestion, integration with Discord and Slack, and performance monitoring through logging. It has a fallback mechanism for model selection and is evaluated based on retrieval accuracy and model-generated responses. The implementation includes creating document embeddings, constructing the Q&A RAGPipeline, model selection, deployment on FastAPI, Discord, and Slack, logging and analysis with Weights & Biases Tables, and performance evaluation.

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

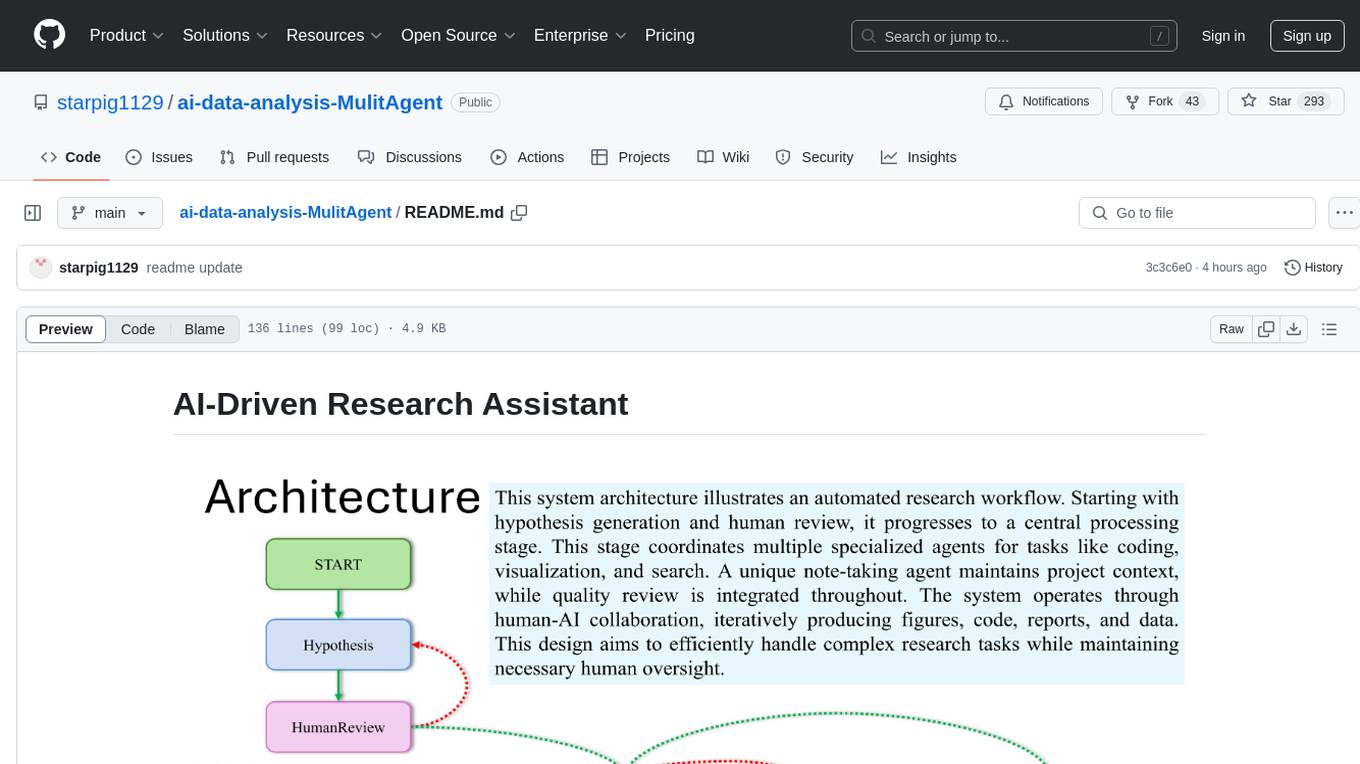

ai-data-analysis-MulitAgent

AI-Driven Research Assistant is an advanced AI-powered system utilizing specialized agents for data analysis, visualization, and report generation. It integrates LangChain, OpenAI's GPT models, and LangGraph for complex research processes. Key features include hypothesis generation, data processing, web search, code generation, and report writing. The system's unique Note Taker agent maintains project state, reducing overhead and improving context retention. System requirements include Python 3.10+ and Jupyter Notebook environment. Installation involves cloning the repository, setting up a Conda virtual environment, installing dependencies, and configuring environment variables. Usage instructions include setting data, running Jupyter Notebook, customizing research tasks, and viewing results. Main components include agents for hypothesis generation, process supervision, visualization, code writing, search, report writing, quality review, and note-taking. Workflow involves hypothesis generation, processing, quality review, and revision. Customization is possible by modifying agent creation and workflow definition. Current issues include OpenAI errors, NoteTaker efficiency, runtime optimization, and refiner improvement. Contributions via pull requests are welcome under the MIT License.

blades

Blades is a multimodal AI Agent framework in Go, supporting custom models, tools, memory, middleware, and more. It is well-suited for multi-turn conversations, chain reasoning, and structured output. The framework provides core components like Agent, Prompt, Chain, ModelProvider, Tool, Memory, and Middleware, enabling developers to build intelligent applications with flexible configuration and high extensibility. Blades leverages the characteristics of Go to achieve high decoupling and efficiency, making it easy to integrate different language model services and external tools. The project is in its early stages, inviting Go developers and AI enthusiasts to contribute and explore the possibilities of building AI applications in Go.

MemoryBear

MemoryBear is a next-generation AI memory system developed by RedBear AI, focusing on overcoming limitations in knowledge storage and multi-agent collaboration. It empowers AI with human-like memory capabilities, enabling deep knowledge understanding and cognitive collaboration. The system addresses challenges such as knowledge forgetting, memory gaps in multi-agent collaboration, and semantic ambiguity during reasoning. MemoryBear's core features include memory extraction engine, graph storage, hybrid search, memory forgetting engine, self-reflection engine, and FastAPI services. It offers a standardized service architecture for efficient integration and invocation across applications.

devdocs-to-llm

The devdocs-to-llm repository is a work-in-progress tool that aims to convert documentation from DevDocs format to Long Language Model (LLM) format. This tool is designed to streamline the process of converting documentation for use with LLMs, making it easier for developers to leverage large language models for various tasks. By automating the conversion process, developers can quickly adapt DevDocs content for training and fine-tuning LLMs, enabling them to create more accurate and contextually relevant language models.

stride-gpt

STRIDE GPT is an AI-powered threat modelling tool that leverages Large Language Models (LLMs) to generate threat models and attack trees for a given application based on the STRIDE methodology. Users provide application details, such as the application type, authentication methods, and whether the application is internet-facing or processes sensitive data. The model then generates its output based on the provided information. It features a simple and user-friendly interface, supports multi-modal threat modelling, generates attack trees, suggests possible mitigations for identified threats, and does not store application details. STRIDE GPT can be accessed via OpenAI API, Azure OpenAI Service, Google AI API, or Mistral API. It is available as a Docker container image for easy deployment.

LabelLLM

LabelLLM is an open-source data annotation platform designed to optimize the data annotation process for LLM development. It offers flexible configuration, multimodal data support, comprehensive task management, and AI-assisted annotation. Users can access a suite of annotation tools, enjoy a user-friendly experience, and enhance efficiency. The platform allows real-time monitoring of annotation progress and quality control, ensuring data integrity and timeliness.

easydist

EasyDist is an automated parallelization system and infrastructure designed for multiple ecosystems. It offers usability by making parallelizing training or inference code effortless with just a single line of change. It ensures ecological compatibility by serving as a centralized source of truth for SPMD rules at the operator-level for various machine learning frameworks. EasyDist decouples auto-parallel algorithms from specific frameworks and IRs, allowing for the development and benchmarking of different auto-parallel algorithms in a flexible manner. The architecture includes MetaOp, MetaIR, and the ShardCombine Algorithm for SPMD sharding rules without manual annotations.

synthora

Synthora is a lightweight and extensible framework for LLM-driven Agents and ALM research. It aims to simplify the process of building, testing, and evaluating agents by providing essential components. The framework allows for easy agent assembly with a single config, reducing the effort required for tuning and sharing agents. Although in early development stages with unstable APIs, Synthora welcomes feedback and contributions to enhance its stability and functionality.

btp-cap-genai-rag

This GitHub repository provides support for developers, partners, and customers to create advanced GenAI solutions on SAP Business Technology Platform (SAP BTP) following the Reference Architecture. It includes examples on integrating Foundation Models and Large Language Models via Generative AI Hub, using LangChain in CAP, and implementing advanced techniques like Retrieval Augmented Generation (RAG) through embeddings and SAP HANA Cloud's Vector Engine for enhanced value in customer support scenarios.

For similar tasks

codebase-context-spec

The Codebase Context Specification (CCS) project aims to standardize embedding contextual information within codebases to enhance understanding for both AI and human developers. It introduces a convention similar to `.env` and `.editorconfig` files but focused on documenting code for both AI and humans. By providing structured contextual metadata, collaborative documentation guidelines, and standardized context files, developers can improve code comprehension, collaboration, and development efficiency. The project includes a linter for validating context files and provides guidelines for using the specification with AI assistants. Tooling recommendations suggest creating memory systems, IDE plugins, AI model integrations, and agents for context creation and utilization. Future directions include integration with existing documentation systems, dynamic context generation, and support for explicit context overriding.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.