neocodeium

free AI completion plugin for neovim

Stars: 160

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

README:

⚡ Free AI completion plugin powered by codeium ⚡

NeoCodeium is a plugin that provides AI completion powered by Codeium. The primary reason for creating NeoCodeium was to address the issue of flickering suggestions in the official plugin, which was particularly annoying when dealing with multi-line virtual text. Additionally, I wanted accepting Codeium suggestions to be repeatable with the `.` command, because I use it as my main completion plugin and only manually invoke nvim-cmp.

Differences with codeium.vim

- Supports only Neovim (written in Lua)

- Flickering has been removed in most scenarios, resulting in a snappier experience

- Completions on the current line can now be repeated using the `.` key

- Performance improvements have been achieved through cache techniques

- The suggestion count label is displayed in the number column, making it closer to the context

- Default keymaps have been removed

- Possibility to complete only word/line of the suggestion (codeium.vim added this feature in 9fa0dee)

- No debounce by default, allowing suggestions to appear while typing (this behavior can be disabled with `debounce = true` in the setup)

[!Warning] While using this plugin, your code is constantly being sent to Codeium servers by their own language server in order to evaluate and return completions. Before using it, make sure you have read and accepted the Codeium Privacy Policy. NeoCodeium can disable the server globally or for individual buffers. This plugin does not send any data to the server from disabled buffers, but the Codeium server is still running behind the scenes and we cannot guarantee that it doesn't send information while running.

- Neovim >= 0.10.0

Here’s an example for 💤lazy plugin manager. If you're using a different plugin manager, please refer to its documentation for installation instructions.

-- add this to the file where you setup your other plugins:

{

"monkoose/neocodeium",

event = "VeryLazy",

config = function()

local neocodeium = require("neocodeium")

neocodeium.setup()

vim.keymap.set("i", "<A-f>", neocodeium.accept)

end,

}

Now you can use Alt-f in insert mode to accept codeium suggestions.

Enterprise users: you should receive portal and API URLs for Codeium from your company.

Once you get them, add them to your config. This way :NeoCodeium auth will authenticate you on the right portal. For example,

{

"monkoose/neocodeium",

event = "VeryLazy",

opts = {

server = {

api_url = 'https://codeium.company.net/_route/api_server',

portal_url = 'https://codeium.company.net',

},

}

}

Note: To obtain an API token, you’ll need to run `:NeoCodeium auth`.

On Windows WSL, `wslview` (`sudo apt install wslu`) should be installed so that the browser opens properly.

In addition to the already mentioned accept() function, the plugin also provides a few others:

local neocodeium = require("neocodeium")

-- Accepts the suggestion

neocodeium.accept()

-- Accepts only part of the suggestion if the full suggestion doesn't make sense

neocodeium.accept_word()

neocodeium.accept_line()

-- Clears the current suggestion

neocodeium.clear()

-- Cycles through suggestions by `n` (1 by default) items. Use a negative value to cycle in reverse order

neocodeium.cycle(n)

-- Same as `cycle()`, but also tries to show a suggestion if none is visible.

-- Mostly useful with the enabled `manual` option

neocodeium.cycle_or_complete(n)

-- Checks if a suggestion's virtual text is visible or not (useful for some complex mappings)

neocodeium.visible()

Using alongside nvim-cmp

If you are using NeoCodeium with `manual = false` (the default), it is recommended to set nvim-cmp to manual completion and to clear NeoCodeium suggestions when the nvim-cmp pop-up menu opens. You can achieve this with the following code in the place where nvim-cmp is configured:

local cmp = require("cmp")

local neocodeium = require("neocodeium")

local commands = require("neocodeium.commands")

cmp.event:on("menu_opened", function()

neocodeium.clear()

end)

neocodeium.setup({

filter = function()

return not cmp.visible()

end,

})

cmp.setup({

completion = {

autocomplete = false,

},

})

If you want to use autocompletion with nvim-cmp, then it is recommended to use NeoCodeium with `manual = true`, add a binding for triggering NeoCodeium completion, and make sure to close the nvim-cmp window when completions are rendered. You can achieve this with the following code where you set up NeoCodeium:

local neocodeium = require("neocodeium")

neocodeium.setup({

manual = true, -- recommended to not conflict with nvim-cmp

})

-- create an autocommand which closes cmp when ai completions are displayed

vim.api.nvim_create_autocmd("User", {

pattern = "NeoCodeiumCompletionDisplayed",

callback = function() require("cmp").abort() end

})

-- set up some sort of keymap to cycle and complete to trigger completion

vim.keymap.set("i", "<A-e>", function() neocodeium.cycle_or_complete() end)

-- make sure to have a mapping to accept a completion

vim.keymap.set("i", "<A-f>", function() neocodeium.accept() end)

Disable in Telescope prompt and DAP REPL

require("neocodeium").setup({

filetypes = {

...

TelescopePrompt = false,

["dap-repl"] = false,

},

})

Enable NeoCodeium only in specified filetypes

local neocodeium = require("neocodeium")

local filetypes = { 'lua', 'python' }

neocodeium.setup({

-- function accepts one argument `bufnr`

filter = function(bufnr)

if vim.tbl_contains(filetypes, vim.api.nvim_get_option_value('filetype', { buf = bufnr})) then

return true

end

return false

end

})

NeoCodeium doesn’t provide any keymaps, which means you’ll need to add them yourself. While codeium.vim and copilot.vim set the `<Tab>` key as the default key for accepting a suggestion, we recommend avoiding it as it has some downsides to consider (although nothing is stopping you from using it):

- Risk of Interference: There’s a high chance of it conflicting with other plugins (such as snippets, nvim-cmp, etc.).

- Not Consistent: It doesn’t work in the `:h command-line-window`.

- Indentation Challenges: It is harder to indent with the tab at the start of a line.
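If you still prefer `<Tab>`, one way to reduce the interference described above is to accept a suggestion only when one is visible and otherwise pass the key through. The following is a minimal sketch, not something shipped with the plugin: it assumes no other plugin maps `<Tab>` in insert mode, and feeding a literal `<Tab>` as the fallback is an illustrative choice.

```lua
local neocodeium = require("neocodeium")

vim.keymap.set("i", "<Tab>", function()
  if neocodeium.visible() then
    -- A suggestion is shown as virtual text, so accept it
    neocodeium.accept()
  else
    -- No suggestion: insert a regular <Tab>.
    -- Mode "n" feeds the key without remapping, so this mapping
    -- does not call itself recursively.
    local tab = vim.api.nvim_replace_termcodes("<Tab>", true, false, true)
    vim.api.nvim_feedkeys(tab, "n", false)
  end
end)
```

The remaining downsides still apply with a mapping like this, since a visible suggestion at the start of a line takes priority over indentation.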

Suggested keymaps:

vim.keymap.set("i", "<A-f>", function()

require("neocodeium").accept()

end)

vim.keymap.set("i", "<A-w>", function()

require("neocodeium").accept_word()

end)

vim.keymap.set("i", "<A-a>", function()

require("neocodeium").accept_line()

end)

vim.keymap.set("i", "<A-e>", function()

require("neocodeium").cycle_or_complete()

end)

vim.keymap.set("i", "<A-r>", function()

require("neocodeium").cycle_or_complete(-1)

end)

vim.keymap.set("i", "<A-c>", function()

require("neocodeium").clear()

end)

NeoCodeium provides the `:NeoCodeium` user command, which has some useful actions:

- `:NeoCodeium auth` - authenticates the user and saves the API token.

- `:NeoCodeium[!] disable` - disables completions. With the bang also stops the codeium server.

- `:NeoCodeium enable` - enables NeoCodeium completion.

- `:NeoCodeium[!] toggle` - toggles NeoCodeium completion. The bang is passed on to the disable command.

- `:NeoCodeium disable_buffer` - disables NeoCodeium completion in the current buffer.

- `:NeoCodeium enable_buffer` - enables NeoCodeium completion in the current buffer.

- `:NeoCodeium toggle_buffer` - toggles NeoCodeium completion in the current buffer.

- `:NeoCodeium open_log` - opens a new tab with the log output. More information is in the logging section.

- `:NeoCodeium chat` - opens the browser with the Codeium Chat.

- `:NeoCodeium restart` - restarts the Codeium server (useful if the server stops responding for any reason).

You can also use the same commands in your Lua scripts by calling:

require("neocodeium.commands").<command_name>()

-- Examples

-- disable completions

require("neocodeium.commands").disable()

-- disable completions and stop the server

require("neocodeium.commands").disable(true)

NeoCodeium triggers several user events which can be used to trigger code. These can be used to optimize when statusline elements are updated, to create mappings only when the server is available, or to modify completion engine settings when AI completion starts or hints are displayed.

- `NeoCodeiumServerConnecting` - triggers when a connection to the Codeium server is starting

- `NeoCodeiumServerConnected` - triggers when a successful connection to the Codeium server is made

- `NeoCodeiumServerStopped` - triggers when the Codeium server is stopped

- `NeoCodeiumEnabled` - triggers when the NeoCodeium plugin is enabled globally

- `NeoCodeiumDisabled` - triggers when the NeoCodeium plugin is disabled globally

- `NeoCodeiumBufEnabled` - triggers when the NeoCodeium plugin is enabled for a buffer

- `NeoCodeiumBufDisabled` - triggers when the NeoCodeium plugin is disabled for a buffer

- `NeoCodeiumCompletionDisplayed` - triggers when NeoCodeium successfully displays a completion item as virtual text

- `NeoCodeiumCompletionCleared` - triggers when NeoCodeium clears virtual text and completions
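These events are ordinary `User` autocommands, so they can be handled with `vim.api.nvim_create_autocmd`. As a rough sketch, the following reports server connection changes with `vim.notify`; the augroup name `neocodeium_events` and the message texts are made up for illustration.

```lua
-- Hypothetical augroup name; `clear = true` avoids duplicate handlers on reload
local group = vim.api.nvim_create_augroup("neocodeium_events", { clear = true })

vim.api.nvim_create_autocmd("User", {
  group = group,
  pattern = "NeoCodeiumServerConnected",
  callback = function()
    vim.notify("Codeium server connected", vim.log.levels.INFO)
  end,
})

vim.api.nvim_create_autocmd("User", {
  group = group,
  pattern = "NeoCodeiumServerStopped",
  callback = function()
    vim.notify("Codeium server stopped", vim.log.levels.WARN)
  end,
})
```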

`require("neocodeium").get_status()` can be used to get some useful information about the current state.

The best use case for this output is to implement a custom statusline component.

This function returns two numbers:

- Status of the plugin

  0 - Enabled
  1 - Globally disabled with `:NeoCodeium disable`, `:NeoCodeium toggle` or with `setup.enabled = false`
  2 - Buffer is disabled with `:NeoCodeium disable_buffer`
  3 - Buffer is disabled when its filetype matches `setup.filetypes = { some_filetype = false }`
  4 - Buffer is disabled when `setup.filter` returns `false` for the current buffer
  5 - Buffer has wrong encoding (codeium can accept only UTF-8 and LATIN-1 encodings)

- Server status

  0 - Server is on (running)
  1 - Connecting to the server (not working status)
  2 - Server is off (stopped)

To keep the output of `get_status()` up to date, invoke this function from the events listed above.

Statusline Examples

Without statusline plugins

-- function to process get_status() and set buffer variable to that data.

local neocodeium = require("neocodeium")

local function get_neocodeium_status(ev)

local status, server_status = neocodeium.get_status()

-- process this data, convert it to custom string/icon etc and set buffer variable

if status == 0 then

vim.api.nvim_buf_set_var(ev.buf, "neocodeium_status", "OK")

else

vim.api.nvim_buf_set_var(ev.buf, "neocodeium_status", "OFF")

end

vim.cmd.redrawstatus()

end

-- Then invoke this function only when one of these events fires

vim.api.nvim_create_autocmd("User", {

-- group = ..., -- set some augroup here

pattern = {

"NeoCodeiumServer*",

"NeoCodeium*Enabled",

"NeoCodeium*Disabled",

},

callback = get_neocodeium_status,

})

-- add neocodeium_status to your statusline

vim.opt.statusline:append("%{get(b:, 'neocodeium_status', '')}")

Heirline.nvim

local NeoCodeium = {

static = {

symbols = {

status = {

[0] = " ", -- Enabled

[1] = " ", -- Disabled Globally

[2] = " ", -- Disabled for Buffer

[3] = " ", -- Disabled for Buffer filetype

[4] = " ", -- Disabled for Buffer by the filter function

[5] = " ", -- Disabled for Buffer encoding

},

server_status = {

[0] = " ", -- Connected

[1] = " ", -- Connecting

[2] = " ", -- Disconnected

},

},

},

update = {

"User",

pattern = { "NeoCodeiumServer*", "NeoCodeium*{En,Dis}abled" },

callback = function() vim.cmd.redrawstatus() end,

},

provider = function(self)

local symbols = self.symbols

local status, server_status = require("neocodeium").get_status()

return symbols.status[status] .. symbols.server_status[server_status]

end,

hl = { fg = "yellow" },

}

NeoCodeium offers a couple of highlight groups. Feel free to adjust them to your preference and to match your chosen color scheme:

- `NeoCodeiumSuggestion` - virtual text color of the plugin suggestions (default: `#808080`)

- `NeoCodeiumLabel` - color of the label that indicates the number of suggestions (default: inverted DiagnosticInfo)
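As a sketch of how these groups might be adjusted, the snippet below sets both with `vim.api.nvim_set_hl` and reapplies them on `ColorScheme`, on the assumption that switching color schemes may reset them. The color value and the link target `DiagnosticHint` are arbitrary examples, not plugin defaults.

```lua
local function set_neocodeium_hl()
  -- Example values only; pick colors that suit your color scheme
  vim.api.nvim_set_hl(0, "NeoCodeiumSuggestion", { fg = "#5c6370", italic = true })
  vim.api.nvim_set_hl(0, "NeoCodeiumLabel", { link = "DiagnosticHint" })
end

-- Apply once now, then again whenever the color scheme changes
set_neocodeium_hl()

vim.api.nvim_create_autocmd("ColorScheme", {
  callback = set_neocodeium_hl,
})
```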

While running, NeoCodeium logs some messages into a temporary file. It can be viewed with the `:NeoCodeium open_log` command. By default only errors and warnings are logged.

You can set the logging level to one of `trace`, `debug`, `info`, `warn` or `error` by exporting the `NEOCODEIUM_LOG_LEVEL` environment variable.

Example:

NEOCODEIUM_LOG_LEVEL=info nvim

NeoCodeium comes with the following default options:

-- NeoCodeium Configuration

require("neocodeium").setup({

-- If `false`, then would not start codeium server (disabled state)

-- You can manually enable it at runtime with `:NeoCodeium enable`

enabled = true,

-- Path to a custom Codeium server binary (you can download one from:

-- https://github.com/Exafunction/codeium/releases)

bin = nil,

-- When set to `true`, autosuggestions are disabled.

-- Use `require'neocodeium'.cycle_or_complete()` to show suggestions manually

manual = false,

-- Information about the API server to use

server = {

-- API URL to use (for Enterprise mode)

api_url = nil,

-- Portal URL to use (for registering a user and downloading the binary)

portal_url = nil,

},

-- Set to `false` to disable showing the number of suggestions label in the line number column

show_label = true,

-- Set to `true` to enable suggestions debounce

debounce = false,

-- Maximum number of lines parsed from loaded buffers (current buffer always fully parsed)

-- Set to `0` to disable parsing non-current buffers (may lower suggestion quality)

-- Set it to `-1` to parse all lines

max_lines = 10000,

-- Set to `true` to disable some non-important messages, like "NeoCodeium: server started..."

silent = false,

-- Set to a function that returns `true` if a buffer should be enabled

-- and `false` if the buffer should be disabled

-- You can still enable a buffer disabled by this option with `:NeoCodeium enable_buffer`

filter = function(bufnr) return true end,

-- Set to `false` to disable suggestions in buffers with specific filetypes

-- You can still enable a buffer disabled by this option with `:NeoCodeium enable_buffer`

filetypes = {

help = false,

gitcommit = false,

gitrebase = false,

["."] = false,

},

-- List of directories and files to detect workspace root directory for Codeium chat

root_dir = { ".bzr", ".git", ".hg", ".svn", "_FOSSIL_", "package.json" }

})

You can chat with AI in the browser with the `:NeoCodeium chat` command. The first time you open it, it requires the server to restart with some chat-specific flags, so be patient (this usually doesn't take more than a few seconds). After that, it should open a chat window in the browser with the context of the current buffer. Here, you can ask some specific questions about your code base. When you switch buffers, this context should be updated automatically (it takes some time). You can see the current chat context in the bottom-left corner.
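Since the user commands are also callable as `require("neocodeium.commands").<command_name>()`, the chat can be bound to a key. This is only a sketch: the `<leader>cc` mapping and its description are arbitrary choices, not something the plugin defines.

```lua
-- Hypothetical normal-mode mapping that opens Codeium Chat in the browser
vim.keymap.set("n", "<leader>cc", function()
  require("neocodeium.commands").chat()
end, { desc = "Open Codeium Chat in the browser" })
```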

- codeium.vim - The main source for understanding how to use Codeium.

MIT license


Similar Open Source Tools

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

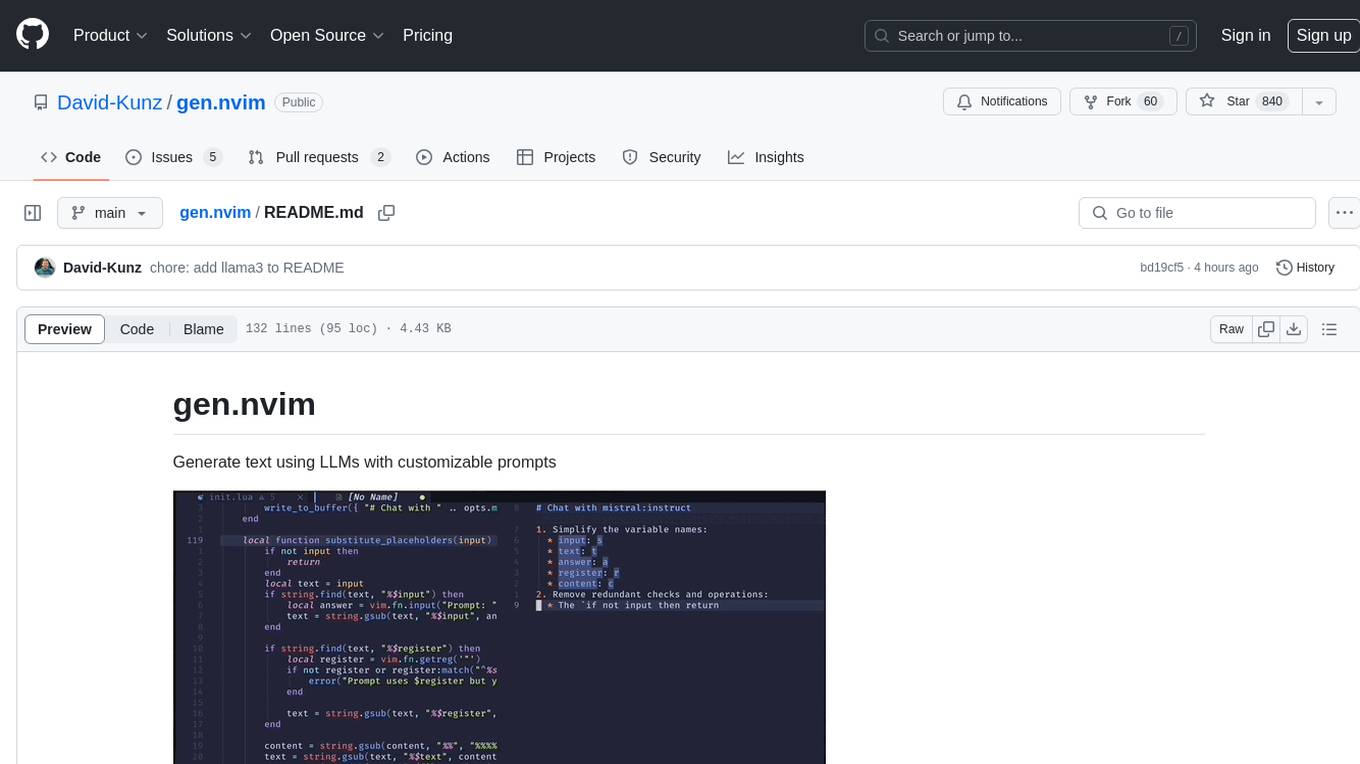

gen.nvim

gen.nvim is a tool that allows users to generate text using Language Models (LLMs) with customizable prompts. It requires Ollama with models like `llama3`, `mistral`, or `zephyr`, along with Curl for installation. Users can use the `Gen` command to generate text based on predefined or custom prompts. The tool provides key maps for easy invocation and allows for follow-up questions during conversations. Additionally, users can select a model from a list of installed models and customize prompts as needed.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

APIMyLlama

APIMyLlama is a server application that provides an interface to interact with the Ollama API, a powerful AI tool to run LLMs. It allows users to easily distribute API keys to create amazing things. The tool offers commands to generate, list, remove, add, change, activate, deactivate, and manage API keys, as well as functionalities to work with webhooks, set rate limits, and get detailed information about API keys. Users can install APIMyLlama packages with NPM, PIP, Jitpack Repo+Gradle or Maven, or from the Crates Repository. The tool supports Node.JS, Python, Java, and Rust for generating responses from the API. Additionally, it provides built-in health checking commands for monitoring API health status.

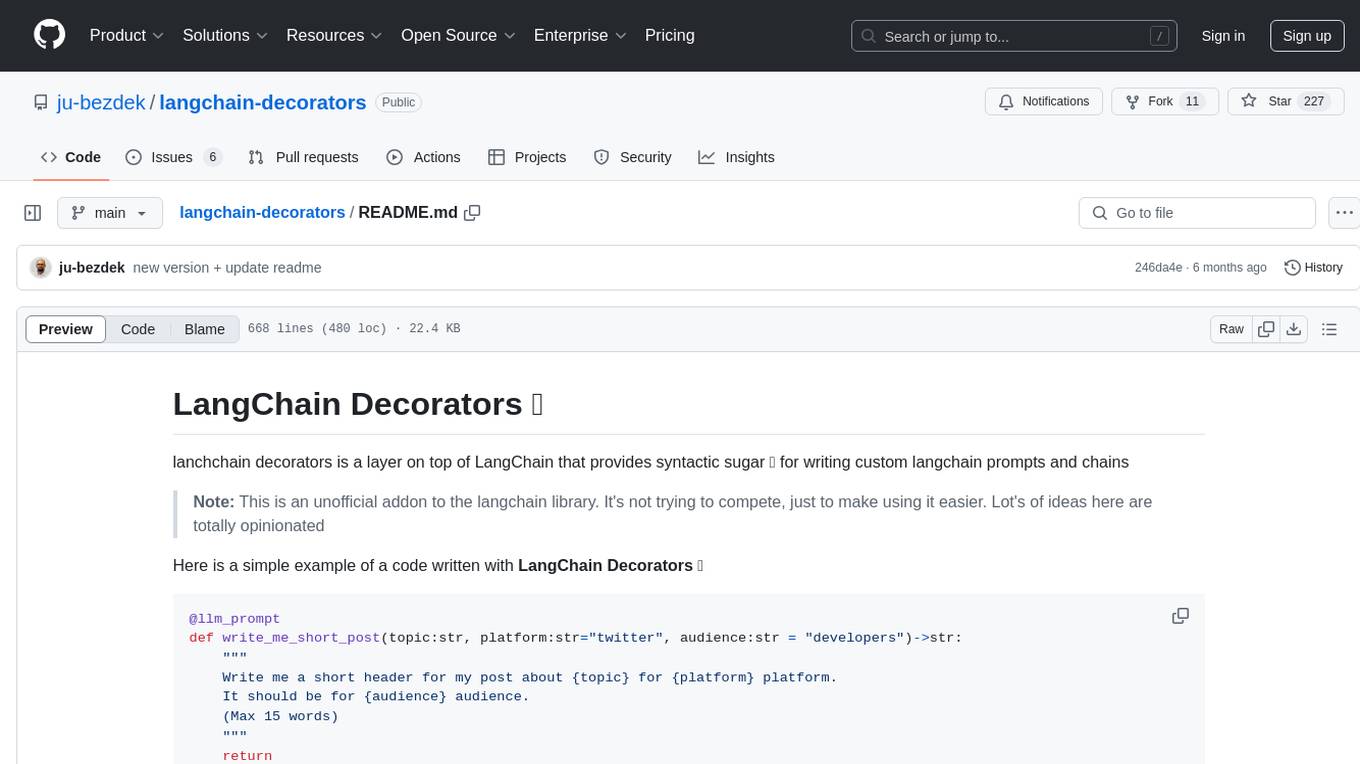

langchain-decorators

LangChain Decorators is a layer on top of LangChain that provides syntactic sugar for writing custom langchain prompts and chains. It offers a more pythonic way of writing code, multiline prompts without breaking code flow, IDE support for hinting and type checking, leveraging LangChain ecosystem, support for optional parameters, and sharing parameters between prompts. It simplifies streaming, automatic LLM selection, defining custom settings, debugging, and passing memory, callback, stop, etc. It also provides functions provider, dynamic function schemas, binding prompts to objects, defining custom settings, and debugging options. The project aims to enhance the LangChain library by making it easier to use and more efficient for writing custom prompts and chains.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

openai

An open-source client package that allows developers to easily integrate the power of OpenAI's state-of-the-art AI models into their Dart/Flutter applications. The library provides simple and intuitive methods for making requests to OpenAI's various APIs, including the GPT-3 language model, DALL-E image generation, and more. It is designed to be lightweight and easy to use, enabling developers to focus on building their applications without worrying about the complexities of dealing with HTTP requests. Note that this is an unofficial library as OpenAI does not have an official Dart library.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

perplexity-ai

Perplexity is a module that utilizes emailnator to generate new accounts, providing users with 5 pro queries per account creation. It enables the creation of new Gmail accounts with emailnator, ensuring unlimited pro queries. The tool requires specific Python libraries for installation and offers both a web interface and an API for different usage scenarios. Users can interact with the tool to perform various tasks such as account creation, query searches, and utilizing different modes for research purposes. Perplexity also supports asynchronous operations and provides guidance on obtaining cookies for account usage and account generation from emailnator.

agent-mimir

Agent Mimir is a command line and Discord chat client 'agent' manager for LLM's like Chat-GPT that provides the models with access to tooling and a framework with which accomplish multi-step tasks. It is easy to configure your own agent with a custom personality or profession as well as enabling access to all tools that are compatible with LangchainJS. Agent Mimir is based on LangchainJS, every tool or LLM that works on Langchain should also work with Mimir. The tasking system is based on Auto-GPT and BabyAGI where the agent needs to come up with a plan, iterate over its steps and review as it completes the task.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

Gemini-API

Gemini-API is a reverse-engineered asynchronous Python wrapper for Google Gemini web app (formerly Bard). It provides features like persistent cookies, ImageFx support, extension support, classified outputs, official flavor, and asynchronous operation. The tool allows users to generate contents from text or images, have conversations across multiple turns, retrieve images in response, generate images with ImageFx, save images to local files, use Gemini extensions, check and switch reply candidates, and control log level.

For similar tasks

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

evedel

Evedel is an Emacs package designed to streamline the interaction with LLMs during programming. It aims to reduce manual code writing by creating detailed instruction annotations in the source files for LLM models. The tool leverages overlays to track instructions, categorize references with tags, and provide a seamless workflow for managing and processing directives. Evedel offers features like saving instruction overlays, complex query expressions for directives, and easy navigation through instruction overlays across all buffers. It is versatile and can be used in various types of buffers beyond just programming buffers.

obsidian-github-copilot

Obsidian Github Copilot Plugin is a tool that enables users to utilize Github Copilot within the Obsidian editor. It acts as a bridge between Obsidian and the Github Copilot service, allowing for enhanced code completion and suggestion features. Users can configure various settings such as suggestion generation delay, key bindings, and visibility of suggestions. The plugin requires a Github Copilot subscription, Node.js 18 or later, and a network connection to interact with the Copilot service. It simplifies the process of writing code by providing helpful completions and suggestions directly within the Obsidian editor.

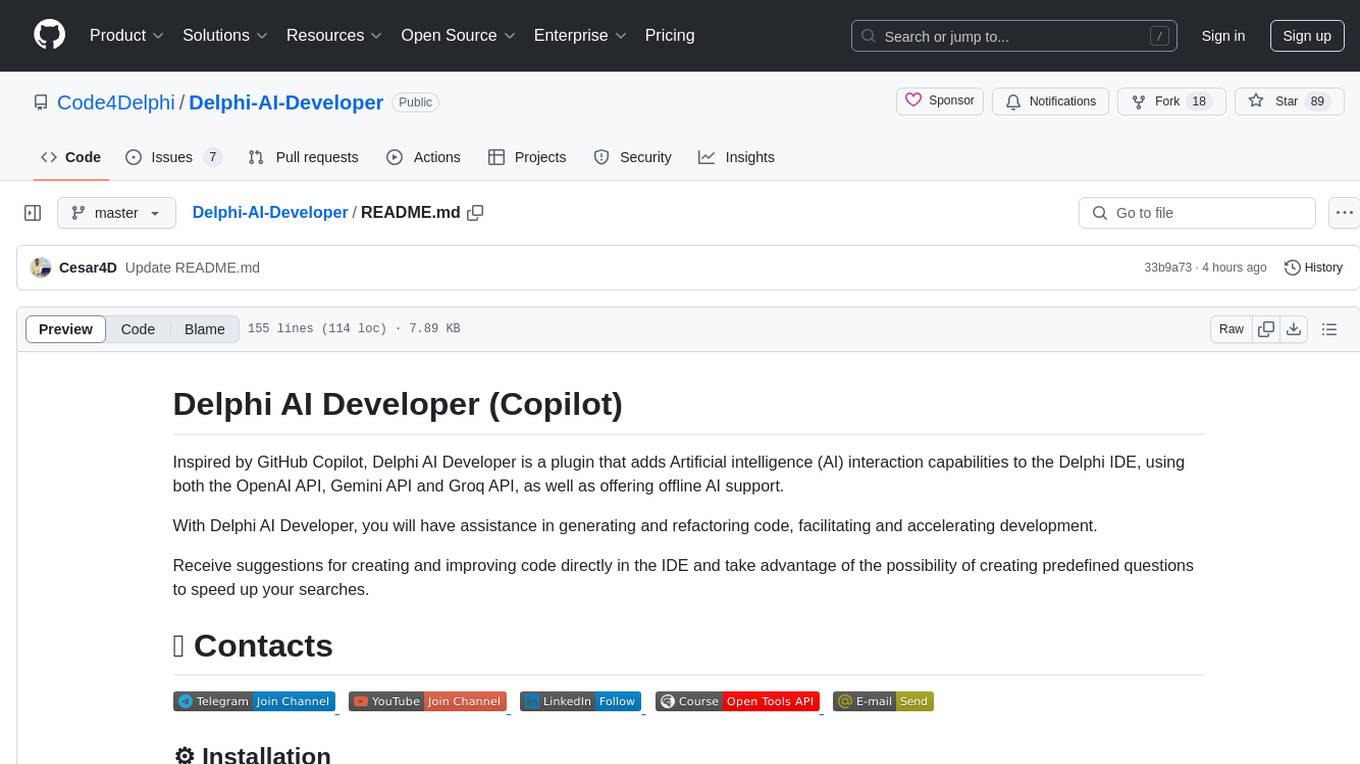

Delphi-AI-Developer

Delphi AI Developer is a plugin that enhances the Delphi IDE with AI capabilities from OpenAI, Gemini, and Groq APIs. It assists in code generation, refactoring, and speeding up development by providing code suggestions and predefined questions. Users can interact with AI chat and databases within the IDE, customize settings, and access documentation. The plugin is open-source and under the MIT License.
