aire

Modern form builder for Laravel

Stars: 544

Aire is a modern Laravel form builder with a focus on expressive and beautiful code. It allows easy configuration of form components using fluent method calls or Blade components. Aire supports customization through config files and custom views, data binding with Eloquent models or arrays, method spoofing, CSRF token injection, server-side and client-side validation, and translations. It is designed to run on Laravel 5.8.28 and higher, with support for PHP 7.1 and higher. Aire is actively maintained and under consideration for additional features like read-only plain text, cross-browser support for custom checkboxes and radio buttons, support for Choices.js or similar libraries, improved file input handling, and better support for content prepending or appending to inputs.

README:

Aire is a modern Laravel form builder (demo) with a focus on the same expressive and beautiful code you expect from the Laravel ecosystem.

The most common usage is via the Aire facade in your blade templates. All method calls

are fluent, allowing for easy configuration of your form components:

{{ Aire::open()

->route('users.update')

->bind($user) }}

{{ Aire::input('given_name', 'First/Given Name')

->id('given_name') }}

{{ Aire::input('family_name', 'Last/Family Name')

->id('family_name')

->autoComplete('off') }}

{{ Aire::email('email', 'Email Address')

->helpText('Please use your company email address.') }}

{{ Aire::submit('Update User') }}

{{ Aire::close() }}As of Aire 2.4.0, you can also use all Aire elements as Blade Components. The above form is identical to:

<x-aire::form route="users.update" :bind="$user">

<x-aire::input

name="given_name"

label="First/Given Name"

id="given_name"

/>

<x-aire::input

name="family_name"

label="Last/Family Name"

id="family_name"

auto-complete="off"

/>

<x-aire::email

name="email"

label="Email Address"

help-text="Please use your company email address."

/>

<x-aire::submit label="Update User" />

</x-aire::form>Install via composer with:

composer require glhd/aireAire comes with classes that should work with the default Tailwind class names

out of the box (.bg-blue-600 etc). If you need to change the default class names

for any given element, there are two different ways to go about it.

The first is to publish the aire.php config file via php artisan vendor:publish --tag=aire-config

and update the default_classes config for the element you'd like to change:

return [

'default_classes' => [

'input' => 'text-gray-900 bg-white border rounded-sm',

],

];The second option is to publish custom views via php artisan vendor:publish --tag=aire-views

which gives you total control over component rendering. There's a view file for each component

type (input.blade.php etc) as well as for component grouping. This gives you the most

flexibility, but means that you have the maintain your views as Aire releases add new

features or change component rendering.

When you publish the aire.php config file via php artisan vendor:publish --tag=aire-config,

there are a handful of other configuration options. The config file is fully documented,

so go check it out!

Aire automatically binds old input to your form so that values are preserved if a validation

error occurs. You can also bind data with the bind() method.

// Bind Eloquent models

Aire::bind(User::find(1));

// Bind an array

Aire::bind(['given_name' => 'Chris']);

// Bind any object

Aire::bind((object) ['given_name' => 'Chris']);Binding is applied in the following order:

- Values set with

value()are applied no matter what - Old input is applied if available

- Bound data is applied last

Aire will automatically add the Laravel _method field for forms that are not GET or POST.

It will also automatically infer the intended method from the route if possible.

// In routes

Route::delete('/photos/{photo}', 'PhotosController@destroy')

->name('photos.destroy');

// In your view

{{ Aire::open()->route('photos.destroy', $photo) }}

{{ Aire::close() }}Will generate the resulting HTML:

<form action="/photos/1" method="POST">

<input type="hidden" name="_method" value="DELETE" />

</form>Aire will automatically inject a CSRF token if one exists and the form is not a GET form.

Simply enable the session and a hidden _token field will be injected for you.

If you run validations on the server, Aire will pick up any errors and automatically apply error classes and show error messages within the associated input's group.

You can also include an error summary, which provides an easy way to show your users an error at the top of the page if validation failed.

// Print "There are X errors on this page that you must fix before continuing."

{{ Aire::summary() }}

// Also include an itemized list of errors

{{ Aire::summary()->verbose() }}Javascript validation in Aire is in its early stages. Browser testing is limited, and the

Javascript code hasn't had an performance optimizations applied. That said, Aire

supports automatic client-side validation—simply pass an array of rules or a FormRequest

object and Aire will automatically apply most rules on the client side (thanks

to validatorjs!).

Aire should run on Laravel 5.8.28 and higher, and PHP 7.1 and higher. Our policy is to test the last two major releases of PHP and Laravel, so support below that is not guaranteed.

Aire comes with support for a handful of languages (feel free to submit a PR!). If you would like to add your own translations, you can do so by publishing them with:

php artisan vendor:publish --tag=aire-translationsThere are a few things that are still either in-the-works or being considered for a later release. These include:

- Read-only plain text

- Cross-browser support for custom checkboxes and radio buttons via a config option

- Support for Choices.js or similar

<select>UI libraries - Better handling of file inputs

- Better support for prepending or appending content to inputs

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aire

Similar Open Source Tools

aire

Aire is a modern Laravel form builder with a focus on expressive and beautiful code. It allows easy configuration of form components using fluent method calls or Blade components. Aire supports customization through config files and custom views, data binding with Eloquent models or arrays, method spoofing, CSRF token injection, server-side and client-side validation, and translations. It is designed to run on Laravel 5.8.28 and higher, with support for PHP 7.1 and higher. Aire is actively maintained and under consideration for additional features like read-only plain text, cross-browser support for custom checkboxes and radio buttons, support for Choices.js or similar libraries, improved file input handling, and better support for content prepending or appending to inputs.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

web-llm

WebLLM is a modular and customizable javascript package that directly brings language model chats directly onto web browsers with hardware acceleration. Everything runs inside the browser with no server support and is accelerated with WebGPU. WebLLM is fully compatible with OpenAI API. That is, you can use the same OpenAI API on any open source models locally, with functionalities including json-mode, function-calling, streaming, etc. We can bring a lot of fun opportunities to build AI assistants for everyone and enable privacy while enjoying GPU acceleration.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

experts

Experts.js is a tool that simplifies the creation and deployment of OpenAI's Assistants, allowing users to link them together as Tools to create a Panel of Experts system with expanded memory and attention to detail. It leverages the new Assistants API from OpenAI, which offers advanced features such as referencing attached files & images as knowledge sources, supporting instructions up to 256,000 characters, integrating with 128 tools, and utilizing the Vector Store API for efficient file search. Experts.js introduces Assistants as Tools, enabling the creation of Multi AI Agent Systems where each Tool is an LLM-backed Assistant that can take on specialized roles or fulfill complex tasks.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

playword

PlayWord is a tool designed to supercharge web test automation experience with AI. It provides core features such as enabling browser operations and validations using natural language inputs, as well as monitoring interface to record and dry-run test steps. PlayWord supports multiple AI services including Anthropic, Google, and OpenAI, allowing users to select the appropriate provider based on their requirements. The tool also offers features like assertion handling, frame handling, custom variables, test recordings, and an Observer module to track user interactions on web pages. With PlayWord, users can interact with web pages using natural language commands, reducing the need to worry about element locators and providing AI-powered adaptation to UI changes.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

auto-playwright

Auto Playwright is a tool that allows users to run Playwright tests using AI. It eliminates the need for selectors by determining actions at runtime based on plain-text instructions. Users can automate complex scenarios, write tests concurrently with or before functionality development, and benefit from rapid test creation. The tool supports various Playwright actions and offers additional options for debugging and customization. It uses HTML sanitization to reduce costs and improve text quality when interacting with the OpenAI API.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

For similar tasks

forms-flow-ai

formsflow.ai is a Free, Open-Source, Low Code Development Platform for rapidly building powerful business applications. It combines leading Open-Source applications including form.io forms, Camunda’s workflow engine, Keycloak’s security, and Redash’s data analytics into a seamless, integrated platform. Check out the installation documentation for installation instructions and features documentation to explore features and capabilities in detail.

morgana-form

MorGana Form is a full-stack form builder project developed using Next.js, React, TypeScript, Ant Design, PostgreSQL, and other technologies. It allows users to quickly create and collect data through survey forms. The project structure includes components, hooks, utilities, pages, constants, Redux store, themes, types, server-side code, and component packages. Environment variables are required for database settings, NextAuth login configuration, and file upload services. Additionally, the project integrates an AI model for form generation using the Ali Qianwen model API.

aire

Aire is a modern Laravel form builder with a focus on expressive and beautiful code. It allows easy configuration of form components using fluent method calls or Blade components. Aire supports customization through config files and custom views, data binding with Eloquent models or arrays, method spoofing, CSRF token injection, server-side and client-side validation, and translations. It is designed to run on Laravel 5.8.28 and higher, with support for PHP 7.1 and higher. Aire is actively maintained and under consideration for additional features like read-only plain text, cross-browser support for custom checkboxes and radio buttons, support for Choices.js or similar libraries, improved file input handling, and better support for content prepending or appending to inputs.

ConvoForm

ConvoForm.com transforms traditional forms into interactive conversational experiences, powered by AI for an enhanced user journey. It offers AI-Powered Form Generation, Real-time Form Editing and Preview, and Customizable Submission Pages. The tech stack includes Next.js for frontend, tRPC for backend, GPT-3.5-Turbo for AI integration, and Socket.io for real-time updates. Local setup requires Node.js, pnpm, Git, PostgreSQL database, Clerk for Authentication, OpenAI key, Redis Database, and Sentry for monitoring. The project is open for contributions and is licensed under the MIT License.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

freeciv-web

Freeciv-web is an open-source turn-based strategy game that can be played in any HTML5 capable web-browser. It features in-depth gameplay, a wide variety of game modes and options. Players aim to build cities, collect resources, organize their government, and build an army to create the best civilization. The game offers both multiplayer and single-player modes, with a 2D version with isometric graphics and a 3D WebGL version available. The project consists of components like Freeciv-web, Freeciv C server, Freeciv-proxy, Publite2, and pbem for play-by-email support. Developers interested in contributing can check the GitHub issues and TODO file for tasks to work on.

nextpy

Nextpy is a cutting-edge software development framework optimized for AI-based code generation. It provides guardrails for defining AI system boundaries, structured outputs for prompt engineering, a powerful prompt engine for efficient processing, better AI generations with precise output control, modularity for multiplatform and extensible usage, developer-first approach for transferable knowledge, and containerized & scalable deployment options. It offers 4-10x faster performance compared to Streamlit apps, with a focus on cooperation within the open-source community and integration of key components from various projects.

airbadge

Airbadge is a Stripe addon for Auth.js that provides an easy way to create a SaaS site without writing any authentication or payment code. It integrates Stripe Checkout into the signup flow, offers over 50 OAuth options for authentication, allows route and UI restriction based on subscription, enables self-service account management, handles all Stripe webhooks, supports trials and free plans, includes subscription and plan data in the session, and is open source with a BSL license. The project also provides components for conditional UI display based on subscription status and helper functions to restrict route access. Additionally, it offers a billing endpoint with various routes for billing operations. Setup involves installing @airbadge/sveltekit, setting up a database provider for Auth.js, adding environment variables, configuring authentication and billing options, and forwarding Stripe events to localhost.

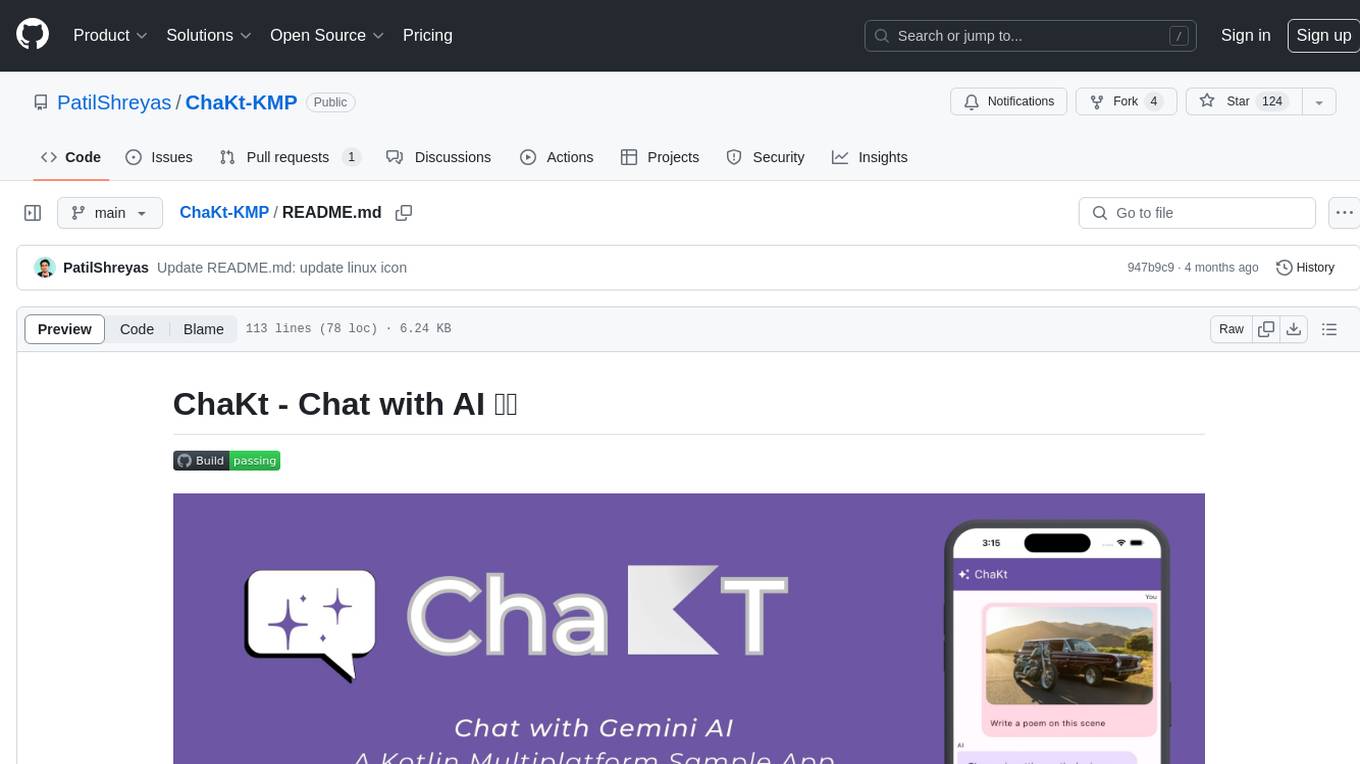

ChaKt-KMP

ChaKt is a multiplatform app built using Kotlin and Compose Multiplatform to demonstrate the use of Generative AI SDK for Kotlin Multiplatform to generate content using Google's Generative AI models. It features a simple chat based user interface and experience to interact with AI. The app supports mobile, desktop, and web platforms, and is built with Kotlin Multiplatform, Kotlin Coroutines, Compose Multiplatform, Generative AI SDK, Calf - File picker, and BuildKonfig. Users can contribute to the project by following the guidelines in CONTRIBUTING.md. The app is licensed under the MIT License.