Thor

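Thor (雷神托尔) is an AI model management tool whose main purpose is to manage and use multiple AI models in one place. With Thor, users can manage and call many AI models, and Thor is compatible with the OpenAI API format, which makes it more convenient to use.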

Stars: 183

Thor is an AI model management tool for managing and using multiple AI models in one place. It offers user, channel, and token management, a data statistics preview, log viewing, system settings, external chat link integration, and purchase of account balance through Alipay. Thor supports multiple AI models, including OpenAI, Kimi, Spark (星火), Claude, Zhipu AI, Ollama, Tongyi Qianwen, Azure OpenAI, and Tencent Hunyuan. It also supports several databases (SqlServer, PostgreSql, Sqlite, and MySql), so users can choose the one that fits their needs.

README:

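Thor provides the following features:

- Management: user management, channel management, and token management, which simplifies administration.

- Data statistics preview: shows usage statistics clearly, so users can understand how the service is being used.

- Log viewing: lets users trace and resolve problems.

- System settings: system options can be configured as needed.

- External chat link integration: external chat links can be connected to improve the interaction experience.

- Account balance purchase through Alipay: users can top up their account balance with Alipay.

Thor also supports multiple large AI models, including OpenAI, Spark (星火大模型), Claude, Zhipu AI (智谱AI), Ollama, Tongyi Qianwen (Alibaba Cloud), AzureOpenAI, and Tencent Hunyuan (腾讯混元大模型).

Thor also supports multiple databases, including SqlServer, PostgreSql, Sqlite, and MySql, so users can choose the one that suits their needs.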

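Clear data statistics

- [x] User management

- [x] Channel management

- [x] Token management

- [x] Data statistics preview

- [x] Log viewing

- [x] System settings

- [x] External chat link integration

- [x] Account balance purchase through Alipay

- [x] Log consumption through RabbitMQ (local events are used by default)

- [x] Distributed multi-level cache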

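- [x] OpenAI (supports function calling)

- [x] Kimi (Moonshot AI, 月之暗面) (supports function calling)

- [x] Spark (星火大模型) (supports function calling)

- [x] Claude (supports function calling)

- [x] Zhipu AI (智谱AI) (supports function calling)

- [x] Microsoft Azure (supports function calling)

- [x] Ollama (supports function calling)

- [x] Tongyi Qianwen (Alibaba Cloud) (supports function calling)

- [x] Tencent Hunyuan (腾讯混元大模型)

- [x] Baidu ERNIE Bot (ErnieBot)

- [x] Gitee AI (supports function calling)

- [x] MiniMax AI (supports function calling)

- [x] SiliconFlow AI (supports function calling)

- [x] DeepSeek AI (supports function calling)

- [x] Volcano Engine (火山引擎) (supports function calling)

- [x] Amazon (supports function calling)

- [x] Claude on Google (supports function calling). Proxy address: [LocationId]-aiplatform.googleapis.com|[ProjectId]|[LocationId]|rawPredict (fill in your own region and project ID). Key: the full JSON created on the Google platform, that is, the OAuth 2.0 authorization credential.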

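- [x] SqlServer, configuration type [sqlserver, mssql]

- [x] PostgreSql, configuration type [postgresql, pgsql]

- [x] Sqlite, configuration type [sqlite, the default]

- [x] MySql, configuration type [mysql]

- [x] Dameng database (达梦数据库), configuration type [dm]

To switch the database type, change the ConnectionStrings:DBType setting in appsettings.json. Note that switching databases does not migrate existing data.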
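The configuration types listed above include aliases (for example, both sqlserver and mssql select SqlServer). The following Python sketch shows one way such aliases could be resolved to a single canonical name. The helper name and the case-insensitive matching are assumptions made for illustration; Thor's own lookup logic may differ.

```python
# Hypothetical helper: maps the configuration types listed above to one
# canonical name per database. Thor's real lookup code may differ.
DB_TYPE_ALIASES = {
    "sqlserver": "sqlserver",
    "mssql": "sqlserver",
    "postgresql": "postgresql",
    "pgsql": "postgresql",
    "sqlite": "sqlite",
    "mysql": "mysql",
    "dm": "dm",
}


def normalize_db_type(value=None):
    """Return the canonical database name for a DBType value.

    An empty or missing value falls back to sqlite, which the list above
    marks as the default. Matching is case-insensitive (an assumption).
    Unknown values raise ValueError.
    """
    if value is None or not value.strip():
        return "sqlite"
    key = value.strip().lower()
    if key not in DB_TYPE_ALIASES:
        raise ValueError(f"unsupported DBType: {value!r}")
    return DB_TYPE_ALIASES[key]
```

Rejecting unknown values early, rather than silently falling back to the default, makes a mistyped DBType visible before any migration runs.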

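    graph LR
        A(User)
        A --->|Sends requests with a key issued by Thor| B(Thor)
        B -->|Relays the request| C(OpenAI)
        B -->|Relays the request| D(Azure)
        B -->|Relays the request| E(Other downstream channels in OpenAI API format)
        B -->|Relays the request and rewrites the request and response bodies| F(Downstream channels not in OpenAI API format)

Default username and password: admin / admin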
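The relay flow in the diagram can be sketched from the client side: the user sends an OpenAI-format request to Thor, authenticated with a key issued by Thor. The Python sketch below only builds such a request and does not send it. The base URL (taken from the 18080 port mapping in the deployment examples), the /v1/chat/completions path, the key, and the model name are all assumptions or placeholders, not values confirmed by this README.

```python
import json
import urllib.request

# Assumptions, not confirmed by the text: the base URL matches the 18080
# port mapping used in the deployment examples, the path follows the OpenAI
# chat completions convention, and the key and model are placeholders.
THOR_BASE_URL = "http://localhost:18080"
THOR_KEY = "sk-your-thor-key"


def build_chat_request(prompt, model="gpt-4o-mini"):
    """Build (but do not send) an OpenAI-format chat request aimed at Thor."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{THOR_BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {THOR_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Hello")
# To send it against a running instance:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Because the client only talks to Thor, the choice of downstream channel stays a server-side concern.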

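Note: if the data directory is missing from the project root after you clone the repository, create it manually. The local data directory must be mounted when you run docker compose up.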

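- DBType: database type, one of sqlite | [postgresql,pgsql] | [sqlserver,mssql] | mysql

- ConnectionStrings:DefaultConnection: connection string for the main database

- ConnectionStrings:LoggerConnection: connection string for the log database

- CACHE_TYPE: cache type, Memory | Redis

- CACHE_CONNECTION_STRING: cache connection string; the Redis connection string when CACHE_TYPE is Redis, empty when it is Memory

- HttpClientPoolSize: size of the HttpClient connection pool

- RunMigrationsAtStartup: whether to run migrations at startup; must be set to true on the first startup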
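The settings above depend on each other (for example, a Redis cache needs a connection string). The Python sketch below checks a dictionary of settings against the descriptions in the list before a container is started. The function name is hypothetical, the rules are inferred from the list rather than from Thor's source, and treating Memory as the default cache type is an assumption.

```python
# Hypothetical validator for the settings listed above; the checks mirror the
# descriptions in the list and are not taken from Thor's source code.
VALID_CACHE_TYPES = {"Memory", "Redis"}


def check_settings(env):
    """Return a list of problems found in a dict of environment settings."""
    problems = []
    for key in ("ConnectionStrings:DefaultConnection",
                "ConnectionStrings:LoggerConnection"):
        if not env.get(key):
            problems.append(f"{key} is missing")

    # Assumption: Memory is used when CACHE_TYPE is not set.
    cache_type = env.get("CACHE_TYPE", "Memory")
    if cache_type not in VALID_CACHE_TYPES:
        problems.append(f"CACHE_TYPE must be Memory or Redis, got {cache_type!r}")
    elif cache_type == "Redis" and not env.get("CACHE_CONNECTION_STRING"):
        problems.append("CACHE_CONNECTION_STRING is required when CACHE_TYPE is Redis")

    pool_size = env.get("HttpClientPoolSize")
    if pool_size is not None and not str(pool_size).isdigit():
        problems.append("HttpClientPoolSize must be a whole number")
    return problems
```

An empty list means the settings are consistent with the descriptions above; it does not prove that the connection strings themselves are reachable.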

Start the service with docker compose:

    version: '3.8'
    services:
      thor:
        image: aidotnet/thor:latest
        ports:
          - 18080:8080
        container_name: thor
        volumes:
          - ./data:/data
        environment:
          - TZ=Asia/Shanghai
          - DBType=sqlite # sqlite | [postgresql,pgsql] | [sqlserver,mssql] | mysql
          - ConnectionStrings:DefaultConnection=data source=/data/token.db
          - ConnectionStrings:LoggerConnection=data source=/data/logger.db
          - RunMigrationsAtStartup=true

Start the service with docker run:

    docker run -d \
      -p 18080:8080 \
      --name thor \
      --network=gateway \
      -v $PWD/data:/data \
      -e Theme=lobe \
      -e TZ=Asia/Shanghai \
      -e DBType=sqlite \
      -e "ConnectionStrings:DefaultConnection=data source=/data/token.db" \
      -e "ConnectionStrings:LoggerConnection=data source=/data/logger.db" \
      -e RunMigrationsAtStartup=true \
      aidotnet/thor:latest

docker compose version (SQLite)

Create a docker-compose.yml file in the project root with the following content:

    version: '3.8'
    services:
      thor:
        image: aidotnet/thor:latest
        container_name: thor
        ports:
          - 18080:8080
        volumes:
          - ./data:/data
        environment:
          - TZ=Asia/Shanghai
          - DBType=sqlite
          - ConnectionStrings:DefaultConnection=data source=/data/token.db
          - ConnectionStrings:LoggerConnection=data source=/data/logger.db
          - RunMigrationsAtStartup=true

Run the following command to build the image:

    sudo docker compose build

Run the following command to start the service:

    sudo docker compose up -d

docker run version

    docker run -d \
      -p 18080:8080 \
      --name ai-dotnet-api-service \
      -v $(pwd)/data:/data \
      -e RunMigrationsAtStartup=true \
      -e Theme=lobe \
      -e TZ=Asia/Shanghai \
      -e DBType=sqlite \
      -e "ConnectionStrings:DefaultConnection=data source=/data/token.db" \
      -e "ConnectionStrings:LoggerConnection=data source=/data/logger.db" \
      aidotnet/thor:latest

Then visit http://localhost:18080 to confirm that the service has started.
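The SQLite connection strings contain a space (data source=...), so each -e value has to reach docker as a single shell argument. The Python sketch below assembles the command from a dictionary and lets shlex.join do the quoting. The helper name and its parameters are hypothetical; the image, port mapping, and settings are copied from the SQLite example.

```python
import os
import shlex

# Settings copied from the SQLite example above.
SQLITE_SETTINGS = {
    "TZ": "Asia/Shanghai",
    "DBType": "sqlite",
    "ConnectionStrings:DefaultConnection": "data source=/data/token.db",
    "ConnectionStrings:LoggerConnection": "data source=/data/logger.db",
    "RunMigrationsAtStartup": "true",
}


def docker_run_command(env, data_dir="data", image="aidotnet/thor:latest", name="thor"):
    """Return a shell-safe docker run command line for the given settings."""
    args = [
        "docker", "run", "-d",
        "--name", name,
        "-p", "18080:8080",
        # Bind-mount the local data directory using an absolute path.
        "-v", f"{os.path.abspath(data_dir)}:/data",
    ]
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]
    args.append(image)
    # shlex.join quotes every argument that contains spaces or shell
    # metacharacters, so each -e value stays one argument.
    return shlex.join(args)


command = docker_run_command(SQLITE_SETTINGS)
print(command)
```

Values without special characters, such as TZ=Asia/Shanghai, are left unquoted, while the two connection strings come out wrapped in single quotes.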

docker compose version (PostgreSQL)

Create a docker-compose.yml file in the project root with the following content:

    version: '3.8'
    services:
      thor:
        image: aidotnet/thor:latest
        container_name: thor
        ports:
          - 18080:8080
        volumes:
          - ./data:/data
        environment:
          - TZ=Asia/Shanghai
          - DBType=postgresql
          - ConnectionStrings:DefaultConnection=Host=127.0.0.1;Port=5432;Database=token;Username=token;Password=dd666666
          - ConnectionStrings:LoggerConnection=Host=127.0.0.1;Port=5432;Database=logger;Username=token;Password=dd666666
          - RunMigrationsAtStartup=true

Run the following command to build the image:

    sudo docker compose build

Run the following command to start the service:

    sudo docker compose up -d

docker run version

    docker run -d \
      --name thor \
      -p 18080:8080 \
      -v $(pwd)/data:/data \
      -e TZ=Asia/Shanghai \
      -e DBType=postgresql \
      -e RunMigrationsAtStartup=true \
      -e "ConnectionStrings:DefaultConnection=Host=127.0.0.1;Port=5432;Database=token;Username=token;Password=dd666666" \
      -e "ConnectionStrings:LoggerConnection=Host=127.0.0.1;Port=5432;Database=logger;Username=token;Password=dd666666" \
      aidotnet/thor:latest

Then visit http://localhost:18080 to confirm that the service has started.
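The two PostgreSQL connection strings differ only in the database name, so they can be generated from one set of parameters. The Python sketch below does that; the function names are hypothetical, and the sketch deliberately rejects values containing a semicolon instead of escaping them. Keeping the host as a parameter is useful because, with Docker's default bridge networking, 127.0.0.1 inside the container refers to the container itself, so the database host may need to be adjusted for your setup.

```python
def build_connection_string(**parts):
    """Join key=value pairs with semicolons, as in the examples above."""
    for key, value in parts.items():
        if ";" in str(value):
            # Simplification: this sketch does not implement value escaping.
            raise ValueError(f"{key} contains ';', which this sketch does not escape")
    return ";".join(f"{key}={value}" for key, value in parts.items())


def thor_postgres_settings(host, password, user="token", port=5432):
    """Return both connection settings for the token and logger databases."""
    def for_database(name):
        return build_connection_string(
            Host=host, Port=port, Database=name, Username=user, Password=password
        )

    return {
        "ConnectionStrings:DefaultConnection": for_database("token"),
        "ConnectionStrings:LoggerConnection": for_database("logger"),
    }


settings = thor_postgres_settings(host="127.0.0.1", password="dd666666")
```

With the values from the example, settings reproduces the two connection strings shown above.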

docker compose version (SQL Server)

Create a docker-compose.yml file in the project root with the following content:

    version: '3.8'
    services:
      thor:
        image: aidotnet/thor:latest
        container_name: thor
        ports:
          - 18080:8080
        volumes:
          - ./data:/data
        environment:
          - TZ=Asia/Shanghai
          - DBType=sqlserver
          - ConnectionStrings:DefaultConnection=Server=127.0.0.1;Database=token;User Id=sa;Password=dd666666;
          - ConnectionStrings:LoggerConnection=Server=127.0.0.1;Database=logger;User Id=sa;Password=dd666666;
          - RunMigrationsAtStartup=true

Run the following command to build the image:

    sudo docker compose build

Run the following command to start the service:

    sudo docker compose up -d

docker run version

    docker run -d \
      --name thor \
      -p 18080:8080 \
      -v $(pwd)/data:/data \
      -e TZ=Asia/Shanghai \
      -e RunMigrationsAtStartup=true \
      -e DBType=sqlserver \
      -e "ConnectionStrings:DefaultConnection=Server=127.0.0.1;Database=token;User Id=sa;Password=dd666666;" \
      -e "ConnectionStrings:LoggerConnection=Server=127.0.0.1;Database=logger;User Id=sa;Password=dd666666;" \
      aidotnet/thor:latest

Then visit http://localhost:18080 to confirm that the service has started.

docker compose version (MySQL)

Create a docker-compose.yml file in the project root with the following content:

    version: '3.8'
    services:
      thor:
        image: aidotnet/thor:latest
        container_name: thor
        ports:
          - 18080:8080
        volumes:
          - ./data:/data
        environment:
          - TZ=Asia/Shanghai
          - DBType=mysql
          - "ConnectionStrings:DefaultConnection=mysql://root:dd666666@localhost:3306/token"
          - "ConnectionStrings:LoggerConnection=mysql://root:dd666666@localhost:3306/logger"
          - RunMigrationsAtStartup=true

Run the following command to build the image:

    sudo docker compose build

Run the following command to start the service:

    sudo docker compose up -d

docker run version

    docker run -d \
      --name thor \
      -p 18080:8080 \
      -v $(pwd)/data:/data \
      -e TZ=Asia/Shanghai \
      -e DBType=mysql \
      -e "ConnectionStrings:DefaultConnection=mysql://root:dd666666@localhost:3306/token" \
      -e "ConnectionStrings:LoggerConnection=mysql://root:dd666666@localhost:3306/logger" \
      -e RunMigrationsAtStartup=true \
      aidotnet/thor:latest

Then visit http://localhost:18080 to confirm that the service has started.
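Unlike the other examples, the MySQL connection strings above are written as URLs. The Python sketch below splits such a URL into its parts, which can help when checking that the user, host, port, and database are the ones you intended. The function name is hypothetical, and the sketch says nothing about how Thor itself parses the value.

```python
from urllib.parse import urlsplit


def describe_mysql_url(url):
    """Split a mysql:// connection URL into its components."""
    parts = urlsplit(url)
    if parts.scheme != "mysql":
        raise ValueError(f"expected a mysql:// URL, got scheme {parts.scheme!r}")
    return {
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,
        # The path is "/<database>"; strip the leading slash.
        "database": parts.path.lstrip("/"),
    }


info = describe_mysql_url("mysql://root:dd666666@localhost:3306/token")
```

For the example URL, info holds the user root, the host localhost, the port 3306, and the database token.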


Alternative AI tools for Thor

Similar Open Source Tools

dify-chat

Dify Chat Web is an AI conversation web app based on the Dify API, compatible with DeepSeek, Dify Chatflow/Workflow applications, and Agent Mind Chain output information. It supports multiple scenarios, flexible deployment without backend dependencies, efficient integration with reusable React components, and style customization for unique business system styles.

goodsKill

The 'goodsKill' project aims to build a complete project framework integrating good technologies and development techniques, mainly focusing on backend technologies. It provides a simulated flash sale project with unified flash sale simulation request interface. The project uses SpringMVC + Mybatis for the overall technology stack, Dubbo3.x for service intercommunication, Nacos for service registration and discovery, and Spring State Machine for data state transitions. It also integrates Spring AI service for simulating flash sale actions.

Streamer-Sales

Streamer-Sales is a large model for live streamers that explains products based on their characteristics and encourages users to make purchases. It is designed to improve sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking users' desire to purchase. It aims to improve the shopping experience by providing detailed and distinctive product descriptions that engage users.

ddddocr

ddddocr is a Rust version of a simple OCR API server that provides easy deployment for captcha recognition without relying on the OpenCV library. It offers a user-friendly general-purpose captcha recognition Rust library. The tool supports recognizing various types of captchas, including single-line text, transparent black PNG images, target detection, and slider matching algorithms. Users can also import custom OCR training models and utilize the OCR API server for flexible OCR result control and range limitation. The tool is cross-platform and can be easily deployed.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.

HuaTuoAI

HuaTuoAI is an artificial intelligence image classification system specifically designed for traditional Chinese medicine. It utilizes deep learning techniques, such as Convolutional Neural Networks (CNN), to accurately classify Chinese herbs and ingredients based on input images. The project aims to unlock the secrets of plants, depict the unknown realm of Chinese medicine using technology and intelligence, and perpetuate ancient cultural heritage.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface, independent development documentation page support, service monitoring page configuration support, and third-party login support. It also optimizes interface elements, user registration time support, data operation button positioning, and more.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform conveniently. The core capabilities of the SDK fall into three parts:

- `Large model inference`: wraps the inference interfaces of the Yiyan (ERNIE-Bot) series, open source large models, and others, supporting dialogue, completion, Embedding, and more.

- `Large model training`: based on platform capabilities, supports the end-to-end large model training process, including training data, fine-tuning/pre-training, and model services.

- `General and extension`: general capabilities include common AI development tools such as Prompt/Debug/Client. The extension capabilities build on the characteristics of Qianfan to adapt to common middleware frameworks.

ai-trend-publish

AI TrendPublish is an AI-based trend discovery and content publishing system that supports multi-source data collection, intelligent summarization, and automatic publishing to WeChat official accounts. It features data collection from various sources, AI-powered content processing using DeepseekAI Together, key information extraction, intelligent title generation, automatic article publishing to WeChat official accounts with custom templates and scheduled tasks, notification system integration with Bark for task status updates and error alerts. The tool offers multiple templates for content customization and is built using Node.js + TypeScript with AI services from DeepseekAI Together, data sources including Twitter/X API and FireCrawl, and uses node-cron for scheduling tasks and EJS as the template engine.

ophel

Ophel Atlas is a tool that transforms AI conversations into readable, navigable, and reusable documents. It organizes conversations into a structured workflow, allowing users to easily navigate and reuse valuable insights. It offers features such as intelligent outlining, conversation management, prompt libraries, theme customization, interface optimization, reading experience enhancements, efficiency tools, and privacy-focused data storage. Ophel Atlas is designed for various use cases including learning and research, daily work tasks, development and technical writing, content creation, and frequent AI users seeking structured and reusable capabilities.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

CareGPT

CareGPT is a medical large language model (LLM) that explores medical data, training, and deployment related research work. It integrates resources, open-source models, rich data, and efficient deployment methods. It supports various medical tasks, including patient diagnosis, medical dialogue, and medical knowledge integration. The model has been fine-tuned on diverse medical datasets to enhance its performance in the healthcare domain.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

siliconflow-plugin

SiliconFlow-PLUGIN (SF-PLUGIN) is a versatile AI integration plugin for the Yunzai robot framework, supporting multiple AI services and models. It includes features such as AI drawing, intelligent conversations, real-time search, text-to-speech synthesis, resource management, link handling, video parsing, group functions, WebSocket support, and Jimeng-Api interface. The plugin offers functionalities for drawing, conversation, search, image link retrieval, video parsing, group interactions, and more, enhancing the capabilities of the Yunzai framework.

For similar tasks

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

tts-generation-webui

TTS Generation WebUI is a comprehensive tool that provides a user-friendly interface for text-to-speech and voice cloning tasks. It integrates various AI models such as Bark, MusicGen, AudioGen, Tortoise, RVC, Vocos, Demucs, SeamlessM4T, and MAGNeT. The tool offers one-click installers, Google Colab demo, videos for guidance, and extra voices for Bark. Users can generate audio outputs, manage models, caches, and system space for AI projects. The project is open-source and emphasizes ethical and responsible use of AI technology.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface, independent development documentation page support, service monitoring page configuration support, and third-party login support. It also optimizes interface elements, user registration time support, data operation button positioning, and more.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.

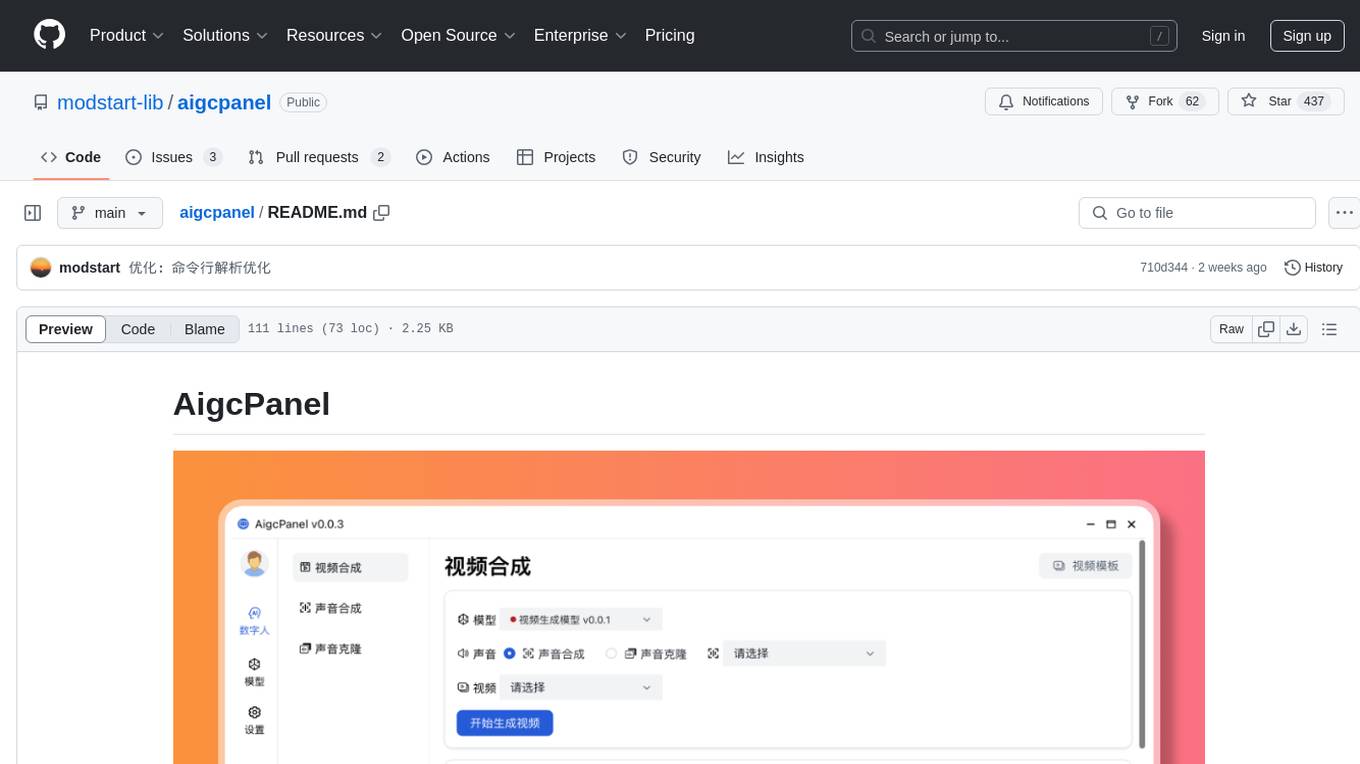

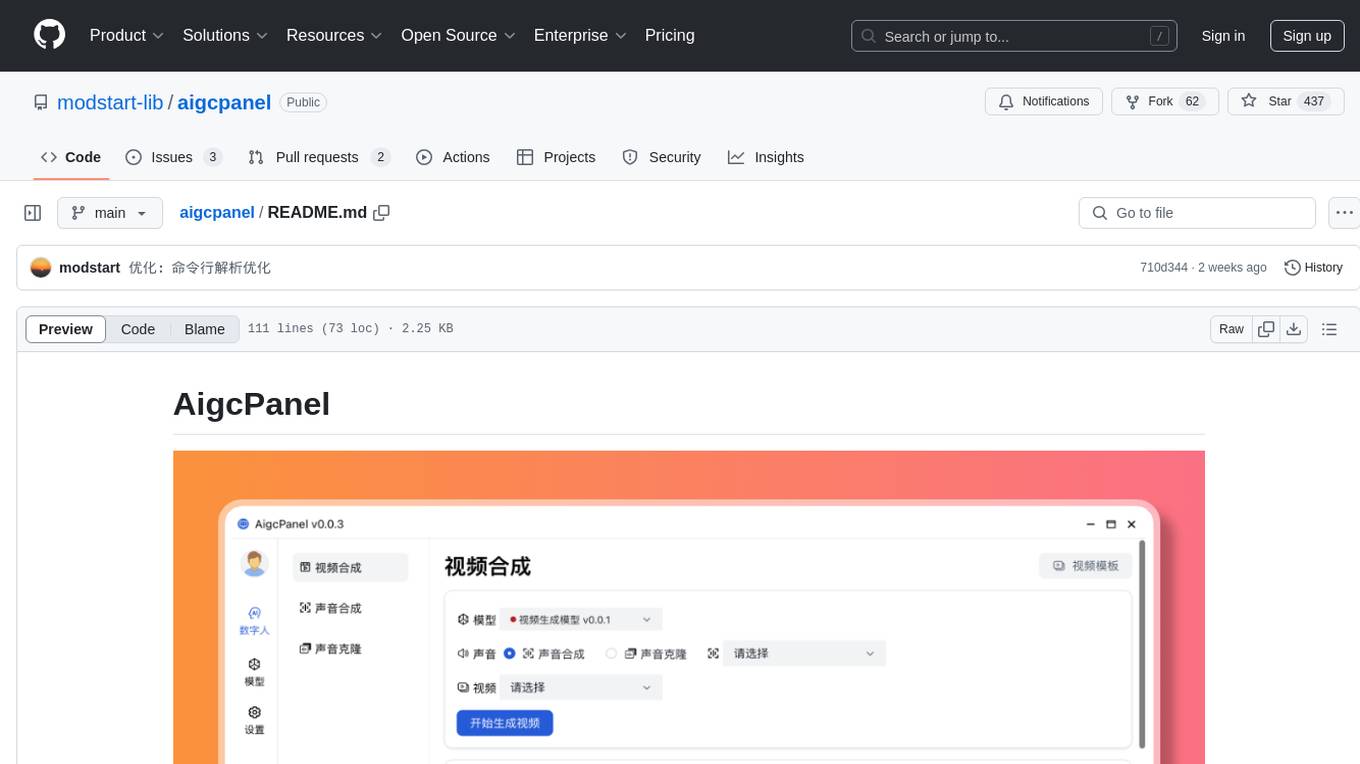

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. It processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. The tool includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models. It offers a GUI for managing local LLaMA.cpp servers, customizable settings, and advanced search utilities. ERAG supports multi-model collaboration, iterative knowledge refinement, automated quality assessment, and structured knowledge format enforcement. Users can generate specific knowledge entries, full-size textbooks, or datasets using AI-generated questions and answers.

For similar jobs

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

redbox

Redbox is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License. Security measures are in place to ensure user data privacy and considerations are being made to make the core-api secure.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

LynxHub

LynxHub is a platform that allows users to seamlessly install, configure, launch, and manage all their AI interfaces from a single, intuitive dashboard. It offers features like AI interface management, arguments manager, custom run commands, pre-launch actions, extension management, in-app tools like terminal and web browser, AI information dashboard, Discord integration, and additional features like theme options and favorite interface pinning. The platform supports modular design for custom AI modules and upcoming extensions system for complete customization. LynxHub aims to streamline AI workflow and enhance user experience with a user-friendly interface and comprehensive functionalities.

ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

agentneo

AgentNeo is a Python package that provides project, trace, dataset, and experiment management. It allows users to authenticate, create projects, trace agents and LangGraph graphs, manage datasets, and run experiments with metrics. The tool aims to streamline AI project management and analysis.