Best AI tools for< Ai Solutions Architect >

Infographic

20 - AI tool Sites

ViSenze Solutions

ViSenze Solutions is an AI-powered platform that offers Smart Search and Product Discovery solutions for e-commerce businesses. Leveraging multimodal AI technology, ViSenze provides personalized search experiences, relevant product recommendations, and seamless shopping journeys to drive conversions and revenue. The platform integrates advanced AI and machine learning to enable natural language, image, and keyword-based searches, as well as personalized recommendations and AI-powered styling assistance. ViSenze also offers tools for customizing search and discovery experiences, automated product tagging, performance analytics, and global support for tailored solutions. With a focus on scalability, performance, and security, ViSenze aims to enhance the online shopping experience for customers and optimize business outcomes for retailers.

Clarifai

Clarifai is an AI Workflow Orchestration Platform that helps businesses establish an AI Operating Model and transition from prototype to production efficiently. It offers end-to-end solutions for operationalizing AI, including Retrieval Augmented Generation (RAG), Generative AI, Digital Asset Management, Visual Inspection, Automated Data Labeling, and Content Moderation. Clarifai's platform enables users to build and deploy AI faster, reduce development costs, ensure oversight and security, and unlock AI capabilities across the organization. The platform simplifies data labeling, content moderation, intelligence & surveillance, generative AI, content organization & personalization, and visual inspection. Trusted by top enterprises, Clarifai helps companies overcome challenges in hiring AI talent and misuse of data, ultimately leading to AI success at scale.

Community Labs

Community Labs is a modern AI platform that offers advanced business intelligence through a unified AI cloud. It provides real-time insights for decision-making by collecting and analyzing data from various sectors. The platform enables public and private organizations to address challenges, streamline data for maximum impact, and foster innovation and improvement. Community Labs integrates data from diverse sectors like education, healthcare, government, and social services, offering robust capabilities for efficient resource allocation and service delivery. With stringent security standards and advanced AI capabilities, the platform empowers municipal employees to be more productive and impactful.

Context64AI

Context64AI is an AI application that specializes in transforming industries with data-driven solutions. It provides a unified intelligence platform that connects data, workflows, and knowledge to deliver trusted, actionable outcomes. The application focuses on providing comprehensive business context to AI models, ensuring better outcomes and faster decision-making. Context64AI offers various intelligence solutions such as Product Intelligence Hub, Compliance Intelligence, Scenario Intelligence, Digital Twin Intelligence, Sustainability Intelligence, and Supply Network Intelligence. It also features an open architecture, intelligent orchestration, and a unified context platform for enterprise deployment.

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Their cutting-edge technologies enable groundbreaking performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models effortlessly, and monitoring and debugging model performance. The application guarantees consistent results regardless of the model used and offers seamless data integration for real-time knowledge enhancement. With a focus on security, scalability, and performance optimization, FriendliAI empowers businesses to scale with ease.

Pega

Pega is an enterprise AI decisioning and workflow automation platform that empowers organizations to unlock business-transforming outcomes with real-time optimization. Clients use Pega's AI capabilities to personalize engagement, automate customer service, and streamline operations. With a scalable and flexible architecture, Pega helps enterprises meet current customer demands while continuously transforming for the future.

Innodata Inc.

Innodata Inc. is a global data engineering company that delivers AI-enabled software platforms and managed services for AI data collection/annotation, AI digital transformation, and industry-specific business processes. They provide a full-suite of services and products to power data-centric AI initiatives using artificial intelligence and human expertise. With a 30+ year legacy, they offer the highest quality data and outstanding service to their customers.

Strong Analytics

Strong Analytics is a data science consulting and machine learning engineering company that specializes in building bespoke data science, machine learning, and artificial intelligence solutions for various industries. They offer end-to-end services to design, engineer, and deploy custom AI products and solutions, leveraging a team of full-stack data scientists and engineers with cross-industry experience. Strong Analytics is known for its expertise in accelerating innovation, deploying state-of-the-art techniques, and empowering enterprises to unlock the transformative value of AI.

DeepModel

DeepModel is an AI Strategy & Implementation Experts platform that offers expertise, playbook, and tooling to help ambitious companies go AI-Native. They provide workshops, TEL Analysis™ for prioritizing AI opportunities, and pre-built agent templates for faster deployment. The platform focuses on turning AI vision into reality responsibly and at scale, guiding companies through AI strategy planning and execution.

Ayfie

Ayfie is an AI-powered platform offering Retrieval-Augmented-Generation solutions. It goes beyond traditional search by utilizing RAG (Retrieval Augmented Generation) to provide coherent and contextually relevant results. Ayfie enhances AI accuracy, optimizes workflows, and offers flexible solutions for enterprise search and integration. The platform empowers businesses with generative AI capabilities, robust search engines, and secure data handling. With custom deployment options and seamless integration with existing systems, Ayfie helps organizations efficiently access and analyze large amounts of data to make data-driven decisions.

CUJO AI

CUJO AI is a global leader in cutting-edge cybersecurity and network intelligence solutions for network operators. The platform offers a range of services including Operator Intelligence, Digital Life Protection, and AI Platform. CUJO AI empowers network operators to enhance digital life protection for their customers both at home and on the go. By leveraging AI-powered cybersecurity, CUJO AI enables operators to improve customer value proposition, monetize networks, and reduce operating complexity and costs.

FlowX.AI

FlowX.AI is a Multi-Agent AI Platform designed for Banking and Insurance Modernization. It offers a cutting-edge AI-native agentic platform for building and deploying AI agents and mission-critical AI-enabled systems in highly regulated industries. The platform enables businesses to build the next generation of banking and insurance systems in weeks, not years, by providing faster development, time to market, and reduced maintenance and implementation costs.

Crayon Data

Crayon Data offers B2B AI solutions for enterprises through their platform maya.ai. The platform provides flexible building blocks to help businesses launch and scale quickly. With a cloud-agnostic full-stack solution, maya.ai enables real-world applications for data, customer management, and more. Crayon Data focuses on AI-led solutions to enhance customer experiences, turn raw data into valuable insights, and drive engagement through AI marketplaces. The platform also offers tools for travel planning, payment optimization, offer management, data analytics, influencer management, and more. Industries served include consumer banking, digital payments, travel, and consumer products.

Fluid AI

Fluid AI is an Enterprise Generative AI Solution Platform that offers advanced capabilities for Enterprise use-cases. It leverages organizational knowledge to function as an intelligent agent, supporting teams with easy access to precise answers, insights, reports, and creativity. The platform automates conversations across channels, enhances speed, accuracy, and scalability, and maintains personalized interactions. Fluid AI can integrate seamlessly with legacy systems, ensuring efficient AI adoption with Enterprise-level security.

Rozie AI

Rozie AI is an AI partner that helps create personalized experiences at scale for leading brands. The platform offers a middleware solution, agentic AI capabilities, and pre-built experience kits to manage the entire process from ideation to implementation. Rozie AI specializes in experience design, digital engagement, and applying artificial intelligence to future-proof product experiences. The company empowers clients to own their experiences while off-loading the tech stack maintenance. With customizable solutions, Rozie AI delivers speed, scalability, and control for innovative CX solutions.

Visor.ai

Visor.ai is an AI Agentic Platform for Enterprise Solutions Company that offers AI Agents capable of learning from businesses, taking actions across systems, and achieving up to 85% automation rate. The platform provides endless possibilities for transforming operations and enhancing customer interactions through intelligent AI experiences. With features like real-time visibility, actionable insights, safe AI, oversight, analytics, guardrails, and powered by leading technology Nexa, Visor.ai aims to empower businesses with advanced AI capabilities.

Infrrd

Infrrd is an intelligent document automation platform that offers advanced document extraction solutions. It leverages AI technology to enhance, classify, extract, and review documents with high accuracy, eliminating the need for human review. Infrrd provides effective process transformation solutions across various industries, such as mortgage, invoice, insurance, and audit QC. The platform is known for its world-class document extraction engine, supported by over 10 patents and award-winning algorithms. Infrrd's AI-powered automation streamlines document processing, improves data accuracy, and enhances operational efficiency for businesses.

Waanee AI

Waanee.ai is a leading AI solution for contact centers, offering a range of AI-powered tools and solutions to transform customer conversations. It provides services such as AI-powered conversation audit, cloud contact center with built-in CRM, intelligent virtual agents, and more. Waanee.ai aims to enhance customer engagement and satisfaction by leveraging advanced AI technology to optimize customer interactions and streamline operational processes.

Teneo.ai

Teneo.ai is an AI-driven platform that redefines contact center AI, customer service automation, and conversational IVR systems. It offers advanced TLML technology to boost AI accuracy to over +95% and enhance efficiency in customer interactions. Teneo helps contact centers achieve significant cost savings, reduce call misrouting, and engage customers proactively with personalized care. The platform is designed for high-volume contact centers seeking to elevate customer interaction quality without complexity, providing transformative results within hours.

Cognigy.AI

Cognigy is an AI-powered customer service platform that offers generative and conversational AI agents to transform customer engagement. The platform provides pre-trained AI agents for phone, voice chat, and messaging, along with agent assist solutions for sales and marketing. Cognigy.AI elevates contact centers across various industries by offering enterprise-grade capabilities such as low-code automation, voice connectivity, generative AI for CX transformation, AI-based semantic search, and knowledge management. The platform also supports multilingual customer journeys, live agent workspace, omnichannel reporting, and analytics.

79 - Open Source Tools

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, object detection, etc. Images for sample applications and models are available in `quay.io`, and bootable containers for AI training on Linux OS are enabled.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.

openai-forward

OpenAI-Forward is an efficient forwarding service implemented for large language models. Its core features include user request rate control, token rate limiting, intelligent prediction caching, log management, and API key management, aiming to provide efficient and convenient model forwarding services. Whether proxying local language models or cloud-based language models like LocalAI or OpenAI, OpenAI-Forward makes it easy. Thanks to support from libraries like uvicorn, aiohttp, and asyncio, OpenAI-Forward achieves excellent asynchronous performance.

mslearn-knowledge-mining

The mslearn-knowledge-mining repository contains lab files for Azure AI Knowledge Mining modules. It provides resources for learning and implementing knowledge mining techniques using Azure AI services. The repository is designed to help users explore and understand how to leverage AI for knowledge mining purposes within the Azure ecosystem.

duix.ai

Duix is a silicon-based digital human SDK for intelligent interaction, providing users with instant virtual human interaction experience on devices like Android and iOS. The SDK offers intuitive effect display and supports user customization through open documentation. It is fully open-source, allowing developers to understand its workings, optimize, and innovate further.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

LLM_Learning_Database

LLM Learning Database is a comprehensive repository dedicated to AI large models, offering a curated collection of resources covering fundamental knowledge, cutting-edge technologies, and practical applications. It includes guides, case studies, code examples for model training, optimization, and deployment, as well as insightful articles from industry experts and scholars. Whether you are a beginner or an experienced learner in the field of AI large models, this repository aims to support your learning journey and foster continuous growth and progress.

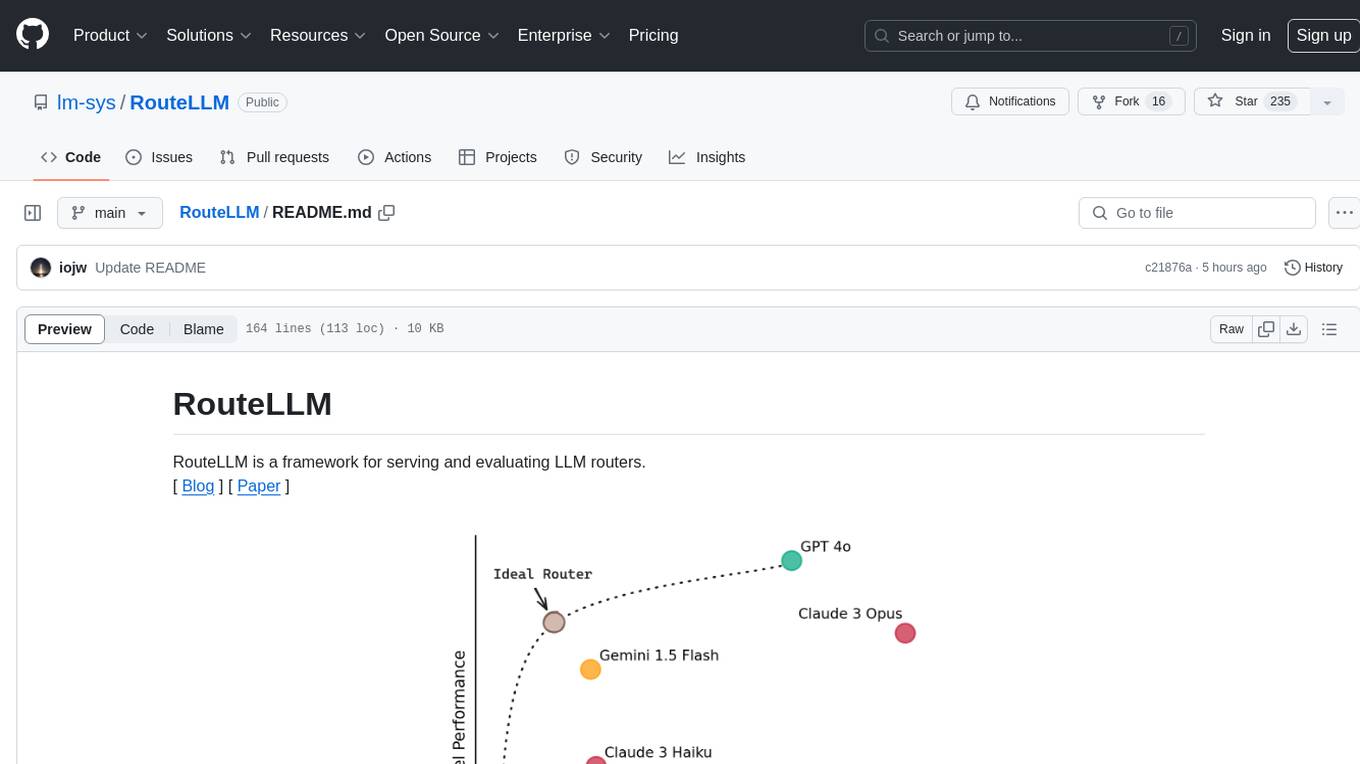

RouteLLM

RouteLLM is a framework for serving and evaluating LLM routers. It allows users to launch an OpenAI-compatible API that routes requests to the best model based on cost thresholds. Trained routers are provided to reduce costs while maintaining performance. Users can easily extend the framework, compare router performance, and calibrate cost thresholds. RouteLLM supports multiple routing strategies and benchmarks, offering a lightweight server and evaluation framework. It enables users to evaluate routers on benchmarks, calibrate thresholds, and modify model pairs. Contributions for adding new routers and benchmarks are welcome.

Thor

Thor is a powerful AI model management tool designed for unified management and usage of various AI models. It offers features such as user, channel, and token management, data statistics preview, log viewing, system settings, external chat link integration, and Alipay account balance purchase. Thor supports multiple AI models including OpenAI, Kimi, Starfire, Claudia, Zhilu AI, Ollama, Tongyi Qianwen, AzureOpenAI, and Tencent Hybrid models. It also supports various databases like SqlServer, PostgreSql, Sqlite, and MySql, allowing users to choose the appropriate database based on their needs.

aws-bedrock-with-rag-and-react

This solution provides a low-code ReactJS application to prototype and vet business use cases for GenAI using Retrieval Augmented Generation (RAG). It includes a backend Flask application that uses LangChain to provide PDF data as embeddings to a text-gen model via Amazon Bedrock and a vector database with FAISS or Kendra Index. The solution utilizes Amazon Bedrock as the only cost-generating AWS service.

generative-ai-sagemaker-cdk-demo

This repository showcases how to deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK. Generative AI is a type of AI that can create new content and ideas, such as conversations, stories, images, videos, and music. The repository provides a detailed guide on deploying image and text generative AI models, utilizing pre-trained models from SageMaker JumpStart. The web application is built on Streamlit and hosted on Amazon ECS with Fargate. It interacts with the SageMaker model endpoints through Lambda functions and Amazon API Gateway. The repository also includes instructions on setting up the AWS CDK application, deploying the stacks, using the models, and viewing the deployed resources on the AWS Management Console.

aws-ai-intelligent-document-processing

This repository is part of Intelligent Document Processing with AWS AI Services workshop. It aims to automate the extraction of information from complex content in various document formats such as insurance claims, mortgages, healthcare claims, contracts, and legal contracts using AWS Machine Learning services like Amazon Textract and Amazon Comprehend. The repository provides hands-on labs to familiarize users with these AI services and build solutions to automate business processes that rely on manual inputs and intervention across different file types and formats.

llm-on-ray

LLM-on-Ray is a comprehensive solution for building, customizing, and deploying Large Language Models (LLMs). It simplifies complex processes into manageable steps by leveraging the power of Ray for distributed computing. The tool supports pretraining, finetuning, and serving LLMs across various hardware setups, incorporating industry and Intel optimizations for performance. It offers modular workflows with intuitive configurations, robust fault tolerance, and scalability. Additionally, it provides an Interactive Web UI for enhanced usability, including a chatbot application for testing and refining models.

call-center-ai

Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.

superduper

superduper.io is a Python framework that integrates AI models, APIs, and vector search engines directly with existing databases. It allows hosting of models, streaming inference, and scalable model training/fine-tuning. Key features include integration of AI with data infrastructure, inference via change-data-capture, scalable model training, model chaining, simple Python interface, Python-first approach, working with difficult data types, feature storing, and vector search capabilities. The tool enables users to turn their existing databases into centralized repositories for managing AI model inputs and outputs, as well as conducting vector searches without the need for specialized databases.

SuperKnowa

SuperKnowa is a fast framework to build Enterprise RAG (Retriever Augmented Generation) Pipelines at Scale, powered by watsonx. It accelerates Enterprise Generative AI applications to get prod-ready solutions quickly on private data. The framework provides pluggable components for tackling various Generative AI use cases using Large Language Models (LLMs), allowing users to assemble building blocks to address challenges in AI-driven text generation. SuperKnowa is battle-tested from 1M to 200M private knowledge base & scaled to billions of retriever tokens.

jina

Jina is a tool that allows users to build multimodal AI services and pipelines using cloud-native technologies. It provides a Pythonic experience for serving ML models and transitioning from local deployment to advanced orchestration frameworks like Docker-Compose, Kubernetes, or Jina AI Cloud. Users can build and serve models for any data type and deep learning framework, design high-performance services with easy scaling, serve LLM models while streaming their output, integrate with Docker containers via Executor Hub, and host on CPU/GPU using Jina AI Cloud. Jina also offers advanced orchestration and scaling capabilities, a smooth transition to the cloud, and easy scalability and concurrency features for applications. Users can deploy to their own cloud or system with Kubernetes and Docker Compose integration, and even deploy to JCloud for autoscaling and monitoring.

mslearn-ai-vision

The 'mslearn-ai-vision' repository contains lab files for Azure AI Vision modules. It provides hands-on exercises and resources for learning about AI vision capabilities on the Azure platform. The labs cover topics such as image recognition, object detection, and image classification using Azure's AI services. By following the lab exercises, users can gain practical experience in building and deploying AI vision solutions in the cloud.

models

The Intel® AI Reference Models repository contains links to pre-trained models, sample scripts, best practices, and tutorials for popular open-source machine learning models optimized by Intel to run on Intel® Xeon® Scalable processors and Intel® Data Center GPUs. It aims to replicate the best-known performance of target model/dataset combinations in optimally-configured hardware environments. The repository will be deprecated upon the publication of v3.2.0 and will no longer be maintained or published.

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

Conversational-Azure-OpenAI-Accelerator

The Conversational Azure OpenAI Accelerator is a tool designed to provide rapid, no-cost custom demos tailored to customer use cases, from internal HR/IT to external contact centers. It focuses on top use cases of GenAI conversation and summarization, plus live backend data integration. The tool automates conversations across voice and text channels, providing a valuable way to save money and improve customer and employee experience. By combining Azure OpenAI + Cognitive Search, users can efficiently deploy a ChatGPT experience using web pages, knowledge base articles, and data sources. The tool enables simultaneous deployment of conversational content to chatbots, IVR, voice assistants, and more in one click, eliminating the need for in-depth IT involvement. It leverages Microsoft's advanced AI technologies, resulting in a conversational experience that can converse in human-like dialogue, respond intelligently, and capture content for omni-channel unified analytics.

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

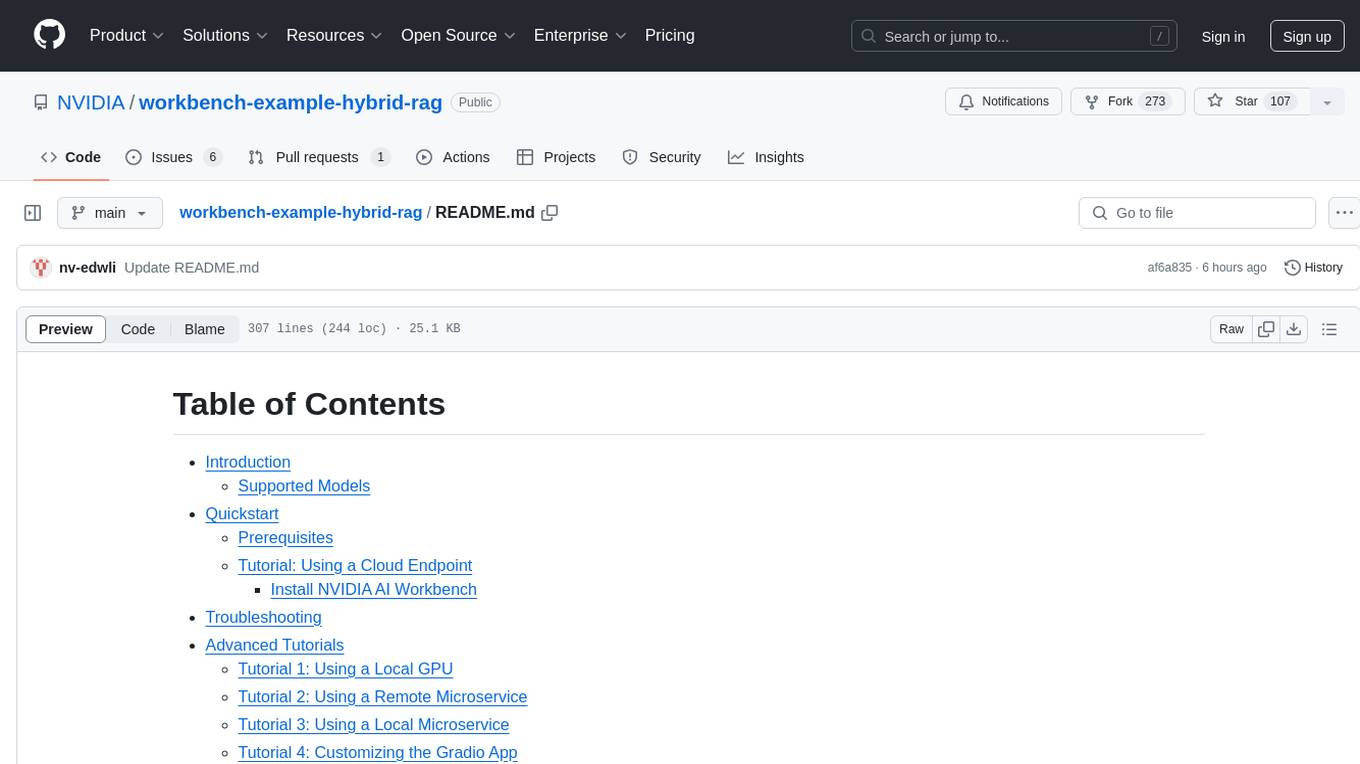

workbench-example-hybrid-rag

This NVIDIA AI Workbench project is designed for developing a Retrieval Augmented Generation application with a customizable Gradio Chat app. It allows users to embed documents into a locally running vector database and run inference locally on a Hugging Face TGI server, in the cloud using NVIDIA inference endpoints, or using microservices via NVIDIA Inference Microservices (NIMs). The project supports various models with different quantization options and provides tutorials for using different inference modes. Users can troubleshoot issues, customize the Gradio app, and access advanced tutorials for specific tasks.

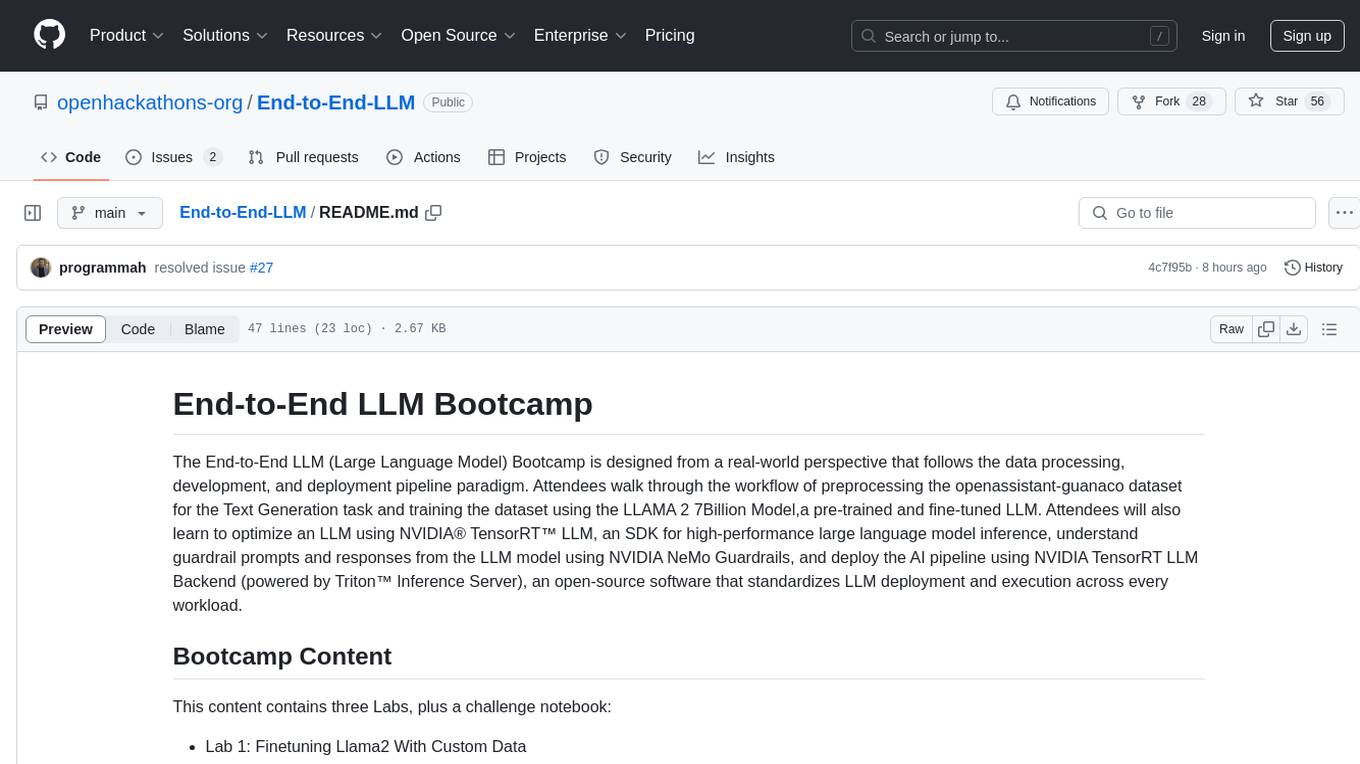

End-to-End-LLM

The End-to-End LLM Bootcamp is a comprehensive training program that covers the entire process of developing and deploying large language models. Participants learn to preprocess datasets, train models, optimize performance using NVIDIA technologies, understand guardrail prompts, and deploy AI pipelines using Triton Inference Server. The bootcamp includes labs, challenges, and practical applications, with a total duration of approximately 7.5 hours. It is designed for individuals interested in working with advanced language models and AI technologies.

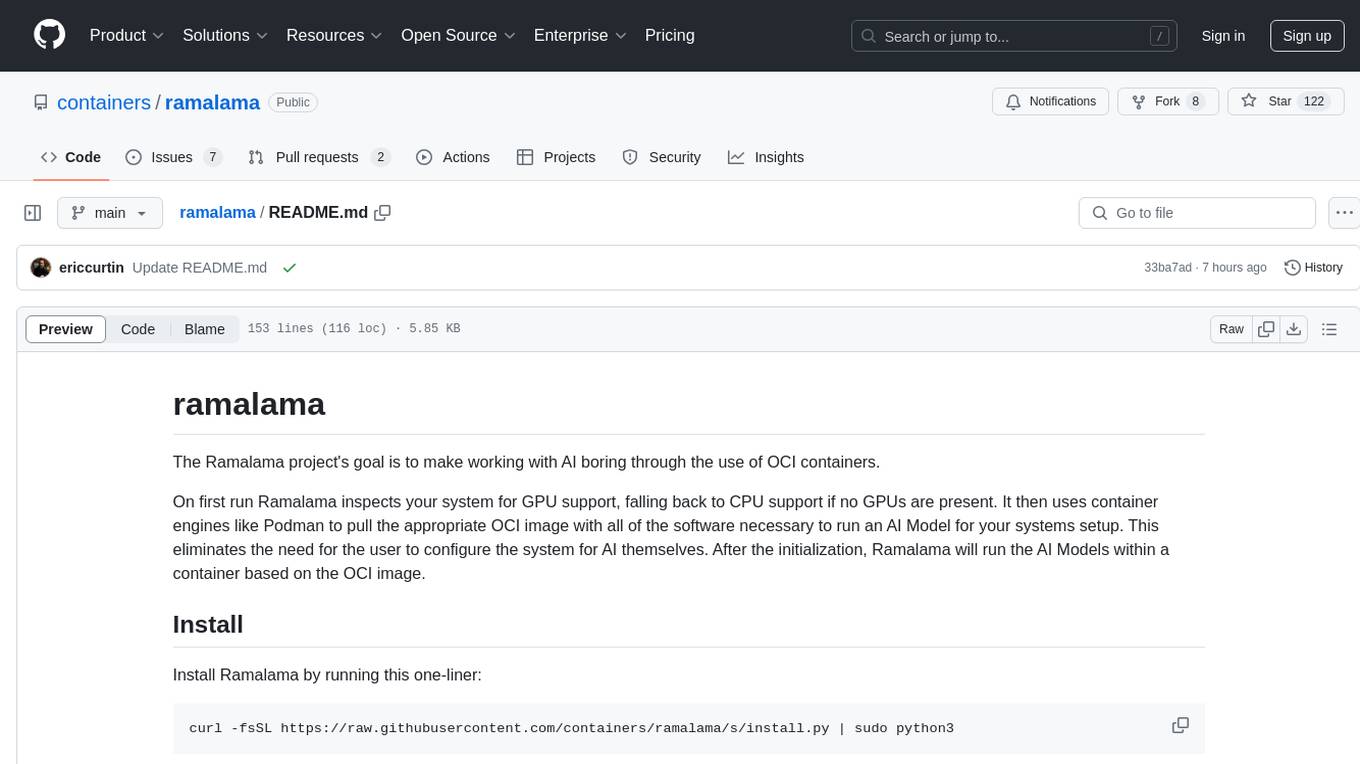

ramalama

The Ramalama project simplifies working with AI by utilizing OCI containers. It automatically detects GPU support, pulls necessary software in a container, and runs AI models. Users can list, pull, run, and serve models easily. The tool aims to support various GPUs and platforms in the future, making AI setup hassle-free.

guidance-for-a-multi-tenant-generative-ai-gateway-with-cost-and-usage-tracking-on-aws

This repository provides guidance on building a multi-tenant SaaS solution for accessing foundation models using Amazon Bedrock and Amazon SageMaker. It helps enterprise IT teams track usage and costs of foundation models, regulate access, and provide visibility to cost centers. The solution includes an API Gateway design pattern for standardization and governance, enabling loose coupling between model consumers and endpoint services. The CDK Stack deploys resources for private networking, API Gateway, Lambda functions, DynamoDB table, EventBridge, S3 buckets, and Cloudwatch logs.

ai-reference-models

The Intel® AI Reference Models repository contains links to pre-trained models, sample scripts, best practices, and tutorials for popular open-source machine learning models optimized by Intel to run on Intel® Xeon® Scalable processors and Intel® Data Center GPUs. The purpose is to quickly replicate complete software environments showcasing the AI capabilities of Intel platforms. It includes optimizations for popular deep learning frameworks like TensorFlow and PyTorch, with additional plugins/extensions for improved performance. The repository is licensed under Apache License Version 2.0.

crazyai-ml

The 'crazyai-ml' repository is a collection of resources related to machine learning, specifically focusing on explaining artificial intelligence models. It includes articles, code snippets, and tutorials covering various machine learning algorithms, data analysis, model training, and deployment. The content aims to provide a comprehensive guide for beginners in the field of AI, offering practical implementations and insights into popular machine learning packages and model tuning techniques. The repository also addresses the integration of AI models and frontend-backend concepts, making it a valuable resource for individuals interested in AI applications.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

END-TO-END-GENERATIVE-AI-PROJECTS

The 'END TO END GENERATIVE AI PROJECTS' repository is a collection of awesome industry projects utilizing Large Language Models (LLM) for various tasks such as chat applications with PDFs, image to speech generation, video transcribing and summarizing, resume tracking, text to SQL conversion, invoice extraction, medical chatbot, financial stock analysis, and more. The projects showcase the deployment of LLM models like Google Gemini Pro, HuggingFace Models, OpenAI GPT, and technologies such as Langchain, Streamlit, LLaMA2, LLaMAindex, and more. The repository aims to provide end-to-end solutions for different AI applications.

nexa-sdk

Nexa SDK is a comprehensive toolkit supporting ONNX and GGML models for text generation, image generation, vision-language models (VLM), and text-to-speech (TTS) capabilities. It offers an OpenAI-compatible API server with JSON schema mode and streaming support, along with a user-friendly Streamlit UI. Users can run Nexa SDK on any device with Python environment, with GPU acceleration supported. The toolkit provides model support, conversion engine, inference engine for various tasks, and differentiating features from other tools.

SQL-AI-samples

This repository contains samples to help design AI applications using data from an Azure SQL Database. It showcases technical concepts and workflows integrating Azure SQL data with popular AI components both within and outside Azure. The samples cover various AI features such as Azure Cognitive Services, Promptflow, OpenAI, Vanna.AI, Content Moderation, LangChain, and more. Additionally, there are end-to-end samples like Similar Content Finder, Session Conference Assistant, Chatbots, Vectorization, SQL Server Database Development, Redis Vector Search, and Similarity Search with FAISS.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface, independent development documentation page support, service monitoring page configuration support, and third-party login support. It also optimizes interface elements, user registration time support, data operation button positioning, and more.

FocusOnAI_24

The .NET Conf Focus on AI 2024 repository contains content from the event focusing on incorporating AI into .NET applications and services. It includes slides and demos showcasing various AI-powered web apps, AI models, generative AI apps, and more. The repository serves as a resource for developers looking to explore AI integration with .NET technologies.

uni-api

uni-api is a project that unifies the management of large language model APIs, allowing you to call multiple backend services through a single unified API interface, converting them all to OpenAI format, and supporting load balancing. It supports various backend services such as OpenAI, Anthropic, Gemini, Vertex, Azure, xai, Cohere, Groq, Cloudflare, OpenRouter, and more. The project offers features like no front-end, pure configuration file setup, unified management of multiple backend services, support for multiple standard OpenAI format interfaces, rate limiting, automatic retry, channel cooling, fine-grained model timeout settings, and fine-grained permission control.

otto-m8

otto-m8 is a flowchart based automation platform designed to run deep learning workloads with minimal to no code. It provides a user-friendly interface to spin up a wide range of AI models, including traditional deep learning models and large language models. The tool deploys Docker containers of workflows as APIs for integration with existing workflows, building AI chatbots, or standalone applications. Otto-m8 operates on an Input, Process, Output paradigm, simplifying the process of running AI models into a flowchart-like UI.

CAG

Cache-Augmented Generation (CAG) is an alternative paradigm to Retrieval-Augmented Generation (RAG) that eliminates real-time retrieval delays and errors by preloading all relevant resources into the model's context. CAG leverages extended context windows of large language models (LLMs) to generate responses directly, providing reduced latency, improved reliability, and simplified design. While CAG has limitations in knowledge size and context length, advancements in LLMs are addressing these issues, making CAG a practical and scalable alternative for complex applications.

RagaAI-Catalyst

RagaAI Catalyst is a comprehensive platform designed to enhance the management and optimization of LLM projects. It offers features such as project management, dataset management, evaluation management, trace management, prompt management, synthetic data generation, and guardrail management. These functionalities enable efficient evaluation and safeguarding of LLM applications.

ai-sdk-js

SAP Cloud SDK for AI is the official Software Development Kit (SDK) for SAP AI Core, SAP Generative AI Hub, and Orchestration Service. It allows users to integrate chat completion into business applications, leverage generative AI capabilities for templating, grounding, data masking, and content filtering. The SDK provides tools for managing scenarios, workflows, data preprocessing, model training pipelines, batch inference jobs, deploying inference endpoints, and orchestrating AI activities. Users can set up their SAP AI Core instance using the SDK, which includes packages for AI API, foundation models, LangChain model clients, and orchestration capabilities. The SDK also offers a sample project for demonstrating its usage in TypeScript/JavaScript applications, along with guidelines for local testing and contribution.

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

aibrix

AIBrix is an open-source initiative providing essential building blocks for scalable GenAI inference infrastructure. It delivers a cloud-native solution optimized for deploying, managing, and scaling large language model (LLM) inference, tailored to enterprise needs. Key features include High-Density LoRA Management, LLM Gateway and Routing, LLM App-Tailored Autoscaler, Unified AI Runtime, Distributed Inference, Distributed KV Cache, Cost-efficient Heterogeneous Serving, and GPU Hardware Failure Detection.

watsonx-ai-samples

Sample notebooks for IBM Watsonx.ai for IBM Cloud and IBM Watsonx.ai software product. The notebooks demonstrate capabilities such as running experiments on model building using AutoAI or Deep Learning, deploying third-party models as web services or batch jobs, monitoring deployments with OpenScale, managing model lifecycles, inferencing Watsonx.ai foundation models, and integrating LangChain with Watsonx.ai. Notebooks with Python code and the Python SDK can be found in the `python_sdk` folder. The REST API examples are organized in the `rest_api` folder.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

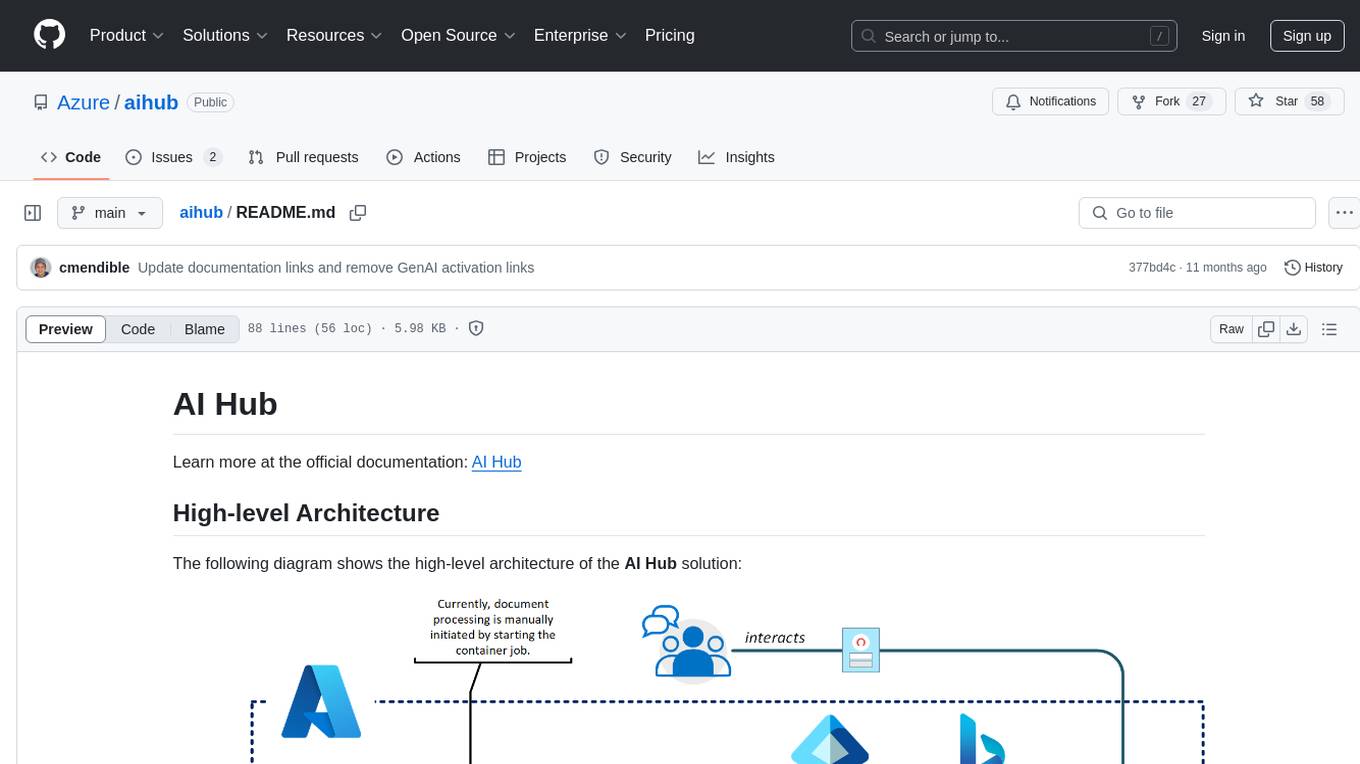

aihub

AI Hub is a comprehensive solution that leverages artificial intelligence and cloud computing to provide functionalities such as document search and retrieval, call center analytics, image analysis, brand reputation analysis, form analysis, document comparison, and content safety moderation. It integrates various Azure services like Cognitive Search, ChatGPT, Azure Vision Services, and Azure Document Intelligence to offer scalable, extensible, and secure AI-powered capabilities for different use cases and scenarios.

AmazonSageMakerCourse

Amazon SageMaker Course is a comprehensive guide for AWS Certified Machine Learning Specialty (MLS-C01) that covers training, optimizing, deploying, and integrating machine learning models in the AWS cloud. The course includes hands-on experience with AWS built-in algorithms, Bring Your Own models, and ready-to-use AI capabilities. It also provides a complete guide to AWS Certified Machine Learning – Specialty certification, along with a high-quality timed practice test. Participants will learn how to integrate trained models into their applications and receive prompt support through the course Q&A forum and private messaging.

ClicShopping_V3

ClicShoppingAI is a powerful open-source Ecommerce solution that supports B2B, B2C, and B2B-B2C. Integrated with cutting-edge generative artificial intelligence systems like Gpt and Ollama, it helps merchants increase turnover and competitiveness for free. With AI capabilities, it optimizes inventory, offers personalized recommendations, and provides top-notch customer service. The solution is modular, lightweight, and user-friendly, with a seamless, responsive design for all devices. Installation is easy, empowering ongoing development through community support. Features include GPT API integration, generative AI functionalities, real-time safety stock predictive, WYSIWYG product description creation, image editor management, full SEO optimization, payment and shipping modules, extension system, GDPR compliance, multi-language support, and more.

amazon-sagemaker-llm-fine-tuning-remote-decorator

This repository provides interactive fine-tuning of Foundation Models with Amazon SageMaker Training using the @remote decorator. It showcases the use of SageMaker AI capabilities for Small/Large Language Models fine-tuning by employing different distribution techniques like FSDP and DDP. Users can run the repository from Amazon SageMaker Studio or a local IDE. The notebooks cover various supervised and self-supervised fine-tuning scenarios for different models, along with instructions for updating configurations based on the AWS region and Python version compatibility.

open-responses

OpenResponses API provides enterprise-grade AI capabilities through a powerful API, simplifying development and deployment while ensuring complete data control. It offers automated tracing, integrated RAG for contextual information retrieval, pre-built tool integrations, self-hosted architecture, and an OpenAI-compatible interface. The toolkit addresses development challenges like feature gaps and integration complexity, as well as operational concerns such as data privacy and operational control. Engineering teams can benefit from improved productivity, production readiness, compliance confidence, and simplified architecture by choosing OpenResponses.

arthur-engine

The Arthur Engine is a comprehensive tool for monitoring and governing AI/ML workloads. It provides evaluation and benchmarking of machine learning models, guardrails enforcement, and extensibility for fitting into various application architectures. With support for a wide range of evaluation metrics and customizable features, the tool aims to improve model understanding, optimize generative AI outputs, and prevent data-security and compliance risks. Key features include real-time guardrails, model performance monitoring, feature importance visualization, error breakdowns, and support for custom metrics and models integration.

arkflow

ArkFlow is a high-performance Rust stream processing engine that seamlessly integrates AI capabilities, providing powerful real-time data processing and intelligent analysis. It supports multiple input/output sources and processors, enabling easy loading and execution of machine learning models for streaming data and inference, anomaly detection, and complex event processing. The tool is built on Rust and Tokio async runtime, offering excellent performance and low latency. It features built-in SQL queries, Python script, JSON processing, Protobuf encoding/decoding, and batch processing capabilities. ArkFlow is extensible with a modular design, making it easy to extend with new components.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

AIClient-2-API

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

kserve

KServe provides a Kubernetes Custom Resource Definition for serving predictive and generative machine learning (ML) models. It encapsulates the complexity of autoscaling, networking, health checking, and server configuration to bring cutting edge serving features like GPU Autoscaling, Scale to Zero, and Canary Rollouts to ML deployments. KServe enables a simple, pluggable, and complete story for Production ML Serving including prediction, pre-processing, post-processing, and explainability. It is a standard, cloud agnostic Model Inference Platform for serving predictive and generative AI models on Kubernetes, built for highly scalable use cases.

ai-core-samples

This repository contains sample notebooks and workflow templates that enable users to have a quick hands-on experience with SAP AI Core. The provided content demonstrates how to productize a simple Business ML use case to SAP AI Core with a Plug and Play approach. Users need to meet certain prerequisites before using the notebooks and workflow templates, such as going through tutorials, provisioning a SAP AI Core instance, having a GitHub account, access to an Object Store like AWS, and access to DockerHub or Docker for creating Docker images.

mcp-ts-template

The MCP TypeScript Server Template is a production-grade framework for building powerful and scalable Model Context Protocol servers with TypeScript. It features built-in observability, declarative tooling, robust error handling, and a modular, DI-driven architecture. The template is designed to be AI-agent-friendly, providing detailed rules and guidance for developers to adhere to best practices. It enforces architectural principles like 'Logic Throws, Handler Catches' pattern, full-stack observability, declarative components, and dependency injection for decoupling. The project structure includes directories for configuration, container setup, server resources, services, storage, utilities, tests, and more. Configuration is done via environment variables, and key scripts are available for development, testing, and publishing to the MCP Registry.

fenic

fenic is an opinionated DataFrame framework from typedef.ai for building AI and agentic applications. It transforms unstructured and structured data into insights using familiar DataFrame operations enhanced with semantic intelligence. With support for markdown, transcripts, and semantic operators, plus efficient batch inference across various model providers. fenic is purpose-built for LLM inference, providing a query engine designed for AI workloads, semantic operators as first-class citizens, native unstructured data support, production-ready infrastructure, and a familiar DataFrame API.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

youtu-graphrag

Youtu-GraphRAG is a vertically unified agentic paradigm that connects the entire framework based on graph schema, allowing seamless domain transfer with minimal intervention. It introduces key innovations like schema-guided hierarchical knowledge tree construction, dually-perceived community detection, agentic retrieval, advanced construction and reasoning capabilities, fair anonymous dataset 'AnonyRAG', and unified configuration management. The framework demonstrates robustness with lower token cost and higher accuracy compared to state-of-the-art methods, enabling enterprise-scale deployment with minimal manual intervention for new domains.

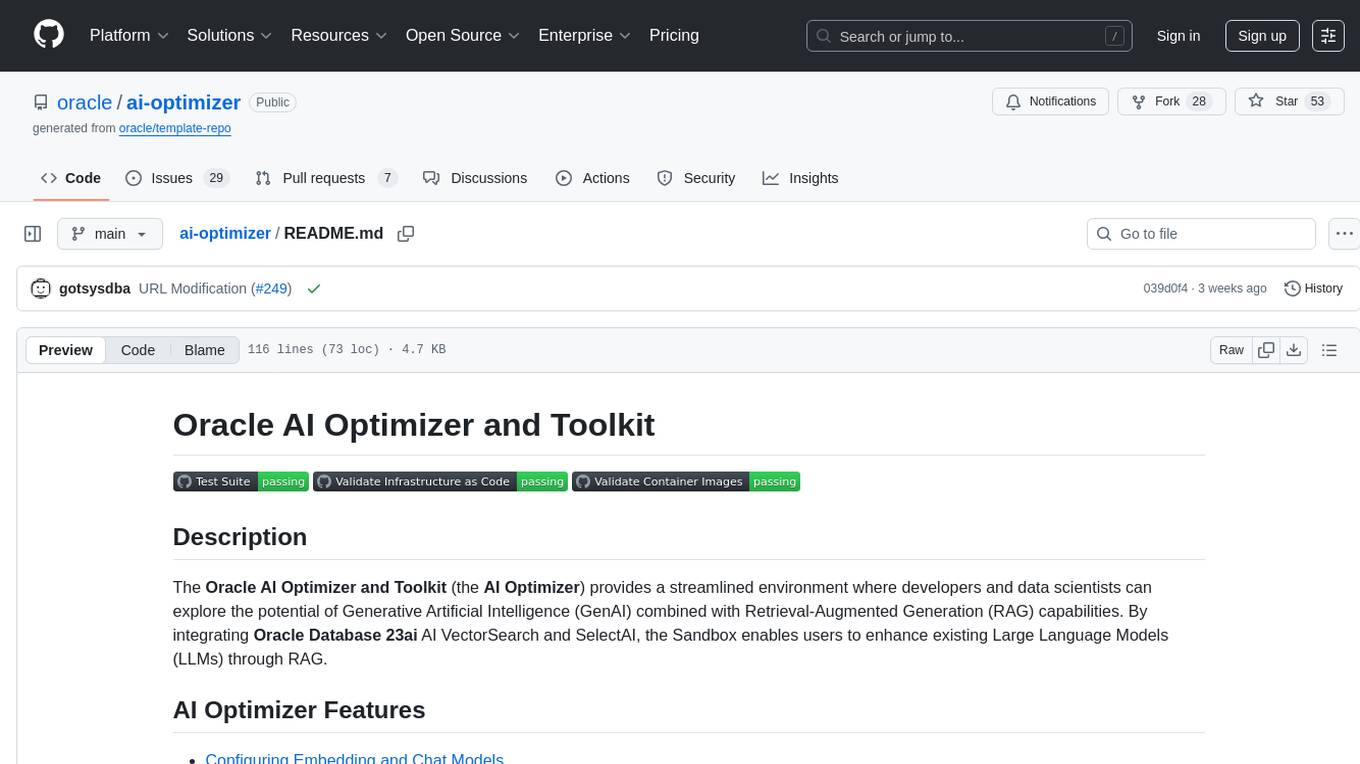

ai-optimizer

The Oracle AI Optimizer and Toolkit provides a streamlined environment for developers and data scientists to explore Generative Artificial Intelligence (GenAI) and Retrieval-Augmented Generation (RAG) capabilities. It integrates Oracle Database 23ai AI VectorSearch and SelectAI to enhance Large Language Models (LLMs) through RAG.

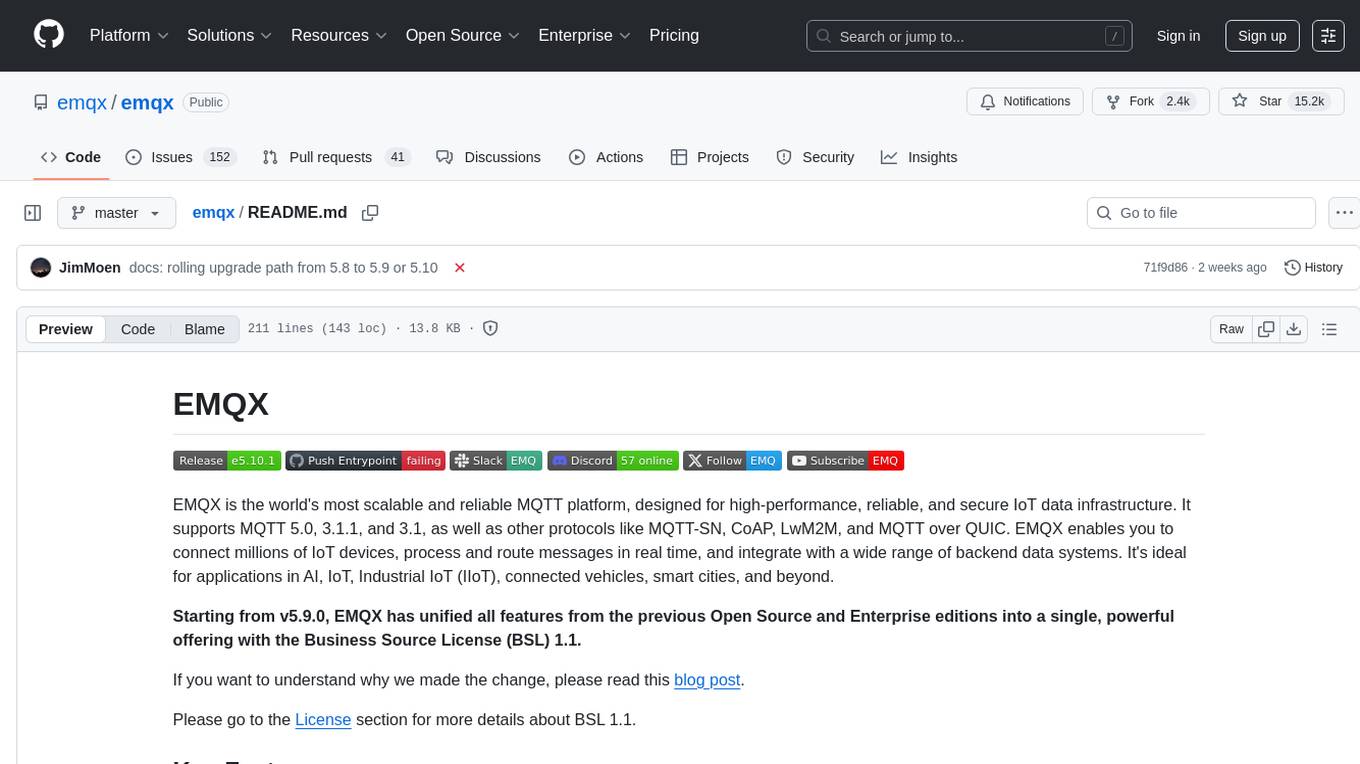

emqx

EMQX is a highly scalable and reliable MQTT platform designed for IoT data infrastructure. It supports various protocols like MQTT 5.0, 3.1.1, and 3.1, as well as MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX allows connecting millions of IoT devices, processing messages in real time, and integrating with backend data systems. It is suitable for applications in AI, IoT, IIoT, connected vehicles, smart cities, and more. The tool offers features like massive scalability, powerful rule engine, flow designer, AI processing, robust security, observability, management, extensibility, and a unified experience with the Business Source License (BSL) 1.1.

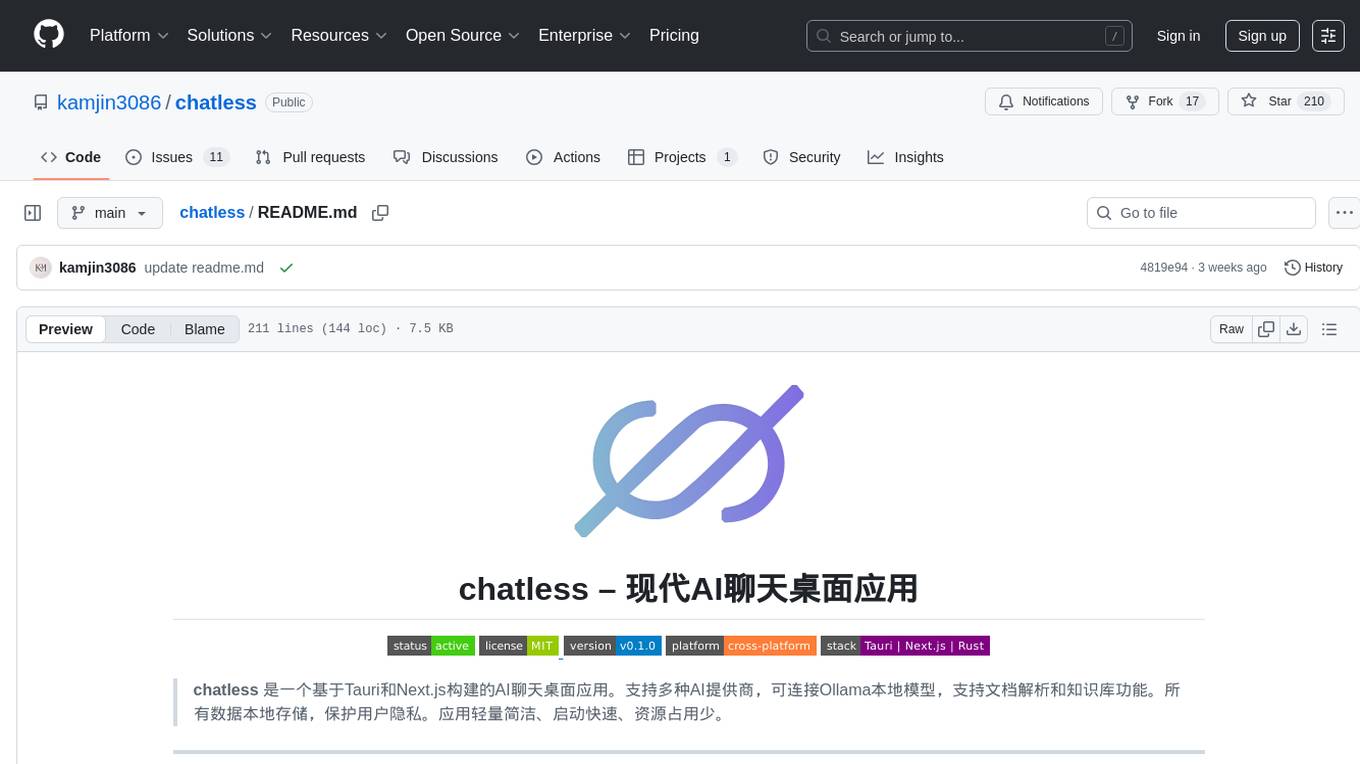

chatless

Chatless is a modern AI chat desktop application built on Tauri and Next.js. It supports multiple AI providers, can connect to local Ollama models, supports document parsing and knowledge base functions. All data is stored locally to protect user privacy. The application is lightweight, simple, starts quickly, and consumes minimal resources.

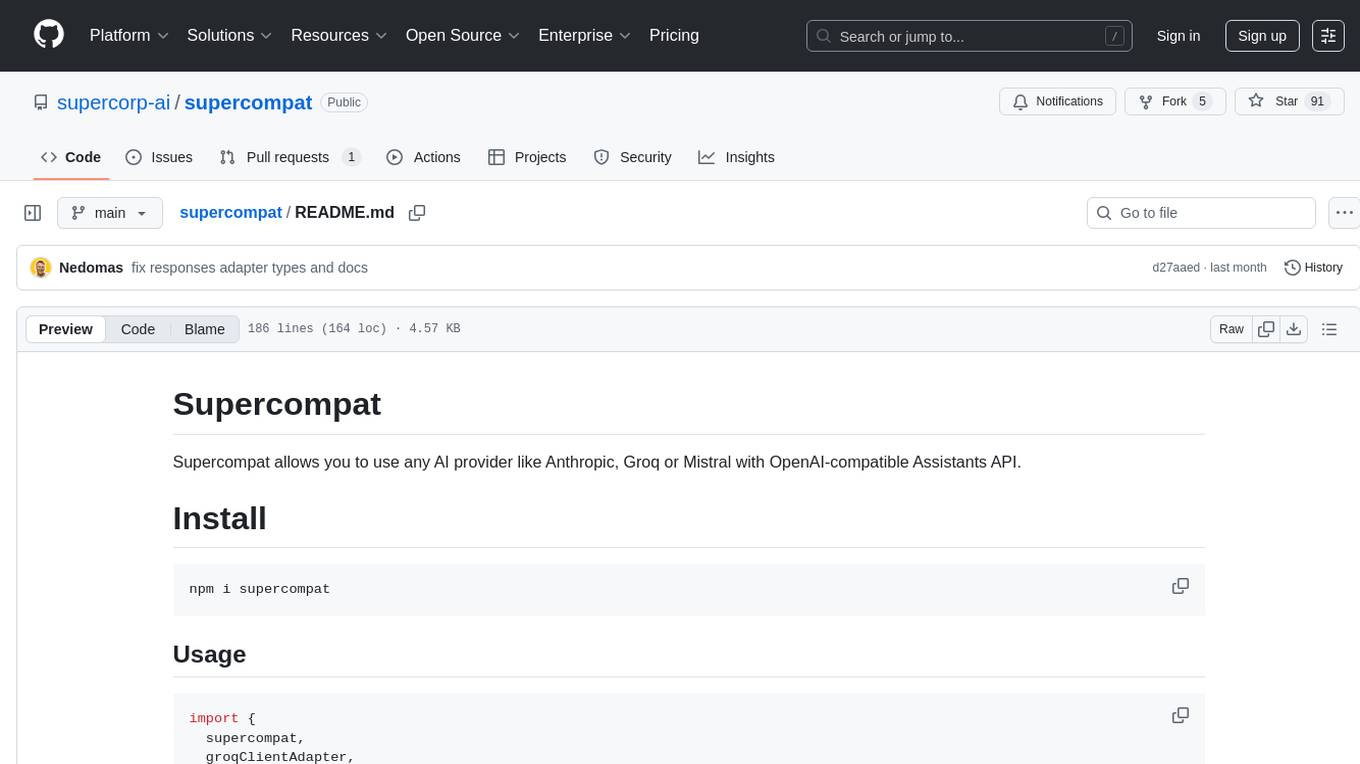

supercompat

Supercompat is a tool that enables users to integrate various AI providers like Anthropic, Groq, or Mistral with the OpenAI-compatible Assistants API. It provides adapters for different AI services and storage options, allowing seamless communication between the user's application and the AI providers. With Supercompat, developers can easily leverage the capabilities of multiple AI services within their projects, enhancing the functionality and intelligence of their applications.

ai-accelerator

The AI Accelerator project source code is designed to initialize an OpenShift cluster with a recommended set of operators and components for training, deploying, serving, and monitoring Machine Learning models. It provides core OpenShift features for Data Science environments and can be customized for specific scenarios. The project automates IT infrastructure using GitOps practices, including Git, code review, and CI/CD. ArgoCD Application objects are used to manage the installation of operators on the cluster.

mcp-apache-spark-history-server

The MCP Server for Apache Spark History Server is a tool that connects AI agents to Apache Spark History Server for intelligent job analysis and performance monitoring. It enables AI agents to analyze job performance, identify bottlenecks, and provide insights from Spark History Server data. The server bridges AI agents with existing Apache Spark infrastructure, allowing users to query job details, analyze performance metrics, compare multiple jobs, investigate failures, and generate insights from historical execution data.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

AI-Practices

AI-Practices is a systematic platform for learning and practicing artificial intelligence, covering a wide range of topics from foundational machine learning to advanced deep learning, reinforcement learning, generative models, large language models, multimodal learning, deployment optimization, distributed training, and agent reasoning. The platform provides a structured learning path, combining theoretical knowledge with practical implementation, following industrial code standards and including Kaggle competition solutions. It covers core algorithms in machine learning, deep learning, reinforcement learning, generative models, and large models. The platform supports popular deep learning frameworks like PyTorch, TensorFlow, and Keras, along with essential data science tools like NumPy, Pandas, and Scikit-Learn.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

superglue

superglue is an AI-powered tool builder that abstracts away authentication, documentation handling, and data mapping between systems. It self-heals tools by auto-repairing failures due to API changes. Users can build lightweight data syncing tools, migrate SQL procedures to REST API calls, and create enterprise GPT tools. Interfaces include a web application, superglue SDK for CRUD functionality, and MCP Server for discoverability and execution of pre-built tools. Detailed documentation is available at docs.superglue.cloud.

llamactl

llamactl is a tool for unified management and routing of llama.cpp, MLX, and vLLM models with a web dashboard. It offers easy model management with built-in model downloader, dynamic multi-model instances, smart resource management, and a modern React UI dashboard. It provides flexible integration with API compatibility for OpenAI chat completions and resources endpoints, multi-backend support, and Docker readiness. The tool supports distributed deployment with remote instances and central management. Users can quickly start by installing a backend, downloading llamactl, creating an instance, and starting inferencing.

20 - OpenAI Gpts

Bot Advisor

Expert in bot-building platforms and AI solutions for tailored industry proposals.

cloud exams coach

AI Cloud Computing (Engineering, Architecture, DevOps ) Certifications Coach for AWS, GCP, and Azure. I provide timed mock exams.

Architext

Architext is a sophisticated chatbot designed to guide users through the complexities of AWS architecture, leveraging the AWS Well-Architected Framework. It offers real-time, tailored advice, interactive learning, and up-to-date resources for both novices and experts in AWS cloud infrastructure.

Code Architect AI

First discusses assistant details, then implements tailored code solutions.

The Learning Architect

An all-in-one, consultative L&D expert AI helping you build impactful, customized learning solutions for your organization.

ArchitectAI

A custom GPT model designed to assist in developing personalized software design solutions.

IoE - Internet of Everything Advisor

Advanced IoE-focused GPT, excelling in domain knowledge, security awareness, and problem-solving, powered by OpenAI

360GPT ~ All Things AI & Machine Learning

AI 360 Solutions. Designed to provide all-encompassing solutions in the field of artificial intelligence.

ConsultorIA

I develop AI implementation proposals based on your specific needs, focusing on value and affordability.

ecosystem.Ai Use Case Designer v2

The use case designer is configured with the latest Data Science and Behavioral Social Science insights to guide you through the process of defining AI and Machine Learning use cases for the ecosystem.Ai platform.

Strategy Guide

An expert in AI strategy, offering insights on AI implementation and industry trends.

AI Cyberwar

AI and cyber warfare expert, advising on policy, conflict, and technical trends