call-center-ai

Send a phone call from AI agent, in an API call. Or, directly call the bot from the configured phone number!


Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.


AI-powered call center solution with Azure and OpenAI GPT.


Insurance, IT support, customer service, and more. The bot can be customized in a few hours (really) to fit your needs.

```bash
# Ask the bot to call a phone number
data='{
  "bot_company": "Contoso",
  "bot_name": "Amélie",
  "phone_number": "+11234567890",
  "task": "Help the customer with their digital workplace. Assistant is working for the IT support department. The objective is to help the customer with their issue and gather information in the claim.",
  "agent_phone_number": "+33612345678",
  "claim": [
    {
      "name": "hardware_info",
      "type": "text"
    },
    {
      "name": "first_seen",
      "type": "datetime"
    },
    {
      "name": "building_location",
      "type": "text"
    }
  ]
}'

curl \
  --header 'Content-Type: application/json' \
  --request POST \
  --url https://xxx/call \
  --data "$data"
```
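The same request can also be scripted from Python. The following minimal sketch uses only the standard library. `build_call_request` is a hypothetical helper, and `https://xxx` remains a placeholder for your deployment, so the request is built but not sent.

```python
import json
import urllib.request


def build_call_request(base_url: str, payload: dict) -> urllib.request.Request:
    """Build, without sending, a POST /call request carrying a JSON body."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/call",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


payload = {
    "bot_company": "Contoso",
    "bot_name": "Amélie",
    "phone_number": "+11234567890",
    "task": (
        "Help the customer with their digital workplace. Assistant is working for the IT support "
        "department. The objective is to help the customer with their issue and gather information in the claim."
    ),
    "agent_phone_number": "+33612345678",
    "claim": [
        {"name": "hardware_info", "type": "text"},
        {"name": "first_seen", "type": "datetime"},
        {"name": "building_location", "type": "text"},
    ],
}

request = build_call_request("https://xxx", payload)
# Sending it requires a deployed instance instead of the https://xxx placeholder:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```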

- Enhanced communication and user experience: Integrates inbound and outbound calls with a dedicated phone number, supports multiple languages and voice tones, and allows users to provide or receive information via SMS. Conversations are streamed in real-time to avoid delays, can be resumed after disconnections, and are stored for future reference. This ensures an improved customer experience, enabling 24/7 communication and handling of low to medium complexity calls, all in a more accessible and user-friendly manner.
- Advanced intelligence and data management: Leverages GPT-4o and GPT-4o Realtime (known for higher performance and a 10–15x cost premium) to achieve nuanced comprehension. It can discuss private and sensitive data, including customer-specific information, while following retrieval-augmented generation (RAG) best practices to ensure secure and compliant handling of internal documents. The system understands domain-specific terms, follows a structured claim schema, generates automated to-do lists, filters inappropriate content, and detects jailbreak attempts. Historical conversations and past interactions can also be used to fine-tune the LLM, improving accuracy and personalization over time. Redis caching further enhances efficiency.
- Customization, oversight, and scalability: Offers customizable prompts, feature flags for controlled experimentation, human agent fallback, and call recording for quality assurance. Integrates Application Insights for monitoring and tracing, provides publicly accessible claim data, and plans future enhancements such as automated callbacks and IVR-like workflows. It also enables the creation of a brand-specific custom voice, allowing the assistant’s voice to reflect the company’s identity and improve brand consistency.
- Cloud-native deployment and resource management: Deployed on Azure with a containerized, serverless architecture for low maintenance and elastic scaling. This approach optimizes costs based on usage, ensuring flexibility and affordability over time. Seamless integration with Azure Communication Services, Cognitive Services, and OpenAI resources provides a secure environment suitable for rapid iteration, continuous improvement, and accommodating variable workloads in the call center.

A French demo is available on YouTube. Do not hesitate to watch the demo at 1.5x speed to get a quick overview of the project. The voice is hesitant on purpose, to show that the bot can handle it. All the infrastructure is deployed on Azure, mostly in serverless mode. LLM resources can be provisioned to reduce the latency.

Main interactions shown in the demo:

- User calls the call center

- The bot answers and the conversation starts

- The bot stores conversation, claim and todo list in the database

Extract of the data stored during the call:

```json
{
  "claim": {
    "incident_description": "Collision with another vehicle, car in the ditch, no injuries",
    "incident_location": "Nationale 17",
    "involved_parties": "Dujardin, Mrs. Lesné",
    "policy_number": "DEC1748"
  },
  "messages": [
    {
      "created_at": "2024-12-10T15:51:04.566727Z",
      "action": "talk",
      "content": "No, I think that's fine. You answered my questions and now I'm waiting for the tow truck. Thank you very much.",
      "persona": "human",
      "style": "none",
      "tool_calls": []
    },
    {
      "created_at": "2024-12-10T15:51:06.040451Z",
      "action": "talk",
      "content": "I'm glad I could help! If you need anything else, don't hesitate to contact us. Have a nice day, and I hope everything goes well with the tow truck. Goodbye!",
      "persona": "assistant",
      "style": "none",
      "tool_calls": []
    }
  ],
  "next": {
    "action": "case_closed",
    "justification": "The customer has provided all necessary information for the insurance claim, and a reminder has been set for a follow-up call. The customer is satisfied with the assistance provided and is waiting for the tow truck. The case can be closed for now."
  },
  "reminders": [
    {
      "created_at": "2024-12-10T15:50:09.507903Z",
      "description": "Call the customer back to review the accident and the progress of the claim.",
      "due_date_time": "2024-12-11T14:30:00",
      "owner": "assistant",
      "title": "Customer callback about the accident"
    }
  ],
  "synthesis": {
    "long": "During our call, you reported an accident involving your vehicle on the Nationale 17. You mentioned that there were no injuries, but both your car and the other vehicle ended up in a ditch. The other party involved is named Dujardin, and your vehicle is a 4x4 Ford. I have updated your claim with these details, including the license plates: yours is U837GE and the other vehicle's is GA837IA. A reminder has been set for a follow-up call tomorrow at 14:30 to discuss the progress of your claim. If you need further assistance, please feel free to reach out.",
    "satisfaction": "high",
    "short": "the accident on Nationale 17",
    "improvement_suggestions": "To improve the customer experience, it would be beneficial to ensure that the call connection is stable to avoid interruptions. Additionally, providing a clear step-by-step guide on what information is needed for the claim could help streamline the process and reduce any confusion for the customer."
  }
  ...
}
```

A report is available at https://[your_domain]/report/[phone_number] (e.g. http://localhost:8080/report/%2B133658471534). It shows the conversation history, claim data and reminders.
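Phone numbers start with `+`, so the number must be percent-encoded (`%2B`) in the report URL, as in the example above. Here is a small sketch using `urllib.parse.quote`. The helper name `report_url` is hypothetical.

```python
from urllib.parse import quote


def report_url(base_url: str, phone_number: str) -> str:
    """Return the report page URL, percent-encoding the phone number (including the leading '+')."""
    return f"{base_url.rstrip('/')}/report/{quote(phone_number, safe='')}"


print(report_url("http://localhost:8080", "+133658471534"))
# http://localhost:8080/report/%2B133658471534
```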

```mermaid
---
title: System diagram (C4 model)
---
graph
  user(["User"])
  agent(["Agent"])

  app["Call Center AI"]

  app -- Transfer to --> agent
  app -. Send voice .-> user
  user -- Call --> app
```

```mermaid
---
title: Claim AI component diagram (C4 model)
---
graph LR
  agent(["Agent"])
  user(["User"])

  subgraph "Claim AI"
    ada["Embedding<br>(ADA)"]
    app["App<br>(Container App)"]
    communication_services["Call & SMS gateway<br>(Communication Services)"]
    db[("Conversations and claims<br>(Cosmos DB)")]
    eventgrid["Broker<br>(Event Grid)"]
    gpt["LLM<br>(GPT-4o)"]
    queues[("Queues<br>(Azure Storage)")]
    redis[("Cache<br>(Redis)")]
    search[("RAG<br>(AI Search)")]
    sounds[("Sounds<br>(Azure Storage)")]
    sst["Speech-to-text<br>(Cognitive Services)"]
    translation["Translation<br>(Cognitive Services)"]
    tts["Text-to-speech<br>(Cognitive Services)"]
  end

  app -- Translate static TTS --> translation
  app -- Search RAG data --> search
  app -- Generate completion --> gpt
  gpt -. Answer with completion .-> app
  app -- Generate voice --> tts
  tts -. Answer with voice .-> app
  app -- Get cached data --> redis
  app -- Save conversation --> db
  app -- Transform voice --> sst
  sst -. Answer with text .-> app
  app <-. Exchange audio .-> communication_services
  app -. Watch .-> queues

  communication_services -- Load sound --> sounds
  communication_services -- Notifies --> eventgrid
  communication_services -- Transfer to --> agent
  communication_services <-. Exchange audio .-> agent
  communication_services <-. Exchange audio .-> user

  eventgrid -- Push to --> queues

  search -- Generate embeddings --> ada

  user -- Call --> communication_services
```

[!NOTE] This project is a proof of concept. It is not intended to be used in production. It demonstrates how Azure Communication Services, Azure Cognitive Services and Azure OpenAI can be combined to build an automated call center solution.
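The component diagram above implies an order of calls for one conversational turn: speech-to-text, RAG search, LLM completion, then text-to-speech. The sketch below only illustrates that order. Every service is a hypothetical callable, and the real application streams audio and text instead of handling a turn synchronously like this.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TurnPipeline:
    """One conversational turn; each callable stands in for an Azure service (hypothetical interfaces)."""

    speech_to_text: Callable[[bytes], str]  # Speech-to-text (Cognitive Services)
    search_rag: Callable[[str], List[str]]  # RAG (AI Search)
    complete: Callable[[str, List[str], List[Dict[str, str]]], str]  # LLM (GPT-4o)
    text_to_speech: Callable[[str], bytes]  # Text-to-speech (Cognitive Services)
    history: List[Dict[str, str]] = field(default_factory=list)  # saved to Cosmos DB in the diagram

    def handle(self, audio: bytes) -> bytes:
        text = self.speech_to_text(audio)
        self.history.append({"persona": "human", "content": text})
        context = self.search_rag(text)
        answer = self.complete(text, context, self.history)
        self.history.append({"persona": "assistant", "content": answer})
        return self.text_to_speech(answer)


# Fake services, only to exercise the control flow
pipeline = TurnPipeline(
    speech_to_text=lambda audio: audio.decode("utf-8"),
    search_rag=lambda query: ["Towing is covered by the policy."],
    complete=lambda text, context, history: f"Noted. {context[0]}",
    text_to_speech=lambda text: text.encode("utf-8"),
)
print(pipeline.handle(b"My car is in a ditch"))
# b'Noted. Towing is covered by the policy.'
```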

Prefer using GitHub Codespaces for a quick start. The environment will set up automatically with all the required tools.

On macOS, with Homebrew, simply run make brew.

For other systems, make sure you have the following installed:

- Azure CLI
- Twilio CLI (optional)
- yq
- Bash compatible shell, like bash or zsh
- Make: apt install make (Ubuntu), yum install make (CentOS), brew install make (macOS)

Then, Azure resources are needed:

- Create a resource group
  - Prefer to use lowercase and no special characters other than dashes (e.g. ccai-customer-a)
- Create a Communication Services resource
  - Same name as the resource group
  - Enable system managed identity
- Buy a phone number
  - From the Communication Services resource
  - Allow inbound and outbound communication
  - Enable voice (required) and SMS (optional) capabilities

Now that the prerequisites are configured (local + Azure), the deployment can be done.

A pre-built container image, built by GitHub Actions, is available on the GitHub Container Registry. It is used to deploy the solution on Azure:

- Latest version from a branch: ghcr.io/clemlesne/call-center-ai:main
- Specific tag: ghcr.io/clemlesne/call-center-ai:0.1.0 (recommended)

Fill the template from the example at config-remote-example.yaml. The file should be placed at the root of the project under the name config.yaml. It will be used by install scripts (incl. Makefile and Bicep) to configure the Azure resources.

```bash
az login
```

[!TIP] Specify the release version with the image_version parameter (default is main). For example, image_version=16.0.0 or image_version=sha-7ca2c0c. This ensures that future breaking changes in the project won't affect your deployment.

```bash
make deploy name=my-rg-name
```

Wait for the deployment to finish.

```bash
make logs name=my-rg-name
```

If you skipped the make brew command from the first install section, make sure you have the following installed:

Finally, run make install to set up the Python environment.

If the application is already deployed on Azure, you can run make name=my-rg-name sync-local-config to copy the configuration from remote to your local machine.

[!TIP] To use a Service Principal to authenticate to Azure, you can also add the following in a .env file:

```bash
AZURE_CLIENT_ID=xxx
AZURE_CLIENT_SECRET=xxx
AZURE_TENANT_ID=xxx
```

If the solution is not running online, fill the template from the example at config-local-example.yaml. The file should be placed at the root of the project under the name config.yaml.

Run the following if the solution is not yet deployed on Azure:

```bash
make deploy-bicep deploy-post name=my-rg-name
```

- This will deploy the Azure resources without the API server, allowing you to test the bot locally
- Wait for the deployment to finish

[!IMPORTANT] The tunnel must run in a separate terminal, because it needs to be running all the time.

```bash
# Log in once
devtunnel login

# Start the tunnel
make tunnel
```

[!NOTE] To override a specific configuration value, you can use environment variables. For example, to override the llm.fast.endpoint value, you can use the LLM__FAST__ENDPOINT variable:

```bash
LLM__FAST__ENDPOINT=https://xxx.openai.azure.com
```
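The double-underscore naming maps each segment of the variable name to one level of the YAML configuration. The following sketch reproduces that mapping with plain dictionaries. It mirrors the nested-delimiter convention of libraries such as pydantic-settings (`env_nested_delimiter`), but whether call-center-ai resolves overrides exactly this way is an assumption, and `apply_env_overrides` is a hypothetical helper. In practice you would pass `os.environ` instead of a literal dict.

```python
from typing import Any, Dict, Mapping


def apply_env_overrides(config: Dict[str, Any], environ: Mapping[str, str], delimiter: str = "__") -> Dict[str, Any]:
    """Override nested config keys from variables such as LLM__FAST__ENDPOINT (values stay strings)."""
    for name, value in environ.items():
        parts = [part.lower() for part in name.split(delimiter)]
        # Skip non-nested names, empty segments (e.g. "__FOO") and unknown top-level sections
        if len(parts) < 2 or not all(parts) or parts[0] not in config:
            continue
        node = config
        for key in parts[:-1]:
            child = node.setdefault(key, {})
            if not isinstance(child, dict):
                break  # the path collides with a scalar value: leave the config untouched
            node = child
        else:
            node[parts[-1]] = value
    return config


config = {"llm": {"fast": {"endpoint": "https://default.openai.azure.com"}}}
apply_env_overrides(config, {"LLM__FAST__ENDPOINT": "https://xxx.openai.azure.com", "__CF_FLAG": "1"})
print(config["llm"]["fast"]["endpoint"])  # https://xxx.openai.azure.com
```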

[!NOTE] A local.py script is also available to test the application without a phone call (i.e. without Communication Services). Run the script with:

```bash
python3 -m tests.local
```

```bash
make dev
```

- Code is automatically reloaded on file changes, no need to restart the server
- The API server is available at http://localhost:8080

Call recording is disabled by default. To enable it:

- Create a new container in the Azure Storage account (e.g. recordings); this is already done if you deployed the solution on Azure
- Update the feature flag recording_enabled in App Configuration to true

Training data is stored on AI Search to be retrieved by the bot, on demand.

Required index schema:

| Field Name | Type | Retrievable | Searchable | Dimensions | Vectorizer |
|---|---|---|---|---|---|
| answer | Edm.String | Yes | Yes | | |
| context | Edm.String | Yes | Yes | | |
| created_at | Edm.String | Yes | No | | |
| document_synthesis | Edm.String | Yes | Yes | | |
| file_path | Edm.String | Yes | No | | |
| id | Edm.String | Yes | No | | |
| question | Edm.String | Yes | Yes | | |
| vectors | Collection(Edm.Single) | No | Yes | 1536 | OpenAI ADA |
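For reference, here is the schema above written as a Python dict shaped like an Azure AI Search index definition, using the REST field attributes `name`, `type`, `retrievable`, `searchable`, `dimensions` and `vectorSearchProfile`. Three things are assumptions: treating `id` as the key, the index name, and the profile name. The `vectorSearch` section (algorithm, profile and ADA vectorizer) also has to be defined and is not shown.

```python
import json

# (name, type, retrievable, searchable) for the non-vector fields of the required schema
TEXT_FIELDS = [
    ("answer", "Edm.String", True, True),
    ("context", "Edm.String", True, True),
    ("created_at", "Edm.String", True, False),
    ("document_synthesis", "Edm.String", True, True),
    ("file_path", "Edm.String", True, False),
    ("id", "Edm.String", True, False),
    ("question", "Edm.String", True, True),
]


def build_index_definition(index_name: str, vector_profile: str) -> dict:
    """Return name and fields of an index definition; the vectorSearch section is not included."""
    fields = [
        {
            "name": name,
            "type": type_,
            "retrievable": retrievable,
            "searchable": searchable,
            "key": name == "id",  # assumption: id is the document key
        }
        for name, type_, retrievable, searchable in TEXT_FIELDS
    ]
    fields.append(
        {
            "name": "vectors",
            "type": "Collection(Edm.Single)",
            "retrievable": False,
            "searchable": True,
            "dimensions": 1536,  # ADA embedding size
            "vectorSearchProfile": vector_profile,  # must reference a profile using the ADA vectorizer
        }
    )
    return {"name": index_name, "fields": fields}


print(json.dumps(build_index_definition("trainings", "ada-profile"), indent=2))
```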

Software to fill the index is included in the Synthetic RAG Index repository.

The bot can be used in multiple languages. It can understand the language the user chose.

See the list of supported languages for the Text-to-Speech service.

```yaml
# config.yaml
conversation:
  initiate:
    lang:
      default_short_code: fr-FR
      availables:
        - pronunciations_en: ["French", "FR", "France"]
          short_code: fr-FR
          voice: fr-FR-DeniseNeural
        - pronunciations_en: ["Chinese", "ZH", "China"]
          short_code: zh-CN
          voice: zh-CN-XiaoqiuNeural
```

If you built and deployed an Azure Speech Custom Neural Voice (CNV), add the field custom_voice_endpoint_id to the language configuration:

```yaml
# config.yaml
conversation:
  initiate:
    lang:
      default_short_code: fr-FR
      availables:
        - pronunciations_en: ["French", "FR", "France"]
          short_code: fr-FR
          voice: xxx
          custom_voice_endpoint_id: xxx
```

Levels are defined for each category of Content Safety. The higher the score, the stricter the moderation, on a scale from 0 to 7. Moderation is applied to all bot data, including the web page and the conversation. Configure them in Azure OpenAI Content Filters.
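Going back to the lang.availables entries in the configuration examples above, the sketch below shows one hypothetical way to map a spoken language name to a configured voice, falling back to default_short_code. How the application itself detects or switches languages is not described here. The `Lang` class and `resolve_lang` function are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Lang:
    """Mirrors one entry of lang.availables (hypothetical class)."""

    short_code: str
    voice: str
    pronunciations_en: List[str]
    custom_voice_endpoint_id: Optional[str] = None


AVAILABLES = [
    Lang("fr-FR", "fr-FR-DeniseNeural", ["French", "FR", "France"]),
    Lang("zh-CN", "zh-CN-XiaoqiuNeural", ["Chinese", "ZH", "China"]),
]


def resolve_lang(spoken: str, availables: List[Lang], default_short_code: str) -> Lang:
    """Match a spoken name against pronunciations_en, case-insensitively, else use the default."""
    needle = spoken.strip().lower()
    for lang in availables:
        if any(needle == name.lower() for name in lang.pronunciations_en):
            return lang
    return next(lang for lang in availables if lang.short_code == default_short_code)


print(resolve_lang("china", AVAILABLES, "fr-FR").voice)  # zh-CN-XiaoqiuNeural
print(resolve_lang("Klingon", AVAILABLES, "fr-FR").short_code)  # fr-FR
```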

Customization of the data schema is fully supported. You can add or remove fields as needed, depending on the requirements.

By default, the schema is composed of:

- caller_email (email)
- caller_name (text)
- caller_phone (phone_number)

Values are validated to ensure the data format conforms to your schema. They can be either:

- datetime
- email
- phone_number (E164 format)
- text
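These checks can be approximated with the standard library. The sketch below makes two assumptions: an E.164 pattern (a `+` followed by at most 15 digits, not starting with 0) and a deliberately loose email pattern. The application may validate differently, and `validate_claim_value` is a hypothetical name.

```python
import re
from datetime import datetime

E164 = re.compile(r"\+[1-9]\d{1,14}")
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # deliberately loose


def validate_claim_value(field_type: str, value: str) -> bool:
    """Return True if value matches the claim field type (datetime, email, phone_number, text)."""
    if field_type == "datetime":
        try:
            datetime.fromisoformat(value)
        except ValueError:
            return False
        return True
    if field_type == "email":
        return EMAIL.fullmatch(value) is not None
    if field_type == "phone_number":
        return E164.fullmatch(value) is not None
    if field_type == "text":
        return isinstance(value, str)
    raise ValueError(f"unknown claim field type: {field_type}")


print(validate_claim_value("phone_number", "+33612345678"))  # True
print(validate_claim_value("phone_number", "0612345678"))  # False
```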

Finally, an optional description can be provided. The description must be short and meaningful; it will be passed to the LLM.

Default schema, for inbound calls, is defined in the configuration:

```yaml
# config.yaml
conversation:
  default_initiate:
    claim:
      - name: additional_notes
        type: text
        # description: xxx
      - name: device_info
        type: text
        # description: xxx
      - name: incident_datetime
        type: datetime
        # description: xxx
```

Claim schema can be customized for each call, by adding the claim field in the POST /call API call.

The objective is a description of what the bot will do during the call. It is used to give a context to the LLM. It should be short, meaningful, and written in English.

This approach is preferred over overriding the LLM prompt.

Default task, for inbound calls, is defined in the configuration:

```yaml
# config.yaml
conversation:
  initiate:
    task: |
      Help the customer with their insurance claim. Assistant requires data from the customer to fill the claim. The latest claim data will be given. Assistant role is not over until all the relevant data is gathered.
```

Task can be customized for each call, by adding the task field in the POST /call API call.

Conversation options are represented as features. They can be configured from App Configuration, without the need to redeploy or restart the application. Once a feature is updated, allow a delay of 60 seconds for the change to take effect.

| Name | Description | Type | Default |
|---|---|---|---|
| answer_hard_timeout_sec | The hard timeout for the bot answer in secs. | int | 60 |
| answer_soft_timeout_sec | The soft timeout for the bot answer in secs. | int | 30 |
| callback_timeout_hour | The timeout for a callback in hours. Set 0 to disable. | int | 3 |
| phone_silence_timeout_sec | Amount of silence in secs to trigger a warning message from the assistant. | int | 20 |
| recognition_retry_max | The maximum number of retries for voice recognition. Minimum of 1. | int | 3 |
| recognition_stt_complete_timeout_ms | The timeout for STT completion in milliseconds. | int | 100 |
| recording_enabled | Whether call recording is enabled. | bool | false |
| slow_llm_for_chat | Whether to use the slow LLM for chat. | bool | false |
| vad_cutoff_timeout_ms | The cutoff timeout for voice activity detection in milliseconds. | int | 250 |
| vad_silence_timeout_ms | Silence to trigger voice activity detection in milliseconds. | int | 500 |
| vad_threshold | The threshold for voice activity detection. Between 0.1 and 1. | float | 0.5 |
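The defaults and bounds in the table can be captured in a typed structure, so that invalid values are rejected early. The sketch assumes raw values arrive as strings, as key-value stores such as App Configuration return them, and that only `"true"` counts as a true boolean. `Features` and `parse_features` are hypothetical names, not the application's code.

```python
from dataclasses import dataclass, fields
from typing import Mapping


@dataclass(frozen=True)
class Features:
    """Conversation features with the defaults listed in the table (hypothetical class)."""

    answer_hard_timeout_sec: int = 60
    answer_soft_timeout_sec: int = 30
    callback_timeout_hour: int = 3  # 0 disables callbacks
    phone_silence_timeout_sec: int = 20
    recognition_retry_max: int = 3
    recognition_stt_complete_timeout_ms: int = 100
    recording_enabled: bool = False
    slow_llm_for_chat: bool = False
    vad_cutoff_timeout_ms: int = 250
    vad_silence_timeout_ms: int = 500
    vad_threshold: float = 0.5

    def __post_init__(self) -> None:
        # Bounds documented in the table
        if self.recognition_retry_max < 1:
            raise ValueError("recognition_retry_max must be at least 1")
        if not 0.1 <= self.vad_threshold <= 1:
            raise ValueError("vad_threshold must be between 0.1 and 1")


def parse_features(raw: Mapping[str, str]) -> Features:
    """Convert raw string values into typed features, keeping defaults for missing keys."""
    kwargs = {}
    for spec in fields(Features):
        if spec.name not in raw:
            continue  # keep the documented default
        value = raw[spec.name].strip()
        if spec.type is bool:
            kwargs[spec.name] = value.lower() == "true"
        else:
            kwargs[spec.name] = spec.type(value)
    return Features(**kwargs)


features = parse_features({"recording_enabled": "true", "vad_threshold": "0.7"})
print(features.recording_enabled, features.vad_threshold, features.answer_hard_timeout_sec)
# True 0.7 60
```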

To use Twilio for SMS, you need to create an account and get the following information:

- Account SID

- Auth Token

- Phone number

Then, add the following in the config.yaml file:

```yaml
# config.yaml
sms:
  mode: twilio
  twilio:
    account_sid: xxx
    auth_token: xxx
    phone_number: "+33612345678"
```

Note that prompt examples contain {xxx} placeholders. These placeholders are replaced by the bot with the corresponding data. For example, {bot_name} is internally replaced by the bot name. Be sure to write all the TTS prompts in English. This language is used as a pivot language for the conversation translation. All texts are referenced as lists, so users can have a different experience each time they call, making the conversation more engaging.
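Placeholder substitution and random selection among list entries can be sketched with `str.format` and `random.choice`. The templates here are shortened from the hello_tpl examples in the configuration, and `render_prompt` is a hypothetical helper, not the application's implementation.

```python
import random
from typing import Optional, Sequence

HELLO_TPL = [
    "Hello, I'm {bot_name}, from {bot_company}! I'm an IT support specialist.",
    "Hi, I'm {bot_name} from {bot_company}. I'm here to help.",
]


def render_prompt(templates: Sequence[str], rng: Optional[random.Random] = None, **values: str) -> str:
    """Pick one template variant at random and fill its {placeholders}.

    str.format raises KeyError for a missing placeholder, and literal braces must be doubled.
    """
    rng = rng or random.Random()
    return rng.choice(templates).format(**values)


text = render_prompt(HELLO_TPL, random.Random(42), bot_name="Amélie", bot_company="Contoso")
print(text)
```

Passing a seeded `random.Random` keeps the choice reproducible in tests, while the default picks a fresh variant on each call.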

```yaml
# config.yaml
prompts:
  tts:
    hello_tpl:
      - |
        Hello, I'm {bot_name}, from {bot_company}! I'm an IT support specialist.

        Here's how I work: when I'm working, you'll hear a little music; then, at the beep, it's your turn to speak. You can speak to me naturally, I'll understand.

        What's your problem?
      - |
        Hi, I'm {bot_name} from {bot_company}. I'm here to help.

        You'll hear music, then a beep. Speak naturally, I'll understand.

        What's the issue?
  llm:
    default_system_tpl: |
      Assistant is called {bot_name} and is in a call center for the company {bot_company} as an expert with 20 years of experience in IT service.

      # Context
      Today is {date}. Customer is calling from {phone_number}. Call center number is {bot_phone_number}.
    chat_system_tpl: |
      # Objective
      Provide internal IT support to employees. Assistant requires data from the employee to provide IT support. The assistant's role is not over until the issue is resolved or the request is fulfilled.

      # Rules
      - Answers in {default_lang}, even if the customer speaks another language
      - Cannot talk about any topic other than IT support
      - Is polite, helpful, and professional
      - Rephrase the employee's questions as statements and answer them
      - Use additional context to enhance the conversation with useful details
      - When the employee says a word and then spells out letters, this means that the word is written in the way the employee spelled it (e.g. "I work in Paris PARIS", "My name is John JOHN", "My email is Clemence CLEMENCE at gmail GMAIL dot com COM")
      - You work for {bot_company}, not someone else

      # Required employee data to be gathered by the assistant
      - Department
      - Description of the IT issue or request
      - Employee name
      - Location

      # General process to follow
      1. Gather information to know the employee's identity (e.g. name, department)
      2. Gather details about the IT issue or request to understand the situation (e.g. description, location)
      3. Provide initial troubleshooting steps or solutions
      4. Gather additional information if needed (e.g. error messages, screenshots)
      5. Be proactive and create reminders for follow-up or further assistance

      # Support status
      {claim}

      # Reminders
      {reminders}
```

The delay mainly comes from two things:

- Voice in and voice out are processed by Azure AI Speech, both are implemented in streaming mode but voice is not directly streamed to the LLM

- The LLM, more specifically the delay between the API call and the first inferred sentence, can be long (as sentences are sent one by one once they become available), and even longer if it hallucinates and returns empty answers (this happens regularly, and the application retries the call)
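Sending sentences one by one lets speech synthesis start before the full completion is available. Below is a naive sketch of that idea, which splits a stream of text chunks on `.`, `!` and `?`. The application's real splitter may differ, and this one would wrongly split abbreviations such as "e.g.". `sentences_from_stream` is a hypothetical name.

```python
import re
from typing import Iterable, Iterator

SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of text chunks."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence; the last one may still grow
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


stream = ["Hello! I can ", "help with that. Which ", "building are you in?"]
print(list(sentences_from_stream(stream)))
# ['Hello!', 'I can help with that.', 'Which building are you in?']
```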

For now, the only part you can meaningfully act on is the LLM. This can be achieved with a PTU (provisioned throughput unit) on Azure, or by using a less capable model like gpt-4o-mini (selected by default in the latest versions). With a PTU on Azure OpenAI, latency can be halved in some cases.

The application is natively connected to Azure Application Insights, so you can monitor the response time and see where the time is spent. This is a great start to identify the bottlenecks.

Feel free to raise an issue or propose a PR if you have any idea to optimize the response delay.

Enhance the LLM’s accuracy and domain adaptation by integrating historical data from human-run call centers. Before proceeding, ensure compliance with data privacy regulations, internal security standards, and Responsible AI principles. Consider the following steps:

- Aggregate authentic data sources: Collect voice recordings, call transcripts, and chat logs from previous human-managed interactions to provide the LLM with realistic training material.

- Preprocess and anonymize data: Remove sensitive information (AI Language Personally Identifiable Information detection), including personal identifiers or confidential details, to preserve user privacy, meet compliance, and align with Responsible AI guidelines.

- Perform iterative fine-tuning: Continuously refine the model using the curated dataset (AI Foundry Fine-tuning), allowing it to learn industry-specific terminology, preferred conversation styles, and problem-resolution approaches.

- Validate improvements: Test the updated model against sample scenarios and measure key performance indicators (e.g. user satisfaction, call duration, resolution rate) to confirm that adjustments have led to meaningful enhancements.

- Monitor, iterate, and A/B test: Regularly reassess the model’s performance, integrate newly gathered data, and apply further fine-tuning as needed. Leverage built-in feature configurations to A/B test (App Configuration Experimentation) different versions of the model, ensuring responsible, data-driven decisions and continuous optimization over time.
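To illustrate the preprocessing step, the sketch below turns stored messages into one line of the chat fine-tuning JSONL format used by OpenAI and Azure OpenAI (`{"messages": [{"role": ..., "content": ...}]}`). The messages use the persona, action and content fields shown in the data extract earlier. The phone-number regex is only a naive stand-in for a PII detection service, keeping only `talk` actions is an assumption, and `to_finetuning_line` is a hypothetical name.

```python
import json
import re
from typing import Dict, Iterable, List

PHONE = re.compile(r"\+?\d[\d\s.-]{7,}\d")  # naive; use a PII detection service in practice
ROLE_BY_PERSONA = {"human": "user", "assistant": "assistant"}


def to_finetuning_line(system_prompt: str, messages: Iterable[Dict[str, str]]) -> str:
    """Convert stored messages into one chat fine-tuning JSONL line, masking phone numbers."""
    chat: List[Dict[str, str]] = [{"role": "system", "content": system_prompt}]
    for message in messages:
        role = ROLE_BY_PERSONA.get(message.get("persona", ""))
        # Assumption: only "talk" actions are dialogue worth training on
        if role is None or message.get("action") != "talk":
            continue
        chat.append({"role": role, "content": PHONE.sub("[PHONE]", message["content"])})
    return json.dumps({"messages": chat}, ensure_ascii=False)


messages = [
    {"action": "talk", "persona": "human", "content": "Call me back on +33 6 12 34 56 78."},
    {"action": "talk", "persona": "assistant", "content": "Sure, I will call you back tomorrow."},
]
line = to_finetuning_line("You are an insurance assistant.", messages)
print(line)
```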

The application sends traces and metrics to Azure Application Insights. You can monitor the application from the Azure portal, or by using the API.

This includes application behavior, database queries, and external service calls, plus LLM metrics (latency, token usage, prompt content, raw response) from OpenLLMetry, following the semantic conventions for OpenAI operations.

Additionally, custom metrics (viewable in Application Insights > Metrics) are published, notably:

- call.aec.droped, number of times the echo cancellation dropped the voice completely.
- call.aec.missed, number of times the echo cancellation failed to remove the echo in time.
- call.answer.latency, time between the end of the user voice and the start of the bot voice.
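The application publishes call.answer.latency itself. When analyzing raw timings exported from calls, percentiles usually say more than averages, because a few slow LLM answers dominate the experience. This stdlib sketch assumes hypothetical (user_voice_end, bot_voice_start) pairs in milliseconds.

```python
from statistics import quantiles
from typing import List, Sequence, Tuple


def answer_latencies_ms(turns: Sequence[Tuple[int, int]]) -> List[int]:
    """Latency per turn: bot voice start minus end of user voice, in ms."""
    return [bot_voice_start - user_voice_end for user_voice_end, bot_voice_start in turns]


def p50_p95(values: Sequence[float]) -> Tuple[float, float]:
    """Median and 95th percentile (inclusive method); needs at least two values."""
    cuts = quantiles(values, n=100, method="inclusive")
    return cuts[49], cuts[94]


# Hypothetical (user_voice_end, bot_voice_start) timestamps in ms for four turns
turns = [(0, 800), (10_000, 11_200), (20_000, 21_000), (30_000, 32_000)]
latencies = answer_latencies_ms(turns)
print(latencies, p50_p95(latencies))
# [800, 1200, 1000, 2000] (1100.0, 1880.0)
```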

Costs are estimated for a monthly usage of 1000 calls of 10 minutes each, as of 2024-12-10, in USD. Prices are subject to change.

[!NOTE] For production usage, it is recommended to upgrade to SKUs with vNET integration and private endpoints. This can notably increase the costs.

This totals $720.07 /month, $0.12 /hour, with the following breakdown:

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| West Europe | Audio Streaming | $0.004 /minute | $40 | |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | GPT-4o mini global | $0.15 /1M input tokens | $35.25 | 8k tokens for conversation history, 3750 tokens for RAG, each participant talks every 15s |
| Sweden Central | GPT-4o mini global | $0.60 /1M output tokens | $1.4 | 400 tokens for each response incl. tools, each participant talks every 15s |
| Sweden Central | GPT-4o global | $2.50 /1M input tokens | $10 | 4k tokens for each conversation, to get insights |
| Sweden Central | GPT-4o global | $10 /1M output tokens | $10 | 1k tokens for each conversation, to get insights |
| Sweden Central | text-embedding-3-large | $0.00013 /1k tokens | $2.08 | 1 search or 400 tokens for each message, each participant talks every 15s |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | Serverless vCPU | $0.000024 /sec | $128.56 | Avg of 2 replicas with 1 vCPU |
| Sweden Central | Serverless memory (average of 2 replicas) | $0.000003 /sec | $32.14 | Avg of 2 replicas with 2GB |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | Standard C0 | $40.15 /month | $40.15 | Has 250MB of memory, should be upgraded for more intensive usage |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | Basic | $73.73 /month | $73.73 | Has 15GB of storage /index, should be upgraded for big datasets |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| West Europe | Speech-to-text real-time | $1 /hour | $83.33 | Each participant talks every 15s |
| West Europe | Text-to-speech standard | $15 /1M characters | $69.23 | 300 tokens for each response, 1.3 tokens /word in English, each participant talks every 15s |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | Multi-region write RU/s /region | $11.68 /100 RU/s | $233.6 | Avg of 1k RU/s on 2 regions |
| Sweden Central | Transactional storage | $0.25 /GB | $0.5 | 2GB of storage, should be upgraded if more history is needed |

Not included above:

[!NOTE] Azure Monitor costs shouldn't be considered optional, as monitoring is a key part of maintaining a business-critical application and high-quality service for users.

Optional costs totaling $343.02 /month, with the following breakdown:

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| West Europe | Call recording | $0.002 /minute | $20 | |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | text-embedding-3-large | $0.00013 /1k tokens | $0.52 | 10k PDF pages with 400 tokens each, for indexing |

| Region | Metric | Cost | Total (monthly $) | Note |
|---|---|---|---|---|
| Sweden Central | Basic logs ingestion | $0.645 /GB | $322.5 | 500GB of logs with sampling enabled |
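Several line items in the cost tables follow directly from the stated assumptions (1000 calls of 10 minutes, 2 replicas, 1k RU/s on 2 regions, and so on). The sketch below recomputes them from the unit prices. The 31-day month is an inference that matches the container rows. Rows whose notes depend on talk-time estimates are left out: speech, GPT-4o mini, and embeddings for search. Redis and AI Search are also left out, as flat monthly prices.

```python
SECONDS_PER_MONTH = 31 * 24 * 3600  # assumption: 31-day month, matches the container rows
CALLS, MINUTES_PER_CALL = 1000, 10
call_minutes = CALLS * MINUTES_PER_CALL

line_items = {
    "Audio streaming": call_minutes * 0.004,
    "Call recording": call_minutes * 0.002,
    "GPT-4o insights input": CALLS * 4_000 / 1e6 * 2.50,  # 4k tokens per conversation
    "GPT-4o insights output": CALLS * 1_000 / 1e6 * 10,  # 1k tokens per conversation
    "Container vCPU": 0.000024 * 2 * 1 * SECONDS_PER_MONTH,  # 2 replicas x 1 vCPU
    "Container memory": 0.000003 * 2 * 2 * SECONDS_PER_MONTH,  # 2 replicas x 2 GB
    "Cosmos DB RU/s": 11.68 * (1_000 / 100) * 2,  # 1k RU/s on 2 regions
    "Cosmos DB storage": 0.25 * 2,  # 2 GB
    "Log ingestion": 0.645 * 500,  # 500 GB
    "Indexing embeddings": 10_000 * 400 / 1_000 * 0.00013,  # 10k pages x 400 tokens
}

for name, monthly in line_items.items():
    print(f"{name}: ${monthly:,.2f}")
```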

Quality:

- [x] Unit and integration tests for persistence layer

- [ ] Complete unit and integration tests coverage

Reliability:

- [x] Reproducible builds

- [x] Traces and telemetry

- [ ] Operation runbooks for common issues

- [ ] Proper dashboarding in Azure Application Insights (deployed with the IaC)

Maintainability:

- [x] Automated and required static code checks

- [ ] Decouple assistant from the insights in a separate service

- [ ] Peer review to limit the bus factor

Resiliency:

- [x] Infrastructure as Code (IaC)

- [ ] Multi-region deployment

- [ ] Reproducible performance tests

Security:

- [x] CI builds attestations

- [x] CodeQL static code checks

- [ ] GitOps for deployments

- [ ] Private networking

- [ ] Production SKUs allowing vNET integration

- [ ] Red team exercises

Responsible AI:

- [x] Harmful content detection

- [ ] Grounding detection with Content Safety

- [ ] Social impact assessment

At the time of development, no LLM framework was available to handle all of these features: streaming capability with multi-tools, backup models on availability issues, and callback mechanisms in the triggered tools. So, the OpenAI SDK is used directly, and some algorithms are implemented to handle reliability.
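Here is a minimal sketch of the kind of reliability logic described above. It retries a model when it fails or returns an empty answer, then falls back to a backup model. The callables stand in for OpenAI SDK calls, the names are hypothetical, and there is no backoff. This is not the project's own algorithm.

```python
from typing import Callable, Sequence


class EmptyAnswerError(Exception):
    """Raised when a model returns a blank completion."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[Callable[[str], str]],
    retries_per_model: int = 2,
) -> str:
    """Try each model in order; retry errors and empty answers before falling back."""
    last_error: Exception = RuntimeError("no model configured")
    for model in models:
        for _ in range(retries_per_model):
            try:
                answer = model(prompt)
            except Exception as error:  # e.g. timeout or availability issue
                last_error = error
                continue
            if answer.strip():
                return answer
            last_error = EmptyAnswerError("empty completion")
    raise last_error


def always_empty(prompt: str) -> str:
    return "   "  # simulates the empty answers mentioned in the latency section


def backup(prompt: str) -> str:
    return f"Answer to: {prompt}"


print(complete_with_fallback("Where is my claim?", [always_empty, backup]))
# Answer to: Where is my claim?
```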

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for call-center-ai

Similar Open Source Tools

call-center-ai

Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

moatless-tools

Moatless Tools is a hobby project focused on experimenting with using Large Language Models (LLMs) to edit code in large existing codebases. The project aims to build tools that insert the right context into prompts and handle responses effectively. It utilizes an agentic loop functioning as a finite state machine to transition between states like Search, Identify, PlanToCode, ClarifyChange, and EditCode for code editing tasks.

marqo

Marqo is more than a vector database, it's an end-to-end vector search engine for both text and images. Vector generation, storage and retrieval are handled out of the box through a single API. No need to bring your own embeddings.

LEADS

LEADS is a lightweight embedded assisted driving system designed to simplify the development of instrumentation, control, and analysis systems for racing cars. It is written in Python and C/C++ with impressive performance. The system is customizable and provides abstract layers for component rearrangement. It supports hardware components like Raspberry Pi and Arduino, and can adapt to various hardware types. LEADS offers a modular structure with a focus on flexibility and lightweight design. It includes robust safety features, modern GUI design with dark mode support, high performance on different platforms, and powerful ESC systems for traction control and braking. The system also supports real-time data sharing, live video streaming, and AI-enhanced data analysis for driver training. LEADS VeC Remote Analyst enables transparency between the driver and pit crew, allowing real-time data sharing and analysis. The system is designed to be user-friendly, adaptable, and efficient for racing car development.

allms

allms is a versatile and powerful library designed to streamline the process of querying Large Language Models (LLMs). Developed by Allegro engineers, it simplifies working with LLM applications by providing a user-friendly interface, asynchronous querying, automatic retrying mechanism, error handling, and output parsing. It supports various LLM families hosted on different platforms like OpenAI, Google, Azure, and GCP. The library offers features for configuring endpoint credentials, batch querying with symbolic variables, and forcing structured output format. It also provides documentation, quickstart guides, and instructions for local development, testing, updating documentation, and making new releases.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

baml

BAML is a config file format for declaring LLM functions that you can then use in TypeScript or Python. With BAML you can classify or extract any structured data using Anthropic, OpenAI, or local models (using Ollama).

Resources:
* [Discord Community](https://discord.gg/boundaryml)
* [Follow us on Twitter](https://twitter.com/boundaryml)
* Discord Office Hours: come ask us anything! We hold office hours most days (9am to 12pm PST).
* Documentation: Learn BAML
* Documentation: BAML Syntax Reference
* Documentation: Prompt engineering tips
* Boundary Studio: observability and more

Starter projects:
* BAML + NextJS 14
* BAML + FastAPI + Streaming

Motivation: calling LLMs in your code is frustrating:
* your code uses types everywhere: classes, enums, and arrays
* but LLMs speak English, not types

BAML makes calling LLMs easy by taking a type-first approach that lives fully in your codebase:
1. Define what your LLM output type is in a .baml file, with rich syntax to describe any field (even enum values).
2. Declare your prompt in the .baml config using those types.
3. Add additional LLM config like retries or redundancy.
4. Transpile the .baml files to a callable Python or TS function with a type-safe interface (the VSCode extension does this for you automatically).

We were inspired by similar patterns for type safety: protobuf and OpenAPI for RPCs, Prisma and SQLAlchemy for databases. BAML guarantees type safety for LLMs and comes with tools to give you a great developer experience. Its Flexible Parsing works without additional LLM calls.
| BAML Tooling | Capabilities |
| --- | --- |
| BAML Compiler | Transpiles BAML code to a native Python / TypeScript library (you only need it for development, never for releases). Works on Mac, Windows, Linux. |
| VSCode Extension | Syntax highlighting for BAML files; real-time prompt preview; testing UI |
| Boundary Studio (not open source) | Type-safe observability; labeling |
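The type-first idea behind BAML (declare the output type, then parse the model's reply into it) can be illustrated in plain Python. This is a minimal sketch under stated assumptions, not BAML syntax or BAML's generated client: `Sentiment`, `Review`, and `parse_review` are hypothetical names, and the lenient "find the outermost braces" step is a simple stand-in, not BAML's Flexible Parsing algorithm.

```python
import json
from dataclasses import dataclass
from enum import Enum


class Sentiment(Enum):
    # Hypothetical enum playing the role of an enum declared in a .baml file.
    POSITIVE = "positive"
    NEGATIVE = "negative"
    NEUTRAL = "neutral"


@dataclass
class Review:
    # Hypothetical output type the LLM reply must conform to.
    product: str
    sentiment: Sentiment
    tags: list[str]


def parse_review(raw: str) -> Review:
    """Parse an LLM's JSON reply into a typed Review, tolerating surrounding prose."""
    # Models often wrap JSON in chatter or code fences; keep the outermost {...} span.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    # Case-insensitive enum lookup; an unknown label raises ValueError.
    sentiment = Sentiment(str(data["sentiment"]).lower())
    tags = data.get("tags", [])
    if not isinstance(tags, list) or not all(isinstance(t, str) for t in tags):
        raise ValueError("tags must be a list of strings")
    return Review(product=str(data["product"]), sentiment=sentiment, tags=tags)
```

The caller gets either a fully typed `Review` or an exception, never a loosely shaped dict. That is the guarantee a type-first interface is meant to provide.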

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙 LLaMA/LLaVA models (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLMs (Large Language Models) in your application with LLamaSharp.

AgentLab

AgentLab is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides features for developing and evaluating agents on various benchmarks supported by BrowserGym. The framework allows for large-scale parallel agent experiments using Ray, building blocks for creating agents over BrowserGym, and a unified LLM API for OpenRouter, OpenAI, Azure, or self-hosted models using TGI. AgentLab also offers reproducibility features and a unified leaderboard, and supports multiple benchmarks like WebArena, WorkArena, WebLinx, VisualWebArena, AssistantBench, GAIA, Mind2Web-live, and MiniWoB.
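The pattern of running an agent × task × seed grid in parallel and ranking agents on a leaderboard can be sketched without any framework. This sketch swaps in the standard library's `ThreadPoolExecutor` where AgentLab uses Ray, and it is not AgentLab's API. `run_grid`, `leaderboard`, and the `episode_fn(agent, task, seed)` callback returning a dict with `agent` and `success` keys are all hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_grid(episode_fn, agents, tasks, seeds, max_workers=4):
    """Run episode_fn(agent, task, seed) for every combination, in parallel."""
    jobs = list(product(agents, tasks, seeds))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as jobs.
        return list(pool.map(lambda job: episode_fn(*job), jobs))


def leaderboard(results):
    """Rank agents by mean success rate over all of their episodes."""
    outcomes = defaultdict(list)
    for r in results:
        outcomes[r["agent"]].append(float(r["success"]))
    rates = {agent: sum(v) / len(v) for agent, v in outcomes.items()}
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)
```

Fixing the seed list makes each grid repeatable, which is the same concern AgentLab's reproducibility features address at a larger scale.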

evalverse

Evalverse is an open-source project designed to support Large Language Model (LLM) evaluation needs. It provides a standardized and user-friendly solution for processing and managing LLM evaluations, catering to AI research engineers and scientists. Evalverse supports various evaluation methods, insightful reports, and no-code evaluation processes. Users can access unified evaluation with submodules, request evaluations without code via Slack bot, and obtain comprehensive reports with scores, rankings, and visuals. The tool allows for easy comparison of scores across different models and swift addition of new evaluation tools.

PostTrainBench

PostTrainBench is a benchmark designed to measure the ability of command-line interface (CLI) agents to post-train pre-trained large language models (LLMs). The agents are tasked with improving the performance of a base LLM on a given benchmark using an evaluation script and 10 hours on an H100 GPU. The benchmark scores are computed after post-training, and the setup evaluates an agent's capability to conduct AI research and development. The repository provides a platform for collaborative contributions to expand tasks and agent scaffolds, with the potential for co-authorship on research papers.

mcp

The Snowflake Cortex AI Model Context Protocol (MCP) Server provides tooling for Snowflake Cortex AI, object management, and SQL orchestration. It supports capabilities such as Cortex Search, Cortex Analyst, Cortex Agent, Object Management, SQL Execution, and Semantic View Querying. Users can connect to Snowflake using various authentication methods like username/password, key pair, OAuth, SSO, and MFA. The server is client-agnostic and works with MCP Clients like Claude Desktop, Cursor, fast-agent, Microsoft Visual Studio Code + GitHub Copilot, and Codex. It includes tools for Object Management (creating, dropping, describing, listing objects), SQL Execution (executing SQL statements), and Semantic View Querying (discovering, querying Semantic Views). Troubleshooting can be done using the MCP Inspector tool.
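MCP clients and servers exchange JSON-RPC 2.0 messages. `tools/list` and `tools/call` are the protocol's methods for discovering and invoking tools. The sketch below only builds those request messages. It omits the initialization handshake and the transport (stdio or HTTP) that a real client needs. The tool name `run_query` and its `statement` argument are hypothetical, not the Snowflake server's actual tool names.

```python
import json
from itertools import count
from typing import Optional

# Monotonically increasing request ids, so responses can be matched to requests.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request message."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def list_tools() -> str:
    """MCP request asking a server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: dict) -> str:
    """MCP request invoking one tool with JSON arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})
```

Because any MCP client sends these same message shapes, a server like this one can be client-agnostic.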

OneKE

OneKE is a flexible dockerized system for schema-guided knowledge extraction, capable of extracting information from the web and raw PDF books across multiple domains like science and news. It employs a collaborative multi-agent approach and includes a user-customizable knowledge base to enable tailored extraction. OneKE supports a range of IE tasks, data sources, LLMs, and extraction methods, and lets users configure the knowledge base. Users can start with examples using YAML, Python, or the Web UI, and perform tasks like Named Entity Recognition, Relation Extraction, Event Extraction, Triple Extraction, and Open Domain IE. The tool supports different source formats like Plain Text, HTML, PDF, Word, TXT, and JSON files. Users can choose from various extraction models like OpenAI, DeepSeek, LLaMA, Qwen, ChatGLM, MiniCPM, and OneKE for information extraction tasks. Extraction methods include Schema Agent, Extraction Agent, and Reflection Agent. The tool also supports schema repository and case repository management, and provides solutions for network issues. Contributors to the project include Ningyu Zhang, Haofen Wang, Yujie Luo, Xiangyuan Ru, Kangwei Liu, Lin Yuan, Mengshu Sun, Lei Liang, Zhiqiang Zhang, Jun Zhou, Lanning Wei, Da Zheng, and Huajun Chen.

AgentBench

AgentBench is a benchmark designed to evaluate Large Language Models (LLMs) as autonomous agents in various environments. It includes 8 distinct environments such as Operating System, Database, Knowledge Graph, Digital Card Game, and Lateral Thinking Puzzles. The tool provides a comprehensive evaluation of LLMs' ability to operate as agents by offering Dev and Test sets for each environment. Users can quickly start using the tool by following the provided steps, configuring the agent, starting task servers, and assigning tasks. AgentBench aims to bridge the gap between LLMs' proficiency as agents and their practical usability.

LLM-Pruner

LLM-Pruner is a tool for structural pruning of large language models, allowing task-agnostic compression while retaining multi-task solving ability. It supports automatic structural pruning of various LLMs with minimal human effort. The tool is efficient, requiring only 3 minutes for pruning and 3 hours for post-training. Supported LLMs include Llama-3.1, Llama-3, Llama-2, LLaMA, BLOOM, Vicuna, and Baichuan. Updates include support for models that use grouped-query attention (GQA) and for BLOOM, as well as fine-tuning results that achieve high accuracy. The tool provides step-by-step instructions for pruning, post-training, and evaluation, along with a Gradio interface for text generation. Limitations include compressed models sometimes generating repetitive or nonsensical tokens, and some models requiring manual operations.
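Structural pruning removes whole units rather than individual weights. Coupled weights must therefore be removed together so that the remaining layers still fit. The sketch below shows this on one two-layer MLP block in pure Python. Dropping hidden unit `i` deletes row `i` of the input weights, bias `i`, and column `i` of the output weights. It is a simplified illustration, not LLM-Pruner's method. LLM-Pruner discovers dependency groups automatically and scores them with gradient-based importance, whereas this sketch uses plain weight magnitude. `prune_hidden_units` and `mlp_forward` are hypothetical names.

```python
import math


def prune_hidden_units(w_in, b_in, w_out, keep_ratio):
    """Structurally prune hidden units of a two-layer MLP.

    w_in:  hidden x d_in weights (one row per hidden unit)
    b_in:  hidden biases
    w_out: d_out x hidden weights (one column per hidden unit)
    """
    hidden = len(w_in)
    # Magnitude importance per unit (stand-in for gradient-based scores).
    scores = [
        math.sqrt(sum(x * x for x in w_in[i]) + sum(row[i] ** 2 for row in w_out))
        for i in range(hidden)
    ]
    n_keep = max(1, round(hidden * keep_ratio))
    # Keep the highest-scoring units, preserving their original order.
    keep = sorted(sorted(range(hidden), key=lambda i: scores[i], reverse=True)[:n_keep])
    new_w_in = [w_in[i][:] for i in keep]
    new_b_in = [b_in[i] for i in keep]
    new_w_out = [[row[i] for i in keep] for row in w_out]
    return new_w_in, new_b_in, new_w_out, keep


def mlp_forward(x, w_in, b_in, w_out):
    """ReLU MLP forward pass, used to check that the pruned block still runs."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w_in, b_in)]
    return [sum(w * hi for w, hi in zip(row, h)) for row in w_out]
```

The pruned block keeps its input and output widths and only shrinks the hidden dimension. That is why structurally pruned models can run without sparse kernels, and why a short post-training phase is used to recover accuracy.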

For similar tasks

call-center-ai

Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.

gemini-ai

Gemini AI is a Ruby Gem designed to provide low-level access to Google's generative AI services through Vertex AI, Generative Language API, or AI Studio. It allows users to interact with Gemini to build abstractions on top of it. The Gem provides functionality for tasks such as generating content, embeddings, predictions, and more. It supports streaming, server-sent events, safety settings, system instructions, JSON format responses, and tools (functions) calling. Its documentation also covers error handling, development setup, publishing to RubyGems, updating the README, and resources for further learning.

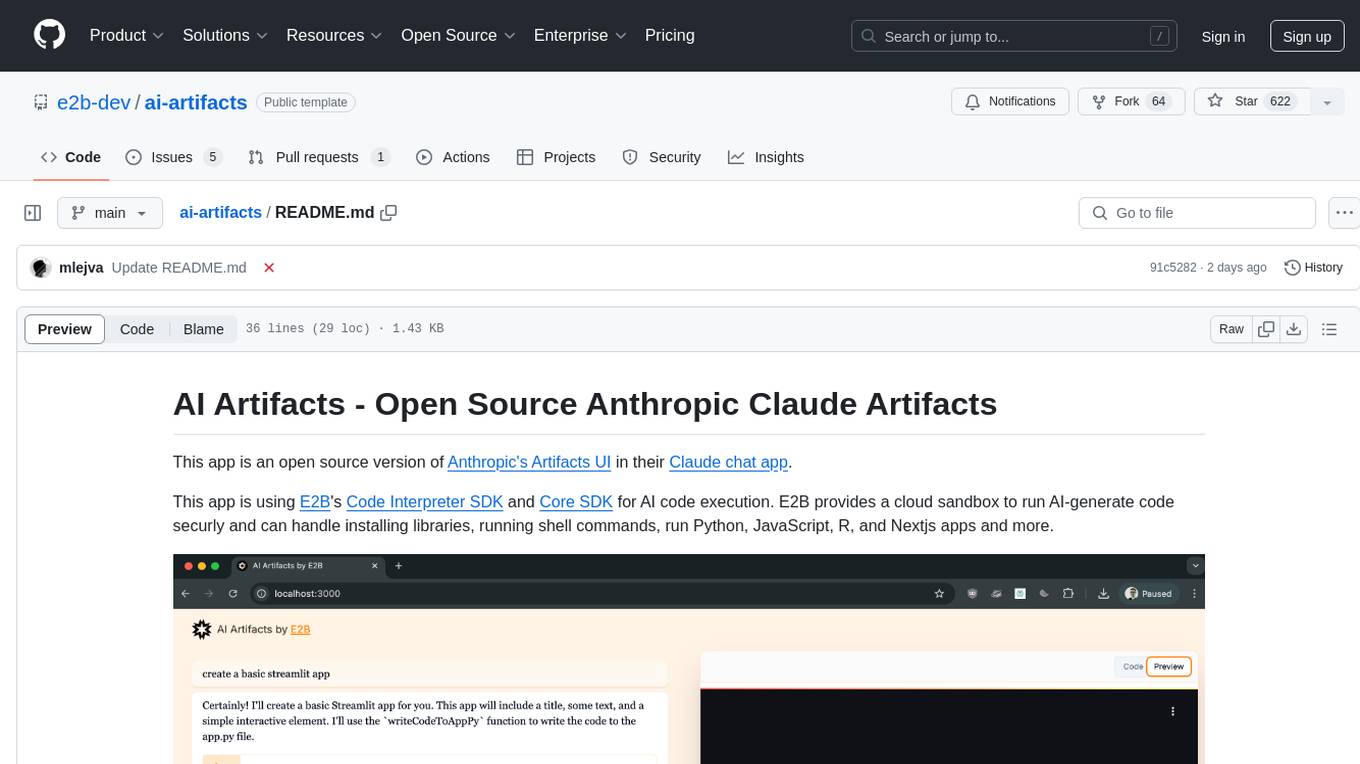

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It uses E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, and R, including Python in a Jupyter notebook, as well as Next.js and Streamlit apps. It also integrates with the Vercel AI SDK for tool calling and streaming responses from the model.

tambo

tambo ai is a React library that simplifies the process of building AI assistants and agents in React by handling thread management, state persistence, streaming responses, AI orchestration, and providing a compatible React UI library. It eliminates React boilerplate for AI features, allowing developers to focus on creating exceptional user experiences with clean React hooks that seamlessly integrate with their codebase.

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.
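The validate-and-retry loop that structured-output libraries like this one manage can be sketched as follows. The sketch is in Python rather than Go, and it is a generic pattern, not instructor-go's API. `extract_with_validation`, the `complete(prompt) -> str` model callback, and the `validate(dict) -> list` checker are hypothetical. The key step is that validation errors are fed back into the next prompt so the model can correct itself.

```python
import json
from typing import Callable


def extract_with_validation(
    complete: Callable[[str], str],
    prompt: str,
    validate: Callable[[dict], list],
    max_attempts: int = 3,
) -> dict:
    """Ask a model for JSON, validate it, and re-prompt with the errors if invalid."""
    current_prompt = prompt
    errors: list = []
    for _ in range(max_attempts):
        reply = complete(current_prompt)
        try:
            data = json.loads(reply)
        except json.JSONDecodeError as exc:
            errors = [f"invalid JSON: {exc.msg}"]
        else:
            errors = validate(data) if isinstance(data, dict) else ["expected a JSON object"]
        if not errors:
            return data
        # Append the problems so the next attempt can fix them.
        current_prompt = prompt + "\nFix these problems: " + "; ".join(errors)
    raise ValueError(f"no valid output after {max_attempts} attempts: {errors}")
```

In a typed language, the `validate` step is typically derived from the target struct's schema, which is the role the `jsonschema` struct tags play in the library described above.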

dive

Dive is an AI toolkit for Go that enables the creation of specialized teams of AI agents and seamless integration with leading LLMs. It offers a CLI and APIs for easy integration, with features such as specialized agents, hierarchical agent systems, declarative configuration, multiple LLM support through a unified provider interface, extended reasoning, Model Context Protocol support, advanced model settings, a tool system with annotations and custom tool creation, streaming, thread management, a confirmation system, deep research, and semantic diff analysis. Dive also supports various verified models. The toolkit is designed for developers to build AI-powered applications with rich agent capabilities and tool integrations.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

For similar jobs

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

geti-sdk

The Intel® Geti™ SDK is a Python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.
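Splitting a model across several GPUs usually means assigning contiguous blocks of layers to each device in proportion to its memory. The sketch below does this with a largest-remainder split. It is a generic illustration, not Booster's actual scheduling logic. `split_layers` is a hypothetical name, and the assumption that all layers are the same size is a simplification.

```python
def split_layers(n_layers: int, device_memory_gb: list[float]) -> list[range]:
    """Assign contiguous layer ranges to devices in proportion to their memory."""
    total = sum(device_memory_gb)
    shares = [n_layers * m / total for m in device_memory_gb]
    counts = [int(s) for s in shares]  # round every share down first
    leftover = n_layers - sum(counts)
    # Give the layers lost to rounding to the devices with the largest remainders.
    by_remainder = sorted(range(len(shares)), key=lambda i: shares[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    ranges, start = [], 0
    for c in counts:
        ranges.append(range(start, start + c))
        start += c
    return ranges
```

For example, 33 layers over devices with 24, 12, and 12 GB give ranges of 17, 8, and 8 layers. Activations then flow from one device to the next at each range boundary.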

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example code and benchmark scripts. Users first convert models (for example, from Hugging Face format) into the format xFasterTransformer uses, then use the APIs for tasks like encoding input prompts, generating token IDs, and serving inference requests. The tool supports various data types and models, and can run in single-rank or multi-rank mode using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

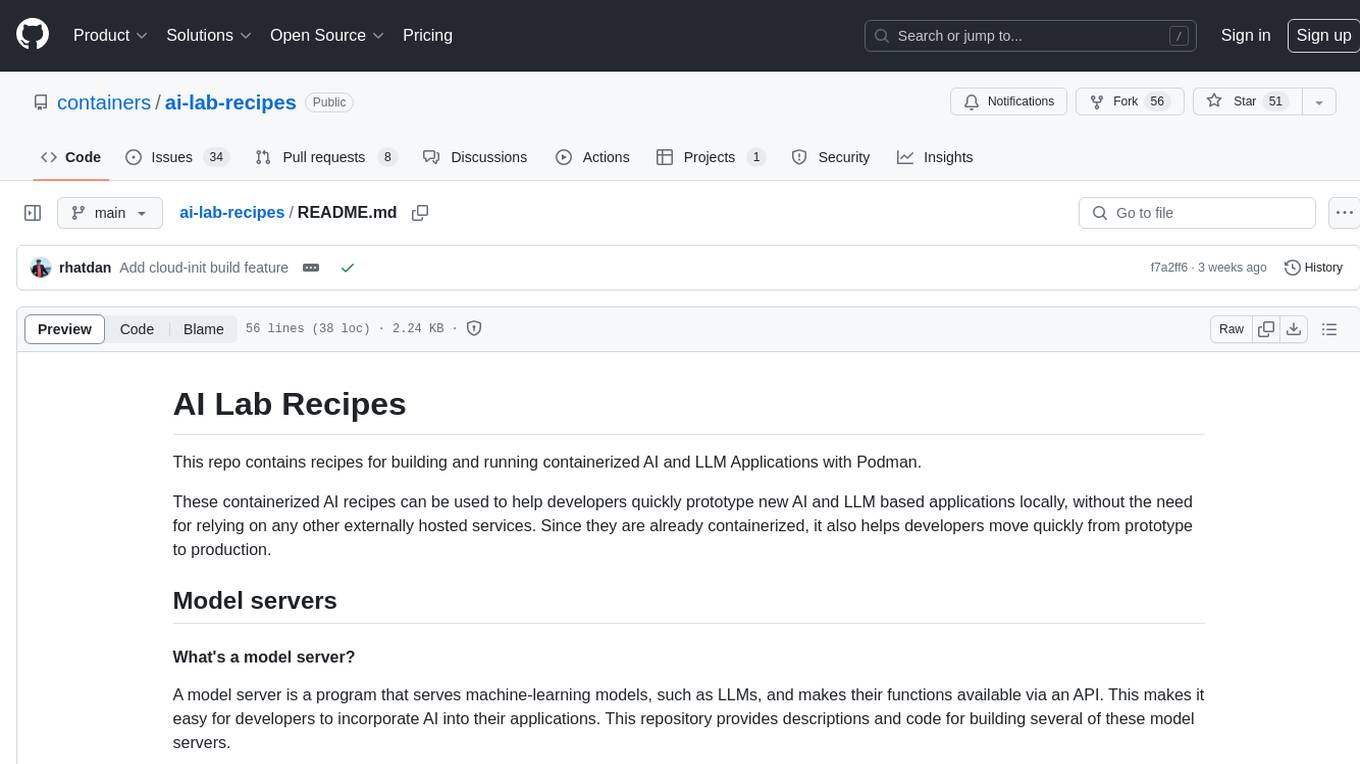

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, and object detection. Images for sample applications and models are available on `quay.io`, and bootable containers are supported for AI training on a Linux OS.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.