pgai

A suite of tools to develop RAG, semantic search, and other AI applications more easily with PostgreSQL

Stars: 5734

pgai simplifies the process of building search and Retrieval Augmented Generation (RAG) AI applications with PostgreSQL. It brings embedding and generation AI models closer to the database, allowing users to create embeddings, retrieve LLM chat completions, reason over data for classification, summarization, and data enrichment directly from within PostgreSQL in a SQL query. The tool requires an OpenAI API key and a PostgreSQL client to enable AI functionality in the database. Users can install pgai from source, run it in a pre-built Docker container, or enable it in a Timescale Cloud service. The tool provides functions to handle API keys using psql or Python, and offers various AI functionalities like tokenizing, detokenizing, embedding, chat completion, and content moderation.

README:

A Python library that transforms PostgreSQL into a robust, production-ready retrieval engine for RAG and Agentic applications.

- 🔄 Automatically create and synchronize vector embeddings from PostgreSQL data and S3 documents. Embeddings update automatically as data changes.

- 🤖 Semantic Catalog: Enable natural language to SQL with AI. Automatically generate database descriptions and power text-to-SQL for agentic applications.

- 🔍 Powerful vector and semantic search with pgvector and pgvectorscale.

- 🛡️ Production-ready out-of-the-box: Supports batch processing for efficient embedding generation, with built-in handling for model failures, rate limits, and latency spikes.

- 🐘 Works with any PostgreSQL database, including Timescale Cloud, Amazon RDS, Supabase and more.

Basic Architecture: The system consists of an application you write, a PostgreSQL database, and stateless vectorizer workers. The application defines a vectorizer configuration to embed data from sources like PostgreSQL or S3. The workers read this configuration, process the queued data into embeddings and chunked text, and write the results back to the database. The application then queries this data to power RAG and semantic search.

The key strength of this architecture lies in its resilience: data modifications made by the application are decoupled from the embedding process, ensuring that failures in the embedding service do not affect the core data operations.
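The decoupling described above can be illustrated with a toy, in-memory model. This is a minimal sketch, not pgai's implementation: `MiniStore`, `EmbeddingFailure`, and the embedding functions are hypothetical names, and a Python deque stands in for the work queue that the vectorizer workers process. The point it shows is that the write path never calls the embedding service, so an outage only delays embeddings.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class EmbeddingFailure(Exception):
    """Hypothetical error raised when the embedding endpoint is unavailable."""


class MiniStore:
    """Toy model of a source table, a work queue, and an embedding table."""

    def __init__(self) -> None:
        self.rows: Dict[int, str] = {}
        self.embeddings: Dict[int, List[float]] = {}
        self.queue: Deque[int] = deque()
        self._next_id = 1

    def insert(self, text: str) -> int:
        # Write path: store the row and enqueue its id. No embedding call here,
        # so the insert cannot fail because the embedding service is down.
        row_id = self._next_id
        self._next_id += 1
        self.rows[row_id] = text
        self.queue.append(row_id)
        return row_id

    def process_queue(self, embed: Callable[[str], List[float]]) -> int:
        """Worker step: embed queued rows; failed rows go back on the queue."""
        done = 0
        for _ in range(len(self.queue)):
            row_id = self.queue.popleft()
            try:
                self.embeddings[row_id] = embed(self.rows[row_id])
                done += 1
            except EmbeddingFailure:
                self.queue.append(row_id)  # retry on a later pass
        return done


def broken_embed(text: str) -> List[float]:
    raise EmbeddingFailure("endpoint unavailable")


def toy_embed(text: str) -> List[float]:
    return [float(len(text))]


store = MiniStore()
store.insert("Aristotle was a Greek philosopher.")  # succeeds while embedding is down
print(store.process_queue(broken_embed), len(store.queue))  # 0 1
print(store.process_queue(toy_embed), len(store.queue))  # 1 0
```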

First, install the pgai package.

pip install pgai

Then, install the pgai database components. You can do this from the terminal using the CLI, or in your Python application code using the pgai Python package.

# from the cli

pgai install -d <database-url>

# or from the python package, often done as part of your application setup

import pgai

pgai.install(DB_URL)

If you are not on Timescale Cloud, you will also need to run the pgai vectorizer worker. Install its dependencies via:

pip install "pgai[vectorizer-worker]"

If you are using the semantic catalog, you will need to run:

pip install "pgai[semantic-catalog]"

This quickstart demonstrates how pgai Vectorizer enables semantic search and RAG over PostgreSQL data by automatically creating and synchronizing embeddings as data changes.

Looking for text-to-SQL? Check out the Semantic Catalog quickstart to transform natural language questions into SQL queries.

The key "secret sauce" of pgai Vectorizer is its declarative approach to embedding generation. Simply define your pipeline and let Vectorizer handle the operational complexity of keeping embeddings in sync, even when embedding endpoints are unreliable. You can define a simple version of the pipeline as follows:

CREATE TABLE IF NOT EXISTS wiki (
    id INTEGER PRIMARY KEY GENERATED ALWAYS AS IDENTITY,
    url TEXT NOT NULL,
    title TEXT NOT NULL,
    text TEXT NOT NULL
);

SELECT ai.create_vectorizer(
    'wiki'::regclass,
    loading => ai.loading_column(column_name=>'text'),
    destination => ai.destination_table(target_table=>'wiki_embedding_storage'),
    embedding => ai.embedding_openai(model=>'text-embedding-ada-002', dimensions=>'1536')
);

The vectorizer will automatically create embeddings for all the rows in the wiki table and, more importantly, will keep the embeddings synced with the underlying data as it changes. Think of it almost like declaring an index on the wiki table, except that instead of the database managing the index data structure for you, the Vectorizer manages the embeddings.

Prerequisites:

- A PostgreSQL database (see the Docker instructions).

- An OpenAI API key (we use OpenAI for embedding in the quickstart, but pgai supports multiple providers).

Create a .env file with the following:

OPENAI_API_KEY=<your-openai-api-key>

DB_URL=<your-database-url>

You can download the full Python code and requirements.txt from the quickstart example and run it in the same directory as the .env file.

The following bash script downloads and runs the quickstart:

curl -O https://raw.githubusercontent.com/timescale/pgai/main/examples/quickstart/main.py
curl -O https://raw.githubusercontent.com/timescale/pgai/main/examples/quickstart/requirements.txt
python -m venv venv
source venv/bin/activate
pip install -r requirements.txt
python main.py

Sample output:

Search results 1:

[WikiSearchResult(id=7,

url='https://en.wikipedia.org/wiki/Aristotle',

title='Aristotle',

text='Aristotle (; Aristotélēs, ; 384–322\xa0BC) was an '

'Ancient Greek philosopher and polymath. His writings '

'cover a broad range of subjects spanning the natural '

'sciences, philosophy, linguistics, economics, '

'politics, psychology and the arts. As the founder of '

'the Peripatetic school of philosophy in the Lyceum in '

'Athens, he began the wider Aristotelian tradition that '

'followed, which set the groundwork for the development '

'of modern science.\n'

'\n'

"Little is known about Aristotle's life. He was born in "

'the city of Stagira in northern Greece during the '

'Classical period. His father, Nicomachus, died when '

'Aristotle was a child, and he was brought up by a '

"guardian. At 17 or 18 he joined Plato's Academy in "

'Athens and remained there till the age of 37 (). '

'Shortly after Plato died, Aristotle left Athens and, '

'at the request of Philip II of Macedon, tutored his '

'son Alexander the Great beginning in 343 BC. He '

'established a library in the Lyceum which helped him '

'to produce many of his hundreds of books on papyru',

chunk='Aristotle (; Aristotélēs, ; 384–322\xa0BC) was an '

'Ancient Greek philosopher and polymath. His writings '

'cover a broad range of subjects spanning the natural '

'sciences, philosophy, linguistics, economics, '

'politics, psychology and the arts. As the founder of '

'the Peripatetic school of philosophy in the Lyceum in '

'Athens, he began the wider Aristotelian tradition '

'that followed, which set the groundwork for the '

'development of modern science.',

distance=0.22242502364217387)]

Search results 2:

[WikiSearchResult(id=41,

url='https://en.wikipedia.org/wiki/pgai',

title='pgai',

text='pgai is a Python library that turns PostgreSQL into '

'the retrieval engine behind robust, production-ready '

'RAG and Agentic applications. It does this by '

'automatically creating vector embeddings for your data '

'based on the vectorizer you define.',

chunk='pgai is a Python library that turns PostgreSQL into '

'the retrieval engine behind robust, production-ready '

'RAG and Agentic applications. It does this by '

'automatically creating vector embeddings for your '

'data based on the vectorizer you define.',

distance=0.13639101792546204)]

RAG response:

The main thing pgai does right now is generating vector embeddings for data in PostgreSQL databases based on the vectorizer defined by the user, enabling the creation of robust RAG and Agentic applications.

pgai requires a few catalog tables and functions to be installed into the database. This is done using the pgai.install function, which installs the necessary components into the ai schema of the database.

pgai.install(DB_URL)

This defines the vectorizer, which tells the system how to create the embeddings from the text column in the wiki table. The vectorizer creates a view wiki_embedding that we can query for the embeddings (as we'll see below).

import psycopg

async def create_vectorizer(conn: psycopg.AsyncConnection):
    async with conn.cursor() as cur:
        await cur.execute("""
            SELECT ai.create_vectorizer(
                'wiki'::regclass,
                if_not_exists => true,
                loading => ai.loading_column(column_name=>'text'),
                embedding => ai.embedding_openai(model=>'text-embedding-ada-002', dimensions=>'1536'),
                destination => ai.destination_table(view_name=>'wiki_embedding')
            )
        """)
    await conn.commit()

In this example, we run the vectorizer worker once to create the embeddings for the existing data.

worker = Worker(DB_URL, once=True)
worker.run()

In a real application, we would not call the worker manually like this every time we want to create embeddings. Instead, the worker would run continuously in the background, polling the vectorizer for work.

You can run the worker in the background from the application, the CLI, or Docker. See the vectorizer worker documentation for more details.
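Running the worker continuously amounts to a polling loop. The sketch below contrasts a one-shot run (in the spirit of `once=True` above) with a long-running one. It is a hypothetical illustration: `run_worker`, `fetch_batch`, `handle`, `poll_interval`, and `max_polls` are invented for this example and are not pgai's `Worker` API, and a plain list stands in for the queue of rows awaiting embedding.

```python
import time
from typing import Callable, List, Optional


def run_worker(
    fetch_batch: Callable[[], List[int]],
    handle: Callable[[int], None],
    once: bool = False,
    poll_interval: float = 5.0,
    max_polls: Optional[int] = None,
) -> int:
    """Hypothetical polling loop; returns the number of items handled.

    once=True: keep fetching until a poll comes back empty, then return.
    once=False: keep polling, sleeping `poll_interval` seconds whenever idle.
    `max_polls` only exists so the example can be bounded.
    """
    handled = 0
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        batch = fetch_batch()
        for item in batch:
            handle(item)
            handled += 1
        if not batch:
            if once:
                break  # no work left: a one-shot run ends here
            time.sleep(poll_interval)  # idle: wait before polling again
    return handled


# Usage: a list stands in for the queue of rows awaiting embedding.
pending = [1, 2, 3, 4, 5]


def fetch_batch(size: int = 2) -> List[int]:
    batch = pending[:size]
    del pending[:size]
    return batch


seen: List[int] = []
print(run_worker(fetch_batch, seen.append, once=True))  # 5
```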

This is standard pgvector semantic search in PostgreSQL. The search is performed against the wiki_embedding view, which is created by the vectorizer and includes all the columns from the wiki table plus the embedding column and the chunk text. This function returns both the entire text column from the wiki table and smaller chunks of the text that are most relevant to the query.

from dataclasses import dataclass
from typing import List

import numpy as np
from openai import AsyncOpenAI
from psycopg.rows import class_row

@dataclass
class WikiSearchResult:
    id: int
    url: str
    title: str
    text: str
    chunk: str
    distance: float

async def _find_relevant_chunks(client: AsyncOpenAI, query: str, limit: int = 1) -> List[WikiSearchResult]:
    # Generate embedding for the query using OpenAI's API
    response = await client.embeddings.create(
        model="text-embedding-ada-002",
        input=query,
        encoding_format="float",
    )
    embedding = np.array(response.data[0].embedding)

    # Query the database for the most similar chunks using pgvector's cosine distance operator (<=>).
    # `pool` is the application's connection pool, defined elsewhere in the quickstart. Passing a
    # NumPy array as the query vector relies on pgvector's psycopg adapter (register_vector_async)
    # being registered on the connection.
    async with pool.connection() as conn:
        async with conn.cursor(row_factory=class_row(WikiSearchResult)) as cur:
            await cur.execute("""
                SELECT w.id, w.url, w.title, w.text, w.chunk, w.embedding <=> %s as distance
                FROM wiki_embedding w
                ORDER BY distance
                LIMIT %s
            """, (embedding, limit))
            return await cur.fetchall()

This code is notable for what it is not doing. It is a simple insert of a new article into the wiki table. We did not need to do anything special to create the embeddings; the vectorizer worker will take care of updating the embeddings as the data changes.

async def insert_article_about_pgai(conn: psycopg.AsyncConnection):
    # A plain INSERT: no embedding code is needed, the vectorizer picks up the new row.
    async with conn.cursor() as cur:
        await cur.execute("""
            INSERT INTO wiki (url, title, text) VALUES
            ('https://en.wikipedia.org/wiki/pgai', 'pgai', 'pgai is a Python library that turns PostgreSQL into the retrieval engine behind robust, production-ready RAG and Agentic applications. It does this by automatically creating vector embeddings for your data based on the vectorizer you define.')
        """)
    await conn.commit()

This code performs RAG with the LLM. It uses the _find_relevant_chunks function defined above to find the most relevant chunks of text from the wiki table and then uses the LLM to generate a response.

from openai import AsyncOpenAI

# Hypothetical wrapper: the awaits below must run inside an async function.
async def answer_question(client: AsyncOpenAI) -> None:
    query = "What is the main thing pgai does right now?"
    relevant_chunks = await _find_relevant_chunks(client, query)
    context = "\n\n".join(
        f"{chunk.title}:\n{chunk.text}"
        for chunk in relevant_chunks
    )
    prompt = f"""Question: {query}

Please use the following context to provide an accurate response:

{context}

Answer:"""
    # The OpenAI Python client takes keyword arguments, not a JavaScript-style object.
    response = await client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
    )
    print("RAG response:")
    print(response.choices[0].message.content)

- Semantic Catalog Quickstart - Learn how to use the semantic catalog to translate natural language to SQL for agentic applications.

Our pgai Python library lets you work with embeddings generated from your data:

- Automatically create and sync vector embeddings for your data using the vectorizer.

- Load data from a column in your table or from a file, S3 bucket, etc.

- Create multiple embeddings for the same data with different models and parameters for testing and experimentation.

- Customize how your embedding pipeline parses, chunks, formats, and embeds your data.

You can use the vector embeddings to:

- Perform semantic search using pgvector.

- Implement Retrieval Augmented Generation (RAG).

- Perform high-performance, cost-efficient ANN search on large vector workloads with pgvectorscale, which complements pgvector.
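Semantic search with pgvector boils down to nearest-neighbor ranking. The sketch below mirrors what a query like `ORDER BY embedding <=> query LIMIT k` computes, where `<=>` is pgvector's cosine-distance operator (1 minus cosine similarity). It is an exact, brute-force scan in plain Python over made-up 2-D vectors; the function names are illustrative. ANN indexes, such as those pgvectorscale provides, approximate this same ranking so that not every row has to be scored.

```python
import math
from typing import Dict, List, Tuple


def cosine_distance(a: List[float], b: List[float]) -> float:
    """1 - cosine similarity, the quantity pgvector's <=> returns; assumes non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query: List[float], rows: Dict[int, List[float]], k: int = 1) -> List[Tuple[int, float]]:
    """Exact nearest neighbors: score every row, sort by ascending distance, keep k."""
    scored = [(row_id, cosine_distance(query, emb)) for row_id, emb in rows.items()]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


# Toy 2-D "embeddings"; real text-embedding-ada-002 vectors have 1536 dimensions.
rows = {1: [1.0, 0.0], 2: [0.6, 0.8], 3: [0.0, 1.0]}
print([row_id for row_id, _ in top_k([1.0, 0.1], rows, k=2)])  # [1, 2]
```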

Text-to-SQL with Semantic Catalog: Transform natural language into accurate SQL queries. The semantic catalog automatically generates database descriptions, lets a human in the loop review and improve them, and stores SQL examples and business facts. This context enables LLMs to understand your schema and data. See the semantic catalog documentation for more details.
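To make the idea concrete, here is a hypothetical sketch of how catalog contents (table descriptions, business facts, and question/SQL examples) could be rendered into a single text-to-SQL prompt. It is not pgai's semantic catalog API, and we do not claim this is how pgai builds its prompts: `TableDescription`, `Catalog`, and `build_text_to_sql_prompt` are invented names, and the catalog contents are made up for illustration. A real catalog would typically select only the pieces relevant to the question rather than including everything.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TableDescription:
    table: str
    description: str  # e.g. generated by an LLM, then reviewed by a human


@dataclass
class Catalog:
    tables: List[TableDescription] = field(default_factory=list)
    facts: List[str] = field(default_factory=list)
    sql_examples: List[Tuple[str, str]] = field(default_factory=list)  # (question, SQL)


def build_text_to_sql_prompt(catalog: Catalog, question: str) -> str:
    """Render descriptions, business facts, and examples into one LLM prompt."""
    lines = ["Translate the question into a PostgreSQL query.", "", "Tables:"]
    lines += [f"- {t.table}: {t.description}" for t in catalog.tables]
    if catalog.facts:
        lines += ["", "Business facts:"]
        lines += [f"- {fact}" for fact in catalog.facts]
    for example_question, example_sql in catalog.sql_examples:
        lines += ["", f"Example question: {example_question}", f"Example SQL: {example_sql}"]
    lines += ["", f"Question: {question}", "SQL:"]
    return "\n".join(lines)


catalog = Catalog(
    tables=[TableDescription("wiki", "One row per Wikipedia article: url, title, and full text.")],
    facts=["The url column holds the article's Wikipedia URL."],
    sql_examples=[("How many articles are there?", "SELECT count(*) FROM wiki;")],
)
print(build_text_to_sql_prompt(catalog, "Which articles mention Aristotle?"))
```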

We also offer a PostgreSQL extension that can perform LLM model calling directly from SQL. This is often useful for use cases like classification, summarization, and data enrichment on your existing data.

The vectorizer is designed to be flexible and customizable. Each vectorizer defines a pipeline for creating embeddings from your data. The pipeline is defined by a series of components that are applied in sequence to the data:

- Loading: First, you define the source of the data to embed. It can be data stored directly in a column of the source table, or a URI in a column of the source table that points to a file, S3 bucket, etc.

- Parsing: Then, you define the way the data is parsed if it is a non-text document such as a PDF, HTML, or markdown file.

- Chunking: Next, you define the way text data is split into chunks.

- Formatting: Then, for each chunk, you define the way the data is formatted before it is sent for embedding. For example, you can add the title of the document as the first line of the chunk.

- Embedding: Finally, you specify the LLM provider, model, and the parameters to be used when generating the embeddings.
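The order of these components can be sketched in plain Python. This is an illustration only: in pgai the steps are configured declaratively through `ai.create_vectorizer` (as with the `loading` and `embedding` arguments above), not hand-written, and the fixed-size character chunker with overlap, the title-prefix formatter, and `toy_embed` are hypothetical stand-ins. Loading and parsing are assumed done, since the input is already plain text.

```python
from typing import Callable, List, Tuple


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> List[str]:
    """Chunking: fixed-size character windows; consecutive chunks share `overlap` chars."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Starts at or beyond len(text) - overlap would only repeat text the
    # previous window already covers, so the range stops before them.
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def format_chunk(title: str, chunk: str) -> str:
    """Formatting: put the document title on the first line of each chunk."""
    return f"{title}\n{chunk}"


def run_pipeline(title: str, text: str,
                 embed: Callable[[str], List[float]]) -> List[Tuple[str, List[float]]]:
    """Chunk, format, then embed each formatted chunk."""
    results = []
    for chunk in chunk_text(text):
        formatted = format_chunk(title, chunk)
        results.append((formatted, embed(formatted)))
    return results


def toy_embed(s: str) -> List[float]:
    # Stand-in for a real embedding model call.
    return [float(len(s)), float(s.count(" "))]


for formatted, vector in run_pipeline(
    "Aristotle", "Aristotle was an Ancient Greek philosopher and polymath.", toy_embed
):
    print(repr(formatted), vector)
```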

The following models are supported for embedding:

Simply creating vector embeddings is easy and straightforward. The challenge is that LLMs are somewhat unreliable and the endpoints exhibit intermittent failures and/or degraded performance. A critical part of properly handling failures is that your primary data-modification operations (INSERT, UPDATE, DELETE) should not be dependent on the embedding operation. Otherwise, your application will be down every time the endpoint is slow or fails and your user experience will suffer.

Normally, you would need to implement a custom MLOps pipeline to properly handle endpoint failures. This commonly involves a queuing system such as Kafka, specialized workers, and other infrastructure for managing the queue and retrying failed requests. This is a lot of work, and it is easy to get wrong.

With pgai, you can skip all that and focus on building your application, because the vectorizer manages the embeddings for you. We have built-in queueing and retry logic to handle the various failure modes you can encounter. Because this work happens in the background, the primary data modification operations are not dependent on the embedding operation. This is why pgai is production-ready out of the box.
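Retry logic of this kind is commonly implemented as capped exponential backoff with jitter. The sketch below shows that general technique in plain Python; it is not pgai's internal retry code, and `with_retries` and `TransientError` are hypothetical names. The `sleep` parameter is injectable so the example can run without actually waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error for a rate limit, timeout, or 5xx from an embedding endpoint."""


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return call()
        except TransientError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # give up; the caller can leave the item queued for a later pass
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # random wait in [0, delay] spreads out retries


attempts = {"n": 0}


def flaky_embed() -> list:
    # Fails twice (as if rate-limited), then succeeds.
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("429 Too Many Requests")
    return [0.1, 0.2]


print(with_retries(flaky_embed, sleep=lambda s: None))  # [0.1, 0.2]
```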

Many specialized vector databases create embeddings for you. However, they typically fail when embedding endpoints are down or degraded, placing the burden of error handling and retries back on you.

- Vector Databases Are the Wrong Abstraction

- pgai: Giving PostgreSQL Developers AI Engineering Superpowers

- Semantic Catalog (Text-to-SQL) - Learn how to use the semantic catalog to improve the translation of natural language to SQL for agentic applications.

- The vectorizer quick start above

- Quick start with OpenAI

- Quick start with VoyageAI

- How to Automatically Create & Update Embeddings in PostgreSQL—With One SQL Query

- [video] Auto Create and Sync Vector Embeddings in 1 Line of SQL

- Which OpenAI Embedding Model Is Best for Your RAG App With Pgvector?

- Which RAG Chunking and Formatting Strategy Is Best for Your App With Pgvector

- Parsing All the Data With Open-Source Tools: Unstructured and Pgai

We welcome contributions to pgai! See the Contributing page for more information.

pgai is still at an early stage. Now is a great time to help shape the direction of this project; we are currently deciding priorities. Have a look at the list of features we're thinking of working on. Feel free to comment, expand the list, or hop on the Discussions forum.

To get started, take a look at how to contribute and how to set up a dev/test environment.

Timescale is a PostgreSQL database company. To learn more, visit timescale.com.

Timescale Cloud is a high-performance, developer-focused cloud platform that provides PostgreSQL services for the most demanding AI, time-series, analytics, and event workloads. Timescale Cloud is ideal for production applications and provides high availability, streaming backups, upgrades over time, roles and permissions, and great security.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for pgai

Similar Open Source Tools

pgai

pgai simplifies the process of building search and Retrieval Augmented Generation (RAG) AI applications with PostgreSQL. It brings embedding and generation AI models closer to the database, allowing users to create embeddings, retrieve LLM chat completions, reason over data for classification, summarization, and data enrichment directly from within PostgreSQL in a SQL query. The tool requires an OpenAI API key and a PostgreSQL client to enable AI functionality in the database. Users can install pgai from source, run it in a pre-built Docker container, or enable it in a Timescale Cloud service. The tool provides functions to handle API keys using psql or Python, and offers various AI functionalities like tokenizing, detokenizing, embedding, chat completion, and content moderation.

rag-experiment-accelerator

The RAG Experiment Accelerator is a versatile tool that helps you conduct experiments and evaluations using Azure AI Search and RAG pattern. It offers a rich set of features, including experiment setup, integration with Azure AI Search, Azure Machine Learning, MLFlow, and Azure OpenAI, multiple document chunking strategies, query generation, multiple search types, sub-querying, re-ranking, metrics and evaluation, report generation, and multi-lingual support. The tool is designed to make it easier and faster to run experiments and evaluations of search queries and quality of response from OpenAI, and is useful for researchers, data scientists, and developers who want to test the performance of different search and OpenAI related hyperparameters, compare the effectiveness of various search strategies, fine-tune and optimize parameters, find the best combination of hyperparameters, and generate detailed reports and visualizations from experiment results.

knowledge-graph-of-thoughts

Knowledge Graph of Thoughts (KGoT) is an innovative AI assistant architecture that integrates LLM reasoning with dynamically constructed knowledge graphs (KGs). KGoT extracts and structures task-relevant knowledge into a dynamic KG representation, iteratively enhanced through external tools such as math solvers, web crawlers, and Python scripts. Such structured representation of task-relevant knowledge enables low-cost models to solve complex tasks effectively. The KGoT system consists of three main components: the Controller, the Graph Store, and the Integrated Tools, each playing a critical role in the task-solving process.

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

Tools4AI

Tools4AI is a Java-based Agentic Framework for building AI agents to integrate with enterprise Java applications. It enables the conversion of natural language prompts into actionable behaviors, streamlining user interactions with complex systems. By leveraging AI capabilities, it enhances productivity and innovation across diverse applications. The framework allows for seamless integration of AI with various systems, such as customer service applications, to interpret user requests, trigger actions, and streamline workflows. Prompt prediction anticipates user actions based on input prompts, enhancing user experience by proactively suggesting relevant actions or services based on context.

pydantic-ai

PydanticAI is a Python agent framework designed to make it less painful to build production grade applications with Generative AI. It is built by the Pydantic Team and supports various AI models like OpenAI, Anthropic, Gemini, Ollama, Groq, and Mistral. PydanticAI seamlessly integrates with Pydantic Logfire for real-time debugging, performance monitoring, and behavior tracking of LLM-powered applications. It is type-safe, Python-centric, and offers structured responses, dependency injection system, and streamed responses. PydanticAI is in early beta, offering a Python-centric design to apply standard Python best practices in AI-driven projects.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

curate-gpt

CurateGPT is a prototype web application and framework for performing general purpose AI-guided curation and curation-related operations over collections of objects. It allows users to load JSON, YAML, or CSV data, build vector database indexes for ontologies, and interact with various data sources like GitHub, Google Drives, Google Sheets, and more. The tool supports ontology curation, knowledge base querying, term autocompletion, and all-by-all comparisons for objects in a collection.

monitors4codegen

This repository hosts the official code and data artifact for the paper 'Monitor-Guided Decoding of Code LMs with Static Analysis of Repository Context'. It introduces Monitor-Guided Decoding (MGD) for code generation using Language Models, where a monitor uses static analysis to guide the decoding. The repository contains datasets, evaluation scripts, inference results, a language server client 'multilspy' for static analyses, and implementation of various monitors monitoring for different properties in 3 programming languages. The monitors guide Language Models to adhere to properties like valid identifier dereferences, correct number of arguments to method calls, typestate validity of method call sequences, and more.

curategpt

CurateGPT is a prototype web application and framework designed for general purpose AI-guided curation and curation-related operations over collections of objects. It provides functionalities for loading example data, building indexes, interacting with knowledge bases, and performing tasks such as chatting with a knowledge base, querying Pubmed, interacting with a GitHub issue tracker, term autocompletion, and all-by-all comparisons. The tool is built to work best with the OpenAI gpt-4 model and OpenAI ada-text-embedding-002 for embedding, but also supports alternative models through a plugin architecture.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

lotus

LOTUS (LLMs Over Tables of Unstructured and Structured Data) is a query engine that provides a declarative programming model and an optimized query engine for reasoning-based query pipelines over structured and unstructured data. It offers a simple and intuitive Pandas-like API with semantic operators for fast and easy LLM-powered data processing. The tool implements a semantic operator programming model, allowing users to write AI-based pipelines with high-level logic and leaving the rest of the work to the query engine. LOTUS supports various semantic operators like sem_map, sem_filter, sem_extract, sem_agg, sem_topk, sem_join, sem_sim_join, and sem_search, enabling users to perform tasks like mapping records, filtering data, aggregating records, and more. The tool also supports different model classes such as LM, RM, and Reranker for language modeling, retrieval, and reranking tasks respectively.

For similar tasks

superagent

Superagent is an open-source AI assistant framework and API that allows developers to add powerful AI assistants to their applications. These assistants use large language models (LLMs), retrieval augmented generation (RAG), and generative AI to help users with a variety of tasks, including question answering, chatbot development, content generation, data aggregation, and workflow automation. Superagent is backed by Y Combinator and is part of YC W24.

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

simpletransformers

Simple Transformers is a library based on the Transformers library by HuggingFace, allowing users to quickly train and evaluate Transformer models with only 3 lines of code. It supports various tasks such as Information Retrieval, Language Models, Encoder Model Training, Sequence Classification, Token Classification, Question Answering, Language Generation, T5 Model, Seq2Seq Tasks, Multi-Modal Classification, and Conversational AI.

smile

Smile (Statistical Machine Intelligence and Learning Engine) is a comprehensive machine learning, NLP, linear algebra, graph, interpolation, and visualization system in Java and Scala. It covers every aspect of machine learning, including classification, regression, clustering, association rule mining, feature selection, manifold learning, multidimensional scaling, genetic algorithms, missing value imputation, efficient nearest neighbor search, etc. Smile implements major machine learning algorithms and provides interactive shells for Java, Scala, and Kotlin. It supports model serialization, data visualization using SmilePlot and declarative approach, and offers a gallery showcasing various algorithms and visualizations.

pgai

pgai simplifies the process of building search and Retrieval Augmented Generation (RAG) AI applications with PostgreSQL. It brings embedding and generation AI models closer to the database, allowing users to create embeddings, retrieve LLM chat completions, reason over data for classification, summarization, and data enrichment directly from within PostgreSQL in a SQL query. The tool requires an OpenAI API key and a PostgreSQL client to enable AI functionality in the database. Users can install pgai from source, run it in a pre-built Docker container, or enable it in a Timescale Cloud service. The tool provides functions to handle API keys using psql or Python, and offers various AI functionalities like tokenizing, detokenizing, embedding, chat completion, and content moderation.

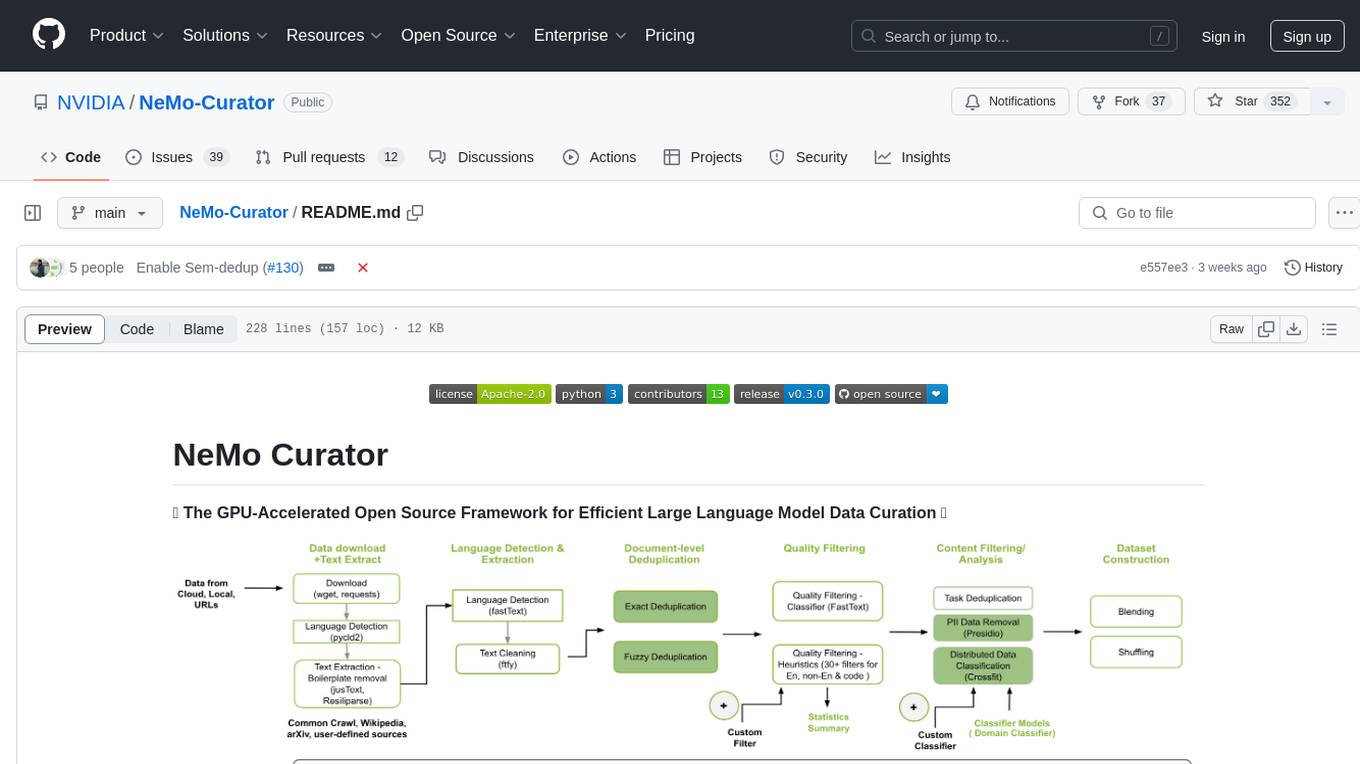

NeMo-Curator

NeMo Curator is a GPU-accelerated, open-source framework for efficient large language model (LLM) data curation. It provides scalable dataset preparation for foundation model pretraining, domain-adaptive pretraining, supervised fine-tuning, and parameter-efficient fine-tuning. The library uses GPUs with Dask and RAPIDS to accelerate data curation, and its customizable, modular interfaces make pipelines easy to extend and help models converge faster by supplying higher-quality training data. Key features include data download, text extraction, quality filtering, deduplication, downstream-task decontamination, distributed data classification, and PII redaction.

bootcamp_machine-learning

Bootcamp Machine Learning is a one-week program designed by 42 AI to teach the basics of Machine Learning. The curriculum covers topics such as linear algebra, statistics, regression, classification, and regularization. Participants will learn concepts like gradient descent, hypothesis modeling, overfitting detection, logistic regression, and more. The bootcamp is ideal for individuals with prior knowledge of Python who are interested in diving into the field of artificial intelligence.

geoai

geoai is a Python package designed for utilizing Artificial Intelligence (AI) in the context of geospatial data. It allows users to visualize various types of geospatial data such as vector, raster, and LiDAR data. Additionally, the package offers functionalities for segmenting remote sensing imagery using the Segment Anything Model and classifying remote sensing imagery with deep learning models. With a focus on geospatial AI applications, geoai provides a versatile tool for processing and analyzing spatial data with the power of AI.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the development of AI-powered bots for Teams. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that lets users create and deploy their own AI chatbots. It is easy to use and customizable, which makes it a good fit for businesses, developers, and anyone else who wants to build a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Example use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage per user and per organization
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries, and caching
* Distribute API keys with rate limits and cost limits for internal development and production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs, while giving developers control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers).