superagent

The world's first purpose-built firewall for AI

Stars: 6146

Superagent is an open-source AI assistant framework and API that allows developers to add powerful AI assistants to their applications. These assistants use large language models (LLMs), retrieval augmented generation (RAG), and generative AI to help users with a variety of tasks, including question answering, chatbot development, content generation, data aggregation, and workflow automation. Superagent is backed by Y Combinator and is part of YC W24.

README:

Runtime protection for AI applications - blocks prompt injection, backdoor attacks, and sensitive data leaks in real time.

🛡️ Prompt Injection Protection - Detects and blocks malicious prompt injections before they reach your AI models

🔒 Backdoor Attack Prevention - Identifies hidden backdoor commands and neutralizes them automatically

🚫 Sensitive Data Filtering - Prevents PII, secrets, and confidential information from being exposed in AI responses

⚡ Real-time Processing - Low-latency protection with streaming response support

📊 Complete Observability - Structured JSON logs for monitoring, alerting, and compliance

🔄 Model Routing - Route requests to different AI providers based on model configuration

# Node.js

cd node/ && npm install && npm start

# Rust (high performance)

cd rust/ && cargo build --release && ./target/release/ai-firewall start

# Docker

docker-compose up -d

Config File Location

By default, both implementations look for superagent.yaml in the current working directory. You can specify a custom config file path using the --config parameter:

# Node.js

npm start -- --config=/etc/superagent/superagent.yaml

# Rust

./target/release/ai-firewall start --config=/etc/superagent/superagent.yaml

Config File Format

Edit superagent.yaml to add models and API endpoints:

models:
  - model_name: "gpt-4o"
    provider: "openai"
    api_base: "https://api.openai.com"
  - model_name: "claude-3-7-sonnet-20250219"
    provider: "anthropic"
    api_base: "https://api.anthropic.com/v1"

# Optional: Send telemetry data to external webhook
telemetry_webhook:
  url: "https://your-webhook-endpoint.com/api/telemetry"
  headers:
    x-api-key: "your-api-key"
    x-team-id: "your-team-id"

Command Examples

Both Node.js and Rust implementations support the following CLI options:

# Basic usage

ai-firewall start --port 8080

# With custom config

ai-firewall start --port 8080 --config=/path/to/superagent.yaml

# With redaction API for input screening

ai-firewall start --redaction-api-url=http://localhost:3000/redact

# Background mode (daemon)

ai-firewall start --daemon

# Server management

ai-firewall stop --port 8080

ai-firewall status --port 8080

Global Options

- -p, --port <PORT>: Port to run on (default: 8080)

- -c, --config <PATH>: Path to superagent.yaml file (default: superagent.yaml)

- --redaction-api-url <URL>: URL for redaction API to screen user messages

- -d, --daemon: Run in background (start command only)
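When the CLI is launched from another Node.js process, the options above can be assembled into an argument list. The following is a minimal sketch that uses only the flags documented here; `buildStartArgs` is a hypothetical helper, not part of the ai-firewall package.

```javascript
// Hypothetical helper: builds the argument list for `ai-firewall start`
// from the documented options. Not part of the ai-firewall package.
function buildStartArgs({ port = 8080, config, redactionApiUrl, daemon = false } = {}) {
  const args = ['start', '--port', String(port)];
  if (config) args.push(`--config=${config}`);
  if (redactionApiUrl) args.push(`--redaction-api-url=${redactionApiUrl}`);
  if (daemon) args.push('--daemon');
  return args;
}

const args = buildStartArgs({ config: '/path/to/superagent.yaml', daemon: true });
console.log(['ai-firewall', ...args].join(' '));
// ai-firewall start --port 8080 --config=/path/to/superagent.yaml --daemon
```

The resulting array could be handed to something like `child_process.spawn('ai-firewall', args)`, assuming the binary is on the PATH.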

Node.js Package

Install the package:

npm install ai-firewall

Create a server programmatically:

import ProxyServer from 'ai-firewall';

const port = 8080;

const configPath = './superagent.yaml'; // optional, defaults to 'superagent.yaml'

const redactionApiUrl = 'http://localhost:3000/redact'; // optional

const proxy = new ProxyServer(port, configPath, redactionApiUrl);

// Start the server

await proxy.start();

// Graceful shutdown

process.on('SIGINT', () => {
  proxy.stop();
  process.exit(0);
});

Rust Crate

Add to your Cargo.toml:

[dependencies]

ai-firewall = "0.0.1"

Create a server programmatically:

use ai_firewall::ProxyServer;

#[tokio::main]

async fn main() -> Result<(), Box<dyn std::error::Error>> {
    let port = 8080;
    let config_path = Some("./superagent.yaml".to_string()); // optional
    let redaction_api_url = Some("http://localhost:3000/redact".to_string()); // optional

    let server = ProxyServer::new(port, config_path, redaction_api_url).await?;

    // Start the server (this blocks)
    server.start().await?;

    Ok(())
}

Point your AI client to the proxy URL instead of the direct API:

curl -X POST http://localhost:8080/messages \
  -H "Content-Type: application/json" \
  -H "x-api-key: your-key" \
  -d '{"model":"claude-3-7-sonnet-20250219","messages":[...]}'

Health check: GET /health
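The same request can be prepared from Node.js. This is a sketch that mirrors the curl example, assuming the proxy listens on localhost:8080; `buildProxyRequest` is an illustrative name and the message content is a placeholder.

```javascript
// Sketch only: builds fetch() arguments that mirror the curl example.
// `buildProxyRequest` is an illustrative helper, not part of the package.
function buildProxyRequest(proxyBase, apiKey, model, messages) {
  return {
    url: new URL('/messages', proxyBase).toString(),
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json', 'x-api-key': apiKey },
      body: JSON.stringify({ model, messages }),
    },
  };
}

const req = buildProxyRequest(
  'http://localhost:8080',
  'your-key',
  'claude-3-7-sonnet-20250219',
  [{ role: 'user', content: 'Hello' }]
);
console.log(req.url); // http://localhost:8080/messages

// To send it (Node 18+ ships a global fetch):
//   const res = await fetch(req.url, req.options);
// The health endpoint can be probed the same way:
//   const health = await fetch(new URL('/health', 'http://localhost:8080'));
```

Building the request separately from sending it keeps the example runnable without a live proxy.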

Built-in Firewall Engine

Powered by a fine-tuned Gemma 3 270M model for real-time threat detection:

# Start the firewall engine

cd api/ && ./start.sh

# Start proxy with firewall enabled

./target/release/ai-firewall start --redaction-api-url=http://localhost:3000/redact

Protection Types:

- 🛡️ Prompt Injections → Replaced with [INJECTION]

- 🔒 Backdoor Commands → Replaced with [BACKDOOR]

- 🚫 Sensitive Data (PII) → Replaced with [REDACTED]
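Because each threat type is replaced with a fixed placeholder, downstream code can tell what was removed by scanning for those tokens. The following is a minimal sketch; `countPlaceholders` is a hypothetical helper and the sample text is made up for illustration.

```javascript
// The three placeholder tokens listed above.
const PLACEHOLDERS = ['[INJECTION]', '[BACKDOOR]', '[REDACTED]'];

// Hypothetical helper: counts how often each placeholder appears in a message.
function countPlaceholders(text) {
  const counts = {};
  for (const token of PLACEHOLDERS) {
    // Splitting on the token yields one more piece than there are occurrences.
    counts[token] = text.split(token).length - 1;
  }
  return counts;
}

const sample = 'My email is [REDACTED]. [INJECTION] Call me at [REDACTED].';
console.log(countPlaceholders(sample));
// { '[INJECTION]': 1, '[BACKDOOR]': 0, '[REDACTED]': 2 }
```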

Features:

- Automatic model download on first run

- Sub-100ms inference time

- Supports all message formats (text, structured content)

- Graceful fallback if firewall is unavailable

Structured Logging

Superagent outputs structured JSON logs to stdout that can be ingested by any log aggregation system (ELK, Splunk, DataDog, Loki, etc.).

Environment Variables:

LOG_LEVEL=info # debug|info|warn|error (default: info)

Example log output:

{
  "timestamp": "2024-08-26T10:30:00.000Z",
  "level": "info",
  "message": "Request processed",
  "service": "ai-firewall-node",
  "version": "0.0.1",
  "event_type": "request_processed",
  "trace_id": "abc123-def456-789",
  "request": {
    "method": "POST",
    "url": "/v1/messages",
    "model": "claude-3-7-sonnet-20250219",
    "headers": {
      "user-agent": "curl/7.68.0",
      "originator": "my-app"
    },
    "body_size_bytes": 1024
  },
  "response": {
    "status": 200,
    "duration_ms": 1250,
    "body_size_bytes": 2048,
    "is_sse": true
  },
  "redaction": {
    "input_redacted": true,
    "output_redacted": true,
    "processing_time_ms": 15
  },
  "proxy": {
    "target_url": "https://api.anthropic.com/v1/messages",
    "model_routing": true
  }
}

Log Integration Examples
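The logs can also be consumed from a small Node.js script. The sketch below assumes each log entry arrives as one JSON object per line and uses the field names from the example log output; the helper names and the 1000 ms threshold are arbitrary choices for illustration.

```javascript
// Sketch: parse newline-delimited JSON logs (assumed: one object per line).
function parseLogLines(raw) {
  const entries = [];
  for (const line of raw.split('\n')) {
    const trimmed = line.trim();
    if (!trimmed) continue;
    try {
      entries.push(JSON.parse(trimmed));
    } catch {
      // Skip lines that are not valid JSON.
    }
  }
  return entries;
}

// Keep error-level entries and requests slower than `slowMs` milliseconds.
function findNotable(entries, slowMs = 1000) {
  return entries.filter(
    (e) => e.level === 'error' || (e.response && e.response.duration_ms > slowMs)
  );
}

const raw = [
  '{"level":"info","event_type":"request_processed","response":{"status":200,"duration_ms":1250}}',
  '{"level":"info","event_type":"request_processed","response":{"status":200,"duration_ms":80}}',
  'not json',
].join('\n');

console.log(findNotable(parseLogLines(raw)).length); // 1
```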

Docker/Kubernetes (stdout):

# View logs directly

docker logs container-name | jq '.'

kubectl logs deployment/ai-firewall | jq '.'

# Pipe to analysis tools

docker logs container-name | grep '"level":"error"'

Fluent Bit:

[INPUT]
    Name tail
    Path /var/log/containers/ai-firewall*.log
    Parser json

[OUTPUT]
    Name elasticsearch
    Match *
    Host elasticsearch.example.com

Vector:

[sources.ai_firewall]

type = "docker_logs"

include_labels = ["ai-firewall"]

[sinks.datadog]

type = "datadog_logs"

inputs = ["ai_firewall"]

default_api_key = "${DATADOG_API_KEY}"

Promtail (Loki):

scrape_configs:
  - job_name: ai-firewall
    docker_sd_configs:
      - host: unix:///var/run/docker.sock
    relabel_configs:
      - source_labels: [__meta_docker_container_label_service]
        target_label: service

- Zero-trust Security - Every request is analyzed and sanitized before processing

- Complete Audit Trail - Detailed logs for compliance and security monitoring

- Multi-provider Support - Route between OpenAI, Anthropic, and other AI providers

- High Performance - Rust implementation scales from development to production

- Easy Deployment - Docker support with health checks and graceful shutdown
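The multi-provider routing mentioned above can be pictured as a lookup from the requested model name to its configured provider and api_base. This is a minimal sketch, assuming superagent.yaml has already been parsed into a plain object; `resolveModel` is a hypothetical helper and not the proxy's actual routing code.

```javascript
// Config object mirroring the `models` list from the superagent.yaml example.
const config = {
  models: [
    { model_name: 'gpt-4o', provider: 'openai', api_base: 'https://api.openai.com' },
    {
      model_name: 'claude-3-7-sonnet-20250219',
      provider: 'anthropic',
      api_base: 'https://api.anthropic.com/v1',
    },
  ],
};

// Hypothetical lookup: returns the configured entry for a model, or null.
function resolveModel(cfg, modelName) {
  return cfg.models.find((m) => m.model_name === modelName) ?? null;
}

const target = resolveModel(config, 'claude-3-7-sonnet-20250219');
console.log(target.provider, target.api_base);
// anthropic https://api.anthropic.com/v1
```

Returning null for an unknown model leaves it to the caller to decide whether to reject the request or fall back to a default.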

├── node/ # Node.js implementation

├── rust/ # Rust implementation (high performance)

├── api/ # Built-in redaction server (Python/FastAPI)

├── docker/ # Docker configurations

└── README.md # This file


Alternative AI tools for superagent

Similar Open Source Tools


nexus

Nexus is a tool that acts as a unified gateway for multiple LLM providers and MCP servers. It allows users to aggregate, govern, and control their AI stack by connecting multiple servers and providers through a single endpoint. Nexus provides features like MCP Server Aggregation, LLM Provider Routing, Context-Aware Tool Search, Protocol Support, Flexible Configuration, Security features, Rate Limiting, and Docker readiness. It supports tool calling, tool discovery, and error handling for STDIO servers. Nexus also integrates with AI assistants, Cursor, Claude Code, and LangChain for seamless usage.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

Groq2API

Groq2API is a REST API wrapper around the Groq2 model, a large language model trained by Google. The API allows you to send text prompts to the model and receive generated text responses. The API is easy to use and can be integrated into a variety of applications.

supergateway

Supergateway is a tool that allows running MCP stdio-based servers over SSE (Server-Sent Events) with one command. It is useful for remote access, debugging, or connecting to SSE-based clients when your MCP server only speaks stdio. The tool supports running in SSE to Stdio mode as well, where it connects to a remote SSE server and exposes a local stdio interface for downstream clients. Supergateway can be used with ngrok to share local MCP servers with remote clients and can also be run in a Docker containerized deployment. It is designed with modularity in mind, ensuring compatibility and ease of use for AI tools exchanging data.

summarize

The 'summarize' tool is designed to transcribe and summarize videos from various sources using AI models. It helps users efficiently summarize lengthy videos, take notes, and extract key insights by providing timestamps, original transcripts, and support for auto-generated captions. Users can utilize different AI models via Groq, OpenAI, or custom local models to generate grammatically correct video transcripts and extract wisdom from video content. The tool simplifies the process of summarizing video content, making it easier to remember and reference important information.

mcp-omnisearch

mcp-omnisearch is a Model Context Protocol (MCP) server that acts as a unified gateway to multiple search providers and AI tools. It integrates Tavily, Perplexity, Kagi, Jina AI, Brave, Exa AI, and Firecrawl to offer a wide range of search, AI response, content processing, and enhancement features through a single interface. The server provides powerful search capabilities, AI response generation, content extraction, summarization, web scraping, structured data extraction, and more. It is designed to work flexibly with the API keys available, enabling users to activate only the providers they have keys for and easily add more as needed.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

lumen

Lumen is a command-line tool that leverages AI to enhance your git workflow. It assists in generating commit messages, understanding changes, interactive searching, and analyzing impacts without the need for an API key. With smart commit messages, git history insights, interactive search, change analysis, and rich markdown output, Lumen offers a seamless and flexible experience for users across various git workflows.

LLMVoX

LLMVoX is a lightweight 30M-parameter, LLM-agnostic, autoregressive streaming Text-to-Speech (TTS) system designed to convert text outputs from Large Language Models into high-fidelity streaming speech with low latency. It achieves significantly lower Word Error Rate compared to speech-enabled LLMs while operating at comparable latency and speech quality. Key features include being lightweight & fast with only 30M parameters, LLM-agnostic for easy integration with existing models, multi-queue streaming for continuous speech generation, and multilingual support for easy adaptation to new languages.

sparrow

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It seamlessly handles forms, invoices, receipts, and other unstructured data sources. Sparrow stands out with its modular architecture, offering independent services and pipelines all optimized for robust performance. One of Sparrow's critical features is its pluggable architecture: you can easily integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. Sparrow also provides an API that helps process and transform your data into structured output, ready to be integrated with custom workflows. With Sparrow Agents you can build independent LLM agents and use the API to invoke them from your system.

**List of available agents:**

* **llamaindex** - RAG pipeline with LlamaIndex for PDF processing
* **vllamaindex** - RAG pipeline with LlamaIndex multimodal for image processing
* **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing
* **haystack** - RAG pipeline with Haystack for PDF processing
* **fcall** - Function call pipeline
* **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing
* **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing
* **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

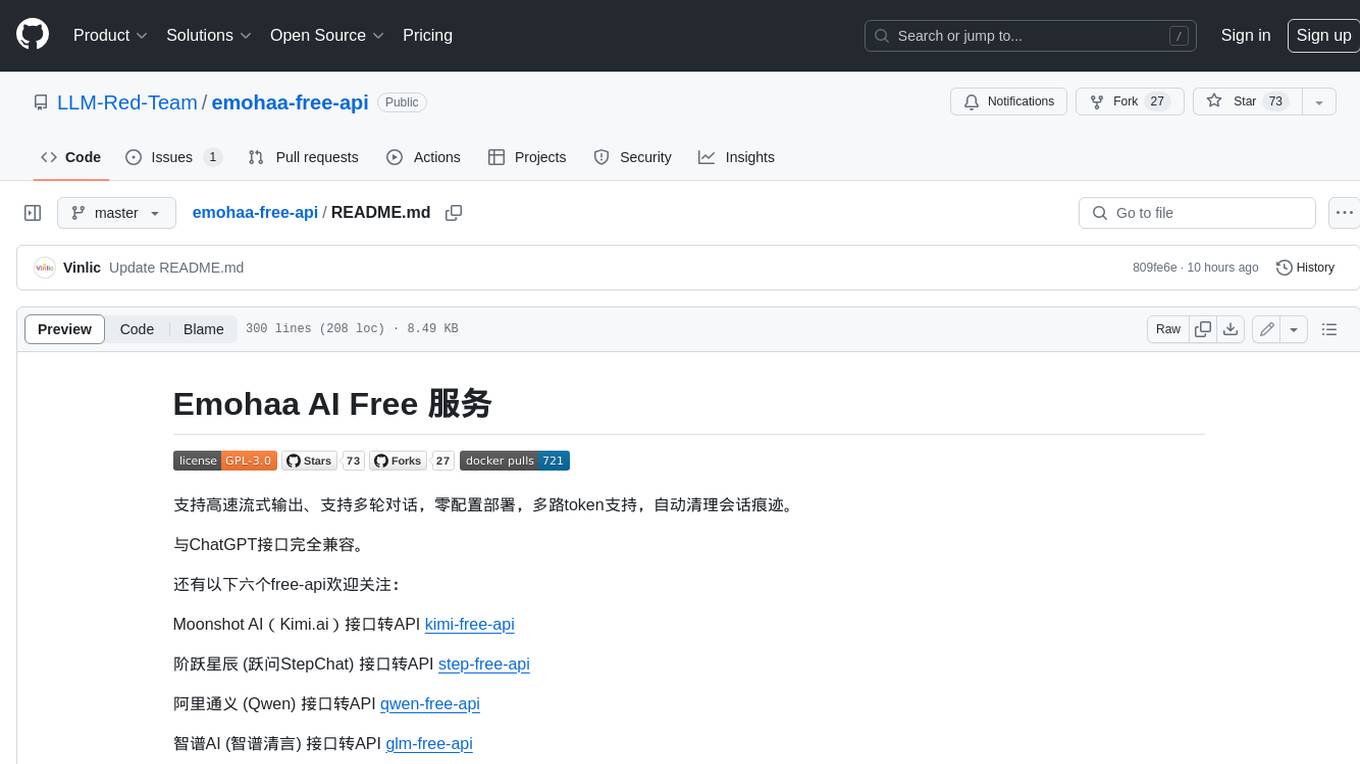

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

ck

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

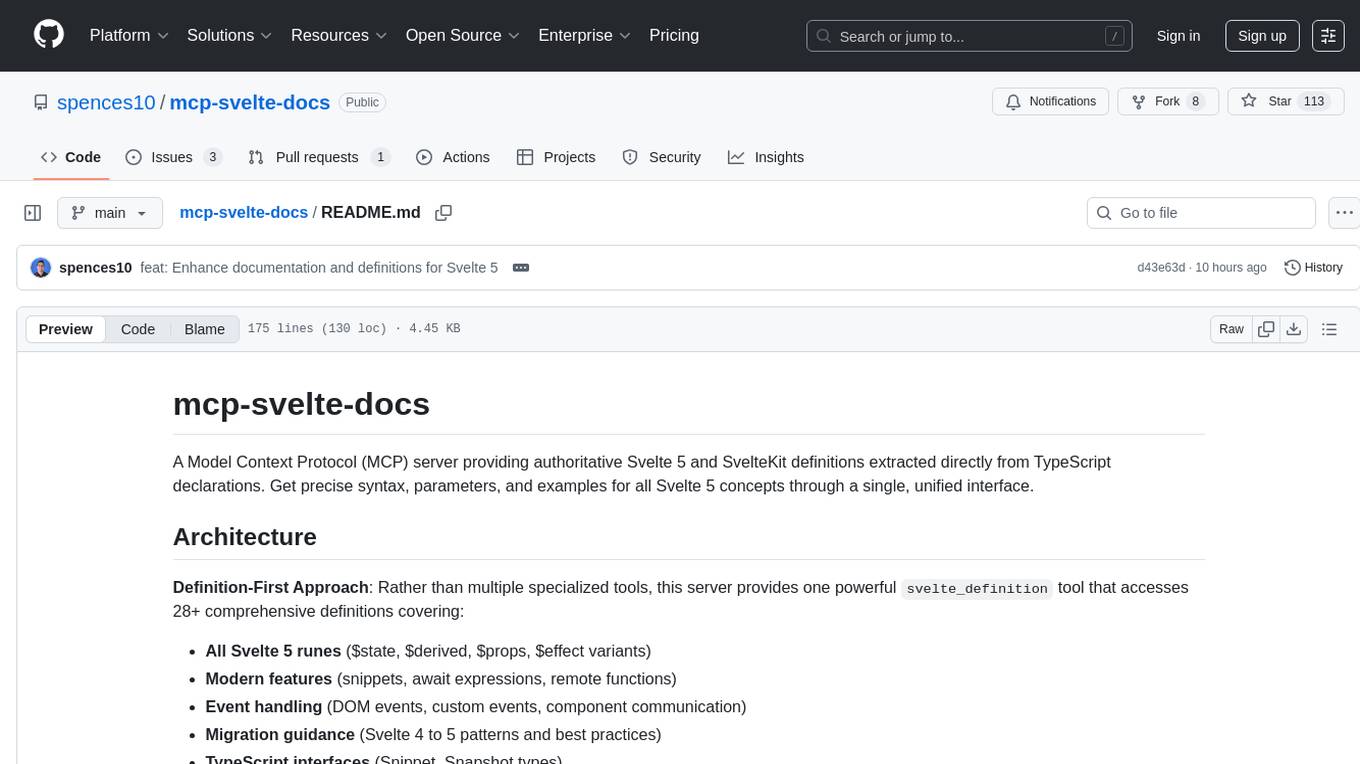

mcp-svelte-docs

A Model Context Protocol (MCP) server providing authoritative Svelte 5 and SvelteKit definitions extracted directly from TypeScript declarations. Get precise syntax, parameters, and examples for all Svelte 5 concepts through a single, unified interface. The server offers a 'svelte_definition' tool that covers various Svelte 5 runes, modern features, event handling, migration guidance, TypeScript interfaces, and advanced patterns. It aims to provide up-to-date, type-safe, and comprehensive documentation for Svelte developers.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

* An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
* A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
* Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
* Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

* **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
* **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
* **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PIIs
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.