ai-wechat-bot

将所有AI 产品接入你的微信,打造你个人AI 助理,帮助你解决更多生活日常。

Stars: 366

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

README:

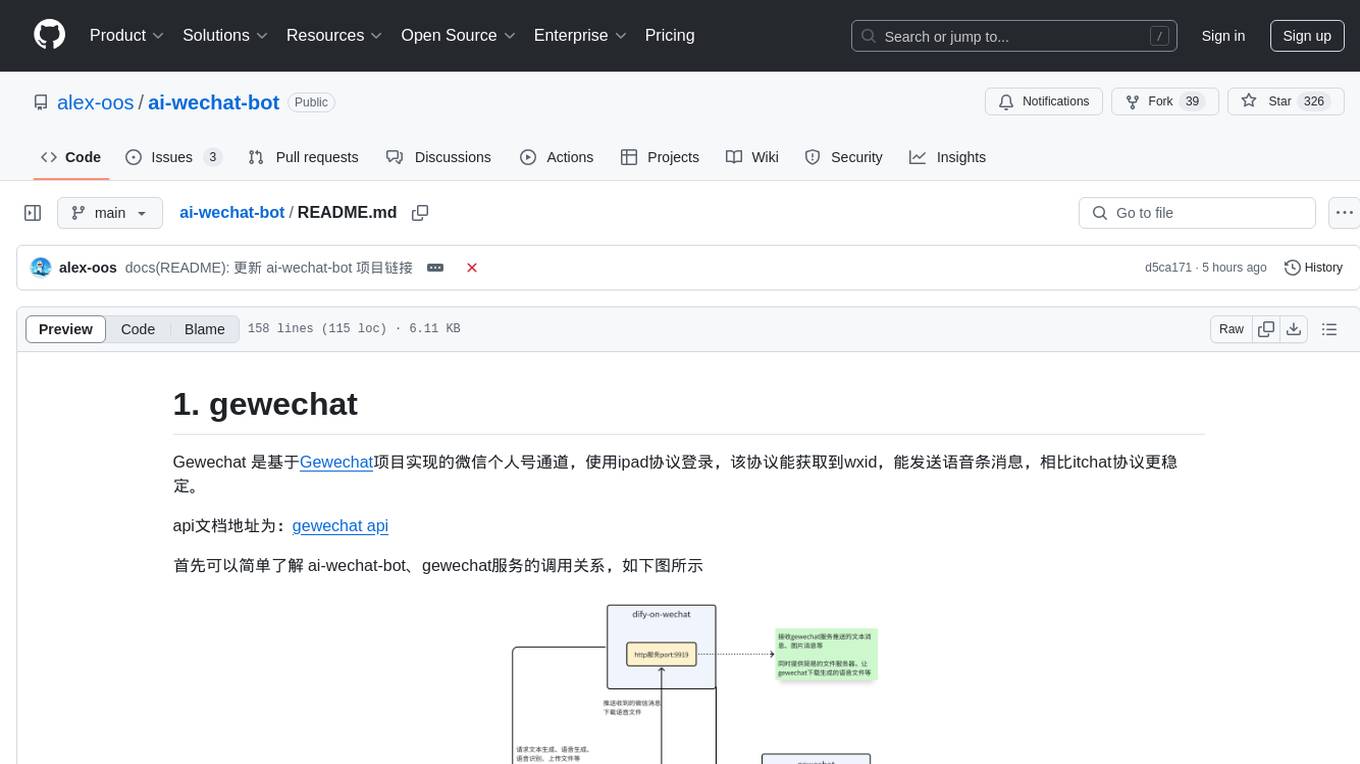

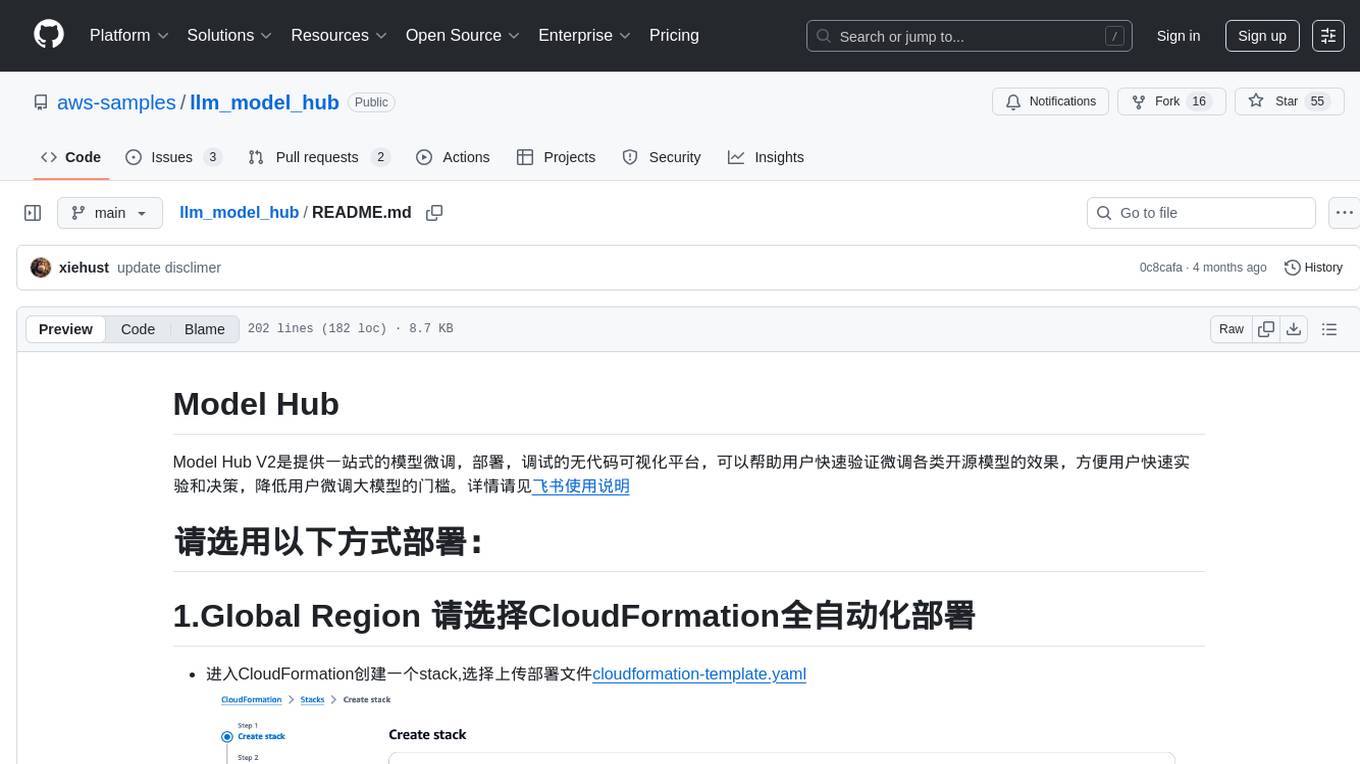

Gewechat 是基于Gewechat项目实现的微信个人号通道,使用ipad协议登录,该协议能获取到wxid,能发送语音条消息,相比itchat协议更稳定。

api文档地址为:gewechat api

首先可以简单了解 ai-wechat-bot、gewechat服务的调用关系,如下图所示

gewechat 服务需要自行部署,ai-wechat-bot 项目只负责对接gewechat服务,请参考下方教程部署gewechat服务。

感谢gewechat交流群中的大佬 @1H 重构了镜像,让gewe镜像不依赖cgroup和docker --privilege,可以在更高版本的ubuntu、debian以及macos系统上运行。

# 从阿里云镜像仓库拉取(国内)

docker pull registry.cn-hangzhou.aliyuncs.com/gewe/gewe:latest

docker tag registry.cn-hangzhou.aliyuncs.com/gewe/gewe gewemkdir -p gewechat/data

docker run -itd -v gewechat/data:/root/temp -p 2531:2531 -p 2532:2532 --privileged=true --name=gewe gewe /usr/sbin/init

#设置开机自启

docker update --restart=always gewe首先创建必要的数据目录:

mkdir -p gewechat/data创建 docker-compose.yml 文件:

version: '3'

services:

gewechat:

image: gewe

container_name: gewe

volumes:

- ./gewechat/data:/root/temp

ports:

- "2531:2531"

- "2532:2532"

restart: always运行:

docker compose up -d看到如下日志,表示gewechat服务启动成功

在config.json中需要配置以下gewechat相关的参数:

{

"token": "", # gewechat服务的token,用于接口认证

"appId": "", # gewechat服务的应用ID

"baseUrl": "http://本机ip:2531/v2/api", # gewechat服务的API基础URL

"callbackUrl": "http://本机ip:9919/v2/api/callback/collect", # 回调URL,用于接收消息

"downloadUrl": "http://本机ip:2532/download", # 文件下载URL

}参数说明:

-

token: gewechat服务的认证token,首次登录时,可以留空,启动ai-wechat-bot服务时,会自动获取token并自动保存到config.json中 -

appId: gewechat服务分配的设备ID,首次登录时,可以留空,启动ai-wechat-bot服务时,会自动获取appid并自动保存到config.json中 -

baseUrl: gewechat服务的API基础地址,请根据实际情况配置,如果gewechat服务与ai-wechat-bot服务部署在同一台机器上,可以配置为http://本机ip:2531/v2/api -

callbackUrl: 接收gewechat消息的回调地址,请根据实际情况配置,如果gewechat服务与ai-wechat-bot服务部署在同一台机器上,可以配置为http://本机ip:9919/v2/api/callback/collect,如无特殊需要,请使用9919端口号 -

downloadUrl: 文件下载地址,用于下载语音、图片等文件,请根据实际部署情况配置,如果gewechat服务与ai-wechat-bot服务部署在同一台机器上,可以配置为http://本机ip:2532/download

注意:请确保您的回调地址(callback_url),即ai-wechat-bot启动的回调服务可以被gewechat服务正常访问到。如果您使用Docker部署,需要注意网络配置,确保容器之间可以正常通信。

在config.json中需要配置以下

{

"model": "ali", # 模型名称设置为ali

"singleChatPrefix": [""], # 私聊触发前缀

"singleChatReplyPrefix": "", # 私聊回复前缀

"groupChatPrefix": ["@bot"], # 群聊触发前缀

"groupNameWhiteList": ["ALL_GROUP"], # 允许响应的群组

}完成上述配置后,你需要确保gewechat服务已正常启动

mvn run ai-wechat-bot.jar启动成功后,可以看到如下日志信息,注意token和appid会自动保存到config.json,无需手动保存

- gewechat 要求必须搭建服务到同省服务器或者电脑里方可正常使用,即登录微信的手机与gewechat服务必须在同一省

- gewechat 开源框架只支持下载接收到的图片,不支持下载文件

- gewechat_channel 目前暂时只支持接收文字消息,只支持发送文字消息与图片消息,后续支持的消息类型会逐步完善

- 此项目仅用于个人娱乐场景,请勿用于任何商业场景

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-wechat-bot

Similar Open Source Tools

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

Groq2API

Groq2API is a REST API wrapper around the Groq2 model, a large language model trained by Google. The API allows you to send text prompts to the model and receive generated text responses. The API is easy to use and can be integrated into a variety of applications.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

superagent

Superagent is an open-source AI assistant framework and API that allows developers to add powerful AI assistants to their applications. These assistants use large language models (LLMs), retrieval augmented generation (RAG), and generative AI to help users with a variety of tasks, including question answering, chatbot development, content generation, data aggregation, and workflow automation. Superagent is backed by Y Combinator and is part of YC W24.

EasyAIVtuber

EasyAIVtuber is a tool designed to animate 2D waifus by providing features like automatic idle actions, speaking animations, head nodding, singing animations, and sleeping mode. It also offers API endpoints and a web UI for interaction. The tool requires dependencies like torch and pre-trained models for optimal performance. Users can easily test the tool using OBS and UnityCapture, with options to customize character input, output size, simplification level, webcam output, model selection, port configuration, sleep interval, and movement extension. The tool also provides an API using Flask for actions like speaking based on audio, rhythmic movements, singing based on music and voice, stopping current actions, and changing images.

sparrow

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It seamlessly handles forms, invoices, receipts, and other unstructured data sources. Sparrow stands out with its modular architecture, offering independent services and pipelines all optimized for robust performance. One of the critical functionalities of Sparrow - pluggable architecture. You can easily integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. With Sparrow solution you get API, which helps to process and transform your data into structured output, ready to be integrated with custom workflows. Sparrow Agents - with Sparrow you can build independent LLM agents, and use API to invoke them from your system. **List of available agents:** * **llamaindex** - RAG pipeline with LlamaIndex for PDF processing * **vllamaindex** - RAG pipeline with LLamaIndex multimodal for image processing * **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing * **haystack** - RAG pipeline with Haystack for PDF processing * **fcall** - Function call pipeline * **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing * **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing * **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

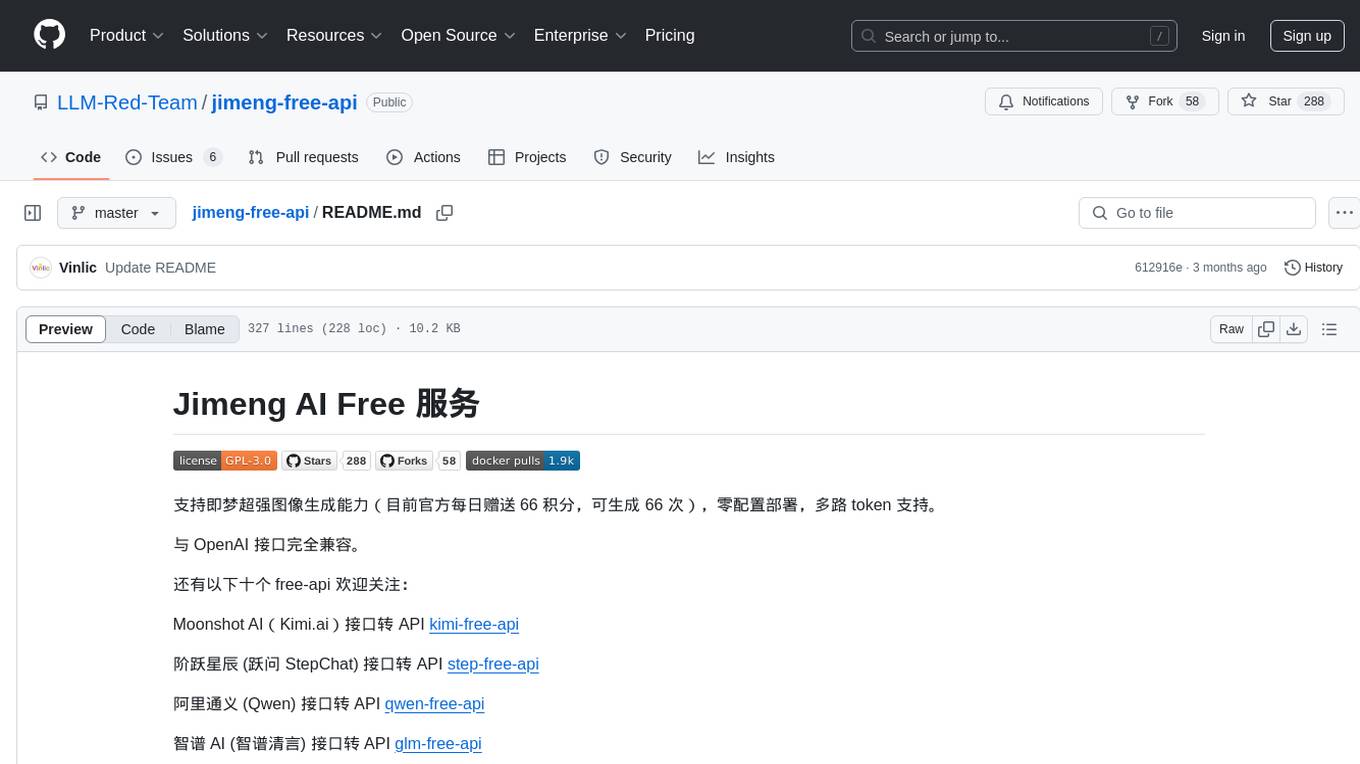

jimeng-free-api

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

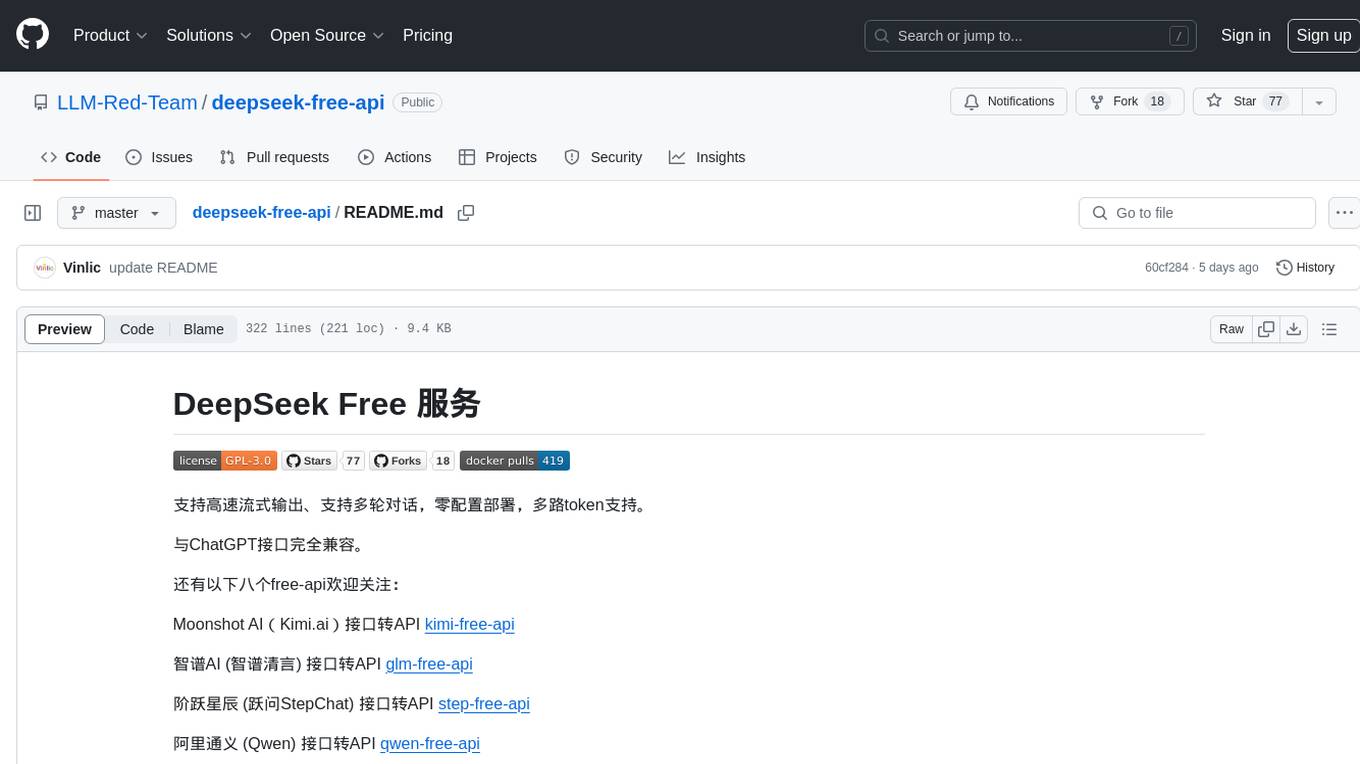

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

zeroclaw

ZeroClaw is a fast, small, and fully autonomous AI assistant infrastructure built with Rust. It features a lean runtime, cost-efficient deployment, fast cold starts, and a portable architecture. It is secure by design, fully swappable, and supports OpenAI-compatible provider support. The tool is designed for low-cost boards and small cloud instances, with a memory footprint of less than 5MB. It is suitable for tasks like deploying AI assistants, swapping providers/channels/tools, and pluggable everything.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

mcp-hub

MCP Hub is a centralized manager for Model Context Protocol (MCP) servers, offering dynamic server management and monitoring, REST API for tool execution and resource access, MCP Server marketplace integration, real-time server status tracking, client connection management, and process lifecycle handling. It acts as a central management server connecting to and managing multiple MCP servers, providing unified API endpoints for client access, handling server lifecycle and health monitoring, and routing requests between clients and MCP servers.

For similar tasks

rag

RAG with txtai is a Retrieval Augmented Generation (RAG) Streamlit application that helps generate factually correct content by limiting the context in which a Large Language Model (LLM) can generate answers. It supports two categories of RAG: Vector RAG, where context is supplied via a vector search query, and Graph RAG, where context is supplied via a graph path traversal query. The application allows users to run queries, add data to the index, and configure various parameters to control its behavior.

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

llm-deploy

LLM-Deploy focuses on the theory and practice of model/LLM reasoning and deployment, aiming to be your partner in mastering the art of LLM reasoning and deployment. Whether you are a newcomer to this field or a senior professional seeking to deepen your skills, you can find the key path to successfully deploy large language models here. The project covers reasoning and deployment theories, model and service optimization practices, and outputs from experienced engineers. It serves as a valuable resource for algorithm engineers and individuals interested in reasoning deployment.

lightspeed-service

OpenShift LightSpeed (OLS) is an AI powered assistant that runs on OpenShift and provides answers to product questions using backend LLM services. It supports various LLM providers such as OpenAI, Azure OpenAI, OpenShift AI, RHEL AI, and Watsonx. Users can configure the service, manage API keys securely, and deploy it locally or on OpenShift. The project structure includes REST API handlers, configuration loader, LLM providers registry, and more. Additional tools include generating OpenAPI schema, requirements.txt file, and uploading artifacts to an S3 bucket. The project is open source under the Apache 2.0 License.

chatgpt-adapter

ChatGPT-Adapter is an interface service that integrates various free services together. It provides a unified interface specification and integrates services like Bing, Claude-2, Gemini. Users can start the service by running the linux-server script and set proxies if needed. The tool offers model lists for different adapters, completion dialogues, authorization methods for different services like Claude, Bing, Gemini, Coze, and Lmsys. Additionally, it provides a free drawing interface with options like coze.dall-e-3, sd.dall-e-3, xl.dall-e-3, pg.dall-e-3 based on user-provided Authorization keys. The tool also supports special flags for enhanced functionality.

nosia

Nosia is a platform that allows users to run an AI model on their own data. It is designed to be easy to install and use. Users can follow the provided guides for quickstart, API usage, upgrading, starting, stopping, and troubleshooting. The platform supports custom installations with options for remote Ollama instances, custom completion models, and custom embeddings models. Advanced installation instructions are also available for macOS with a Debian or Ubuntu VM setup. Users can access the platform at 'https://nosia.localhost' and troubleshoot any issues by checking logs and job statuses.

xiaozhi-client

Xiaozhi Client is a tool that supports integration with Xiaozhi official servers, acts as a regular MCP Server integrated into various clients, allows configuration of multiple Xiaozhi access points for shared MCP configuration, aggregates multiple MCP Servers in a standard way, dynamically controls MCP Server tool visibility, supports local deployment of open-source server integration, provides web-based visual configuration allowing customization of IP and port, integrates ModelScope remote MCP services, creates Xiaozhi Client projects through templates, and supports running in the background.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.