Chat-Style-Bot

Chat-Style-Bot是一个聊天风格模仿大语言模型,通过分析和学习微信聊天记录,可模仿你的说话风格(口头禅等),并可接入微信和你的朋友们自动聊天。Chat-Style-Bot is a chat style imitating large language model. By analyzing and learning WeChat chat records, it can imitate your speaking style (mantra, etc.), and can connect to WeChat to automatically chat with your friends.

Stars: 68

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

README:

请选择您使用的语言 / Please select the language you use: [ 中文 | English ]

Chat-Style-Bot是一个专门用于模仿指定人物聊天风格的智能聊天机器人。通过分析和学习微信聊天记录,Chat-Style-Bot能够模仿你的独特说话风格(口头禅等),并可以接入微信成为你的替身。在朋友交流等日常对话场景中,Chat-Style-Bot能提供个性化且自然的互动体验。

聊天风格模仿机器人接入微信效果展示

[!IMPORTANT] 此步骤为必需。

git clone https://github.com/Chain-Mao/Chat-Style-Bot.git

conda create -n chat-style-bot python=3.10

conda activate chat-style-bot

cd Chat-Style-Bot

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt[!TIP] pytorch的安装和本机的cuda版本相关,可通过

nvcc -V确定cuda版本。遇到包冲突时,可使用

pip install --no-deps -e .解决。

此处以微信为例,如果您想尝试其他聊天软件的数据,请将处理后的数据格式和本项目保持一致。

请先在Windows11或Windows10系统电脑上下载微信聊天导出工具 Memotrace。

在解析数据前,建议先将手机聊天迁移到电脑上,以扩充数据的数量。具体的数据解析过程请移步至 Memotrace使用教程。

数据解析完成后,点击“数据” -> “导出聊天数据(全部)” -> “CSV”,已导出CSV格式的全部聊天数据。

得到微信聊天CSV文件后,需要将其转换为更适合用于微调的JSON数据,您可以直接通过以下命令完成。

其中input_csv参数表示您导出的CSV文件地址,output_json表示JSON文件保存路径,您的JSON文件存储路径应设为data/chat_records.json。

python scripts/preprocess.py --input_csv data/messages.csv --output_json data/chat_records.json转换后的内容应是如下格式,data/example.json 中提供了一些示范数据。

[

{

"instruction": "人类指令(必填)",

"input": "人类输入(选填)",

"output": "模型回答(必填)",

"system": "系统提示词(选填)",

"history": [

["第一轮指令(选填)", "第一轮回答(选填)"],

["第二轮指令(选填)", "第二轮回答(选填)"]

]

}

]您可以根据需要填写可选数据 input、system和history,这三个参数非必需,保持为空也没有问题。

可选数据说明

在指令监督微调时,instruction 列对应的内容会与 input 列对应的内容拼接后作为人类指令,即人类指令为 instruction\ninput。而 output 列对应的内容为模型回答。

如果指定,system 列对应的内容将被作为系统提示词。

history 列是由多个字符串二元组构成的列表,分别代表历史消息中每轮对话的指令和回答。注意在指令监督微调时,历史消息中的回答内容也会被用于模型学习。

为了实现更好的风格模仿效果,你可以加入身份认证相关的标签,辅助模型理解自己和开发者的身份,标签包括机器人的姓名和开发人员的姓名。

身份认证数据在 data/identity.json,示例如下:

[

{

"instruction": "你是谁?",

"input": "",

"output": "您好,我是 {{name}},一个由 {{author}} 发明的人工智能助手。我可以回答各种问题,提供实用的建议和帮助,帮助用户完成各种任务。"

}

]您可以通过执行以下命令将标签替换成您需要的信息,其中name表示模型名称,author表示开发人员,data/identity.json表示身份标签文件路径。

python scripts/id_tag.py --name ZhangSan --author LiSi --file_path data/identity.json如果您不需要身份认证,可以在 如config\train\llama3_lora_sft_ds3.yaml 等模型配置文件中更改dataset条目,仅保留chat_records即可。

本项目支持Llama3、GLM4、Qwen2等当前主流模型。

| 模型名 | 模型大小 | 下载地址 |

|---|---|---|

| GLM-4-9B-Chat | 9B | https://huggingface.co/THUDM/glm-4-9b-chat |

| LLaMA-3-8B | 8B | https://huggingface.co/meta-llama/Meta-Llama-3-8B |

| Llama3-8B-Chinese-Chat | 8B | https://huggingface.co/shenzhi-wang/Llama3-8B-Chinese-Chat |

| Qwen-2 | 7B | https://huggingface.co/Qwen/Qwen2-7B-Instruct |

[!NOTE] Meta发布的LLaMA-3-8B模型没有中文问答的能力,如果您用中文数据微调,请使用Llama3-8B-Chinese-Chat模型。

上述几个模型Lora方法微调下约占用16G-20G显存,最少需要一张3090或4090显卡,全参数微调需要60G以上显存,更少的显存资源请使用QLora的方法。

我们推荐使用下述命令下载Huggingface上的模型。resume-download 后添加模型在Huggingface上的上传者和模型名称。下载模型文件并保存到指定位置时,需要添加 local-dir 参数,此时将文件保存至当前目录下,如:

huggingface-cli download --resume-download Qwen/Qwen2-7B-Instruct --local-dir ./Qwen2-7B-Instruct如果部分模型的下载需要登录确认,可以使用命令 huggingface-cli login 登录您的Huggingface账户。

如果您在中国内地,无法访问Huggingface,请在下载模型前在命令行执行以下命令,从Huggingface国内镜像源下载文件。

export HF_ENDPOINT=https://hf-mirror.com 除了Huggingface,中国内地的用户也可以在魔搭社区查看并下载模型。

llamafactory-cli train config/train/llama3_lora_sft.yamlFORCE_TORCHRUN=1 llamafactory-cli config/train/llama3_lora_sft_ds3.yamlllamafactory-cli train config/train/llama3_qlora_sft.yamlFORCE_TORCHRUN=1 llamafactory-cli train config/train/llama3_full_sft_ds3.yaml配置参数介绍

.yaml文件中 model_name_or_path 后填写模型路径,template 表示不同模型的Prompt模板格式,可填llama3、glm4或qwen。

QLora方法 quantization_bit 参数可选 4/8 比特量化。

config文件夹中Llama3文件较全,若没有其它模型对应的配置文件,可仿照已有Llama3的配置文件格式新建即可。

若显存不足:

- 可调节截断长度 `cutoff_len`,微信聊天数据一般较短,设为256即可。

- 可减小 `per_device_train_batch_size`,最小为1,以缓解显存压力。

llamafactory-cli export config/merge_lora/llama3_lora_sft.yaml调用未合并的原模型和 Lora 适配器

llamafactory-cli chat config/inference/llama3_lora_sft.yaml或 直接调用合并后的模型

llamafactory-cli chat config/inference/llama3.yamlllamafactory-cli webchat config/inference/llama3_lora_sft.yamlllamafactory-cli api config/inference/llama3_lora_sft.yaml[!IMPORTANT]

为了账号的安全起见,建议使用微信小号扫码登录,微信必须绑定银行卡才能使用。

为了配置本地模型和微信间的接口,您应该先在config/wechat/settings.json配置模型路径。

在三种模型配置中找到您训练的对应模型,将原始模型和Lora适配器路径分别填入该配置的 model_name_or_path 和 adapter_name_or_path。若不需要加载Lora适配器,则 adapter_name_or_path 为空即可。若是全参数微调,则将 finetuning_type 改为 full。

python scripts/api_service.py --model_name llama3

python scripts/wechat.py第一条命令中 model_name 后超参数可以选llama3、qwen2或glm4。执行完第一条命令后,新开一个终端执行第二条命令,扫描终端显示的二维码即可登录。可以让别人和这个号的机器人聊天,或者在群聊中 @群聊机器人 互相Battle。

二维码刷新过快问题解决

这是itchat包的一个Bug,首先通过命令 pip show itchat-uos 先找到itchat安装路径。

找到 xxx/site-packages/itchat/components/login.py 的 login() 函数中,在进入 while not isLoggedIn 循环前增加一个time.sleep(15)。

到此为止,如果一切顺利,您将会得到和您说话风格一样的聊天机器人,并能够在微信上对话自如。

如果模型的回答开始天马行空,大概率是训练轮数太多导致过拟合,可以尝试使用训练轮数少一些的checkpoint加载模型。

下面三种方法非必须

如果您希望进一步优化模型,使模型的聊天风格和您更加对齐。您可以在和机器人聊天过程中,对模型的输出进行反馈,并将反馈结果记录在偏好数据集中,使用DPO和KTO等优化策略进行进一步微调。

llamafactory-cli train config/train/llama3_lora_dpo.yamlDPO 优化的数据格式如下,其中 conversations 表示提问问题,choesn 表示您更倾向的回答,rejected 表示您更不倾向的回答,具体示例参考 data\dpo_zh_demo.json。

{

"conversations": [

{

"from": "human",

"value": "最近怎么样,bro?"

}

],

"choesn": {

"from": "gpt",

"value": "还不错,bro,你最近怎么样?"

},

"rejected": {

"from": "gpt",

"value": "挺好的"

}

}llamafactory-cli train config/train/llama3_lora_kto.yamlKTO 优化的数据格式如下,其中 user 表示问题,assistant 表示回答,您满意的回答标记为 true,您不满意的回答标记为 false,具体示例参考 data\kto_en_demo.json。

{

"messages": [

{

"content": "最近怎么样,bro?",

"role": "user"

},

{

"content": "还不错,bro,你最近怎么样?",

"role": "assistant"

}

],

"label": true

},

{

"messages": [

{

"content": "最近怎么样,bro?",

"role": "user"

},

{

"content": "挺好的",

"role": "assistant"

}

],

"label": false

}[!TIP] 我们在微信接口中集成了人类偏好优化策略,便于您在微信部署过程中实时收集偏好数据,并将其用于后续偏好优化微调训练。

对于 DPO算法,机器人会有概率触发两个回答,您可以打出

1或者2以选择一个更符合您聊天风格的回答,数据将被记录在data/dpo_records.json。对于 KTO算法,您可以在机器人的任意回答后打出

不错或者不好,数据将被记录在data/kto_records.json。

如果您的训练数据为纯文本而非问答形式的数据,则无法用指令监督微调的方式,这时则需要通过增量预训练进行无监督学习。数据格式要求如下:

{

"text": "Be sure to tune in and watch Donald Trump on Late Night with David Letterman as he presents the Top Ten List tonight!"

},

{

"text": "Donald Trump will be appearing on The View tomorrow morning to discuss Celebrity Apprentice and his new book Think Like A Champion!"

}本项目提供了预处理后的名人普通文本数据,如特朗普和马斯克发布的推特内容。您可以通过以下命令进行增量预训练。

llamafactory-cli train config/train/llama3_lora_pretrain.yaml安装依赖问题

如果 mpi4py 包安装出问题,请用 conda install mpi4py 进行安装。

如果遇到 weight must be 2-D 问题,使用 pip install -e ".[torch,metrics]"解决依赖冲突。

如果需要导入自己的数据集,您可以在数据集配置文件data\dataset_info.json中添加自定义的数据集,并在config中.yaml模型配置文件的dataset条目中添加数据集名称。

为了实现各模型与历史聊天数据的连接,我们接入了 LlamaIndex 并提供了教程案例scripts\rag.py,旨在帮助用户利用 LlamaIndex 与 llama3、qwen2、glm4 等模型快速部署检索增强生成(RAG)技术。结合 RAG 后的模型会进一步提升风格模仿能力和细节问题的准确性。

在案例中,我们需要设置语言模型、向量模型和聊天记录的路径,并执行以下命令:

python scripts\rag.py --model llama3对于向量模型,您可以使用bge-base-en-v1.5模型来检索英文文档,下载bge-base-zh-v1.5模型以检索中文文档。根据您的计算资源,您还可以选择bge-large或bge-small作为向量模型,或调整上下文窗口大小或文本块大小。

如果您对我们的代码有任何疑问或在实验过程中遇到难题,请随时发起Issues或发送电子邮件至[email protected]。

- [x] 根据个人微信聊天历史数据,训练个人风格模仿机器人

- [x] 支持增量预训练功能,实现对普通文本的无监督学习

- [x] 制作清洗名人文本数据集,训练名人风格模仿机器人

- [x] 支持人类偏好优化策略,在聊天过程中持续学习

- [x] 支持检索增强生成RAG,从聊天记录中检索有效信息

本仓库的代码依照 Apache-2.0 协议开源。

使用模型权重时,请遵循对应的模型协议:GLM4 / LLaMA-3 / Qwen。

本项目受益于 LLaMA-Factory 和 WeChatMsg。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Chat-Style-Bot

Similar Open Source Tools

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

huge-ai-search

Huge AI Search MCP Server integrates Google AI Mode search into clients like Cursor, Claude Code, and Codex, supporting continuous follow-up questions and source links. It allows AI clients to directly call 'huge-ai-search' for online searches, providing AI summary results and source links. The tool supports text and image searches, with the ability to ask follow-up questions in the same session. It requires Microsoft Edge for installation and supports various IDEs like VS Code. The tool can be used for tasks such as searching for specific information, asking detailed questions, and avoiding common pitfalls in development tasks.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

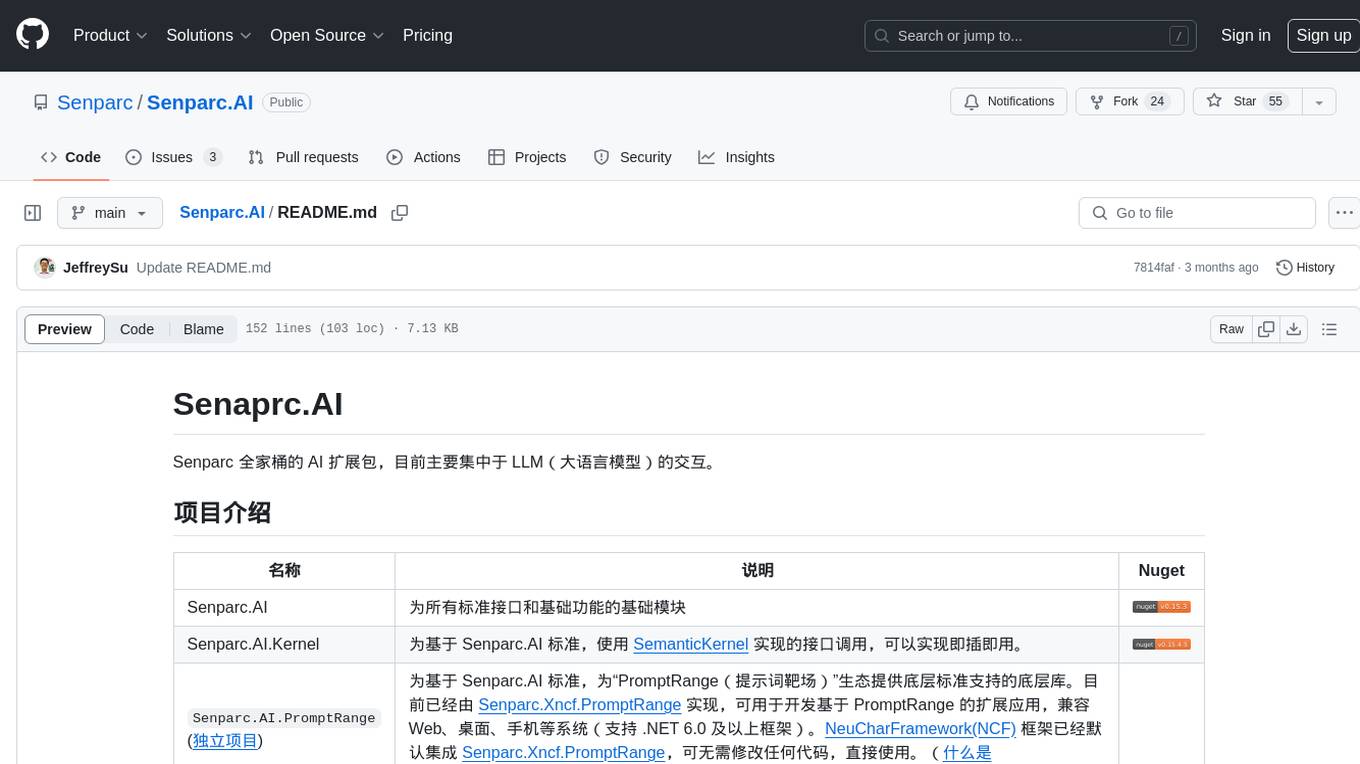

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

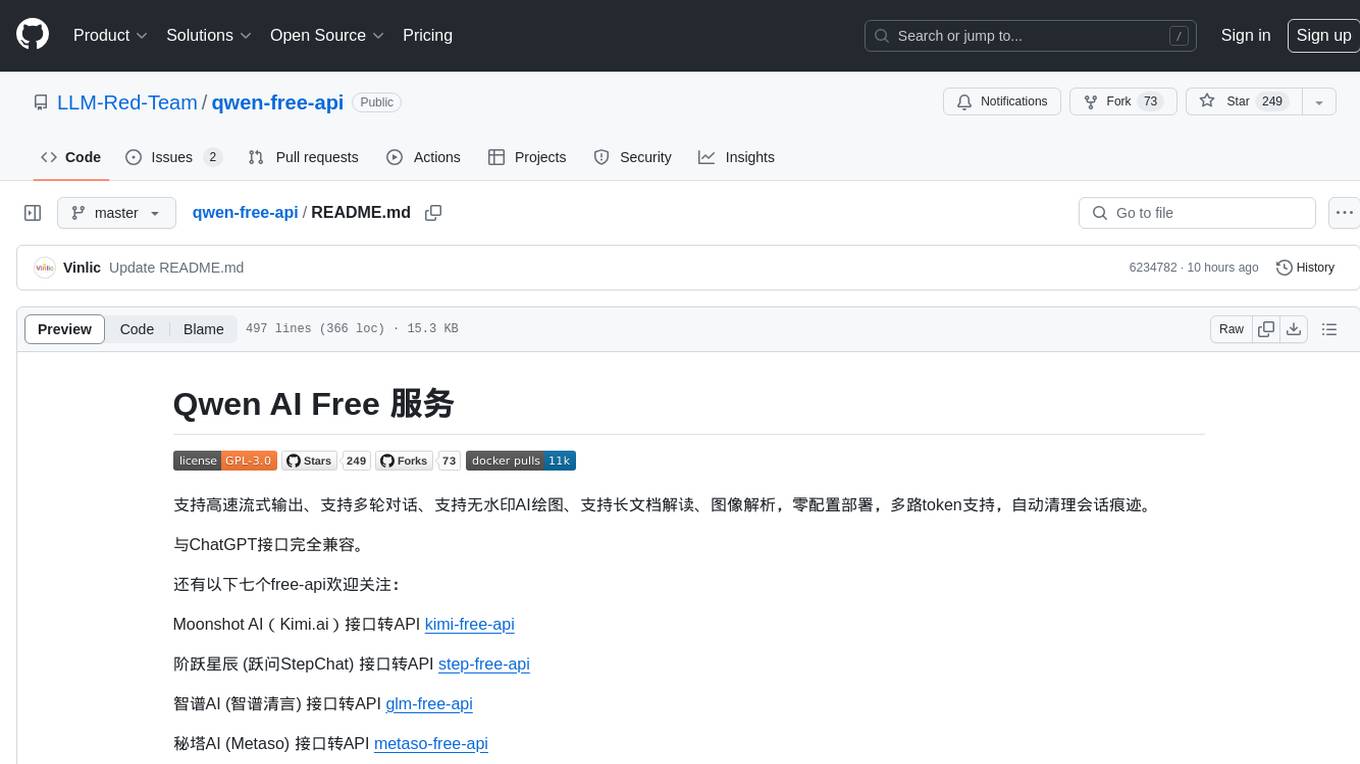

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

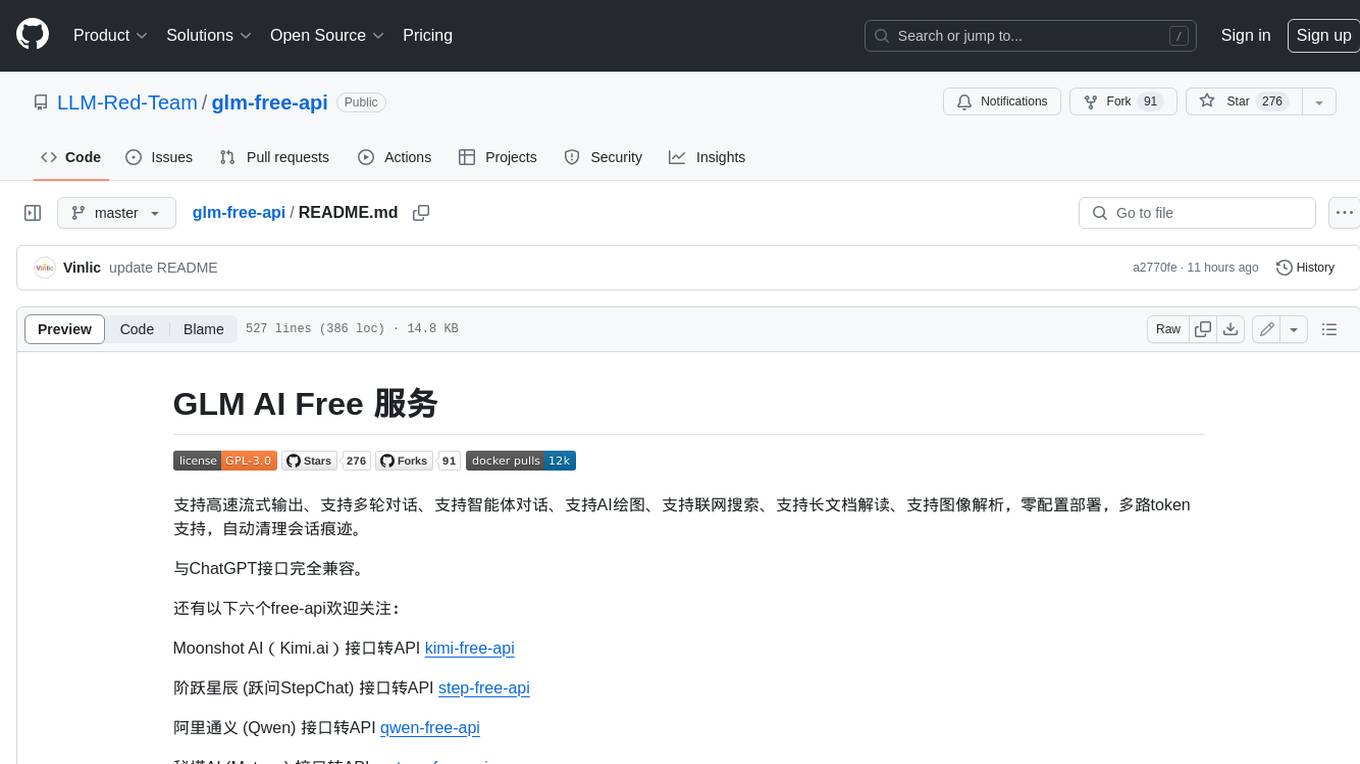

glm-free-api

GLM AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, image parsing support. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

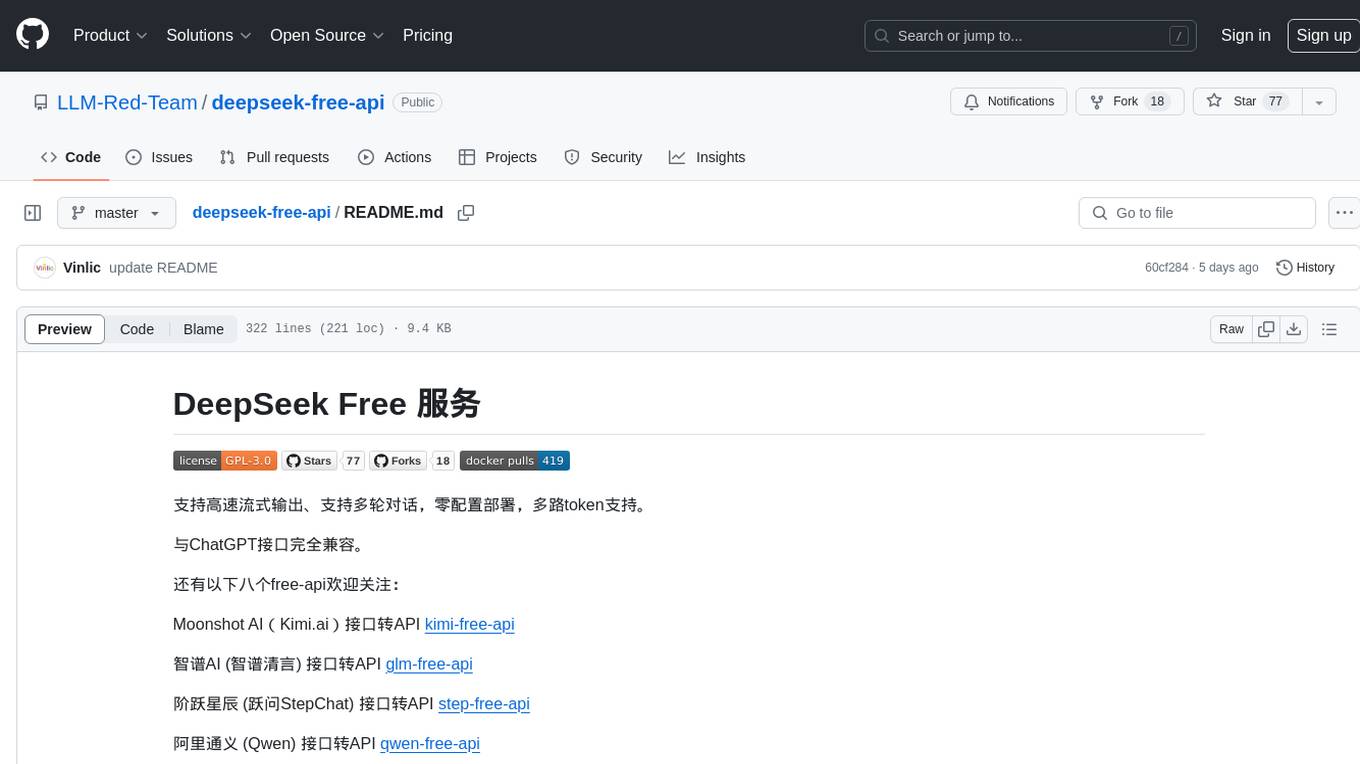

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

EasyAIVtuber

EasyAIVtuber is a tool designed to animate 2D waifus by providing features like automatic idle actions, speaking animations, head nodding, singing animations, and sleeping mode. It also offers API endpoints and a web UI for interaction. The tool requires dependencies like torch and pre-trained models for optimal performance. Users can easily test the tool using OBS and UnityCapture, with options to customize character input, output size, simplification level, webcam output, model selection, port configuration, sleep interval, and movement extension. The tool also provides an API using Flask for actions like speaking based on audio, rhythmic movements, singing based on music and voice, stopping current actions, and changing images.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

kimi-free-api

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

jimeng-free-api

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

newapi-ai-check-in

The newapi.ai-check-in repository is designed for automatically signing in with multiple accounts on public welfare websites. It supports various features such as single/multiple account automatic check-ins, multiple robot notifications, Linux.do and GitHub login authentication, and Cloudflare bypass. Users can fork the repository, set up GitHub environment secrets, configure multiple account formats, enable GitHub Actions, and test the check-in process manually. The script runs every 8 hours and users can trigger manual check-ins at any time. Notifications can be enabled through email, DingTalk, Feishu, WeChat Work, PushPlus, ServerChan, and Telegram bots. To prevent automatic disabling of Actions due to inactivity, users can set up a trigger PAT in the GitHub settings. The repository also provides troubleshooting tips for failed check-ins and instructions for setting up a local development environment.

For similar tasks

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

ChatLab

ChatLab is a free, open-source, and local-first application dedicated to analyzing chat records. Through an AI Agent and a flexible SQL engine, you can freely dissect, query, and even reconstruct your social data. It provides ultimate performance, privacy protection, an intelligent AI Agent, multi-dimensional data visualization, and format standardization. The tool supports chat record analysis for various platforms like LINE, WeChat, QQ, WhatsApp, Instagram, and Discord. Users can export chat records, troubleshoot, and access standardized format specifications. The system architecture includes Electron Main Process, Worker and Data Pipeline, and Rendering Process. Local development setup steps are provided for Node.js environment. Contributions are welcome following specific guidelines. Users are advised to read the Privacy Policy & User Agreement before using the software. The tool is licensed under AGPL-3.0 License.

emeltal

Emeltal is a local ML voice chat tool that uses high-end models to provide a self-contained, user-friendly out-of-the-box experience. It offers a hand-picked list of proven open-source high-performance models, aiming to provide the best model for each category/size combination. Emeltal heavily relies on the llama.cpp for LLM processing, and whisper.cpp for voice recognition. Text rendering uses Ink to convert between Markdown and HTML. It uses PopTimer for debouncing things. Emeltal is released under the terms of the MIT license, and all model data which is downloaded locally by the app comes from HuggingFace, and use of the models and data is subject to the respective license of each specific model.

jiwu-mall-chat-tauri

Jiwu Chat Tauri APP is a desktop chat application based on Nuxt3 + Tauri + Element Plus framework. It provides a beautiful user interface with integrated chat and social functions. It also supports AI shopping chat and global dark mode. Users can engage in real-time chat, share updates, and interact with AI customer service through this application.

dinopal

DinoPal is an AI voice assistant residing in the Mac menu bar, offering real-time voice and video chat, screen sharing, online search, and multilingual support. It provides various AI assistants with unique strengths and characteristics to meet different conversational needs. Users can easily install DinoPal and access different communication modes, with a call time limit of 30 minutes. User feedback can be shared in the Discord community. DinoPal is powered by Google Gemini & Pipecat.

KouriChat

KouriChat is a project that seamlessly integrates virtual and real interactions, providing eternal gentle bonds. It offers features like WeChat integration, immersive role-playing, intelligent conversation segmentation, emotion-based emojis, image generation, image recognition, voice messages, and more. The project is focused on technical research and learning exchanges, with a strong emphasis on ethical and legal guidelines. Users are required to take full responsibility for their actions, especially minors who should use the tool under supervision. The project architecture includes avatar configurations, data storage, handlers, AI service interfaces, a web UI, and utility libraries.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.