jimeng-free-api

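🚀 Reverse-engineered Jimeng API (specialty: top-tier image generation), with zero-configuration deployment and multi-token support. For testing only; for commercial use, please go to the official open platform.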

Stars: 288

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

README:

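Supports Jimeng's powerful image generation (the official service currently grants 66 free credits per day, enough for 66 generations), with zero-configuration deployment and multi-token support.

Fully compatible with the OpenAI interface.

The following ten free-api projects are also worth a look:

Moonshot AI (Kimi.ai) interface to API: kimi-free-api

StepFun (StepChat) interface to API: step-free-api

Alibaba Tongyi (Qwen) interface to API: qwen-free-api

Zhipu AI (ChatGLM) interface to API: glm-free-api

Metaso AI interface to API: metaso-free-api

ByteDance (Doubao) interface to API: doubao-free-api

iFlytek Spark interface to API: spark-free-api

MiniMax (Hailuo AI) interface to API: hailuo-free-api

DeepSeek interface to API: deepseek-free-api

Lingxin Intelligence (Emohaa) interface to API: emohaa-free-api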

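Reverse-engineered APIs are unstable. To avoid the risk of being banned, use the official Jimeng AI site at https://jimeng.jianying.com/ instead.

This organization and its members do not accept any donations or payments. This project exists purely for research, exchange, and learning.

For personal use only. Do not offer it as a service to others or use it commercially, so as not to put load on the official service. Otherwise you bear the risk yourself.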

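Obtaining the sessionid from Jimeng

Open Jimeng and log in, press F12 to open the developer tools, and find the value of sessionid under Application > Cookies. This value is used as the Bearer Token in the Authorization header: Authorization: Bearer sessionid

You can supply the sessionid values of several accounts by joining them with commas: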

Authorization: Bearer sessionid1,sessionid2,sessionid3

The service picks one of them for each request.
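The comma-joined header described above can be sketched in Python as follows. The helper names `build_auth_header` and `pick_session_id` are hypothetical, and the random choice in `pick_session_id` is an assumption: the README only says that one sessionid is picked per request, not how.

```python
import random


def build_auth_header(session_ids):
    """Join one or more sessionid values into a single Bearer header."""
    cleaned = [s.strip() for s in session_ids if s and s.strip()]
    if not cleaned:
        raise ValueError("at least one sessionid is required")
    return {"Authorization": "Bearer " + ",".join(cleaned)}


def pick_session_id(authorization, rng=random):
    """Illustrative counterpart: split the header value and pick one token.

    Random selection is an assumption, not the project's documented behavior.
    """
    scheme, _, value = authorization.partition(" ")
    if scheme != "Bearer" or not value:
        raise ValueError("expected 'Bearer <sessionid>[,<sessionid>...]'")
    return rng.choice([token for token in value.split(",") if token])


headers = build_auth_header(["sessionid1", "sessionid2", "sessionid3"])
print(headers["Authorization"])  # Bearer sessionid1,sessionid2,sessionid3
```

Supplying several accounts this way spreads requests across their daily credit allowances.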

A cute panda comic: the panda sees a time machine called "Jimeng" on the ground and says, "It's fine if I borrow this for a bit, right?"

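Prepare a server with a public IP address and open port 8000.

Pull the image and start the service:

docker run -it -d --init --name jimeng-free-api -p 8000:8000 -e TZ=Asia/Shanghai vinlic/jimeng-free-api:latest

View the live service logs:

docker logs -f jimeng-free-api

Restart the service:

docker restart jimeng-free-api

Stop the service:

docker stop jimeng-free-api

Docker-compose configuration:

version: "3"

services:
  jimeng-free-api:
    container_name: jimeng-free-api
    image: vinlic/jimeng-free-api:latest
    restart: always
    ports:
      - "8000:8000"
    environment:
      - TZ=Asia/Shanghai

Note: some deployment regions may be unable to reach Jimeng. If the container logs show request timeouts or connection failures, deploy in a different region.

Note: container instances on free accounts stop automatically after a period of inactivity, which adds a delay of 50 seconds or more to the next request. See the Render container keep-alive guide.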

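- Fork this project to your GitHub account.

- Visit Render and sign in with your GitHub account.

- Build your Web Service (New+ -> Build and deploy from a Git repository -> Connect your forked project -> choose a deployment region -> choose the Free instance type -> Create Web Service).

- After the build finishes, copy the assigned domain and append the endpoint path to it to form the request URL.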
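The last step and the keep-alive note for Render can be sketched roughly as below. This is only an illustration under stated assumptions: the domain `my-app.onrender.com`, the helper names, and the 10-minute interval are all hypothetical, and the project's own keep-alive guide may recommend a different approach.

```python
import time
import urllib.error
import urllib.request


def service_url(domain, path="/"):
    """Join the domain assigned by the host with an endpoint path."""
    return "https://" + domain.strip("/") + "/" + path.lstrip("/")


def ping(url, timeout=60.0):
    """Return the HTTP status code, or None if the service is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        return exc.code  # the instance answered, even if with an error status
    except OSError:
        return None  # connection failure or timeout


def keep_alive(url, rounds, interval_seconds=600, sleep=time.sleep, pinger=ping):
    """Ping the service `rounds` times, pausing between pings."""
    statuses = []
    for i in range(rounds):
        statuses.append(pinger(url))
        if i < rounds - 1:
            sleep(interval_seconds)
    return statuses


# Hypothetical domain; replace it with the one assigned to your deployment.
print(service_url("my-app.onrender.com", "/v1/chat/completions"))
```

Calling `keep_alive(service_url("my-app.onrender.com"), rounds=6)` would send six pings ten minutes apart; a cron job or an external uptime monitor achieves the same effect without a long-running script.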

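Note: Vercel free accounts have a 10-second response timeout, but this API usually takes longer to respond, so you may receive 504 timeout errors from Vercel.

Make sure Node.js is installed first.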

npm i -g vercel --registry http://registry.npmmirror.com

vercel login

git clone https://github.com/LLM-Red-Team/jimeng-free-api

cd jimeng-free-api

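vercel --prod

Prepare a server with a public IP address and open port 8000.

Install Node.js and configure its environment variables first, and confirm that the node command is available.

Install dependencies:

npm i

Install PM2 for process supervision:

npm i -g pm2

Compile and build; the build is complete once the dist directory appears:

npm run build

Start the service:

pm2 start dist/index.js --name "jimeng-free-api"

View the live service logs:

pm2 logs jimeng-free-api

Restart the service:

pm2 reload jimeng-free-api

Stop the service:

pm2 stop jimeng-free-api

The following community-modified clients make connecting to the free-api projects faster and simpler, and support document and image upload.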

LobeChat, modified by Clivia: https://github.com/Yanyutin753/lobe-chat

ChatGPT Web, modified by 时光@: https://github.com/SuYxh/chatgpt-web-sea

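The OpenAI-compatible /v1/chat/completions endpoint is currently supported. You can connect with any client that is compatible with OpenAI or similar APIs, or through online services such as dify.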
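For readers without an OpenAI-style client at hand, here is a minimal standard-library sketch of a chat completions call. It assumes the service is reachable at `http://localhost:8000` (the port used in the deployment instructions); the helper names are hypothetical, and the request and response fields follow the chat completions interface this README documents.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # assumption: service running locally on port 8000


def build_chat_request(session_id, prompt, model="jimeng-2.1", stream=False, base_url=BASE_URL):
    """Build a POST request for /v1/chat/completions without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        base_url + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {session_id}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def first_message_content(response_body):
    """Extract the assistant message text from a chat.completion response."""
    return response_body["choices"][0]["message"]["content"]


def send(request, timeout=120.0):
    """Send the request and decode the JSON response (non-streaming only)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)
```

A call would then look like `first_message_content(send(build_chat_request("your-sessionid", "a cute panda comic")))`. The generous timeout reflects that image generation responses can take a while.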

Chat completions endpoint, compatible with OpenAI's chat-completions-api.

POST /v1/chat/completions

The request must set the Authorization header:

Authorization: Bearer [sessionid]

Request data:

{
  // jimeng-2.1 (default) / jimeng-2.0-pro / jimeng-2.0 / jimeng-1.4 / jimeng-xl-pro
  "model": "jimeng-2.1",
  "messages": [
    {
      "role": "user",
      "content": "少女祈祷中..."
    }
  ],
  // Set to true to use SSE streaming; defaults to false
  "stream": false
}

Response data:

{
  "id": "b400abe0-b4c3-11ef-b2eb-4175f5393bfd",
  "model": "jimeng-2.1",
  "object": "chat.completion",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "\n\n\n\n"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 1,
    "completion_tokens": 1,
    "total_tokens": 2
  },
  "created": 1733593810
}

Image generation endpoint, compatible with OpenAI's images-create-api.

POST /v1/images/generations

The request must set the Authorization header:

Authorization: Bearer [sessionid]

Request data:

{
  // jimeng-2.1 (default) / jimeng-2.0-pro / jimeng-2.0 / jimeng-1.4 / jimeng-xl-pro
  "model": "jimeng-2.1",
  // Prompt, required
  "prompt": "少女祈祷中...",
  // Negative prompt, defaults to an empty string
  "negativePrompt": "",
  // Image width, defaults to 1024
  "width": 1024,
  // Image height, defaults to 1024
  "height": 1024,
  // Level of detail, range 0-1, defaults to 0.5
  "sample_strength": 0.5
}

Response data:

{
  "created": 1733593745,
  "data": [
    {
      "url": "https://p9-heycan-hgt-sign.byteimg.com/tos-cn-i-3jr8j4ixpe/61bceb3afeb54c1c80ffdd598ac2f72d~tplv-3jr8j4ixpe-aigc_resize:0:0.jpeg?lk3s=43402efa&x-expires=1735344000&x-signature=DUY6jlx4zAXRYJeATyjZ3O6F1Pw%3D&format=.jpeg"
    },
    {
      "url": "https://p3-heycan-hgt-sign.byteimg.com/tos-cn-i-3jr8j4ixpe/e37ab3cd95854cd7b37fb697ea2cb4da~tplv-3jr8j4ixpe-aigc_resize:0:0.jpeg?lk3s=43402efa&x-expires=1735344000&x-signature=oKtY400tjZeydKMyPZufjt0Qpjs%3D&format=.jpeg"
    },
    {
      "url": "https://p9-heycan-hgt-sign.byteimg.com/tos-cn-i-3jr8j4ixpe/13841ff1c30940cf931eccc22405656b~tplv-3jr8j4ixpe-aigc_resize:0:0.jpeg?lk3s=43402efa&x-expires=1735344000&x-signature=4UffSRMmOeYoC0u%2B5igl9S%2BfYKs%3D&format=.jpeg"
    },
    {
      "url": "https://p6-heycan-hgt-sign.byteimg.com/tos-cn-i-3jr8j4ixpe/731c350244b745d5990e8931b79b7fe7~tplv-3jr8j4ixpe-aigc_resize:0:0.jpeg?lk3s=43402efa&x-expires=1735344000&x-signature=ywYjZQeP3t2yyvx6Wlud%2BCB28nU%3D&format=.jpeg"
    }
  ]
}
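The image generation request and response documented above can be handled with a few small helpers, sketched here in Python. The function names are hypothetical. Treating `x-expires` as a Unix timestamp is an assumption based on the shape of the sample URLs, not something the README states.

```python
from urllib.parse import parse_qs, urlparse


def build_image_payload(prompt, model="jimeng-2.1", negative_prompt="",
                        width=1024, height=1024, sample_strength=0.5):
    """Build the JSON body for POST /v1/images/generations."""
    if not prompt:
        raise ValueError("prompt is required")
    if not 0 <= sample_strength <= 1:
        raise ValueError("sample_strength must be between 0 and 1")
    return {
        "model": model,
        "prompt": prompt,
        "negativePrompt": negative_prompt,
        "width": width,
        "height": height,
        "sample_strength": sample_strength,
    }


def image_urls(response_body):
    """Collect the image URLs from the response's data array."""
    return [item["url"] for item in response_body.get("data", [])]


def url_expiry(url):
    """Return the x-expires query value as an int, or None if it is absent.

    Assumption: x-expires is a Unix timestamp after which the link stops working.
    """
    values = parse_qs(urlparse(url).query).get("x-expires")
    return int(values[0]) if values else None
```

Since the returned links appear to be signed and time-limited, downloading the images soon after generation is safer than storing the URLs.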

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for jimeng-free-api

Similar Open Source Tools

jimeng-free-api

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

glm-free-api

GLM AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, image parsing support. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

kimi-free-api

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

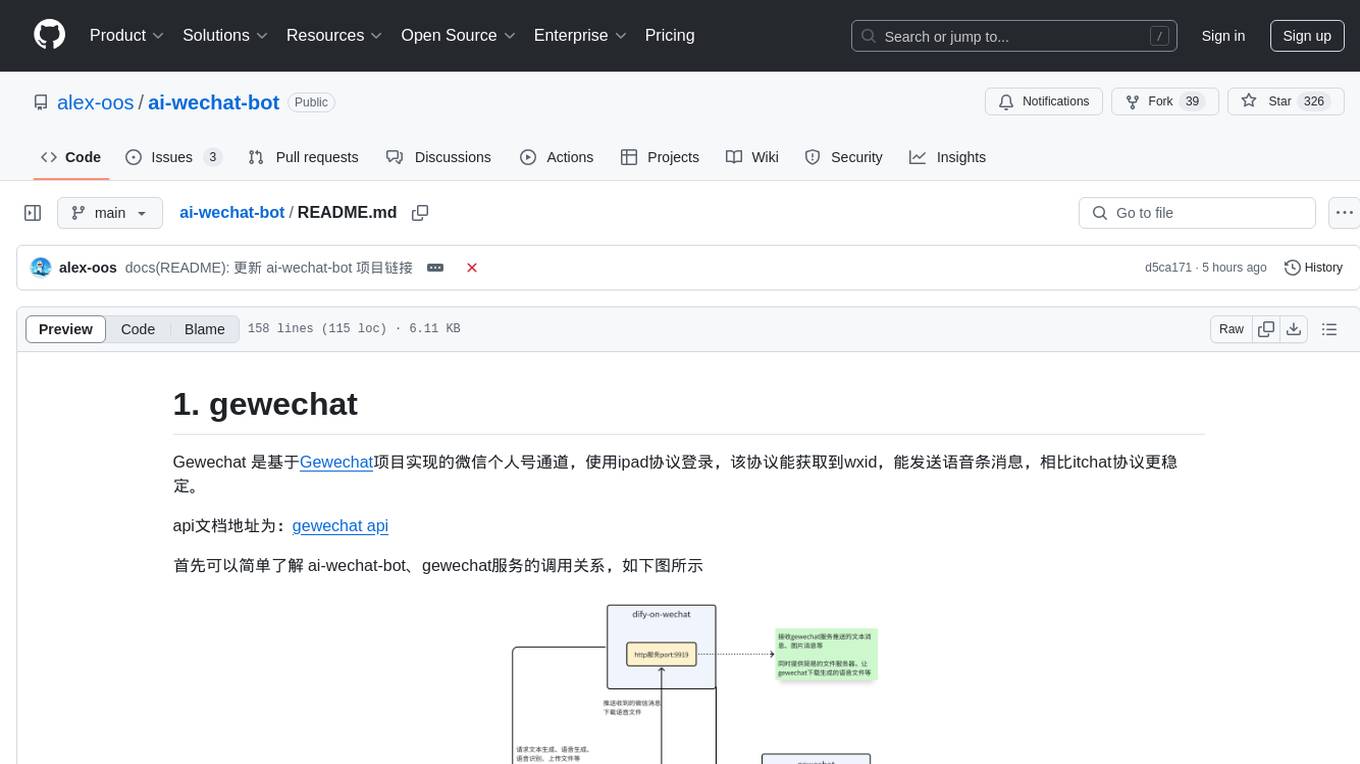

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

sparrow

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It seamlessly handles forms, invoices, receipts, and other unstructured data sources. Sparrow stands out with its modular architecture, offering independent services and pipelines all optimized for robust performance. One of the critical functionalities of Sparrow - pluggable architecture. You can easily integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. With Sparrow solution you get API, which helps to process and transform your data into structured output, ready to be integrated with custom workflows. Sparrow Agents - with Sparrow you can build independent LLM agents, and use API to invoke them from your system. **List of available agents:** * **llamaindex** - RAG pipeline with LlamaIndex for PDF processing * **vllamaindex** - RAG pipeline with LLamaIndex multimodal for image processing * **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing * **haystack** - RAG pipeline with Haystack for PDF processing * **fcall** - Function call pipeline * **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing * **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing * **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

EduChat

EduChat is a large-scale language model-based chatbot system designed for intelligent education by the EduNLP team at East China Normal University. The project focuses on developing a dialogue-based language model for the education vertical domain, integrating diverse education vertical domain data, and providing functions such as automatic question generation, homework correction, emotional support, course guidance, and college entrance examination consultation. The tool aims to serve teachers, students, and parents to achieve personalized, fair, and warm intelligent education.

ChatGLM3

ChatGLM3 is a conversational pretrained model jointly released by Zhipu AI and THU's KEG Lab. ChatGLM3-6B is the open-sourced model in the ChatGLM3 series. It inherits the advantages of its predecessors, such as fluent conversation and low deployment threshold. In addition, ChatGLM3-6B introduces the following features: 1. A stronger foundation model: ChatGLM3-6B's foundation model ChatGLM3-6B-Base employs more diverse training data, more sufficient training steps, and more reasonable training strategies. Evaluation on datasets from different perspectives, such as semantics, mathematics, reasoning, code, and knowledge, shows that ChatGLM3-6B-Base has the strongest performance among foundation models below 10B parameters. 2. More complete functional support: ChatGLM3-6B adopts a newly designed prompt format, which supports not only normal multi-turn dialogue, but also complex scenarios such as tool invocation (Function Call), code execution (Code Interpreter), and Agent tasks. 3. A more comprehensive open-source sequence: In addition to the dialogue model ChatGLM3-6B, the foundation model ChatGLM3-6B-Base, the long-text dialogue model ChatGLM3-6B-32K, and ChatGLM3-6B-128K, which further enhances the long-text comprehension ability, are also open-sourced. All the above weights are completely open to academic research and are also allowed for free commercial use after filling out a questionnaire.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

For similar tasks

jimeng-free-api

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

glm-free-api

GLM AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, image parsing support. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

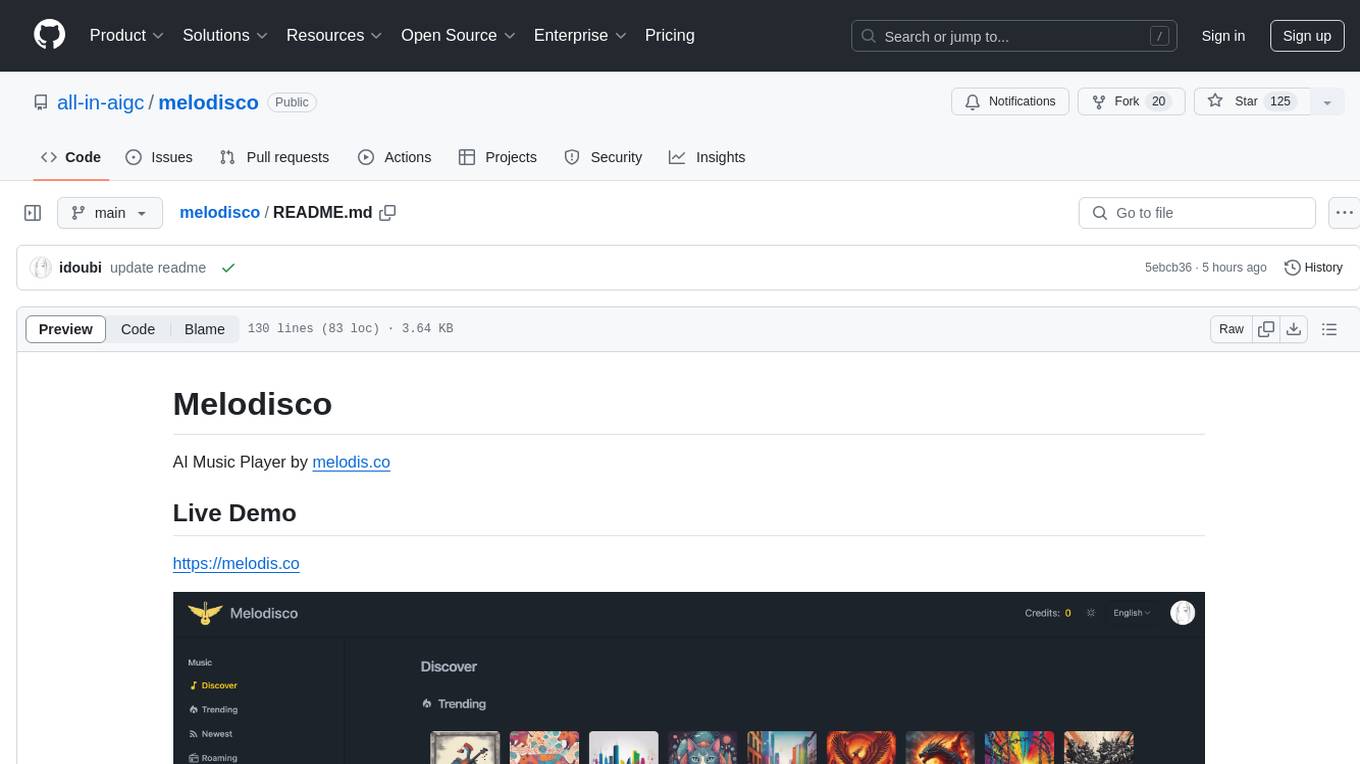

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.