tribe

Low code tool to rapidly build and coordinate multi-agent teams

Stars: 919

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

README:

Build a multi-agent team like this with simple drag and drop in minutes! 🤩

[!TIP] Prefer to write code instead? Check out Rojak — a Python library designed to orchestrate durable, fault-tolerant multi-agent workflows with ease!

[!WARNING] This project is NOT affiliated with any blockchain-related projects in any way. Be cautious of scammers falsely claiming association. Stay vigilant!

- Table of Contents

- What is Tribe?

- What are some use cases?

- Highlights

- How to get started

- Guides and concepts

- Contribution

- Release Notes

- License

[!WARNING] This project is currently under heavy development. Please be aware that significant changes may occur.

Have you heard the saying, 'Two minds are better than one'? That's true for agents too. Tribe leverages on the langgraph framework to let you customize and coordinate teams of agents easily. By splitting up tough tasks among agents that are good at different things, each one can focus on what it does best. This makes solving problems faster and better.

By teaming up, agents can take on more complex tasks. Here are a few examples of what they can do together:

- ⚽️ Footbal analysis: Imagine a team of agents where one scours the web for the latest Premier League news, and another analyzes the data to write insightful reports on each team's performance in the new season.

- 🏝️ Trip Planning: For planning your next vacation, one agent could recommend the best local eateries, while another finds the top-rated hotels for you. This team makes sure every part of your trip is covered.

- 👩💻 Customer Service: A customer service team where one agent handles IT issues, another manages complaints, and a third takes care of product inquiries. Each agent specializes in a different area, making the service faster and more efficient.

and many many more!

- Persistent conversations: Save and maintain chat histories, allowing you to continue conversations.

- Observability: Monitor and track your agents’ performance and outputs in real-time using LangSmith to ensure they operate efficiently.

- Tool Calling: Enable your agents to utilize external tools and APIs.

- Retrieval Augmented Generation: Enable your agents to reason with your internal knowledge base.

- Human-In-The-Loop: Enable human approval before tool calling.

- Open Source Models: Use open-source LLM models such as llama, Gemma and Phi.

- Integrate Tribe with external application: Use Tribe’s public API endpoints to interact with your teams.

- Easy Deployment: Deploy Tribe effortlessly using Docker.

- Multi-Tenancy: Manage and support multiple users and teams.

Before deploying it, make sure you change at least the values for:

SECRET_KEYFIRST_SUPERUSER_PASSWORDPOSTGRES_PASSWORD

You can (and should) pass these as environment variables from secrets.

Some environment variables in the .env file have a default value of changethis.

You have to change them with a secret key, to generate secret keys you can run the following command:

python -c "import secrets; print(secrets.token_urlsafe(32))"Copy the content and use that as password / secret key. And run that again to generate another secure key.

Get up and started within minutes on your local machine.

Deploy Tribe on your remote server.

In a sequential workflow, your agents are arranged in an orderly sequence and execute tasks one after another. Each task can be dependent on the previous task. This is useful if you want to tasks to be completed one after another in a deterministic sequence.

Use this if:

- Your project has clear, step-by-step tasks.

- The outcome of one task influences the next.

- You prefer a straightforward and predictable execution order.

- You need to ensure tasks are performed in a specific order.

In a hierarchical workflow, your agents are organised into a team-like structure comprising of 'team leader', 'team members' and even other 'sub-team leaders'. The team leader breaks down the task into smaller tasks and delegate them to its team members. After the team members complete these tasks, their responses will be passed to the team leader who then chooses to return the response to the user or delegate more tasks.

Use this if:

- Your tasks are complex and multifaceted.

- You need specialized agents to handle different subtasks.

- Task delegation and re-evaluation are crucial for your workflow.

- You want flexibility in task management and adaptability to changes.

Skills are abilities that you can equip your agents with to interact with the world. For example, you can provide your agent with the skill to check the current weather condition or search the web for the latest news. By default, Tribe provides three skills:

- duckduckgo-search: Performs web searches.

- wikipedia: Searches Wikipedia for information.

- yahoo-finance: Retrieves information from Yahoo Finance News.

You will likely want to create custom skills, which can be done in two ways: by using function definitions for simple HTTP requests or by writing custom skills in the codebase.

If your skill involves performing an HTTP request to fetch or update data, using skill definitions is the simplest approach. In Tribe, start by navigating to the 'Skills' tab and clicking the 'Add Skill' button. You will then be prompted to provide the skill definition, which instructs your agent on how to execute the specific skill. This definition should be structured as follows:

{

"url": "https://example.com",

"method": "GET",

"headers": {},

"type": "function",

"function": {

"name": "Your skill name",

"description": "Your skill description",

"parameters": {

"type": "object",

"properties": {

"param1": {

"type": "integer",

"description": "Description of the first parameter"

},

"param2": {

"type": "string",

"enum": ["option1"],

"description": "Description of the second parameter"

}

},

"required": ["param1", "param2"]

}

}

}| Key | Description |

|---|---|

url |

The endpoint URL for the API call. |

method |

The HTTP method used for the request. It can be GET, POST, PUT, PATCH, or DELETE. |

headers |

Any HTTP headers to include in the request. |

function |

Contains details about the skill: |

function > name |

The name of the skill. Follow these rules: only letters (a-z, A-Z), numbers (0-9), underscores (_), and hyphens (-) are allowed; must be between 1 and 64 characters long. |

function > description |

Describes the skill to inform the agent about its usage. |

function > parameters |

Details about the parameters the API accepts. |

properties > param |

The name of the query or body parameter. For GET methods, this will be a query parameter. For POST, PUT, PATCH, and DELETE, it will be in the request body. |

param > type |

Specifies the type of the parameter, which can be string, number, integer, or boolean. |

param > description |

Provides context about the parameter's purpose. |

param > enum |

Optionally, include an array to restrict the agent to select from predefined values. |

parameters > required |

Lists the parameters that are required, ensuring they are always included in the API request. |

For more intricate tasks that extend beyond simple HTTP requests, LangChain allows you to develop more advanced tools. You can integrate these tools into Tribe by adding them to the managed_skills dictionary. For a practical example, refer to the demo calculator tool. To learn how to create a LangChain tool, please consult their documentation.

After creating a new tool, restart the application to ensure the tool is properly loaded into the database. Likewise, if you need to remove a tool, simply delete it from the managed_skills dictionary and restart the application to ensure it is removed from the database. Do note that tools created this way are available to all users in your application.

RAG is a technique for augmenting your agents' knowledge with additional data. Agents can reason about a wide range of topics, but their knowledge is limited to public data up to the point in time they were trained on. If you want your agents to reason about private data, Tribe allows you to upload your data and select which data to include in your agent’s knowledge base. This enables your agents to reason with the selected data and allows you to create different agents with specialized knowledge.

By default, Tribe uses BAAI/bge-small-en-v1.5, which is a light and fast English embedding model that is better than OpenAI Ada-002. If your documents are multilingual or require image embedding, you may want to use another embedding model. You can easily do this by changing DENSE_EMBEDDING_MODEL in your .env file:

# See the list of supported models: https://qdrant.github.io/fastembed/examples/Supported_Models/

DENSE_EMBEDDING_MODEL=BAAI/bge-small-en-v1.5 # Change this[!WARNING] If your existing and new embedding models have different vector dimensions, you may need to recreate your Qdrant collection. You can delete the collection through the Qdrant Dashboard at http://qdrant.localhost/dashboard. Therefore, it is better to plan ahead which embedding model is most suitable for your workflows.

Open source models are becoming cheaper and easier to run, and some even match the performance of closed models. You might prefer using them for their privacy and cost benefits. If you are running Tribe locally and want to use open source models, I would recommend Ollama for its ease of use.

- Install Ollama: First, set up Ollama on your device. You can find the instructions in Ollama's repo.

- Download Models: Download your preferred models from Ollama

-

Configure your agents:

- Update the agent's provider to

ollama. - Paste the downloaded model's name (e.g.,

llama3.1:8b) into the model input field. - By default, Tribe will run on

http://host.docker.internal:11434, which maps tohttps://localhost:11434. This setup allows Tribe to communicate with the default Ollama host. If your setup uses a different host, specify the new host in the 'Base URL' input field.

- Update the agent's provider to

There are hundreds of open source models in Ollama's library suitable for different tasks. Here’s how to choose the right one for your use case:

-

Tool Calling Models: If you are planning to equip agents with specific skills, use models like

Llama3.1,Mistral Nemo,Firefunction V2, orCommand-R +and others that support tool calling. -

For Creative, Reasoning and other Tasks: You have more flexibility. You may stick to tool calling capable models or consider models like

gemma2orphi3.

If you’re not planning to use Ollama, you can still run open source models compatible with the OpenAI chat completions API.

Steps:

- Edit Your Agent: Select 'OpenAI' as your model provider.

- Specify Endpoint: Under 'Base URL', specify the model’s inference endpoint.

Log into Tribe using the email and password you set during the installation step.

Navigate to the 'Teams' page and click on 'Add Team'. Enter a name for your team and click 'Save'.

Create two additional team members by dragging the handle of the Team Leader node.

Update the first team member as shown.

Update the second team member as shown.

Go to the 'Chat' tab and send a question to your team to see how they respond.

Congratulations! You’ve successfully built and communicated with your first multi-agent team on Tribe.

Your team member can do more by providing it with a set of skills. Add a skill to your Foodie.

Now, when you ask your Foodie a question, it will search the web for more up-to-date information!

Create a new team and select the 'Sequential' workflow.

Drag and drop to create another team member below 'Worker0'.

Update the first team member as shown. Provide the 'wikipedia' skill to this team member.

Update the second team member as shown.

Go to the 'Chat' tab and send a question to your team to see how they respond. Notice that the Researcher will use Wikipedia to do its research. Very cool!

You can require your team members to wait for your approval before executing their skills. Add the ‘duckduckgo-search’ skill and select ‘Require approval’ in Researcher.

Now, before the Researcher executes its skills, it will ask for your approval. If the Researcher’s search isn’t what you wanted, reject the action and include an optional message to provide direction.

Once the Researcher adjusts the search to meet your requirements, you can approve the action.

The Researcher will then proceed to execute its skills as directed.

Tribe is open sourced and welcome contributions from the community! Check out our contribution guide to get started.

Some ways to contribute:

- Report bugs and issues.

- Enhance our documentation.

- Suggest or contribute new features or enhancements.

Check the file release-notes.md.

Tribe is licensed under the terms of the MIT license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for tribe

Similar Open Source Tools

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

SalesGPT

SalesGPT is an open-source AI agent designed for sales, utilizing context-awareness and LLMs to work across various communication channels like voice, email, and texting. It aims to enhance sales conversations by understanding the stage of the conversation and providing tools like product knowledge base to reduce errors. The agent can autonomously generate payment links, handle objections, and close sales. It also offers features like automated email communication, meeting scheduling, and integration with various LLMs for customization. SalesGPT is optimized for low latency in voice channels and ensures human supervision where necessary. The tool provides enterprise-grade security and supports LangSmith tracing for monitoring and evaluation of intelligent agents built on LLM frameworks.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord. The project roadmap is available to track progress, but users should exercise caution when executing LLM-generated code using 'exec'.

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

HackBot

HackBot is an AI-powered cybersecurity chatbot designed to provide accurate answers to cybersecurity-related queries, conduct code analysis, and scan analysis. It utilizes the Meta-LLama2 AI model through the 'LlamaCpp' library to respond coherently. The chatbot offers features like local AI/Runpod deployment support, cybersecurity chat assistance, interactive interface, clear output presentation, static code analysis, and vulnerability analysis. Users can interact with HackBot through a command-line interface and utilize it for various cybersecurity tasks.

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

EdgeChains

EdgeChains is an open-source chain-of-thought engineering framework tailored for Large Language Models (LLMs)- like OpenAI GPT, LLama2, Falcon, etc. - With a focus on enterprise-grade deployability and scalability. EdgeChains is specifically designed to **orchestrate** such applications. At EdgeChains, we take a unique approach to Generative AI - we think Generative AI is a deployment and configuration management challenge rather than a UI and library design pattern challenge. We build on top of a tech that has solved this problem in a different domain - Kubernetes Config Management - and bring that to Generative AI. Edgechains is built on top of jsonnet, originally built by Google based on their experience managing a vast amount of configuration code in the Borg infrastructure.

AI-Scientist

The AI Scientist is a comprehensive system for fully automatic scientific discovery, enabling Foundation Models to perform research independently. It aims to tackle the grand challenge of developing agents capable of conducting scientific research and discovering new knowledge. The tool generates papers on various topics using Large Language Models (LLMs) and provides a platform for exploring new research ideas. Users can create their own templates for specific areas of study and run experiments to generate papers. However, caution is advised as the codebase executes LLM-written code, which may pose risks such as the use of potentially dangerous packages and web access.

nagato-ai

Nagato-AI is an intuitive AI Agent library that supports multiple LLMs including OpenAI's GPT, Anthropic's Claude, Google's Gemini, and Groq LLMs. Users can create agents from these models and combine them to build an effective AI Agent system. The library is named after the powerful ninja Nagato from the anime Naruto, who can control multiple bodies with different abilities. Nagato-AI acts as a linchpin to summon and coordinate AI Agents for specific missions. It provides flexibility in programming and supports tools like Coordinator, Researcher, Critic agents, and HumanConfirmInputTool.

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

open-source-slack-ai

This repository provides a ready-to-run basic Slack AI solution that allows users to summarize threads and channels using OpenAI. Users can generate thread summaries, channel overviews, channel summaries since a specific time, and full channel summaries. The tool is powered by GPT-3.5-Turbo and an ensemble of NLP models. It requires Python 3.8 or higher, an OpenAI API key, Slack App with associated API tokens, Poetry package manager, and ngrok for local development. Users can customize channel and thread summaries, run tests with coverage using pytest, and contribute to the project for future enhancements.

SwiftSage

SwiftSage is a tool designed for conducting experiments in the field of machine learning and artificial intelligence. It provides a platform for researchers and developers to implement and test various algorithms and models. The tool is particularly useful for exploring new ideas and conducting experiments in a controlled environment. SwiftSage aims to streamline the process of developing and testing machine learning models, making it easier for users to iterate on their ideas and achieve better results. With its user-friendly interface and powerful features, SwiftSage is a valuable tool for anyone working in the field of AI and ML.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

devika

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software. Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance. Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

Tiger

Tiger is a community-driven project developing a reusable and integrated tool ecosystem for LLM Agent Revolution. It utilizes Upsonic for isolated tool storage, profiling, and automatic document generation. With Tiger, you can create a customized environment for your agents or leverage the robust and publicly maintained Tiger curated by the community itself.

For similar tasks

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

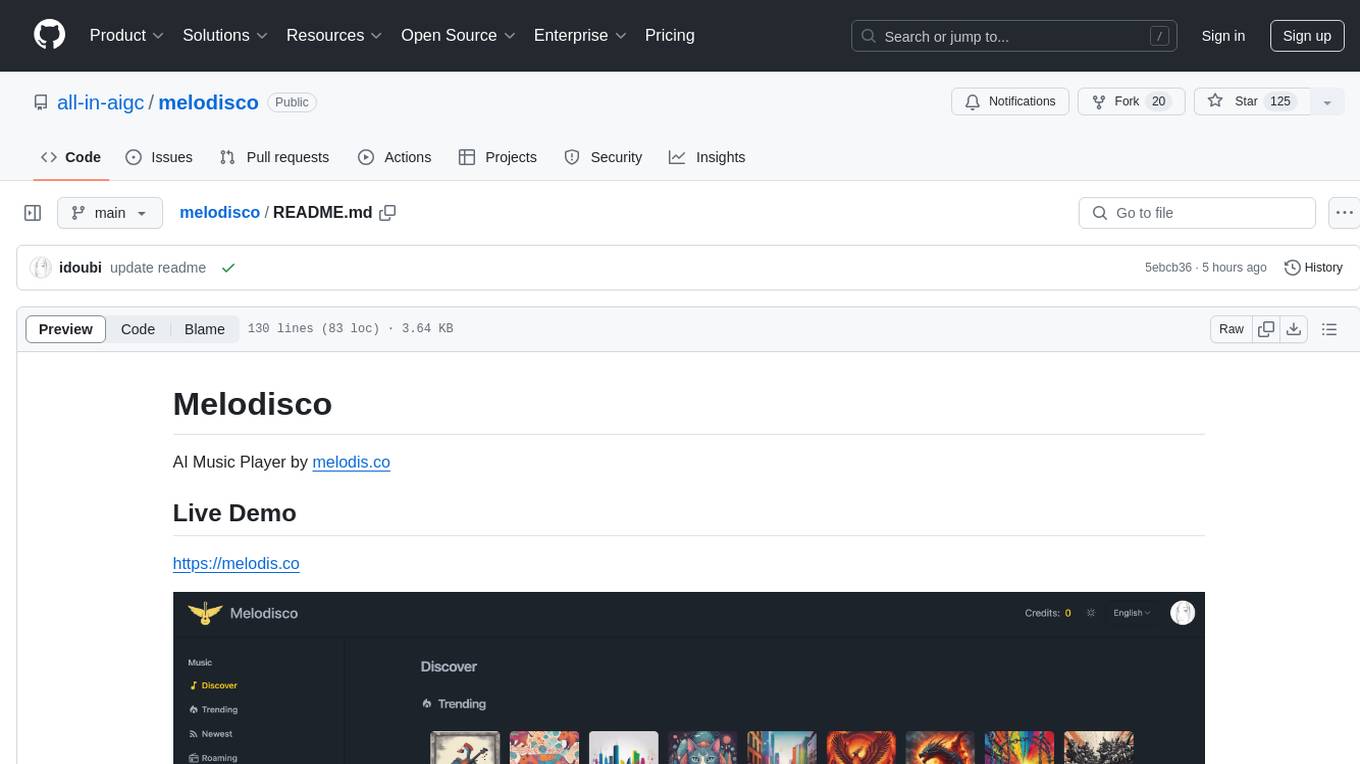

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI LLM service, combining GRPS and TensorRT-LLM. It supports functionalities like Chat, Ai-agent, and Multi-modal. The repository offers advantages over triton-trtllm, including a complete LLM service implemented in pure C++, integrated tokenizer supporting huggingface and sentencepiece, custom HTTP functionality for OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with tensorrt backend and opencv library for multi-modal LLM, and stable performance improvement compared to triton-trtllm.

discord-ai-bot

Discord AI Bot is a chatbot tool designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The bot allows users to set up and configure a Discord bot to communicate with the mentioned AI models. Users can follow step-by-step instructions to install Node.js, Ollama, and the required dependencies, create a Discord bot, and interact with the bot by mentioning it in messages. Additionally, the tool provides set-up instructions for Docker users to easily deploy the bot using Docker containers. Overall, Discord AI Bot simplifies the process of integrating AI chatbots into Discord servers for interactive communication.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.