git-mcp

None

Stars: 320

GitMCP is a free, open-source service that transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, allowing AI assistants to access project documentation effortlessly. It empowers AI with semantic search capabilities, requires zero setup, is completely free and private, and serves as a bridge between GitHub repositories and AI assistants.

README:

Features • Usage • How It Works • Examples • FAQ • Privacy • Contributing • License

GitMCP is a free, open-source service that seamlessly transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, enabling AI assistants to access and understand the project's documentation effortlessly.

- Empower AI with GitHub Project Access: Direct your AI assistant to GitMCP for instant access to any GitHub project's documentation, complete with semantic search capabilities to optimize token usage.

- Zero Setup Required: No configurations or modifications needed — GitMCP works out of the box.

- Completely Free and Private: GitMCP is free. We don't collect any personally identifiable information or queries. Plus, you can host it yourself!

To make your GitHub repository accessible to AI assistants via GitMCP, use the following URL formats:

- For GitHub repositories:

gitmcp.io/{owner}/{repo} - For GitHub Pages sites:

{owner}.gitmcp.io/{repo} - Dynamic endpoint:

gitmcp.io/docs

Congratulations! The chosen GitHub project is now fully accessible to your AI.

Replace {owner} with your GitHub username or organization name and {repo} with your repository name. Once configured, your AI assistant can access the project's documentation seamlessly.

The dynamic endpoint doesn't require a pre-defined repository. When used, your AI assistant can dynamically input any GitHub repository to enjoy GitMCP's features.

GitMCP serves as a bridge between your GitHub repository's documentation and AI assistants by implementing the Model Context Protocol (MCP). When an AI assistant requires information from your repository, it sends a request to GitMCP. GitMCP retrieves the relevant content and provides semantic search capabilities, ensuring efficient and accurate information delivery.

Here are some examples of how to use GitMCP with different repositories:

-

Example 1: For the repository

https://github.com/octocat/Hello-World, use:https://gitmcp.io/octocat/Hello-World -

Example 2: For the GitHub Pages site

langchain-ai.gitmcp.io/langgraph, use:https://langchain-ai.gitmcp.io/langgraph -

Example 3: Use the generic

gitmcp.com/docsendpoint for your AI to dynamically select a repository

These URLs enable AI assistants to access and interact with the project's documentation through GitMCP.

GitMCP provides a set of tools that can be used to access and interact with the project's documentation.

Fetches the documentation for the {owner}/{repo} GitHub repository (as extracted from the URL: gitmcp.io/{owner}/{repo} or {owner}.gitmcp.io/{repo}). Useful for general questions. Retrieves the llms.txt file and falls back to README.md or other pages if the former is unavailable.

It searches the repository's documentation by providing a query. This is useful for specific questions. It uses semantic search to find the most relevant documentation. This mitigates the cost of a large documentation set that cannot be provided as direct context to LLMs.

Note: In the case of a generic

gitmcp.com/docsusage, the tools are calledfetch_generic_documentationandsearch_generic_documentation, and receive additionalownerandrepoarguments.

The Model Context Protocol is a standard that allows AI assistants to request and receive additional context from external sources in a structured manner, enhancing their understanding and performance.

Yes, GitMCP is compatible with any AI assistant supporting the Model Context Protocol, including tools like Cursor, VSCode, Claude, etc.

Absolutely! GitMCP works with any public GitHub repository without requiring any modifications. It prioritizes the llms.txt file and falls back to README.md or other pages if the former is unavailable. Future updates aim to support additional documentation methods and even generate content dynamically.

No, GitMCP is a free service to the community with no associated costs.

GitMCP is deeply committed to its users' privacy. The service doesn't have access to or store any personally identifiable information as it doesn't require authentication. In addition, it doesn't store any queries sent by the agents. Moreover, as GitMCP is an open-source project, it can be deployed independently in your environment.

GitMCP only accesses content that is already publicly available and only when queried by a user. GitMCP does not automatically scrape repositories. Before accessing any GitHub Pages site, the code checks for robots.txt rules and follows the directives set by site owners, allowing them to opt out. Please note that GitMCP doesn't permanently store data regarding the GitHub projects or their content.

We welcome contributions! Please take a look at our contribution guidelines.

This project is licensed under the MIT License.

GitMCP is provided "as is" without warranty of any kind. While we strive to ensure the reliability and security of our service, we are not responsible for any damages or issues that may arise from its use. GitHub projects accessed through GitMCP are subject to their respective owners' terms and conditions. GitMCP is not affiliated with GitHub or any of the mentioned AI tools.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for git-mcp

Similar Open Source Tools

git-mcp

GitMCP is a free, open-source service that transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, allowing AI assistants to access project documentation effortlessly. It empowers AI with semantic search capabilities, requires zero setup, is completely free and private, and serves as a bridge between GitHub repositories and AI assistants.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

ollama-ai-provider

Vercel AI Provider for running Large Language Models locally using Ollama. This module is under development and may contain errors and frequent incompatible changes. It provides the capability of generating and streaming text and objects, with features like image input, object generation, tool usage simulation, tool streaming simulation, intercepting fetch requests, and provider management. The provider can be customized with optional settings like baseURL and headers.

devika

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software. Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance. Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

spec-kit

Spec Kit is a tool designed to enable organizations to focus on product scenarios rather than writing undifferentiated code through Spec-Driven Development. It flips the script on traditional software development by making specifications executable, directly generating working implementations. The tool provides a structured process emphasizing intent-driven development, rich specification creation, multi-step refinement, and heavy reliance on advanced AI model capabilities for specification interpretation. Spec Kit supports various development phases, including 0-to-1 Development, Creative Exploration, and Iterative Enhancement, and aims to achieve experimental goals related to technology independence, enterprise constraints, user-centric development, and creative & iterative processes. The tool requires Linux/macOS (or WSL2 on Windows), an AI coding agent (Claude Code, GitHub Copilot, Gemini CLI, or Cursor), uv for package management, Python 3.11+, and Git.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

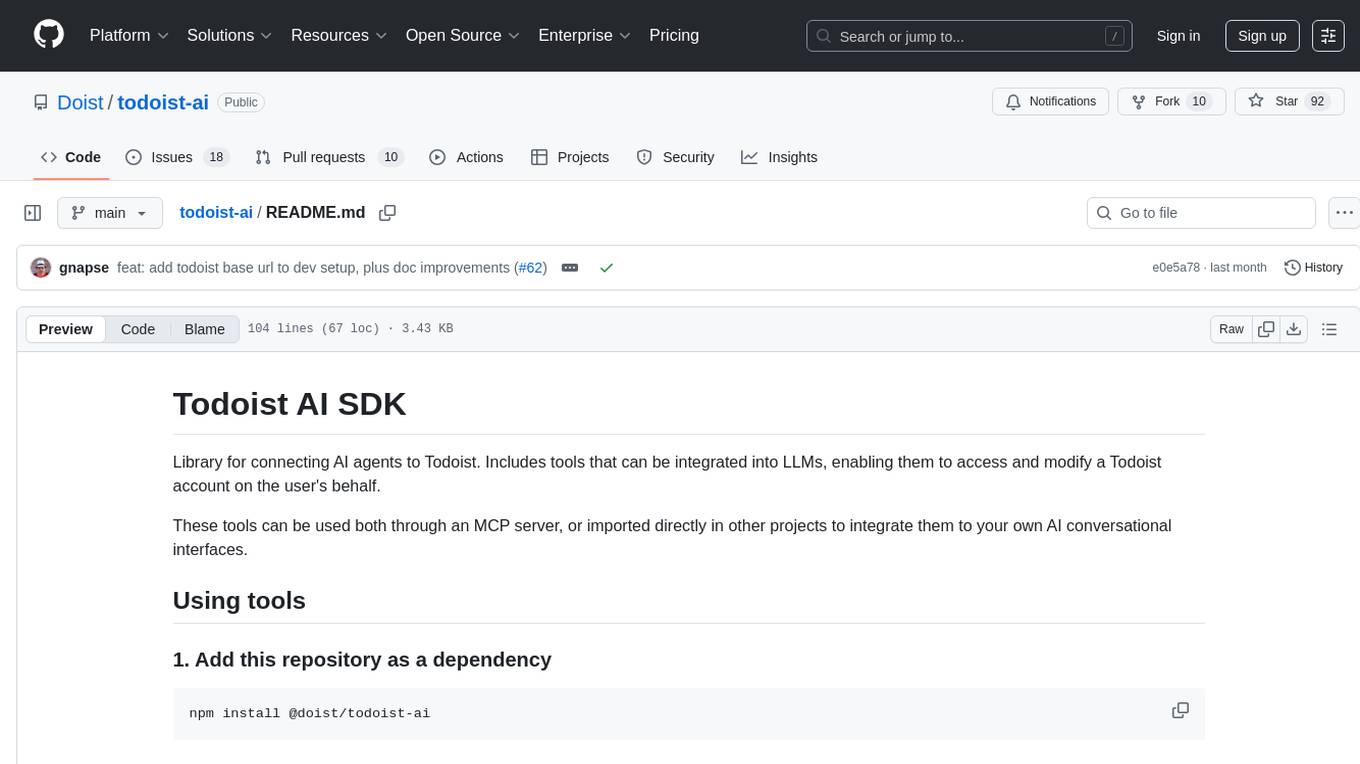

todoist-ai

Library for connecting AI agents to Todoist, enabling them to access and modify a Todoist account on the user's behalf. Tools can be used through an MCP server or integrated into other projects for AI conversational interfaces. Reusable tools allow for complete workflows, balancing flexibility and efficiency for LLMs. Early-stage project with more tools planned. Designed to provide a small set of tools for various AI interfaces.

OrionChat

Orion is a web-based chat interface that simplifies interactions with multiple AI model providers. It provides a unified platform for chatting and exploring various large language models (LLMs) such as Ollama, OpenAI (GPT model), Cohere (Command-r models), Google (Gemini models), Anthropic (Claude models), Groq Inc., Cerebras, and SambaNova. Users can easily navigate and assess different AI models through an intuitive, user-friendly interface. Orion offers features like browser-based access, code execution with Google Gemini, text-to-speech (TTS), speech-to-text (STT), seamless integration with multiple AI models, customizable system prompts, language translation tasks, document uploads for analysis, and more. API keys are stored locally, and requests are sent directly to official providers' APIs without external proxies.

dockershrink

Dockershrink is an AI-powered Commandline Tool designed to help reduce the size of Docker images. It combines traditional Rule-based analysis with Generative AI techniques to optimize Image configurations. The tool supports NodeJS applications and aims to save costs on storage, data transfer, and build times while increasing developer productivity. By automatically applying advanced optimization techniques, Dockershrink simplifies the process for engineers and organizations, resulting in significant savings and efficiency improvements.

raggenie

RAGGENIE is a low-code RAG builder tool designed to simplify the creation of conversational AI applications. It offers out-of-the-box plugins for connecting to various data sources and building conversational AI on top of them, including integration with pre-built agents for actions. The tool is open-source under the MIT license, with a current focus on making it easy to build RAG applications and future plans for maintenance, monitoring, and transitioning applications from pilots to production.

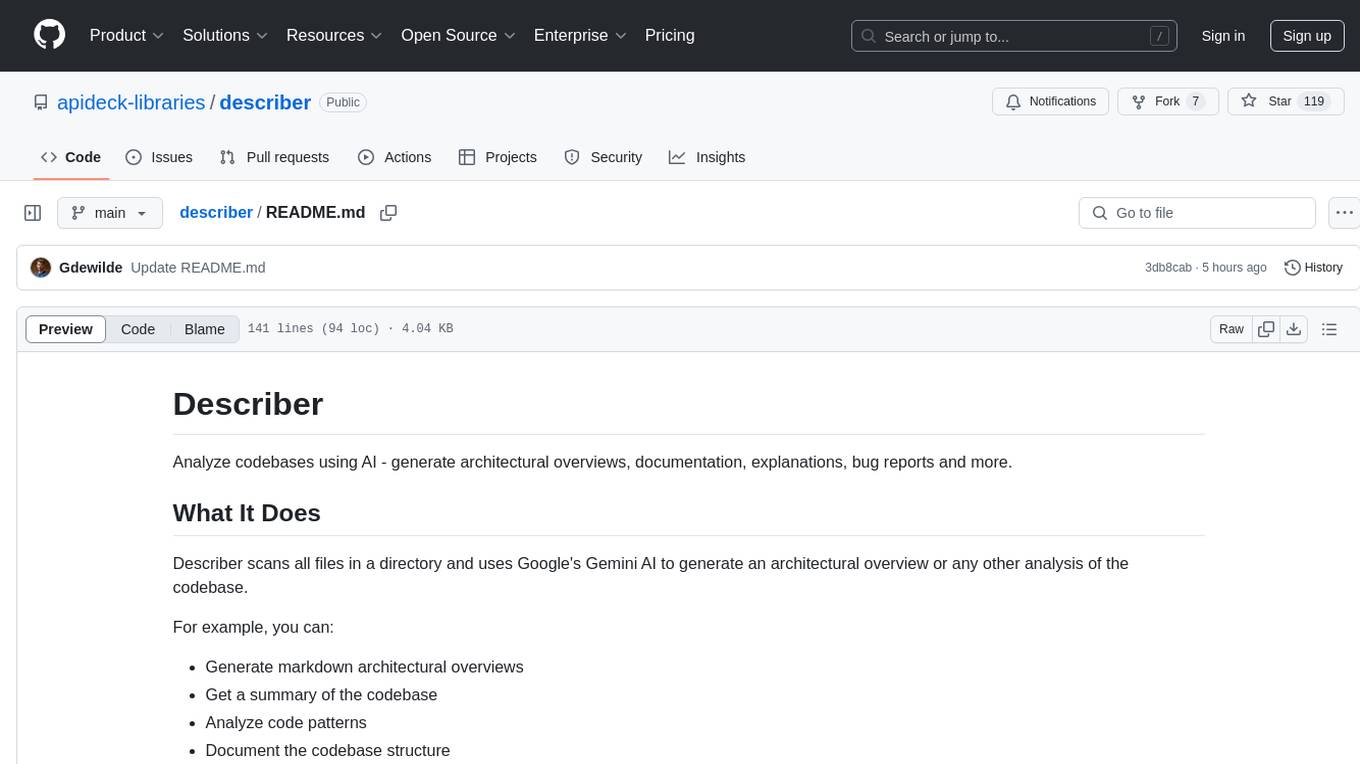

describer

Describer is a tool that analyzes codebases using AI to generate architectural overviews, documentation, explanations, bug reports, and more. It scans all files in a directory and uses Google's Gemini AI to provide insights such as markdown architectural overviews, codebase summaries, code pattern analysis, codebase structure documentation, bug identification, and test idea generation. The tool respects .gitignore rules by default but allows users to include/exclude specific files or patterns for analysis.

airbyte_serverless

AirbyteServerless is a lightweight tool designed to simplify the management of Airbyte connectors. It offers a serverless mode for running connectors, allowing users to easily move data from any source to their data warehouse. Unlike the full Airbyte-Open-Source-Platform, AirbyteServerless focuses solely on the Extract-Load process without a UI, database, or transform layer. It provides a CLI tool, 'abs', for managing connectors, creating connections, running jobs, selecting specific data streams, handling secrets securely, and scheduling remote runs. The tool is scalable, allowing independent deployment of multiple connectors. It aims to streamline the connector management process and provide a more agile alternative to the comprehensive Airbyte platform.

For similar tasks

Fay

Fay is an open-source digital human framework that offers different versions for various purposes. The '带货完整版' is suitable for online and offline salespersons. The '助理完整版' serves as a human-machine interactive digital assistant that can also control devices upon command. The 'agent版' is designed to be an autonomous agent capable of making decisions and contacting its owner. The framework provides updates and improvements across its different versions, including features like emotion analysis integration, model optimizations, and compatibility enhancements. Users can access detailed documentation for each version through the provided links.

hume-python-sdk

The Hume AI Python SDK allows users to integrate Hume APIs directly into their Python applications. Users can access complete documentation, quickstart guides, and example notebooks to get started. The SDK is designed to provide support for Hume's expressive communication platform built on scientific research. Users are encouraged to create an account at beta.hume.ai and stay updated on changes through Discord. The SDK may undergo breaking changes to improve tooling and ensure reliable releases in the future.

deid-examples

This repository contains examples demonstrating how to use the Private AI REST API for identifying and replacing Personally Identifiable Information (PII) in text. The API supports over 50 entity types, such as Credit Card information and Social Security numbers, across 50 languages. Users can access documentation and the API reference on Private AI's website. The examples include common API call scenarios and use cases in both Python and JavaScript, with additional content related to PrivateGPT for secure work with Language Models (LLMs).

web-ui

WebUI is a user-friendly tool built on Gradio that enhances website accessibility for AI agents. It supports various Large Language Models (LLMs) and allows custom browser integration for seamless interaction. The tool eliminates the need for re-login and authentication challenges, offering high-definition screen recording capabilities.

git-mcp

GitMCP is a free, open-source service that transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, allowing AI assistants to access project documentation effortlessly. It empowers AI with semantic search capabilities, requires zero setup, is completely free and private, and serves as a bridge between GitHub repositories and AI assistants.

tidb.ai

TiDB.AI is a conversational search RAG (Retrieval-Augmented Generation) app based on TiDB Serverless Vector Storage. It provides an out-of-the-box and embeddable QA robot experience based on knowledge from official and documentation sites. The platform features a Perplexity-style Conversational Search page with an advanced built-in website crawler for comprehensive coverage. Users can integrate an embeddable JavaScript snippet into their website for instant responses to product-related queries. The tech stack includes Next.js, TypeScript, Tailwind CSS, shadcn/ui for design, TiDB for database storage, Kysely for SQL query building, NextAuth.js for authentication, Vercel for deployments, and LlamaIndex for the RAG framework. TiDB.AI is open-source under the Apache License, Version 2.0.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.