odoo-expert

RAG-powered documentation assistant that converts, processes, and provides semantic search capabilities for Odoo's technical documentation. Supports multiple Odoo versions with an interactive chat interface powered by LLMs.

Stars: 56

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

README:

RAG-Powered Odoo Documentation Assistant

Intro, Updates & Demo Video: https://fanyangmeng.blog/introducing-odoo-expert/

Browser extension now available for Chrome and Edge!

Check it out: https://microsoftedge.microsoft.com/addons/detail/odoo-expert/mnmapgdlgncmdiofbdacjilfcafgapci

⚠️ PLEASE NOTE: This project is not yet sponsored or endorsed by Odoo S.A. or Odoo Inc. I am developing it on my own as a personal project, with the intention of helping the Odoo community.

A comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. This tool supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings.

The project was conceived with the vision of enhancing the Odoo documentation experience. The goal was to create a system similar to Perplexity or Google, where users could receive AI-powered answers directly within the documentation website, complete with proper source links. This eliminates the need for users to manually navigate through complex documentation structures.

graph TD
    A[Odoo Documentation] -->|pull_rawdata.sh| B[Raw Data]
    B -->|process-raw| C[Markdown Files]
    C -->|process-docs| D[(Database with Embeddings)]
    D -->|serve --mode ui| E[Streamlit UI]
    D -->|serve --mode api| F[REST API]
    subgraph "Data Processing Pipeline"
        B
        C
        D
    end
    subgraph "Interface Layer"
        E
        F
    end
    style A fill:#f9f,stroke:#333,stroke-width:2px
    style D fill:#bbf,stroke:#333,stroke-width:2px
    style E fill:#bfb,stroke:#333,stroke-width:2px
    style F fill:#bfb,stroke:#333,stroke-width:2px

The system operates through a pipeline of data processing and serving steps:

- Documentation Pulling: Fetches raw documentation from Odoo's repositories

- Format Conversion: Converts RST files to Markdown for better AI processing

- Embedding Generation: Processes Markdown files and stores them with embeddings

- Interface Layer: Provides both UI and API access to the processed knowledge base

Features:

- Documentation Processing: Automated conversion of RST to Markdown with smart preprocessing

- Semantic Search: Real-time semantic search across documentation versions

- AI-Powered Chat: Context-aware responses with source citations

- Multi-Version Support: Comprehensive support for Odoo versions 16.0, 17.0, and 18.0

- Always Updated: Efficiently detects and processes documentation updates

- Web UI: Streamlit-based interface for interactive querying

- REST API: Authenticated endpoints for programmatic access

- CLI: Command-line interface for document processing and chat
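To illustrate what semantic search over embeddings involves, here is a minimal sketch that ranks documents by cosine similarity to a query vector. The three-dimensional vectors, the `DOCS` table, and the `rank_by_similarity` helper are made up for illustration. In the project the embeddings come from OpenAI and are stored in PostgreSQL with pgvector, and this sketch does not claim to mirror its actual code.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, docs, top_k=2):
    """Return the top_k (title, score) pairs, most similar first."""
    scored = [(title, cosine_similarity(query_vec, vec)) for title, vec in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy "embeddings"; real ones have hundreds or thousands of dimensions.
DOCS = {
    "Install Odoo": [0.9, 0.1, 0.0],
    "Configure accounting": [0.1, 0.9, 0.1],
    "Upgrade a database": [0.6, 0.2, 0.4],
}

if __name__ == "__main__":
    for title, score in rank_by_similarity([1.0, 0.0, 0.0], DOCS):
        print(f"{score:.3f}  {title}")
```

A vector database performs the same kind of ranking at scale, so the application only has to embed the query and ask for the nearest stored chunks.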

Prerequisites:

- Docker and Docker Compose

- PostgreSQL with pgvector extension

- OpenAI API access

- Git

For a source install, you also need the following dependencies:

- Python 3.10+

- Pandoc

- PostgreSQL with pgvector extension

The instructions below assume the table name is odoo_docs. If you use a different table name, update it in the SQL commands accordingly.

Docker Compose install:

- Download the docker-compose.yml file to your local machine.

- Set up environment variables in the .env file by using the .env.example file as a template.

OPENAI_API_KEY=your_openai_api_key
OPENAI_API_BASE=https://api.openai.com/v1
POSTGRES_USER=odoo_expert
POSTGRES_PASSWORD=your_secure_password
POSTGRES_DB=odoo_expert_db
POSTGRES_HOST=db
POSTGRES_PORT=5432
LLM_MODEL=gpt-4o
BEARER_TOKEN=comma_separated_bearer_tokens
CORS_ORIGINS=http://localhost:3000,http://localhost:8501,https://www.odoo.com
ODOO_VERSIONS=16.0,17.0,18.0
SYSTEM_PROMPT=same as .env.example
# Data Directories
RAW_DATA_DIR=raw_data
MARKDOWN_DATA_DIR=markdown

- Run the following command:

docker-compose up -d

- Pull the raw data and write to your PostgreSQL's table:

# Pull documentation (uses ODOO_VERSIONS from .env)
docker compose run --rm odoo-expert ./pull_rawdata.sh
# Convert RST to Markdown
docker compose run --rm odoo-expert python main.py process-raw
# Process documents
docker compose run --rm odoo-expert python main.py process-docs

- Access the UI at port 8501 and the API at port 8000

- Docker Compose automatically pulls the latest changes and updates the system once a day. You can also update manually by running the following command:

docker compose run --rm odoo-expert python main.py check-updates
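Several of the .env settings (ODOO_VERSIONS, BEARER_TOKEN, CORS_ORIGINS) are comma-separated lists. The sketch below shows one way such values could be read in Python. The `split_csv` and `load_settings` helpers and the returned dictionary keys are hypothetical names chosen for this example, not the project's actual configuration code.

```python
import os


def split_csv(value):
    """Split a comma-separated setting into a list of trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def load_settings(env=None):
    """Read a few settings from a mapping (defaults to the process environment)."""
    env = os.environ if env is None else env
    return {
        "odoo_versions": split_csv(env.get("ODOO_VERSIONS", "")),
        "bearer_tokens": split_csv(env.get("BEARER_TOKEN", "")),
        "cors_origins": split_csv(env.get("CORS_ORIGINS", "")),
        # Assumed default of 5432, matching the template values shown in this README.
        "postgres_port": int(env.get("POSTGRES_PORT", "5432")),
    }


if __name__ == "__main__":
    sample = {
        "ODOO_VERSIONS": "16.0,17.0,18.0",
        "BEARER_TOKEN": "token-a, token-b",
        "CORS_ORIGINS": "http://localhost:3000,http://localhost:8501",
    }
    print(load_settings(sample))
```

Passing a plain dictionary instead of reading `os.environ` directly keeps the parsing easy to try out without touching a real .env file.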

Source install:

- Install PostgreSQL and pgvector:

# For Debian/Ubuntu
sudo apt-get install postgresql postgresql-contrib
# Install pgvector extension
git clone https://github.com/pgvector/pgvector.git
cd pgvector
make
make install

- Create database and enable extension:

CREATE DATABASE odoo_expert;
\c odoo_expert
CREATE EXTENSION vector;

- Set up the database schema by running the SQL commands in src/sqls/init.sql.

- Create a .env file from the template and configure your environment variables:

cp .env.example .env
# Edit .env with your settings including ODOO_VERSIONS and SYSTEM_PROMPT

- Pull Odoo documentation:

chmod +x pull_rawdata.sh
./pull_rawdata.sh # Will use ODOO_VERSIONS from .env

- Convert RST to Markdown:

python main.py process-raw

- Process and embed documents:

python main.py process-docs

- Launch the chat interface:

python main.py serve --mode ui

- Launch the API:

python main.py serve --mode api

- Access the UI at port 8501 and the API at port 8000

- To sync with the latest changes in the Odoo documentation, run:

python main.py check-updates
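The three data-processing commands from the source install (pull, convert, embed) have to run in order, so they can be chained in a small script. This is only a convenience sketch: the `PIPELINE` list and `run_pipeline` function are hypothetical, and the script assumes it is started from the project root with the .env file already configured.

```python
import subprocess

# The data-processing commands from the steps above, in the order they must run.
PIPELINE = [
    ["./pull_rawdata.sh"],                  # fetch raw RST documentation
    ["python", "main.py", "process-raw"],   # convert RST to Markdown
    ["python", "main.py", "process-docs"],  # embed and store the documents
]


def run_pipeline(steps=PIPELINE, dry_run=False):
    """Run each step in order, stopping at the first failure.

    With dry_run=True the commands are only collected, not executed.
    Returns the list of command lines that were (or would be) run.
    """
    executed = []
    for cmd in steps:
        executed.append(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a step exits non-zero.
            subprocess.run(cmd, check=True)
    return executed


if __name__ == "__main__":
    for line in run_pipeline(dry_run=True):
        print(line)
```

Stopping at the first failing step avoids embedding stale or partially converted files.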

The project provides a REST API for programmatic access to the documentation assistant.

All API endpoints require Bearer token authentication. Add your API token in the Authorization header:

Authorization: Bearer your-api-token

POST /api/chat

Query the documentation and get AI-powered responses.

Request body:

{
  "query": "string", // The question about Odoo
  "version": integer, // Odoo version (160, 170, or 180)
  "conversation_history": [ // Optional
    {
      "user": "string",
      "assistant": "string"
    }
  ]
}

Response:

{
  "answer": "string", // AI-generated response
  "sources": [ // Reference documents used
    {
      "url": "string",
      "title": "string"
    }
  ]
}

Example:

curl -X POST "http://localhost:8000/api/chat" \
  -H "Authorization: Bearer your-api-token" \
  -H "Content-Type: application/json" \
  -d '{
    "query": "How do I install Odoo?",
    "version": 180,
    "conversation_history": []
  }'

POST /api/stream

Query the documentation and get AI-powered responses in streaming format.

Request body:

{
  "query": "string", // The question about Odoo
  "version": integer, // Odoo version (160, 170, or 180)
  "conversation_history": [ // Optional
    {
      "user": "string",
      "assistant": "string"
    }
  ]
}

Response: Stream of text chunks (text/event-stream content type)
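The request format described for /api/chat and /api/stream can be assembled in Python along these lines, using only the standard library. The helper names (`version_to_int`, `build_request`, `ask`, `stream`) are hypothetical, the base URL assumes a local API server on port 8000, and the conversion from "18.0" to 180 is inferred from the version values listed in the request body rather than from the project's code.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # assumption: API server running locally


def version_to_int(version):
    """Map a version string such as "18.0" to the integer form (180)."""
    major, minor = version.split(".")
    return int(major) * 10 + int(minor)


def build_request(endpoint, query, version, token, history=None):
    """Build an authenticated POST request without sending it."""
    payload = {
        "query": query,
        "version": version_to_int(version),
        "conversation_history": history or [],
    }
    return urllib.request.Request(
        f"{BASE_URL}{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(query, version, token):
    """Call /api/chat and return the decoded JSON (answer and sources)."""
    req = build_request("/api/chat", query, version, token)
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def stream(query, version, token):
    """Call /api/stream and yield the response line by line as text."""
    req = build_request("/api/stream", query, version, token)
    with urllib.request.urlopen(req) as resp:
        for raw_line in resp:
            yield raw_line.decode("utf-8")


if __name__ == "__main__":
    # Requires a running API server and a valid token.
    reply = ask("How do I install Odoo?", "18.0", "your-api-token")
    print(reply["answer"])
```

Building the request separately from sending it makes the payload and headers easy to inspect before any network call is made.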

Example:

curl -X POST "http://localhost:8000/api/stream" \
  -H "Authorization: Bearer your-api-token" \
  -H "Content-Type: application/json" \
  -d '{
    "query": "How do I install Odoo?",
    "version": 180,
    "conversation_history": []
  }'

The project includes a browser extension that enhances the Odoo documentation search experience with AI-powered responses. To set up the extension:

- Open Chrome/Edge and navigate to the extensions page:

  - Chrome: chrome://extensions/

  - Edge: edge://extensions/

- Enable "Developer mode" in the top right corner

- Click "Load unpacked" and select the browser-ext folder from this project

- The Odoo Expert extension icon should appear in your browser toolbar

- Make sure your local API server is running (port 8000)

The extension will now enhance the search experience on Odoo documentation pages by providing AI-powered responses alongside the traditional search results.

Please see GitHub Issues for the future roadmap.

If you encounter any issues or have questions, please:

- Check the known issues

- Create a new issue in the GitHub repository

- Provide detailed information about your environment and the problem

⚠️ Please do not email me directly for support; I will not respond. Let's keep the discussion in GitHub issues for clarity and transparency.

Contributions are welcome! Please feel free to submit a Pull Request.

Thanks to the following contributors for their help during the development of this project:

- Viet Din (Desdaemon): For important suggestions on how to improve performance.

This project is licensed under Apache License 2.0: No warranty is provided. You can use this project for any purpose, but you must include the original copyright and license.

An additional CC-BY-SA 4.0 license applies, to align with the original Odoo/Documentation license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for odoo-expert

Similar Open Source Tools

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

supabase-mcp

Supabase MCP Server standardizes how Large Language Models (LLMs) interact with Supabase, enabling AI assistants to manage tables, fetch config, and query data. It provides tools for project management, database operations, project configuration, branching (experimental), and development tools. The server is pre-1.0, so expect some breaking changes between versions.

mcp-server

The UI5 Model Context Protocol server offers tools to improve the developer experience when working with agentic AI tools. It helps with creating new UI5 projects, detecting and fixing UI5-specific errors, and providing additional UI5-specific information for agentic AI tools. The server supports various tools such as scaffolding new UI5 applications, fetching UI5 API documentation, providing UI5 development best practices, extracting metadata and configuration from UI5 projects, retrieving version information for the UI5 framework, analyzing and reporting issues in UI5 code, offering guidelines for converting UI5 applications to TypeScript, providing UI Integration Cards development best practices, scaffolding new UI Integration Cards, and validating the manifest against the UI5 Manifest schema. The server requires Node.js and npm versions specified, along with an MCP client like VS Code or Cline. Configuration options are available for customizing the server's behavior, and specific setup instructions are provided for MCP clients like VS Code and Cline.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

LLamaWorker

LLamaWorker is a HTTP API server developed to provide an OpenAI-compatible API for integrating Large Language Models (LLM) into applications. It supports multi-model configuration, streaming responses, text embedding, chat templates, automatic model release, function calls, API key authentication, and test UI. Users can switch models, complete chats and prompts, manage chat history, and generate tokens through the test UI. Additionally, LLamaWorker offers a Vulkan compiled version for download and provides function call templates for testing. The tool supports various backends and provides API endpoints for chat completion, prompt completion, embeddings, model information, model configuration, and model switching. A Gradio UI demo is also available for testing.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

chat-mcp

A Cross-Platform Interface for Large Language Models (LLMs) utilizing the Model Context Protocol (MCP) to connect and interact with various LLMs. The desktop app, built on Electron, ensures compatibility across Linux, macOS, and Windows. It simplifies understanding MCP principles, facilitates testing of multiple servers and LLMs, and supports dynamic LLM configuration and multi-client management. The UI can be extracted for web use, ensuring consistency across web and desktop versions.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

mcp-server-qdrant

The mcp-server-qdrant repository is an official Model Context Protocol (MCP) server designed for keeping and retrieving memories in the Qdrant vector search engine. It acts as a semantic memory layer on top of the Qdrant database. The server provides tools like 'qdrant-store' for storing information in the database and 'qdrant-find' for retrieving relevant information. Configuration is done using environment variables, and the server supports different transport protocols. It can be installed using 'uvx' or Docker, and can also be installed via Smithery for Claude Desktop. The server can be used with Cursor/Windsurf as a code search tool by customizing tool descriptions. It can store code snippets and help developers find specific implementations or usage patterns. The repository is licensed under the Apache License 2.0.

langchain-extract

LangChain Extract is a simple web server that allows you to extract information from text and files using LLMs. It is built using FastAPI, LangChain, and Postgresql. The backend closely follows the extraction use-case documentation and provides a reference implementation of an app that helps to do extraction over data using LLMs. This repository is meant to be a starting point for building your own extraction application which may have slightly different requirements or use cases.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

promptwright

Promptwright is a Python library designed for generating large synthetic datasets using a local LLM and various LLM service providers. It offers flexible interfaces for generating prompt-led synthetic datasets. The library supports multiple providers, configurable instructions and prompts, YAML configuration for tasks, command line interface for running tasks, push to Hugging Face Hub for dataset upload, and system message control. Users can define generation tasks using YAML configuration or Python code. Promptwright integrates with LiteLLM to interface with LLM providers and supports automatic dataset upload to Hugging Face Hub.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

For similar tasks

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

langchain-rust

LangChain Rust is a library for building applications with Large Language Models (LLMs) through composability. It provides a set of tools and components that can be used to create conversational agents, document loaders, and other applications that leverage LLMs. LangChain Rust supports a variety of LLMs, including OpenAI, Azure OpenAI, Ollama, and Anthropic Claude. It also supports a variety of embeddings, vector stores, and document loaders. LangChain Rust is designed to be easy to use and extensible, making it a great choice for developers who want to build applications with LLMs.

dolma

Dolma is a dataset and toolkit for curating large datasets for (pre)-training ML models. The dataset consists of 3 trillion tokens from a diverse mix of web content, academic publications, code, books, and encyclopedic materials. The toolkit provides high-performance, portable, and extensible tools for processing, tagging, and deduplicating documents. Key features of the toolkit include built-in taggers, fast deduplication, and cloud support.

sparrow

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It seamlessly handles forms, invoices, receipts, and other unstructured data sources. Sparrow stands out with its modular architecture, offering independent services and pipelines all optimized for robust performance. One of the critical functionalities of Sparrow - pluggable architecture. You can easily integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. With Sparrow solution you get API, which helps to process and transform your data into structured output, ready to be integrated with custom workflows. Sparrow Agents - with Sparrow you can build independent LLM agents, and use API to invoke them from your system. **List of available agents:** * **llamaindex** - RAG pipeline with LlamaIndex for PDF processing * **vllamaindex** - RAG pipeline with LLamaIndex multimodal for image processing * **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing * **haystack** - RAG pipeline with Haystack for PDF processing * **fcall** - Function call pipeline * **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing * **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing * **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

Open-DocLLM

Open-DocLLM is an open-source project that addresses data extraction and processing challenges using OCR and LLM technologies. It consists of two main layers: OCR for reading document content and LLM for extracting specific content in a structured manner. The project offers a larger context window size compared to JP Morgan's DocLLM and integrates tools like Tesseract OCR and Mistral for efficient data analysis. Users can run the models on-premises using LLM studio or Ollama, and the project includes a FastAPI app for testing purposes.

aws-genai-llm-chatbot

This repository provides code to deploy a chatbot powered by Multi-Model and Multi-RAG using AWS CDK on AWS. Users can experiment with various Large Language Models and Multimodal Language Models from different providers. The solution supports Amazon Bedrock, Amazon SageMaker self-hosted models, and third-party providers via API. It also offers additional resources like AWS Generative AI CDK Constructs and Project Lakechain for building generative AI solutions and document processing. The roadmap and authors are listed, along with contributors. The library is licensed under the MIT-0 License with information on changelog, code of conduct, and contributing guidelines. A legal disclaimer advises users to conduct their own assessment before using the content for production purposes.

ExtractThinker

ExtractThinker is a library designed for extracting data from files and documents using Language Model Models (LLMs). It offers ORM-style interaction between files and LLMs, supporting multiple document loaders such as Tesseract OCR, Azure Form Recognizer, AWS TextExtract, and Google Document AI. Users can customize extraction using contract definitions, process documents asynchronously, handle various document formats efficiently, and split and process documents. The project is inspired by the LangChain ecosystem and focuses on Intelligent Document Processing (IDP) using LLMs to achieve high accuracy in document extraction tasks.

For similar jobs

assistant-ui

assistant-ui is a set of React components for AI chat. It provides a collection of components that can be easily integrated into projects to create AI chat interfaces for Discord, websites, and demos. The components are designed to streamline the process of setting up AI chat functionality in React applications, making it easier for developers to incorporate AI chat features into their projects.

Simulator-Controller

Simulator Controller is a modular administration and controller application for Sim Racing, featuring a comprehensive plugin automation framework for external controller hardware. It includes voice chat capable Assistants like Virtual Race Engineer, Race Strategist, Race Spotter, and Driving Coach. The tool offers features for setup, strategy development, monitoring races, and more. Developed in AutoHotkey, it supports various simulation games and integrates with third-party applications for enhanced functionality.

RirikoBot

RirikoBot is a powerful AI-powered Discord bot with features like Twitch Live Notifier, Giveaways, OpenAI, Stable Diffusion, Moderations, Anime / Manga Finder, and more. It is based on Discord.js v14 and can be hosted on a PC or a Server. Users can interact with the bot through various commands to access different functionalities.

douyin-chatgpt-bot

Douyin ChatGPT Bot is an AI-driven system for automatic replies on Douyin, including comment and private message replies. It offers features such as comment filtering, customizable robot responses, and automated account management. The system aims to enhance user engagement and brand image on the Douyin platform, providing a seamless experience for managing interactions with followers and potential customers.

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

typedai

TypedAI is a TypeScript-first AI platform designed for developers to create and run autonomous AI agents, LLM based workflows, and chatbots. It offers advanced autonomous agents, software developer agents, pull request code review agent, AI chat interface, Slack chatbot, and supports various LLM services. The platform features configurable Human-in-the-loop settings, functional callable tools/integrations, CLI and Web UI interface, and can be run locally or deployed on the cloud with multi-user/SSO support. It leverages the Python AI ecosystem through executing Python scripts/packages and provides flexible run/deploy options like single user mode, Firestore & Cloud Run deployment, and multi-user SSO enterprise deployment. TypedAI also includes UI examples, code examples, and automated LLM function schemas for seamless development and execution of AI workflows.

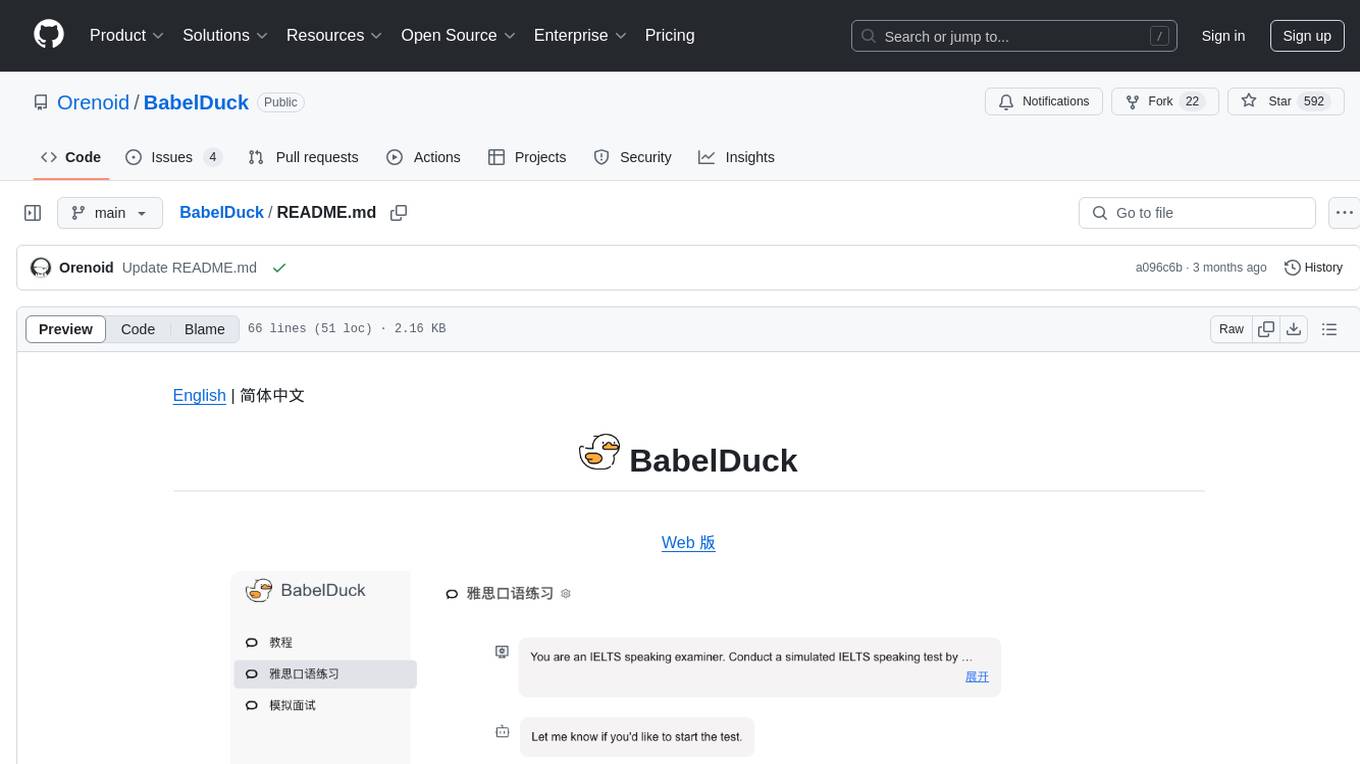

BabelDuck

BabelDuck is a highly customizable AI oral conversation practice application for language learners at all levels, with a focus on being more beginner-friendly. It aims to minimize the threshold and mental burden of oral expression practice. The tool supports various AI conversation features such as managing multiple dialogues, customizing system prompts, and providing suggestions for grammar, translation, or expression refinement without interrupting the current conversation. Users can seek further discussion through sub-dialogues when in doubt about AI suggestions, seamlessly returning to the original conversation afterward. BabelDuck also offers voice input and output, integrates browser-built text-to-speech, and Azure TTS, and supports different dialogue preferences, data stored locally for user privacy, multilingual interface, and built-in tutorials.