single-file-agents

What if we could pack single-purpose, powerful AI agents into a single Python file?

Stars: 202

Single File Agents (SFA) is a collection of powerful single-file agents built on top of uv, a modern Python package installer and resolver. These agents aim to perform specific tasks efficiently, demonstrating precise prompt engineering and GenAI patterns. The repository contains agents built across major GenAI providers like Gemini, OpenAI, and Anthropic. Each agent is self-contained, minimal, and built on modern Python for fast and reliable dependency management. Users can run these scripts from their server or directly from a gist. The agents are patternful, emphasizing the importance of setting up effective prompts, tools, and processes for reusability.

README:

Premise #1: What if we could pack single-purpose, powerful AI agents into a single Python file?

Premise #2: What's the best structural pattern for building agents that can improve in capability as compute and intelligence increase?

A collection of powerful single-file agents built on top of uv - the modern Python package installer and resolver.

These agents aim to do one thing and one thing only. They demonstrate precise prompt engineering and GenAI patterns for practical tasks, many of which I share on the IndyDevDan YouTube channel. Watch us walk through the Single File Agent in this video.

You can also check out this video where we use Devin, Cursor, Aider, and PAIC-Patterns to build three new agents with powerful spec (plan) prompts.

This repo contains a few agents built across the big 3 GenAI providers (Gemini, OpenAI, Anthropic).

Export your API keys:

export GEMINI_API_KEY='your-api-key-here'

export OPENAI_API_KEY='your-api-key-here'

export ANTHROPIC_API_KEY='your-api-key-here'

export FIRECRAWL_API_KEY='your-api-key-here' # Get your API key from https://www.firecrawl.dev/

JQ Agent:

uv run sfa_jq_gemini_v1.py --exe "Filter scores above 80 from data/analytics.json and save to high_scores.json"

DuckDB Agent (OpenAI):

# Tip tier

uv run sfa_duckdb_openai_v2.py -d ./data/analytics.db -p "Show me all users with score above 80"

DuckDB Agent (Anthropic):

# Tip tier

uv run sfa_duckdb_anthropic_v2.py -d ./data/analytics.db -p "Show me all users with score above 80"

DuckDB Agent (Gemini):

# Buggy but usually works

uv run sfa_duckdb_gemini_v2.py -d ./data/analytics.db -p "Show me all users with score above 80"

SQLite Agent (OpenAI):

uv run sfa_sqlite_openai_v2.py -d ./data/analytics.sqlite -p "Show me all users with score above 80"

Meta Prompt Generator:

uv run sfa_meta_prompt_openai_v1.py \
    --purpose "generate mermaid diagrams" \
    --instructions "generate a mermaid valid chart, use diagram type specified or default flow, use examples to understand the structure of the output" \
    --sections "user-prompt" \
    --variables "user-prompt"

(sfa_bash_editor_agent_anthropic_v2.py)

An AI-powered assistant that can both edit files and execute bash commands using Claude's tool use capabilities.
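As a rough illustration of what "tool use" means here, the sketch below maps tool names to plain Python functions and dispatches a model's tool call to the matching one. The tool names (`view_file`, `create_file`, `replace_text`, `run_bash`) and the `{"name": ..., "input": ...}` call shape are assumptions made for this example, not the agent's actual tool schema.

```python
import subprocess
from pathlib import Path


def view_file(path: str, max_lines: int = 10) -> str:
    """Return the first `max_lines` lines of a file."""
    lines = Path(path).read_text().splitlines()
    return "\n".join(lines[:max_lines])


def create_file(path: str, content: str) -> str:
    """Create (or overwrite) a file with the given content."""
    Path(path).write_text(content)
    return f"created {path}"


def replace_text(path: str, old: str, new: str) -> str:
    """Replace the first occurrence of `old` with `new` in a file."""
    text = Path(path).read_text()
    if old not in text:
        return f"error: {old!r} not found in {path}"
    Path(path).write_text(text.replace(old, new, 1))
    return f"updated {path}"


def run_bash(command: str) -> str:
    """Run a shell command and return its combined stdout and stderr."""
    done = subprocess.run(command, shell=True, capture_output=True, text=True)
    return done.stdout + done.stderr


# Hypothetical tool registry: tool name -> Python handler.
TOOLS = {
    "view_file": view_file,
    "create_file": create_file,
    "replace_text": replace_text,
    "run_bash": run_bash,
}


def dispatch(tool_call: dict) -> str:
    """Route one tool call (assumed shape: {"name": ..., "input": {...}})."""
    handler = TOOLS.get(tool_call["name"])
    if handler is None:
        return f"error: unknown tool {tool_call['name']}"
    return handler(**tool_call["input"])
```

Returning error strings instead of raising lets the model see what went wrong and try again on its next turn, which is a common choice for tool handlers.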

Example usage:

# View a file

uv run sfa_bash_editor_agent_anthropic_v2.py --prompt "Show me the first 10 lines of README.md"

# Create a new file

uv run sfa_bash_editor_agent_anthropic_v2.py --prompt "Create a new file called hello.txt with 'Hello World!' in it"

# Replace text in a file

uv run sfa_bash_editor_agent_anthropic_v2.py --prompt "Create a new file called hello.txt with 'Hello World!' in it. Then update hello.txt to say 'Hello AI Coding World'"

# Execute a bash command

uv run sfa_bash_editor_agent_anthropic_v2.py --prompt "List all Python files in the current directory sorted by size"

(sfa_polars_csv_agent_openai_v2.py)

An AI-powered assistant that generates and executes Polars data transformations for CSV files using OpenAI's function calling capabilities.

Example usage:

# Run Polars CSV agent with default compute loops (10)

uv run sfa_polars_csv_agent_openai_v2.py -i "data/analytics.csv" -p "What is the average age of the users?"

# Run with custom compute loops

uv run sfa_polars_csv_agent_openai_v2.py -i "data/analytics.csv" -p "What is the average age of the users?" -c 5

(sfa_scrapper_agent_openai_v2.py)

An AI-powered web scraping and content filtering assistant that uses OpenAI's function calling capabilities and the Firecrawl API for efficient web scraping.

Example usage:

# Basic scraping with markdown list output

uv run sfa_scrapper_agent_openai_v2.py -u "https://example.com" -p "Scrap and format each sentence as a separate line in a markdown list" -o "example.md"

# Advanced scraping with specific content extraction

uv run sfa_scrapper_agent_openai_v2.py \
    --url https://agenticengineer.com/principled-ai-coding \
    --prompt "What are the names and descriptions of each lesson?" \
    --output-file-path paic-lessons.md \
    -c 10

- Self-contained: Each agent is a single file with embedded dependencies

- Minimal, Precise Agents: Carefully crafted prompts for small agents that can do one thing really well

- Modern Python: Built on uv for fast, reliable dependency management

- Run From The Cloud: With uv, you can run these scripts from your server or right from a gist (see my gists commands)

- Patternful: Building effective agents is about setting up the right prompts, tools, and process for your use case. Once you set up a great pattern, you can reuse it over and over. That's part of the magic of these SFAs.
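The "embedded dependencies" mentioned above rely on inline script metadata (PEP 723), which uv reads from a comment block at the top of a file. The following is a minimal sketch of that layout, assuming a hypothetical file name `sfa_hello.py` and an empty dependency list; it is not one of the repo's agents, and the model call is replaced by a stub.

```python
# /// script
# requires-python = ">=3.8"
# dependencies = []
# ///
"""Hypothetical single-file script; run with: uv run sfa_hello.py --prompt "hi"."""
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Command-line interface for the toy agent."""
    parser = argparse.ArgumentParser(description="Toy single-file agent skeleton")
    parser.add_argument("-p", "--prompt", default="hello", help="What the agent should do")
    return parser


def run(prompt: str) -> str:
    # A real agent would call a model provider here; this stub only echoes.
    return f"received prompt: {prompt}"


def main(argv=None) -> None:
    # parse_known_args ignores flags this sketch does not define.
    args, _unknown = build_parser().parse_known_args(argv)
    print(run(args.prompt))


if __name__ == "__main__":
    main()
```

Third-party packages would be listed inside `dependencies`, and uv would resolve them into a temporary environment before running the file.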

The project includes a test DuckDB database (data/analytics.db), a SQLite database (data/analytics.sqlite), and a JSON file (data/analytics.json) for testing purposes. The databases contain sample user data with the following characteristics:

- 30 sample users with varied attributes

- Fields: id (UUID), name, age, city, score, is_active, status, created_at

- Test data includes:

  - Names: Alice, Bob, Charlie, Diana, Eric, Fiona, Jane, John

  - Cities: Berlin, London, New York, Paris, Singapore, Sydney, Tokyo, Toronto

  - Status values: active, inactive, pending, archived

  - Age range: 20-65

  - Score range: 3.1-96.18

  - Date range: 2023-2025

Perfect for testing filtering, sorting, and aggregation operations with realistic data variations.
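To make the shape of this test data concrete, here is a small sketch that builds an in-memory SQLite table with the fields listed above and runs the "score above 80" filter used throughout the examples. The table name `users`, the column types, and the three rows are assumptions for illustration; they are not copied from the repo's data files.

```python
import sqlite3
import uuid

# In-memory database so the sketch leaves no files behind.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE users (
        id TEXT PRIMARY KEY,   -- UUID stored as text
        name TEXT,
        age INTEGER,
        city TEXT,
        score REAL,
        is_active INTEGER,     -- 0 or 1
        status TEXT,
        created_at TEXT        -- ISO date string
    )
    """
)

# Made-up rows that stay within the documented value ranges.
rows = [
    (str(uuid.uuid4()), "Alice", 34, "Berlin", 91.5, 1, "active", "2023-04-01"),
    (str(uuid.uuid4()), "Bob", 52, "Tokyo", 42.0, 0, "archived", "2024-09-15"),
    (str(uuid.uuid4()), "Diana", 27, "Paris", 80.0, 1, "pending", "2025-01-20"),
]
conn.executemany("INSERT INTO users VALUES (?, ?, ?, ?, ?, ?, ?, ?)", rows)


def users_above(threshold: float):
    """Return (name, score) pairs with score strictly above the threshold."""
    cur = conn.execute(
        "SELECT name, score FROM users WHERE score > ? ORDER BY score DESC",
        (threshold,),
    )
    return cur.fetchall()


print(users_above(80))  # only Alice: a score of exactly 80.0 is not "above 80"
```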

Note: We're using the term 'agent' loosely for some of these SFAs. We have prompts, prompt chains, and a couple are official Agents.

(sfa_jq_gemini_v1.py)

An AI-powered assistant that generates precise jq commands for JSON processing.
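The usage examples for this agent differ only in the `--exe` flag: with it, the generated command is executed; without it, the command is only printed. A minimal sketch of that command-line shape follows. `generate_jq_command` is a hypothetical stand-in for the Gemini call, and the jq filter it returns assumes the JSON file is an array of objects with a `score` field.

```python
import argparse
import subprocess


def generate_jq_command(request: str) -> str:
    # Hypothetical stub: a real agent would ask the model to write this command.
    return "jq '[.[] | select(.score > 80)]' data/analytics.json > high_scores.json"


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Generate a jq command from plain English")
    parser.add_argument("request", help="What the jq command should do")
    parser.add_argument("--exe", action="store_true", help="Also execute the generated command")
    return parser


def handle(argv, runner=subprocess.run) -> str:
    """Print the generated command and run it only when --exe is given."""
    args = build_parser().parse_args(argv)
    command = generate_jq_command(args.request)
    print(command)
    if args.exe:
        # shell=True because the generated command uses an output redirect.
        runner(command, shell=True, check=True)
    return command
```

Passing the runner as a parameter keeps the execution step easy to swap out, for example with a dry-run function while reviewing generated commands.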

Example usage:

# Generate and execute a jq command

uv run sfa_jq_gemini_v1.py --exe "Filter scores above 80 from data/analytics.json and save to high_scores.json"

# Generate command only

uv run sfa_jq_gemini_v1.py "Filter scores above 80 from data/analytics.json and save to high_scores.json"

(sfa_duckdb_openai_v2.py, sfa_duckdb_anthropic_v2.py, sfa_duckdb_gemini_v2.py, sfa_duckdb_gemini_v1.py)

We have three DuckDB agents that demonstrate different approaches and capabilities across major AI providers:

An AI-powered assistant that generates and executes DuckDB SQL queries using OpenAI's function calling capabilities.
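The `-c` flag in the usage examples caps the number of "compute loops", with a default of 10. One plausible reading is a bounded loop in which the model either calls a tool or returns a final answer. The sketch below assumes that reading; `fake_model`, the tool names, and the message format are hypothetical and stand in for the provider's function-calling API.

```python
from typing import Callable, Dict, List


def list_tables() -> str:
    # Hypothetical tool: a real agent would inspect the database.
    return "users"


def run_sql(query: str) -> str:
    # Hypothetical tool: a real agent would execute the query.
    return f"ran: {query}"


TOOLS: Dict[str, Callable[..., str]] = {"list_tables": list_tables, "run_sql": run_sql}


def fake_model(history: List[dict]) -> dict:
    """Scripted stand-in for a model: explore, query, then answer."""
    step = sum(1 for message in history if message["role"] == "tool")
    if step == 0:
        return {"tool": "list_tables", "args": {}}
    if step == 1:
        return {"tool": "run_sql", "args": {"query": "SELECT * FROM users WHERE score > 80"}}
    return {"final": history[-1]["content"]}


def agent_loop(prompt: str, model, tools, max_loops: int = 10) -> str:
    """Call the model until it returns a final answer or the loop budget runs out."""
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_loops):
        decision = model(history)
        if "final" in decision:
            return decision["final"]
        result = tools[decision["tool"]](**decision["args"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError(f"no final answer within {max_loops} compute loops")
```

A hard cap like this bounds cost and prevents a confused model from looping forever; a lower `-c` value trades some success rate for a tighter budget.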

Example usage:

# Run DuckDB agent with default compute loops (10)

uv run sfa_duckdb_openai_v2.py -d ./data/analytics.db -p "Show me all users with score above 80"

# Run with custom compute loops

uv run sfa_duckdb_openai_v2.py -d ./data/analytics.db -p "Show me all users with score above 80" -c 5

An AI-powered assistant that generates and executes DuckDB SQL queries using Claude's tool use capabilities.

Example usage:

# Run DuckDB agent with default compute loops (10)

uv run sfa_duckdb_anthropic_v2.py -d ./data/analytics.db -p "Show me all users with score above 80"

# Run with custom compute loops

uv run sfa_duckdb_anthropic_v2.py -d ./data/analytics.db -p "Show me all users with score above 80" -c 5

An AI-powered assistant that generates and executes DuckDB SQL queries using Gemini's function calling capabilities.

Example usage:

# Run DuckDB agent with default compute loops (10)

uv run sfa_duckdb_gemini_v2.py -d ./data/analytics.db -p "Show me all users with score above 80"

# Run with custom compute loops

uv run sfa_duckdb_gemini_v2.py -d ./data/analytics.db -p "Show me all users with score above 80" -c 5

An AI-powered assistant that generates comprehensive, structured prompts for language models.

Example usage:

# Generate a meta prompt using command-line arguments.

# Optional arguments are marked with a ?.

uv run sfa_meta_prompt_openai_v1.py \
    --purpose "generate mermaid diagrams" \
    --instructions "generate a mermaid valid chart, use diagram type specified or default flow, use examples to understand the structure of the output" \
    --sections "examples, user-prompt" \
    --examples "create examples of 3 basic mermaid charts with <user-chart-request> and <chart-response> blocks" \
    --variables "user-prompt"

# Without optional arguments, the script will enter interactive mode.

uv run sfa_meta_prompt_openai_v1.py \
    --purpose "generate mermaid diagrams" \
    --instructions "generate a mermaid valid chart, use diagram type specified or default flow, use examples to understand the structure of the output"

# Interactive Mode

# Just run the script without any flags to enter interactive mode.

# You'll be prompted step by step for:

# - Purpose (required): The main goal of your prompt

# - Instructions (required): Detailed instructions for the model

# - Sections (optional): Additional sections to include

# - Examples (optional): Example inputs and outputs

# - Variables (optional): Placeholders for dynamic content

uv run sfa_meta_prompt_openai_v1.py

Up for a challenge?

- Python 3.8+

- uv package manager

- GEMINI_API_KEY (for Gemini-based agents)

- OPENAI_API_KEY (for OpenAI-based agents)

- ANTHROPIC_API_KEY (for Anthropic-based agents)

- jq command-line JSON processor (for JQ agent)

- DuckDB CLI (for DuckDB agents)
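The requirements above can be checked before running an agent. The sketch below is a hypothetical preflight helper, not part of the repo: it looks for the listed environment variables and for the `uv`, `jq`, and `duckdb` executables on PATH, and reports what is missing.

```python
import os
import shutil

# Environment variables from the requirements list and what needs them.
REQUIRED_ENV = {
    "GEMINI_API_KEY": "Gemini-based agents",
    "OPENAI_API_KEY": "OpenAI-based agents",
    "ANTHROPIC_API_KEY": "Anthropic-based agents",
}

# Executables from the requirements list and what needs them.
REQUIRED_TOOLS = {
    "uv": "running the scripts",
    "jq": "JQ agent",
    "duckdb": "DuckDB agents",
}


def missing_requirements(env=None, which=shutil.which):
    """Return human-readable messages for every unmet requirement."""
    env = os.environ if env is None else env
    problems = []
    for name, used_by in REQUIRED_ENV.items():
        if not env.get(name):
            problems.append(f"{name} is not set (needed for {used_by})")
    for tool, used_by in REQUIRED_TOOLS.items():
        if which(tool) is None:
            problems.append(f"{tool} not found on PATH (needed for {used_by})")
    return problems


if __name__ == "__main__":
    for problem in missing_requirements():
        print(problem)
```

Only the keys and tools for the agents you plan to run are actually needed, so the output is best read as a checklist, not a hard failure.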

macOS:

brew install jq

Windows:

- Download from stedolan.github.io/jq/download

- Or install with Chocolatey:

choco install jq

macOS:

brew install duckdb

Windows:

- Download the CLI executable from duckdb.org/docs/installation

- Add the executable location to your system PATH

- Install uv:

curl -LsSf https://astral.sh/uv/install.sh | sh

- Clone this repository:

git clone <repository-url>

- Set your Gemini API key (for JQ generator):

export GEMINI_API_KEY='your-api-key-here'

# Set your OpenAI API key (for DuckDB agents):

export OPENAI_API_KEY='your-api-key-here'

# Set your Anthropic API key (for DuckDB agents):

export ANTHROPIC_API_KEY='your-api-key-here'

- uv - The engineers creating uv are built different. Thank you for fixing the Python ecosystem.

- Simon Willison - Simon introduced me to the fact that you can use uv to run single-file Python scripts with dependencies. Massive thanks for all your work. He runs one of the most valuable blogs for engineers in the world.

- Building Effective Agents - A proper breakdown of how to build useful units of value built on top of GenAI.

- Part Time Larry - Larry has a great breakdown on the new Python GenAI library and delivers great hands-on, actionable GenAI x Finance information.

- Aider - AI Coding done right. Maximum control over your AI Coding Experience. Enough said.

Read README.md, CLAUDE.md, ai_docs/*, and run git ls-files to understand this codebase.

MIT License - feel free to use this code in your own projects.

If you find value from my work: give a shout out and tag my YT channel IndyDevDan.

Alternative AI tools for single-file-agents

Similar Open Source Tools

single-file-agents

Single File Agents (SFA) is a collection of powerful single-file agents built on top of uv, a modern Python package installer and resolver. These agents aim to perform specific tasks efficiently, demonstrating precise prompt engineering and GenAI patterns. The repository contains agents built across major GenAI providers like Gemini, OpenAI, and Anthropic. Each agent is self-contained, minimal, and built on modern Python for fast and reliable dependency management. Users can run these scripts from their server or directly from a gist. The agents are patternful, emphasizing the importance of setting up effective prompts, tools, and processes for reusability.

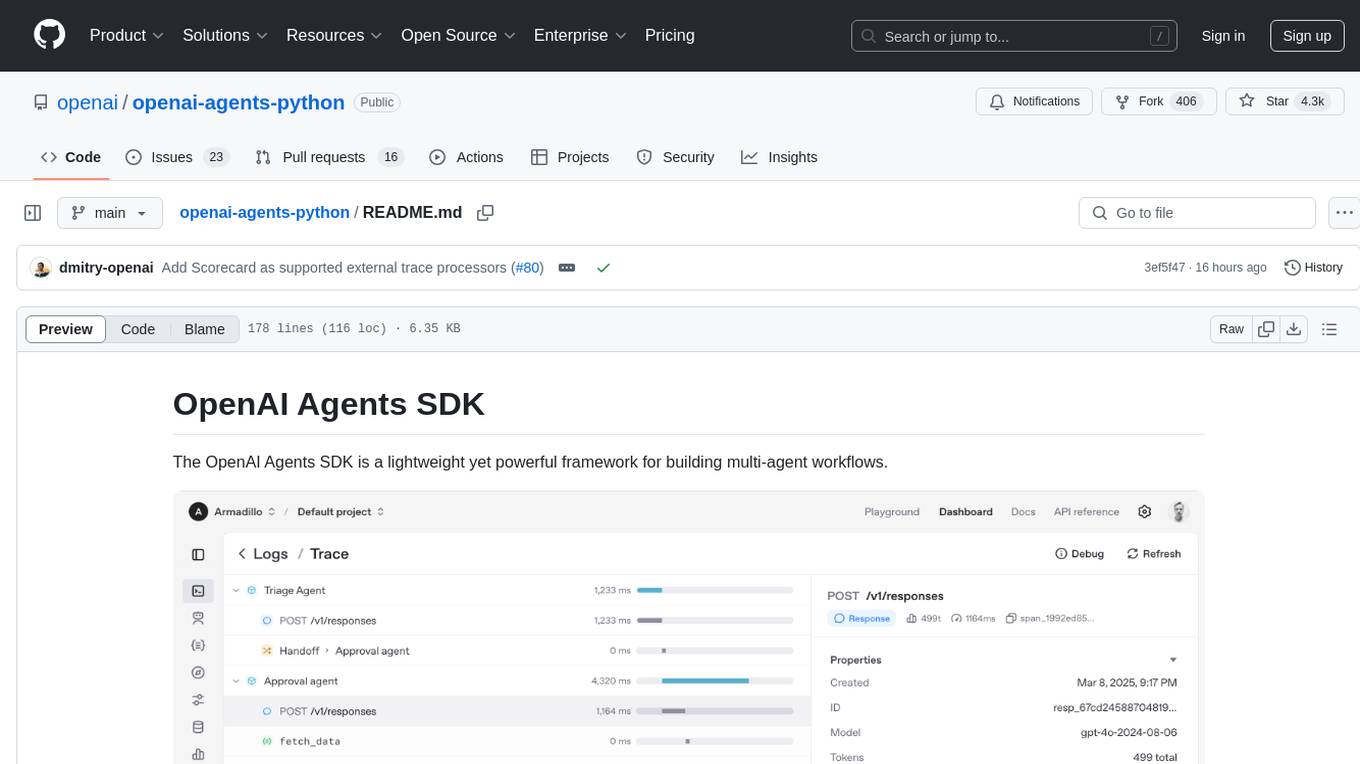

openai-agents-python

The OpenAI Agents SDK is a lightweight framework for building multi-agent workflows. It includes concepts like Agents, Handoffs, Guardrails, and Tracing to facilitate the creation and management of agents. The SDK is compatible with any model providers supporting the OpenAI Chat Completions API format. It offers flexibility in modeling various LLM workflows and provides automatic tracing for easy tracking and debugging of agent behavior. The SDK is designed for developers to create deterministic flows, iterative loops, and more complex workflows.

PSAI

PSAI is a PowerShell module that empowers scripts with the intelligence of OpenAI, bridging the gap between PowerShell and AI. It enables seamless integration for tasks like file searches and data analysis, revolutionizing automation possibilities with just a few lines of code. The module supports the latest OpenAI API changes, offering features like improved file search, vector store objects, token usage control, message limits, tool choice parameter, custom conversation histories, and model configuration parameters.

promptwright

Promptwright is a Python library designed for generating large synthetic datasets using a local LLM and various LLM service providers. It offers flexible interfaces for generating prompt-led synthetic datasets. The library supports multiple providers, configurable instructions and prompts, YAML configuration for tasks, command line interface for running tasks, push to Hugging Face Hub for dataset upload, and system message control. Users can define generation tasks using YAML configuration or Python code. Promptwright integrates with LiteLLM to interface with LLM providers and supports automatic dataset upload to Hugging Face Hub.

SWELancer-Benchmark

SWE-Lancer is a benchmark repository containing datasets and code for the paper 'SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?'. It provides instructions for package management, building Docker images, configuring environment variables, and running evaluations. Users can use this tool to assess the performance of language models in real-world freelance software engineering tasks.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

neo4j-graphrag-python

The Neo4j GraphRAG package for Python is an official repository that provides features for creating and managing vector indexes in Neo4j databases. It aims to offer developers a reliable package with long-term commitment, maintenance, and fast feature updates. The package supports various Python versions and includes functionalities for creating vector indexes, populating them, and performing similarity searches. It also provides guidelines for installation, examples, and development processes such as installing dependencies, making changes, and running tests.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

cover-agent

CodiumAI Cover Agent is a tool designed to help increase code coverage by automatically generating qualified tests to enhance existing test suites. It utilizes Generative AI to streamline development workflows and is part of a suite of utilities aimed at automating the creation of unit tests for software projects. The system includes components like Test Runner, Coverage Parser, Prompt Builder, and AI Caller to simplify and expedite the testing process, ensuring high-quality software development. Cover Agent can be run via a terminal and is planned to be integrated into popular CI platforms. The tool outputs debug files locally, such as generated_prompt.md, run.log, and test_results.html, providing detailed information on generated tests and their status. It supports multiple LLMs and allows users to specify the model to use for test generation.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

safety-tooling

This repository, safety-tooling, is designed to be shared across various AI Safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. The aim is to facilitate collaboration among AI Safety researchers, especially those with limited software engineering backgrounds, by offering a platform for contributing to a larger codebase. The repo can be used as a git submodule for easy collaboration and updates. It also supports pip installation for convenience. The repository includes features for installation, secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

wcgw

wcgw is a shell and coding agent designed for Claude and Chatgpt. It provides full shell access with no restrictions, desktop control on Claude for screen capture and control, interactive command handling, large file editing, and REPL support. Users can use wcgw to create, execute, and iterate on tasks, such as solving problems with Python, finding code instances, setting up projects, creating web apps, editing large files, and running server commands. Additionally, wcgw supports computer use on Docker containers for desktop control. The tool can be extended with a VS Code extension for pasting context on Claude app and integrates with Chatgpt for custom GPT interactions.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

For similar tasks

mcp-server-mysql

The MCP Server for MySQL based on NodeJS is a Model Context Protocol server that provides access to MySQL databases. It enables users to inspect database schemas and execute SQL queries. The server offers tools for executing SQL queries, providing comprehensive database information, security features like SQL injection prevention, performance optimizations, monitoring, and debugging capabilities. Users can configure the server using environment variables and advanced options. The server supports multi-DB mode, schema-specific permissions, and includes troubleshooting guidelines for common issues. Contributions are welcome, and the project roadmap includes enhancing query capabilities, security features, performance optimizations, monitoring, and expanding schema information.

db-ally

db-ally is a library for creating natural language interfaces to data sources. It allows developers to outline specific use cases for a large language model (LLM) to handle, detailing the desired data format and the possible operations to fetch this data. db-ally effectively shields the complexity of the underlying data source from the model, presenting only the essential information needed for solving the specific use cases. Instead of generating arbitrary SQL, the model is asked to generate responses in a simplified query language.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.

markdowner

Markdowner is a fast tool designed to convert any website into LLM-ready markdown data. It aims to improve the quality of responses in the AI app Supermemory by structuring and predicting data in markdown format. The tool offers features such as website conversion, LLM filtering, detailed markdown mode, auto crawler, text and JSON responses, and easy self-hosting. Markdowner utilizes Cloudflare's Browser rendering and Durable objects for browser instance creation and markdown conversion. Users can self-host the project with the Workers paid plan, following simple steps. Support the project by starring the repository.

letsql

LETSQL is a data processing library built on top of Ibis and DataFusion to write multi-engine data workflows. It is currently in development and does not have a stable release. Users can install LETSQL from PyPI and use it to connect to data sources, read data, filter, group, and aggregate data for analysis. Contributions to the project are welcome, and the library is actively maintained with support available for any issues. LETSQL heavily relies on Ibis and DataFusion for its functionality.

qsv

qsv is a command line program for querying, slicing, indexing, analyzing, filtering, enriching, transforming, sorting, validating, joining, formatting & converting tabular data (CSV, spreadsheets, DBs, parquet, etc). Commands are simple, composable & 'blazing fast'. It is a blazing-fast data-wrangling toolkit with a focus on speed, processing very large files, and being a complete data-wrangling toolkit. It is designed to be portable, easy to use, secure, and easy to contribute to. qsv follows the RFC 4180 CSV standard, requires UTF-8 encoding, and supports various file formats. It has extensive shell completion support, automatic compression/decompression using Snappy, and supports environment variables and dotenv files. qsv has a comprehensive test suite and is dual-licensed under MIT or the UNLICENSE.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.