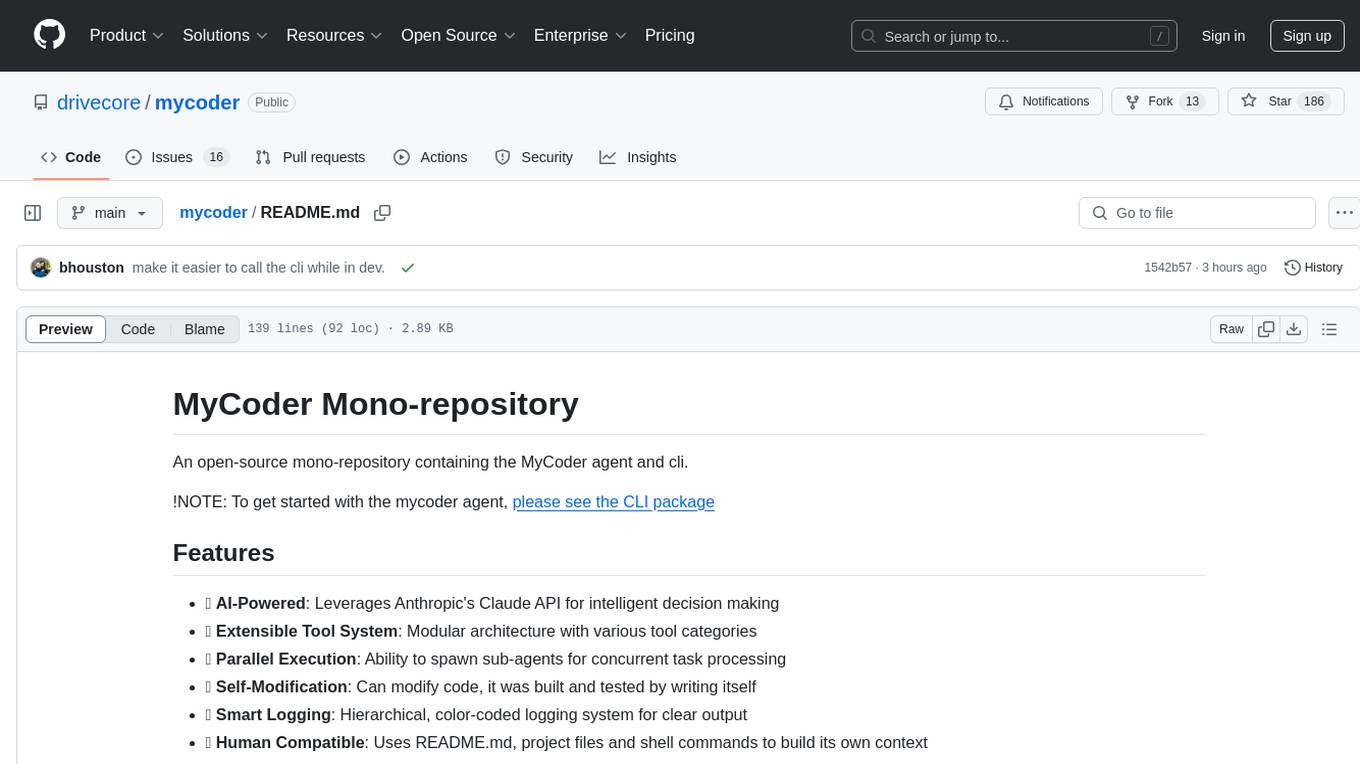

mycoder

Simple to install, powerful command-line based AI agent system for coding.

Stars: 342

An open-source mono-repository containing the MyCoder agent and CLI. It leverages Anthropic's Claude API for intelligent decision making, has a modular architecture with various tool categories, supports parallel execution with sub-agents, can modify code (it was built and tested by writing itself), features a smart logging system for clear output, and is human-compatible, using README.md, project files, and shell commands to build its own context.

README:

Command-line interface for AI-powered coding tasks. Full details are available on the main MyCoder.ai website and the official MyCoder.ai docs website.

- 🤖 AI-Powered: Leverages Anthropic's Claude, OpenAI models, and Ollama for intelligent coding assistance

- 🛠️ Extensible Tool System: Modular architecture with various tool categories

- 🔄 Parallel Execution: Ability to spawn sub-agents for concurrent task processing

- 📝 Self-Modification: Can modify code; it was built and tested by writing itself

- 🔍 Smart Logging: Hierarchical, color-coded logging system for clear output

- 👤 Human Compatible: Uses README.md, project files and shell commands to build its own context

- 🌐 GitHub Integration: GitHub mode for working with issues and PRs as part of workflow

- 📄 Model Context Protocol: Support for MCP to access external context sources

- 🧠 Message Compaction: Automatic management of context window for long-running agents

Please join the MyCoder.ai discord for support: https://discord.gg/5K6TYrHGHt

npm install -g mycoder

For detailed installation instructions for macOS and Linux, including how to set up Node.js using NVM, see our Getting Started guide.

# Interactive mode

mycoder -i

# Run with a prompt

mycoder "Implement a React component that displays a list of items"

# Run with a prompt from a file

mycoder -f prompt.txt

# Enable interactive corrections during execution (press Ctrl+M to send corrections)

mycoder --interactive "Implement a React component that displays a list of items"

# Disable user prompts for fully automated sessions

mycoder --userPrompt false "Generate a basic Express.js server"

# Disable user consent warning and version upgrade check for automated environments

mycoder --upgradeCheck false "Generate a basic Express.js server"

MyCoder is configured using a configuration file in your project. It supports multiple configuration file locations and formats, similar to ESLint and other modern JavaScript tools.

MyCoder will look for configuration in the following locations (in order of precedence):

- `mycoder.config.js` in your project root
- `.mycoder.config.js` in your project root
- `.config/mycoder.js` in your project root
- `.mycoder.rc` in your project root
- `.mycoder.rc` in your home directory
- `mycoder` field in `package.json`
- `~/.config/mycoder/config.js` (XDG standard user configuration)

Multiple file extensions are supported: .js, .ts, .mjs, .cjs, .json, .jsonc, .json5, .yaml, .yml, and .toml.
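The "first match wins" lookup described above can be sketched in a few lines of JavaScript. This is a hypothetical illustration, not MyCoder's actual loader: the `findConfig` and `candidatePaths` names, the injected `fileExists` and `hasMycoderField` callbacks, and the assumption that extensions are tried in the listed order within each location are all made up for the example.

```javascript
// Hypothetical sketch of first-match-wins config discovery (not MyCoder's loader).
// Assumptions: extensions are tried in the order the README lists them,
// and `.mycoder.rc` is matched as an exact file name.
const EXTENSIONS = ['.js', '.ts', '.mjs', '.cjs', '.json', '.jsonc', '.json5', '.yaml', '.yml', '.toml'];

// Build the ordered candidate list; earlier entries take precedence.
function candidatePaths(projectRoot, homeDir) {
  const withExtensions = (base) => EXTENSIONS.map((ext) => ({ kind: 'file', path: base + ext }));
  return [
    ...withExtensions(`${projectRoot}/mycoder.config`),
    ...withExtensions(`${projectRoot}/.mycoder.config`),
    ...withExtensions(`${projectRoot}/.config/mycoder`),
    { kind: 'file', path: `${projectRoot}/.mycoder.rc` },
    { kind: 'file', path: `${homeDir}/.mycoder.rc` },
    { kind: 'packageField', path: `${projectRoot}/package.json` },
    ...withExtensions(`${homeDir}/.config/mycoder/config`),
  ];
}

// Return the first candidate that exists, or null if none is found.
// `fileExists(path)` and `hasMycoderField(path)` are injected so the
// precedence logic stays independent of the real file system.
function findConfig(projectRoot, homeDir, fileExists, hasMycoderField) {
  for (const candidate of candidatePaths(projectRoot, homeDir)) {
    if (!fileExists(candidate.path)) continue;
    // package.json only counts if it actually contains a `mycoder` field
    if (candidate.kind === 'packageField' && !hasMycoderField(candidate.path)) continue;
    return candidate;
  }
  return null;
}

// Usage: a project-level .mycoder.rc beats the XDG user configuration.
const files = new Set(['/proj/.mycoder.rc', '/home/me/.config/mycoder/config.js']);
const found = findConfig('/proj', '/home/me', (p) => files.has(p), () => false);
console.log(found.path); // /proj/.mycoder.rc
```

Injecting the existence checks keeps the ordering rule easy to test; a real loader would also parse the matched file according to its extension.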

Create a configuration file in your preferred location:

// mycoder.config.js

export default {

// GitHub integration

githubMode: true,

// Browser settings

headless: true,

userSession: false,

// System browser detection settings

browser: {

// Whether to use system browsers or Playwright's bundled browsers

useSystemBrowsers: true,

// Preferred browser type (chromium, firefox, webkit)

preferredType: 'chromium',

// Custom browser executable path (overrides automatic detection)

// executablePath: null, // e.g., '/path/to/chrome'

},

// Model settings

provider: 'anthropic',

model: 'claude-3-7-sonnet-20250219',

maxTokens: 4096,

temperature: 0.7,

// Custom settings

// customPrompt can be a string or an array of strings for multiple lines

customPrompt: '',

// Example of multiple line custom prompts:

// customPrompt: [

// 'Custom instruction line 1',

// 'Custom instruction line 2',

// 'Custom instruction line 3',

// ],

profile: false,

// Base URL configuration (for providers that need it)

baseUrl: 'http://localhost:11434', // Example for Ollama

// MCP configuration

mcp: {

servers: [

{

name: 'example',

url: 'https://mcp.example.com',

auth: {

type: 'bearer',

token: 'your-token-here',

},

},

],

defaultResources: ['example://docs/api'],

defaultTools: ['example://tools/search'],

},

};

CLI arguments will override settings in your configuration file.
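A rough sketch of that precedence rule follows. It assumes a shallow merge in which options left unset on the command line arrive as `undefined` and therefore do not replace file values; `mergeSettings` is a hypothetical helper, and MyCoder's real merge (for example, how it treats nested objects such as `browser`) may differ.

```javascript
// Hypothetical sketch: command-line values override configuration-file values.
// Assumption: unset CLI options are `undefined`. This is a shallow merge, so a
// nested object such as `browser` supplied via the CLI would replace the file's object.
function mergeSettings(fileConfig, cliArgs) {
  const merged = { ...fileConfig }; // copy so the file config is not mutated
  for (const [key, value] of Object.entries(cliArgs)) {
    if (value !== undefined) merged[key] = value;
  }
  return merged;
}

// Illustrative values only
const fileConfig = { provider: 'anthropic', model: 'claude-3-7-sonnet-20250219', headless: true };
const cliArgs = { headless: false, model: undefined };
const effective = mergeSettings(fileConfig, cliArgs);
console.log(effective.headless, effective.model); // false claude-3-7-sonnet-20250219
```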

MyCoder supports sending corrections to the main agent while it's running. This is useful when you notice the agent is going off track or needs additional information.

- Start MyCoder with the `--interactive` flag: `mycoder --interactive "Implement a React component"`
- While the agent is running, press Ctrl+M to enter correction mode
- Type your correction or additional context
- Press Enter to send the correction to the agent

The agent will receive your message and incorporate it into its decision-making process, similar to how parent agents can send messages to sub-agents.
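One way to picture this is a message queue that the agent drains between steps. This is a hypothetical model for illustration, not MyCoder's internals: the `CorrectionInbox` class, the `runAgent` loop, and the choice to check for corrections before each step are all assumptions.

```javascript
// Hypothetical model of mid-run corrections (not MyCoder's internals):
// the user pushes messages into an inbox, and the agent drains it
// before each step so new guidance shapes the next decision.
class CorrectionInbox {
  constructor() {
    this.pending = [];
  }
  send(message) {
    this.pending.push(message);
  }
  // Return every correction received since the last call and clear the queue.
  drain() {
    const messages = this.pending;
    this.pending = [];
    return messages;
  }
}

// `performStep` stands in for whatever work the agent does each turn.
function runAgent(steps, inbox, performStep) {
  const transcript = [];
  for (const step of steps) {
    for (const correction of inbox.drain()) {
      transcript.push(`correction: ${correction}`);
    }
    performStep(step, inbox);
    transcript.push(`step: ${step}`);
  }
  return transcript;
}

// Usage: a correction sent while step "a" runs is seen before step "b".
const inbox = new CorrectionInbox();
const transcript = runAgent(['a', 'b'], inbox, (step, box) => {
  if (step === 'a') box.send('use function components');
});
console.log(transcript.join(' | ')); // step: a | correction: use function components | step: b
```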

You can enable interactive corrections in your configuration file:

// mycoder.config.js

export default {

// ... other options

interactive: true,

};

MyCoder can be triggered directly from GitHub issue comments using the flexible /mycoder command:

/mycoder [your instructions here]

Examples:

/mycoder implement a PR for this issue

/mycoder create an implementation plan

/mycoder suggest test cases for this feature

Learn more about GitHub comment commands
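To make the command format concrete, here is a small parser for a comment body. It is a hypothetical sketch, not how MyCoder's GitHub integration is implemented: `parseMycoderCommand` is an invented name, and the rules that the command must start a line, that only the first occurrence counts, and that instructions end at the line break are assumptions.

```javascript
// Hypothetical sketch: pull the instructions out of a GitHub comment that
// uses the /mycoder command. Assumptions: the command must start a line,
// only the first occurrence counts, and instructions end at the line break.
function parseMycoderCommand(commentBody) {
  // `m` flag: ^ and $ match at line boundaries; \S requires real instructions
  const match = commentBody.match(/^\/mycoder[ \t]+(\S.*)$/m);
  return match ? match[1].trim() : null;
}

console.log(parseMycoderCommand('Looks good.\n/mycoder create an implementation plan'));
// create an implementation plan
```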

The mono-repository contains the following packages:

- mycoder - Command-line interface for MyCoder

- mycoder-agent - Agent module for MyCoder

- mycoder-docs - Documentation website for MyCoder

# Clone the repository

git clone https://github.com/drivecore/mycoder.git

cd mycoder

# Install dependencies

pnpm install

# Build all packages

pnpm build

# Run tests

pnpm test

# Create a commit with interactive prompt

pnpm commit

MyCoder follows the Conventional Commits specification for commit messages. Our release process is fully automated:

- Commit your changes following the Conventional Commits format
- Create a PR and get it reviewed and approved
- When merged to main, our CI/CD pipeline will:
  - Determine the next version based on commit messages
  - Generate a changelog
  - Create a GitHub Release
  - Tag the release
  - Publish to NPM

For more details, see the Contributing Guide.
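The README does not say which tool performs the "determine the next version" step. As an illustration only, here is a sketch of the mapping that Conventional Commits tooling commonly applies: breaking changes bump the major version, `feat` bumps the minor version, and `fix` bumps the patch version. `releaseType` and `nextVersion` are hypothetical helpers, and treating `perf` as a patch release is an assumption borrowed from common presets.

```javascript
// Sketch of the usual Conventional Commits -> SemVer mapping:
// breaking change -> major, feat -> minor, fix or perf -> patch.
// Assumptions: other types (docs, chore, ...) do not trigger a release, and
// pre-1.0 and prerelease rules are ignored.
const HEADER = /^(\w+)(\([^)]*\))?(!)?: /; // type, optional (scope), optional !
const LEVELS = [null, 'patch', 'minor', 'major'];

function releaseType(messages) {
  let level = 0;
  for (const message of messages) {
    const header = message.match(HEADER);
    if (!header) continue; // not a Conventional Commit
    const [, type, , bang] = header;
    if (bang || /^BREAKING[ -]CHANGE: /m.test(message)) level = Math.max(level, 3);
    else if (type === 'feat') level = Math.max(level, 2);
    else if (type === 'fix' || type === 'perf') level = Math.max(level, 1);
  }
  return LEVELS[level];
}

function nextVersion(current, messages) {
  const bump = releaseType(messages);
  const [major, minor, patch] = current.split('.').map(Number);
  if (bump === 'major') return `${major + 1}.0.0`;
  if (bump === 'minor') return `${major}.${minor + 1}.0`;
  if (bump === 'patch') return `${major}.${minor}.${patch + 1}`;
  return current; // nothing releasable
}

console.log(nextVersion('1.4.2', ['fix: handle empty prompt', 'feat(cli): add a flag'])); // 1.5.0
```

The highest-impact commit since the last release decides the bump, which is why a single `feat` outweighs any number of `fix` commits.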

MyCoder uses Playwright for browser automation, which is used by the sessionStart and sessionMessage tools. By default, Playwright requires browsers to be installed separately via npx playwright install.

MyCoder now includes a system browser detection feature that allows it to use your existing installed browsers instead of requiring separate Playwright browser installations. This is particularly useful when MyCoder is installed globally.

The system browser detection:

- Automatically detects installed browsers on Windows, macOS, and Linux

- Supports Chrome, Edge, Firefox, and other browsers

- Maintains headless mode and clean session capabilities

- Falls back to Playwright's bundled browsers if no system browser is found
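The detection-with-fallback behaviour described above can be sketched as follows, honouring the `useSystemBrowsers`, `preferredType`, and `executablePath` options from the configuration file. This is a hypothetical illustration, not MyCoder's implementation: `selectBrowser` and the `CANDIDATES` table are invented, the paths are common default install locations given as assumptions, and falling back to another detected browser when the preferred type is missing is also an assumption.

```javascript
// Hypothetical sketch of system-browser detection with a fallback.
// The candidate paths are common default install locations, given only as
// illustrative assumptions; MyCoder's real detection list may differ.
const CANDIDATES = {
  darwin: [
    { type: 'chromium', path: '/Applications/Google Chrome.app/Contents/MacOS/Google Chrome' },
    { type: 'chromium', path: '/Applications/Microsoft Edge.app/Contents/MacOS/Microsoft Edge' },
    { type: 'firefox', path: '/Applications/Firefox.app/Contents/MacOS/firefox' },
  ],
  linux: [
    { type: 'chromium', path: '/usr/bin/google-chrome' },
    { type: 'chromium', path: '/usr/bin/microsoft-edge' },
    { type: 'firefox', path: '/usr/bin/firefox' },
  ],
  win32: [
    { type: 'chromium', path: 'C:\\Program Files\\Google\\Chrome\\Application\\chrome.exe' },
    { type: 'chromium', path: 'C:\\Program Files (x86)\\Microsoft\\Edge\\Application\\msedge.exe' },
    { type: 'firefox', path: 'C:\\Program Files\\Mozilla Firefox\\firefox.exe' },
  ],
};

// `platform` mirrors Node's process.platform values; `fileExists` is injected.
function selectBrowser(platform, browserConfig, fileExists) {
  const { useSystemBrowsers = true, preferredType = 'chromium', executablePath } = browserConfig;
  // An explicit executable path overrides automatic detection.
  if (executablePath) return { source: 'custom', type: preferredType, path: executablePath };
  if (!useSystemBrowsers) return { source: 'bundled', type: preferredType };
  const found = (CANDIDATES[platform] || []).filter((c) => fileExists(c.path));
  // Prefer the configured type; otherwise take any detected browser (assumption).
  const choice = found.find((c) => c.type === preferredType) || found[0];
  // No system browser found: fall back to Playwright's bundled browser.
  return choice ? { source: 'system', ...choice } : { source: 'bundled', type: preferredType };
}

const installed = new Set(['/usr/bin/google-chrome', '/usr/bin/firefox']);
const chosen = selectBrowser('linux', { preferredType: 'firefox' }, (p) => installed.has(p));
console.log(chosen.path); // /usr/bin/firefox
```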

You can configure the browser detection in your mycoder.config.js:

export default {

// Other configuration...

// System browser detection settings

browser: {

// Whether to use system browsers or Playwright's bundled browsers

useSystemBrowsers: true,

// Preferred browser type (chromium, firefox, webkit)

preferredType: 'chromium',

// Custom browser executable path (overrides automatic detection)

// executablePath: null, // e.g., '/path/to/chrome'

},

};

Please see CONTRIBUTING.md for details on how to contribute to this project.

This project is licensed under the MIT License - see the LICENSE file for details.


Alternative AI tools for mycoder

Similar Open Source Tools


cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

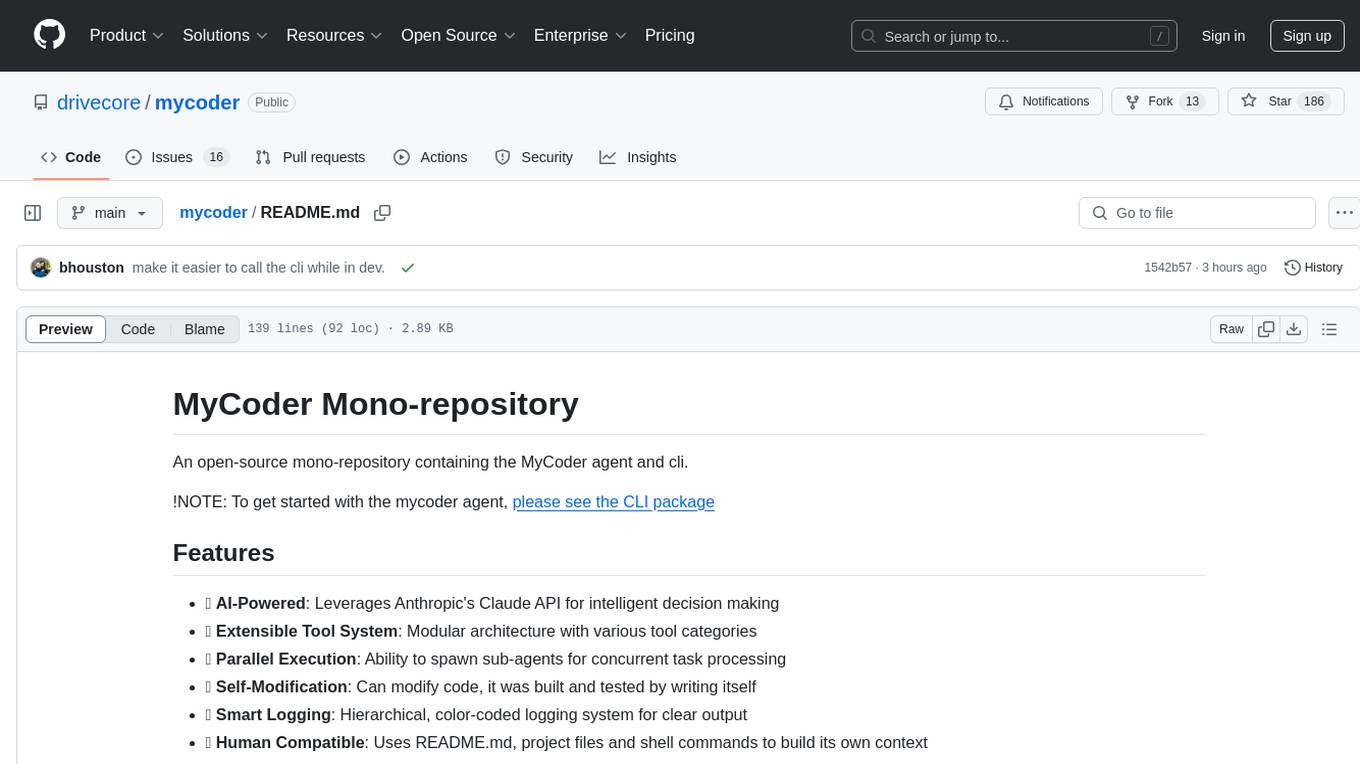

mcp-server

The UI5 Model Context Protocol server offers tools to improve the developer experience when working with agentic AI tools. It helps with creating new UI5 projects, detecting and fixing UI5-specific errors, and providing additional UI5-specific information for agentic AI tools. The server supports various tools such as scaffolding new UI5 applications, fetching UI5 API documentation, providing UI5 development best practices, extracting metadata and configuration from UI5 projects, retrieving version information for the UI5 framework, analyzing and reporting issues in UI5 code, offering guidelines for converting UI5 applications to TypeScript, providing UI Integration Cards development best practices, scaffolding new UI Integration Cards, and validating the manifest against the UI5 Manifest schema. The server requires specific Node.js and npm versions, along with an MCP client such as VS Code or Cline. Configuration options are available for customizing the server's behavior, and specific setup instructions are provided for MCP clients like VS Code and Cline.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

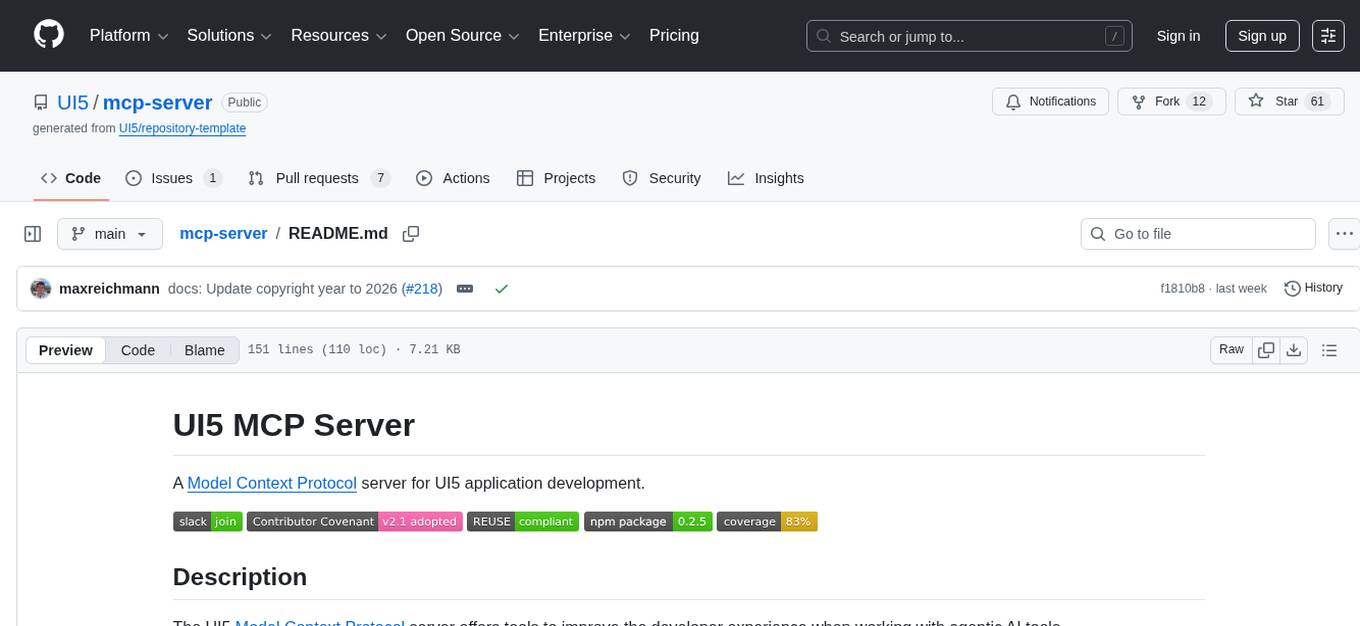

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

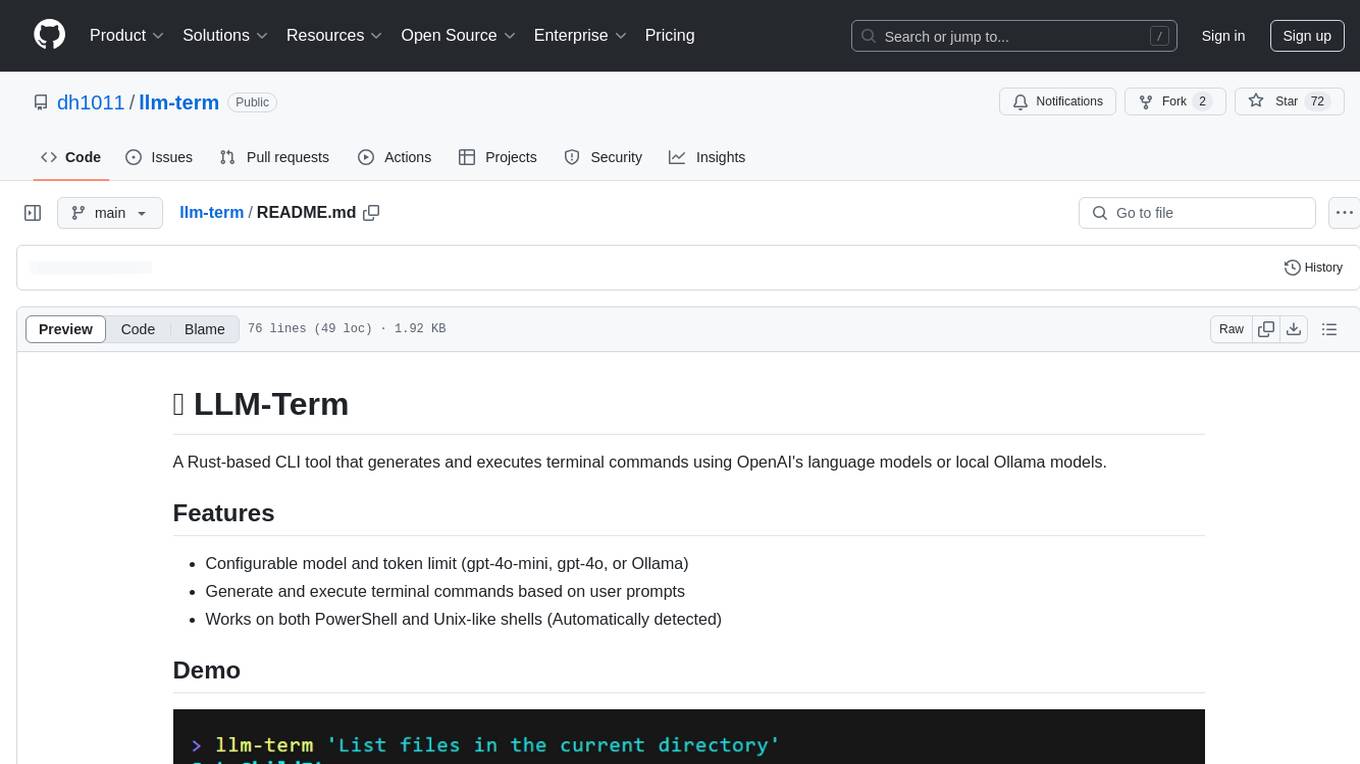

llm-term

LLM-Term is a Rust-based CLI tool that generates and executes terminal commands using OpenAI's language models or local Ollama models. It offers configurable model and token limits, works on both PowerShell and Unix-like shells, and provides a seamless user experience for generating commands based on prompts. Users can easily set up the tool, customize configurations, and leverage different models for command generation.

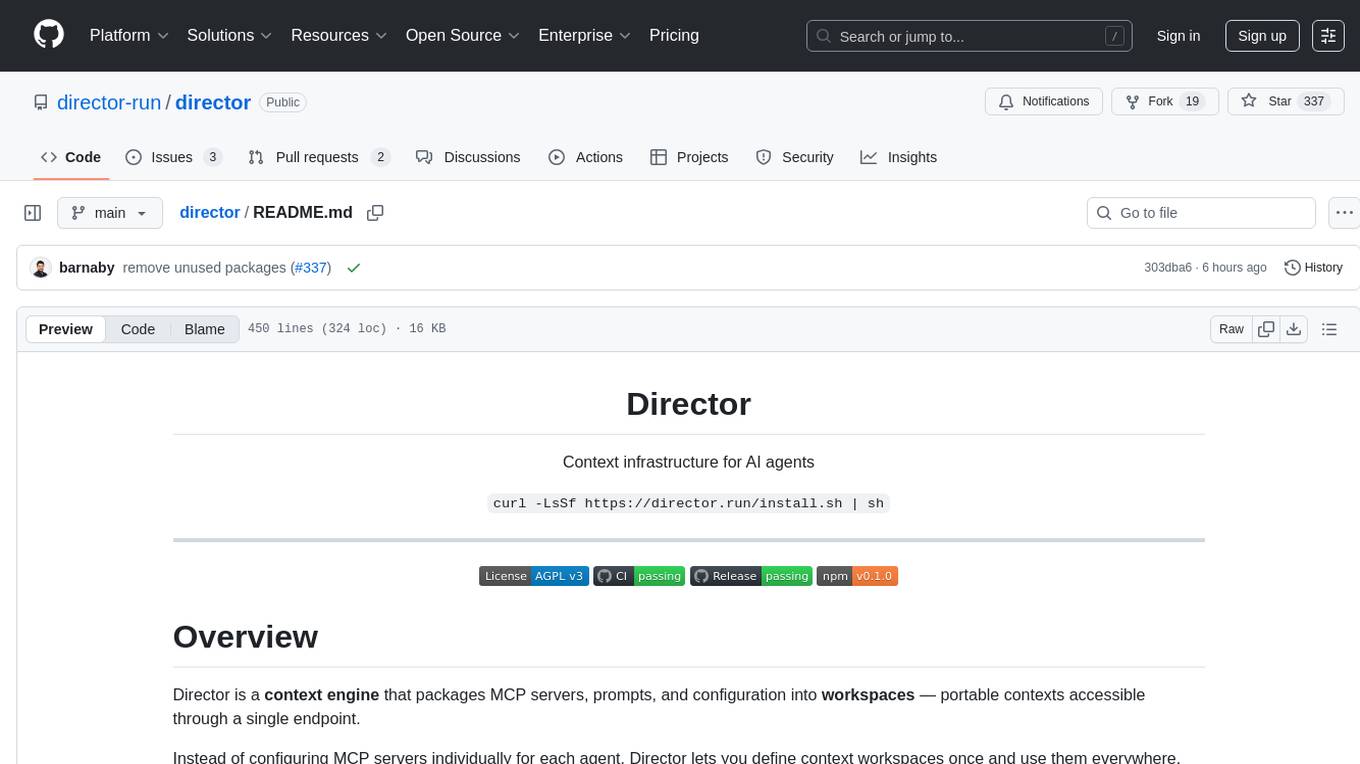

director

Director is a context infrastructure tool for AI agents that simplifies managing MCP servers, prompts, and configurations by packaging them into portable workspaces accessible through a single endpoint. It allows users to define context workspaces once and share them across different AI clients, enabling seamless collaboration, instant context switching, and secure isolation of untrusted servers without cloud dependencies or API keys. Director offers features like workspaces, universal portability, local-first architecture, sandboxing, smart filtering, unified OAuth, observability, multiple interfaces, and compatibility with all MCP clients and servers.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. Its key features are:

- Personalized Experience: Optimizes command generation with RAG.
- Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more.
- Shell Extensions: Plugs into popular shells like `zsh`, `bash` and `fish`.
- Cross Platform: Runs on Windows, macOS, and Linux.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

chat-mcp

A Cross-Platform Interface for Large Language Models (LLMs) utilizing the Model Context Protocol (MCP) to connect and interact with various LLMs. The desktop app, built on Electron, ensures compatibility across Linux, macOS, and Windows. It simplifies understanding MCP principles, facilitates testing of multiple servers and LLMs, and supports dynamic LLM configuration and multi-client management. The UI can be extracted for web use, ensuring consistency across web and desktop versions.

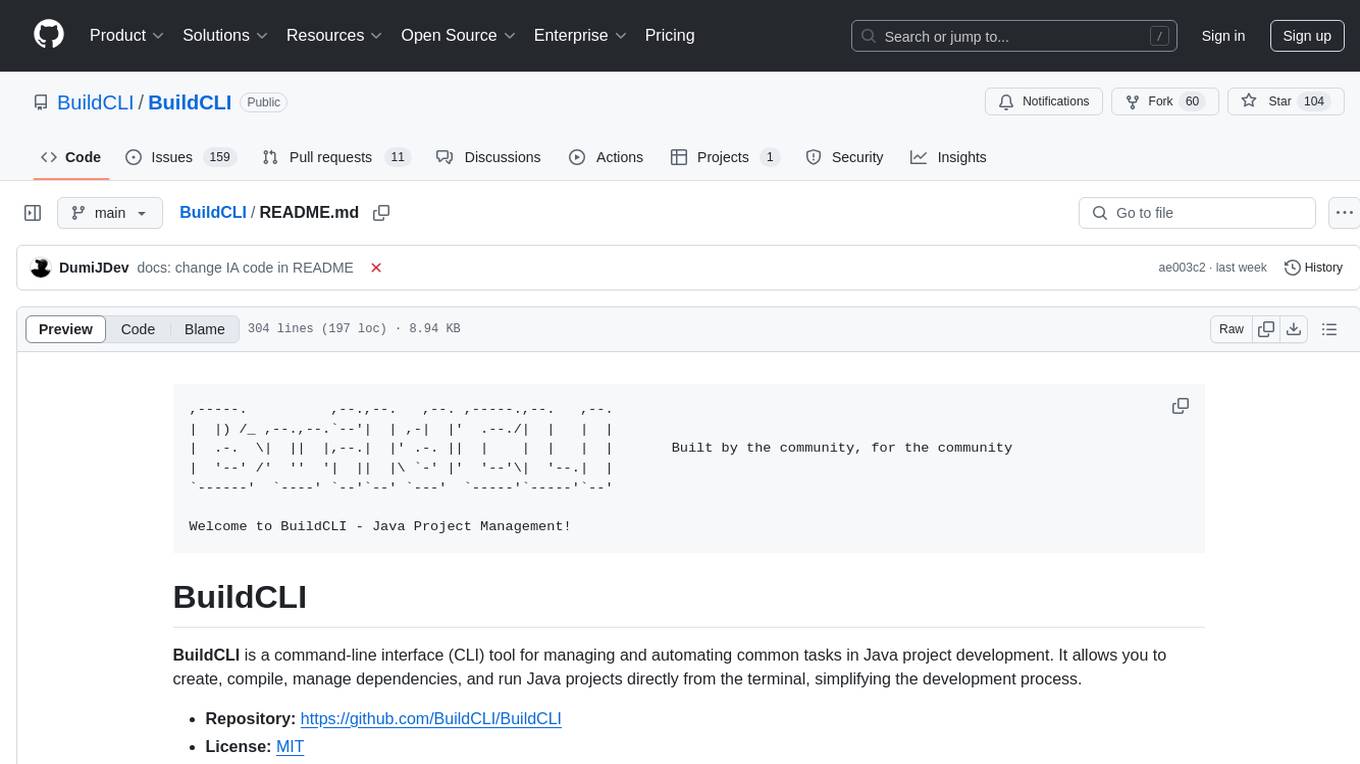

BuildCLI

BuildCLI is a command-line interface (CLI) tool designed for managing and automating common tasks in Java project development. It simplifies the development process by allowing users to create, compile, manage dependencies, run projects, generate documentation, manage configuration profiles, dockerize projects, integrate CI/CD tools, and generate structured changelogs. The tool aims to enhance productivity and streamline Java project management by providing a range of functionalities accessible directly from the terminal.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

SWELancer-Benchmark

SWE-Lancer is a benchmark repository containing datasets and code for the paper 'SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?'. It provides instructions for package management, building Docker images, configuring environment variables, and running evaluations. Users can use this tool to assess the performance of language models in real-world freelance software engineering tasks.

chat-ui

This repository provides a minimalist approach to create a chatbot by constructing the entire front-end UI using a single HTML file. It supports various backend endpoints through custom configurations, multiple response formats, chat history download, and MCP. Users can deploy the chatbot locally, via Docker, Cloudflare pages, Huggingface, or within K8s. The tool also supports image inputs, toggling between different display formats, internationalization, and localization.

agents-starter

A starter template for building AI-powered chat agents using Cloudflare's Agent platform, powered by agents-sdk. It provides a foundation for creating interactive chat experiences with AI, complete with a modern UI and tool integration capabilities. Features include interactive chat interface with AI, built-in tool system with human-in-the-loop confirmation, advanced task scheduling, dark/light theme support, real-time streaming responses, state management, and chat history. Prerequisites include a Cloudflare account and OpenAI API key. The project structure includes components for chat UI implementation, chat agent logic, tool definitions, and helper functions. Customization guide covers adding new tools, modifying the UI, and example use cases for customer support, development assistant, data analysis assistant, personal productivity assistant, and scheduling assistant.

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any production LLM use case. Some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.