llm-vscode

LLM powered development for VSCode

Stars: 1144

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

README:

llm-vscode is an extension for all things LLM. It uses llm-ls as its backend.

We also have extensions for:

Previously huggingface-vscode.

[!NOTE] When using the Inference API, you will probably encounter some limitations. Subscribe to the PRO plan to avoid getting rate limited in the free tier.

This plugin supports "ghost-text" code completion, à la Copilot.

Requests for code generation are made via an HTTP request.

You can use the Hugging Face Inference API or your own HTTP endpoint, provided it adheres to the APIs listed in backend.

The list of officially supported models is located in the config template section.

The prompt sent to the model will always be sized to fit within the context window, with the number of tokens determined using tokenizers.
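As a rough illustration of what sizing a prompt to a context window can involve, the sketch below grows the prompt outward from the cursor, one line at a time, until a budget is used up. It is not taken from llm-ls: `fitPrompt`, `Counter`, and `charCount` are hypothetical names, and the alternating strategy is an assumption. The default counter counts characters, mirroring the "no tokenization" option described later in this README.

```typescript
// Hypothetical sketch, not the llm-ls implementation.
// A Counter returns the "size" of a piece of text (tokens or characters).
type Counter = (text: string) => number;

// Fallback counter: one unit per character.
const charCount: Counter = (text) => text.length;

function fitPrompt(
  before: string[],      // lines before the cursor, in document order
  after: string[],       // lines after the cursor, in document order
  contextWindow: number, // total budget in Counter units
  count: Counter = charCount
): { prefix: string; suffix: string } {
  const prefix: string[] = [];
  const suffix: string[] = [];
  let budget = contextWindow;
  let b = before.length - 1; // walk upward from the cursor
  let a = 0;                 // walk downward from the cursor

  while (b >= 0 || a < after.length) {
    if (b >= 0) {
      const cost = count(before[b] + "\n");
      if (cost <= budget) {
        prefix.unshift(before[b]);
        budget -= cost;
        b--;
      } else {
        b = -1; // stop growing upward once a line no longer fits
      }
    }
    if (a < after.length) {
      const cost = count(after[a] + "\n");
      if (cost <= budget) {
        suffix.push(after[a]);
        budget -= cost;
        a++;
      } else {
        a = after.length; // stop growing downward once a line no longer fits
      }
    }
  }
  return { prefix: prefix.join("\n"), suffix: suffix.join("\n") };
}
```

Passing a real tokenizer's counting function as `count` would switch the budget from characters to tokens without changing the rest of the logic.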

Hit Cmd+shift+a to check if the generated code is in The Stack.

This is a rapid first-pass attribution check using stack.dataportraits.org.

We check for sequences of at least 50 characters that match a Bloom filter.

This means false positives are possible and long enough surrounding context is necessary (see the paper for details on n-gram striding and sequence length).

The dedicated Stack search tool is a full dataset index and can be used for a complete second pass.
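To make the false-positive behaviour concrete, here is a minimal Bloom filter sketch with a 50-character window check. It is an illustration only and not the stack.dataportraits.org implementation: the class, the hash function (an FNV-1a variant), the filter size, and the indexing stride are all assumptions chosen for brevity.

```typescript
// Hypothetical sketch of a Bloom-filter membership check over 50-character windows.
const NGRAM = 50;

class BloomFilter {
  private bits: Uint8Array;
  private size: number;
  private hashes: number;

  constructor(size: number, hashes: number) {
    this.size = size;
    this.hashes = hashes;
    this.bits = new Uint8Array(Math.ceil(size / 8));
  }

  // FNV-1a style hash; the seed perturbs the starting value.
  private hash(text: string, seed: number): number {
    let h = (2166136261 ^ seed) >>> 0;
    for (let i = 0; i < text.length; i++) {
      h ^= text.charCodeAt(i);
      h = Math.imul(h, 16777619) >>> 0;
    }
    return h % this.size;
  }

  add(text: string): void {
    for (let s = 0; s < this.hashes; s++) {
      const idx = this.hash(text, s);
      this.bits[idx >> 3] |= 1 << (idx & 7);
    }
  }

  // true means "possibly present" (false positives can happen);
  // false means "definitely absent".
  mightContain(text: string): boolean {
    for (let s = 0; s < this.hashes; s++) {
      const idx = this.hash(text, s);
      if ((this.bits[idx >> 3] & (1 << (idx & 7))) === 0) return false;
    }
    return true;
  }
}

// Index a corpus in fixed-width chunks taken every `stride` characters.
function indexCorpus(filter: BloomFilter, corpus: string, stride: number): void {
  for (let i = 0; i + NGRAM <= corpus.length; i += stride) {
    filter.add(corpus.slice(i, i + NGRAM));
  }
}

// Return every offset in `generated` whose 50-character window may be in the corpus.
function findMatches(filter: BloomFilter, generated: string): number[] {
  const offsets: number[] = [];
  for (let i = 0; i + NGRAM <= generated.length; i++) {
    if (filter.mightContain(generated.slice(i, i + NGRAM))) offsets.push(i);
  }
  return offsets;
}
```

Because only some windows of the corpus are indexed in this sketch, a generated snippet has to be long enough to cover at least one indexed window, which is one way to read the "long enough surrounding context" caveat above.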

Install like any other vscode extension.

By default, this extension uses bigcode/starcoder & Hugging Face Inference API for the inference.

You can supply your HF API token (hf.co/settings/token) with this command:

- `Cmd/Ctrl+Shift+P` to open VSCode command palette
- Type: `Llm: Login`

If you previously logged in with huggingface-cli login on your system the extension will read the token from disk.

You can check the full list of configuration settings by opening your settings page (cmd+,) and typing Llm.

You can configure the backend to which requests will be sent. llm-vscode supports the following backends:

- `huggingface`: The Hugging Face Inference API (default)
- `ollama`: Ollama
- `openai`: any OpenAI compatible API (e.g. llama-cpp-python)
- `tgi`: Text Generation Inference

Let's say your current code is this:

    import numpy as np
    import scipy as sp
    {YOUR_CURSOR_POSITION}
    def hello_world():
        print("Hello world")

The request body will then look like:

    const inputs = `{start token}import numpy as np\nimport scipy as sp\n{end token}def hello_world():\n    print("Hello world"){middle token}`
    const data = { inputs, ...configuration.requestBody };

    const model = configuration.modelId;
    // cf. URL construction
    const endpoint = build_url(configuration);

    const res = await fetch(endpoint, {
        body: JSON.stringify(data),
        headers,
        method: "POST"
    });

    const json = await res.json() as { generated_text: string };

Note that the example above is a simplified version to explain what is happening under the hood.
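The `inputs` string in the example is assembled from the text before the cursor, the text after it, and three marker tokens. A minimal sketch of that assembly follows. `FimTokens` and `buildFimPrompt` are hypothetical names, and the StarCoder-style token strings in the usage line are an assumption; the real values depend on the model you configure.

```typescript
// Hypothetical helper: mirrors the {start token}...{end token}...{middle token}
// layout shown in the request example.
interface FimTokens {
  start: string;  // placed before the text that precedes the cursor
  end: string;    // placed before the text that follows the cursor
  middle: string; // placed last; the model generates the missing middle after it
}

function buildFimPrompt(prefix: string, suffix: string, tokens: FimTokens): string {
  return `${tokens.start}${prefix}${tokens.end}${suffix}${tokens.middle}`;
}

// Usage with assumed StarCoder-style markers.
const starcoderTokens: FimTokens = {
  start: "<fim_prefix>",
  end: "<fim_suffix>",
  middle: "<fim_middle>",
};

const prompt = buildFimPrompt(
  "import numpy as np\nimport scipy as sp\n",
  "def hello_world():\n    print(\"Hello world\")",
  starcoderTokens
);
```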

The endpoint URL that is queried to fetch suggestions is built in the following way:

- depending on the backend, it will try to append the correct path to the base URL located in the configuration (e.g. `{url}/v1/completions` for the `openai` backend)
- if no URL is set for the `huggingface` backend, it will automatically use the default URL
  - it will error for other backends as there is no sensible default URL
- if you do set the correct path at the end of the URL it will not add it a second time as it checks if it is already present
- there is an option to disable this behavior: `llm.disableUrlPathCompletion`
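The rules above can be sketched as a small function. This is an illustration under stated assumptions, not the extension's code: `buildUrl` and `UrlConfig` are hypothetical names, only the `openai` path (`/v1/completions`) comes from this README, and the `ollama` and `tgi` paths and the Hugging Face base URL are assumed values.

```typescript
type Backend = "huggingface" | "ollama" | "openai" | "tgi";

interface UrlConfig {
  backend: Backend;
  url: string | null;
  modelId: string;
  disableUrlPathCompletion: boolean;
}

// Only the openai path is stated in the README; the others are assumptions.
const ASSUMED_PATHS: Record<Backend, string | null> = {
  huggingface: null,
  ollama: "/api/generate",
  openai: "/v1/completions",
  tgi: "/generate",
};

// Assumed default base URL for the huggingface backend.
const ASSUMED_HF_BASE = "https://api-inference.huggingface.co/models";

function buildUrl(config: UrlConfig): string {
  // No URL configured: only the huggingface backend has a default.
  if (config.url === null || config.url === "") {
    if (config.backend === "huggingface") {
      return `${ASSUMED_HF_BASE}/${config.modelId}`;
    }
    throw new Error(`no default URL for backend "${config.backend}"`);
  }

  const path = ASSUMED_PATHS[config.backend];
  // Path completion disabled, or nothing to append: use the URL as given.
  if (config.disableUrlPathCompletion || path === null) {
    return config.url;
  }

  // Append the path only if it is not already present at the end.
  const base = config.url.replace(/\/+$/, "");
  return base.endsWith(path) ? base : base + path;
}
```

For example, with the `openai` backend both `http://localhost:8000` and `http://localhost:8000/v1/completions` resolve to the same endpoint, while setting `disableUrlPathCompletion` leaves the configured URL untouched.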

You can tune the way the suggestions behave:

- `llm.enableAutoSuggest` lets you choose to enable or disable "suggest-as-you-type" suggestions.
- `llm.documentFilter` lets you enable suggestions only on specific files that match the pattern matching syntax you will provide. The object must be of type `DocumentFilter | DocumentFilter[]`:
  - to match on all types of buffers: `llm.documentFilter: { pattern: "**" }`
  - to match on all files in `my_project/`: `llm.documentFilter: { pattern: "/path/to/my_project/**" }`
  - to match on all python and rust files: `llm.documentFilter: { pattern: "**/*.{py,rs}" }`

llm-vscode sets two keybindings:

- you can trigger suggestions with `Cmd+shift+l` by default, which corresponds to the `editor.action.inlineSuggest.trigger` command
- code attribution is set to `Cmd+shift+a` by default, which corresponds to the `llm.attribution` command

By default, llm-ls is bundled with the extension. When developing locally or if you built your own binary because your platform is not supported, you can set the llm.lsp.binaryPath setting to the path of the binary.

llm-ls uses tokenizers to make sure the prompt fits the context_window.

To configure it, you have a few options:

- No tokenization, llm-ls will count the number of characters instead:

      {
        "llm.tokenizer": null
      }

- from a local file on your disk:

      {
        "llm.tokenizer": {
          "path": "/path/to/my/tokenizer.json"
        }
      }

- from a Hugging Face repository, llm-ls will attempt to download `tokenizer.json` at the root of the repository:

      {
        "llm.tokenizer": {
          "repository": "myusername/myrepo",
          "api_token": null
        }
      }

  Note: when `api_token` is set to null, it will use the token you set with the `Llm: Login` command. If you want to use a different token, you can set it here.

- from an HTTP endpoint, llm-ls will attempt to download a file via an HTTP GET request:

      {
        "llm.tokenizer": {
          "url": "https://my-endpoint.example.com/mytokenizer.json",
          "to": "/download/path/of/mytokenizer.json"
        }
      }

To test Code Llama 13B model:

- Make sure you have the latest version of this extension.
- Make sure you have supplied HF API token
- Open Vscode Settings (`cmd+,`) & type: `Llm: Config Template`
- From the dropdown menu, choose `hf/codellama/CodeLlama-13b-hf`

Read more here about Code Llama.

To test Phind/Phind-CodeLlama-34B-v2 and/or WizardLM/WizardCoder-Python-34B-V1.0:

- Make sure you have the latest version of this extension.
- Make sure you have supplied HF API token
- Open Vscode Settings (`cmd+,`) & type: `Llm: Config Template`
- From the dropdown menu, choose `hf/Phind/Phind-CodeLlama-34B-v2` or `hf/WizardLM/WizardCoder-Python-34B-V1.0`

Read more about Phind-CodeLlama-34B-v2 here and WizardCoder-15B-V1.0 here.

1. Clone `llm-ls`: `git clone https://github.com/huggingface/llm-ls`
2. Build `llm-ls`: `cd llm-ls && cargo build` (you can also use `cargo build --release` for a release build)
3. Clone this repo: `git clone https://github.com/huggingface/llm-vscode`
4. Install deps: `cd llm-vscode && npm ci`
5. In vscode, open the `Run and Debug` side bar & click `Launch Extension`
6. In the new vscode window, set the `llm.lsp.binaryPath` setting to the path of the `llm-ls` binary you built in step 2 (e.g. `/path/to/llm-ls/target/debug/llm-ls`)
7. Close the window and restart the extension with `F5` or as in step 5

| Repository | Description |
|---|---|
| huggingface-vscode-endpoint-server | Custom code generation endpoint for this repository |
| llm-vscode-inference-server | An endpoint server for efficiently serving quantized open-source LLMs for code. |


Alternative AI tools for llm-vscode

Similar Open Source Tools

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

fish-ai

fish-ai is a tool that adds AI functionality to Fish shell. It can be integrated with various AI providers like OpenAI, Azure OpenAI, Google, Hugging Face, Mistral, or a self-hosted LLM. Users can transform comments into commands, autocomplete commands, and suggest fixes. The tool allows customization through configuration files and supports switching between contexts. Data privacy is maintained by redacting sensitive information before submission to the AI models. Development features include debug logging, testing, and creating releases.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

shell-pilot

Shell-pilot is a simple, lightweight shell script designed to interact with various AI models such as OpenAI, Ollama, Mistral AI, LocalAI, ZhipuAI, Anthropic, Moonshot, and Novita AI from the terminal. It enhances intelligent system management without any dependencies, offering features like setting up a local LLM repository, using official models and APIs, viewing history and session persistence, passing input prompts with pipe/redirector, listing available models, setting request parameters, generating and running commands in the terminal, easy configuration setup, system package version checking, and managing system aliases.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

bilingual_book_maker

The bilingual_book_maker is an AI translation tool that uses ChatGPT to assist users in creating multi-language versions of epub/txt/srt files and books. It supports various models like gpt-4, gpt-3.5-turbo, claude-2, palm, llama-2, azure-openai, command-nightly, and gemini. Users need ChatGPT or OpenAI token, epub/txt books, internet access, and Python 3.8+. The tool provides options to specify OpenAI API key, model selection, target language, proxy server, context addition, translation style, and more. It generates bilingual books in epub format after translation. Users can test translations, set batch size, tweak prompts, and use different models like DeepL, Google Gemini, Tencent TranSmart, and more. The tool also supports retranslation, translating specific tags, and e-reader type specification. Docker usage is available for easy setup.

aidermacs

Aidermacs is an AI pair programming tool for Emacs that integrates Aider, a powerful open-source AI pair programming tool. It provides top performance on the SWE Bench, support for multi-file edits, real-time file synchronization, and broad language support. Aidermacs delivers an Emacs-centric experience with features like intelligent model selection, flexible terminal backend support, smarter syntax highlighting, enhanced file management, and streamlined transient menus. It thrives on community involvement, encouraging contributions, issue reporting, idea sharing, and documentation improvement.

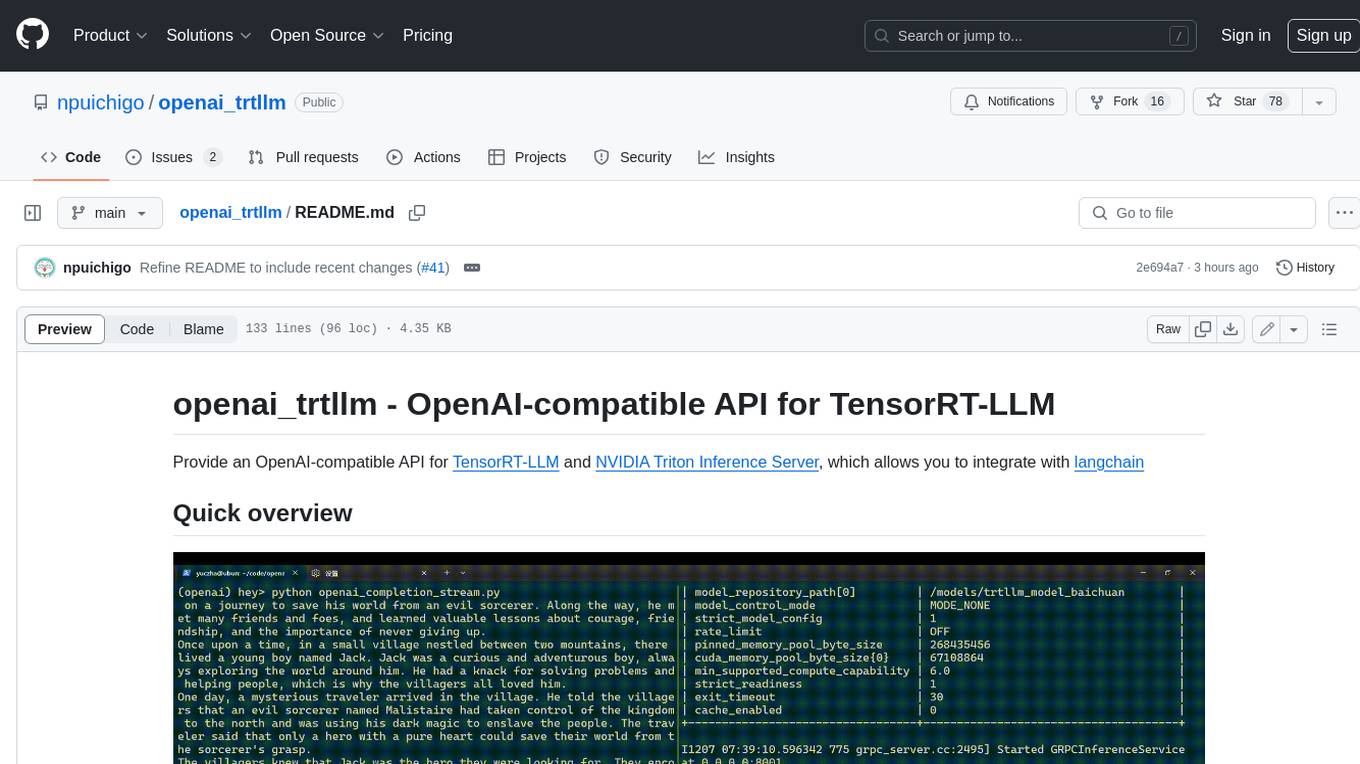

openai_trtllm

OpenAI-compatible API for TensorRT-LLM and NVIDIA Triton Inference Server, which allows you to integrate with langchain

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

For similar tasks

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

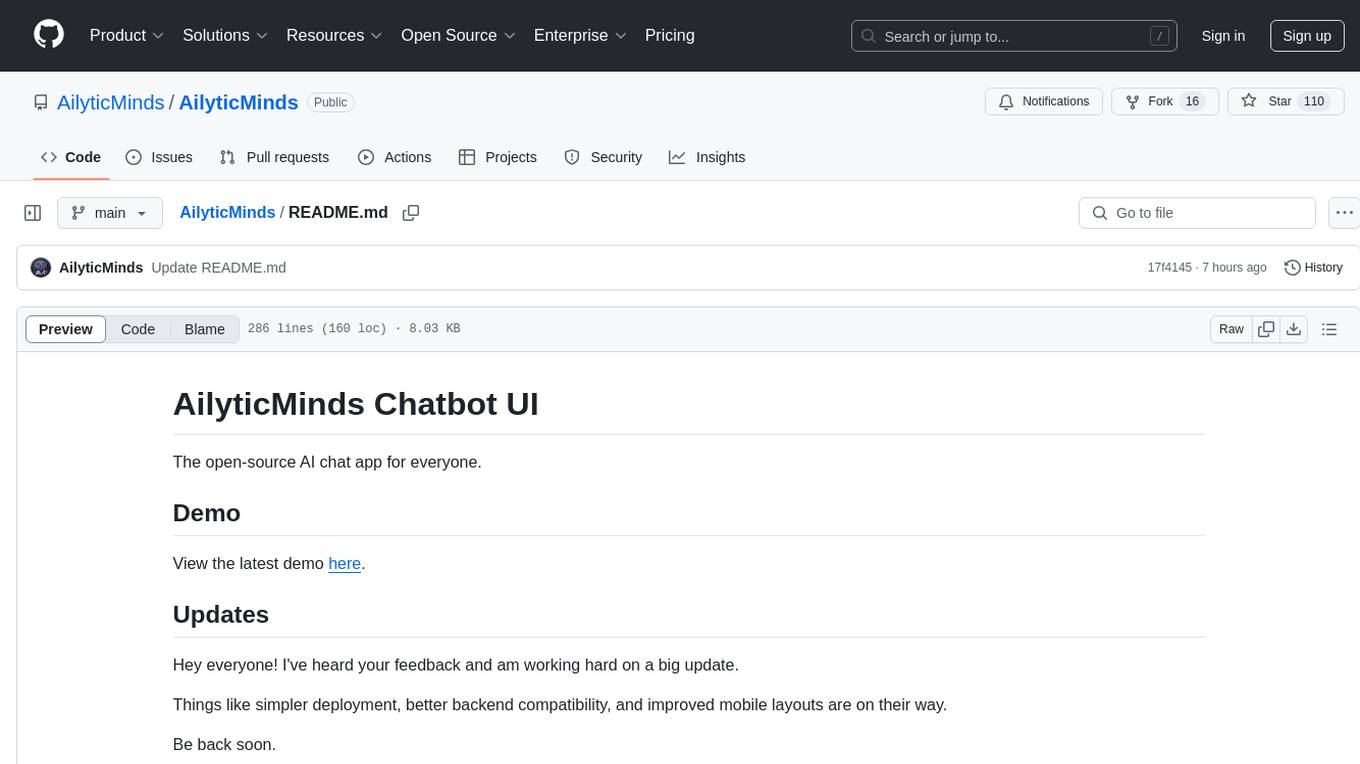

AilyticMinds

AilyticMinds Chatbot UI is an open-source AI chat app designed for easy deployment and improved backend compatibility. It provides a user-friendly interface for creating and hosting chatbots, with features like mobile layout optimization and support for various providers. The tool utilizes Supabase for data storage and management, offering a secure and scalable solution for chatbot development. Users can quickly set up their own instances locally or in the cloud, with detailed instructions provided for installation and configuration.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.