AI-Video-Boilerplate-Simple

Simple AI Templates on Live Video

Stars: 57

AI-video-boilerplate-simple is a free Live AI Video boilerplate for testing out live video AI experiments. It includes a simple Flask server that serves files, supports live video from various sources, and integrates with Roboflow for AI vision. Users can use this template for projects, research, business ideas, and homework. It is lightweight and can be deployed on popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

README:

This is a completely free Live AI Video boilerplate (Simple) for you to play with.

Hosted on Heroku for a live demo here: https:///">simpleai.darefail.com</

AI Video Boilerplate Pro (scalable, dockerized, complete apps): https://github.com/DareFail/AI-Video-Boilerplate-Pro/

AI Video Boilerplate for Chrome Extensions: https://github.com/DareFail/AI-Video-Boilerplate-Chrome/

-

Backend: Simple Flask server, just serves files.

-

Live Video: From your webcam, desktop, browser tab, or a local .mp4 or .mov file

-

AI Vision: Integrated with Roboflow (sponsored project)

This is a template for testing out live video AI experiments. It is best used for projects, research, business ideas, and even homework.

It is an extremely lightweight flask server that can be uploaded to popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

-

Get a free API key from Roboflow to use their vision models.

-

Create a .env file in the main directory

ROBOFLOW_API_KEY=YOUR_ROBOFLOW_KEY_HERE

# For the whiteboard, you need this key too

OPENAI_API_KEY=YOUR_OPENAI_API_KEY_HERE

- Clone the repo

git clone https://github.com/DareFail/AI-Video-Boilerplate-Simple.git

cd AI-Video-Boilerplate

- Install poetry

# via homebrew (mac)

brew install poetry

# PC

(Invoke-WebRequest -Uri https://install.python-poetry.org -UseBasicParsing).Content | Invoke-Expression

- Enter Poetry Shell (needed to install dependencies and run server)

poetry shell

- Install dependencies

poetry install

- Start the server

poetry run python main.py

Then go to localhost:8000 You can change the port it runs on in main.py

AI-Video-Boilerplate comes with a growing list of AI templates. They will always be linked on the homepage but you can also view their code in each top folder in the main directory like "Gaze" and "Template."

There is a static folder in the main directory but it is only used by the homepage folder. This is due to a quirk in flask.

To add your own app, the easiest way is to modify one of the existing ones.

If you want to make a brand new one to add to the repo, follow these steps: (Replace all {{APP_NAME_HERE}} with your new app name)

- Copy the XXXXX Template folder to the main directory and rename it

- In main.py, import your new folder name

"from {{APP_NAME_HERE}} import {{APP_NAME_HERE}}"

app.register_blueprint({{APP_NAME_HERE}}, url_prefix='/{{UNIQUE_URL_HERE}}')

- In {{APP_NAME_HERE}}/__init__.py:

from flask import Blueprint

{{APP_NAME_HERE}} = Blueprint('{{APP_NAME_HERE}}', __name__, template_folder='XXXXX', static_folder='static')

from . import views

- In {{APP_NAME_HERE}}/views.py:

from flask import render_template

import os

from . import {{APP_NAME_HERE}}

@{{APP_NAME_HERE}}.route('/')

def index():

return render_template(

'{{APP_NAME_HERE}}/index.html',

ROBOFLOW_API_KEY=os.environ.get("ROBOFLOW_API_KEY")

)

- In {{APP_NAME_HERE}}/templates/{{APP_NAME_HERE}}/index.html:

# Swap out

# <link rel="stylesheet" href="{{ url_for('XXXXX.static', filename='styles.css') }}" />

# with:

<link rel="stylesheet" href="{{ url_for('{{APP_NAME_HERE}}.static', filename='styles.css') }}" />

and

# Swap out

#<script src="{{ url_for('XXXXX.static', filename='script.js') }}"></script>

# with:

#<script src="{{ url_for('{{APP_NAME_HERE}}.static', filename='script.js') }}"></script>

- Replit: Can be used as is, just keep the .replit file

- Digital Ocean

- Vercel

- Heroku: Enter the following commands and keep the Procfile

heroku buildpacks:clear

heroku buildpacks:add https://github.com/moneymeets/python-poetry-buildpack.git

heroku buildpacks:add heroku/python

heroku config:set PYTHON_RUNTIME_VERSION=3.10.0

- Thanks to Roboflow for sponsoring this project. Get your free API key at: Roboflow

Distributed under the APACHE 2.0 License. See LICENSE for more information.

Twitter: @darefailed

Youtube: How to Video coming soon

Project Link: https://github.com/DareFail/AI-Video-Boilerplate-Simple

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-Video-Boilerplate-Simple

Similar Open Source Tools

AI-Video-Boilerplate-Simple

AI-video-boilerplate-simple is a free Live AI Video boilerplate for testing out live video AI experiments. It includes a simple Flask server that serves files, supports live video from various sources, and integrates with Roboflow for AI vision. Users can use this template for projects, research, business ideas, and homework. It is lightweight and can be deployed on popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

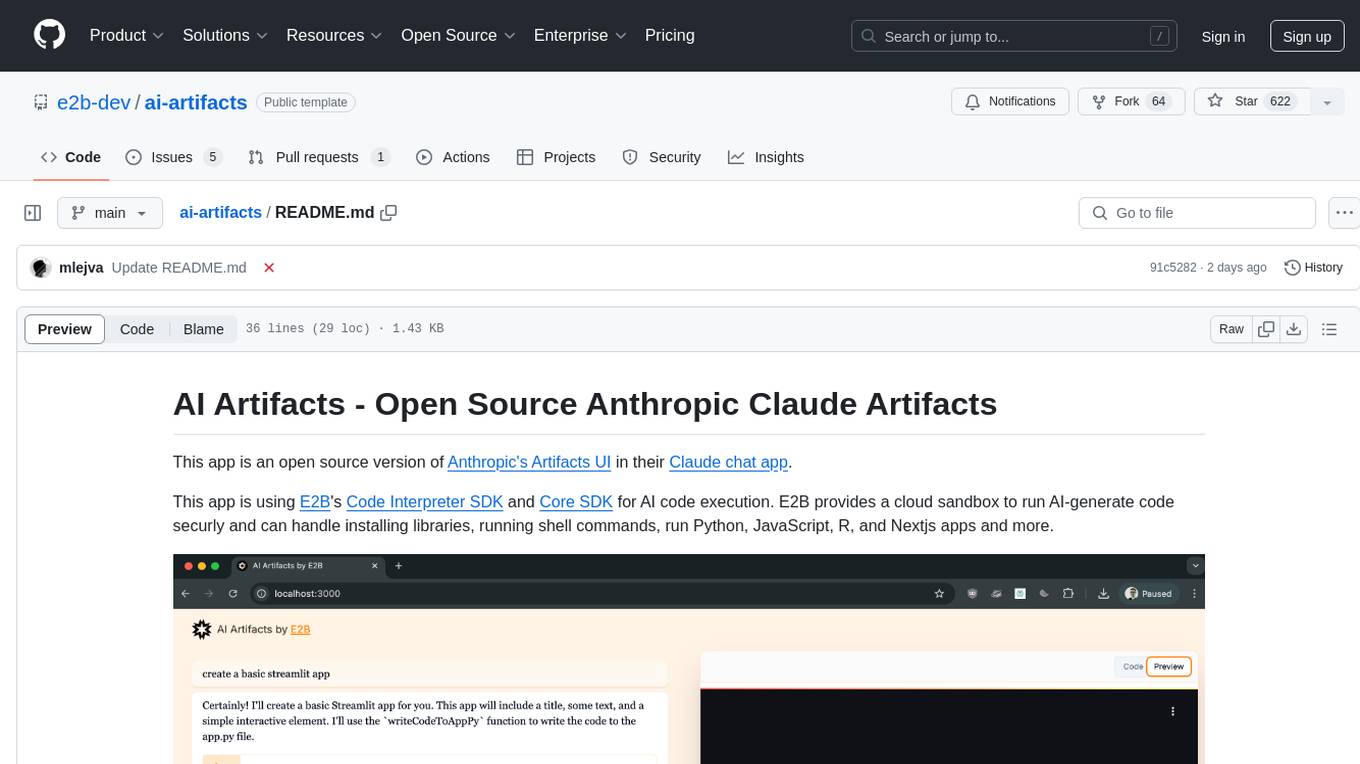

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It utilizes E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, R, and Nextjs apps. The tool also supports running AI-generated Python in Jupyter notebook, Next.js apps, and Streamlit apps. Additionally, it offers integration with Vercel AI SDK for tool calling and streaming responses from the model.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

rclip

rclip is a command-line photo search tool powered by the OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

hydraai

Generate React components on-the-fly at runtime using AI. Register your components, and let Hydra choose when to show them in your App. Hydra development is still early, and patterns for different types of components and apps are still being developed. Join the discord to chat with the developers. Expects to be used in a NextJS project. Components that have function props do not work.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

fish-ai

fish-ai is a tool that adds AI functionality to Fish shell. It can be integrated with various AI providers like OpenAI, Azure OpenAI, Google, Hugging Face, Mistral, or a self-hosted LLM. Users can transform comments into commands, autocomplete commands, and suggest fixes. The tool allows customization through configuration files and supports switching between contexts. Data privacy is maintained by redacting sensitive information before submission to the AI models. Development features include debug logging, testing, and creating releases.

hey

Hey is a free CLI-based AI assistant powered by LLMs, allowing users to connect Hey to different LLM services. It provides commands for quick usage, customization options, and integration with code editors. Hey was created for a hackathon and is licensed under the MIT License.

raycast_api_proxy

The Raycast AI Proxy is a tool that acts as a proxy for the Raycast AI application, allowing users to utilize the application without subscribing. It intercepts and forwards Raycast requests to various AI APIs, then reformats the responses for Raycast. The tool supports multiple AI providers and allows for custom model configurations. Users can generate self-signed certificates, add them to the system keychain, and modify DNS settings to redirect requests to the proxy. The tool is designed to work with providers like OpenAI, Azure OpenAI, Google, and more, enabling tasks such as AI chat completions, translations, and image generation.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

For similar tasks

langflow

Langflow is an open-source Python-powered visual framework designed for building multi-agent and RAG applications. It is fully customizable, language model agnostic, and vector store agnostic. Users can easily create flows by dragging components onto the canvas, connect them, and export the flow as a JSON file. Langflow also provides a command-line interface (CLI) for easy management and configuration, allowing users to customize the behavior of Langflow for development or specialized deployment scenarios. The tool can be deployed on various platforms such as Google Cloud Platform, Railway, and Render. Contributors are welcome to enhance the project on GitHub by following the contributing guidelines.

AI-Video-Boilerplate-Simple

AI-video-boilerplate-simple is a free Live AI Video boilerplate for testing out live video AI experiments. It includes a simple Flask server that serves files, supports live video from various sources, and integrates with Roboflow for AI vision. Users can use this template for projects, research, business ideas, and homework. It is lightweight and can be deployed on popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

aspire-ai-chat-demo

Aspire AI Chat is a full-stack chat sample that combines modern technologies to deliver a ChatGPT-like experience. The backend API is built with ASP.NET Core and interacts with an LLM using Microsoft.Extensions.AI. It uses Entity Framework Core with CosmosDB for flexible, cloud-based NoSQL storage. The AI capabilities include using Ollama for local inference and switching to Azure OpenAI in production. The frontend UI is built with React, offering a modern and interactive chat experience.

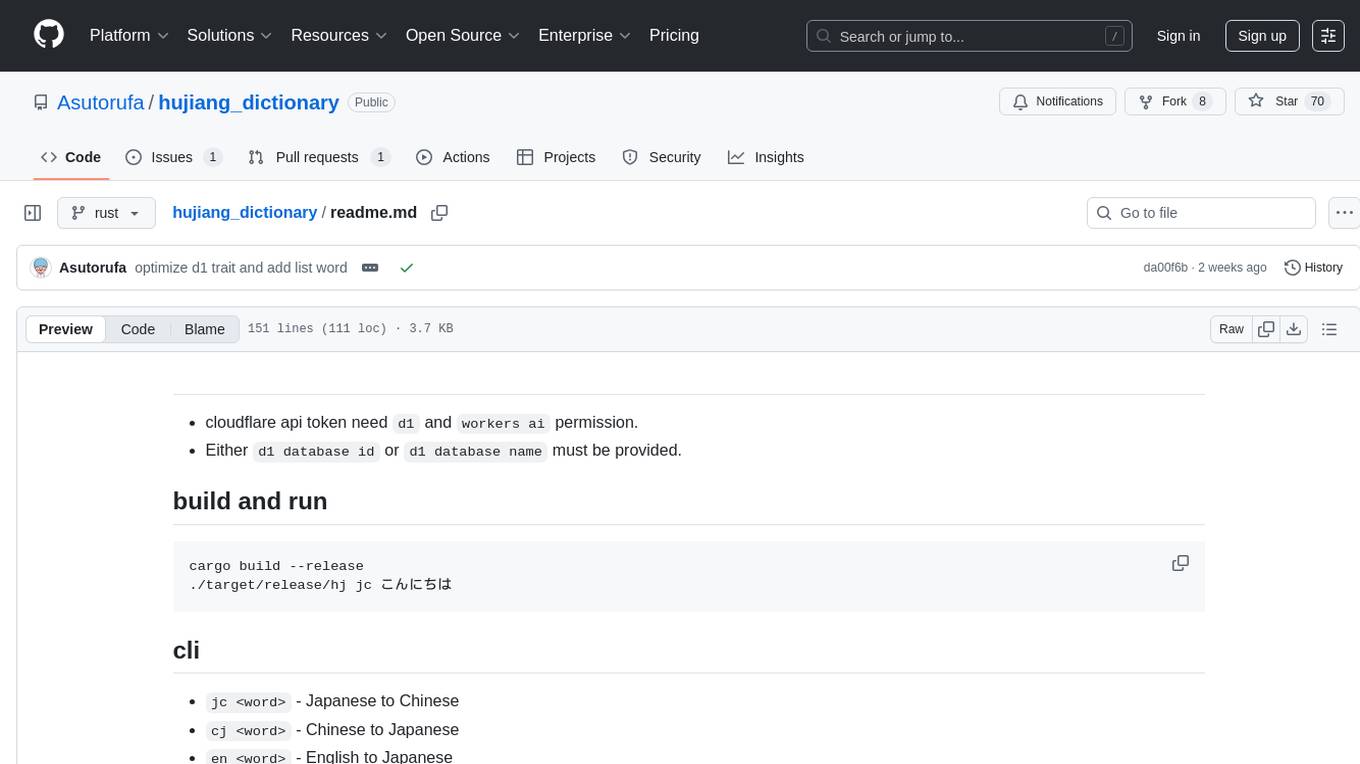

hujiang_dictionary

Hujiang Dictionary is a tool that provides translation services between Japanese, Chinese, and English. It supports various translation modes such as Japanese to Chinese, Chinese to Japanese, English to Japanese, and more. The tool utilizes cloud services like Telegram, Lambda, and Cloudflare Workers for different deployment options. Users can interact with the tool via a command-line interface (CLI) to perform translations and access online resources like weblio and Google Translate. Additionally, the tool offers a Telegram bot for users to access translation services conveniently. The tool also supports setting up and managing databases for storing translation data.

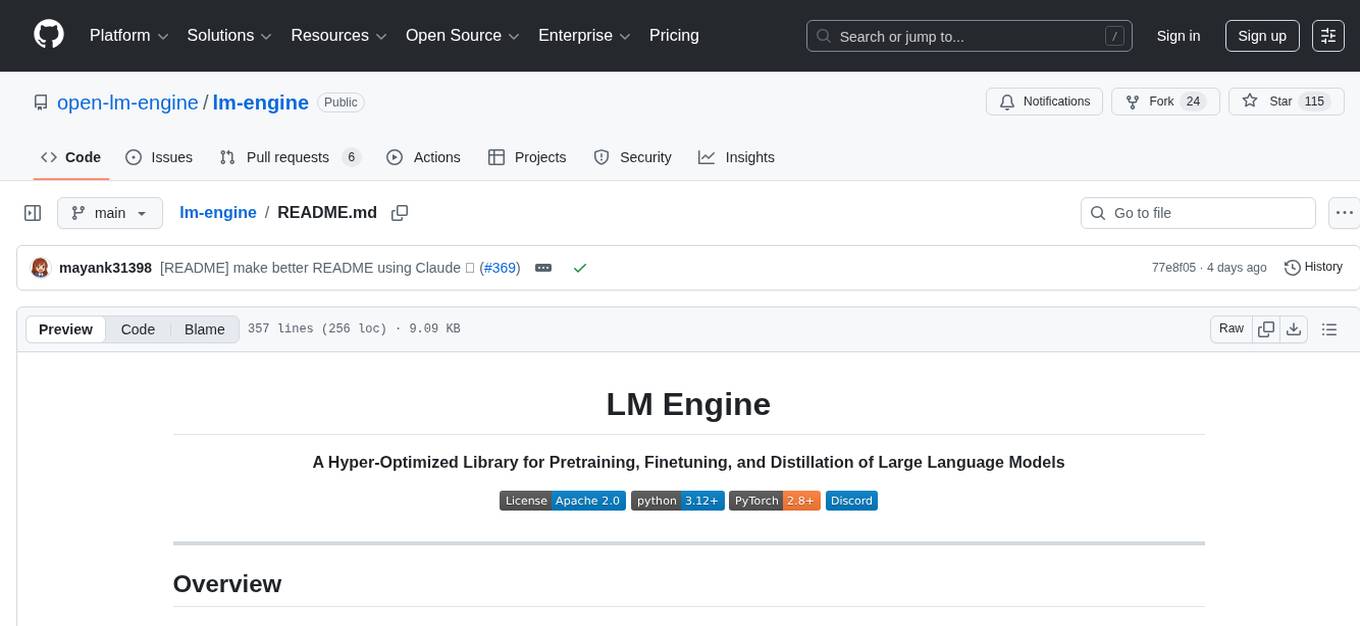

lm-engine

LM Engine is a research-grade, production-ready library for training large language models at scale. It provides support for multiple accelerators including NVIDIA GPUs, Google TPUs, and AWS Trainiums. Key features include multi-accelerator support, advanced distributed training, flexible model architectures, HuggingFace integration, training modes like pretraining and finetuning, custom kernels for high performance, experiment tracking, and efficient checkpointing.

OpenHands

OpenHands is a community focused on AI-driven development, offering a Software Agent SDK, CLI, Local GUI, Cloud deployment, and Enterprise solutions. The SDK is a Python library for defining and running agents, the CLI provides an easy way to start using OpenHands, the Local GUI allows running agents on a laptop with REST API, the Cloud deployment offers hosted infrastructure with integrations, and the Enterprise solution enables self-hosting via Kubernetes with extended support and access to the research team. OpenHands is available under the MIT license.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.