opencharacter

Open Source Alternative to Character.AI - Create your own characters uncensored no filters

Stars: 104

OpenCharacter is an open-source tool that allows users to create and run characters locally with local models or use the hosted version. The stack includes Next.js for frontend, TailwindCSS for styling, Drizzle ORM for database access, NextAuth for authentication, Cloudflare D1 for serverless databases, Cloudflare Pages for hosting, and ShadcnUI as the component library. Users can integrate OpenCharacter with OpenRouter by configuring the OpenRouter API key. The tool is fully scalable, composable, and cost-effective, with powerful tools like Wrangler for database management and migrations. No environment variables are needed, making it easy to use and deploy.

README:

Create and run any characters locally with local models or use the hosted version.

- Next.js for frontend

- TailwindCSS for styling

- Drizzle ORM for database access

- NextAuth for authentication

- Cloudflare D1 for serverless databases

- Cloudflare Pages for hosting

- ShadcnUI as the component library

-

Make sure that you have Wrangler installed. And also that you have logged in with

wrangler login(You'll need a Cloudflare account) -

Clone the repository and install dependencies:

git clone https://github.com/Dhravya/cloudflare-saas-stack cd cloudflare-saas-stack npm i -g bun bun install bun run setup -

Run the development server:

bun run dev

Open http://localhost:3000 with your browser to see the result.

Besides the dev script, c3 has added extra scripts for Cloudflare Pages integration:

-

pages:build: Build the application for Pages using@cloudflare/next-on-pagesCLI -

preview: Locally preview your Pages application using Wrangler CLI -

deploy: Deploy your Pages application using Wrangler CLI

Note: While the

devscript is optimal for local development, you should preview your Pages application periodically to ensure it works properly in the Pages environment.

Cloudflare Bindings allow you to interact with Cloudflare Platform resources. You can use bindings during development, local preview, and in the deployed application.

For detailed instructions on setting up bindings, refer to the Cloudflare documentation.

To apply database migrations:

- For development:

bun run migrate:dev - For production:

bun run migrate:prd

Don't forget to add the CORS policy to the R2 bucket. The CORS policy should look like this:

[

{

"AllowedOrigins": [

"http://localhost:3000",

"https://your-domain.com"

],

"AllowedMethods": [

"GET",

"PUT"

],

"AllowedHeaders": [

"Content-Type"

],

"ExposeHeaders": [

"ETag"

]

}

]To integrate OpenCharacter with OpenRouter, you must configure your OpenRouter API key. To do this, add the following environment variable:

OPENROUTER_API_KEY="your_openrouter_api_key_here"This can be done in your .env file for local development or in your Cloudflare Pages environment variables for production.

With this setup, you can also enable object upload capabilities.

If you prefer manual setup:

- Create a Cloudflare account and install Wrangler CLI.

- Create a D1 database:

bunx wrangler d1 create ${dbName} - Create a

.dev.varsfile in the project root with your Google OAuth credentials and NextAuth secret. - Run local migration:

bunx wrangler d1 execute ${dbName} --local --file=migrations/0000_setup.sql - Run remote migration:

bunx wrangler d1 execute ${dbName} --remote --file=migrations/0000_setup.sql - Start development server:

bun run dev - Deploy:

bun run deploy

- Fully scalable and composable

- No environment variables needed (use

env.DB,env.KV,env.Queue,env.AI, etc.) - Powerful tools like Wrangler for database management and migrations

- Cost-effective scaling (e.g., $5/month for multiple high-traffic projects)

Just change your Cloudflare account ID in the project settings, and you're good to go!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for opencharacter

Similar Open Source Tools

opencharacter

OpenCharacter is an open-source tool that allows users to create and run characters locally with local models or use the hosted version. The stack includes Next.js for frontend, TailwindCSS for styling, Drizzle ORM for database access, NextAuth for authentication, Cloudflare D1 for serverless databases, Cloudflare Pages for hosting, and ShadcnUI as the component library. Users can integrate OpenCharacter with OpenRouter by configuring the OpenRouter API key. The tool is fully scalable, composable, and cost-effective, with powerful tools like Wrangler for database management and migrations. No environment variables are needed, making it easy to use and deploy.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

ChatOpsLLM

ChatOpsLLM is a project designed to empower chatbots with effortless DevOps capabilities. It provides an intuitive interface and streamlined workflows for managing and scaling language models. The project incorporates robust MLOps practices, including CI/CD pipelines with Jenkins and Ansible, monitoring with Prometheus and Grafana, and centralized logging with the ELK stack. Developers can find detailed documentation and instructions on the project's website.

middleware

Middleware is an open-source engineering management tool that helps engineering leaders measure and analyze team effectiveness using DORA metrics. It integrates with CI/CD tools, automates DORA metric collection and analysis, visualizes key performance indicators, provides customizable reports and dashboards, and integrates with project management platforms. Users can set up Middleware using Docker or manually, generate encryption keys, set up backend and web servers, and access the application to view DORA metrics. The tool calculates DORA metrics using GitHub data, including Deployment Frequency, Lead Time for Changes, Mean Time to Restore, and Change Failure Rate. Middleware aims to provide DORA metrics to users based on their Git data, simplifying the process of tracking software delivery performance and operational efficiency.

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It supports running on Kubernetes for production-ready deployment, with support for various Kubernetes distributions and external components like Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and PIP, with the option to test local code changes using mini-langstream.

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It provides features such as a Moderation Dashboard to view and manage all moderation activity, User Lifecycle to automatically suspend users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setup requirements.

mcp-server

The UI5 Model Context Protocol server offers tools to improve the developer experience when working with agentic AI tools. It helps with creating new UI5 projects, detecting and fixing UI5-specific errors, and providing additional UI5-specific information for agentic AI tools. The server supports various tools such as scaffolding new UI5 applications, fetching UI5 API documentation, providing UI5 development best practices, extracting metadata and configuration from UI5 projects, retrieving version information for the UI5 framework, analyzing and reporting issues in UI5 code, offering guidelines for converting UI5 applications to TypeScript, providing UI Integration Cards development best practices, scaffolding new UI Integration Cards, and validating the manifest against the UI5 Manifest schema. The server requires Node.js and npm versions specified, along with an MCP client like VS Code or Cline. Configuration options are available for customizing the server's behavior, and specific setup instructions are provided for MCP clients like VS Code and Cline.

todoist-ai

Library for connecting AI agents to Todoist, enabling them to access and modify a Todoist account on the user's behalf. Tools can be used through an MCP server or integrated into other projects for AI conversational interfaces. Reusable tools allow for complete workflows, balancing flexibility and efficiency for LLMs. Early-stage project with more tools planned. Designed to provide a small set of tools for various AI interfaces.

sandbox

Sandbox is an open-source cloud-based code editing environment with custom AI code autocompletion and real-time collaboration. It consists of a frontend built with Next.js, TailwindCSS, Shadcn UI, Clerk, Monaco, and Liveblocks, and a backend with Express, Socket.io, Cloudflare Workers, D1 database, R2 storage, Workers AI, and Drizzle ORM. The backend includes microservices for database, storage, and AI functionalities. Users can run the project locally by setting up environment variables and deploying the containers. Contributions are welcome following the commit convention and structure provided in the repository.

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It features a Moderation Dashboard to view and manage all moderation activities, User Lifecycle for automatically suspending users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules based on unique business needs. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setups.

director

Director is a context infrastructure tool for AI agents that simplifies managing MCP servers, prompts, and configurations by packaging them into portable workspaces accessible through a single endpoint. It allows users to define context workspaces once and share them across different AI clients, enabling seamless collaboration, instant context switching, and secure isolation of untrusted servers without cloud dependencies or API keys. Director offers features like workspaces, universal portability, local-first architecture, sandboxing, smart filtering, unified OAuth, observability, multiple interfaces, and compatibility with all MCP clients and servers.

For similar tasks

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

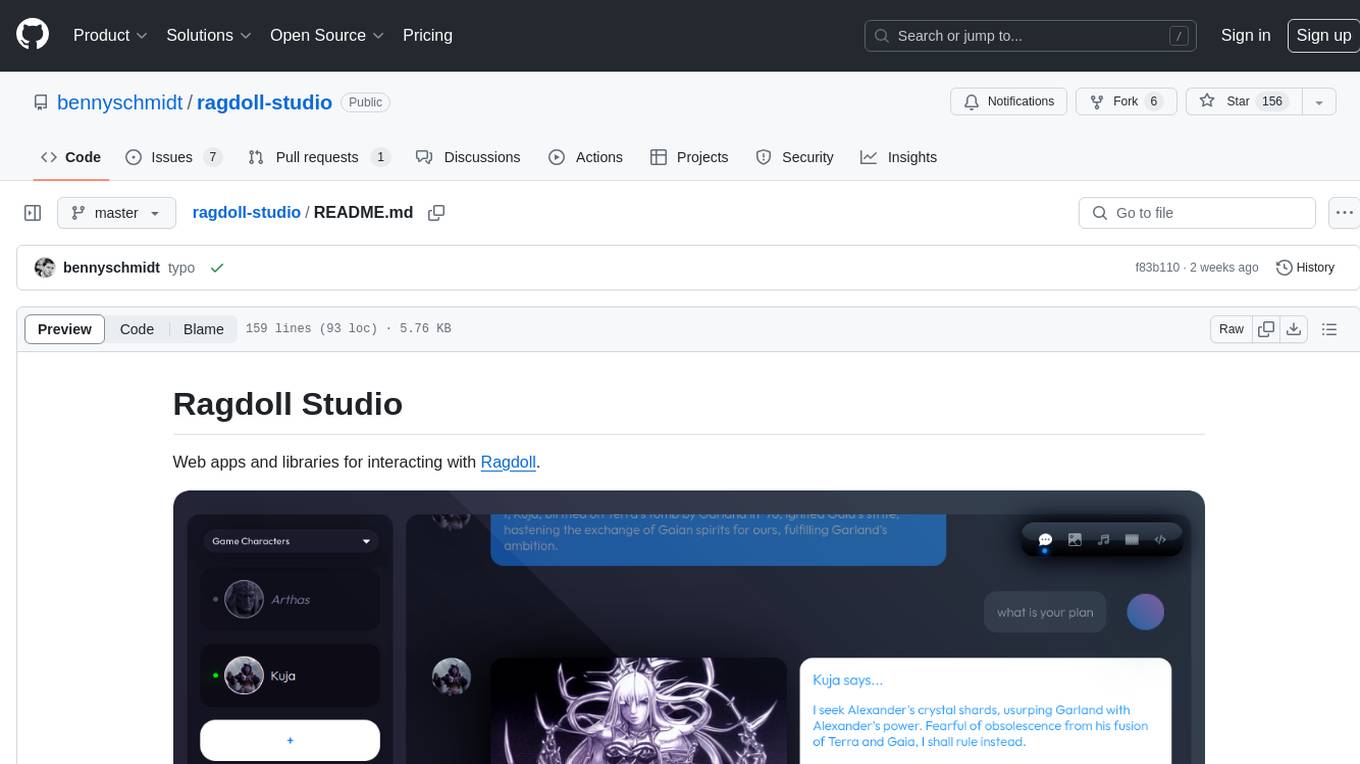

ragdoll-studio

Ragdoll Studio is a platform offering web apps and libraries for interacting with Ragdoll, enabling users to go beyond fine-tuning and create flawless creative deliverables, rich multimedia, and engaging experiences. It provides various modes such as Story Mode for creating and chatting with characters, Vector Mode for producing vector art, Raster Mode for producing raster art, Video Mode for producing videos, Audio Mode for producing audio, and 3D Mode for producing 3D objects. Users can export their content in various formats and share their creations on the community site. The platform consists of a Ragdoll API and a front-end React application for seamless usage.

LLMUnity

LLM for Unity enables seamless integration of Large Language Models (LLMs) within the Unity engine, allowing users to create intelligent characters for immersive player interactions. The tool supports major LLM models, runs locally without internet access, offers fast inference on CPU and GPU, and is easy to set up with a single line of code. It is free for both personal and commercial use, tested on Unity 2021 LTS, 2022 LTS, and 2023. Users can build multiple AI characters efficiently, use remote servers for processing, and customize model settings for text generation.

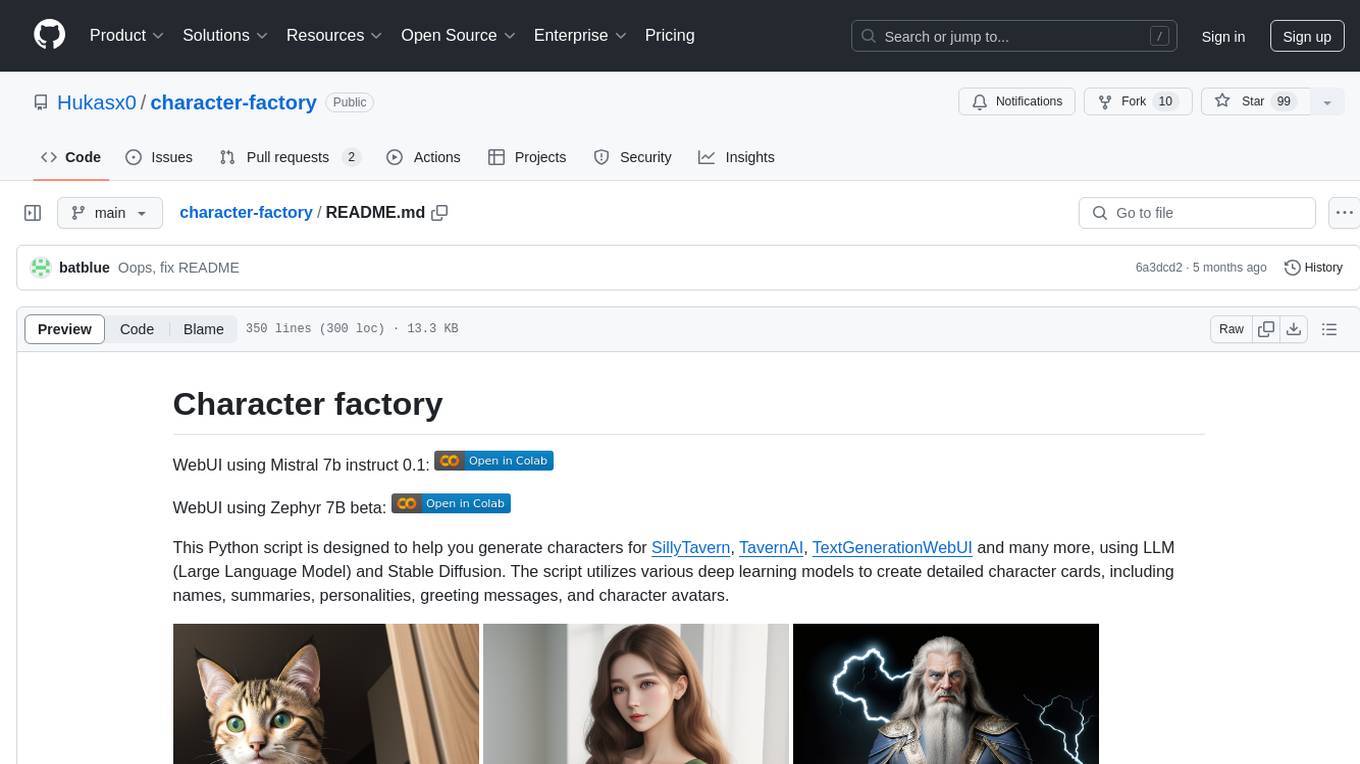

character-factory

Character Factory is a Python script designed to generate detailed character cards for SillyTavern, TavernAI, TextGenerationWebUI, and more using Large Language Model (LLM) and Stable Diffusion. It streamlines character generation by leveraging deep learning models to create names, summaries, personalities, greeting messages, and avatars for characters. The tool provides an easy way to create unique and imaginative characters for storytelling, chatting, and other purposes.

ai-anime-art-generator

AI Anime Art Generator is an AI-driven cutting-edge tool for anime arts creation. Perfect for beginners to easily create stunning anime art without any prior experience. It allows users to create detailed character designs, custom avatars for social media, and explore new artistic styles and ideas. Built on Next.js, TailwindCSS, Google Analytics, Vercel, Replicate, CloudFlare R2, and Clerk.

TavernAI

TavernAI is an atmospheric frontend tool for chat and storywriting, compatible with various backends. It offers features like character creation, online character database, group chat, story mode, world info, message swiping, configurable settings, interface themes, backgrounds, message editing, GPT-4.5, and Claude picture recognition. The tool supports backends like Kobold series, Oobabooga's Text Generation Web UI, OpenAI, NovelAI, and Claude. Users can easily install TavernAI on different operating systems and start using it for interactive storytelling and chat experiences.

Character-Engine-Discord

Character Engine is a Discord bot that aggregates various online platforms to create AI-driven characters using Discord Webhooks and LLM chatbots. It allows users to bring life and joy to their server by spawning characters, exploring embedded characters, and configuring settings on a per-server, per-channel, and per-character basis.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.