AirGo

AirGo is a multi-user, multi-protocol proxy service management system with a separated frontend and backend. It is simple to use and supports vless, vmess, shadowsocks, and hysteria2.

Stars: 378


README:

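Supported protocols: Vless, Vmess, Shadowsocks, Hysteria2

- AirGo: a multi-user, multi-protocol proxy service management system with a separated frontend and backend, simple and easy to use

- Panel feature showcase

- Contents:

- 1 Deployment: frontend and backend combined

- 2 Deployment: frontend and backend separated

- 3 Configure SSL (optional)

- 4 Configuration file reference

- 5 Connecting nodes

- 6 Updating the panel

- 7 Command line

- 8 Further notes

Telegram channel: https://t.me/Air_Go Telegram group: https://t.me/AirGo_Group Documentation last updated: 2024.4.8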


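- Install the core. On a Linux system such as Ubuntu, Debian, or CentOS, run the following command and follow the prompts:

    bash <(curl -Ls https://raw.githubusercontent.com/ppoonk/AirGo/main/server/scripts/install.sh)

- Edit the configuration file, located at /usr/local/AirGo/config.yaml. On first install, AirGo initializes its data from config.yaml automatically, so be sure to change the administrator account and password first.

- Start the core: systemctl start AirGo

- Open http://ip:port in a browser, where the port is the value set in the configuration file.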

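- Create a configuration file in a suitable directory, for example $PWD/air/config.yaml, with the content below. On first install, AirGo initializes its data from config.yaml automatically, so be sure to change the administrator account and password first.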

    system:
      admin-email: [email protected]
      admin-password: adminadmin
      http-port: 80
      https-port: 443
      db-type: sqlite
    mysql:
      address: mysql.sql.com
      port: 3306
      config: charset=utf8mb4&parseTime=True&loc=Local
      db-name: imdemo
      username: imdemo
      password: xxxxxx
      max-idle-conns: 10
      max-open-conns: 100
    sqlite:
      path: ./air.db

- Start the container with a docker command such as:

    docker run -tid \
      -v $PWD/air/config.yaml:/air/config.yaml \
      -p 80:80 \
      -p 443:443 \
      --name airgo \
      --restart always \
      --privileged=true \
      ppoiuty/airgo:latest

- A docker compose reference:

    version: '3'
    services:
      airgo:
        container_name: airgo
        image: ppoiuty/airgo:latest
        ports:
          - "80:80"
          - "443:443"
        restart: "always"
        privileged: true
        volumes:
          - ./config.yaml:/air/config.yaml

- Open http://ip:port in a browser, where the port is the value set in the configuration file.
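Because the first start initializes the database from config.yaml, it can help to check that the sample admin password has been replaced before launching. The following is a minimal sketch, not part of AirGo: the function names are hypothetical, and the line-based parsing assumes the usual indented YAML layout with `admin-password` nested under `system:`.

```python
# Sample password taken from the config.yaml shown in this README.
# The parsing below is an illustrative assumption, not how AirGo reads the file.
DEFAULT_PASSWORD = "adminadmin"


def read_system_section(config_text: str) -> dict:
    """Collect the `key: value` pairs nested under the top-level `system:` key."""
    values = {}
    in_system = False
    for raw_line in config_text.splitlines():
        if not raw_line.strip():
            continue
        if not raw_line.startswith((" ", "\t")):
            # A non-indented line starts a new top-level section.
            in_system = raw_line.strip() == "system:"
            continue
        if in_system and ":" in raw_line:
            key, _, value = raw_line.strip().partition(":")
            values[key.strip()] = value.strip()
    return values


def uses_default_password(config_text: str) -> bool:
    """Return True when admin-password is still the sample value."""
    return read_system_section(config_text).get("admin-password") == DEFAULT_PASSWORD


sample = """\
system:
  admin-password: adminadmin
  http-port: 80
sqlite:
  path: ./air.db
"""
print(uses_default_password(sample))  # True
print(uses_default_password(sample.replace("adminadmin", "a-long-unique-secret")))  # False
```

The sketch ignores YAML comments and quoting, so treat it as a quick sanity check rather than a real parser.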

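    bash <(curl -Ls https://raw.githubusercontent.com/ppoonk/AirGo/main/server/scripts/install.sh)

- Edit the configuration file, located at /usr/local/AirGo/config.yaml. On first install, AirGo initializes its data from config.yaml automatically, so be sure to change the administrator account and password first.

- Start the core: systemctl start AirGo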

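- Prepare the configuration file config.yaml in advance, using the sample config.yaml as a reference. On first install, AirGo initializes its data from config.yaml automatically, so be sure to change the administrator account and password first.

- Start the container with a docker command such as:

    docker run -tid \
      -v $PWD/air/config.yaml:/air/config.yaml \
      -p 80:80 \
      -p 443:443 \
      --name airgo \
      --restart always \
      --privileged=true \
      ppoiuty/airgo:latest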

- A docker compose reference:

    version: '3'
    services:
      airgo:
        container_name: airgo
        image: ppoiuty/airgo:latest
        ports:
          - "80:80"
          - "443:443"
        restart: "always"
        privileged: true
        volumes:
          - ./config.yaml:/air/config.yaml

- fork本项目,修改

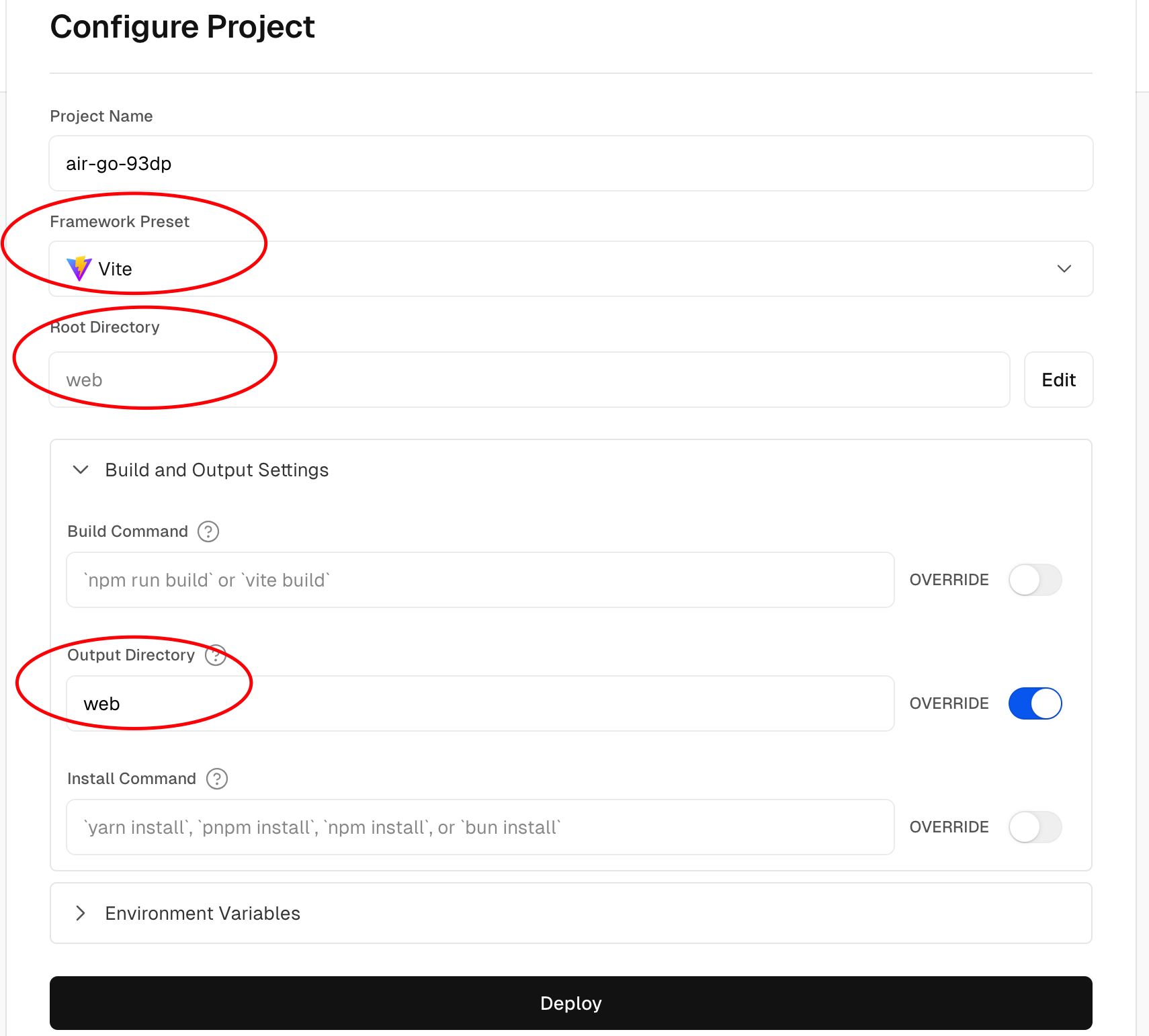

./web/index.html的window.httpurl字段为自己的后端地址,,可以设置多个,以英文符号|分割。由于vercel的限制,请填https接口地址 - 登录Vercel,Add New Project,参考下图配置,注意红圈内的设置!

- 部署成功后,自定义域名即可(域名解析到76.76.21.21)
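The window.httpurl value described above is a single string of backend addresses joined with `|`. As a rough illustration, the sketch below builds such a string and rejects non-https addresses, which matches the Vercel note. The function name and the example.com hosts are hypothetical and do not come from the AirGo code base.

```python
def build_httpurl(backends: list[str], require_https: bool = True) -> str:
    """Join backend addresses into one `|`-separated window.httpurl value."""
    cleaned = [address.strip() for address in backends if address.strip()]
    if not cleaned:
        raise ValueError("at least one backend address is required")
    for address in cleaned:
        if "|" in address:
            # `|` is the separator, so it cannot appear inside an address.
            raise ValueError(f"address must not contain '|': {address}")
        if require_https and not address.startswith("https://"):
            raise ValueError(f"an https address is required: {address}")
    return "|".join(cleaned)


# Hypothetical backend hosts, used only for illustration.
print(build_httpurl(["https://api1.example.com", "https://api2.example.com"]))
# https://api1.example.com|https://api2.example.com
```

Pass `require_https=False` for a self-hosted static site where plain http backends are acceptable.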

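- Download the prebuilt static assets, AirGo-web.zip, from the releases.

- Set the window.httpurl field in ./web/index.html to your own backend address. Multiple addresses can be set, separated by the ASCII character |.

- In the project's web/ directory, run npm i && npm run build

- The built static assets are in the web folder. Upload that folder to a suitable location on the server, create a new (purely static) website, and point the site root at the web folder.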

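With a panel such as BT Panel (bt.cn) or 1Panel (1panel.cn), you can request or import a certificate directly.

- 1. Using BT Panel (bt.cn) or 1Panel (1panel.cn), request or import a certificate first, then enable the reverse proxy.

- 2. If you already have a certificate, copy it into the installation directory (/usr/local/AirGo/), rename the files to air.cer and air.key, then restart AirGo.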
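For the second option, a quick check that both files sit in the installation directory under the expected names can save a failed restart. This is a small sketch with a hypothetical helper name. It checks only that the files exist, not that the certificate is valid.

```python
import tempfile
from pathlib import Path

# File names and default directory as given in this README.
EXPECTED_FILES = ("air.cer", "air.key")


def missing_cert_files(install_dir: str = "/usr/local/AirGo") -> list[str]:
    """Return the expected certificate file names that are absent from install_dir."""
    base = Path(install_dir)
    return [name for name in EXPECTED_FILES if not (base / name).is_file()]


# Demo in a temporary directory so the example runs anywhere.
with tempfile.TemporaryDirectory() as demo_dir:
    Path(demo_dir, "air.cer").write_text("placeholder")
    print(missing_cert_files(demo_dir))  # ['air.key']
```

An empty list means both files are in place. Calling `missing_cert_files()` with no argument checks the default installation directory.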

    system:
      mode: release # Run mode, release by default. With dev (development mode) the console prints more information
      admin-email: [email protected] # Administrator account. Change it before initialization!
      admin-password: adminadmin # Administrator password. Change it before initialization!
      http-port: 8899 # Port the core listens on (HTTP)
      https-port: 443 # Port the core listens on (HTTPS)
      db-type: sqlite # Database type, one of: mysql, mariadb, sqlite
    mysql:
      address: xxx.com # MySQL server address
      port: 3306 # MySQL server port
      db-name: xxx # MySQL database name
      username: xxx # MySQL user name
      password: xxx # MySQL password
      config: charset=utf8mb4&parseTime=True&loc=Local # Keep the default
      max-idle-conns: 10
      max-open-conns: 100
    sqlite:
      path: ./air.db # SQLite database file name
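The `config` value under `mysql` has the shape of the parameter string in a Go MySQL driver DSN (`parseTime` and `loc` are go-sql-driver/mysql parameters). Assuming AirGo combines the fields in that standard DSN layout, which this README does not confirm, the sketch below shows how they would fit together. The helper name is hypothetical and the values are the sample ones from the earlier config.

```python
def build_mysql_dsn(mysql: dict) -> str:
    """Assemble a go-sql-driver style DSN: user:pass@tcp(host:port)/db?params."""
    return (
        f"{mysql['username']}:{mysql['password']}"
        f"@tcp({mysql['address']}:{mysql['port']})"
        f"/{mysql['db-name']}?{mysql['config']}"
    )


# Sample values from the config.yaml in this README.
mysql_section = {
    "address": "mysql.sql.com",
    "port": 3306,
    "db-name": "imdemo",
    "username": "imdemo",
    "password": "xxxxxx",
    "config": "charset=utf8mb4&parseTime=True&loc=Local",
}
print(build_mysql_dsn(mysql_section))
# imdemo:xxxxxx@tcp(mysql.sql.com:3306)/imdemo?charset=utf8mb4&parseTime=True&loc=Local
```

Seen this way, "keep the default" for `config` means leaving the charset and time-parsing parameters untouched while changing only the address and credentials.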

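V2bX and XrayR are currently supported. The official builds are not supported yet, so use the versions below:

    bash <(curl -Ls https://raw.githubusercontent.com/ppoonk/V2bX/main/scripts/install.sh)

- After installation, edit the configuration file at /etc/V2bX/config.json as needed.

- Start: use the management script AV, or run systemctl start AV directly.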

- Prepare the configuration file config.json in advance, using the sample config.json as a reference.

- Start the container with a docker command such as:

    docker run -tid \
      -v $PWD/av/config.json:/etc/V2bX/config.json \
      --name av \
      --restart always \
      --net=host \
      --privileged=true \
      ppoiuty/av:latest

- A docker compose reference:

    version: '3'
    services:
      AV:
        container_name: AV
        image: ppoiuty/av:latest
        network_mode: "host"
        restart: "always"
        privileged: true
        volumes:
          - ./config.json:/etc/V2bX/config.json

    bash <(curl -Ls https://raw.githubusercontent.com/ppoonk/XrayR-for-AirGo/main/scripts/manage.sh)

- After installation, edit the configuration file at /usr/local/XrayR/config.yml as needed.

- Start: use the management script XrayR, or run systemctl start XrayR directly.

- Prepare the configuration file config.yml in advance, using the sample config.yml as a reference.

- Start the container with a docker command such as:

    docker run -tid \
      -v $PWD/xrayr/config.yml:/etc/XrayR/config.yml \
      --name xrayr \
      --restart always \
      --net=host \
      --privileged=true \
      ppoiuty/xrayr:latest

- A docker compose reference:

    version: '3'
    services:
      xrayr:
        container_name: xrayr
        image: ppoiuty/xrayr:latest
        network_mode: "host"
        restart: "always"
        privileged: true
        volumes:
          - ./config.yml:/etc/XrayR/config.yml

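When updating, check both the frontend version and the backend core version. They are shown in different places, and the two version numbers should match.

- Method 1: download the new binary, replace the old one, then run ./AirGo update to complete the update.

- Method 2: in versions after v0.2.5, go to Panel > Administrator > System and click the upgrade button to complete the update.

- Note: after the core is updated, the menus bound to each role and the casbin permissions (API permissions) are reset to the defaults of the current core.

To update the frontend, redeploy it following section 2-1.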
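The version check described above is a comparison of two version tags. A minimal sketch follows, assuming tags of the form `v0.2.5` with purely numeric parts. The function names are hypothetical and not part of AirGo.

```python
def parse_version(tag: str) -> tuple[int, ...]:
    """Turn a tag such as 'v0.2.5' into a comparable tuple like (0, 2, 5)."""
    return tuple(int(part) for part in tag.strip().lstrip("vV").split("."))


def versions_match(frontend: str, core: str) -> bool:
    """True when the frontend and the backend core report the same version."""
    return parse_version(frontend) == parse_version(core)


def is_later_than(version: str, baseline: str) -> bool:
    """True when version is strictly newer than baseline."""
    return parse_version(version) > parse_version(baseline)


print(versions_match("v0.2.6", "0.2.6"))    # True
print(is_later_than("v0.2.10", "v0.2.5"))   # True
print(is_later_than("v0.2.5", "v0.2.5"))    # False
```

Comparing tuples rather than raw strings avoids the trap where "v0.2.10" sorts before "v0.2.5" alphabetically.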

./AirGo help  Show help

./AirGo reset --resetAdmin  Reset the admin password

./AirGo start  Start AirGo. To specify the configuration file path: ./AirGo start --config path2/config.yaml

./AirGo update  Update the AirGo-related data in the database

./AirGo version  Show the current AirGo version
