Awesome-ChatTTS

官方推荐的 ChatTTS 资源汇总项目,整理了全网相关资源和常见问题 || Officially recommended ChatTTS resource collection project

Stars: 594

Awesome-ChatTTS is an official recommended guide for ChatTTS beginners, compiling common questions and related resources. It provides a comprehensive overview of the project, including official introduction, quick experience options, popular branches, parameter explanations, voice seed details, installation guides, FAQs, and error troubleshooting. The repository also includes video tutorials, discussion community links, and project trends analysis. Users can explore various branches for different functionalities and enhancements related to ChatTTS.

README:

English | 简体中文

Awesome-ChatTTS 是官方推荐的 ChatTTS 资源汇总项目,欢迎在 issues 中推荐或者自荐。

如果觉得本项目对你了解和使用 ChatTTS 有帮助,还请打赏个 ⭐️ 支持一下。

[!NOTE] 以下项目均为社区资源,查看官方信息请到源仓库 2noise/ChatTTS 。

https://github.com/libukai/Awesome-ChatTTS/assets/5654585/532bfb80-316a-4244-9b92-301c732e8b63

| 网址 | 类型 |

|---|---|

| Original Web | 原版网页版体验 |

| Forge Web | Forge 增强版体验 |

| Linux | Python 安装包 |

| Samples | 音色种子示例 |

| Cloning | 音色克隆体验 |

| 项目 | Star | 亮点 |

|---|---|---|

| jianchang512/ChatTTS-ui |  |

提供 API 接口,可在第三方应用中调用 |

| 6drf21e/ChatTTS_colab |  |

提供流式输出,支持长音频生成和分角色阅读 |

| lenML/ChatTTS-Forge |  |

提供人声增强和背景降噪,可使用附加提示词 |

| CCmahua/ChatTTS-Enhanced |  |

支持文件批量处理,以及导出 SRT 文件 |

| HKoon/ChatTTS-OpenVoice |  |

配合 OpenVoice 进行声音克隆 |

| 项目 | Star | 亮点 |

|---|---|---|

| 6drf21e/ChatTTS_Speaker |  |

音色角色打标与稳定性评估 |

| AIFSH/ComfyUI-ChatTTS |  |

ComfyUi 版本,可作为工作流节点引入 |

| MaterialShadow/ChatTTS-manager |  |

提供了音色管理系统和 WebUI 界面 |

- 1. Input Text : 需要转换的文本,支持中文和英文混杂

- 2. Refine text : 是否对文本进行口语化处理

- 3. Text Seed : 配置文本种子值,不同种子对应不同口语化风格

- 4. 🎲 : 随机产生文本种子值

- 5. Output Text : 口语化处理后生成的文本

- 6. Timbre : 预设的音色种子值

- 7. Audio Seed : 配置音色种子值,不同种子对应不同音色

- 8. 🎲 : 随机产生音色种子值

- 9. Speaker Embedding : 音色码,详见 音色控制

- 10. temperate : 控制音频情感波动性,范围为 0-1,数字越大,波动性越大

- 11. top_P :控制音频的情感相关性,范围为 0.1-0.9,数字越大,相关性越高

- 12. top_K :控制音频的情感相似性,范围为 1-20,数字越小,相似性越高

- 13. DVAE Coefficient : 模型系数码

- 14. Reload : 重新加载模型系数

- 15. Auto Play : 是否在生成音频后自动播放

- 16. Stream Mode : 是否启用流式输出

- 17. Generate : 点击生成音频文件

- 18. Output Audio : 音频生成结果

- 19. ↓ : 点击下载音频文件

-

20.

▶️ : 点击播放音频文件

- 21. Example : 点击切换示例配置

经过实际测试,指定音色种子值每次生成 spk_emb 和重复使用预生成好的 spk_emb 效果有较显著差异,建议优先使用 .pt 音色文件或者音色码(字符串表示形式)。

在 ChatTTS_Speaker 项目中对音色种子进行了初步打标和稳定性评估,可以通过示例来快速选择合适的音色。

在官方 WebUI 中使用时,可直接将音色码复制之后,替换 9. Speaker Embedding 中的值,实现音色控制。

在 Python 脚本中使用时,参考 issue#07 中的压缩方案实现音色控制。

spk = torch.load("asset/seed_1332_restored_emb.pt", map_location=torch.device('cpu')).detach()

spk_emb_str = compress_and_encode(spk)

params_infer_code = ChatTTS.Chat.InferCodeParams(

spk_emb= spk_emb_str, # add sampled speaker

temperature=.0003, # using custom temperature

top_P=0.7, # top P decode

top_K=20, # top K decode

)| 视频 | 亮点 |

|---|---|

| 同济子豪兄 | 从入门到进阶的详细部署教程 |

| ZTFS | Mac M1 部署教程 |

| 王-寳寳 | Windows 部署教程 |

| 视频 | 亮点 |

|---|---|

| Sam Witteveen | 英文版介绍 |

经过近期的迭代,源仓库代码中的问题已经基本解决。如果遇到问题,建议先详细查看 官方说明文档中文版 ,如果还有问题可以继续查看本文档。

原版项目运行需要从 HuggingFace 下载对应的模型,如果不能顺畅科学上网,那么就无法完成这一步。作为替代方案,可以从 modelscope 上下载模型和配置,并配置本地路径。

[!Important] 魔塔上的模型库是由志愿者维护的,不保证所有模型都是最新的,如果有需要请自行验证。

- 在终端中安装 modelscope 依赖

pip install modelscope- 修改 webui.py 中的代码

# 在开头导入依赖,并下载模型和配置

from modelscope import snapshot_download

model_dir = snapshot_download('zlj2546/ChatTTS')

# 第 118 行修改模型路径

ret = chat.load_models('custom', custom_path=model_dir)在 IDE 中运行时,由于文件相对路径的问题,导致脚本无法顺利运行。

建议参照官方说明文档 快速启动 中的指令直接在终端中运行。

确保在执行以下命令时,处于项目根目录下。

python examples/web/webui.py生成的音频将保存至

./output_audio_n.mp3

python examples/cmd/run.py "Your text 1." "Your text 2."出现这个问题是因为官方代码处理中文标点符号时覆盖不全,例如 ?、… 等符号没有被处理,导致模型生成时出错。

可以手动删除类似的中文标点符号,或者修改 ChatTTS/utils/infer_utils.py 中的代码,在 103 行的 character_map 的字典中添加缺失的标点符号。

character_map = {

'…': '',

'—': ',',

'_': ',',

'?': ',',

}GPU 至少需要 4G 显存,否则将强制使用 CPU,相关问题可以参考 ChatTTS-ui 项目中的说明

1、load_models() got an unexpected keyword argument 'source'

详见 常见问题 - 模型无法下载

2、cannot import name 'CommitOperationAdd' from 'huggingface_hub'

详见 常见问题 - 模型无法下载

3、 FileNotFoundError:[Erzno 2] No such file or directory: 'C:\\Users\\xxx\\.cache\\huggingface\\hub\\models--2Noise--ChatTTS\\snapshots\

详见 常见问题 - 模型无法下载

4、local variable 'Normalizer' referenced before assignment

需要根据 安装指南 完成环境配置后,再安装 pynini 和 WeTextProcessing 依赖

conda install -c conda-forge pynini=2.1.5 && pip install WeTextProcessing5、download to Local path D:\pythonlproject\ChatTTS\ChatTTS failed.

在 IDE 中直接执行脚本,会因为文件路径问题报错,详见 常见问题 - IDE 中无法运行

6、ModuleNotFoundError : No module named'Cython'

未找到 Python 执行路径,Windows 设备需要按 教程 配置环境路径

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-ChatTTS

Similar Open Source Tools

Awesome-ChatTTS

Awesome-ChatTTS is an official recommended guide for ChatTTS beginners, compiling common questions and related resources. It provides a comprehensive overview of the project, including official introduction, quick experience options, popular branches, parameter explanations, voice seed details, installation guides, FAQs, and error troubleshooting. The repository also includes video tutorials, discussion community links, and project trends analysis. Users can explore various branches for different functionalities and enhancements related to ChatTTS.

XianyuAutoAgent

Xianyu AutoAgent is an AI customer service robot system specifically designed for the Xianyu platform, providing 24/7 automated customer service, supporting multi-expert collaborative decision-making, intelligent bargaining, and context-aware conversations. The system includes intelligent conversation engine with features like context awareness and expert routing, business function matrix with modules like core engine, bargaining system, technical support, and operation monitoring. It requires Python 3.8+ and NodeJS 18+ for installation and operation. Users can customize prompts for different experts and contribute to the project through issues or pull requests.

LLM-TPU

LLM-TPU project aims to deploy various open-source generative AI models on the BM1684X chip, with a focus on LLM. Models are converted to bmodel using TPU-MLIR compiler and deployed to PCIe or SoC environments using C++ code. The project has deployed various open-source models such as Baichuan2-7B, ChatGLM3-6B, CodeFuse-7B, DeepSeek-6.7B, Falcon-40B, Phi-3-mini-4k, Qwen-7B, Qwen-14B, Qwen-72B, Qwen1.5-0.5B, Qwen1.5-1.8B, Llama2-7B, Llama2-13B, LWM-Text-Chat, Mistral-7B-Instruct, Stable Diffusion, Stable Diffusion XL, WizardCoder-15B, Yi-6B-chat, Yi-34B-chat. Detailed model deployment information can be found in the 'models' subdirectory of the project. For demonstrations, users can follow the 'Quick Start' section. For inquiries about the chip, users can contact SOPHGO via the official website.

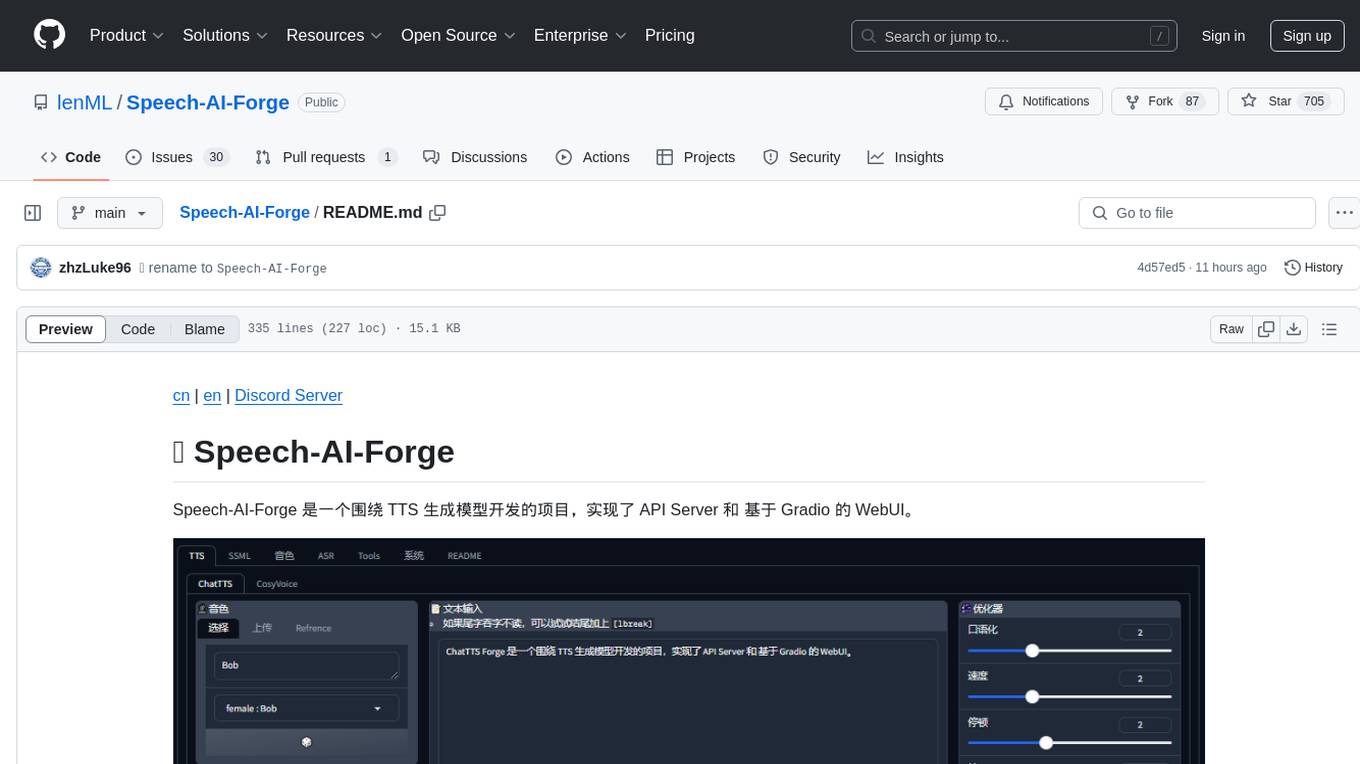

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

vscode-antigravity-cockpit

VS Code extension for monitoring Google Antigravity AI model quotas. It provides a webview dashboard, QuickPick mode, quota grouping, automatic grouping, renaming, card view, drag-and-drop sorting, status bar monitoring, threshold notifications, and privacy mode. Users can monitor quota status, remaining percentage, countdown, reset time, progress bar, and model capabilities. The extension supports local and authorized quota monitoring, multiple account authorization, and model wake-up scheduling. It also offers settings customization, user profile display, notifications, and group functionalities. Users can install the extension from the Open VSX Marketplace or via VSIX file. The source code can be built using Node.js and npm. The project is open-source under the MIT license.

prisma-ai

Prisma-AI is an open-source tool designed to assist users in their job search process by addressing common challenges such as lack of project highlights, mismatched resumes, difficulty in learning, and lack of answers in interview experiences. The tool utilizes AI to analyze user experiences, generate actionable project highlights, customize resumes for specific job positions, provide study materials for efficient learning, and offer structured interview answers. It also features a user-friendly interface for easy deployment and supports continuous improvement through user feedback and collaboration.

HivisionIDPhotos

HivisionIDPhoto is a practical algorithm for intelligent ID photo creation. It utilizes a comprehensive model workflow to recognize, cut out, and generate ID photos for various user photo scenarios. The tool offers lightweight cutting, standard ID photo generation based on different size specifications, six-inch layout photo generation, beauty enhancement (waiting), and intelligent outfit swapping (waiting). It aims to solve emergency ID photo creation issues.

k8m

k8m is an AI-driven Mini Kubernetes AI Dashboard lightweight console tool designed to simplify cluster management. It is built on AMIS and uses 'kom' as the Kubernetes API client. k8m has built-in Qwen2.5-Coder-7B model interaction capabilities and supports integration with your own private large models. Its key features include miniaturized design for easy deployment, user-friendly interface for intuitive operation, efficient performance with backend in Golang and frontend based on Baidu AMIS, pod file management for browsing, editing, uploading, downloading, and deleting files, pod runtime management for real-time log viewing, log downloading, and executing shell commands within pods, CRD management for automatic discovery and management of CRD resources, and intelligent translation and diagnosis based on ChatGPT for YAML property translation, Describe information interpretation, AI log diagnosis, and command recommendations, providing intelligent support for managing k8s. It is cross-platform compatible with Linux, macOS, and Windows, supporting multiple architectures like x86 and ARM for seamless operation. k8m's design philosophy is 'AI-driven, lightweight and efficient, simplifying complexity,' helping developers and operators quickly get started and easily manage Kubernetes clusters.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

Con-Nav-Item

Con-Nav-Item is a modern personal navigation system designed for digital workers. It is not just a link bookmark but also an all-in-one workspace integrated with AI smart generation, multi-device synchronization, card-based management, and deep browser integration.

ailab

The 'ailab' project is an experimental ground for code generation combining AI (especially coding agents) and Deno. It aims to manage configuration files defining coding rules and modes in Deno projects, enhancing the quality and efficiency of code generation by AI. The project focuses on defining clear rules and modes for AI coding agents, establishing best practices in Deno projects, providing mechanisms for type-safe code generation and validation, applying test-driven development (TDD) workflow to AI coding, and offering implementation examples utilizing design patterns like adapter pattern.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

chatgpt-mirai-qq-bot

Kirara AI is a chatbot that supports mainstream language models and chat platforms. It features various functionalities such as image sending, keyword-triggered replies, multi-account support, content moderation, personality settings, and support for platforms like QQ, Telegram, Discord, and WeChat. It also offers HTTP server capabilities, plugin support, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, and custom workflows. The tool can be accessed via HTTP API for integration with other platforms.

OpenClawChineseTranslation

OpenClaw Chinese Translation is a localization project that provides a fully Chinese interface for the OpenClaw open-source personal AI assistant platform. It allows users to interact with their AI assistant through chat applications like WhatsApp, Telegram, and Discord to manage daily tasks such as emails, calendars, and files. The project includes both CLI command-line and dashboard web interface fully translated into Chinese.

astrbot_plugin_qq_group_daily_analysis

AstrBot Plugin QQ Group Daily Analysis is an intelligent chat analysis plugin based on AstrBot. It provides comprehensive statistics on group chat activity and participation, extracts hot topics and discussion points, analyzes user behavior to assign personalized titles, and identifies notable messages in the chat. The plugin generates visually appealing daily chat analysis reports in various formats including images and PDFs. Users can customize analysis parameters, manage specific groups, and schedule automatic daily analysis. The plugin requires configuration of an LLM provider for intelligent analysis and adaptation to the QQ platform adapter.

MINI_LLM

This project is a personal implementation and reproduction of a small-parameter Chinese LLM. It mainly refers to these two open source projects: https://github.com/charent/Phi2-mini-Chinese and https://github.com/DLLXW/baby-llama2-chinese. It includes the complete process of pre-training, SFT instruction fine-tuning, DPO, and PPO (to be done). I hope to share it with everyone and hope that everyone can work together to improve it!

For similar tasks

Awesome-ChatTTS

Awesome-ChatTTS is an official recommended guide for ChatTTS beginners, compiling common questions and related resources. It provides a comprehensive overview of the project, including official introduction, quick experience options, popular branches, parameter explanations, voice seed details, installation guides, FAQs, and error troubleshooting. The repository also includes video tutorials, discussion community links, and project trends analysis. Users can explore various branches for different functionalities and enhancements related to ChatTTS.

openllmetry-js

OpenLLMetry-JS is a set of extensions built on top of OpenTelemetry that gives you complete observability over your LLM application. Because it uses OpenTelemetry under the hood, it can be connected to your existing observability solutions - Datadog, Honeycomb, and others. It's built and maintained by Traceloop under the Apache 2.0 license. The repo contains standard OpenTelemetry instrumentations for LLM providers and Vector DBs, as well as a Traceloop SDK that makes it easy to get started with OpenLLMetry-JS, while still outputting standard OpenTelemetry data that can be connected to your observability stack. If you already have OpenTelemetry instrumented, you can just add any of our instrumentations directly.

ClaudeSync

ClaudeSync is a powerful tool designed to seamlessly synchronize local files with Claude.ai projects. It bridges the gap between local development environment and Claude.ai's knowledge base, offering real-time synchronization, CLI for easy management, support for multiple organizations and projects, intelligent file filtering, configurable sync interval, two-way synchronization, and more. It ensures data privacy, open source transparency, and comes with disclaimers for use at own risk. Users can quickly start syncing by installing, logging in, selecting organization and project, and running sync. Advanced features include API, organization, project, file, chat management, configuration, synchronization modes, scheduled sync, providers, custom ignore file, and troubleshooting. Contributions are welcome, and communication channels include GitHub Issues and Discord. Licensed under MIT License.

ai-research-assistant

Aria is a Zotero plugin that serves as an AI Research Assistant powered by Large Language Models (LLMs). It offers features like drag-and-drop referencing, autocompletion for creators and tags, visual analysis using GPT-4 Vision, and saving chats as notes and annotations. Aria requires the OpenAI GPT-4 model family and provides a configurable interface through preferences. Users can install Aria by downloading the latest release from GitHub and activating it in Zotero. The tool allows users to interact with Zotero library through conversational AI and probabilistic models, with the ability to troubleshoot errors and provide feedback for improvement.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

chat-mcp

A Cross-Platform Interface for Large Language Models (LLMs) utilizing the Model Context Protocol (MCP) to connect and interact with various LLMs. The desktop app, built on Electron, ensures compatibility across Linux, macOS, and Windows. It simplifies understanding MCP principles, facilitates testing of multiple servers and LLMs, and supports dynamic LLM configuration and multi-client management. The UI can be extracted for web use, ensuring consistency across web and desktop versions.

Everywhere

Everywhere is an interactive AI assistant with context-aware capabilities, featuring a sleek, modern UI and powerful integrated functionality. It instantly perceives and understands anything on your screen, providing seamless AI assistant support without the need for screenshots or app switching. The tool offers troubleshooting expertise, quick web summarization, instant translation, and email draft assistance. It supports LLM from various providers, integrates with web browsers, file systems, terminals, and more, and provides an interactive experience with a modern UI, context-aware invocation, keyboard shortcuts, and markdown rendering. Everywhere is available on Windows and macOS, with Linux support coming soon. Language support includes Simplified Chinese, English, German, Spanish, French, Italian, Japanese, Korean, Russian, Turkish, Traditional Chinese, and Traditional Chinese (Hong Kong).

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.