HaE

HaE - Highlighter and Extractor, Empower ethical hacker for efficient operations.

Stars: 2746

HaE is a framework-style project in the field of network security (data security) that uses AI large models to help highlight and extract information from HTTP messages (including WebSocket). It aims to reduce testing time, keep the focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear, visual interface with simple interaction and a centralized data panel for querying and extracting information. It also features a built-in color upgrade algorithm, one-click export and import of data, and integration with AI large-model APIs for optimized data processing.

README:

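HaE is a framework-style project in the field of network security (data security). It follows a LEGO-brick modular design philosophy and incorporates AI large-model assistance to mark and extract the contents of HTTP messages (including WebSocket) with fine granularity.

Using custom regular expressions backed by multiple engines, HaE matches and processes HTTP request and response messages (including WebSocket), marks the matched content, and extracts information from it. This improves the efficiency of vulnerability and data analysis in network security (data security) work.

As modern web applications adopt a decoupled front-end/back-end development model, the volume of HTTP request traffic captured during day-to-day vulnerability hunting has grown accordingly. Fully assessing a web application means spending a lot of time on useless messages. HaE is meant to solve this: with it you can cut testing time and concentrate on valuable, meaningful messages, which improves vulnerability discovery efficiency.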

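Notes:

- HaE 3.3 introduced the new AI+ feature. It currently supports only Alibaba's Qwen-Long model (supports very long text) and Moonshot AI's moonshot-v1-128k model (supports short text); keep this in mind when configuring and using it.
- Starting with version 3.0, HaE is developed against the Montoya API. To use newer versions of HaE, upgrade BurpSuite to version 2023.12.1 or later.
- The rule fields were updated after HaE 2.6, so rules from versions <=2.6 are not compatible. Please convert them yourself on the rule conversion page.
- The official HaE rule library is hosted on GitHub, so clicking Update to upgrade the official rule library requires a proxy (for security reasons, the BApp review does not allow a CDN).
- A custom HaE rule must wrap the expression to be extracted in parentheses `()`. For example, to match the response of a Shiro application, the usual pattern is `rememberMe=delete`; in a HaE rule it must be written as `(rememberMe=delete)`.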
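The parentheses requirement can be illustrated with an ordinary capture group. The following is a minimal sketch using Python's `re` module rather than HaE's own Java regex engines; the `extract` helper is hypothetical, and the choice to return nothing for a pattern without a group is an assumption made only to show why the parentheses matter.

```python
import re


def extract(pattern: str, text: str) -> list[str]:
    """Return what the first capture group matched, once per match.

    Assumption for this sketch: a pattern without any group yields nothing.
    """
    compiled = re.compile(pattern)
    if compiled.groups == 0:
        return []
    return [m.group(1) for m in compiled.finditer(text)]


response = "HTTP/1.1 200 OK\r\nSet-Cookie: rememberMe=delete; Path=/\r\n\r\n"

print(extract(r"rememberMe=delete", response))    # []
print(extract(r"(rememberMe=delete)", response))  # ['rememberMe=delete']
```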

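Loading the plugin: Extender - Extensions - Add - Select File - Next

On first load, HaE reads the offline rule library bundled in the Jar. When you update, it pulls from the official rule library address https://raw.githubusercontent.com/gh0stkey/HaE/gh-pages/Rules.yml. The configuration file (Config.yml) and the rule file (Rules.yml) are stored in a fixed directory:

- Configuration directory for Linux/Mac users: `~/.config/HaE/`
- Configuration directory for Windows users: `%USERPROFILE%/.config/HaE/`

Alternatively, you can keep the configuration files in `/.config/HaE/` in the same directory as the HaE Jar, which makes them easy to carry around for offline use.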
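As a rough illustration of the two locations described above, the sketch below picks a configuration directory. The function names, the `is_dir` parameter, and the lookup order (Jar-adjacent directory first, then the home directory) are assumptions for illustration; the text does not state which location HaE prefers when both exist.

```python
from pathlib import Path
from typing import Callable


def candidate_config_dirs(jar_path: Path, home: Path) -> list[Path]:
    """List the two documented locations (the order here is an assumption)."""
    return [
        jar_path.parent / ".config" / "HaE",  # portable: next to the HaE Jar
        home / ".config" / "HaE",             # ~/.config/HaE/ or %USERPROFILE%/.config/HaE/
    ]


def resolve_config_dir(
    jar_path: Path,
    home: Path,
    is_dir: Callable[[Path], bool] = Path.is_dir,
) -> Path:
    """Return the first candidate that exists, else fall back to the home location."""
    candidates = candidate_config_dirs(jar_path, home)
    for directory in candidates:
        if is_dir(directory):
            return directory
    return candidates[-1]


if __name__ == "__main__":
    print(resolve_config_dir(Path("HaE.jar").resolve(), Path.home()))
```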

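HaE rules currently have 8 fields, with the following meanings:

| Field | Meaning |
|---|---|
| Name | Rule name: a short summary of what the rule does. |
| F-Regex | Rule regex: the regular expression itself. In HaE, the content to be matched and extracted must be wrapped in ( and ). |
| S-Regex | Rule regex, with the same purpose and usage as F-Regex. S-Regex is a second-pass regex that can match and extract again from the data matched by F-Regex; leave it empty if it is not needed. |
| Format | Output format. For regular expressions on the NFA engine, groups can be selected for formatted output with {0}, {1}, {2}, and so on. The default {0} is enough in most cases. |
| Scope | Rule scope: which part of the HTTP message the rule applies to. Supports the line, headers, and body of the request or response, as well as the complete message. |
| Engine | Regex engine used by the rule's expression. DFA engine: scans each character of the text only once; fast, but with few features. NFA engine: repeatedly marks and unmarks characters (backtracking); slower, but feature-rich (for example grouping, replacement, and splitting). |
| Color | Match color: the highlight color applied to an HTTP message when the rule matches it. HaE has a color upgrade algorithm: when the same color occurs again, the mark is automatically upgraded by one color. |
| Sensitive | Case sensitivity of the rule. Sensitive (True) matches strictly by letter case; insensitive (False) does not. |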
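To make the interplay of F-Regex, S-Regex, Format, and Sensitive more concrete, here is a minimal sketch in Python. It is not HaE's implementation: the `Rule` class and `apply_rule` function are hypothetical, Python's `re` stands in for HaE's engines, and two behaviors are assumptions: `{0}` is taken to mean the first capture group, and Format is applied to the groups of whichever regex ran last.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    f_regex: str            # first-pass regex, content to extract in ( )
    s_regex: str = ""       # optional second-pass regex, empty means unused
    fmt: str = "{0}"        # assumed: {0} is the first capture group
    sensitive: bool = True  # True means case-sensitive matching


def apply_rule(rule: Rule, text: str) -> list[str]:
    """Run F-Regex, optionally refine with S-Regex, then format the groups."""
    flags = 0 if rule.sensitive else re.IGNORECASE
    matches = [m.groups() for m in re.finditer(rule.f_regex, text, flags)]
    if rule.s_regex:
        refined = []
        for groups in matches:
            if groups and groups[0] is not None:
                # Second pass runs on what the first group captured.
                for m2 in re.finditer(rule.s_regex, groups[0], flags):
                    refined.append(m2.groups())
        matches = refined
    # Patterns without a capture group produce empty tuples and are skipped.
    return [rule.fmt.format(*(g or "" for g in groups)) for groups in matches if groups]


shiro = Rule("Shiro", r"(rememberme=delete)", sensitive=False)
print(apply_rule(shiro, "Set-Cookie: rememberMe=delete"))  # ['rememberMe=delete']

domain = Rule("Email domain", r"([\w.+-]+@[\w-]+\.[\w.-]+)", s_regex=r"@(.+)$")
print(apply_rule(domain, "contact: Admin@Example.com, ops@example.org"))
# ['Example.com', 'example.org']

pair = Rule("Key/value", r"(\w+)=(\w+)", fmt="{0}:{1}")
print(apply_rule(pair, "a=1&b=2"))  # ['a:1', 'b:2']
```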

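- Function: highlights, annotates, and extracts from HTTP messages to help users obtain meaningful information and focus on high-value messages.
- Interface: a clear, visual interface design with simple interaction makes the project easier to understand and configure, avoiding a cluttered many-button experience.
- Query: gathers the highlights, annotations, and extracted information of HTTP messages in a single data panel, where information can be queried and extracted in one click, improving testing and triage efficiency.
- Algorithm: a built-in upgrade algorithm for highlight colors. When the same color occurs again, the mark is automatically upgraded by one color, avoiding the "dragon slayer becomes the dragon" scenario.
- Management: supports one-click export and import of data, storing project data in custom `.hae` files so that it is easy to keep and share.
- Practice: the official rule library and the rule fields are all distilled from real-world scenarios, which improves the validity and precision of the data discovered.
- Intelligence: integrates AI large-model APIs to post-process matched data, improving the efficiency of data-driven vulnerability discovery.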
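One way to read the color upgrade described above is sketched below. This is an illustration under stated assumptions, not HaE's actual algorithm: the `COLORS` ordering (most prominent first), the `upgrade_color` name, and the choice to merge each duplicate pair into the next more prominent color are all assumed.

```python
from typing import Optional

# Assumed priority order, most prominent first.
COLORS = ["red", "orange", "yellow", "green", "cyan", "blue", "pink", "magenta", "gray"]


def upgrade_color(matched: list[str]) -> Optional[str]:
    """Pick one highlight from the colors of every rule that matched a message."""
    if not matched:
        return None
    levels = sorted(COLORS.index(color) for color in matched)
    # Repeat until no two matches share a level; each pass shortens the list.
    while len(set(levels)) != len(levels):
        merged: list[int] = []
        for level in levels:
            if merged and merged[-1] == level:
                merged[-1] = max(level - 1, 0)  # duplicate: upgrade one step
            else:
                merged.append(level)
        levels = sorted(merged)
    return COLORS[levels[0]]


print(upgrade_color(["green"]))                     # green
print(upgrade_color(["green", "green"]))            # yellow
print(upgrade_color(["yellow", "green", "green"]))  # orange
```

In this sketch a message hit by several rules of the same color ends up more prominent than a message hit by one, which is the effect the feature list describes.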

| Interface | Preview |
|---|---|
| Rules (rule management) |  |
| Config-Setting (Setting configuration management) |  |
| Config-AI+ (AI+ configuration management) |  |
| Databoard (data collection) |  |
| MarkInfo (data display) |  |

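If you find HaE useful, consider tipping the author to give them the motivation to keep updating it!

HaE is part of 404Team's 星链计划2.0 (Starlink Project 2.0). If you have any questions about HaE or want to find others to discuss it with, see the Starlink Project's instructions for joining the group.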

Alternative AI tools for HaE

Similar Open Source Tools

CTFCrackTools

CTFCrackTools X is the next generation of CTFCrackTools, featuring extreme performance and experience, extensible node-based architecture, and future-oriented technology stack. It offers a visual node-based workflow for encoding and decoding processes, with 43+ built-in algorithms covering common CTF needs like encoding, classical ciphers, modern encryption, hashing, and text processing. The tool is lightweight (< 15MB), high-performance, and cross-platform, supporting Windows, macOS, and Linux without the need for a runtime environment. It aims to provide a beginner-friendly tool for CTF enthusiasts to easily work on challenges and improve their skills.

bailing

Bailing is an open-source voice assistant designed for natural conversations with users. It combines Automatic Speech Recognition (ASR), Voice Activity Detection (VAD), Large Language Model (LLM), and Text-to-Speech (TTS) technologies to provide a high-quality voice interaction experience similar to GPT-4o. Bailing aims to achieve GPT-4o-like conversation effects without the need for GPU, making it suitable for various edge devices and low-resource environments. The project features efficient open-source models, modular design allowing for module replacement and upgrades, support for memory function, tool integration for information retrieval and task execution via voice commands, and efficient task management with progress tracking and reminders.

MaiBot

MaiBot is an interactive intelligent agent based on a large language model. It aims to be an 'entity' active in QQ group chats, focusing on human-like interactions. It features personification in language style, behavior planning, expression learning, plugin system for unlimited extensions, and emotion expression. The project's design philosophy emphasizes creating a 'life form' in group chats that feels real rather than perfect, with the goal of providing companionship through an AI that makes mistakes and has its own perceptions and thoughts. The code is open-source, but the runtime data of MaiBot is intended to remain closed to maintain its autonomy and conversational nature.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

LLMOne

LLMOne is an open-source, lightweight enterprise-level platform for deploying and serving large language models. It aims to address pain points in traditional large model private deployment such as long cycles, complex configurations, performance challenges, and high operational costs. LLMOne simplifies the deployment process with highly automated workflows and optimized runtime environments, ensuring enterprise-level performance and stability. It caters to developers, manufacturers, and users of large language models, providing features like rapid deployment, professional inference performance, broad compatibility with AI hardware, flexible model and application management, visual operational monitoring, and an open application ecosystem.

matrixone

MatrixOne is the industry's first database to bring Git-style version control to data, combined with MySQL compatibility, AI-native capabilities, and cloud-native architecture. It is an HTAP (Hybrid Transactional/Analytical Processing) database with a hyper-converged HSTAP engine that handles transactional, analytical, full-text search, and vector search workloads in a single unified system, with no data movement and no ETL. Manage your database like code with features like instant snapshots, time travel, branch & merge, instant rollback, and complete audit trail. It offers storage-compute separation, elastic scaling, and Kubernetes-native deployment, and is positioned as a single database that replaces multiple databases and ETL jobs with native OLTP, OLAP, full-text search, and vector search capabilities.

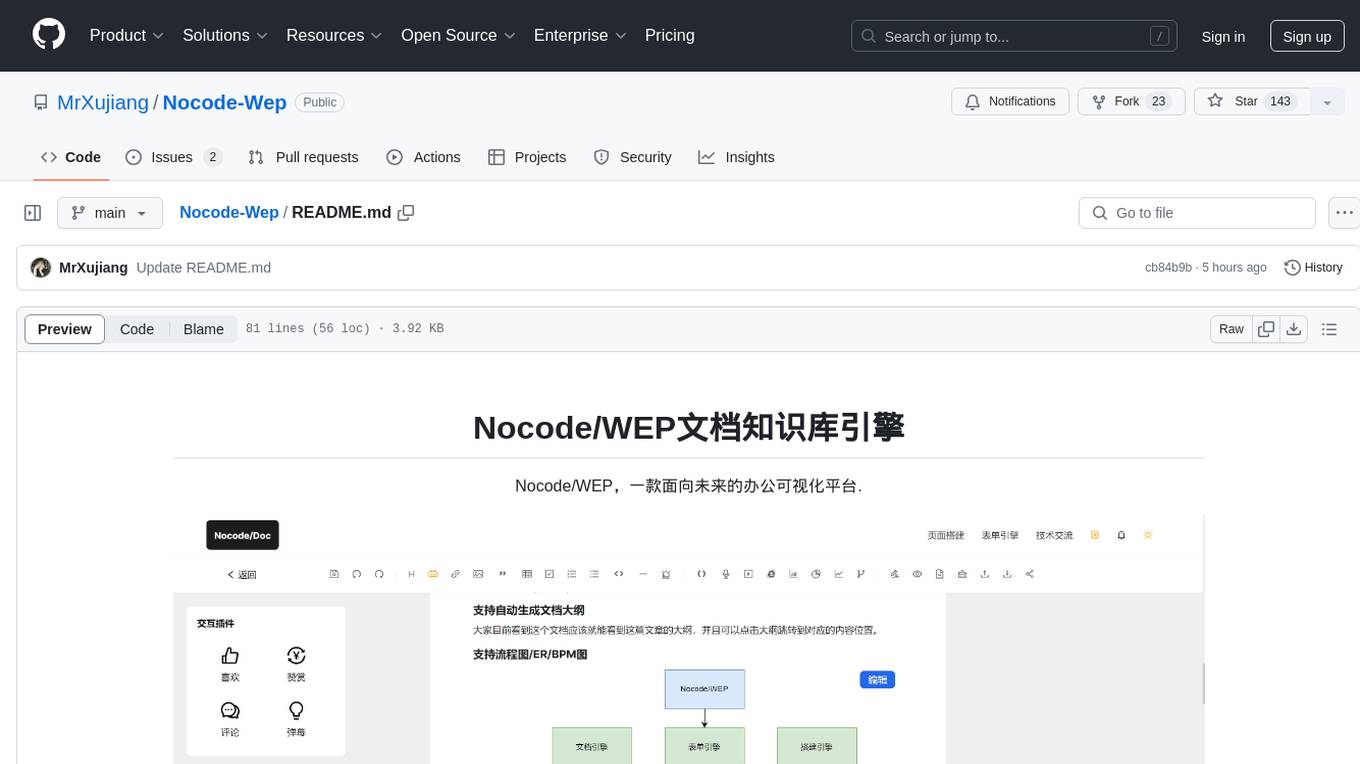

Nocode-Wep

Nocode/WEP is a forward-looking office visualization platform that includes modules for document building, web application creation, presentation design, and AI capabilities for office scenarios. It supports features such as configuring bullet comments, global article comments, multimedia content, custom drawing boards, flowchart editor, form designer, keyword annotations, article statistics, custom appreciation settings, JSON import/export, content block copying, and unlimited hierarchical directories. The platform is compatible with major browsers and aims to deliver content value, iterate products, share technology, and promote open-source collaboration.

AIGC-Interview-Book

AIGC-Interview-Book is the ultimate guide for AIGC algorithm and development job interviews, covering a wide range of topics such as AIGC, traditional deep learning, autonomous driving, AI agent, machine learning, computer vision, natural language processing, reinforcement learning, embodied intelligence, metaverse, AGI, Python, Java, C/C++, Go, embedded systems, front-end, back-end, testing, and operations. The repository consolidates industry experience and insights from frontline AIGC algorithm experts, providing resources on AIGC knowledge framework, internal referrals at AIGC big companies, interview experiences, company guides, AI campus recruitment schedule, interview preparation, salary insights, coding guide, and job-seeking Q&A. It serves as a valuable resource for AIGC-related professionals, students, and job seekers, offering insights and guidance for career advancement and job interviews in the AIGC field.

LotteryMaster

LotteryMaster is a tool designed to fetch lottery data, save it to Excel files, and provide analysis reports including number prediction, number recommendation, and number trends. It supports multiple platforms for access such as Web and mobile App. The tool integrates AI models like Qwen API and DeepSeek for generating analysis reports and trend analysis charts. Users can configure API parameters for controlling randomness, diversity, presence penalty, and maximum tokens. The tool also includes a frontend project based on uniapp + Vue3 + TypeScript for multi-platform applications. It provides a backend service running on Fastify with Node.js, Cheerio.js for web scraping, Pino for logging, xlsx for Excel file handling, and Jest for testing. The project is still in development and some features may not be fully implemented. The analysis reports are for reference only and do not constitute investment advice. Users are advised to use the tool responsibly and avoid addiction to gambling.

Code-Review-GPT-Gitlab

A project that utilizes large models to help with Code Review on Gitlab, aimed at improving development efficiency. The project is customized for Gitlab and is developing a Multi-Agent plugin for collaborative review. It integrates various large models for code security issues and stays updated with the latest Code Review trends. The project architecture is designed to be powerful, flexible, and efficient, with easy integration of different models and high customization for developers.

PyTorch-Tutorial-2nd

The second edition of "PyTorch Practical Tutorial" was completed after 5 years, 4 years, and 2 years. On the basis of the essence of the first edition, rich and detailed deep learning application cases and reasoning deployment frameworks have been added, so that this book can more systematically cover the knowledge involved in deep learning engineers. As the development of artificial intelligence technology continues to emerge, the second edition of "PyTorch Practical Tutorial" is not the end, but the beginning, opening up new technologies, new fields, and new chapters. I hope to continue learning and making progress in artificial intelligence technology with you in the future.

all-api-hub

All API Hub is an open-source browser extension that serves as a centralized management tool for third-party AI aggregation hubs and self-built New APIs. It automatically identifies accounts, checks balances, synchronizes models, manages keys, and supports cross-platform and cloud backups. The extension supports various aggregation hubs like one-api, new-api, Veloera, one-hub, done-hub, Neo-API, Super-API, RIX_API, and VoAPI. It offers features such as intelligent site recognition, multi-account overview panel, automatic check-ins, token and key management, model information and pricing display, model and interface validation, usage analysis and visualization, quick export integration, self-built New API and Veloera management tools, Cloudflare challenge assistant, data backup and synchronization, multi-platform support, and privacy-focused local storage.

lawyer-llama

Lawyer LLaMA is a large language model that has been specifically trained on legal data, including Chinese laws, regulations, and case documents. It has been fine-tuned on a large dataset of legal questions and answers, enabling it to understand and respond to legal inquiries in a comprehensive and informative manner. Lawyer LLaMA is designed to assist legal professionals and individuals with a variety of law-related tasks, including:

* **Legal research:** Quickly and efficiently search through vast amounts of legal information to find relevant laws, regulations, and case precedents.
* **Legal analysis:** Analyze legal issues, identify potential legal risks, and provide insights on how to proceed.
* **Document drafting:** Draft legal documents, such as contracts, pleadings, and legal opinions, with accuracy and precision.
* **Legal advice:** Provide general legal advice and guidance on a wide range of legal matters, helping users understand their rights and options.

Lawyer LLaMA is a powerful tool that can significantly enhance the efficiency and effectiveness of legal research, analysis, and decision-making. It is an invaluable resource for lawyers, paralegals, law students, and anyone else who needs to navigate the complexities of the legal system.

Embodied-AI-Guide

Embodied-AI-Guide is a comprehensive guide for beginners to understand Embodied AI, focusing on the path of entry and useful information in the field. It covers topics such as Reinforcement Learning, Imitation Learning, Large Language Model for Robotics, 3D Vision, Control, Benchmarks, and provides resources for building cognitive understanding. The repository aims to help newcomers quickly establish knowledge in the field of Embodied AI.

fastapi-admin

智元 Fast API is a one-stop API management system that unifies various LLM APIs in terms of format, standards, and management to achieve the ultimate in functionality, performance, and user experience. It includes features such as model management with intelligent and regex matching, backup model functionality, key management, proxy management, company management, user management, and chat management for both admin and user ends. The project supports cluster deployment, multi-site deployment, and cross-region deployment. It also provides a public API site for registration with a contact to the author for a 10 million quota. The tool offers a comprehensive dashboard, model management, application management, key management, and chat management functionalities for users.

For similar tasks

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

* **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
* **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
* **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

x-crawl

x-crawl is a flexible Node.js AI-assisted crawler library that offers powerful AI assistance functions to make crawler work more efficient, intelligent, and convenient. It consists of a crawler API and various functions that can work normally even without relying on AI. The AI component is currently based on a large AI model provided by OpenAI, simplifying many tedious operations. The library supports crawling dynamic pages, static pages, interface data, and file data, with features like control page operations, device fingerprinting, asynchronous sync, interval crawling, failed retry handling, rotation proxy, priority queue, crawl information control, and TypeScript support.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

sycamore

Sycamore is a conversational search and analytics platform for complex unstructured data, such as documents, presentations, transcripts, embedded tables, and internal knowledge repositories. It retrieves and synthesizes high-quality answers through bringing AI to data preparation, indexing, and retrieval. Sycamore makes it easy to prepare unstructured data for search and analytics, providing a toolkit for data cleaning, information extraction, enrichment, summarization, and generation of vector embeddings that encapsulate the semantics of data. Sycamore uses your choice of generative AI models to make these operations simple and effective, and it enables quick experimentation and iteration. Additionally, Sycamore uses OpenSearch for indexing, enabling hybrid (vector + keyword) search, retrieval-augmented generation (RAG) pipelining, filtering, analytical functions, conversational memory, and other features to improve information retrieval.

langroid

Langroid is a Python framework that makes it easy to build LLM-powered applications. It uses a multi-agent paradigm inspired by the Actor Framework, where you set up Agents, equip them with optional components (LLM, vector-store and tools/functions), assign them tasks, and have them collaboratively solve a problem by exchanging messages. Langroid is a fresh take on LLM app-development, where considerable thought has gone into simplifying the developer experience; it does not use Langchain.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

document-ai-samples

The Google Cloud Document AI Samples repository contains code samples and Community Samples demonstrating how to analyze, classify, and search documents using Google Cloud Document AI. It includes various projects showcasing different functionalities such as integrating with Google Drive, processing documents using Python, content moderation with Dialogflow CX, fraud detection, language extraction, paper summarization, tax processing pipeline, and more. The repository also provides access to test document files stored in a publicly-accessible Google Cloud Storage Bucket. Additionally, there are codelabs available for optical character recognition (OCR), form parsing, specialized processors, and managing Document AI processors. Community samples, like the PDF Annotator Sample, are also included. Contributions are welcome, and users can seek help or report issues through the repository's issues page. Please note that this repository is not an officially supported Google product and is intended for demonstrative purposes only.

llm-graph-builder

Knowledge Graph Builder App is a tool designed to convert PDF documents into a structured knowledge graph stored in Neo4j. It utilizes OpenAI's GPT/Diffbot LLM to extract nodes, relationships, and properties from PDF text content. Users can upload files from local machine or S3 bucket, choose LLM model, and create a knowledge graph. The app integrates with Neo4j for easy visualization and querying of extracted information.

For similar jobs

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

beelzebub

Beelzebub is an advanced honeypot framework designed to provide a highly secure environment for detecting and analyzing cyber attacks. It offers a low code approach for easy implementation and utilizes virtualization techniques powered by OpenAI Generative Pre-trained Transformer. Key features include OpenAI Generative Pre-trained Transformer acting as Linux virtualization, SSH Honeypot, HTTP Honeypot, TCP Honeypot, Prometheus openmetrics integration, Docker integration, RabbitMQ integration, and kubernetes support. Beelzebub allows easy configuration for different services and ports, enabling users to create custom honeypot scenarios. The roadmap includes developing Beelzebub into a robust PaaS platform. The project welcomes contributions and encourages adherence to the Code of Conduct for a supportive and respectful community.

admyral

Admyral is an open-source Cybersecurity Automation & Investigation Assistant that provides a unified console for investigations and incident handling, workflow automation creation, automatic alert investigation, and next step suggestions for analysts. It aims to tackle alert fatigue and automate security workflows effectively by offering features like workflow actions, AI actions, case management, alert handling, and more. Admyral combines security automation and case management to streamline incident response processes and improve overall security posture. The tool is open-source, transparent, and community-driven, allowing users to self-host, contribute, and collaborate on integrations and features.

galah

Galah is an LLM-powered web honeypot designed to mimic various applications and dynamically respond to arbitrary HTTP requests. It supports multiple LLM providers, including OpenAI. Unlike traditional web honeypots, Galah dynamically crafts responses for any HTTP request, caching them to reduce repetitive generation and API costs. The honeypot's configuration is crucial, directing the LLM to produce responses in a specified JSON format. Note that Galah is a weekend project exploring LLM capabilities and not intended for production use, as it may be identifiable through network fingerprinting and non-standard responses.

PyWxDump

PyWxDump is a Python tool designed for obtaining WeChat account information, decrypting databases, viewing WeChat chats, and exporting chats as HTML backups. It provides core features such as extracting base address offsets of various WeChat data, decrypting databases, and combining multiple database types for unified viewing. Additionally, it offers extended functions like viewing chat history through the web, exporting chat logs in different formats, and remote viewing of WeChat chat history. The tool also includes document classes for database field descriptions, base address offset methods, and decryption methods for MAC databases. PyWxDump is suitable for network security, daily backup archiving, remote chat history viewing, and more.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on enhancing the detection process for auto-suggestion, enabling users to create detection workflows without coding. The tool offers an intuitive drag-and-drop interface for workflow adjustments and updates. Quark Agent, the core component, generates Quark Script code based on natural language input and feedback. The project is committed to providing a user-friendly experience for designing detection workflows through textual and visual methods. Various features are still under development and will be rolled out gradually.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.