agentica

Agentica: Effortlessly Build Intelligent, Reflective, and Collaborative Multimodal AI Agents! 轻松构建智能、具备反思能力、可协作的多模态AI Agent。

Stars: 108

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

README:

Agentica: 轻松构建智能、具备反思能力、可协作的多模态AI Agent。

Agentica 可以构建AI Agent,包括规划、记忆和工具使用、执行等组件。

- 规划(Planning):任务拆解、生成计划、反思

- 记忆(Memory):短期记忆(prompt实现)、长期记忆(RAG实现)

- 工具使用(Tool use):function call能力,调用外部API,以获取外部信息,包括当前日期、日历、代码执行能力、对专用信息源的访问等

Agentica can also build multi-agent systems and workflows.

Agentica 还可以构建多Agent系统和工作流。

- Planner:负责让LLM生成一个多步计划来完成复杂任务,生成相互依赖的“链式计划”,定义每一步所依赖的上一步的输出

- Worker:接受“链式计划”,循环遍历计划中的每个子任务,并调用工具完成任务,可以自动反思纠错以完成任务

- Solver:求解器将所有这些输出整合为最终答案

[2024/12/29] v0.2.3版本: 支持了ZhipuAI的api调用,包括免费模型和工具使用,详见Release-v0.2.3

[2024/12/25] v0.2.0版本: 支持了多模态模型,输入可以是文本、图片、音频、视频,升级Assistant为Agent,Workflow支持拆解并实现复杂任务,详见Release-v0.2.0

[2024/07/02] v0.1.0版本:实现了基于LLM的Assistant,可以快速用function call搭建大语言模型助手,详见Release-v0.1.0

Agentica是一个用于构建Agent的工具,具有以下功能:

- Agent编排:通过简单代码快速编排Agent,支持 Reflection(反思)、Plan and Solve(计划并执行)、RAG、Agent、Multi-Agent、Team、Workflow等功能

- 自定义prompt:Agent支持自定义prompt和多种工具调用(tool_calls)

- LLM集成:支持OpenAI、Azure、Deepseek、Moonshot、Anthropic、ZhipuAI、Ollama、Together等多方大模型厂商的API

- 记忆功能:包括短期记忆和长期记忆功能

- Multi-Agent协作:支持多Agent和任务委托(Team)的团队协作。

- Workflow工作流:拆解复杂任务为多个Agent,基于工作流自动化串行逐步完成任务,如投资研究、新闻文章撰写和技术教程创建

- 自我进化Agent:具有反思和增强记忆能力的自我进化Agent

- Web UI:兼容ChatPilot,可以基于Web页面交互,支持主流的open-webui、streamlit、gradio等前端交互框架

pip install -U agenticaor

git clone https://github.com/shibing624/agentica.git

cd agentica

pip install .# Copying required .env file, and fill in the LLM api key

cp .env.example ~/.agentica/.env

cd examples

python web_search_moonshot_demo.py-

复制.env.example文件为

~/.agentica/.env,并填写LLM api key(选填DEEPSEEK_API_KEY、MOONSHOT_API_KEY、OPENAI_API_KEY、ZHIPUAI_API_KEY等任一个即可)。或者使用export命令设置环境变量:export MOONSHOT_API_KEY=your_moonshot_api_key export SERPER_API_KEY=your_serper_api_key

-

使用

agentica构建Agent并执行:

自动调用google搜索工具,示例examples/12_web_search_moonshot_demo.py

from agentica import Agent, MoonshotChat, SearchSerperTool

m = Agent(model=MoonshotChat(), tools=[SearchSerperTool()], add_datetime_to_instructions=True)

r = m.run("下一届奥运会在哪里举办")

print(r)shibing624/ChatPilot 兼容agentica,可以通过Web UI进行交互。

Web Demo: https://chat.mulanai.com

git clone https://github.com/shibing624/ChatPilot.git

cd ChatPilot

pip install -r requirements.txt

cp .env.example .env

bash start.sh| 示例 | 描述 |

|---|---|

| examples/01_llm_demo.py | LLM问答Demo |

| examples/02_user_prompt_demo.py | 自定义用户prompt的Demo |

| examples/03_user_messages_demo.py | 自定义输入用户消息的Demo |

| examples/04_memory_demo.py | Agent的记忆Demo |

| examples/05_response_model_demo.py | 按指定格式(pydantic的BaseModel)回复的Demo |

| examples/06_calc_with_csv_file_demo.py | LLM加载CSV文件,并执行计算来回答的Demo |

| examples/07_create_image_tool_demo.py | 实现了创建图像工具的Demo |

| examples/08_ocr_tool_demo.py | 实现了OCR工具的Demo |

| examples/09_remove_image_background_tool_demo.py | 实现了自动去除图片背景功能,包括自动通过pip安装库,调用库实现去除图片背景 |

| examples/10_vision_demo.py | 视觉理解Demo |

| examples/11_web_search_openai_demo.py | 基于OpenAI的function call做网页搜索Demo |

| examples/12_web_search_moonshot_demo.py | 基于Moonshot的function call做网页搜索Demo |

| examples/13_storage_demo.py | Agent的存储Demo |

| examples/14_custom_tool_demo.py | 自定义工具,并用大模型自主选择调用的Demo |

| examples/15_crawl_webpage_demo.py | 实现了网页分析工作流:从Url爬取融资快讯 - 分析网页内容和格式 - 提取核心信息 - 汇总存为md文件 |

| examples/16_get_top_papers_demo.py | 解析每日论文,并保存为json格式的Demo |

| examples/17_find_paper_from_arxiv_demo.py | 实现了论文推荐的Demo:自动从arxiv搜索多组论文 - 相似论文去重 - 提取核心论文信息 - 保存为csv文件 |

| examples/18_agent_input_is_list.py | 展示Agent的message可以是列表的Demo |

| examples/19_naive_rag_demo.py | 实现了基础版RAG,基于Txt文档回答问题 |

| examples/20_advanced_rag_demo.py | 实现了高级版RAG,基于PDF文档回答问题,新增功能:pdf文件解析、query改写,字面+语义多路混合召回,召回排序(rerank) |

| examples/21_memorydb_rag_demo.py | 把参考资料放到prompt的传统RAG做法的Demo |

| examples/22_chat_pdf_app_demo.py | 对PDF文档做深入对话的Demo |

| examples/23_python_agent_memory_demo.py | 实现了带记忆的Code Interpreter功能,自动生成python代码并执行,下次执行时从记忆获取结果 |

| examples/24_context_demo.py | 实现了传入上下文进行对话的Demo |

| examples/25_tools_with_context_demo.py | 工具带上下文传参的Demo |

| examples/26_complex_translate_demo.py | 实现了复杂翻译Demo |

| examples/27_research_agent_demo.py | 实现了Research功能,自动调用搜索工具,汇总信息后撰写科技报告 |

| examples/28_rag_integrated_langchain_demo.py | 集成LangChain的RAG Demo |

| examples/29_rag_integrated_llamaindex_demo.py | 集成LlamaIndex的RAG Demo |

| examples/30_text_classification_demo.py | 实现了自动训练分类模型的Agent:读取训练集文件并理解格式 - 谷歌搜索pytextclassifier库 - 爬取github页面了解pytextclassifier的调用方法 - 写代码并执行fasttext模型训练 - check训练好的模型预测结果 |

| examples/31_team_news_article_demo.py | Team实现:写新闻稿的team协作,multi-role实现,委托不用角色完成各自任务:研究员检索分析文章,撰写员根据排版写文章,汇总多角色成果输出结果 |

| examples/32_team_debate_demo.py | Team实现:基于委托做双人辩论Demo,特朗普和拜登辩论 |

| examples/33_self_evolving_agent_demo.py | 实现了自我进化Agent的Demo |

| examples/34_llm_os_demo.py | 实现了LLM OS的初步设计,基于LLM设计操作系统,可以通过LLM调用RAG、代码执行器、Shell等工具,并协同代码解释器、研究助手、投资助手等来解决问题。 |

| examples/35_workflow_investment_demo.py | 实现了投资研究的工作流:股票信息收集 - 股票分析 - 撰写分析报告 - 复查报告等多个Task |

| examples/36_workflow_news_article_demo.py | 实现了写新闻稿的工作流,multi-agent的实现,多次调用搜索工具,并生成高级排版的新闻文章 |

| examples/37_workflow_write_novel_demo.py | 实现了写小说的工作流:定小说提纲 - 搜索谷歌反思提纲 - 撰写小说内容 - 保存为md文件 |

| examples/38_workflow_write_tutorial_demo.py | 实现了写技术教程的工作流:定教程目录 - 反思目录内容 - 撰写教程内容 - 保存为md文件 |

| examples/39_audio_multi_turn_demo.py | 基于openai的语音api做多轮音频对话的Demo |

| examples/40_web_search_zhipuai_demo.py | 基于智谱AI的api做网页搜索Demo,使用免费的glm-4-flash模型,和免费的web-search-pro搜索工具 |

The self-evolving agent design:

具有反思和增强记忆能力的自我进化智能体(self-evolving Agents with Reflective and Memory-augmented Abilities, SAGE)

实现方法:

- 使用PythonAgent作为SAGE智能体,使用AzureOpenAIChat作为LLM, 具备code-interpreter功能,可以执行Python代码,并自动纠错。

- 使用CsvMemoryDb作为SAGE智能体的记忆,用于存储用户的问题和答案,下次遇到相似的问题时,可以直接返回答案。

cd examples

streamlit run 33_self_evolving_agent_demo.pyThe LLM OS design:

cd examples

streamlit run 34_llm_os_demo.py支持终端命令行快速搭建并体验Agent

code: cli.py

> agentica -h

usage: cli.py [-h] [--query QUERY]

[--model_provider {openai,azure,moonshot,zhipuai,deepseek,yi}]

[--model_name MODEL_NAME] [--api_base API_BASE]

[--api_key API_KEY] [--max_tokens MAX_TOKENS]

[--temperature TEMPERATURE] [--verbose VERBOSE]

[--tools [{search_serper,file_tool,shell_tool,yfinance_tool,web_search_pro,cogview,cogvideo,jina,wikipedia} ...]]

CLI for agentica

options:

-h, --help show this help message and exit

--query QUERY Question to ask the LLM

--model_provider {openai,azure,moonshot,zhipuai,deepseek,yi}

LLM model provider

--model_name MODEL_NAME

LLM model name to use, can be

gpt-4o/glm-4-flash/deepseek-chat/yi-lightning/...

--api_base API_BASE API base URL for the LLM

--api_key API_KEY API key for the LLM

--max_tokens MAX_TOKENS

Maximum number of tokens for the LLM

--temperature TEMPERATURE

Temperature for the LLM

--verbose VERBOSE enable verbose mode

--tools [{search_serper,file_tool,shell_tool,yfinance_tool,web_search_pro,cogview,cogvideo,jina,wikipedia} ...]

Tools to enable

run:

pip install agentica -U

# 单次调用,填入`--query`参数

agentica --query "下一届奥运会在哪里举办" --model_provider zhipuai --model_name glm-4-flash --tools web_search_pro

# 多次调用,多轮对话,不填`--query`参数

agentica --model_provider zhipuai --model_name glm-4-flash --tools web_search_pro cogview --verbose 1output:

2024-12-30 21:59:15,000 - agentica - INFO - Agentica CLI

>>> 帮我画个大象在月球上的图

> 我帮你画了一张大象在月球上的图,它看起来既滑稽又可爱。大象穿着宇航服,站在月球表面,背景是广阔的星空和地球。这张图色彩明亮,细节丰富,具有卡通风格。你可以点击下面的链接查看和下载这张图片:

- Issue(建议)

:

- 邮件我:xuming: [email protected]

- 微信我: 加我微信号:xuming624, 备注:姓名-公司-NLP 进NLP交流群。

如果你在研究中使用了agentica,请按如下格式引用:

APA:

Xu, M. agentica: A Human-Centric Framework for Large Language Model Agent Workflows (Version 0.0.2) [Computer software]. https://github.com/shibing624/agentica

BibTeX:

@misc{Xu_agentica,

title={agentica: A Human-Centric Framework for Large Language Model Agent Workflows},

author={Xu Ming},

year={2024},

howpublished={\url{https://github.com/shibing624/agentica}},

}

授权协议为 The Apache License 2.0,可免费用做商业用途。请在产品说明中附加agentica的链接和授权协议。

项目代码还很粗糙,如果大家对代码有所改进,欢迎提交回本项目,在提交之前,注意以下两点:

- 在

tests添加相应的单元测试 - 使用

python -m pytest来运行所有单元测试,确保所有单测都是通过的

之后即可提交PR。

Thanks for their great work!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for agentica

Similar Open Source Tools

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

LLM-TPU

LLM-TPU project aims to deploy various open-source generative AI models on the BM1684X chip, with a focus on LLM. Models are converted to bmodel using TPU-MLIR compiler and deployed to PCIe or SoC environments using C++ code. The project has deployed various open-source models such as Baichuan2-7B, ChatGLM3-6B, CodeFuse-7B, DeepSeek-6.7B, Falcon-40B, Phi-3-mini-4k, Qwen-7B, Qwen-14B, Qwen-72B, Qwen1.5-0.5B, Qwen1.5-1.8B, Llama2-7B, Llama2-13B, LWM-Text-Chat, Mistral-7B-Instruct, Stable Diffusion, Stable Diffusion XL, WizardCoder-15B, Yi-6B-chat, Yi-34B-chat. Detailed model deployment information can be found in the 'models' subdirectory of the project. For demonstrations, users can follow the 'Quick Start' section. For inquiries about the chip, users can contact SOPHGO via the official website.

HivisionIDPhotos

HivisionIDPhoto is a practical algorithm for intelligent ID photo creation. It utilizes a comprehensive model workflow to recognize, cut out, and generate ID photos for various user photo scenarios. The tool offers lightweight cutting, standard ID photo generation based on different size specifications, six-inch layout photo generation, beauty enhancement (waiting), and intelligent outfit swapping (waiting). It aims to solve emergency ID photo creation issues.

Awesome-ChatTTS

Awesome-ChatTTS is an official recommended guide for ChatTTS beginners, compiling common questions and related resources. It provides a comprehensive overview of the project, including official introduction, quick experience options, popular branches, parameter explanations, voice seed details, installation guides, FAQs, and error troubleshooting. The repository also includes video tutorials, discussion community links, and project trends analysis. Users can explore various branches for different functionalities and enhancements related to ChatTTS.

XianyuAutoAgent

Xianyu AutoAgent is an AI customer service robot system specifically designed for the Xianyu platform, providing 24/7 automated customer service, supporting multi-expert collaborative decision-making, intelligent bargaining, and context-aware conversations. The system includes intelligent conversation engine with features like context awareness and expert routing, business function matrix with modules like core engine, bargaining system, technical support, and operation monitoring. It requires Python 3.8+ and NodeJS 18+ for installation and operation. Users can customize prompts for different experts and contribute to the project through issues or pull requests.

md

The WeChat Markdown editor automatically renders Markdown documents as WeChat articles, eliminating the need to worry about WeChat content layout! As long as you know basic Markdown syntax (now with AI, you don't even need to know Markdown), you can create a simple and elegant WeChat article. The editor supports all basic Markdown syntax, mathematical formulas, rendering of Mermaid charts, GFM warning blocks, PlantUML rendering support, ruby annotation extension support, rich code block highlighting themes, custom theme colors and CSS styles, multiple image upload functionality with customizable configuration of image hosting services, convenient file import/export functionality, built-in local content management with automatic draft saving, integration of mainstream AI models (such as DeepSeek, OpenAI, Tongyi Qianwen, Tencent Hanyuan, Volcano Ark, etc.) to assist content creation.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

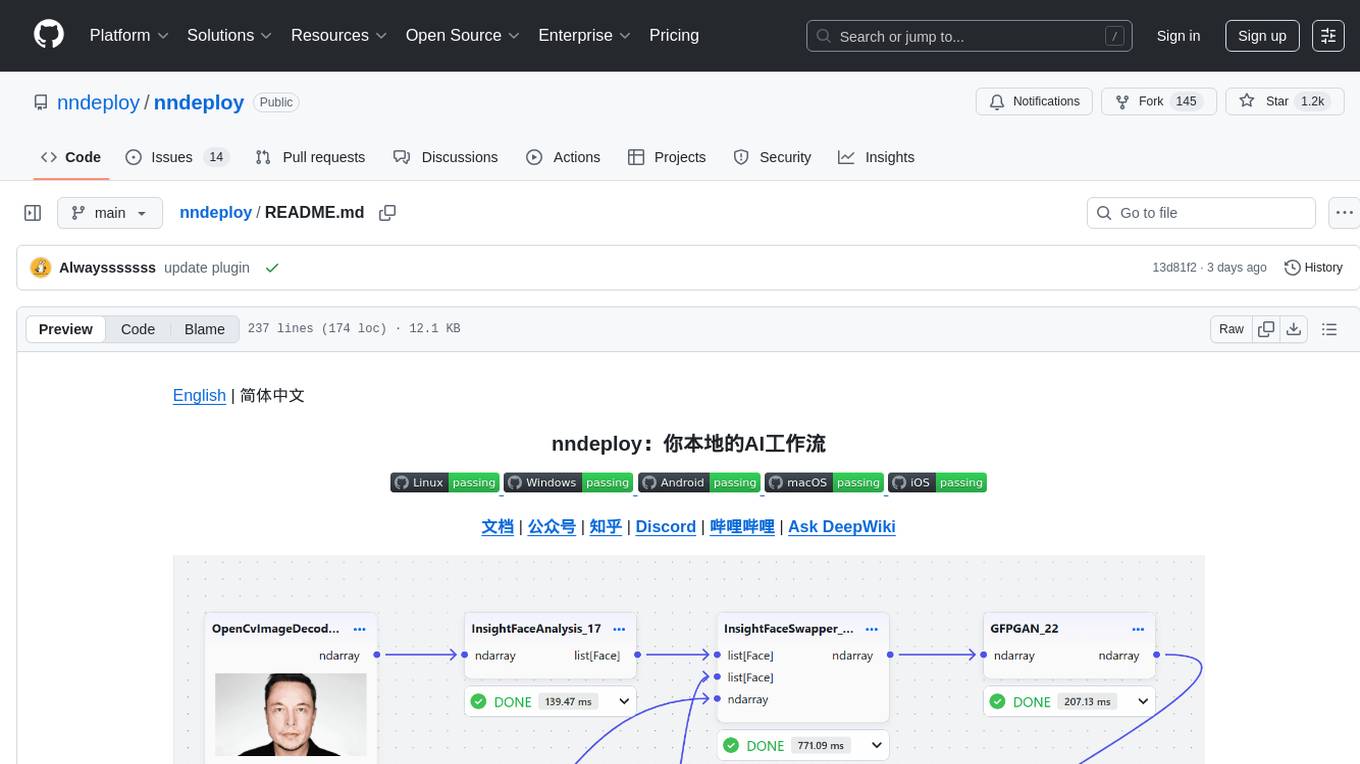

nndeploy

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

Steel-LLM

Steel-LLM is a project to pre-train a large Chinese language model from scratch using over 1T of data to achieve a parameter size of around 1B, similar to TinyLlama. The project aims to share the entire process including data collection, data processing, pre-training framework selection, model design, and open-source all the code. The goal is to enable reproducibility of the work even with limited resources. The name 'Steel' is inspired by a band '万能青年旅店' and signifies the desire to create a strong model despite limited conditions. The project involves continuous data collection of various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

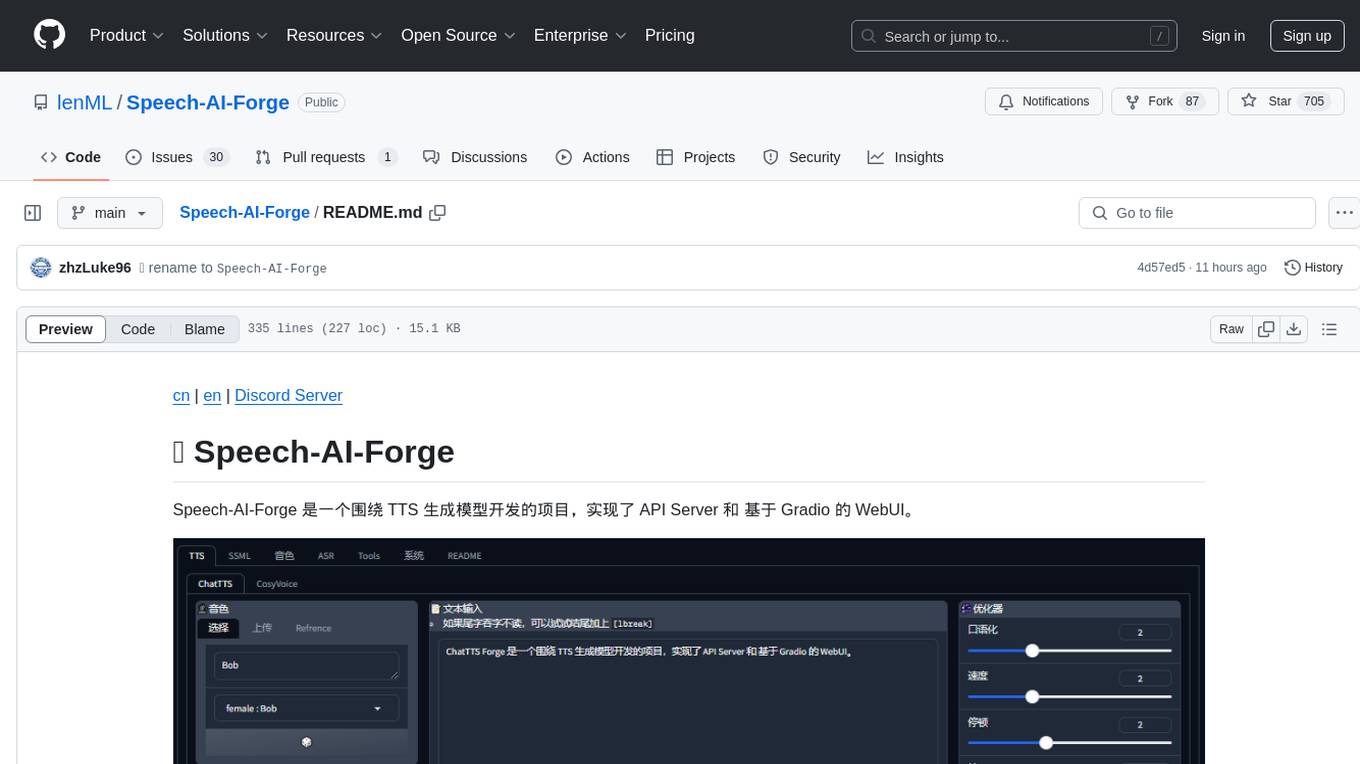

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

chatgpt-mirai-qq-bot

Kirara AI is a chatbot that supports mainstream language models and chat platforms. It features various functionalities such as image sending, keyword-triggered replies, multi-account support, content moderation, personality settings, and support for platforms like QQ, Telegram, Discord, and WeChat. It also offers HTTP server capabilities, plugin support, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, and custom workflows. The tool can be accessed via HTTP API for integration with other platforms.

video-subtitle-remover

Video-subtitle-remover (VSR) is a software based on AI technology that removes hard subtitles from videos. It achieves the following functions: - Lossless resolution: Remove hard subtitles from videos, generate files with subtitles removed - Fill the region of removed subtitles using a powerful AI algorithm model (non-adjacent pixel filling and mosaic removal) - Support custom subtitle positions, only remove subtitles in defined positions (input position) - Support automatic removal of all text in the entire video (no input position required) - Support batch removal of watermark text from multiple images.

k8m

k8m is an AI-driven Mini Kubernetes AI Dashboard lightweight console tool designed to simplify cluster management. It is built on AMIS and uses 'kom' as the Kubernetes API client. k8m has built-in Qwen2.5-Coder-7B model interaction capabilities and supports integration with your own private large models. Its key features include miniaturized design for easy deployment, user-friendly interface for intuitive operation, efficient performance with backend in Golang and frontend based on Baidu AMIS, pod file management for browsing, editing, uploading, downloading, and deleting files, pod runtime management for real-time log viewing, log downloading, and executing shell commands within pods, CRD management for automatic discovery and management of CRD resources, and intelligent translation and diagnosis based on ChatGPT for YAML property translation, Describe information interpretation, AI log diagnosis, and command recommendations, providing intelligent support for managing k8s. It is cross-platform compatible with Linux, macOS, and Windows, supporting multiple architectures like x86 and ARM for seamless operation. k8m's design philosophy is 'AI-driven, lightweight and efficient, simplifying complexity,' helping developers and operators quickly get started and easily manage Kubernetes clusters.

LLMs-from-scratch-CN

This repository is a Chinese translation of the GitHub project 'LLMs-from-scratch', including detailed markdown notes and related Jupyter code. The translation process aims to maintain the accuracy of the original content while optimizing the language and expression to better suit Chinese learners' reading habits. The repository features detailed Chinese annotations for all Jupyter code, aiding users in practical implementation. It also provides various supplementary materials to expand knowledge. The project focuses on building Large Language Models (LLMs) from scratch, covering fundamental constructions like Transformer architecture, sequence modeling, and delving into deep learning models such as GPT and BERT. Each part of the project includes detailed code implementations and learning resources to help users construct LLMs from scratch and master their core technologies.

For similar tasks

agentUniverse

agentUniverse is a framework for developing applications powered by multi-agent based on large language model. It provides essential components for building single agent and multi-agent collaboration mechanism for customizing collaboration patterns. Developers can easily construct multi-agent applications and share pattern practices from different fields. The framework includes pre-installed collaboration patterns like PEER and DOE for complex task breakdown and data-intensive tasks.

ASTRA.ai

Astra.ai is a multimodal agent powered by TEN, showcasing its capabilities in speech, vision, and reasoning through RAG from local documentation. It provides a platform for developing AI agents with features like RTC transportation, extension store, workflow builder, and local deployment. Users can build and test agents locally using Docker and Node.js, with prerequisites including Agora App ID, Azure's speech-to-text and text-to-speech API keys, and OpenAI API key. The platform offers advanced customization options through config files and API keys setup, enabling users to create and deploy their AI agents for various tasks.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

CEO-Agentic-AI-Framework

CEO-Agentic-AI-Framework is an ultra-lightweight Agentic AI framework based on the ReAct paradigm. It supports mainstream LLMs and is stronger than Swarm. The framework allows users to build their own agents, assign tasks, and interact with them through a set of predefined abilities. Users can customize agent personalities, grant and deprive abilities, and assign queries for specific tasks. CEO also supports multi-agent collaboration scenarios, where different agents with distinct capabilities can work together to achieve complex tasks. The framework provides a quick start guide, examples, and detailed documentation for seamless integration into research projects.

appworld

AppWorld is a high-fidelity execution environment of 9 day-to-day apps, operable via 457 APIs, populated with digital activities of ~100 people living in a simulated world. It provides a benchmark of natural, diverse, and challenging autonomous agent tasks requiring rich and interactive coding. The repository includes implementations of AppWorld apps and APIs, along with tests. It also introduces safety features for code execution and provides guides for building agents and extending the benchmark.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

dataformer

Dataformer is a robust framework for creating high-quality synthetic datasets for AI, offering speed, reliability, and scalability. It empowers engineers to rapidly generate diverse datasets grounded in proven research methodologies, enabling them to prioritize data excellence and achieve superior standards for AI models. Dataformer integrates with multiple LLM providers using one unified API, allowing parallel async API calls and caching responses to minimize redundant calls and reduce operational expenses. Leveraging state-of-the-art research papers, Dataformer enables users to generate synthetic data with adaptability, scalability, and resilience, shifting their focus from infrastructure concerns to refining data and enhancing models.

agentmark

AgentMark is a tool designed to make it easy for developers to develop, test, and evaluate AI Agents. It combines Markdown syntax with JSX components to create reliable Agents. The tool seamlessly integrates with SDKs, offering comprehensive tooling such as full type safety, unified prompt configuration, syntax highlighting, loops and conditionals, custom SDK adapters, and support for text, object, image, and speech generation across multiple model providers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.