ipex-llm

Accelerate local LLM inference and finetuning (LLaMA, Mistral, ChatGLM, Qwen, Mixtral, Gemma, Phi, MiniCPM, Qwen-VL, MiniCPM-V, etc.) on Intel XPU (e.g., local PC with iGPU and NPU, discrete GPU such as Arc, Flex and Max); seamlessly integrate with llama.cpp, Ollama, HuggingFace, LangChain, LlamaIndex, vLLM, GraphRAG, DeepSpeed, Axolotl, etc.

Stars: 6919

IPEX-LLM is a PyTorch library for running Large Language Models (LLMs) on Intel CPUs and GPUs with very low latency. It provides seamless integration with various LLM frameworks and tools, including llama.cpp, ollama, Text-Generation-WebUI, HuggingFace transformers, and more. IPEX-LLM has been optimized and verified on over 50 LLM models, including LLaMA, Mistral, Mixtral, Gemma, LLaVA, Whisper, ChatGLM, Baichuan, Qwen, and RWKV. It supports a range of low-bit inference formats, including INT4, FP8, FP4, INT8, INT2, FP16, and BF16, as well as finetuning capabilities for LoRA, QLoRA, DPO, QA-LoRA, and ReLoRA. IPEX-LLM is actively maintained and updated with new features and optimizations, making it a valuable tool for researchers, developers, and anyone interested in exploring and utilizing LLMs.

README:

[!IMPORTANT]

bigdl-llm has now become ipex-llm (see the migration guide here); you may find the original BigDL project here.


IPEX-LLM is an LLM acceleration library for Intel GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max), NPU and CPU [1].

[!NOTE]

- It is built on top of the excellent work of llama.cpp, transformers, bitsandbytes, vLLM, qlora, AutoGPTQ, AutoAWQ, etc.

- It provides seamless integration with llama.cpp, Ollama, HuggingFace transformers, LangChain, LlamaIndex, vLLM, Text-Generation-WebUI, DeepSpeed-AutoTP, FastChat, Axolotl, HuggingFace PEFT, HuggingFace TRL, AutoGen, ModelScope, etc.

- 70+ models have been optimized/verified on ipex-llm (e.g., Llama, Phi, Mistral, Mixtral, Whisper, Qwen, MiniCPM, Qwen-VL, MiniCPM-V and more), with state-of-the-art LLM optimizations, XPU acceleration and low-bit (FP8/FP6/FP4/INT4) support; see the complete list here.

- [2025/01] We added the guide for running ipex-llm on Intel Arc B580 GPU.

- [2024/12] We added support for running Ollama 0.4.6 on Intel GPU.

- [2024/12] We added both Python and C++ support for Intel Core Ultra NPU (including 100H, 200V and 200K series).

- [2024/11] We added support for running vLLM 0.6.2 on Intel Arc GPUs.

More updates

- [2024/07] We added support for running Microsoft's GraphRAG using local LLM on Intel GPU; see the quickstart guide here.

- [2024/07] We added extensive support for Large Multimodal Models, including StableDiffusion, Phi-3-Vision, Qwen-VL, and more.

- [2024/07] We added FP6 support on Intel GPU.

- [2024/06] We added experimental NPU support for Intel Core Ultra processors; see the examples here.

- [2024/06] We added extensive support for pipeline parallel inference, which makes it easy to run large LLMs using 2 or more Intel GPUs (such as Arc).

- [2024/06] We added support for running RAGFlow with ipex-llm on Intel GPU.

- [2024/05] ipex-llm now supports Axolotl for LLM finetuning on Intel GPU; see the quickstart here.

- [2024/05] You can now easily run ipex-llm inference, serving and finetuning using the Docker images.

- [2024/05] You can now install ipex-llm on Windows using just "one command".

- [2024/04] You can now run Open WebUI on Intel GPU using ipex-llm; see the quickstart here.

- [2024/04] You can now run Llama 3 on Intel GPU using llama.cpp and ollama with ipex-llm; see the quickstart here.

- [2024/04] ipex-llm now supports Llama 3 on both Intel GPU and CPU.

- [2024/04] ipex-llm now provides a C++ interface, which can be used as an accelerated backend for running llama.cpp and ollama on Intel GPU.

- [2024/03] bigdl-llm has now become ipex-llm (see the migration guide here); you may find the original BigDL project here.

- [2024/02] ipex-llm now supports directly loading models from ModelScope (魔搭).

- [2024/02] ipex-llm added initial INT2 support (based on the llama.cpp IQ2 mechanism), which makes it possible to run large LLMs (e.g., Mixtral-8x7B) on Intel GPU with 16GB VRAM.

- [2024/02] Users can now use ipex-llm through the Text-Generation-WebUI GUI.

- [2024/02] ipex-llm now supports Self-Speculative Decoding, which in practice brings ~30% speedup for FP16 and BF16 inference latency on Intel GPU and CPU respectively.

- [2024/02] ipex-llm now supports a comprehensive list of LLM finetuning methods on Intel GPU (including LoRA, QLoRA, DPO, QA-LoRA and ReLoRA).

- [2024/01] Using ipex-llm QLoRA, we managed to finetune LLaMA2-7B in 21 minutes and LLaMA2-70B in 3.14 hours on 8 Intel Max 1550 GPUs for Stanford-Alpaca (see the blog here).

- [2023/12] ipex-llm now supports ReLoRA (see "ReLoRA: High-Rank Training Through Low-Rank Updates").

- [2023/12] ipex-llm now supports Mixtral-8x7B on both Intel GPU and CPU.

- [2023/12] ipex-llm now supports QA-LoRA (see "QA-LoRA: Quantization-Aware Low-Rank Adaptation of Large Language Models").

- [2023/12] ipex-llm now supports FP8 and FP4 inference on Intel GPU.

- [2023/11] Initial support for directly loading GGUF, AWQ and GPTQ models into ipex-llm is available.

- [2023/11] ipex-llm now supports vLLM continuous batching on both Intel GPU and CPU.

- [2023/10] ipex-llm now supports QLoRA finetuning on both Intel GPU and CPU.

- [2023/10] ipex-llm now supports FastChat serving on both Intel CPU and GPU.

- [2023/09] ipex-llm now supports Intel GPU (including iGPU, Arc, Flex and MAX).

- [2023/09] The ipex-llm tutorial is released.
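The INT2 item above says INT2 makes it possible to run Mixtral-8x7B on a 16GB GPU. Weights-only arithmetic shows why: bytes ≈ parameters × bits per weight / 8. The sketch below assumes that Mixtral-8x7B has about 46.7B total parameters. It also uses nominal bit widths and ignores per-block scales, any layers kept in higher precision, the KV cache and activations. Real usage is therefore higher, and these numbers are only a lower bound. `weight_gb` and `BITS_PER_WEIGHT` are hypothetical helpers, not ipex-llm APIs.

```python
# Nominal storage bits per weight for formats mentioned in this README
# (assumption: real formats add per-block scales on top of these).
BITS_PER_WEIGHT = {
    "fp16": 16, "bf16": 16, "fp8": 8, "int8": 8,
    "fp6": 6, "int4": 4, "fp4": 4, "int2": 2,
}

# Approximate total parameter count of Mixtral-8x7B (all experts included).
MIXTRAL_8X7B_PARAMS = 46.7e9


def weight_gb(num_params: float, fmt: str) -> float:
    """Weights-only footprint in decimal GB (1 GB = 1e9 bytes).

    Ignores quantization scales, higher-precision layers, the KV cache and
    activations, so it is a lower bound on real memory use.
    """
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    for fmt in ("fp16", "int8", "int4", "int2"):
        gb = weight_gb(MIXTRAL_8X7B_PARAMS, fmt)
        verdict = "fits" if gb < 16 else "does not fit"
        print(f"{fmt:>5}: {gb:6.1f} GB of weights -> {verdict} in 16 GB VRAM")
```

At 4 bits the weights alone already exceed 16 GB. At 2 bits they leave a few gigabytes of headroom, which is consistent with the news item.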

See demos of running local LLMs on Intel Core Ultra iGPU, Intel Core Ultra NPU, single-card Arc GPU, or multi-card Arc GPUs using ipex-llm below.

| Intel Core Ultra (Series 1) iGPU | Intel Core Ultra (Series 2) NPU | Intel Arc dGPU | 2-Card Intel Arc dGPUs |
|---|---|---|---|
| Ollama (Mistral-7B Q4_K) | HuggingFace (Llama3.2-3B SYM_INT4) | Text-Generation-WebUI (Llama3-8B FP8) | FastChat (Qwen1.5-32B FP6) |

See the token generation speed on Intel Core Ultra and Intel Arc GPU [1] (and refer to [2][3][4] for more details).

You may follow the Benchmarking Guide to run the ipex-llm performance benchmark yourself.
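Token generation speed is commonly split into two numbers. The first-token latency is dominated by processing the prompt. The average next-token latency reflects steady-state decoding. The sketch below derives both from wall-clock timestamps. `latency_stats` is a hypothetical helper, not part of ipex-llm. We assume, rather than confirm, that the Benchmarking Guide reports comparable first-token and next-token metrics.

```python
from typing import List, Tuple


def latency_stats(start: float, token_times: List[float]) -> Tuple[float, float, float]:
    """Return (first-token latency ms, mean next-token latency ms, decode tokens/s).

    `start` is when the request was issued; `token_times[i]` is when the i-th
    generated token became available (both in seconds, same clock).
    """
    if not token_times:
        raise ValueError("need at least one generated token")
    first_ms = (token_times[0] - start) * 1000.0
    if len(token_times) == 1:
        # No decode steps after the first token, so next-token stats are undefined.
        return first_ms, float("nan"), float("nan")
    gaps = [b - a for a, b in zip(token_times, token_times[1:])]
    next_ms = sum(gaps) / len(gaps) * 1000.0
    return first_ms, next_ms, 1000.0 / next_ms


if __name__ == "__main__":
    # Made-up timestamps, for illustration only.
    first, nxt, tps = latency_stats(0.0, [0.5, 0.5625, 0.625, 0.6875])
    print(f"1st token: {first:.1f} ms, next tokens: {nxt:.1f} ms/token ({tps:.1f} tokens/s)")
```

Keeping the two numbers separate avoids hiding a slow prompt-processing phase behind a fast decode rate, or the reverse.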

Please see the perplexity results below (lower is better; tested on the Wikitext dataset using the script here).

| Model | sym_int4 | q4_k | fp6 | fp8_e5m2 | fp8_e4m3 | fp16 |
|---|---|---|---|---|---|---|
| Llama-2-7B-chat-hf | 6.364 | 6.218 | 6.092 | 6.180 | 6.098 | 6.096 |
| Mistral-7B-Instruct-v0.2 | 5.365 | 5.320 | 5.270 | 5.273 | 5.246 | 5.244 |
| Baichuan2-7B-chat | 6.734 | 6.727 | 6.527 | 6.539 | 6.488 | 6.508 |
| Qwen1.5-7B-chat | 8.865 | 8.816 | 8.557 | 8.846 | 8.530 | 8.607 |
| Llama-3.1-8B-Instruct | 6.705 | 6.566 | 6.338 | 6.383 | 6.325 | 6.267 |
| gemma-2-9b-it | 7.541 | 7.412 | 7.269 | 7.380 | 7.268 | 7.270 |
| Baichuan2-13B-Chat | 6.313 | 6.160 | 6.070 | 6.145 | 6.086 | 6.031 |
| Llama-2-13b-chat-hf | 5.449 | 5.422 | 5.341 | 5.384 | 5.332 | 5.329 |
| Qwen1.5-14B-Chat | 7.529 | 7.520 | 7.367 | 7.504 | 7.297 | 7.334 |
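The raw perplexities are easier to compare as relative changes against the fp16 baseline. The sketch below copies the values from the perplexity table above and computes (ppl_fmt - ppl_fp16) / ppl_fp16 for each model. The dictionary layout and `relative_increase` are illustrative names, not part of ipex-llm.

```python
# Wikitext perplexity values copied from the table above (lower is better).
FORMATS = ("sym_int4", "q4_k", "fp6", "fp8_e5m2", "fp8_e4m3", "fp16")
RAW = {
    "Llama-2-7B-chat-hf":       (6.364, 6.218, 6.092, 6.180, 6.098, 6.096),
    "Mistral-7B-Instruct-v0.2": (5.365, 5.320, 5.270, 5.273, 5.246, 5.244),
    "Baichuan2-7B-chat":        (6.734, 6.727, 6.527, 6.539, 6.488, 6.508),
    "Qwen1.5-7B-chat":          (8.865, 8.816, 8.557, 8.846, 8.530, 8.607),
    "Llama-3.1-8B-Instruct":    (6.705, 6.566, 6.338, 6.383, 6.325, 6.267),
    "gemma-2-9b-it":            (7.541, 7.412, 7.269, 7.380, 7.268, 7.270),
    "Baichuan2-13B-Chat":       (6.313, 6.160, 6.070, 6.145, 6.086, 6.031),
    "Llama-2-13b-chat-hf":      (5.449, 5.422, 5.341, 5.384, 5.332, 5.329),
    "Qwen1.5-14B-Chat":         (7.529, 7.520, 7.367, 7.504, 7.297, 7.334),
}
PPL = {model: dict(zip(FORMATS, values)) for model, values in RAW.items()}


def relative_increase(model: str, fmt: str, baseline: str = "fp16") -> float:
    """Fractional perplexity change of `fmt` vs `baseline` (negative means lower)."""
    base = PPL[model][baseline]
    return (PPL[model][fmt] - base) / base


if __name__ == "__main__":
    for fmt in FORMATS[:-1]:
        worst = max(relative_increase(m, fmt) for m in PPL)
        print(f"{fmt:>9}: worst-case change vs fp16 = {worst:+.2%}")
```

In every row sym_int4 has the highest perplexity. A few low-bit results, for example fp8_e4m3 on Qwen1.5-7B-chat, land slightly below the fp16 baseline, so the relative change can be negative.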

- Arc B580: running ipex-llm on Intel Arc B580 GPU for Ollama, llama.cpp, PyTorch, HuggingFace, etc.

- NPU: running ipex-llm on Intel NPU in both Python and C++

- llama.cpp: running llama.cpp (using the C++ interface of ipex-llm) on Intel GPU

- Ollama: running ollama (using the C++ interface of ipex-llm) on Intel GPU

- PyTorch/HuggingFace: running PyTorch, HuggingFace, LangChain, LlamaIndex, etc. (using the Python interface of ipex-llm) on Intel GPU for Windows and Linux

- vLLM: running ipex-llm in vLLM on both Intel GPU and CPU

- FastChat: running ipex-llm in FastChat serving on both Intel GPU and CPU

- Serving on multiple Intel GPUs: running ipex-llm serving on multiple Intel GPUs by leveraging DeepSpeed AutoTP and FastAPI

- Text-Generation-WebUI: running ipex-llm in oobabooga WebUI

- Axolotl: running ipex-llm in Axolotl for LLM finetuning

- Benchmarking: running (latency and throughput) benchmarks for ipex-llm on Intel CPU and GPU
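The following is a minimal sketch of the PyTorch/HuggingFace path listed above. It loads a model through ipex-llm's transformers-style `AutoModelForCausalLM` in a low-bit format and generates on an Intel GPU (the `"xpu"` device). `generate_on_xpu` and `ALLOWED_LOW_BIT` are hypothetical names. The allowed strings are copied from the columns of the perplexity table above. We assume they are valid `load_in_low_bit` values, but ipex-llm accepts other formats as well, so please check its API documentation. Running the function itself requires ipex-llm with XPU support and a model path.

```python
# Formats taken from the perplexity table; a hypothetical guard, not ipex-llm's own list.
ALLOWED_LOW_BIT = ("sym_int4", "q4_k", "fp6", "fp8_e5m2", "fp8_e4m3", "fp16")


def generate_on_xpu(model_path: str, prompt: str, low_bit: str = "sym_int4",
                    max_new_tokens: int = 32) -> str:
    """Load `model_path` with ipex-llm low-bit optimizations and generate on Intel GPU."""
    if low_bit not in ALLOWED_LOW_BIT:
        raise ValueError(f"unsupported low_bit {low_bit!r}; expected one of {ALLOWED_LOW_BIT}")
    # Deferred imports so this module can be imported without ipex-llm installed.
    import torch
    from transformers import AutoTokenizer
    from ipex_llm.transformers import AutoModelForCausalLM

    # Weights are converted to the requested low-bit format while loading.
    model = AutoModelForCausalLM.from_pretrained(
        model_path, load_in_low_bit=low_bit, trust_remote_code=True)
    model = model.to("xpu")  # move the optimized model to the Intel GPU
    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

    with torch.inference_mode():
        input_ids = tokenizer.encode(prompt, return_tensors="pt").to("xpu")
        output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Validating the format string before the heavy imports makes a typo fail immediately, instead of failing after a long model download.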

- GPU Inference in C++: running llama.cpp, ollama, etc., with ipex-llm on Intel GPU

- GPU Inference in Python: running HuggingFace transformers, LangChain, LlamaIndex, ModelScope, etc. with ipex-llm on Intel GPU

- vLLM on GPU: running vLLM serving with ipex-llm on Intel GPU

- vLLM on CPU: running vLLM serving with ipex-llm on Intel CPU

- FastChat on GPU: running FastChat serving with ipex-llm on Intel GPU

- VSCode on GPU: running and developing ipex-llm applications in Python using VSCode on Intel GPU

- GraphRAG: running Microsoft's GraphRAG using a local LLM with ipex-llm

- RAGFlow: running RAGFlow (an open-source RAG engine) with ipex-llm

- LangChain-Chatchat: running LangChain-Chatchat (Knowledge Base QA using a RAG pipeline) with ipex-llm

- Coding copilot: running Continue (coding copilot in VSCode) with ipex-llm

- Open WebUI: running Open WebUI with ipex-llm

- PrivateGPT: running PrivateGPT to interact with documents with ipex-llm

- Dify platform: running ipex-llm in Dify (production-ready LLM app development platform)

- Windows GPU: installing ipex-llm on Windows with Intel GPU

- Linux GPU: installing ipex-llm on Linux with Intel GPU

- For more details, please refer to the full installation guide


- INT4 inference: INT4 LLM inference on Intel GPU and CPU

- FP8/FP6/FP4 inference: FP8, FP6 and FP4 LLM inference on Intel GPU

- INT8 inference: INT8 LLM inference on Intel GPU and CPU

- INT2 inference: INT2 LLM inference (based on llama.cpp IQ2 mechanism) on Intel GPU
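To make the "sym" in formats such as sym_int4 concrete, the sketch below shows generic symmetric 4-bit block quantization. A block of weights shares one scale (max |w| / 7), and each weight is stored as a signed integer code in [-7, 7] with no zero-point offset. This is a textbook illustration under that assumption. It is not ipex-llm's actual kernel, block size, or storage layout. `quantize_sym_int4` and `dequantize_sym_int4` are hypothetical names.

```python
from typing import List, Tuple


def quantize_sym_int4(block: List[float]) -> Tuple[float, List[int]]:
    """Symmetric 4-bit quantization of one block of weights with a shared scale.

    Codes are signed integers in [-7, 7]; zero always maps to code 0 because a
    symmetric scheme uses no zero-point offset.
    """
    max_abs = max(abs(x) for x in block)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Clamp defensively; with this scale |x / scale| <= 7 up to rounding error.
    codes = [max(-7, min(7, round(x / scale))) for x in block]
    return scale, codes


def dequantize_sym_int4(scale: float, codes: List[int]) -> List[float]:
    """Reconstruct approximate weights from the scale and 4-bit codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.42, -1.3, 0.05, 0.0, 0.9, -0.27, 1.12, -0.66]
    scale, codes = quantize_sym_int4(weights)
    approx = dequantize_sym_int4(scale, codes)
    print("scale:", scale)
    print("codes:", codes)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, approx)))
```

Each weight now needs 4 bits plus a share of one scale per block, and the rounding error per weight is at most half the scale.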

- FP16 LLM inference on Intel GPU, with possible self-speculative decoding optimization

- BF16 LLM inference on Intel CPU, with possible self-speculative decoding optimization
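Speculative decoding, the idea behind the self-speculative optimization mentioned above, works in two steps. A cheap draft model proposes several tokens, and the full model then verifies them, keeping the longest agreeing prefix plus one corrected token. The sketch below implements the generic greedy draft-then-verify loop over toy integer "models". In ipex-llm's self-speculative variant the draft is derived from the model itself. Here the draft is just a stand-in function, and `toy_target` and `toy_draft` are hypothetical. Real implementations verify all drafted tokens in one batched forward pass. This toy calls the target once per position for clarity.

```python
from typing import Callable, List, Tuple

# A toy "model": maps a token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Plain autoregressive greedy decoding: one model call per new token."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(model(seq))
    return seq


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       n_new: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy draft-then-verify decoding; returns (tokens, verification rounds).

    Output always equals greedy_decode(target, ...), whatever the draft does.
    """
    seq = list(prompt)
    goal = len(prompt) + n_new
    rounds = 0
    while len(seq) < goal:
        rounds += 1
        # 1. Cheaply propose up to k tokens with the draft model.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2. Accept the prefix the target agrees with; at the first mismatch,
        #    substitute the target's own token and start a new round.
        for i, tok in enumerate(proposal):
            expected = target(seq + proposal[:i])
            if expected != tok:
                seq.extend(proposal[:i])
                seq.append(expected)
                break
        else:
            seq.extend(proposal)
    return seq, rounds


def toy_target(seq: List[int]) -> int:
    """Hypothetical deterministic 'full model'."""
    return (sum(seq) * 31 + 7) % 50


def toy_draft(seq: List[int]) -> int:
    """Hypothetical draft that is wrong whenever the context length is a multiple of 5."""
    guess = toy_target(seq)
    return guess if len(seq) % 5 else (guess + 1) % 50


if __name__ == "__main__":
    out, rounds = speculative_decode(toy_target, toy_draft, [1, 2, 3], n_new=12)
    assert out == greedy_decode(toy_target, [1, 2, 3], 12)
    print(out, "verification rounds:", rounds)
```

The speedup comes from the target needing fewer sequential rounds than tokens whenever the draft is usually right, while the output stays identical to plain greedy decoding.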

- Low-bit models: saving and loading ipex-llm low-bit models (INT4/FP4/FP6/INT8/FP8/FP16/etc.)

- GGUF: directly loading GGUF models into ipex-llm

- AWQ: directly loading AWQ models into ipex-llm

- GPTQ: directly loading GPTQ models into ipex-llm


- Tutorials

Over 70 models have been optimized/verified on ipex-llm, including LLaMA/LLaMA2, Mistral, Mixtral, Gemma, LLaVA, Whisper, ChatGLM2/ChatGLM3, Baichuan/Baichuan2, Qwen/Qwen-1.5, InternLM and more; see the list below.

| Model | CPU Example | GPU Example | NPU Example |
|---|---|---|---|
| LLaMA | link1, link2 | link | |
| LLaMA 2 | link1, link2 | link | Python link, C++ link |
| LLaMA 3 | link | link | Python link, C++ link |
| LLaMA 3.1 | link | link | |
| LLaMA 3.2 | link | Python link, C++ link | |
| LLaMA 3.2-Vision | link | ||
| ChatGLM | link | ||
| ChatGLM2 | link | link | |
| ChatGLM3 | link | link | |
| GLM-4 | link | link | |
| GLM-4V | link | link | |
| GLM-Edge | link | Python link | |
| GLM-Edge-V | link | ||
| Mistral | link | link | |
| Mixtral | link | link | |
| Falcon | link | link | |
| MPT | link | link | |
| Dolly-v1 | link | link | |
| Dolly-v2 | link | link | |
| Replit Code | link | link | |
| RedPajama | link1, link2 | ||
| Phoenix | link1, link2 | ||
| StarCoder | link1, link2 | link | |
| Baichuan | link | link | |
| Baichuan2 | link | link | Python link |
| InternLM | link | link | |
| InternVL2 | link | ||
| Qwen | link | link | |
| Qwen1.5 | link | link | |
| Qwen2 | link | link | Python link, C++ link |
| Qwen2.5 | link | Python link, C++ link | |
| Qwen-VL | link | link | |
| Qwen2-VL | link | ||
| Qwen2-Audio | link | ||
| Aquila | link | link | |
| Aquila2 | link | link | |
| MOSS | link | ||
| Whisper | link | link | |
| Phi-1_5 | link | link | |
| Flan-t5 | link | link | |
| LLaVA | link | link | |
| CodeLlama | link | link | |
| Skywork | link | ||
| InternLM-XComposer | link | ||
| WizardCoder-Python | link | ||
| CodeShell | link | ||
| Fuyu | link | ||
| Distil-Whisper | link | link | |
| Yi | link | link | |
| BlueLM | link | link | |
| Mamba | link | link | |
| SOLAR | link | link | |
| Phixtral | link | link | |
| InternLM2 | link | link | |
| RWKV4 | link | ||
| RWKV5 | link | ||
| Bark | link | link | |
| SpeechT5 | link | ||
| DeepSeek-MoE | link | ||
| Ziya-Coding-34B-v1.0 | link | ||
| Phi-2 | link | link | |
| Phi-3 | link | link | |
| Phi-3-vision | link | link | |
| Yuan2 | link | link | |
| Gemma | link | link | |
| Gemma2 | link | ||
| DeciLM-7B | link | link | |
| Deepseek | link | link | |
| StableLM | link | link | |
| CodeGemma | link | link | |
| Command-R/cohere | link | link | |
| CodeGeeX2 | link | link | |
| MiniCPM | link | link | Python link, C++ link |
| MiniCPM3 | link | ||
| MiniCPM-V | link | ||
| MiniCPM-V-2 | link | link | |
| MiniCPM-Llama3-V-2_5 | link | Python link | |
| MiniCPM-V-2_6 | link | link | Python link |
| StableDiffusion | link | ||
| Bce-Embedding-Base-V1 | Python link | ||
| Speech_Paraformer-Large | Python link |

- Please report a bug or raise a feature request by opening a GitHub Issue

- Please report a vulnerability by opening a draft GitHub Security Advisory

[1] Performance varies by use, configuration and other factors. ipex-llm may not optimize to the same degree for non-Intel products. Learn more at www.Intel.com/PerformanceIndex.


Alternative AI tools for ipex-llm

Similar Open Source Tools


ipex-llm

The `ipex-llm` repository is an LLM acceleration library designed for Intel GPU, NPU, and CPU. It provides seamless integration with various models and tools like llama.cpp, Ollama, HuggingFace transformers, LangChain, LlamaIndex, vLLM, Text-Generation-WebUI, DeepSpeed-AutoTP, FastChat, Axolotl, and more. The library offers optimizations for over 70 models, XPU acceleration, and support for low-bit (FP8/FP6/FP4/INT4) operations. Users can run different models on Intel GPUs, NPU, and CPUs with support for various features like finetuning, inference, serving, and benchmarking.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

runanywhere-sdks

RunAnywhere is an on-device AI tool for mobile apps that allows users to run LLMs, speech-to-text, text-to-speech, and voice assistant features locally, ensuring privacy, offline functionality, and fast performance. The tool provides a range of AI capabilities without relying on cloud services, reducing latency and ensuring that no data leaves the device. RunAnywhere offers SDKs for Swift (iOS/macOS), Kotlin (Android), React Native, and Flutter, making it easy for developers to integrate AI features into their mobile applications. The tool supports various models for LLM, speech-to-text, and text-to-speech, with detailed documentation and installation instructions available for each platform.

swift

SWIFT (Scalable lightWeight Infrastructure for Fine-Tuning) supports training, inference, evaluation and deployment of nearly **200 LLMs and MLLMs** (multimodal large models). Developers can directly apply our framework to their own research and production environments to realize the complete workflow from model training and evaluation to application. In addition to supporting the lightweight training solutions provided by [PEFT](https://github.com/huggingface/peft), we also provide a complete **Adapters library** to support the latest training techniques such as NEFTune, LoRA+, LLaMA-PRO, etc. This adapter library can be used directly in your own custom workflow without our training scripts. To facilitate use by users unfamiliar with deep learning, we provide a Gradio web-ui for controlling training and inference, as well as accompanying deep learning courses and best practices for beginners. Additionally, we are expanding capabilities for other modalities. Currently, we support full-parameter training and LoRA training for AnimateDiff.

DownEdit

DownEdit is a powerful program that allows you to download videos from various social media platforms such as TikTok, Douyin, Kuaishou, and more. With DownEdit, you can easily download videos from user profiles and edit them in bulk. You have the option to flip the videos horizontally or vertically throughout the entire directory with just a single click. Stay tuned for more exciting features coming soon!

langfuse

Langfuse is a powerful tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can: * **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse. * **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards & data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse. * **Test:** Track and test app behaviour before deploying a new version, test expected in and output pairs and benchmark performance before deploying, and track versions and releases in your application. Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

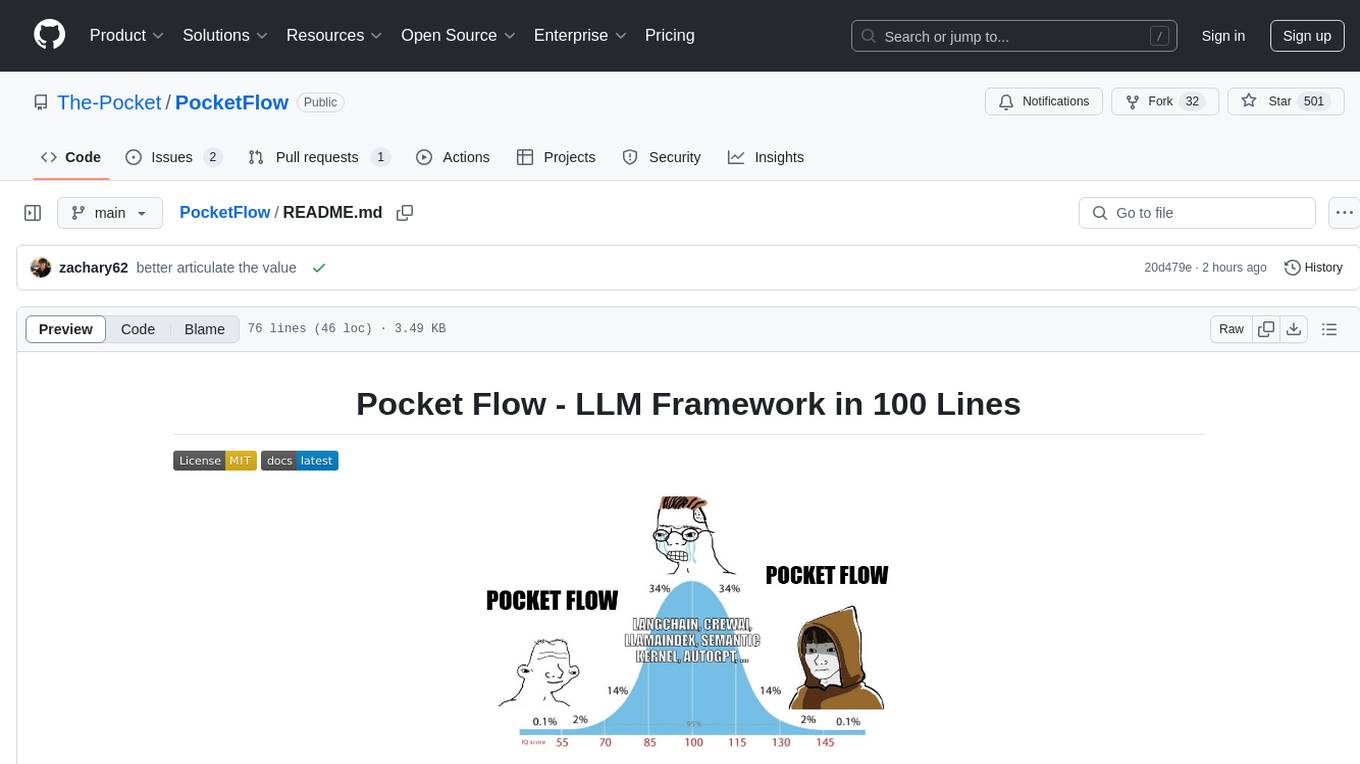

PocketFlow

Pocket Flow is a 100-line minimalist LLM framework designed for (Multi-)Agents, Workflow, RAG, etc. It provides a core abstraction for LLM projects by focusing on computation and communication through a graph structure and shared store. The framework aims to support the development of LLM Agents, such as Cursor AI, by offering a minimal and low-level approach that is well-suited for understanding and usage. Users can install Pocket Flow via pip or by copying the source code, and detailed documentation is available on the project website.

DownEdit

DownEdit is a fast and powerful program for downloading and editing videos from platforms like TikTok, Douyin, and Kuaishou. It allows users to effortlessly grab videos, make bulk edits, and utilize advanced AI features for generating videos, images, and sounds in bulk. The tool offers features like video, photo, and sound editing, downloading videos without watermarks, bulk AI generation, and AI editing for content enhancement.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers model zoo with different configurations for visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

ReGraph

ReGraph is a decentralized AI compute marketplace that connects hardware providers with developers who need inference and training resources. It democratizes access to AI computing power by creating a global network of distributed compute nodes. It is cost-effective, decentralized, easy to integrate, supports multiple models, and offers pay-as-you-go pricing.

For similar tasks

maxtext

MaxText is a high-performance, highly scalable, open-source LLM written in pure Python/Jax and targeting Google Cloud TPUs and GPUs for training and inference. MaxText achieves high MFUs and scales from single host to very large clusters while staying simple and "optimization-free" thanks to the power of Jax and the XLA compiler. MaxText aims to be a launching off point for ambitious LLM projects both in research and production. We encourage users to start by experimenting with MaxText out of the box and then fork and modify MaxText to meet their needs.



llm-twin-course

The LLM Twin Course is a free, end-to-end framework for building production-ready LLM systems. It teaches you how to design, train, and deploy a production-ready LLM twin of yourself powered by LLMs, vector DBs, and LLMOps good practices. The course is split into 11 hands-on written lessons and the open-source code you can access on GitHub. You can read everything and try out the code at your own pace.

Awesome-LLM-Inference

Awesome-LLM-Inference: A curated list of 📙Awesome LLM Inference Papers with Codes, check 📖Contents for more details. This repo is still updated frequently ~ 👨💻 Welcome to star ⭐️ or submit a PR to this repo!

lingo

Lingo is a lightweight ML model proxy that runs on Kubernetes, allowing you to run text-completion and embedding servers without changing OpenAI client code. It supports serving OSS LLMs, is compatible with OpenAI API, plug-and-play with messaging systems, scales from zero based on load, and has zero dependencies. Namespaced with no cluster privileges needed.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

llm-finetuning

llm-finetuning is a repository that provides a serverless twist to the popular axolotl fine-tuning library using Modal's serverless infrastructure. It allows users to quickly fine-tune any LLM model with state-of-the-art optimizations like Deepspeed ZeRO, LoRA adapters, Flash attention, and Gradient checkpointing. The repository simplifies the fine-tuning process by not exposing all CLI arguments, instead allowing users to specify options in a config file. It supports efficient training and scaling across multiple GPUs, making it suitable for production-ready fine-tuning jobs.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.