Awesome-LLM-Inference

📖A curated list of Awesome LLM/VLM Inference Papers with codes: WINT8/4, Flash-Attention, Paged-Attention, Parallelism, etc. 🎉🎉

Stars: 3544

Awesome-LLM-Inference: A curated list of 📙Awesome LLM Inference Papers with Codes, check 📖Contents for more details. This repo is still updated frequently ~ 👨💻 Welcome to star ⭐️ or submit a PR to this repo!

For Awesome Diffusion Inference, please check 📖Awesome-Diffusion-Inference.

@misc{Awesome-LLM-Inference@2024,
  title={Awesome-LLM-Inference: A curated list of Awesome LLM Inference Papers with codes},
  url={https://github.com/DefTruth/Awesome-LLM-Inference},
  note={Open-source software available at https://github.com/DefTruth/Awesome-LLM-Inference},
  author={DefTruth, liyucheng09 etc},
  year={2024}
}

Awesome LLM Inference for Beginners.pdf: 500 pages, FastServe, FlashAttention 1/2, FlexGen, FP8, LLM.int8(), PagedAttention, RoPE, SmoothQuant, WINT8/4, Continuous Batching, ZeroQuant 1/2/FP, AWQ etc.

- 📖Trending LLM/VLM Topics🔥🔥🔥

- 📖DeepSeek/MLA Topics🔥🔥🔥

- 📖DP/MP/PP/TP/SP/CP Parallelism🔥🔥🔥

- 📖Disaggregating Prefill and Decoding🔥🔥🔥

- 📖LLM Algorithmic/Eval Survey

- 📖LLM Train/Inference Framework/Design

- 📖Weight/Activation Quantize/Compress🔥

- 📖Continuous/In-flight Batching

- 📖IO/FLOPs-Aware/Sparse Attention🔥

- 📖KV Cache Scheduling/Quantize/Dropping🔥

- 📖Prompt/Context Compression🔥

- 📖Long Context Attention/KV Cache Optimization🔥🔥

- 📖Early-Exit/Intermediate Layer Decoding

- 📖Parallel Decoding/Sampling🔥

- 📖Structured Prune/KD/Weight Sparse

- 📖Mixture-of-Experts(MoE) LLM Inference🔥

- 📖CPU/NPU/FPGA/Mobile Inference

- 📖Non Transformer Architecture🔥

- 📖GEMM/Tensor Cores/WMMA/Parallel

- 📖VLM/Position Embed/Others

📖Trending LLM/VLM Topics

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2024.04 | 🔥🔥🔥[Open-Sora] Open-Sora: Democratizing Efficient Video Production for All(@hpcaitech) | [docs] | [Open-Sora] | ⭐️⭐️ |
| 2024.04 | 🔥🔥🔥[Open-Sora Plan] Open-Sora Plan: This project aims to reproduce Sora (OpenAI T2V model)(@PKU) | [report] | [Open-Sora-Plan] | ⭐️⭐️ |
| 2024.05 | 🔥🔥🔥[DeepSeek-V2] DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model(@DeepSeek-AI) | [pdf] | [DeepSeek-V2] | ⭐️⭐️ |
| 2024.05 | 🔥🔥[YOCO] You Only Cache Once: Decoder-Decoder Architectures for Language Models(@Microsoft) | [pdf] | [unilm-YOCO] | ⭐️⭐️ |
| 2024.06 | 🔥[Mooncake] Mooncake: A KVCache-centric Disaggregated Architecture for LLM Serving(@Moonshot AI) | [pdf] | [Mooncake] | ⭐️⭐️ |
| 2024.07 | 🔥🔥[FlashAttention-3] FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision(@TriDao etc) | [pdf] | [flash-attention] | ⭐️⭐️ |
| 2024.07 | 🔥🔥[MInference 1.0] MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention(@Microsoft) | [pdf] | [MInference 1.0] | ⭐️⭐️ |
| 2024.11 | 🔥🔥🔥[Star-Attention: ~11x speedup] Star Attention: Efficient LLM Inference over Long Sequences(@NVIDIA) | [pdf] | [Star-Attention] | ⭐️⭐️ |
| 2024.12 | 🔥🔥🔥[DeepSeek-V3] DeepSeek-V3 Technical Report(@deepseek-ai) | [pdf] | [DeepSeek-V3] | ⭐️⭐️ |
| 2025.01 | 🔥🔥🔥[MiniMax-Text-01] MiniMax-01: Scaling Foundation Models with Lightning Attention | [report] | [MiniMax-01] | ⭐️⭐️ |
| 2025.01 | 🔥🔥🔥[DeepSeek-R1] DeepSeek-R1 Technical Report(@deepseek-ai) | [pdf] | [DeepSeek-R1] | ⭐️⭐️ |

📖DeepSeek/Multi-head Latent Attention(MLA)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2024.05 | 🔥🔥🔥[DeepSeek-V2] DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model(@DeepSeek-AI) | [pdf] | [DeepSeek-V2] | ⭐️⭐️ |
| 2024.12 | 🔥🔥🔥[DeepSeek-V3] DeepSeek-V3 Technical Report(@deepseek-ai) | [pdf] | [DeepSeek-V3] | ⭐️⭐️ |
| 2025.01 | 🔥🔥🔥[DeepSeek-R1] DeepSeek-R1 Technical Report(@deepseek-ai) | [pdf] | [DeepSeek-R1] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[TransMLA] TransMLA: Multi-head Latent Attention Is All You Need(@PKU) | [pdf] | [TransMLA] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[DeepSeek-NSA] Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention(@deepseek-ai) | [pdf] | | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[FlashMLA] FlashMLA(@deepseek-ai) | | [FlashMLA] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[MHA2MLA] Towards Economical Inference: Enabling DeepSeek’s Multi-Head Latent Attention in Any Transformer-based LLMs(@fudan.edu.cn) | [pdf] | [MHA2MLA] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[DualPipe] DualPipe(@deepseek-ai) | | [DualPipe] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[DeepEP] DeepEP(@deepseek-ai) | | [DeepEP] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[DeepGEMM] DeepGEMM(@deepseek-ai) | | [DeepGEMM] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[EPLB] EPLB(@deepseek-ai) | | [EPLB] | ⭐️⭐️ |
| 2025.02 | 🔥🔥🔥[3FS] 3FS(@deepseek-ai) | | [3FS] | ⭐️⭐️ |
| 2025.03 | 🔥🔥🔥[Inference System] DeepSeek-V3/R1 Inference System Overview (@deepseek-ai) | [blog] | | ⭐️⭐️ |
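
The main serving win of MLA, as described for DeepSeek-V2/V3, is that each token caches one small compressed latent (plus a small decoupled RoPE key) instead of a full key and value per head. The arithmetic below is a minimal sketch of that per-token, per-layer cache size. The dimensions (128 heads of size 128, latent size 512, RoPE key size 64) are assumed DeepSeek-V2-like values for illustration; check the paper or model config for the exact numbers, and the function names are hypothetical.

```python
def mha_kv_elems_per_token(n_heads: int, head_dim: int) -> int:
    # Standard multi-head attention caches a full key and a full value vector per head.
    return 2 * n_heads * head_dim


def mla_kv_elems_per_token(latent_dim: int, rope_dim: int) -> int:
    # MLA caches one compressed KV latent shared by all heads, plus one decoupled RoPE key.
    return latent_dim + rope_dim


def cache_bytes(elems_per_token: int, n_layers: int, seq_len: int, bytes_per_elem: int = 2) -> int:
    # Total cache size for one sequence across all layers (2 bytes per element for BF16/FP16).
    return elems_per_token * n_layers * seq_len * bytes_per_elem


# Assumed DeepSeek-V2-like dimensions (illustrative, not read from a config file).
N_HEADS, HEAD_DIM, LATENT_DIM, ROPE_DIM = 128, 128, 512, 64

if __name__ == "__main__":
    mha = mha_kv_elems_per_token(N_HEADS, HEAD_DIM)
    mla = mla_kv_elems_per_token(LATENT_DIM, ROPE_DIM)
    print(f"per token per layer: MHA={mha} elems, MLA={mla} elems, ratio={mha / mla:.1f}x")
```

Under these assumptions MLA caches 576 elements per token per layer versus 32768 for full MHA with the same head count, roughly a 57x reduction; the trade-off is extra up-projection work at attention time.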

📖DP/MP/PP/TP/SP/CP Parallelism

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2019.10 | 🔥🔥[MP: ZeRO] DeepSpeed-ZeRO: Memory Optimizations Toward Training Trillion Parameter Models(@microsoft.com) | [pdf] | [deepspeed] | ⭐️⭐️ |
| 2020.05 | 🔥🔥[TP: Megatron-LM] Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism(@NVIDIA) | [pdf] | [Megatron-LM] | ⭐️⭐️ |
| 2022.05 | 🔥🔥[SP: Megatron-LM] Megatron-LM: Reducing Activation Recomputation in Large Transformer Models(@NVIDIA) | [pdf] | [Megatron-LM] | ⭐️⭐️ |
| 2023.05 | 🔥🔥[SP: BPT] Blockwise Parallel Transformer for Large Context Models(@UC Berkeley) | [pdf] | [RingAttention] | ⭐️⭐️ |
| 2023.10 | 🔥🔥[SP: Ring Attention] Ring Attention with Blockwise Transformers for Near-Infinite Context(@UC Berkeley) | [pdf] | [RingAttention] | ⭐️⭐️ |
| 2023.11 | 🔥🔥[SP: STRIPED ATTENTION] STRIPED ATTENTION: FASTER RING ATTENTION FOR CAUSAL TRANSFORMERS(@MIT etc) | [pdf] | [striped_attention] | ⭐️⭐️ |
| 2023.10 | 🔥🔥[SP: DEEPSPEED ULYSSES] DEEPSPEED ULYSSES: SYSTEM OPTIMIZATIONS FOR ENABLING TRAINING OF EXTREME LONG SEQUENCE TRANSFORMER MODELS(@microsoft.com) | [pdf] | [deepspeed] | ⭐️⭐️ |
| 2024.03 | 🔥🔥[CP: Megatron-LM] Megatron-LM: Context parallelism overview(@NVIDIA) | [docs] | [Megatron-LM] | ⭐️⭐️ |
| 2024.05 | 🔥🔥[SP: Unified Sequence Parallel (USP)] YunChang: A Unified Sequence Parallel (USP) Attention for Long Context LLM Model Training and Inference(@Tencent) | [pdf] | [long-context-attention] | ⭐️⭐️ |
| 2024.11 | 🔥🔥[CP: Meta] Context Parallelism for Scalable Million-Token Inference(@Meta Platforms, Inc) | [pdf] | | ⭐️⭐️ |
| 2024.11 | 🔥🔥[TP: Comm Compression] Communication Compression for Tensor Parallel LLM Inference(@recogni.com) | [pdf] | | ⭐️⭐️ |
| 2024.11 | 🔥🔥🔥[SP: Star-Attention, ~11x speedup] Star Attention: Efficient LLM Inference over Long Sequences(@NVIDIA) | [pdf] | [Star-Attention] | ⭐️⭐️ |
| 2024.12 | 🔥🔥[SP: TokenRing] TokenRing: An Efficient Parallelism Framework for Infinite-Context LLMs via Bidirectional Communication(@SJTU) | [pdf] | [token-ring] | ⭐️⭐️ |
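
Tensor parallelism in the Megatron-LM style splits a linear layer's weight matrix across devices so each device computes only part of the output. The snippet below is a single-process sketch of a column-parallel linear layer: the weight is sharded along its output columns, each simulated rank multiplies the full input by its shard, and concatenating the partial outputs (the role an all-gather plays on real GPUs) reproduces the unsharded result. All function names are hypothetical, and no real communication library is used.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain (n x k) @ (k x m) -> (n x m) matrix multiply.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def split_columns(w: Matrix, world_size: int) -> List[Matrix]:
    # Shard W along its output (column) dimension: one contiguous slice per rank.
    cols = len(w[0])
    if cols % world_size != 0:
        raise ValueError("output columns must divide evenly across ranks")
    step = cols // world_size
    return [[row[r * step:(r + 1) * step] for row in w] for r in range(world_size)]


def column_parallel_linear(x: Matrix, w: Matrix, world_size: int) -> Matrix:
    # Each simulated rank computes x @ W_r; concatenating along columns stands in for an all-gather.
    partials = [matmul(x, shard) for shard in split_columns(w, world_size)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
    print(column_parallel_linear(x, w, world_size=2), matmul(x, w))
```

In the Megatron-LM paper, a column-parallel layer is paired with a row-parallel one in the MLP block so the intermediate activation never has to be gathered, and only one all-reduce is needed at the end of the block in the forward pass.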

📖Disaggregating Prefill and Decoding

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2024.01 | 🔥🔥[DistServe] DistServe: Disaggregating Prefill and Decoding for Goodput-optimized Large Language Model Serving(@PKU) | [pdf] | [DistServe] | ⭐️⭐️ |
| 2024.06 | 🔥🔥[Mooncake] Mooncake: A KVCache-centric Disaggregated Architecture for LLM Serving(@Moonshot AI) | [pdf] | [Mooncake] | ⭐️⭐️ |
| 2024.12 | 🔥🔥[KVDirect] KVDirect: Distributed Disaggregated LLM Inference(@ByteDance) | [pdf] | | ⭐️ |
| 2025.01 | 🔥🔥[DeServe] DESERVE: TOWARDS AFFORDABLE OFFLINE LLM INFERENCE VIA DECENTRALIZATION(@Berkeley) | [pdf] | | ⭐️ |

📖LLM Algorithmic/Eval Survey

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2023.10 | [Evaluating] Evaluating Large Language Models: A Comprehensive Survey(@tju.edu.cn) | [pdf] | [Awesome-LLMs-Evaluation] | ⭐️ |
| 2023.11 | 🔥[Runtime Performance] Dissecting the Runtime Performance of the Training, Fine-tuning, and Inference of Large Language Models(@hkust-gz.edu.cn) | [pdf] | | ⭐️⭐️ |
| 2023.11 | [ChatGPT Anniversary] ChatGPT’s One-year Anniversary: Are Open-Source Large Language Models Catching up?(@e.ntu.edu.sg) | [pdf] | | ⭐️ |
| 2023.12 | [Algorithmic Survey] The Efficiency Spectrum of Large Language Models: An Algorithmic Survey(@Microsoft) | [pdf] | | ⭐️ |
| 2023.12 | [Security and Privacy] A Survey on Large Language Model (LLM) Security and Privacy: The Good, the Bad, and the Ugly(@Drexel University) | [pdf] | | ⭐️ |
| 2023.12 | 🔥[LLMCompass] A Hardware Evaluation Framework for Large Language Model Inference(@princeton.edu) | [pdf] | | ⭐️⭐️ |
| 2023.12 | 🔥[Efficient LLMs] Efficient Large Language Models: A Survey(@Ohio State University etc) | [pdf] | [Efficient-LLMs-Survey] | ⭐️⭐️ |
| 2023.12 | [Serving Survey] Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems(@Carnegie Mellon University) | [pdf] | | ⭐️⭐️ |
| 2024.01 | [Understanding LLMs] Understanding LLMs: A Comprehensive Overview from Training to Inference(@Shaanxi Normal University etc) | [pdf] | | ⭐️⭐️ |
| 2024.02 | [LLM-Viewer] LLM Inference Unveiled: Survey and Roofline Model Insights(@Zhihang Yuan etc) | [pdf] | [LLM-Viewer] | ⭐️⭐️ |
| 2024.07 | [Internal Consistency & Self-Feedback] Internal Consistency and Self-Feedback in Large Language Models: A Survey | [pdf] | [ICSF-Survey] | ⭐️⭐️ |
| 2024.09 | [Low-bit] A Survey of Low-bit Large Language Models: Basics, Systems, and Algorithms(@Beihang etc) | [pdf] | | ⭐️⭐️ |
| 2024.10 | [LLM Inference] LARGE LANGUAGE MODEL INFERENCE ACCELERATION: A COMPREHENSIVE HARDWARE PERSPECTIVE(@SJTU etc) | [pdf] | | ⭐️⭐️ |
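
Several of the surveys above, notably LLM-Viewer, reason about inference with the roofline model: a kernel's attainable throughput is the minimum of the hardware's peak FLOP/s and its arithmetic intensity (FLOPs per byte of memory traffic) times memory bandwidth. The sketch below applies that to a single linear layer. The hardware numbers are illustrative assumptions (roughly A100-class BF16 dense peak and HBM bandwidth), not measurements, and the byte count ignores caching and KV-cache traffic.

```python
from typing import Tuple


def linear_layer_cost(batch: int, d_in: int, d_out: int, bytes_per_elem: int = 2) -> Tuple[int, int]:
    """FLOPs and minimum DRAM bytes for Y = X @ W, with X: (batch, d_in) and W: (d_in, d_out)."""
    flops = 2 * batch * d_in * d_out  # one multiply and one add per multiply-accumulate
    # Read W and X once and write Y once.
    bytes_moved = bytes_per_elem * (d_in * d_out + batch * d_in + batch * d_out)
    return flops, bytes_moved


def roofline(flops: int, bytes_moved: int, peak_flops: float, mem_bw: float) -> Tuple[float, float, str]:
    """Return (arithmetic intensity, attainable FLOP/s, limiting resource)."""
    intensity = flops / bytes_moved  # FLOPs per byte
    attainable = min(peak_flops, intensity * mem_bw)
    bound = "memory" if intensity * mem_bw < peak_flops else "compute"
    return intensity, attainable, bound


# Assumed, illustrative hardware figures: substitute your accelerator's datasheet values.
PEAK_FLOPS = 312e12  # FLOP/s
MEM_BW = 2.0e12      # bytes/s

if __name__ == "__main__":
    for batch in (1, 256):
        ai, perf, bound = roofline(*linear_layer_cost(batch, 4096, 4096), PEAK_FLOPS, MEM_BW)
        print(f"batch={batch}: {ai:.1f} FLOP/B, {perf / 1e12:.1f} TFLOP/s attainable, {bound}-bound")
```

With these figures the ridge point is 312e12 / 2.0e12 = 156 FLOP/byte. A batch-1 decode step has an intensity of about 1 FLOP/byte and is memory-bound, while a batch of 256 crosses the ridge, which is one way to see why batching matters so much for decode throughput.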

📖LLM Train/Inference Framework/Design

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2020.05 | 🔥[Megatron-LM] Training Multi-Billion Parameter Language Models Using Model Parallelism(@NVIDIA) | [pdf] | [Megatron-LM] | ⭐️⭐️ |
| 2023.03 | [FlexGen] High-Throughput Generative Inference of Large Language Models with a Single GPU(@Stanford University etc) | [pdf] | [FlexGen] | ⭐️ |
| 2023.05 | [SpecInfer] Accelerating Generative Large Language Model Serving with Speculative Inference and Token Tree Verification(@Peking University etc) | [pdf] | [FlexFlow] | ⭐️ |
| 2023.05 | [FastServe] Fast Distributed Inference Serving for Large Language Models(@Peking University etc) | [pdf] | | ⭐️ |
| 2023.09 | 🔥[vLLM] Efficient Memory Management for Large Language Model Serving with PagedAttention(@UC Berkeley etc) | [pdf] | [vllm] | ⭐️⭐️ |
| 2023.09 | [StreamingLLM] EFFICIENT STREAMING LANGUAGE MODELS WITH ATTENTION SINKS(@Meta AI etc) | [pdf] | [streaming-llm] | ⭐️ |
| 2023.09 | [Medusa] Medusa: Simple Framework for Accelerating LLM Generation with Multiple Decoding Heads(@Tianle Cai etc) | [blog] | [Medusa] | ⭐️ |
| 2023.10 | 🔥[TensorRT-LLM] NVIDIA TensorRT LLM(@NVIDIA) | [docs] | [TensorRT-LLM] | ⭐️⭐️ |
| 2023.11 | 🔥[DeepSpeed-FastGen 2x vLLM?] DeepSpeed-FastGen: High-throughput Text Generation for LLMs via MII and DeepSpeed-Inference(@Microsoft) | [pdf] | [deepspeed-fastgen] | ⭐️⭐️ |
| 2023.12 | 🔥[PETALS] Distributed Inference and Fine-tuning of Large Language Models Over The Internet(@HSE University etc) | [pdf] | [petals] | ⭐️⭐️ |
| 2023.10 | [LightSeq] LightSeq: Sequence Level Parallelism for Distributed Training of Long Context Transformers(@UC Berkeley etc) | [pdf] | [LightSeq] | ⭐️ |
| 2023.12 | [PowerInfer] PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU(@SJTU) | [pdf] | [PowerInfer] | ⭐️ |
| 2024.01 | [inferflow] INFERFLOW: AN EFFICIENT AND HIGHLY CONFIGURABLE INFERENCE ENGINE FOR LARGE LANGUAGE MODELS(@Tencent AI Lab) | [pdf] | [inferflow] | ⭐️ |
| 2024.06 | 🔥[Mooncake] Mooncake: A KVCache-centric Disaggregated Architecture for LLM Serving(@Moonshot AI) | [pdf] | [Mooncake] | ⭐️⭐️ |
| 2023.06 | 🔥[LMDeploy] LMDeploy: LMDeploy is a toolkit for compressing, deploying, and serving LLMs(@InternLM) | [docs] | [lmdeploy] | ⭐️⭐️ |
| 2023.05 | 🔥[MLC-LLM] Universal LLM Deployment Engine with ML Compilation(@mlc-ai) | [docs] | [mlc-llm] | ⭐️⭐️ |
| 2023.08 | 🔥[LightLLM] LightLLM is a Python-based LLM (Large Language Model) inference and serving framework(@ModelTC) | [docs] | [lightllm] | ⭐️⭐️ |
| 2023.03 | 🔥[llama.cpp] llama.cpp: Inference of Meta's LLaMA model (and others) in pure C/C++(@ggerganov) | [docs] | [llama.cpp] | ⭐️⭐️ |
| 2024.02 | 🔥[flashinfer] FlashInfer: Kernel Library for LLM Serving(@flashinfer-ai) | [docs] | [flashinfer] | ⭐️⭐️ |
| 2024.07 | 🔥[DynamoLLM] DynamoLLM: Designing LLM Inference Clusters for Performance and Energy Efficiency(@Microsoft Azure Research) | [pdf] | | ⭐️ |
| 2024.08 | 🔥[NanoFlow] NanoFlow: Towards Optimal Large Language Model Serving Throughput(@University of Washington) | [pdf] | [Nanoflow] | ⭐️⭐️ |
| 2024.08 | 🔥[Decentralized LLM] Decentralized LLM Inference over Edge Networks with Energy Harvesting(@Padova) | [pdf] | | ⭐️ |
| 2024.11 | 🔥[SparseInfer] SparseInfer: Training-free Prediction of Activation Sparsity for Fast LLM Inference(@University of Seoul, etc) | [pdf] | | ⭐️ |

📖Continuous/In-flight Batching

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2022.07 | 🔥[Continuous Batching] Orca: A Distributed Serving System for Transformer-Based Generative Models(@Seoul National University etc) | [pdf] | | ⭐️⭐️ |
| 2023.10 | 🔥[In-flight Batching] NVIDIA TensorRT LLM Batch Manager(@NVIDIA) | [docs] | [TensorRT-LLM] | ⭐️⭐️ |
| 2023.11 | 🔥[DeepSpeed-FastGen 2x vLLM?] DeepSpeed-FastGen: High-throughput Text Generation for LLMs via MII and DeepSpeed-Inference(@Microsoft) | [blog] | [deepspeed-fastgen] | ⭐️⭐️ |
| 2023.11 | [Splitwise] Splitwise: Efficient Generative LLM Inference Using Phase Splitting(@Microsoft etc) | [pdf] | | ⭐️ |
| 2023.12 | [SpotServe] SpotServe: Serving Generative Large Language Models on Preemptible Instances(@cmu.edu etc) | [pdf] | [SpotServe] | ⭐️ |
| 2023.10 | [LightSeq] LightSeq: Sequence Level Parallelism for Distributed Training of Long Context Transformers(@UC Berkeley etc) | [pdf] | [LightSeq] | ⭐️ |
| 2024.05 | 🔥[vAttention] vAttention: Dynamic Memory Management for Serving LLMs without PagedAttention(@Microsoft Research India) | [pdf] | [vAttention] | ⭐️⭐️ |
| 2024.07 | 🔥🔥[vTensor] vTensor: Flexible Virtual Tensor Management for Efficient LLM Serving(@Shanghai Jiao Tong University etc) | [pdf] | [vTensor] | ⭐️⭐️ |
| 2024.08 | 🔥[Automatic Inference Engine Tuning] Towards SLO-Optimized LLM Serving via Automatic Inference Engine Tuning(@Nanjing University etc) | [pdf] | | ⭐️⭐️ |
| 2024.08 | 🔥[SJF Scheduling] Efficient LLM Scheduling by Learning to Rank(@UCSD etc) | [pdf] | | ⭐️⭐️ |
| 2024.12 | 🔥[BatchLLM] BatchLLM: Optimizing Large Batched LLM Inference with Global Prefix Sharing and Throughput-oriented Token Batching(@Microsoft) | [pdf] | | ⭐️⭐️ |
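
Continuous (in-flight) batching, introduced as iteration-level scheduling in Orca, re-forms the batch at every decode step: finished requests leave immediately and waiting requests take their slots, instead of the whole batch waiting for its longest member. The simulation below is a minimal sketch of that loop. `Request` and `continuous_batching` are hypothetical names; it assumes each request's output length is known up front (so the run is deterministic) and ignores prefill cost and KV-cache memory limits.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Request:
    rid: int
    max_new_tokens: int  # assumed known in advance for this simulation (must be >= 1)
    generated: int = 0


def continuous_batching(requests: List[Request], max_batch: int) -> List[List[int]]:
    """Simulate iteration-level scheduling; return the request ids decoded at each step."""
    waiting: Deque[Request] = deque(requests)
    running: List[Request] = []
    schedule: List[List[int]] = []
    while waiting or running:
        # Fill any free slots before every step, not only when the whole batch has drained.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        schedule.append([r.rid for r in running])
        # One decode iteration: every running request emits one token.
        for r in running:
            r.generated += 1
        # Retire finished requests right away so their slots are reused next iteration.
        running = [r for r in running if r.generated < r.max_new_tokens]
    return schedule


if __name__ == "__main__":
    reqs = [Request(0, 3), Request(1, 1), Request(2, 2)]
    print(continuous_batching(reqs, max_batch=2))
```

For these three requests the loop finishes in three iterations. With static batching, request 2 would wait until request 0 (the longest in the first batch) finished, for five iterations in total.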

📖Weight/Activation Quantize/Compress

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2022.06 | 🔥[ZeroQuant] Efficient and Affordable Post-Training Quantization for Large-Scale Transformers(@Microsoft) | [pdf] | [DeepSpeed] | ⭐️⭐️ |
| 2022.08 | [FP8-Quantization] FP8 Quantization: The Power of the Exponent(@Qualcomm AI Research) | [pdf] | [FP8-quantization] | ⭐️ |
| 2022.08 | [LLM.int8()] 8-bit Matrix Multiplication for Transformers at Scale(@Facebook AI Research etc) | [pdf] | [bitsandbytes] | ⭐️ |
| 2022.10 | 🔥[GPTQ] GPTQ: ACCURATE POST-TRAINING QUANTIZATION FOR GENERATIVE PRE-TRAINED TRANSFORMERS(@IST Austria etc) | [pdf] | [gptq] | ⭐️⭐️ |
| 2022.11 | 🔥[WINT8/4] Who Says Elephants Can’t Run: Bringing Large Scale MoE Models into Cloud Scale Production(@NVIDIA&Microsoft) | [pdf] | [FasterTransformer] | ⭐️⭐️ |
| 2022.11 | 🔥[SmoothQuant] Accurate and Efficient Post-Training Quantization for Large Language Models(@MIT etc) | [pdf] | [smoothquant] | ⭐️⭐️ |
| 2023.03 | [ZeroQuant-V2] Exploring Post-training Quantization in LLMs from Comprehensive Study to Low Rank Compensation(@Microsoft) | [pdf] | [DeepSpeed] | ⭐️ |
| 2023.06 | 🔥[AWQ] AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration(@MIT etc) | [pdf] | [llm-awq] | ⭐️⭐️ |
| 2023.06 | [SpQR] SpQR: A Sparse-Quantized Representation for Near-Lossless LLM Weight Compression(@University of Washington etc) | [pdf] | [SpQR] | ⭐️ |
| 2023.06 | [SqueezeLLM] SQUEEZELLM: DENSE-AND-SPARSE QUANTIZATION(@berkeley.edu) | [pdf] | [SqueezeLLM] | ⭐️ |
| 2023.07 | [ZeroQuant-FP] A Leap Forward in LLMs Post-Training W4A8 Quantization Using Floating-Point Formats(@Microsoft) | [pdf] | [DeepSpeed] | ⭐️ |
| 2023.09 | [KV Cache FP8 + WINT4] Exploration on LLM inference performance optimization(@HPC4AI) | [blog] | | ⭐️ |
| 2023.10 | [FP8-LM] FP8-LM: Training FP8 Large Language Models(@Microsoft etc) | [pdf] | [MS-AMP] | ⭐️ |
| 2023.10 | [LLM-Shearing] SHEARED LLAMA: ACCELERATING LANGUAGE MODEL PRE-TRAINING VIA STRUCTURED PRUNING(@cs.princeton.edu etc) | [pdf] | [LLM-Shearing] | ⭐️ |
| 2023.10 | [LLM-FP4] LLM-FP4: 4-Bit Floating-Point Quantized Transformers(@ust.hk&meta etc) | [pdf] | [LLM-FP4] | ⭐️ |
| 2023.11 | [2-bit LLM] Enabling Fast 2-bit LLM on GPUs: Memory Alignment, Sparse Outlier, and Asynchronous Dequantization(@Shanghai Jiao Tong University etc) | [pdf] | | ⭐️ |
| 2023.12 | [SmoothQuant+] SmoothQuant+: Accurate and Efficient 4-bit Post-Training Weight Quantization for LLM(@ZTE Corporation) | [pdf] | [smoothquantplus] | ⭐️ |
| 2023.11 | [OdysseyLLM W4A8] A Speed Odyssey for Deployable Quantization of LLMs(@meituan.com) | [pdf] | | ⭐️ |
| 2023.12 | 🔥[SparQ] SPARQ ATTENTION: BANDWIDTH-EFFICIENT LLM INFERENCE(@graphcore.ai) | [pdf] | | ⭐️⭐️ |
| 2023.12 | [Agile-Quant] Agile-Quant: Activation-Guided Quantization for Faster Inference of LLMs on the Edge(@Northeastern University&Oracle) | [pdf] | | ⭐️ |
| 2023.12 | [CBQ] CBQ: Cross-Block Quantization for Large Language Models(@ustc.edu.cn) | [pdf] | | ⭐️ |
| 2023.10 | [QLLM] QLLM: ACCURATE AND EFFICIENT LOW-BITWIDTH QUANTIZATION FOR LARGE LANGUAGE MODELS(@ZIP Lab&SenseTime Research etc) | [pdf] | | ⭐️ |
| 2024.01 | [FP6-LLM] FP6-LLM: Efficiently Serving Large Language Models Through FP6-Centric Algorithm-System Co-Design(@Microsoft etc) | [pdf] | | ⭐️ |
| 2024.05 | 🔥🔥[W4A8KV4] QServe: W4A8KV4 Quantization and System Co-design for Efficient LLM Serving(@MIT&NVIDIA) | [pdf] | [qserve] | ⭐️⭐️ |
| 2024.05 | 🔥[SpinQuant] SpinQuant: LLM Quantization with Learned Rotations(@Meta) | [pdf] | | ⭐️ |
| 2024.05 | 🔥[I-LLM] I-LLM: Efficient Integer-Only Inference for Fully-Quantized Low-Bit Large Language Models(@Houmo AI) | [pdf] | | ⭐️ |
| 2024.06 | 🔥[OutlierTune] OutlierTune: Efficient Channel-Wise Quantization for Large Language Models(@Beijing University) | [pdf] | | ⭐️ |
| 2024.06 | 🔥[GPTQT] GPTQT: Quantize Large Language Models Twice to Push the Efficiency(@zju) | [pdf] | | ⭐️ |
| 2024.08 | 🔥[ABQ-LLM] ABQ-LLM: Arbitrary-Bit Quantized Inference Acceleration for Large Language Models(@ByteDance) | [pdf] | [ABQ-LLM] | ⭐️ |
| 2024.08 | 🔥[1-bit LLMs] Matmul or No Matmul in the Era of 1-bit LLMs(@University of South Carolina) | [pdf] | | ⭐️ |
| 2024.08 | 🔥[ACTIVATION SPARSITY] TRAINING-FREE ACTIVATION SPARSITY IN LARGE LANGUAGE MODELS(@MIT etc) | [pdf] | [TEAL] | ⭐️ |
| 2024.09 | 🔥[VPTQ] VPTQ: EXTREME LOW-BIT VECTOR POST-TRAINING QUANTIZATION FOR LARGE LANGUAGE MODELS(@Microsoft) | [pdf] | [VPTQ] | ⭐️ |
| 2024.11 | 🔥[BitNet] BitNet a4.8: 4-bit Activations for 1-bit LLMs(@Microsoft) | [pdf] | [bitnet] | ⭐️ |
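
Weight-only quantization (the WINT8/WINT4 idea) stores weights in low-bit integers while activations stay in floating point. The sketch below shows symmetric INT8 quantization with one scale per output channel, and a matrix-vector product that accumulates integer weights against float activations and applies each row's scale once at the end. Function names are hypothetical, and it is pure Python for clarity; production GPU kernels fuse dequantization into the GEMM and also handle INT4 packing, which this sketch does not model.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_int8_per_channel(w: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Symmetric INT8 quantization with one scale per output channel (row of w)."""
    q_rows, scales = [], []
    for row in w:
        amax = max(abs(v) for v in row)
        scale = amax / 127.0 if amax > 0 else 1.0  # map the row's largest |w| to 127
        q_rows.append([max(-127, min(127, round(v / scale))) for v in row])
        scales.append(scale)
    return q_rows, scales


def wint8_matvec(q: List[List[int]], scales: List[float], x: List[float]) -> List[float]:
    """Weight-only INT8 matvec: integer weights, floating-point activations.

    Applying the row scale after accumulation equals dequantizing every weight first,
    because the scale is constant along the row.
    """
    return [s * sum(qv * xv for qv, xv in zip(row, x)) for row, s in zip(q, scales)]


if __name__ == "__main__":
    w = [[0.5, -1.0, 0.25], [2.0, 0.0, -0.3]]
    x = [1.0, 2.0, 3.0]
    q, scales = quantize_int8_per_channel(w)
    exact = [sum(wv * xv for wv, xv in zip(row, x)) for row in w]
    print(q, wint8_matvec(q, scales, x), exact)
```

Because each weight is rounded to the nearest multiple of its row's scale, the per-weight error is at most half a scale, so each output's error is bounded by `scale / 2 * sum(|x|)`. Per-channel scales keep that bound tight for rows with small weights.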

📖IO/FLOPs-Aware/Sparse Attention

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2018.05 | [Online Softmax] Online normalizer calculation for softmax(@NVIDIA) | [pdf] | | ⭐️ |
| 2019.11 | 🔥[MQA] Fast Transformer Decoding: One Write-Head is All You Need(@Google) | [pdf] | | ⭐️⭐️ |
| 2020.10 | [Hash Attention] REFORMER: THE EFFICIENT TRANSFORMER(@Google) | [pdf] | [reformer] | ⭐️⭐️ |
| 2022.05 | 🔥[FlashAttention] Fast and Memory-Efficient Exact Attention with IO-Awareness(@Stanford University etc) | [pdf] | [flash-attention] | ⭐️⭐️ |
| 2022.10 | [Online Softmax] SELF-ATTENTION DOES NOT NEED O(n^2) MEMORY(@Google) | [pdf] | | ⭐️ |
| 2023.05 | [FlashAttention] From Online Softmax to FlashAttention(@cs.washington.edu) | [pdf] | | ⭐️⭐️ |
| 2023.05 | [FLOP, I/O] Dissecting Batching Effects in GPT Inference(@Lequn Chen) | [blog] | | ⭐️ |
| 2023.05 | 🔥🔥[GQA] GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints(@Google) | [pdf] | [flaxformer] | ⭐️⭐️ |
| 2023.06 | [Sparse FlashAttention] Faster Causal Attention Over Large Sequences Through Sparse Flash Attention(@EPFL etc) | [pdf] | [dynamic-sparse-flash-attention] | ⭐️ |
| 2023.07 | 🔥[FlashAttention-2] Faster Attention with Better Parallelism and Work Partitioning(@Stanford University etc) | [pdf] | [flash-attention] | ⭐️⭐️ |
| 2023.10 | 🔥[Flash-Decoding] Flash-Decoding for long-context inference(@Stanford University etc) | [blog] | [flash-attention] | ⭐️⭐️ |
| 2023.11 | [Flash-Decoding++] FLASHDECODING++: FASTER LARGE LANGUAGE MODEL INFERENCE ON GPUS(@Tsinghua University&Infinigence-AI) | [pdf] | | ⭐️ |
| 2023.01 | [SparseGPT] SparseGPT: Massive Language Models Can be Accurately Pruned in One-Shot(@ISTA etc) | [pdf] | [sparsegpt] | ⭐️ |
| 2023.12 | 🔥[GLA] Gated Linear Attention Transformers with Hardware-Efficient Training(@MIT-IBM Watson AI) | [pdf] | [gated_linear_attention] | ⭐️⭐️ |
| 2023.12 | [SCCA] SCCA: Shifted Cross Chunk Attention for long contextual semantic expansion(@Beihang University) | [pdf] | | ⭐️ |
| 2023.12 | 🔥[FlashLLM] LLM in a flash: Efficient Large Language Model Inference with Limited Memory(@Apple) | [pdf] | | ⭐️⭐️ |
| 2024.03 | 🔥🔥[CHAI] CHAI: Clustered Head Attention for Efficient LLM Inference(@cs.wisc.edu etc) | [pdf] | | ⭐️⭐️ |
| 2024.04 | 🔥🔥[DeFT] DeFT: Decoding with Flash Tree-Attention for Efficient Tree-structured LLM Inference(@Westlake University etc) | [pdf] | | ⭐️⭐️ |
| 2024.04 | [MoA] MoA: Mixture of Sparse Attention for Automatic Large Language Model Compression(@thu etc) | [pdf] | [MoA] | ⭐️ |
| 2024.07 | 🔥🔥[FlashAttention-3] FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision(@TriDao etc) | [pdf] | [flash-attention] | ⭐️⭐️ |
| 2024.07 | 🔥🔥[MInference 1.0] MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention(@Microsoft) | [pdf] | [MInference 1.0] | ⭐️⭐️ |
| 2024.07 | 🔥🔥[Shared Attention] Beyond KV Caching: Shared Attention for Efficient LLMs(@Kyushu University etc) | [pdf] | [shareAtt] | ⭐️ |
| 2024.09 | 🔥🔥[CHESS] CHESS: Optimizing LLM Inference via Channel-Wise Thresholding and Selective Sparsification(@Wuhan University) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥🔥[INT-FLASHATTENTION] INT-FLASHATTENTION: ENABLING FLASH ATTENTION FOR INT8 QUANTIZATION(@PKU etc) | [pdf] | [INT-FlashAttention] | ⭐️ |
| 2024.10 | 🔥🔥[SageAttention] SAGEATTENTION: ACCURATE 8-BIT ATTENTION FOR PLUG-AND-PLAY INFERENCE ACCELERATION(@thu-ml) | [pdf] | [SageAttention] | ⭐️⭐️ |
| 2024.11 | 🔥🔥[SageAttention-2] SageAttention2: Efficient Attention with Thorough Outlier Smoothing and Per-thread INT4 Quantization(@thu-ml) | [pdf] | [SageAttention] | ⭐️⭐️ |
| 2024.11 | 🔥🔥[Squeezed Attention] SQUEEZED ATTENTION: Accelerating Long Context Length LLM Inference(@UC Berkeley) | [pdf] | [SqueezedAttention] | ⭐️⭐️ |
| 2024.12 | 🔥🔥[TurboAttention] TURBOATTENTION: EFFICIENT ATTENTION APPROXIMATION FOR HIGH THROUGHPUTS LLMS(@Microsoft) | [pdf] | | ⭐️⭐️ |
| 2025.01 | 🔥🔥[FFPA] FFPA: Yet another Faster Flash Prefill Attention with O(1) SRAM complexity for headdim > 256, ~1.5x faster than SDPA EA(@DefTruth) | [docs] | [ffpa-attn-mma] | ⭐️⭐️ |
| 2024.11 | 🔥🔥[SpargeAttention] SpargeAttn: Accurate Sparse Attention Accelerating Any Model Inference(@thu-ml) | [pdf] | [SpargeAttn] | ⭐️⭐️ |
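
The online-softmax entries and the FlashAttention family share one trick: keep a running maximum and a running normalizer, and rescale what has already been accumulated whenever the maximum grows, so softmax (and softmax-weighted sums of values) can be computed in a single streaming pass without materializing all scores. The pure-Python sketch below shows that recurrence for one query, one key at a time. FlashAttention applies the same rescaling per tile of keys held in on-chip SRAM, which this sketch does not model; the function names are hypothetical.

```python
import math
from typing import List


def online_softmax(x: List[float]) -> List[float]:
    """Softmax where the max and normalizer are computed in one streaming pass."""
    m, d = float("-inf"), 0.0  # running max and running sum of exp(x_i - m)
    for v in x:
        m_new = max(m, v)
        # Rescale the old sum to the new max, then add the new term.
        d = d * math.exp(m - m_new) + math.exp(v - m_new)
        m = m_new
    return [math.exp(v - m) / d for v in x]


def online_attention(scores: List[float], values: List[List[float]]) -> List[float]:
    """softmax(scores) @ values for a single query, streaming over keys one at a time."""
    m, d = float("-inf"), 0.0
    acc = [0.0] * len(values[0])  # unnormalized softmax-weighted sum of value vectors
    for s, v in zip(scores, values):
        m_new = max(m, s)
        alpha = math.exp(m - m_new)  # correction factor for everything accumulated so far
        p = math.exp(s - m_new)      # weight of the current key relative to the new max
        d = d * alpha + p
        acc = [a * alpha + p * vi for a, vi in zip(acc, v)]
        m = m_new
    return [a / d for a in acc]  # normalize once at the end


if __name__ == "__main__":
    print(online_softmax([1000.0, 1000.0]))  # no overflow despite large logits
    print(online_attention([0.1, 2.0, -1.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
```

Dividing by the normalizer only once at the end is the same choice FlashAttention-2 makes to reduce non-matmul work inside the inner loop.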

📖KV Cache Scheduling/Quantize/Dropping

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2019.11 | 🔥[MQA] Fast Transformer Decoding: One Write-Head is All You Need(@Google) | [pdf] | | ⭐️⭐️ |
| 2022.06 | [LTP] Learned Token Pruning for Transformers(@UC Berkeley etc) | [pdf] | [LTP] | ⭐️ |
| 2023.05 | 🔥🔥[GQA] GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints(@Google) | [pdf] | [flaxformer] | ⭐️⭐️ |
| 2023.05 | [KV Cache Compress] Scissorhands: Exploiting the Persistence of Importance Hypothesis for LLM KV Cache Compression at Test Time(@) | [pdf] | | ⭐️⭐️ |
| 2023.06 | [H2O] H2O: Heavy-Hitter Oracle for Efficient Generative Inference of Large Language Models(@Rice University etc) | [pdf] | [H2O] | ⭐️ |
| 2023.06 | [QK-Sparse/Dropping Attention] Faster Causal Attention Over Large Sequences Through Sparse Flash Attention(@EPFL etc) | [pdf] | [dynamic-sparse-flash-attention] | ⭐️ |
| 2023.08 | 🔥🔥[Chunked Prefills] SARATHI: Efficient LLM Inference by Piggybacking Decodes with Chunked Prefills(@Microsoft etc) | [pdf] | | ⭐️⭐️ |
| 2023.09 | 🔥🔥[PagedAttention] Efficient Memory Management for Large Language Model Serving with PagedAttention(@UC Berkeley etc) | [pdf] | [vllm] | ⭐️⭐️ |
| 2023.09 | [KV Cache FP8 + WINT4] Exploration on LLM inference performance optimization(@HPC4AI) | [blog] | | ⭐️ |
| 2023.10 | 🔥[TensorRT-LLM KV Cache FP8] NVIDIA TensorRT LLM(@NVIDIA) | [docs] | [TensorRT-LLM] | ⭐️⭐️ |
| 2023.10 | 🔥[Adaptive KV Cache Compress] MODEL TELLS YOU WHAT TO DISCARD: ADAPTIVE KV CACHE COMPRESSION FOR LLMS(@illinois.edu&microsoft) | [pdf] | | ⭐️⭐️ |
| 2023.10 | [CacheGen] CacheGen: Fast Context Loading for Language Model Applications(@Chicago University&Microsoft) | [pdf] | [LMCache] | ⭐️ |
| 2023.12 | [KV-Cache Optimizations] Leveraging Speculative Sampling and KV-Cache Optimizations Together for Generative AI using OpenVINO(@Haim Barad etc) | [pdf] | | ⭐️ |
| 2023.12 | [KV Cache Compress with LoRA] Compressed Context Memory for Online Language Model Interaction(@SNU & NAVER AI) | [pdf] | [Compressed-Context-Memory] | ⭐️⭐️ |
| 2023.12 | 🔥🔥[RadixAttention] Efficiently Programming Large Language Models using SGLang(@Stanford University etc) | [pdf] | [sglang] | ⭐️⭐️ |
| 2024.01 | 🔥🔥[DistKV-LLM] Infinite-LLM: Efficient LLM Service for Long Context with DistAttention and Distributed KVCache(@Alibaba etc) | [pdf] | | ⭐️⭐️ |
| 2024.02 | 🔥🔥[Prompt Caching] Efficient Prompt Caching via Embedding Similarity(@UC Berkeley) | [pdf] | | ⭐️⭐️ |
| 2024.02 | 🔥🔥[Less] Get More with LESS: Synthesizing Recurrence with KV Cache Compression for Efficient LLM Inference(@CMU etc) | [pdf] | | ⭐️ |
| 2024.02 | 🔥🔥[MiKV] No Token Left Behind: Reliable KV Cache Compression via Importance-Aware Mixed Precision Quantization(@KAIST) | [pdf] | | ⭐️ |
| 2024.02 | 🔥🔥[Shared Prefixes] Hydragen: High-Throughput LLM Inference with Shared Prefixes | [pdf] | | ⭐️⭐️ |
| 2024.02 | 🔥🔥[ChunkAttention] ChunkAttention: Efficient Self-Attention with Prefix-Aware KV Cache and Two-Phase Partition(@microsoft.com) | [pdf] | [chunk-attention] | ⭐️⭐️ |
| 2024.03 | 🔥[QAQ] QAQ: Quality Adaptive Quantization for LLM KV Cache(@smail.nju.edu.cn) | [pdf] | [QAQ-KVCacheQuantization] | ⭐️⭐️ |
| 2024.03 | 🔥🔥[DMC] Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference(@NVIDIA etc) | [pdf] | | ⭐️⭐️ |
| 2024.03 | 🔥🔥[Keyformer] Keyformer: KV Cache reduction through key tokens selection for Efficient Generative Inference(@ece.ubc.ca etc) | [pdf] | [Keyformer] | ⭐️⭐️ |
| 2024.03 | [FASTDECODE] FASTDECODE: High-Throughput GPU-Efficient LLM Serving using Heterogeneous(@Tsinghua University) | [pdf] | | ⭐️⭐️ |
| 2024.03 | [Sparsity-Aware KV Caching] ALISA: Accelerating Large Language Model Inference via Sparsity-Aware KV Caching(@ucf.edu) | [pdf] | | ⭐️⭐️ |
| 2024.03 | 🔥[GEAR] GEAR: An Efficient KV Cache Compression Recipe for Near-Lossless Generative Inference of LLM(@gatech.edu) | [pdf] | [GEAR] | ⭐️ |
| 2024.04 | [SqueezeAttention] SQUEEZEATTENTION: 2D Management of KV-Cache in LLM Inference via Layer-wise Optimal Budget(@lzu.edu.cn etc) | [pdf] | [SqueezeAttention] | ⭐️⭐️ |
| 2024.04 | [SnapKV] SnapKV: LLM Knows What You are Looking for Before Generation(@UIUC) | [pdf] | [SnapKV] | ⭐️ |
| 2024.05 | 🔥[vAttention] vAttention: Dynamic Memory Management for Serving LLMs without PagedAttention(@Microsoft Research India) | [pdf] | [vAttention] | ⭐️⭐️ |
| 2024.05 | 🔥[KVCache-1Bit] KV Cache is 1 Bit Per Channel: Efficient Large Language Model Inference with Coupled Quantization(@Rice University) | [pdf] | | ⭐️⭐️ |
| 2024.05 | 🔥[KV-Runahead] KV-Runahead: Scalable Causal LLM Inference by Parallel Key-Value Cache Generation(@Apple etc) | [pdf] | | ⭐️⭐️ |
| 2024.05 | 🔥[ZipCache] ZipCache: Accurate and Efficient KV Cache Quantization with Salient Token Identification(@Zhejiang University etc) | [pdf] | | ⭐️⭐️ |
| 2024.05 | 🔥[MiniCache] MiniCache: KV Cache Compression in Depth Dimension for Large Language Models(@ZIP Lab) | [pdf] | | ⭐️⭐️ |
| 2024.05 | 🔥[CacheBlend] CacheBlend: Fast Large Language Model Serving with Cached Knowledge Fusion(@University of Chicago) | [pdf] | [LMCache] | ⭐️⭐️ |
| 2024.06 | 🔥[CompressKV] Effectively Compress KV Heads for LLM(@alibaba etc) | [pdf] | | ⭐️⭐️ |
| 2024.06 | 🔥[MemServe] MemServe: Context Caching for Disaggregated LLM Serving with Elastic Memory Pool(@Huawei Cloud etc) | [pdf] | | ⭐️⭐️ |
| 2024.07 | 🔥[MLKV] MLKV: Multi-Layer Key-Value Heads for Memory Efficient Transformer Decoding(@Institut Teknologi Bandung) | [pdf] | [pythia-mlkv] | ⭐️ |
| 2024.07 | 🔥[ThinK] ThinK: Thinner Key Cache by Query-Driven Pruning(@Salesforce AI Research etc) | [pdf] | | ⭐️⭐️ |
| 2024.07 | 🔥[Palu] Palu: Compressing KV-Cache with Low-Rank Projection(@nycu.edu.tw) | [pdf] | [Palu] | ⭐️⭐️ |
| 2024.08 | 🔥[Zero-Delay QKV Compression] Zero-Delay QKV Compression for Mitigating KV Cache and Network Bottlenecks in LLM Inference(@University of Virginia) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥[AlignedKV] AlignedKV: Reducing Memory Access of KV-Cache with Precision-Aligned Quantization(@Tsinghua University) | [pdf] | [AlignedKV] | ⭐️ |
| 2024.10 | 🔥[LayerKV] Optimizing Large Language Model Serving with Layer-wise KV Cache Management(@Ant Group) | [pdf] | | ⭐️⭐️ |
| 2024.10 | 🔥[AdaKV] Ada-KV: Optimizing KV Cache Eviction by Adaptive Budget Allocation for Efficient LLM Inference(@USTC) | [pdf] | [AdaKV] | ⭐️⭐️ |
| 2024.11 | 🔥[KV Cache Recomputation] Efficient LLM Inference with I/O-Aware Partial KV Cache Recomputation(@University of Southern California) | [pdf] | | ⭐️⭐️ |
| 2024.12 | 🔥[ClusterKV] ClusterKV: Manipulating LLM KV Cache in Semantic Space for Recallable Compression(@sjtu) | [pdf] | | ⭐️⭐️ |
| 2024.12 | 🔥[DynamicKV] DynamicKV: Task-Aware Adaptive KV Cache Compression for Long Context LLMs(@xiabinzhou0625 etc) | [pdf] | | ⭐️⭐️ |
| 2025.02 | 🔥[DynamicLLaVA] [ICLR2025] Dynamic-LLaVA: Efficient Multimodal Large Language Models via Dynamic Vision-language Context Sparsification(@ECNU, Xiaohongshu) | [pdf] | [DynamicLLaVA] | ⭐️⭐️ |
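
PagedAttention (vLLM) stores each sequence's KV cache in fixed-size blocks that need not be contiguous, and a per-sequence block table maps logical token positions to physical blocks, much like virtual-memory pages. The class below is a toy sketch of just that bookkeeping: it decides which physical block and offset each new token's K/V would occupy, and returns blocks to a free pool when a sequence finishes. It stores no tensors, and `PagedKVCache` with its methods is a hypothetical API, not vLLM's internal block manager.

```python
from typing import Dict, List, Tuple


class PagedKVCache:
    """Toy block-table bookkeeping in the spirit of PagedAttention (hypothetical API)."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        # Free physical block ids, used as a stack (lowest id is popped first).
        self.free: List[int] = list(range(num_blocks - 1, -1, -1))
        self.tables: Dict[int, List[int]] = {}  # seq id -> physical blocks in logical order
        self.lengths: Dict[int, int] = {}       # seq id -> number of cached tokens

    def append_token(self, seq_id: int) -> Tuple[int, int]:
        """Reserve a slot for the next token of seq_id; return (physical_block, offset)."""
        table = self.tables.setdefault(seq_id, [])
        n = self.lengths.get(seq_id, 0)
        if n % self.block_size == 0:  # no block yet, or the last block is full
            if not self.free:
                raise MemoryError("out of KV blocks: a real scheduler would preempt or swap")
            table.append(self.free.pop())
        self.lengths[seq_id] = n + 1
        return table[n // self.block_size], n % self.block_size

    def free_seq(self, seq_id: int) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free.extend(reversed(self.tables.pop(seq_id, [])))
        self.lengths.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=4, block_size=2)
    print([cache.append_token(0) for _ in range(3)], cache.append_token(1), cache.tables)
```

Because memory is reserved one block at a time, a sequence wastes at most `block_size - 1` slots in its last block, instead of reserving space for its maximum possible length up front.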

📖Prompt/Context/KV Compression (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2023.04 | 🔥[Selective-Context] Compressing Context to Enhance Inference Efficiency of Large Language Models(@Surrey) | [pdf] | Selective-Context | ⭐️⭐️ |
| 2023.05 | [AutoCompressor] Adapting Language Models to Compress Contexts(@Princeton) | [pdf] | AutoCompressor | ⭐️ |
| 2023.10 | 🔥[LLMLingua] LLMLingua: Compressing Prompts for Accelerated Inference of Large Language Models(@Microsoft) | [pdf] | LLMLingua | ⭐️⭐️ |
| 2023.10 | 🔥🔥[LongLLMLingua] LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression(@Microsoft) | [pdf] | LLMLingua | ⭐️⭐️ |
| 2024.03 | 🔥[LLMLingua-2] LLMLingua-2: Data Distillation for Efficient and Faithful Task-Agnostic Prompt Compression(@Microsoft) | [pdf] | LLMLingua series | ⭐️ |
| 2024.08 | 🔥🔥[500xCompressor] 500xCompressor: Generalized Prompt Compression for Large Language Models(@University of Cambridge) | [pdf] | | ⭐️⭐️ |
| 2024.08 | 🔥🔥[Eigen Attention] Eigen Attention: Attention in Low-Rank Space for KV Cache Compression(@purdue.edu) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥🔥[Prompt Compression] Prompt Compression with Context-Aware Sentence Encoding for Fast and Improved LLM Inference(@Alterra AI) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥🔥[Context Distillation] Efficient LLM Context Distillation(@gatech.edu) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥🔥[CRITIPREFILL] CRITIPREFILL: A SEGMENT-WISE CRITICALITY-BASED APPROACH FOR PREFILLING ACCELERATION IN LLMS(@OPPO) | [pdf] | CritiPrefill | ⭐️ |
| 2024.10 | 🔥🔥[KV-COMPRESS] PAGED KV-CACHE COMPRESSION WITH VARIABLE COMPRESSION RATES PER ATTENTION HEAD(@Cloudflare, inc.) | [pdf] | vllm-kvcompress | ⭐️⭐️ |
| 2024.10 | 🔥🔥[LORC] Low-Rank Compression for LLMs KV Cache with a Progressive Compression Strategy(@gatech.edu) | [pdf] | | ⭐️⭐️ |
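
The Selective-Context entry drops content that a language model already finds predictable, scoring it by self-information -log p. A minimal token-level sketch of that idea, assuming the per-token probabilities have already been computed by some causal LM (the `probs` values below are made up), keeps the highest-information tokens in their original order. The paper itself filters larger lexical units such as phrases and sentences, so treat this as a simplification.

```python
import math


def compress_by_self_information(tokens, probs, keep_ratio=0.5):
    """Keep the most informative tokens, preserving their original order.

    tokens: list of token strings.
    probs: p(token | preceding tokens) from a causal LM (hypothetical input here).
    """
    # Self-information: low-probability (surprising) tokens carry more information.
    info = [-math.log(p) for p in probs]
    n_keep = max(1, int(len(tokens) * keep_ratio))
    # Indices of the n_keep highest-information tokens, restored to text order.
    ranked = sorted(range(len(tokens)), key=lambda i: info[i], reverse=True)
    keep = sorted(ranked[:n_keep])
    return [tokens[i] for i in keep]


tokens = ["The", "cat", "sat", "on", "the", "mat"]
probs = [0.9, 0.05, 0.2, 0.8, 0.95, 0.1]
compressed = compress_by_self_information(tokens, probs, keep_ratio=0.5)
```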

📖Long Context Attention/KV Cache Optimization (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2023.05 | 🔥🔥[Blockwise Attention] Blockwise Parallel Transformer for Large Context Models(@UC Berkeley) | [pdf] | | ⭐️⭐️ |
| 2023.05 | 🔥[Landmark Attention] Random-Access Infinite Context Length for Transformers(@epfl.ch) | [pdf] | landmark-attention | ⭐️⭐️ |
| 2023.07 | 🔥[LightningAttention-1] TRANSNORMERLLM: A FASTER AND BETTER LARGE LANGUAGE MODEL WITH IMPROVED TRANSNORMER(@OpenNLPLab) | [pdf] | TransnormerLLM | ⭐️⭐️ |
| 2023.07 | 🔥[LightningAttention-2] Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models(@OpenNLPLab) | [pdf] | lightning-attention | ⭐️⭐️ |
| 2023.10 | 🔥🔥[RingAttention] Ring Attention with Blockwise Transformers for Near-Infinite Context(@UC Berkeley) | [pdf] | [RingAttention] | ⭐️⭐️ |
| 2023.11 | 🔥[HyperAttention] HyperAttention: Long-context Attention in Near-Linear Time(@yale&Google) | [pdf] | hyper-attn | ⭐️⭐️ |
| 2023.11 | [Streaming Attention] One Pass Streaming Algorithm for Super Long Token Attention Approximation in Sublinear Space(@Adobe Research etc) | [pdf] | | ⭐️ |
| 2023.11 | 🔥[Prompt Cache] PROMPT CACHE: MODULAR ATTENTION REUSE FOR LOW-LATENCY INFERENCE(@Yale University etc) | [pdf] | | ⭐️⭐️ |
| 2023.11 | 🔥🔥[StripedAttention] STRIPED ATTENTION: FASTER RING ATTENTION FOR CAUSAL TRANSFORMERS(@MIT etc) | [pdf] | [striped_attention] | ⭐️⭐️ |
| 2024.01 | 🔥🔥[KVQuant] KVQuant: Towards 10 Million Context Length LLM Inference with KV Cache Quantization(@UC Berkeley) | [pdf] | [KVQuant] | ⭐️⭐️ |
| 2024.02 | 🔥[RelayAttention] RelayAttention for Efficient Large Language Model Serving with Long System Prompts(@sensetime.com etc) | [pdf] | | ⭐️⭐️ |
| 2024.04 | 🔥🔥[Infini-attention] Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention(@Google) | [pdf] | | ⭐️⭐️ |
| 2024.04 | 🔥🔥[RAGCache] RAGCache: Efficient Knowledge Caching for Retrieval-Augmented Generation(@Peking University&ByteDance Inc) | [pdf] | | ⭐️⭐️ |
| 2024.04 | 🔥🔥[KCache] EFFICIENT LLM INFERENCE WITH KCACHE(@Qiaozhi He, Zhihua Wu) | [pdf] | | ⭐️⭐️ |
| 2024.05 | 🔥🔥[YOCO] You Only Cache Once: Decoder-Decoder Architectures for Language Models(@Microsoft) | [pdf] | [unilm-YOCO] | ⭐️⭐️ |
| 2024.05 | 🔥🔥[SKVQ] SKVQ: Sliding-window Key and Value Cache Quantization for Large Language Models(@Shanghai AI Laboratory) | [pdf] | | ⭐️⭐️ |
| 2024.05 | 🔥🔥[CLA] Reducing Transformer Key-Value Cache Size with Cross-Layer Attention(@MIT-IBM) | [pdf] | | ⭐️⭐️ |
| 2024.06 | 🔥[LOOK-M] LOOK-M: Look-Once Optimization in KV Cache for Efficient Multimodal Long-Context Inference(@osu.edu etc) | [pdf] | [LOOK-M] | ⭐️⭐️ |
| 2024.06 | 🔥🔥[MInference] MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention(@Microsoft etc) | [pdf] | [MInference] | ⭐️⭐️ |
| 2024.06 | 🔥🔥[InfiniGen] InfiniGen: Efficient Generative Inference of Large Language Models with Dynamic KV Cache Management(@snu) | [pdf] | | ⭐️⭐️ |
| 2024.06 | 🔥🔥[Quest] Quest: Query-Aware Sparsity for Efficient Long-Context LLM Inference(@mit-han-lab etc) | [pdf] | [Quest] | ⭐️⭐️ |
| 2024.07 | 🔥[PQCache] PQCache: Product Quantization-based KVCache for Long Context LLM Inference(@PKU etc) | [pdf] | | ⭐️⭐️ |
| 2024.08 | 🔥[SentenceVAE] SentenceVAE: Faster, Longer and More Accurate Inference with Next-sentence Prediction for Large Language Models(@TeleAI) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥[InstInfer] InstInfer: In-Storage Attention Offloading for Cost-Effective Long-Context LLM Inference(@PKU etc) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥[RetrievalAttention] RetrievalAttention: Accelerating Long-Context LLM Inference via Vector Retrieval(@microsoft.com) | [pdf] | | ⭐️⭐️ |
| 2024.10 | 🔥[ShadowKV] ShadowKV: KV Cache in Shadows for High-Throughput Long-Context LLM Inference(@CMU & bytedance) | [pdf] | [ShadowKV] | ⭐️⭐️ |
| 2025.01 | 🔥🔥🔥[Lightning Attention] MiniMax-01: Scaling Foundation Models with Lightning Attention | [report] | [MiniMax-01] | ⭐️⭐️ |
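
Blockwise Parallel Transformer, Ring Attention and Striped Attention all compute exact softmax attention one key/value block at a time, which works because the softmax can be accumulated online with a running maximum and a running normalizer. A minimal single-query sketch in plain Python (no batching, heads, or causal masking; all names and numbers are illustrative) that matches the full computation for any block size:

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def full_attention(q, keys, values):
    """Reference: softmax(q . k_j) weighted average of the v_j for one query."""
    scores = [dot(q, k) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]


def blockwise_attention(q, keys, values, block_size):
    """Exact attention that visits keys/values one block at a time."""
    running_max = float("-inf")
    running_sum = 0.0                 # sum of exp(score - running_max) so far
    acc = [0.0] * len(values[0])      # unnormalized weighted sum of values
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated under the previous maximum
        # (exp(-inf) == 0.0, so the first block starts from a clean slate).
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vd for a, vd in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 2.0], [0.3, 0.3]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
out = blockwise_attention(q, keys, values, block_size=2)
```

In Ring Attention the blocks live on different devices and are passed around a ring, but each device performs this same rescale-and-accumulate update.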

📖Early-Exit/Intermediate Layer Decoding (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2020.04 | [DeeBERT] DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference(@uwaterloo.ca) | [pdf] | | ⭐️ |
| 2020.04 | [FastBERT] FastBERT: a Self-distilling BERT with Adaptive Inference Time(@PKU) | [pdf] | [FastBERT] | ⭐️ |
| 2021.06 | [BERxiT] BERxiT: Early Exiting for BERT with Better Fine-Tuning and Extension to Regression(@uwaterloo.ca) | [pdf] | [berxit] | ⭐️ |
| 2023.06 | 🔥[SkipDecode] SkipDecode: Autoregressive Skip Decoding with Batching and Caching for Efficient LLM Inference(@Microsoft) | [pdf] | | ⭐️ |
| 2023.10 | 🔥[LITE] Accelerating LLaMA Inference by Enabling Intermediate Layer Decoding via Instruction Tuning with LITE(@Arizona State University) | [pdf] | | ⭐️⭐️ |
| 2023.12 | 🔥🔥[EE-LLM] EE-LLM: Large-Scale Training and Inference of Early-Exit Large Language Models with 3D Parallelism(@alibaba-inc.com) | [pdf] | [EE-LLM] | ⭐️⭐️ |
| 2023.10 | 🔥[FREE] Fast and Robust Early-Exiting Framework for Autoregressive Language Models with Synchronized Parallel Decoding(@KAIST AI&AWS AI) | [pdf] | [fast_robust_early_exit] | ⭐️⭐️ |
| 2024.02 | 🔥[EE-Tuning] EE-Tuning: An Economical yet Scalable Solution for Tuning Early-Exit Large Language Models(@alibaba-inc.com) | [pdf] | [EE-Tuning] | ⭐️⭐️ |
| 2024.07 | [Skip Attention] Attention Is All You Need But You Don’t Need All Of It For Inference of Large Language Models(@University College London) | [pdf] | | ⭐️⭐️ |
| 2024.08 | [KOALA] KOALA: Enhancing Speculative Decoding for LLM via Multi-Layer Draft Heads with Adversarial Learning(@Dalian University) | [pdf] | | ⭐️⭐️ |
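
Many entries here attach exit heads to intermediate layers and stop as soon as one of them is confident; DeeBERT, for example, thresholds the entropy of the intermediate prediction. A minimal sketch of such an entropy-based exit rule, assuming the per-layer exit-head logits are produced lazily (a plain list stands in for the model here, and the numbers are made up):

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)


def early_exit_predict(layer_logits, entropy_threshold):
    """Return (predicted class, exit layer) under an entropy-based exit rule.

    layer_logits yields, layer by layer, the logits of an exit head attached
    after that layer. If it is a generator, later layers are never computed
    once an earlier head is confident enough.
    """
    result = None
    for layer, logits in enumerate(layer_logits):
        probs = softmax(logits)
        result = (max(range(len(probs)), key=probs.__getitem__), layer)
        if entropy(probs) < entropy_threshold:
            return result  # confident: skip the remaining layers
    return result  # no exit fired: use the final layer's prediction


# Made-up exit-head logits for a 4-layer model and 3 classes.
layer_logits = [
    [0.2, 0.1, 0.0],  # layer 0: nearly uniform, high entropy
    [1.0, 0.2, 0.1],  # layer 1: still uncertain
    [6.0, 0.5, 0.1],  # layer 2: confident, exits here
    [7.0, 0.2, 0.1],  # layer 3: skipped for this input
]
pred, exit_layer = early_exit_predict(layer_logits, entropy_threshold=0.3)
```

The threshold trades accuracy for speed: a lower value exits later and more conservatively. Autoregressive variants such as SkipDecode or FREE must also deal with the KV cache of skipped layers, which this classification-style sketch ignores.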

📖Parallel Decoding/Sampling (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2018.11 | 🔥[Parallel Decoding] Blockwise Parallel Decoding for Deep Autoregressive Models(@Berkeley&Google) | [pdf] | | ⭐️⭐️ |
| 2023.02 | 🔥[Speculative Sampling] Accelerating Large Language Model Decoding with Speculative Sampling(@DeepMind) | [pdf] | | ⭐️⭐️ |
| 2023.05 | 🔥[Speculative Sampling] Fast Inference from Transformers via Speculative Decoding(@Google Research etc) | [pdf] | [LLMSpeculativeSampling] | ⭐️⭐️ |
| 2023.09 | 🔥[Medusa] Medusa: Simple Framework for Accelerating LLM Generation with Multiple Decoding Heads(@Tianle Cai etc) | [pdf] | [Medusa] | ⭐️⭐️ |
| 2023.10 | [OSD] Online Speculative Decoding(@UC Berkeley etc) | [pdf] | | ⭐️⭐️ |
| 2023.12 | [Cascade Speculative] Cascade Speculative Drafting for Even Faster LLM Inference(@illinois.edu) | [pdf] | | ⭐️ |
| 2024.02 | 🔥[LookaheadDecoding] Break the Sequential Dependency of LLM Inference Using LOOKAHEAD DECODING(@UCSD&Google&UC Berkeley) | [pdf] | [LookaheadDecoding] | ⭐️⭐️ |
| 2024.02 | 🔥🔥[Speculative Decoding] Decoding Speculative Decoding(@cs.wisc.edu) | [pdf] | Decoding Speculative Decoding | ⭐️ |
| 2024.04 | 🔥🔥[TriForce] TriForce: Lossless Acceleration of Long Sequence Generation with Hierarchical Speculative Decoding(@cmu.edu&Meta AI) | [pdf] | [TriForce] | ⭐️⭐️ |
| 2024.04 | 🔥🔥[Hidden Transfer] Parallel Decoding via Hidden Transfer for Lossless Large Language Model Acceleration(@pku.edu.cn etc) | [pdf] | | ⭐️ |
| 2024.05 | 🔥[Instructive Decoding] INSTRUCTIVE DECODING: INSTRUCTION-TUNED LARGE LANGUAGE MODELS ARE SELF-REFINER FROM NOISY INSTRUCTIONS(@KAIST AI) | [pdf] | [Instructive-Decoding] | ⭐️ |
| 2024.05 | 🔥[S3D] S3D: A Simple and Cost-Effective Self-Speculative Decoding Scheme for Low-Memory GPUs(@lge.com) | [pdf] | | ⭐️ |
| 2024.06 | 🔥[Parallel Decoding] Exploring and Improving Drafts in Blockwise Parallel Decoding(@KAIST&Google Research) | [pdf] | | ⭐️⭐️ |
| 2024.07 | 🔥[Multi-Token Speculative Decoding] Multi-Token Joint Speculative Decoding for Accelerating Large Language Model Inference(@University of California, etc) | [pdf] | | ⭐️⭐️ |
| 2024.08 | 🔥[Token Recycling] Turning Trash into Treasure: Accelerating Inference of Large Language Models with Token Recycling(@ir.hit.edu.cn etc) | [pdf] | | ⭐️⭐️ |
| 2024.08 | 🔥[Speculative Decoding] Parallel Speculative Decoding with Adaptive Draft Length(@USTC etc) | [pdf] | [PEARL] | ⭐️⭐️ |
| 2024.08 | 🔥[FocusLLM] FocusLLM: Scaling LLM’s Context by Parallel Decoding(@Tsinghua University etc) | [pdf] | [FocusLLM] | ⭐️ |
| 2024.08 | 🔥[MagicDec] MagicDec: Breaking the Latency-Throughput Tradeoff for Long Context Generation with Speculative Decoding(@CMU etc) | [pdf] | [MagicDec] | ⭐️ |
| 2024.08 | 🔥[Speculative Decoding] Boosting Lossless Speculative Decoding via Feature Sampling and Partial Alignment Distillation(@BIT) | [pdf] | | ⭐️⭐️ |
| 2024.09 | 🔥[Hybrid Inference] Efficient Hybrid Inference for LLMs: Reward-Based Token Modelling with Selective Cloud Assistance | [pdf] | | ⭐️⭐️ |
| 2024.10 | 🔥[PARALLELSPEC] PARALLELSPEC: PARALLEL DRAFTER FOR EFFICIENT SPECULATIVE DECODING(@Tencent AI Lab etc) | [pdf] | | ⭐️⭐️ |
| 2024.10 | 🔥[Fast Best-of-N] Fast Best-of-N Decoding via Speculative Rejection(@CMU etc) | [pdf] | | ⭐️⭐️ |
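
The two speculative sampling/decoding papers at the top of this table share one acceptance rule, which many later entries build on: a token x drafted from the draft model's distribution q is kept with probability min(1, p(x)/q(x)) under the target distribution p, and on rejection a replacement is drawn from the normalized residual max(0, p - q). A minimal pure-Python sketch of a single verification step, using made-up three-token distributions, that empirically recovers p:

```python
import random


def sample(dist, rng):
    """Draw an index from a discrete distribution."""
    r = rng.random()
    for i, w in enumerate(dist):
        r -= w
        if r < 0:
            return i
    # Floating-point round-off guard: fall back to the last index with mass.
    return max(i for i, w in enumerate(dist) if w > 0)


def verify_draft_token(token, p, q, rng):
    """Accept or replace one drafted token so the output follows p exactly.

    token was sampled from the draft distribution q; p is the target
    distribution at the same position. Returns (accepted, output_token).
    """
    if rng.random() < min(1.0, p[token] / q[token]):
        return True, token
    # Rejection is only possible when p[token] < q[token], so the residual
    # max(0, p - q) has positive mass somewhere; sample from it.
    residual = [max(0.0, pi - qi) for pi, qi in zip(p, q)]
    total = sum(residual)
    return False, sample([w / total for w in residual], rng)


rng = random.Random(0)
p = [0.6, 0.3, 0.1]  # hypothetical target-model probabilities
q = [0.3, 0.3, 0.4]  # hypothetical draft-model probabilities
n = 100_000
counts = [0] * len(p)
accepted = 0
for _ in range(n):
    ok, out = verify_draft_token(sample(q, rng), p, q, rng)
    accepted += ok
    counts[out] += 1
freqs = [c / n for c in counts]
acceptance_rate = accepted / n
```

The expected acceptance rate is the sum of min(p, q) over the vocabulary, 0.7 for these numbers. Real systems draft several tokens and verify them all with a single target-model forward pass; this sketch shows only the per-position rule.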

📖Structured Prune/KD/Weight Sparse (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2023.12 | [FLAP] Fluctuation-based Adaptive Structured Pruning for Large Language Models(@Chinese Academy of Sciences etc) | [pdf] | [FLAP] | ⭐️⭐️ |
| 2023.12 | 🔥[LASER] The Truth is in There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction(@mit.edu) | [pdf] | [laser] | ⭐️⭐️ |
| 2023.12 | [PowerInfer] PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU(@SJTU) | [pdf] | [PowerInfer] | ⭐️ |
| 2024.01 | [Admm Pruning] Fast and Optimal Weight Update for Pruned Large Language Models(@fmph.uniba.sk) | [pdf] | [admm-pruning] | ⭐️ |
| 2024.01 | [FFSplit] FFSplit: Split Feed-Forward Network For Optimizing Accuracy-Efficiency Trade-off in Language Model Inference(@Rice University etc) | [pdf] | | ⭐️ |

📖Mixture-of-Experts(MoE) LLM Inference (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2022.11 | 🔥[WINT8/4] Who Says Elephants Can’t Run: Bringing Large Scale MoE Models into Cloud Scale Production(@NVIDIA&Microsoft) | [pdf] | [FasterTransformer] | ⭐️⭐️ |
| 2023.12 | 🔥[Mixtral Offloading] Fast Inference of Mixture-of-Experts Language Models with Offloading(@Moscow Institute of Physics and Technology etc) | [pdf] | [mixtral-offloading] | ⭐️⭐️ |
| 2024.01 | [MoE-Mamba] MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts(@uw.edu.pl) | [pdf] | | ⭐️ |
| 2024.04 | [MoE Inference] Toward Inference-optimal Mixture-of-Expert Large Language Models(@UC San Diego etc) | [pdf] | | ⭐️ |
| 2024.05 | 🔥🔥🔥[DeepSeek-V2] DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model(@DeepSeek-AI) | [pdf] | [DeepSeek-V2] | ⭐️⭐️ |
| 2024.06 | [MoE] A Survey on Mixture of Experts(@HKU) | [pdf] | | ⭐️ |
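
MoE inference is cheap relative to parameter count because each token runs through only a few experts. A minimal sketch of one common routing variant, a softmax over the top-k router logits as used by Mixtral-style routers (other models, DeepSeek-V2 included, differ in the details), with toy experts and router logits that are made up for illustration; in a real layer the logits would come from multiplying the token's hidden state by a learned gate matrix.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their gates."""
    top = sorted(range(len(router_logits)),
                 key=lambda e: router_logits[e], reverse=True)[:k]
    m = max(router_logits[e] for e in top)
    exps = {e: math.exp(router_logits[e] - m) for e in top}
    total = sum(exps.values())
    return {e: w / total for e, w in exps.items()}


def moe_forward(x, experts, router_logits, k=2):
    """Gate-weighted sum of the outputs of only the selected experts."""
    gates = top_k_route(router_logits, k)
    out = [0.0] * len(x)
    for e, g in gates.items():
        y = experts[e](x)  # only k experts are evaluated for this token
        out = [o + g * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy experts: each scales its input by a different factor.
experts = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]
y = moe_forward([1.0, -1.0], experts, router_logits, k=2)
```

Here experts 1 and 3 tie for the top score, each gets a gate of 0.5, and the output is the average of their outputs. Systems such as the offloading and FasterTransformer entries above focus on making this sparse dispatch fast and memory-efficient.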

📖CPU/Single GPU/FPGA/NPU/Mobile Inference (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2023.03 | [FlexGen] High-Throughput Generative Inference of Large Language Models with a Single GPU(@Stanford University etc) | [pdf] | [FlexGen] | ⭐️ |
| 2023.11 | [LLM CPU Inference] Efficient LLM Inference on CPUs(@intel) | [pdf] | [intel-extension-for-transformers] | ⭐️ |
| 2023.12 | [LinguaLinked] LinguaLinked: A Distributed Large Language Model Inference System for Mobile Devices(@University of California Irvine) | [pdf] | | ⭐️ |
| 2023.12 | [OpenVINO] Leveraging Speculative Sampling and KV-Cache Optimizations Together for Generative AI using OpenVINO(@Haim Barad etc) | [pdf] | | ⭐️ |
| 2024.03 | [FlightLLM] FlightLLM: Efficient Large Language Model Inference with a Complete Mapping Flow on FPGAs(@Infinigence-AI) | [pdf] | | ⭐️ |
| 2024.03 | [Transformer-Lite] Transformer-Lite: High-efficiency Deployment of Large Language Models on Mobile Phone GPUs(@OPPO) | [pdf] | | ⭐️ |
| 2024.07 | 🔥🔥[xFasterTransformer] Inference Performance Optimization for Large Language Models on CPUs(@Intel) | [pdf] | [xFasterTransformer] | ⭐️ |
| 2024.07 | [Summary] Inference Optimization of Foundation Models on AI Accelerators(@AWS AI) | [pdf] | | ⭐️ |
| 2024.10 | Large Language Model Performance Benchmarking on Mobile Platforms: A Thorough Evaluation(@SYSU) | [pdf] | | ⭐️ |
| 2024.10 | 🔥🔥[FastAttention] FastAttention: Extend FlashAttention2 to NPUs and Low-resource GPUs for Efficient Inference(@huawei etc) | [pdf] | | ⭐️ |
| 2024.12 | 🔥🔥[NITRO] NITRO: LLM INFERENCE ON INTEL® LAPTOP NPUS(@cornell.edu) | [pdf] | [nitro] | ⭐️ |

📖Non Transformer Architecture (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2023.05 | 🔥🔥[RWKV] RWKV: Reinventing RNNs for the Transformer Era(@Bo Peng etc) | [pdf] | [RWKV-LM] | ⭐️⭐️ |
| 2023.12 | 🔥🔥[Mamba] Mamba: Linear-Time Sequence Modeling with Selective State Spaces(@cs.cmu.edu etc) | [pdf] | [mamba] | ⭐️⭐️ |
| 2024.06 | 🔥🔥[RWKV-CLIP] RWKV-CLIP: A Robust Vision-Language Representation Learner(@DeepGlint etc) | [pdf] | [RWKV-CLIP] | ⭐️⭐️ |
| 2024.08 | 🔥🔥[Kraken] Kraken: Inherently Parallel Transformers For Efficient Multi-Device Inference(@Princeton) | [pdf] | | ⭐️ |
| 2024.08 | 🔥🔥[FLA] FLA: A Triton-Based Library for Hardware-Efficient Implementations of Linear Attention Mechanism(@sustcsonglin) | [docs] | [flash-linear-attention] | ⭐️⭐️ |
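
RWKV and Mamba carry a fixed-size recurrent state instead of a growing KV cache, and linear attention (the mechanism FLA provides kernels for) can be written the same way. For the simplest case, unnormalized causal linear attention with no feature map, decay, or gating (a deliberate simplification; each listed model adds its own), this sketch shows that the quadratic "attention" form and the constant-memory recurrent form produce identical outputs. The input numbers are arbitrary.

```python
def linear_attention_parallel(qs, ks, vs):
    """Quadratic form: o_t = sum over s <= t of (q_t . k_s) * v_s."""
    outs = []
    for t, q in enumerate(qs):
        out = [0.0] * len(vs[0])
        for s in range(t + 1):
            score = sum(a * b for a, b in zip(q, ks[s]))
            out = [o + score * v for o, v in zip(out, vs[s])]
        outs.append(out)
    return outs


def linear_attention_recurrent(qs, ks, vs):
    """Same outputs via a fixed-size state S = sum_s k_s v_s^T (d_k x d_v)."""
    d_k, d_v = len(ks[0]), len(vs[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outs = []
    for q, k, v in zip(qs, ks, vs):
        # S <- S + k v^T: memory stays d_k x d_v no matter how long the sequence is.
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k[i] * v[j]
        # o_t = q_t^T S
        outs.append([sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return outs


qs = [[1.0, 0.5], [0.2, -0.3], [0.7, 0.1]]
ks = [[0.4, 1.0], [-0.6, 0.2], [0.3, 0.9]]
vs = [[1.0, 0.0, 2.0], [0.5, 1.5, -1.0], [2.0, 1.0, 0.0]]
par = linear_attention_parallel(qs, ks, vs)
rec = linear_attention_recurrent(qs, ks, vs)
```

Hardware-efficient kernels typically combine the two forms: they use the quadratic form inside a chunk and pass the recurrent state between chunks.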

📖GEMM/Tensor Cores/MMA/Parallel (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2018.03 | 🔥🔥[Tensor Core] NVIDIA Tensor Core Programmability, Performance & Precision(@KTH Royal etc) | [pdf] | | ⭐️ |
| 2021.05 | 🔥[Intra-SM Parallelism] Exploiting Intra-SM Parallelism in GPUs via Persistent and Elastic Blocks(@sjtu.edu.cn) | [pdf] | | ⭐️ |
| 2022.06 | [Microbenchmark] Dissecting Tensor Cores via Microbenchmarks: Latency, Throughput and Numeric Behaviors(@tue.nl etc) | [pdf] | [DissectingTensorCores] | ⭐️ |
| 2022.09 | 🔥🔥[FP8] FP8 FORMATS FOR DEEP LEARNING(@NVIDIA) | [pdf] | | ⭐️ |
| 2023.08 | 🔥[Tensor Cores] Reducing shared memory footprint to leverage high throughput on Tensor Cores and its flexible API extension library(@Tokyo Institute etc) | [pdf] | [wmma_extension] | ⭐️ |
| 2023.03 | 🔥🔥[cutlass/cute] Graphene: An IR for Optimized Tensor Computations on GPUs(@NVIDIA) | [pdf] | [cutlass] | ⭐️ |
| 2024.02 | [QUICK] QUICK: Quantization-aware Interleaving and Conflict-free Kernel for efficient LLM inference(@SqueezeBits Inc) | [pdf] | [QUICK] | ⭐️⭐️ |
| 2024.02 | [Tensor Parallel] TP-AWARE DEQUANTIZATION(@IBM T.J. Watson Research Center) | [pdf] | | ⭐️ |
| 2024.07 | 🔥🔥[flute] Fast Matrix Multiplications for Lookup Table-Quantized LLMs(@mit.edu etc) | [pdf] | [flute] | ⭐️⭐️ |
| 2024.08 | 🔥🔥[LUT TENSOR CORE] LUT TENSOR CORE: Lookup Table Enables Efficient Low-Bit LLM Inference Acceleration(@SJTU&PKU etc) | [pdf] | | ⭐️ |
| 2024.08 | 🔥🔥[MARLIN] MARLIN: Mixed-Precision Auto-Regressive Parallel Inference on Large Language Models(@ISTA) | [pdf] | [marlin] | ⭐️⭐️ |
| 2024.08 | 🔥🔥[SpMM] High Performance Unstructured SpMM Computation Using Tensor Cores(@ETH Zurich) | [pdf] | | ⭐️ |
| 2024.09 | 🔥🔥[TEE] Confidential Computing on nVIDIA H100 GPU: A Performance Benchmark Study(@phala.network) | [pdf] | | ⭐️ |
| 2024.09 | 🔥🔥[HiFloat8] Ascend HiFloat8 Format for Deep Learning(@Huawei) | [pdf] | | ⭐️ |
| 2024.09 | 🔥🔥[Tensor Cores] Efficient Arbitrary Precision Acceleration for Large Language Models on GPU Tensor Cores(@nju.edu.cn) | [pdf] | | ⭐️ |
| 2024.07 | 🔥🔥[Tensor Product] Acceleration of Tensor-Product Operations with Tensor Cores(@Heidelberg University) | [pdf] | | ⭐️ |
| 2024.12 | 🔥🔥[HADACORE] HADACORE: TENSOR CORE ACCELERATED HADAMARD TRANSFORM KERNEL(@Meta) | [pdf] | [hadamard_transform] | ⭐️ |
| 2024.10 | 🔥🔥[FLASH-ATTENTION RNG] Reducing the Cost of Dropout in Flash-Attention by Hiding RNG with GEMM(@Princeton University) | [pdf] | | ⭐️ |

📖VLM/Position Embed/Others (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|---|---|---|---|---|
| 2021.04 | 🔥[RoPE] ROFORMER: ENHANCED TRANSFORMER WITH ROTARY POSITION EMBEDDING(@Zhuiyi Technology Co., Ltd.) | [pdf] | [transformers] | ⭐️ |
| 2022.10 | [ByteTransformer] A High-Performance Transformer Boosted for Variable-Length Inputs(@ByteDance&NVIDIA) | [pdf] | [ByteTransformer] | ⭐️ |
| 2024.09 | 🔥[Inf-MLLM] Inf-MLLM: Efficient Streaming Inference of Multimodal Large Language Models on a Single GPU(@sjtu) | [pdf] | | ⭐️ |
| 2024.11 | 🔥[VL-CACHE] VL-CACHE: SPARSITY AND MODALITY-AWARE KV CACHE COMPRESSION FOR VISION-LANGUAGE MODEL INFERENCE ACCELERATION(@g.ucla.edu etc) | [pdf] | | ⭐️ |
| 2025.02 | 🔥[DynamicLLaVA] [ICLR2025] Dynamic-LLaVA: Efficient Multimodal Large Language Models via Dynamic Vision-language Context Sparsification(@ECNU, Xiaohongshu) | [pdf] | [DynamicLLaVA] | ⭐️⭐️ |
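
The RoPE entry encodes position by rotating each pair of query/key dimensions by an angle proportional to the token position, so the query-key dot product depends only on the relative offset between the two tokens. A minimal sketch using the paper's default base of 10000 and its interleaved pairing of dimensions (2i, 2i+1); the query and key vectors are arbitrary.

```python
import math


def apply_rope(x, pos, base=10000.0):
    """Rotate consecutive pairs (x[2i], x[2i+1]) by angle pos * base**(-2i/d)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even head dimension"
    out = []
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)
        a, b = x[2 * i], x[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        out += [a * c - b * s, a * s + b * c]
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]
# Both scores use a relative offset of 3, so they match despite different absolute positions.
s1 = dot(apply_rope(q, 5), apply_rope(k, 2))
s2 = dot(apply_rope(q, 105), apply_rope(k, 102))
```

Some implementations, including the Hugging Face transformers LLaMA code, pair dimension i with i + d/2 instead of interleaving; the two layouts differ only by a fixed permutation of the head dimensions.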

GNU General Public License v3.0

Welcome to star & submit a PR to this repo!


Alternative AI tools for Awesome-LLM-Inference

Similar Open Source Tools

LLM-Agents-Papers

A repository that lists papers related to Large Language Model (LLM) based agents. The repository covers various topics including survey, planning, feedback & reflection, memory mechanism, role playing, game playing, tool usage & human-agent interaction, benchmark & evaluation, environment & platform, agent framework, multi-agent system, and agent fine-tuning. It provides a comprehensive collection of research papers on LLM-based agents, exploring different aspects of AI agent architectures and applications.

Awesome-LWMs

Awesome Large Weather Models (LWMs) is a curated collection of articles and resources related to large weather models used in AI for Earth and AI for Science. It includes information on various cutting-edge weather forecasting models, benchmark datasets, and research papers. The repository serves as a hub for researchers and enthusiasts to explore the latest advancements in weather modeling and forecasting.

dive-into-llms

The 'Dive into Large Language Models' series programming practice tutorial is an extension of the 'Artificial Intelligence Security Technology' course lecture notes from Shanghai Jiao Tong University (Instructor: Zhang Zhuosheng). It aims to provide introductory programming references related to large models. Through simple practice, it helps students quickly grasp large models, better engage in course design, or academic research. The tutorial covers topics such as fine-tuning and deployment, prompt learning and thought chains, knowledge editing, model watermarking, jailbreak attacks, multimodal models, large model intelligent agents, and security. Disclaimer: The content is based on contributors' personal experiences, internet data, and accumulated research work, provided for reference only.

langfuse

Langfuse is a powerful tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can:

- **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse.
- **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards & data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse.
- **Test:** Track and test app behaviour before deploying a new version, test expected input/output pairs and benchmark performance before deploying, and track versions and releases in your application.

Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

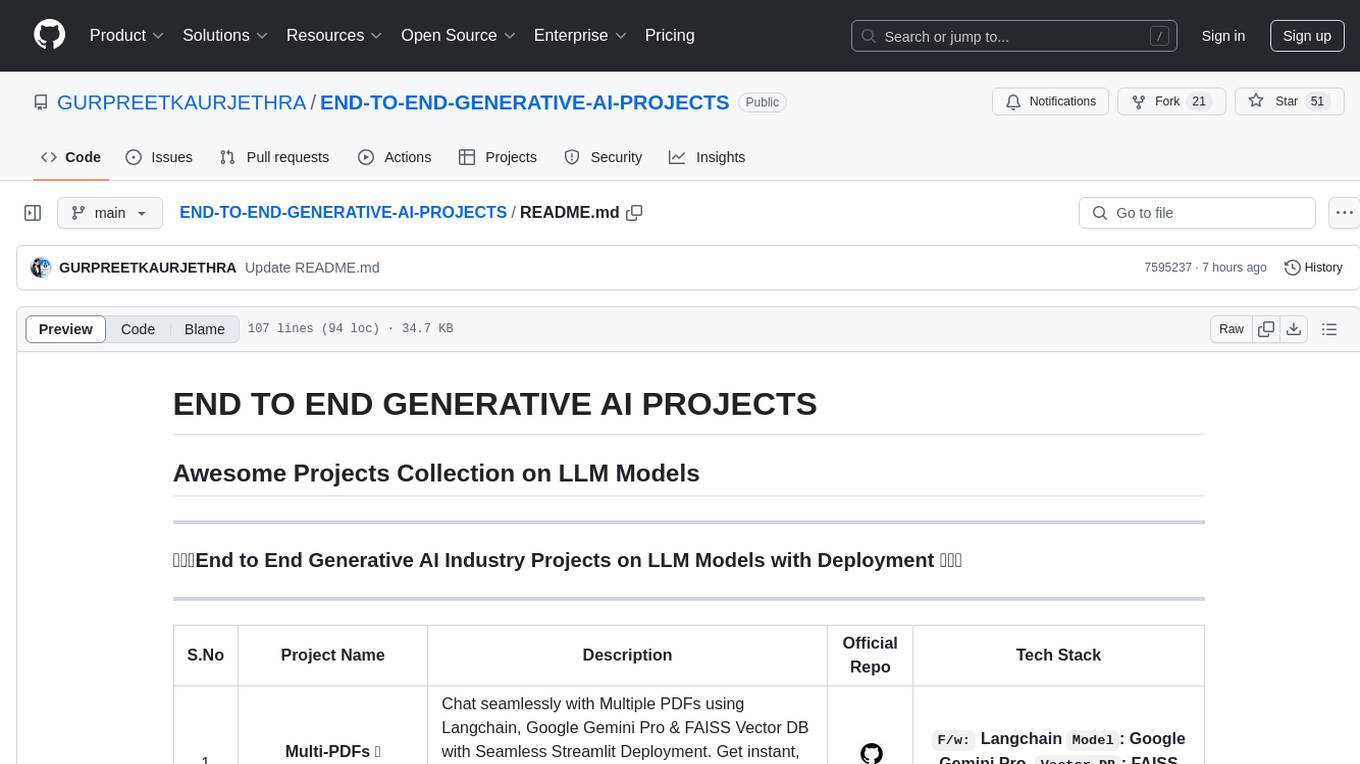

END-TO-END-GENERATIVE-AI-PROJECTS

The 'END TO END GENERATIVE AI PROJECTS' repository is a collection of awesome industry projects utilizing Large Language Models (LLM) for various tasks such as chat applications with PDFs, image to speech generation, video transcribing and summarizing, resume tracking, text to SQL conversion, invoice extraction, medical chatbot, financial stock analysis, and more. The projects showcase the deployment of LLM models like Google Gemini Pro, HuggingFace Models, OpenAI GPT, and technologies such as Langchain, Streamlit, LLaMA2, LLaMAindex, and more. The repository aims to provide end-to-end solutions for different AI applications.

Efficient-Multimodal-LLMs-Survey

Efficient Multimodal Large Language Models: A Survey provides a comprehensive review of efficient and lightweight Multimodal Large Language Models (MLLMs), focusing on model size reduction and cost efficiency for edge computing scenarios. The survey covers the timeline of efficient MLLMs, research on efficient structures and strategies, and their applications, while also discussing current limitations and future directions.

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

oumi

Oumi is an open-source platform for building state-of-the-art foundation models, offering tools for data preparation, training, evaluation, and deployment. It supports training and fine-tuning models with various parameters, working with text and multimodal models, synthesizing and curating training data, deploying models efficiently, evaluating models comprehensively, and running on different platforms. Oumi provides a consistent API, reliability, and flexibility for research purposes.

RLinf

RLinf is a flexible and scalable open-source infrastructure designed for post-training foundation models via reinforcement learning. It provides a robust backbone for next-generation training, supporting open-ended learning, continuous generalization, and limitless possibilities in intelligence development. The tool offers unique features like Macro-to-Micro Flow, flexible execution modes, auto-scheduling strategy, embodied agent support, and fast adaptation for mainstream VLA models. RLinf is fast with hybrid mode and automatic online scaling strategy, achieving significant throughput improvement and efficiency. It is also flexible and easy to use with multiple backend integrations, adaptive communication, and built-in support for popular RL methods. The roadmap includes system-level enhancements and application-level extensions to support various training scenarios and models. Users can get started with complete documentation, quickstart guides, key design principles, example gallery, advanced features, and guidelines for extending the framework. Contributions are welcome, and users are encouraged to cite the GitHub repository and acknowledge the broader open-source community.

LLMs-from-scratch-CN

This repository is a Chinese translation of the GitHub project 'LLMs-from-scratch', including detailed markdown notes and related Jupyter code. The translation process aims to maintain the accuracy of the original content while optimizing the language and expression to better suit Chinese learners' reading habits. The repository features detailed Chinese annotations for all Jupyter code, aiding users in practical implementation. It also provides various supplementary materials to expand knowledge. The project focuses on building Large Language Models (LLMs) from scratch, covering fundamental constructions like Transformer architecture, sequence modeling, and delving into deep learning models such as GPT and BERT. Each part of the project includes detailed code implementations and learning resources to help users construct LLMs from scratch and master their core technologies.

For similar tasks

maxtext

MaxText is a high-performance, highly scalable, open-source LLM written in pure Python/Jax and targeting Google Cloud TPUs and GPUs for training and inference. MaxText achieves high MFUs and scales from single host to very large clusters while staying simple and "optimization-free" thanks to the power of Jax and the XLA compiler. MaxText aims to be a launching off point for ambitious LLM projects both in research and production. We encourage users to start by experimenting with MaxText out of the box and then fork and modify MaxText to meet their needs.

swift

SWIFT (Scalable lightWeight Infrastructure for Fine-Tuning) supports training, inference, evaluation and deployment of nearly **200 LLMs and MLLMs** (multimodal large models). Developers can directly apply our framework to their own research and production environments to realize the complete workflow from model training and evaluation to application. In addition to supporting the lightweight training solutions provided by [PEFT](https://github.com/huggingface/peft), we also provide a complete **Adapters library** to support the latest training techniques such as NEFTune, LoRA+, LLaMA-PRO, etc. This adapter library can be used directly in your own custom workflow without our training scripts. To facilitate use by users unfamiliar with deep learning, we provide a Gradio web-ui for controlling training and inference, as well as accompanying deep learning courses and best practices for beginners. Additionally, we are expanding capabilities for other modalities. Currently, we support full-parameter training and LoRA training for AnimateDiff.

ipex-llm

IPEX-LLM is a PyTorch library for running Large Language Models (LLMs) on Intel CPUs and GPUs with very low latency. It provides seamless integration with various LLM frameworks and tools, including llama.cpp, ollama, Text-Generation-WebUI, HuggingFace transformers, and more. IPEX-LLM has been optimized and verified on over 50 LLM models, including LLaMA, Mistral, Mixtral, Gemma, LLaVA, Whisper, ChatGLM, Baichuan, Qwen, and RWKV. It supports a range of low-bit inference formats, including INT4, FP8, FP4, INT8, INT2, FP16, and BF16, as well as finetuning capabilities for LoRA, QLoRA, DPO, QA-LoRA, and ReLoRA. IPEX-LLM is actively maintained and updated with new features and optimizations, making it a valuable tool for researchers, developers, and anyone interested in exploring and utilizing LLMs.

llm-twin-course

The LLM Twin Course is a free, end-to-end framework for building production-ready LLM systems. It teaches you how to design, train, and deploy a production-ready LLM twin of yourself powered by LLMs, vector DBs, and LLMOps good practices. The course is split into 11 hands-on written lessons and the open-source code you can access on GitHub. You can read everything and try out the code at your own pace.

lingo

Lingo is a lightweight ML model proxy that runs on Kubernetes, allowing you to run text-completion and embedding servers without changing OpenAI client code. It supports serving OSS LLMs, is compatible with the OpenAI API, is plug-and-play with messaging systems, scales from zero based on load, and has zero dependencies. It is namespaced and needs no cluster privileges.

Awesome-LLM-Compression

Awesome LLM compression research papers and tools to accelerate LLM training and inference.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It has used LLMs to generate valid fuzz targets (ones that produce a non-zero coverage increase) for 160 C/C++ projects, with a maximum line-coverage increase of 29% over the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering tasks from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, removes the need to tune deployment parameters for the GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito largely simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming work, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any performance degradation introduced by future changes.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.