chatluna

多平台模型接入,可扩展,多种输出格式,提供大语言模型聊天服务的插件 | A bot plugin for LLM chat with multi-model integration, extensibility, and various output formats

Stars: 396

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

README:

| 预设 | Agent 模式 & 流式输出 | 图像渲染输出 |

|---|---|---|

|

|

|

- 🚀 高度可扩展(基于 LangChain 和 Koishi API)

- 🎭 自定义对话预设

- 🛡️ 速率限制和黑名单系统

- 🎨 多格式输出(文本、语音、图像、混合)

- 🧠 上下文感知和 长期记忆

- 🔀 三种模式:聊天、浏览、Agent

- 🔒 内容审核(通过 Koishi 审核服务)

- [x] 基于房间的对话系统

- [x] 回复内容审核 (基于其他插件提供的 censor 服务)

- [x] 语音输出支持(即文字转语音,基于 initialencounter 佬的 vits服务)

- [x] 图片渲染回复

- [x] 接入更多模型/平台

- [x] 预设系统

- [x]

导入或导出会话记录(实际未完成,已放弃支持) - [x] 重构到 v1 版本

- [x] 流式响应支持

- [x] 图片多模态输入支持

- [x] MCP 协议客户端支持

- [x] i18n 本地化支持

我们可在 Koishi 下直接安装本插件使用基础功能而无需额外配置。

阅读 此文档 了解更多。

我们目前支持以下模型/平台:

| 模型/平台 | 接入方式 | 特性 | 注意事项 |

|---|---|---|---|

| OpenAI | 本地 Client,官方 API 接入 | 可自定义人格,支持插件/浏览模式等聊天模式 | API 接入需要付费 |

| Azure OpenAI | 本地 Client,官方 API 接入 | 可自定义人格,支持插件/浏览模式等聊天模式 | API 接入需要付费 |

| Google Gemini | 本地 Client,官方 API 接入 | 速度快,性能超越 GPT-3.5 | 需要有 Gemini 访问权限账号,可能收费 |

| Claude API | 本地 Client, 官方 API 接入 | 超大上下文,大部分情况下能超过 GPT 3.5,需要 API KEY,收费 | 可能较贵 |

| Deepseek | 本地 Client,官方 API 接入 | 国产知名模型 | 实测效果逼近或超越 gpt-4o |

| 通义千问 | 本地 Client,官方 API 接入 | 阿里出品国产模型,有开源版本,有免费额度 | 实测效果比智谱略好 |

| 豆包 | 本地 Client,官方 API 接入 | 字节出品模型,送免费额度 | 实测效果比智谱略好 |

| 智谱 | 本地 Client,官方 API 接入 | ChatGLM,新人注册可获取免费 Token 额度 | 实测效果比讯飞星火略好 |

| 讯飞星火 | 本地 Client,官方 API 接入 | 国产模型,新人注册可获取免费 Token 额度 | 实测效果约等于 GPT 3.5 |

| 文心一言 | 本地 Client,官方 API 接入 | 百度出品系列模型模型 | 实测效果比讯飞星火略差 |

| 混元大模型 | 本地 Client,官方 API 接入 | 腾讯出品系列大模型 | 实测效果比文心一言好 |

| Ollama | 本地 Client,自搭建 API 接入 | 知名开源模型合集,支持 CPU / GPU 混合部署,可本地搭建 | 需要自己搭建后端 API,要求一定的配置 |

| RWKV | 本地 Client,自搭建 API 接入 | 知名开源模型,可本地搭建 | 需要自己搭建后端 API,要求一定的配置 |

为模型提供网络搜索能力 我们支持:

- Google (API)

- Bing (API / Web)

- DuckDuckGO (Lite)

- Tavily (API)

从 1.0.0-alpha.10 版本开始,我们使用更加可定制化的预设。新的人格预设使用 yaml 做为配置文件。

你可以点这里来查看我们默认附带的人格文件:catgirl.yml

我们默认的预设文件夹路径为 你当前运行插件的 koishi 目录的路径+/data/chathub/presets 。

所有的预设文件都是从上面的文件夹上加载的。因此你可以自由添加和编辑预设文件在这个文件夹下,然后使用命令来切换人格预设。

如需了解更多,可查看此文档。

在任意 Koishi 模版项目上运行下列指令来克隆 ChatLuna:

# yarn

yarn clone ChatLunaLab/chatluna

# npm

npm run clone ChatLunaLab/chatluna可将上面 ChatLunaLab/chatluna 替换成你自己 Fork 后的项目地址。

编辑模版项目根目录下的 tsconfig.json 文件,在 compilerOptions.paths 中添加 ChatLuna 项目路径。

{

"extends": "./tsconfig.base",

"compilerOptions": {

"baseUrl": ".",

"paths": {

"koishi-plugin-chatluna-*": ["external/chatluna/packages/*/src"]

}

}

}由于项目本身比较复杂,初始使用必须构建一次。

# yarn

yarn workspace @root/chatluna-koishi build

# npm

npm run build -w @root/chatluna-koishi完成!现在即可在根项目中使用 yarn dev 或 npm run dev 启动模版项目并二次开发 ChatLuna。

虽然 Koishi 支持模块热替换(hmr),但本项目可能并未完全兼容。

如果你在使用 hmr 二次开发本项目时出现了 Bug,请在 Issue 中提出,并按上面步骤重新构建项目并重启 Koishi 以尝试修复。

目前 ChatLuna 项目组产能极为稀缺,没有更多产能来完成下面的目标:

- [ ] Web UI

- [ ] Http Server

- [x] Project Documentation

欢迎提交 Pull Request 或进行讨论,我们非常欢迎您的贡献!

本项目由ChatLunaLab 开发。

ChatLuna(下称本项目) 是一个基于大型语言模型的对话机器人框架。我们致力于与开源社区合作,推动大模型技术的发展。我们强烈呼吁开发者和其他使用者遵守开源协议,确保不将本项目(以及社区推动的基于本项目的其他衍生产品)的框架和代码以及相关衍生物用于可能对国家和社会造成危害的任何目的,以及未经过安全评估和备案的服务。

本项目不直接提供任何生成式人工智能服务的支持,需要使用者自行从提供了生产式人工智能服务的组织或个人获取使用的算法 API。

如果您使用了本项目,请您遵循当地地区的法律法规,使用在当地地区内可用的生产式人工智能服务算法。

本项目不对算法生成的结果负责,所有结果和操作均由使用者自行负责。

本项目的相关信息存储均由用户自行配置来源,项目本身不提供直接的信息存储。

本项目不承担使用者导致的数据安全、舆情风险或发生任何模型被误导、滥用、传播、不当利用而产生的风险和责任。

本项目在编写时也参考了其他的开源项目,特别感谢以下项目:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for chatluna

Similar Open Source Tools

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

llamabot

LlamaBot is a Pythonic bot interface to Large Language Models (LLMs), providing an easy way to experiment with LLMs in Jupyter notebooks and build Python apps utilizing LLMs. It supports all models available in LiteLLM. Users can access LLMs either through local models with Ollama or by using API providers like OpenAI and Mistral. LlamaBot offers different bot interfaces like SimpleBot, ChatBot, QueryBot, and ImageBot for various tasks such as rephrasing text, maintaining chat history, querying documents, and generating images. The tool also includes CLI demos showcasing its capabilities and supports contributions for new features and bug reports from the community.

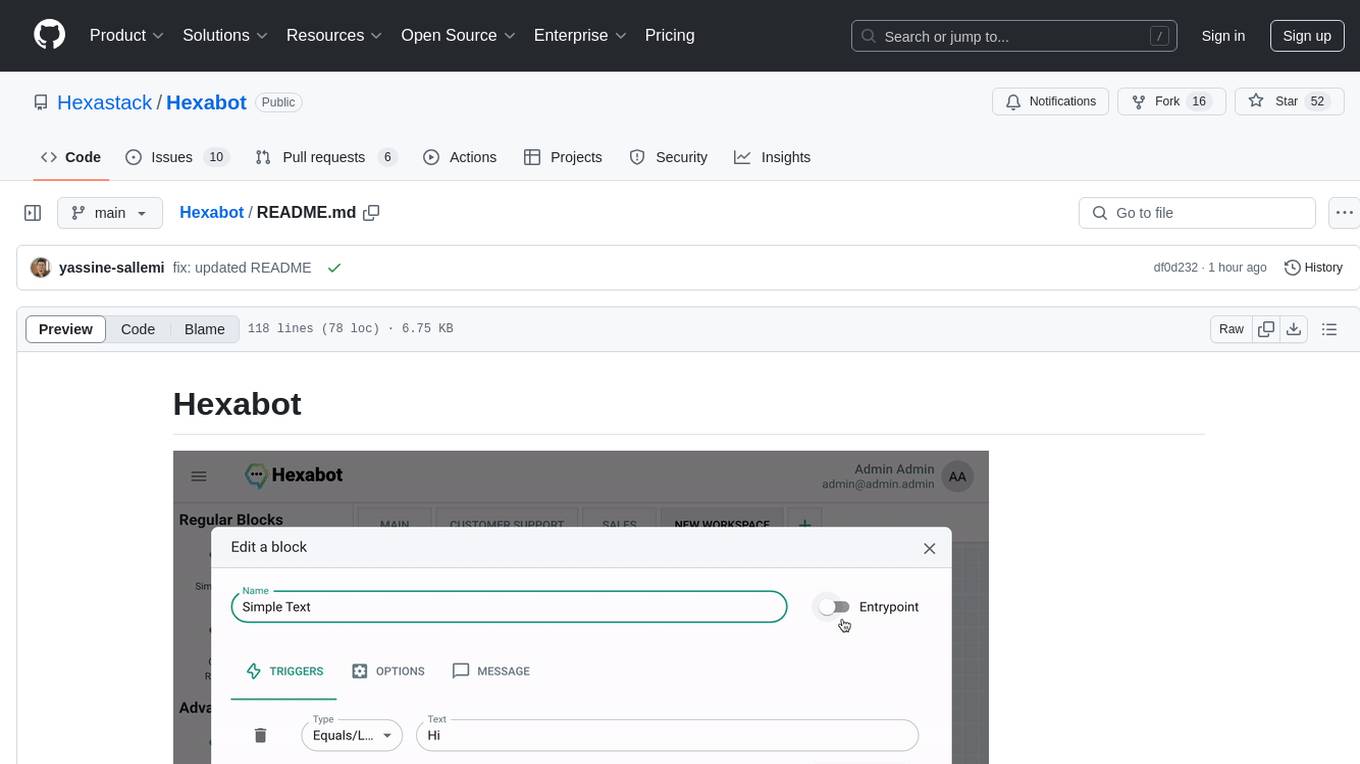

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

nekro-agent

Nekro Agent is an AI chat plugin and proxy execution bot that is highly scalable, offers high freedom, and has minimal deployment requirements. It features context-aware chat for group/private chats, custom character settings, sandboxed execution environment, interactive image resource handling, customizable extension development interface, easy deployment with docker-compose, integration with Stable Diffusion for AI drawing capabilities, support for various file types interaction, hot configuration updates and command control, native multimodal understanding, visual application management control panel, CoT (Chain of Thought) support, self-triggered timers and holiday greetings, event notification understanding, and more. It allows for third-party extensions and AI-generated extensions, and includes features like automatic context trigger based on LLM, and a variety of basic commands for bot administrators.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

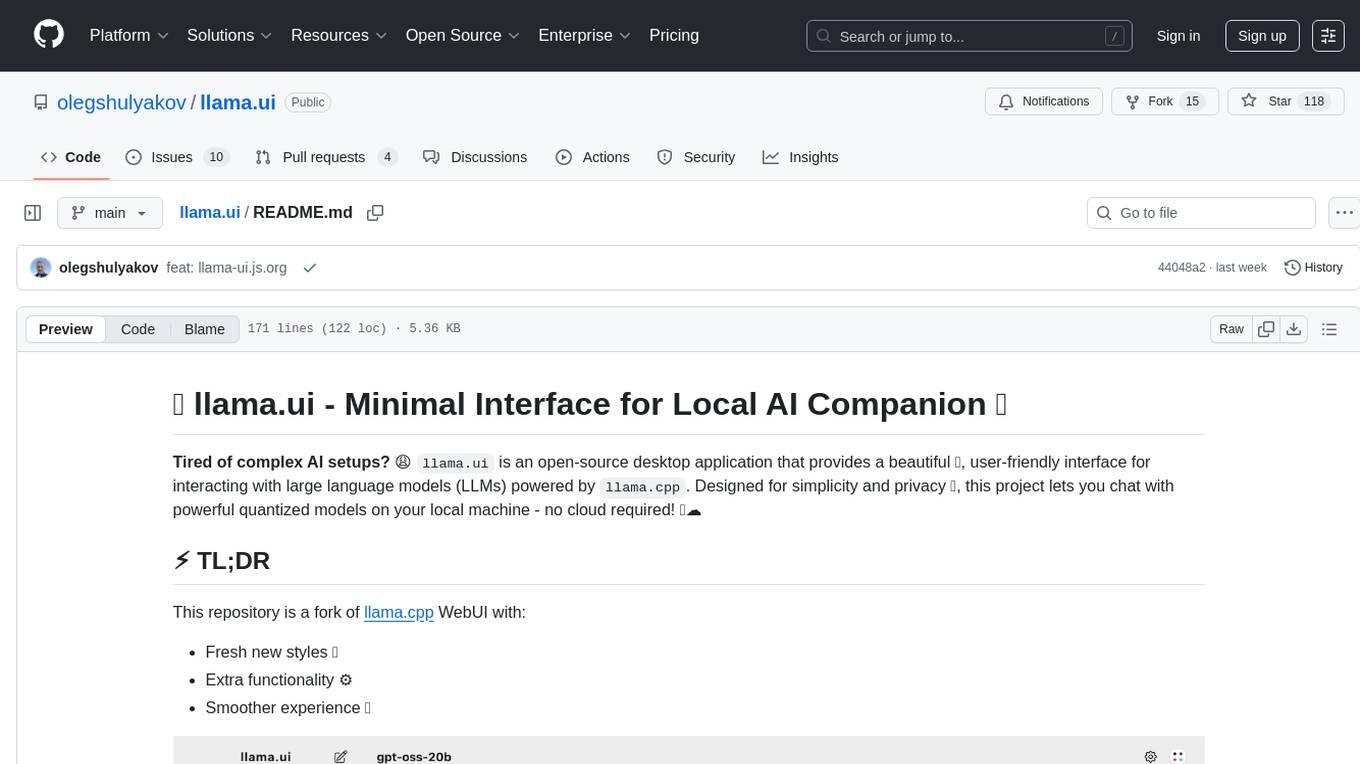

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

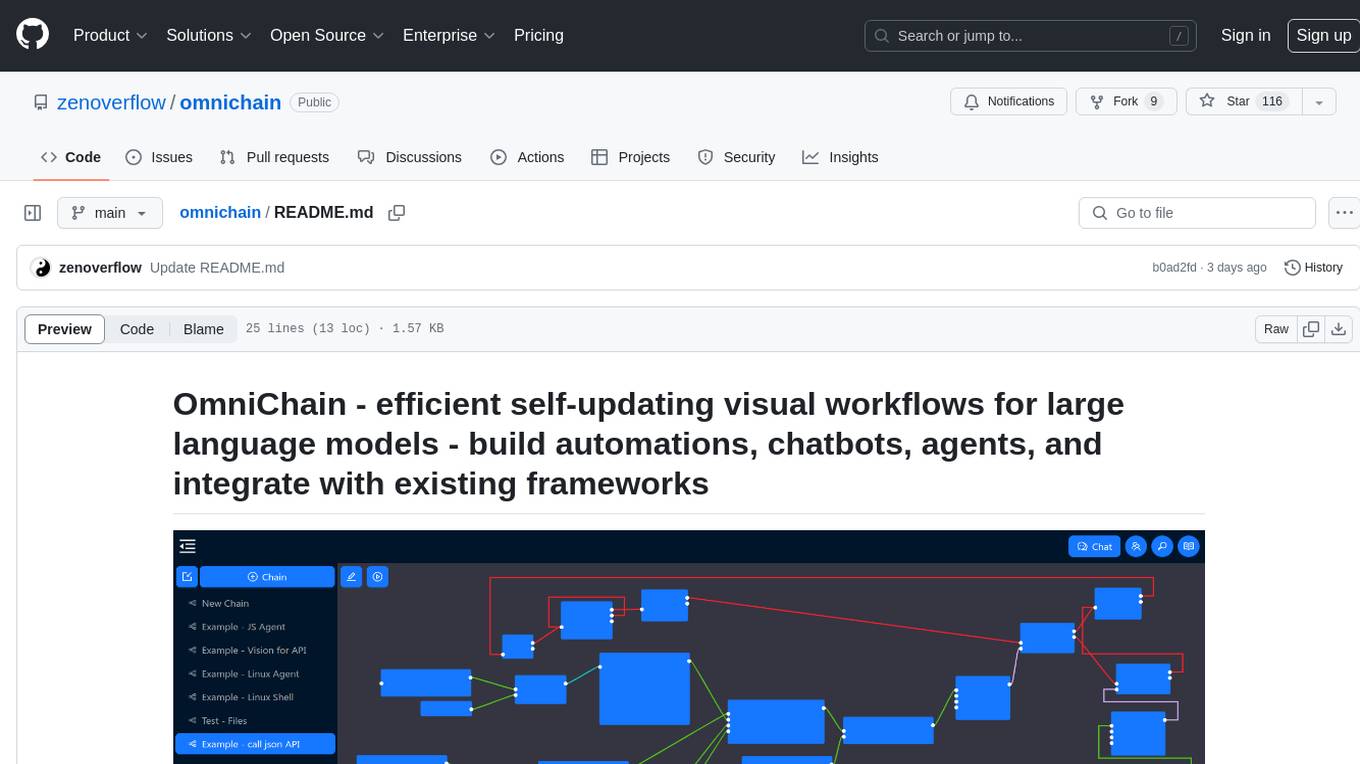

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It offers deployment with minimal dependencies, supports multiple tools like Terminal, Browser, File, Web Search, and messaging tools, allocates separate sandboxes for tasks, manages session history, supports stopping and interrupting conversations, file upload and download, and is multilingual. The system also provides user login and authentication. The project primarily relies on Docker for development and deployment, with model capability requirements and recommended Deepseek and GPT models.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides unified API for generation, context-aware AI features, evaluation of AI workflow, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

vibe

Vibe Design System is a collection of packages for React.js development, providing components, styles, and guidelines to streamline the development process and enhance user experience. It includes a Core component library, Icons library, Testing utilities, Codemods, and more. The system also features an MCP server for intelligent assistance with component APIs, usage examples, icons, and best practices. Vibe 2 is no longer actively maintained, with users encouraged to upgrade to Vibe 3 for the latest improvements and ongoing support.

For similar tasks

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.