burpference

A research project to add some brrrrrr to Burp

Stars: 92

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

README:

Experimenting with yarrr' Burp Proxy tab going brrrrrrrrrrrrr.

"burpference" started as a research idea exploring offensive agent capabilities and is a fun take on Burp Suite and running inference. The extension is open-source and designed to capture in-scope HTTP requests and responses from Burp's proxy history and ship them to a remote LLM API in JSON format. It takes a flexible approach: you can configure custom system prompts, store API keys, select remote hosts from numerous model providers, and create your own API configuration. The idea is for an LLM to act as an agent in an offensive web application engagement to leverage your skills and surface findings and lingering vulnerabilities. Because you can create your own configuration and model provider, you can also host models locally via Ollama to avoid potentially high inference costs, network delays, or rate limits.

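As a rough illustration of the flow described above, the sketch below packages one captured request/response pair into a JSON body alongside a system prompt. The `build_payload` helper, the field names (`model`, `messages`, `role`, `content`), the model name, and the sample prompt are all assumptions modelled on common chat-style APIs, not burpference's actual schema; each provider configuration defines its own.

```python
import json


def build_payload(system_prompt, request_text, response_text, model="example-model"):
    """Bundle one captured HTTP exchange into a chat-style JSON body.

    Hypothetical shape: real providers differ, so treat the keys as placeholders.
    """
    exchange = {"request": request_text, "response": response_text}
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": json.dumps(exchange)},
        ],
    }
    return json.dumps(body)


payload = build_payload(
    "You are a web application security analyst.",
    "GET /account?id=1 HTTP/1.1\r\nHost: example.test\r\n\r\n",
    "HTTP/1.1 200 OK\r\nContent-Type: application/json\r\n\r\n{\"id\": 1}",
)
print(payload)
```

Serializing the exchange as a single user message keeps the sketch provider-agnostic; a real integration would follow whatever body format the configured endpoint expects.
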
Some key features:

- Automated Response Capture: Burp Suite acts as your client monitor, automatically capturing responses that fall within your defined scope. This extension listens for, captures, and processes these details with an offensive-focused agent.

- API Integration: Once request and response streams are captured, they are packaged and forwarded to your configured API endpoint in JSON format, including any necessary system-level prompts or authentication tokens.
  - Only in-scope items are sent, optimizing resource usage and avoiding unnecessary API calls.
  - By default, certain MIME types are excluded.
  - Color-coded tabs display `critical/high/medium/low/informational` findings from your model for easy visualization.

- Comprehensive Logging: A logging system allows you to review intercepted responses, API requests sent, and replies received, all clearly displayed for analysis.
  - A clean table interface displaying all logs, intercepted responses, API calls, and status codes for comprehensive engagement tracking.
  - Stores inference logs in both the "Inference Logger" tab as a live preview and a timestamped file in the /logs directory.

- Native Burp Reporting: burpference's system prompt instructs the model to assess the severity level of each finding, which is color-coded (a heatmap related to the severity level) in the extension tab.
  - Additionally, burpference "findings" are created as issues in the Burp Scanner navigation bar, available across all tabs in the Burp UI.

- Flexible Configuration: Customize system prompts, API keys, or remote hosts as needed. Use your own configuration files for seamless integration with your workflow.
  - Supports custom configurations, allowing you to load and switch between system prompts, API keys, and remote hosts.
  - Several examples are provided in the repository, and contributions for additional provider plugins are welcome.
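
Two of the points above, skipping excluded MIME types and mapping a model reply to a severity color, can be sketched roughly as follows. This is a minimal illustration rather than the extension's own logic: the excluded prefixes, the color names, and both helper functions are hypothetical.

```python
import re

# Hypothetical exclusion list; the extension's real defaults may differ.
EXCLUDED_MIME_PREFIXES = ("image/", "font/", "video/", "audio/")

# Ordered from most to least severe; the colors are illustrative only.
SEVERITY_COLORS = [
    ("critical", "red"),
    ("high", "orange"),
    ("medium", "yellow"),
    ("low", "green"),
    ("informational", "gray"),
]


def should_send(in_scope, content_type):
    """Forward only in-scope traffic whose MIME type is not excluded."""
    if not in_scope:
        return False
    mime = (content_type or "").split(";")[0].strip().lower()
    return not mime.startswith(EXCLUDED_MIME_PREFIXES)


def highest_severity(model_reply):
    """Return (severity, color) for the most severe label found, else None."""
    text = model_reply.lower()
    for level, color in SEVERITY_COLORS:
        if re.search(r"\b" + level + r"\b", text):
            return level, color
    return None
```

Checking the levels from most to least severe means a reply that mentions several findings is colored by its worst one.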

So grab yer compass, hoist the mainsail, and let burpference be yer guide as ye plunder the seven seas of HTTP traffic! Yarrr'!

Before using Burpference, ensure you have the following:

- Due to its awesomeness, burpference may require higher system resources to run optimally, especially if using local models. Trust the process and make the machines go brrrrrrrrrrrrr!

- Installed Burp Suite (Community or Professional edition).

- Downloaded and set up the Jython standalone `.jar` file (a Python interpreter compatible with Java) to run Python-based extensions in Burp Suite.
  - You do not need a Python 2.x runtime in your environment for this to work.

- The `registerExtenderCallbacks` method reads a configuration file specific to the remote endpoint's input requirements. Ensure this exists in your environment and that Burp has the necessary permissions to access its location on the filesystem.
  - Important: as Burp Suite cannot read from a filesystem's `os` environment, you will need to explicitly include API key values in the configuration `.json` files per-provider.
  - If you intend to fork or contribute to burpference, ensure that you have excluded the files from git tracking via `.gitignore`.
  - There's also a pre-commit hook in the repo as an additional safety net. Install the pre-commit hooks to enable it.

- Set up relevant directory permissions for burpference to create log files: `chmod -R 755 logs configs`
  - In some cases when loading the extension you may experience directory write-permission issues, so it's recommended to restart Burp Suite after running the above.

- Ollama installed locally if using this provider plugin, along with the example config and the model running locally, e.g. `ollama run mistral-small` (see the model docs).
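
Since API keys have to be written into the per-provider `.json` files rather than read from the environment, a loader can fail early when a key looks like an unexpanded variable. The sketch below assumes a hypothetical schema (`host`, `model`, `headers`) and a hypothetical `load_config` helper; the example files shipped in the repository are the authoritative reference for the real format. The host shown is Ollama's default local chat endpoint.

```python
import json
import os
import tempfile

REQUIRED_KEYS = ("host", "model", "headers")  # hypothetical schema


def load_config(path):
    """Read a provider config and fail early on obvious mistakes."""
    with open(path) as handle:
        config = json.load(handle)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError("config is missing keys: " + ", ".join(missing))
    auth = config["headers"].get("Authorization", "")
    # Environment variables are not expanded, so a placeholder would be sent verbatim.
    if "$" in auth:
        raise ValueError("Authorization looks like an unexpanded variable")
    return config


# Illustrative local-model config; no API key is needed for a local Ollama host.
example = {
    "host": "http://localhost:11434/api/chat",
    "model": "mistral-small",
    "headers": {"Content-Type": "application/json"},
}

path = os.path.join(tempfile.mkdtemp(), "ollama_example.json")
with open(path, "w") as handle:
    json.dump(example, handle)

config = load_config(path)
print(config["host"])
```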

If Burp Suite is not already installed, download it from: Burp Suite Community/Professional

Jython enables Burp Suite to run Python-based extensions. You will need to download and configure it within Burp Suite.

- Go to the Jython Downloads Page.

- Download the standalone Jython `.jar` file (e.g., `jython-standalone-2.7.4.jar`).

- Open Burp Suite.

- Go to the `Extensions` tab in Burp Suite.

- Under the `Options` tab, scroll down to the Python Environment section.

- Click Select File, and choose the `jython-standalone-2.7.4.jar` file you just downloaded.

- Click Apply to load the Jython environment into Burp Suite.

Download the latest supported release from the repo, unzip it, and add it as a Python-based extension in Burp Suite. Given how the current code structures the logs and configs, it's recommended to save this in a ~/git directory.

- Open Burp Suite.

- Navigate to the Extensions tab.

- Click on Add to install a new extension.

- In the dialog box:
  - Extension Type: Choose Python and select the `burpference/burpference.py` file. This instructs Burp Suite to initialize the extension by invoking the `registerExtenderCallbacks` method. Click Next and the extension will be loaded. 🚀
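
For readers new to Python extensions in Burp, the entry point mentioned above looks roughly like this. It is a generic skeleton against Burp's legacy Extender API, not burpference's source: the extension name and the `handle_exchange` hook are placeholders, and using an HTTP listener to observe traffic is an assumption made for illustration. The code sticks to syntax that Jython 2.7 accepts.

```python
try:
    # Available only inside Burp Suite's Jython runtime.
    from burp import IBurpExtender, IHttpListener
except ImportError:
    # Stand-ins so the sketch can be read and exercised outside Burp.
    class IBurpExtender(object):
        pass

    class IHttpListener(object):
        pass


class BurpExtender(IBurpExtender, IHttpListener):
    def registerExtenderCallbacks(self, callbacks):
        # Burp calls this once when the extension is loaded.
        self._callbacks = callbacks
        self._helpers = callbacks.getHelpers()
        callbacks.setExtensionName("example-extension")  # placeholder name
        callbacks.registerHttpListener(self)

    def processHttpMessage(self, toolFlag, messageIsRequest, messageInfo):
        # Act on responses only, and only for in-scope URLs.
        if messageIsRequest:
            return
        url = self._helpers.analyzeRequest(messageInfo).getUrl()
        if self._callbacks.isInScope(url):
            self.handle_exchange(messageInfo)

    def handle_exchange(self, messageInfo):
        # Hypothetical hook: a capture would be queued for inference here.
        pass
```

Burp looks for a class named `BurpExtender` in the selected file, which is why the class name is fixed even in a sketch.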

If you prefer to build from source, clone the repo and follow the steps above:

- Download or clone the Burpference project from GitHub:

git clone https://github.com/dreadnode/burpference.git

Head over to the configuration docs!

We also recommend setting up a custom hotkey in Burp to save clicks.

The longer-term roadmap includes a potential Kotlin-based successor to burpference (mainly due to the limitations of Jython with the Extender API), or alternatively a Kotlin-based complement to it.

The bullets below are cool ideas for the repo, either planned for a later stage or still in active development.

- Scanner
  - An additional custom one-click "scanner" tab which scans an API target/schema with a selected model and reports findings/payloads and PoCs.

- Conversations
  - Enhanced conversation turns with the model to reflect turns for both HTTP requests and responses to build context.

- Prompt Tuning:
  - Modularize a centralized source of prompts sent to all models.
  - Grounding and context: Equip the model with context, providing links to OpenAPI schemas and developer documentation.

- Offensive Agents and Tool Use
  - Equip agents with burpference results detail and tool use for the weaponization and exploitation phases.

- Optimization:
  - Extend functionality of selecting multiple configurations and sending results across multiple endpoints for optimal results.
  - Introduce judge reward systems for findings.

Known issues reported so far are tracked as issues in the repo.

We welcome any issues or contributions to the project, share the treasure! If you like our project, please feel free to drop us some love <3

By watching the repo, you can also be notified of any upcoming releases.


Alternative AI tools for burpference

Similar Open Source Tools


Local-Multimodal-AI-Chat

Local Multimodal AI Chat is a multimodal chat application that integrates various AI models to manage audio, images, and PDFs seamlessly within a single interface. It offers local model processing with Ollama for data privacy, integration with OpenAI API for broader AI capabilities, audio chatting with Whisper AI for accurate voice interpretation, and PDF chatting with Chroma DB for efficient PDF interactions. The application is designed for AI enthusiasts and developers seeking a comprehensive solution for multimodal AI technologies.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

coral-cloud

Coral Cloud Resorts is a sample hospitality application that showcases Data Cloud, Agents, and Prompts. It provides highly personalized guest experiences through smart automation, content generation, and summarization. The app requires licenses for Data Cloud, Agents, Prompt Builder, and Einstein for Sales. Users can activate features, deploy metadata, assign permission sets, import sample data, and troubleshoot common issues. Additionally, the repository offers integration with modern web development tools like Prettier, ESLint, and pre-commit hooks for code formatting and linting.

AIOLists

AIOLists is a stateless open source list management addon for Stremio that allows users to import and manage lists from various sources in one place. It offers unified search, metadata customization, Trakt integration, MDBList integration, external lists import, list sorting, customization options, watchlist updates, RPDB support, genre filtering, discovery lists, and shareable configurations. The addon aims to enhance the list management experience for Stremio users by providing a comprehensive set of features and functionalities.

aiCoder

aiCoder is an AI-powered tool designed to streamline the coding process by automating repetitive tasks, providing intelligent code suggestions, and facilitating the integration of new features into existing codebases. It offers a chat interface for natural language interactions, methods and stubs lists for code modification, and settings customization for project-specific prompts. Users can leverage aiCoder to enhance code quality, focus on higher-level design, and save time during development.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

langdrive

LangDrive is an open-source AI library that simplifies training, deploying, and querying open-source large language models (LLMs) using private data. It supports data ingestion, fine-tuning, and deployment via a command-line interface, YAML file, or API, with a quick, easy setup. Users can build AI applications such as question/answering systems, chatbots, AI agents, and content generators. The library provides features like data connectors for ingestion, fine-tuning of LLMs, deployment to Hugging Face hub, inference querying, data utilities for CRUD operations, and APIs for model access. LangDrive is designed to streamline the process of working with LLMs and making AI development more accessible.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

your-source-to-prompt.html

Your Source to Prompt is a single HTML file tool that allows users to easily select code files and combine them into a single text output. It runs entirely in the browser, ensuring local and secure operation without any external dependencies. The tool offers features like preset management, efficient file selection, context size awareness, hierarchical structure preview, minification, and user-friendly UI with dark mode. It aims to simplify the process of preparing code for Large Language Models (LLMs) by providing a well-structured prompt context.

prompty

Prompty is an asset class and format for LLM prompts designed to enhance observability, understandability, and portability for developers. The primary goal is to accelerate the developer inner loop. This repository contains the Prompty Language Specification and a documentation site. The Visual Studio Code extension offers a prompt playground to streamline the prompt engineering process.

ComfyUI-Tara-LLM-Integration

Tara is a powerful node for ComfyUI that integrates Large Language Models (LLMs) to enhance and automate workflow processes. With Tara, you can create complex, intelligent workflows that refine and generate content, manage API keys, and seamlessly integrate various LLMs into your projects. It comprises nodes for handling OpenAI-compatible APIs, saving and loading API keys, composing multiple texts, and using predefined templates for OpenAI and Groq. Tara supports OpenAI and Groq models with plans to expand support to together.ai and Replicate. Users can install Tara via Git URL or ComfyUI Manager and utilize it for tasks like input guidance, saving and loading API keys, and generating text suitable for chaining in workflows.

For similar tasks


YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

obsidian-smart-composer

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

langroid-examples

Langroid-examples is a repository containing examples of using the Langroid Multi-Agent Programming framework to build LLM applications. It provides a collection of scripts and instructions for setting up the environment, working with local LLMs, using OpenAI LLMs, and running various examples. The repository also includes optional setup instructions for integrating with Qdrant, Redis, Momento, GitHub, and Google Custom Search API. Users can explore different scenarios and functionalities of Langroid through the provided examples and documentation.

copilot

OpenCopilot is a tool that allows users to create their own AI copilot for their products. It integrates with APIs to execute calls as needed, using LLMs to determine the appropriate endpoint and payload. Users can define API actions, validate schemas, and integrate a user-friendly chat bubble into their SaaS app. The tool is capable of calling APIs, transforming responses, and populating request fields based on context. It is not suitable for handling large APIs without JSON transformers. Users can teach the copilot via flows and embed it in their app with minimal code.

DeepPavlov

DeepPavlov is an open-source conversational AI library built on PyTorch. It is designed for the development of production-ready chatbots and complex conversational systems, as well as for research in the area of NLP and dialog systems. The library offers a wide range of models for tasks such as Named Entity Recognition, Intent/Sentence Classification, Question Answering, Sentence Similarity/Ranking, Syntactic Parsing, and more. DeepPavlov also provides embeddings like BERT, ELMo, and FastText for various languages, along with AutoML capabilities and integrations with REST API, Socket API, and Amazon AWS.

For similar jobs

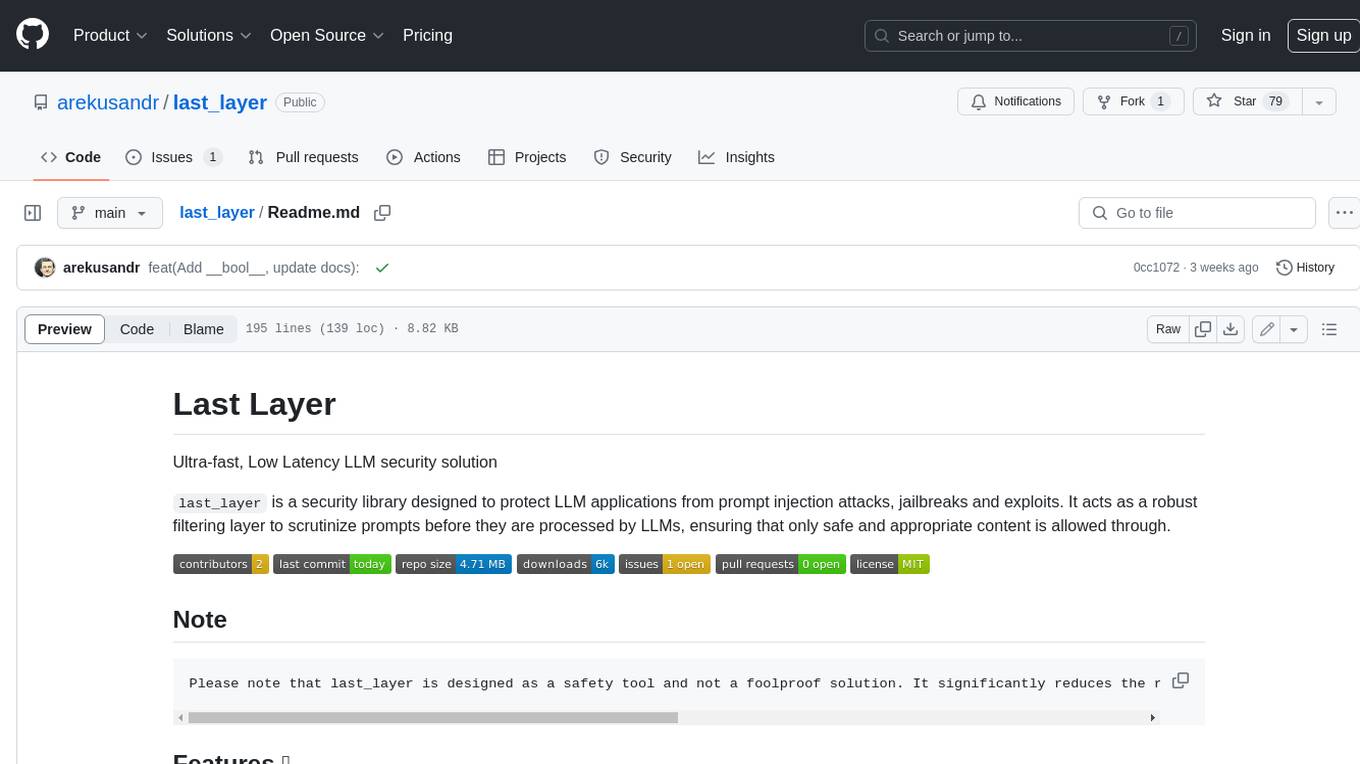

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

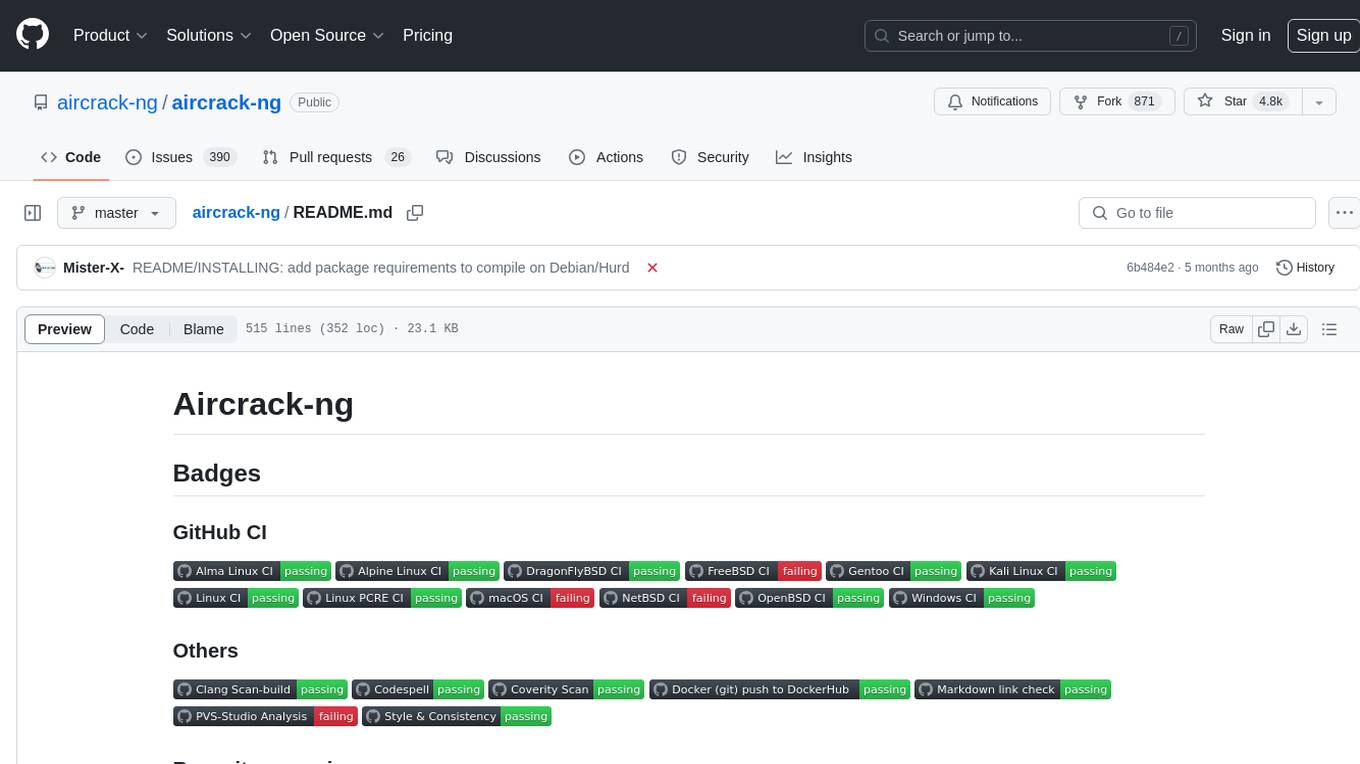

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

reverse-engineering-assistant

ReVA (Reverse Engineering Assistant) is a project aimed at building a disassembler agnostic AI assistant for reverse engineering tasks. It utilizes a tool-driven approach, providing small tools to the user to empower them in completing complex tasks. The assistant is designed to accept various inputs, guide the user in correcting mistakes, and provide additional context to encourage exploration. Users can ask questions, perform tasks like decompilation, class diagram generation, variable renaming, and more. ReVA supports different language models for online and local inference, with easy configuration options. The workflow involves opening the RE tool and program, then starting a chat session to interact with the assistant. Installation includes setting up the Python component, running the chat tool, and configuring the Ghidra extension for seamless integration. ReVA aims to enhance the reverse engineering process by breaking down actions into small parts, including the user's thoughts in the output, and providing support for monitoring and adjusting prompts.

AutoAudit

AutoAudit is an open-source large language model specifically designed for the field of network security. It aims to provide powerful natural language processing capabilities for security auditing and network defense, including analyzing malicious code, detecting network attacks, and predicting security vulnerabilities. By coupling AutoAudit with ClamAV, a security scanning platform has been created for practical security audit applications. The tool is intended to assist security professionals with accurate and fast analysis and predictions to combat evolving network threats.

aif

Arno's Iptables Firewall (AIF) is a single- & multi-homed firewall script with DSL/ADSL support. It is a free software distributed under the GNU GPL License. The script provides a comprehensive set of configuration files and plugins for setting up and managing firewall rules, including support for NAT, load balancing, and multirouting. It offers detailed instructions for installation and configuration, emphasizing security best practices and caution when modifying settings. The script is designed to protect against hostile attacks by blocking all incoming traffic by default and allowing users to configure specific rules for open ports and network interfaces.

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers related to LLM Security Application. The repository includes papers sorted by conference name and published year, covering topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on listed conferences and journals related to security, networking, software engineering, and cryptography. The papers cover a wide range of topics including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, fuzzing, and more.

DeGPT

DeGPT is a tool designed to optimize decompiler output using Large Language Models (LLM). It requires manual installation of specific packages and setting up API key for OpenAI. The tool provides functionality to perform optimization on decompiler output by running specific scripts.